About Apache Pulsar

Apache Pulsar is a top-level project of the Apache Software Foundation. It is a next-generation, cloud-native, distributed messaging and streaming platform that integrates messaging, storage, and lightweight functional computing. Its architecture separates compute from storage, and it supports multi-tenancy, persistent storage, and cross-region data replication across multiple data centers. It offers stream data storage with strong consistency, high throughput, low latency, and high scalability.

GitHub address: http://github.com/apache/pulsar/

This article is translated from: Using Apache Pulsar With Kotlin, by Gilles Barbier.

Original link: https://gillesbarbier.medium....

About the translator

Song Bo is a senior development engineer at Beijing Baiguan Technology Co., Ltd., focusing on microservices, cloud computing, and big data.

Apache Pulsar, often described as the next-generation Kafka, is a rising star in the developer toolset. Pulsar is a multi-tenant, high-performance solution for server-to-server messaging, and it is often used at the core of scalable applications.

Pulsar can be used from Kotlin because it is written in Java. However, its API does not take advantage of the powerful features Kotlin brings, such as data classes, coroutines, or reflection-free serialization.

In this article, I discuss how to use Pulsar from Kotlin.

Use native serialization for message bodies

The natural way to define messages in Kotlin is with data classes, whose main purpose is to hold data. For data classes, Kotlin automatically generates methods such as equals(), toString(), and copy(), which shortens the code and reduces the risk of errors.
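As a minimal illustration, the hypothetical `TaskSummary` class below (not part of the article's code, standard library only) gets all of these members for free from the `data` modifier:

```kotlin
// Hypothetical message type (not from the article), used only to show
// what the `data` modifier generates from the primary constructor.
data class TaskSummary(
    val taskId: String,
    val taskName: String,
    val attempt: Int = 0
)

// copy() builds a new instance, changing only the named properties;
// the original is left untouched, which suits immutable messages.
fun nextAttempt(task: TaskSummary): TaskSummary = task.copy(attempt = task.attempt + 1)
```

For example, `TaskSummary("t-1", "resize-image") == TaskSummary("t-1", "resize-image")` is true (structural equality), `nextAttempt` returns a copy with `attempt = 1` while the original keeps `attempt = 0`, and `toString()` yields `TaskSummary(taskId=t-1, taskName=resize-image, attempt=0)`.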

In Java, a Pulsar producer is created like this:

```java
Producer<MyAvro> avroProducer = client
        .newProducer(Schema.AVRO(MyAvro.class))
        .topic("some-avro-topic")
        .create();
```

The `Schema.AVRO(MyAvro.class)` instruction introspects the `MyAvro` Java class and infers a schema from it. This schema is needed to check that a new producer will produce messages that are actually compatible with existing consumers. However, the Java implementation of Kotlin data classes does not work well with the default serializer used by Pulsar. Fortunately, starting with version 2.7.0, Pulsar lets you use custom serializers for producers and consumers.

First, install the official Kotlin serialization plugin. With it, you can write a message class like this:

```kotlin
import kotlinx.serialization.Serializable

// TaskName, TaskId, TaskInput, TaskOptions and TaskMeta are the application's own serializable types
@Serializable
data class RunTask(
    val taskName: TaskName,
    val taskId: TaskId,
    val taskInput: TaskInput,
    val taskOptions: TaskOptions,
    val taskMeta: TaskMeta
)
```

Note the `@Serializable` annotation. Thanks to it, `RunTask.serializer()` gives you a serializer generated at compile time, which works without introspection and is therefore much more efficient!

At the time of writing, the serialization plugin only supports JSON (plus some other formats in beta, such as Protobuf), so we also need the avro4k library to add support for the Avro format.

With these tools, we can create a producer and a consumer for RunTask messages as follows:

```kotlin
import com.github.avrokotlin.avro4k.Avro
import com.github.avrokotlin.avro4k.io.AvroEncodeFormat
import io.infinitic.common.tasks.executors.messages.RunTask
import kotlinx.serialization.KSerializer
import org.apache.avro.file.SeekableByteArrayInput
import org.apache.avro.generic.GenericDatumReader
import org.apache.avro.generic.GenericRecord
import org.apache.avro.io.DecoderFactory
import org.apache.pulsar.client.api.Consumer
import org.apache.pulsar.client.api.Producer
import org.apache.pulsar.client.api.PulsarClient
import org.apache.pulsar.client.api.Schema
import org.apache.pulsar.client.api.schema.SchemaDefinition
import org.apache.pulsar.client.api.schema.SchemaReader
import org.apache.pulsar.client.api.schema.SchemaWriter
import java.io.ByteArrayOutputStream
import java.io.InputStream

// Convert a T instance to the Avro schemaless binary format
fun <T : Any> writeBinary(t: T, serializer: KSerializer<T>): ByteArray {
    val out = ByteArrayOutputStream()
    Avro.default.openOutputStream(serializer) {
        encodeFormat = AvroEncodeFormat.Binary
        schema = Avro.default.schema(serializer)
    }.to(out).write(t).close()
    return out.toByteArray()
}

// Convert an Avro schemaless byte array back to a T instance
fun <T> readBinary(bytes: ByteArray, serializer: KSerializer<T>): T {
    val datumReader = GenericDatumReader<GenericRecord>(Avro.default.schema(serializer))
    val decoder = DecoderFactory.get().binaryDecoder(SeekableByteArrayInput(bytes), null)
    return Avro.default.fromRecord(serializer, datumReader.read(null, decoder))
}

// Custom Pulsar SchemaReader, backed by the compile-time RunTask serializer
class RunTaskSchemaReader : SchemaReader<RunTask> {
    override fun read(bytes: ByteArray, offset: Int, length: Int): RunTask =
        read(bytes.inputStream(offset, length))

    override fun read(inputStream: InputStream): RunTask =
        readBinary(inputStream.readBytes(), RunTask.serializer())
}

// Custom Pulsar SchemaWriter
class RunTaskSchemaWriter : SchemaWriter<RunTask> {
    override fun write(message: RunTask): ByteArray = writeBinary(message, RunTask.serializer())
}

// Custom Pulsar SchemaDefinition<RunTask>; the JSON definition is the Avro schema generated by avro4k
fun runTaskSchemaDefinition(): SchemaDefinition<RunTask> =
    SchemaDefinition.builder<RunTask>()
        .withJsonDef(Avro.default.schema(RunTask.serializer()).toString())
        .withSchemaReader(RunTaskSchemaReader())
        .withSchemaWriter(RunTaskSchemaWriter())
        .withSupportSchemaVersioning(true)
        .build()

// Create an instance of Producer<RunTask>
fun runTaskProducer(client: PulsarClient): Producer<RunTask> = client
    .newProducer(Schema.AVRO(runTaskSchemaDefinition()))
    .topic("some-avro-topic")
    .create()

// Create an instance of Consumer<RunTask>
fun runTaskConsumer(client: PulsarClient): Consumer<RunTask> = client
    .newConsumer(Schema.AVRO(runTaskSchemaDefinition()))
    .topic("some-avro-topic")
    .subscriptionName("some-subscription") // subscribe() requires a subscription name; this one is a placeholder
    .subscribe()
```

Use sealed-class messages and one envelope per topic

Pulsar allows only one message type per topic. In some cases this does not meet every need, but the limitation can be worked around with an envelope pattern.

First, use a sealed class to define all the message types that a topic can carry:

```kotlin
@Serializable
sealed class TaskEngineMessage {
    abstract val taskId: TaskId
}

@Serializable
data class DispatchTask(
    override val taskId: TaskId,
    val taskName: TaskName,
    val methodName: MethodName,
    val methodParameterTypes: MethodParameterTypes?,
    val methodInput: MethodInput,
    val workflowId: WorkflowId?,
    val methodRunId: MethodRunId?,
    val taskMeta: TaskMeta,
    val taskOptions: TaskOptions = TaskOptions()
) : TaskEngineMessage()

@Serializable
data class CancelTask(
    override val taskId: TaskId,
    val taskOutput: MethodOutput
) : TaskEngineMessage()

@Serializable
data class TaskCanceled(
    override val taskId: TaskId,
    val taskOutput: MethodOutput,
    val taskMeta: TaskMeta
) : TaskEngineMessage()

@Serializable
data class TaskCompleted(
    override val taskId: TaskId,
    val taskName: TaskName,
    val taskOutput: MethodOutput,
    val taskMeta: TaskMeta
) : TaskEngineMessage()
```

Then, create an envelope for these messages:

```kotlin
@Serializable
data class TaskEngineEnvelope(
    val taskId: TaskId,
    val type: TaskEngineMessageType,
    val dispatchTask: DispatchTask? = null,
    val cancelTask: CancelTask? = null,
    val taskCanceled: TaskCanceled? = null,
    val taskCompleted: TaskCompleted? = null,
) {
    init {
        val noNull = listOfNotNull(
            dispatchTask,
            cancelTask,
            taskCanceled,
            taskCompleted
        )
        // exactly one message, matching `type` and the top-level taskId
        require(noNull.size == 1)
        require(noNull.first() == message())
        require(noNull.first().taskId == taskId)
    }

    companion object {
        fun from(msg: TaskEngineMessage) = when (msg) {
            is DispatchTask -> TaskEngineEnvelope(
                msg.taskId,
                TaskEngineMessageType.DISPATCH_TASK,
                dispatchTask = msg
            )
            is CancelTask -> TaskEngineEnvelope(
                msg.taskId,
                TaskEngineMessageType.CANCEL_TASK,
                cancelTask = msg
            )
            is TaskCanceled -> TaskEngineEnvelope(
                msg.taskId,
                TaskEngineMessageType.TASK_CANCELED,
                taskCanceled = msg
            )
            is TaskCompleted -> TaskEngineEnvelope(
                msg.taskId,
                TaskEngineMessageType.TASK_COMPLETED,
                taskCompleted = msg
            )
        }
    }

    fun message(): TaskEngineMessage = when (type) {
        TaskEngineMessageType.DISPATCH_TASK -> dispatchTask!!
        TaskEngineMessageType.CANCEL_TASK -> cancelTask!!
        TaskEngineMessageType.TASK_CANCELED -> taskCanceled!!
        TaskEngineMessageType.TASK_COMPLETED -> taskCompleted!!
    }
}

enum class TaskEngineMessageType {
    CANCEL_TASK,
    DISPATCH_TASK,
    TASK_CANCELED,
    TASK_COMPLETED
}
```

Notice how neatly Kotlin expresses these checks in the `init` block! `TaskEngineEnvelope.from(msg)` makes it easy to create an envelope, and `envelope.message()` returns the original message.
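To try the envelope pattern in isolation, here is a minimal sketch that mirrors TaskEngineEnvelope with hypothetical, simplified types (`EngineMessage`, `Dispatch`, `Cancel`, `EngineEnvelope`) and plain String ids instead of TaskId, so it runs with the Kotlin standard library alone (no `@Serializable`, no Pulsar). One deliberate difference: the type check goes through a nullable helper, so an envelope whose `type` does not match its payload fails with `IllegalArgumentException` from `require`, rather than with the `NullPointerException` that `!!` raises inside `message()`.

```kotlin
// Hypothetical, simplified types: String ids instead of TaskId, and no
// @Serializable, so the sketch runs with the standard library alone.
sealed class EngineMessage {
    abstract val taskId: String
}

data class Dispatch(override val taskId: String, val taskName: String) : EngineMessage()
data class Cancel(override val taskId: String) : EngineMessage()

enum class EngineMessageType { DISPATCH, CANCEL }

data class EngineEnvelope(
    val taskId: String,
    val type: EngineMessageType,
    val dispatch: Dispatch? = null,
    val cancel: Cancel? = null
) {
    init {
        val payloads = listOfNotNull(dispatch, cancel)
        // Exactly one payload field may be set...
        require(payloads.size == 1) { "expected one payload, got ${payloads.size}" }
        // ...it must be the field selected by `type`...
        require(payloadFor(type) != null) { "no payload for type $type" }
        // ...and the top-level taskId must match the payload's taskId
        require(payloads.first().taskId == taskId) { "taskId mismatch" }
    }

    // Nullable lookup, so a wrong `type` is caught by require() above
    private fun payloadFor(t: EngineMessageType): EngineMessage? = when (t) {
        EngineMessageType.DISPATCH -> dispatch
        EngineMessageType.CANCEL -> cancel
    }

    fun message(): EngineMessage = payloadFor(type)!!

    companion object {
        // `when` over a sealed class is exhaustive: adding a subclass
        // without a branch here becomes a compile error
        fun from(msg: EngineMessage): EngineEnvelope = when (msg) {
            is Dispatch -> EngineEnvelope(msg.taskId, EngineMessageType.DISPATCH, dispatch = msg)
            is Cancel -> EngineEnvelope(msg.taskId, EngineMessageType.CANCEL, cancel = msg)
        }
    }
}
```

With this sketch, `EngineEnvelope.from(Dispatch("t-42", "resize-image")).message()` gives back the same `Dispatch`, while an envelope with no payload, a payload that does not match `type`, or a mismatched taskId is rejected at construction time.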

Why add an explicit taskId field and one field per message type, rather than a single generic field message: TaskEngineMessage? Because this way, I can query this topic with Pulsar SQL by taskId, by type, or by a combination of both.

Build workers with coroutines

Using threads in plain Java is complex and error-prone. Fortunately, Kotlin provides coroutines, a simpler abstraction for asynchronous processing, and channels, a convenient way to transfer data between coroutines.

I can build a worker as follows:

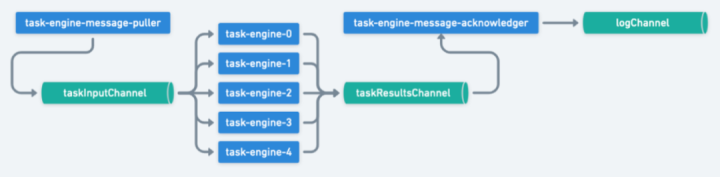

- A single coroutine ("task-engine-message-puller") dedicated to pulling messages from Pulsar

- N coroutines ("task-engine-$it") processing messages in parallel

- A single coroutine ("task-engine-message-acknowledger") acknowledging Pulsar messages after processing
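The three roles can be tried without a Pulsar cluster. The sketch below is a simulation, not the author's worker, and it assumes kotlinx-coroutines-core on the classpath: the puller reads from an in-memory list instead of awaiting `receiveAsync()`, the hypothetical `FakeMessageId` stands in for Pulsar's `MessageId`, `Work` is a hypothetical stand-in with the same shape as `MessageToProcess`, and "acknowledging" just records the id. Because everything runs inside `runBlocking` on one thread, the N workers are concurrent rather than truly parallel; the article's worker gets parallelism from `Dispatchers.IO`.

```kotlin
import kotlinx.coroutines.CoroutineName
import kotlinx.coroutines.channels.Channel
import kotlinx.coroutines.coroutineScope
import kotlinx.coroutines.launch
import kotlinx.coroutines.runBlocking

// Hypothetical stand-in for org.apache.pulsar.client.api.MessageId
data class FakeMessageId(val value: Long)

// Same shape as MessageToProcess: the id travels with the payload so that
// another coroutine can acknowledge it after processing
data class Work<T>(
    val message: T,
    val messageId: FakeMessageId,
    var exception: Exception? = null,
    var output: Any? = null
)

// Runs puller -> N workers -> acknowledger over an in-memory list and returns
// the ids that were "acknowledged" and "negatively acknowledged"
fun runPipeline(inputs: List<Int>, workers: Int): Pair<List<FakeMessageId>, List<FakeMessageId>> =
    runBlocking {
        val acked = mutableListOf<FakeMessageId>()
        val nacked = mutableListOf<FakeMessageId>()
        val inputChannel = Channel<Work<Int>>()
        val resultChannel = Channel<Work<Int>>()

        coroutineScope { // waits for every child before the results are read
            // Single puller (a real one would await consumer.receiveAsync())
            launch(CoroutineName("message-puller")) {
                inputs.forEachIndexed { i, n -> inputChannel.send(Work(n, FakeMessageId(i.toLong()))) }
                inputChannel.close() // lets the workers' for loops terminate
            }
            // N workers share inputChannel; each message reaches exactly one worker
            val workerJobs = List(workers) { k ->
                launch(CoroutineName("worker-$k")) {
                    for (work in inputChannel) {
                        try {
                            require(work.message >= 0) { "negative input ${work.message}" }
                            work.output = work.message * 2
                        } catch (e: Exception) {
                            work.exception = e
                        }
                        resultChannel.send(work)
                    }
                }
            }
            // Close resultChannel once every worker has finished
            launch {
                workerJobs.forEach { it.join() }
                resultChannel.close()
            }
            // Single acknowledger: "ack" on success, "nack" on failure
            launch(CoroutineName("message-acknowledger")) {
                for (work in resultChannel) {
                    if (work.exception == null) acked.add(work.messageId) else nacked.add(work.messageId)
                }
            }
        }
        acked.sortedBy { it.value } to nacked.sortedBy { it.value }
    }
```

For instance, `runPipeline(listOf(1, -2, 3, 4, -5), workers = 3)` acknowledges ids 0, 2 and 3 and negatively acknowledges ids 1 and 4. Closing the channels is only needed here because the input is finite; the article's puller loops `while (isActive)` and never closes its channel.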

With this many coroutines, I also added a logChannel to collect logs. Note that to acknowledge a Pulsar message in a coroutine different from the one that received it, I need to wrap the TaskEngineMessage in a MessageToProcess<TaskEngineMessage> that also carries the Pulsar messageId:

```kotlin
import kotlinx.coroutines.CoroutineName
import kotlinx.coroutines.CoroutineScope
import kotlinx.coroutines.Dispatchers
import kotlinx.coroutines.channels.Channel
import kotlinx.coroutines.channels.SendChannel
import kotlinx.coroutines.future.await // CompletableFuture.await(), from the kotlinx-coroutines JDK 8 integration
import kotlinx.coroutines.isActive
import kotlinx.coroutines.launch
import org.apache.pulsar.client.api.Consumer
import org.apache.pulsar.client.api.Message
import org.apache.pulsar.client.api.MessageId

// TaskEngine, TaskEngineEnvelope, TaskEngineMessage and readBinary are defined elsewhere
typealias TaskEngineMessageToProcess = MessageToProcess<TaskEngineMessage>

fun CoroutineScope.startPulsarTaskEngineWorker(
    taskEngineConsumer: Consumer<TaskEngineEnvelope>,
    taskEngine: TaskEngine,
    logChannel: SendChannel<TaskEngineMessageToProcess>?,
    enginesNumber: Int
) = launch(Dispatchers.IO) {
    val taskInputChannel = Channel<TaskEngineMessageToProcess>()
    val taskResultsChannel = Channel<TaskEngineMessageToProcess>()

    // coroutine dedicated to pulling Pulsar messages
    launch(CoroutineName("task-engine-message-puller")) {
        while (isActive) {
            val message: Message<TaskEngineEnvelope> = taskEngineConsumer.receiveAsync().await()
            try {
                val envelope = readBinary(message.data, TaskEngineEnvelope.serializer())
                taskInputChannel.send(MessageToProcess(envelope.message(), message.messageId))
            } catch (e: Exception) {
                taskEngineConsumer.negativeAcknowledge(message.messageId)
                throw e
            }
        }
    }

    // coroutines dedicated to the task engine
    repeat(enginesNumber) {
        launch(CoroutineName("task-engine-$it")) {
            for (messageToProcess in taskInputChannel) {
                try {
                    messageToProcess.output = taskEngine.handle(messageToProcess.message)
                } catch (e: Exception) {
                    messageToProcess.exception = e
                }
                taskResultsChannel.send(messageToProcess)
            }
        }
    }

    // coroutine dedicated to acknowledging Pulsar messages
    launch(CoroutineName("task-engine-message-acknowledger")) {
        for (messageToProcess in taskResultsChannel) {
            if (messageToProcess.exception == null) {
                taskEngineConsumer.acknowledgeAsync(messageToProcess.messageId).await()
            } else {
                taskEngineConsumer.negativeAcknowledge(messageToProcess.messageId)
            }
            logChannel?.send(messageToProcess)
        }
    }
}

// Carries a message with its Pulsar messageId so another coroutine can acknowledge it
data class MessageToProcess<T>(
    val message: T,
    val messageId: MessageId,
    var exception: Exception? = null,
    var output: Any? = null
)
```

Summary

In this article, we showed how to use Pulsar from Kotlin to:

- encode messages (including envelopes for Pulsar topics that receive several message types);

- create Pulsar producers and consumers;

- build a simple worker that processes many messages in parallel.
