Build Kubernetes high availability cluster

This article uses Kubernetes v1.20.5 as an example.

Unless otherwise specified, run the following commands on all nodes.

1, System resource planning

| Node name | Hostname | CPU / Memory | NIC | Disk | IP address | OS |
|---|---|---|---|---|---|---|
| Master1 | master1 | 2C/4G | ens33 | 128G | 192.168.0.11 | CentOS 7 |
| Master2 | master2 | 2C/4G | ens33 | 128G | 192.168.0.12 | CentOS 7 |
| Master3 | master3 | 2C/4G | ens33 | 128G | 192.168.0.13 | CentOS 7 |
| Worker1 | worker1 | 2C/4G | ens33 | 128G | 192.168.0.21 | CentOS 7 |
| Worker2 | worker2 | 2C/4G | ens33 | 128G | 192.168.0.22 | CentOS 7 |
| Worker3 | worker3 | 2C/4G | ens33 | 128G | 192.168.0.23 | CentOS 7 |

2, System software installation and settings

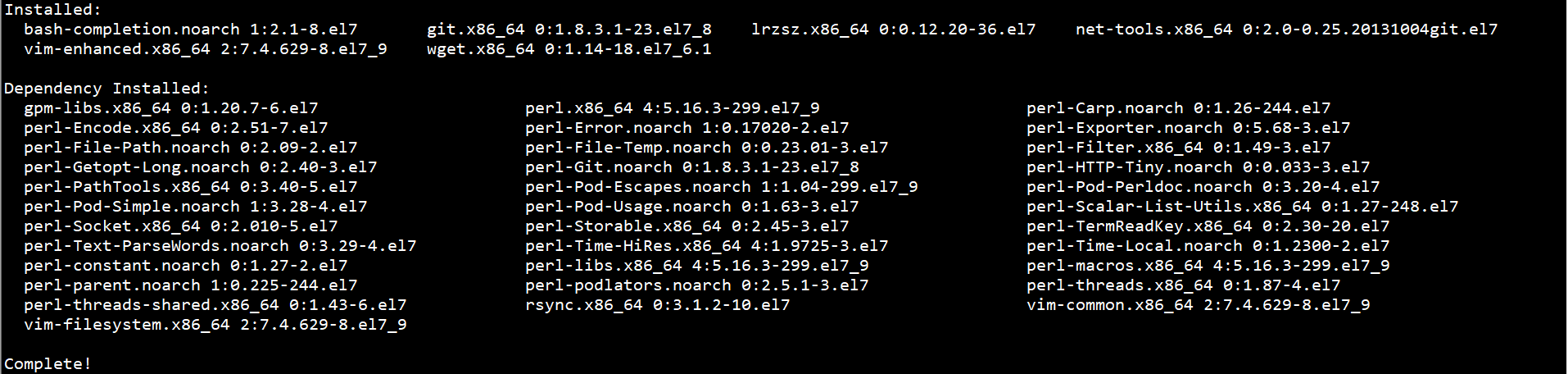

1. Install basic software

yum -y install vim git lrzsz wget net-tools bash-completion

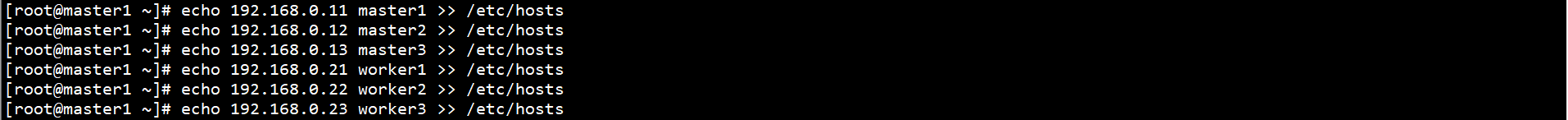

2. Set name resolution

echo 192.168.0.11 master1 >> /etc/hosts
echo 192.168.0.12 master2 >> /etc/hosts
echo 192.168.0.13 master3 >> /etc/hosts
echo 192.168.0.21 worker1 >> /etc/hosts
echo 192.168.0.22 worker2 >> /etc/hosts
echo 192.168.0.23 worker3 >> /etc/hosts

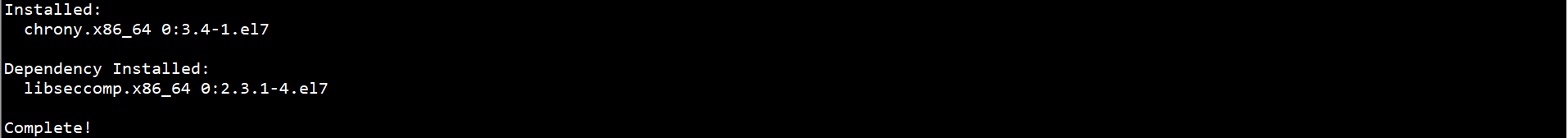

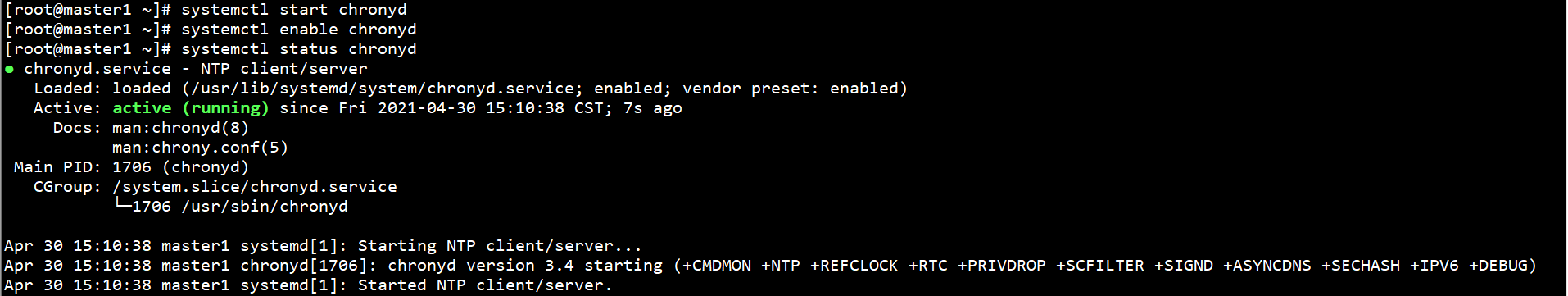
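The echo commands above append to /etc/hosts every time they run, so repeating the step leaves duplicate lines. Below is a minimal idempotent sketch. The function names add_host_entry and write_cluster_hosts are hypothetical, and the host list is copied from the planning table.

```shell
#!/usr/bin/env bash
# Cluster layout from the planning table: "IP hostname" pairs.
CLUSTER_HOSTS=(
  "192.168.0.11 master1"
  "192.168.0.12 master2"
  "192.168.0.13 master3"
  "192.168.0.21 worker1"
  "192.168.0.22 worker2"
  "192.168.0.23 worker3"
)

# Append "IP HOSTNAME" to a hosts file unless HOSTNAME is already mapped there.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # Match the hostname as a whole field on a non-comment line
  if ! grep -qE "^[^#]*[[:space:]]${name}([[:space:]]|$)" "$file"; then
    echo "${ip} ${name}" >> "$file"
  fi
}

# Write every cluster entry; safe to run more than once.
write_cluster_hosts() {
  local file="${1:-/etc/hosts}" ip name entry
  for entry in "${CLUSTER_HOSTS[@]}"; do
    read -r ip name <<< "$entry"
    add_host_entry "$file" "$ip" "$name"
  done
}
```

Run write_cluster_hosts on every node. To try it on a scratch copy first, pass a path, for example write_cluster_hosts /tmp/hosts.test.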

3. Set NTP

yum -y install chrony

systemctl start chronyd
systemctl enable chronyd
systemctl status chronyd

chronyc sources

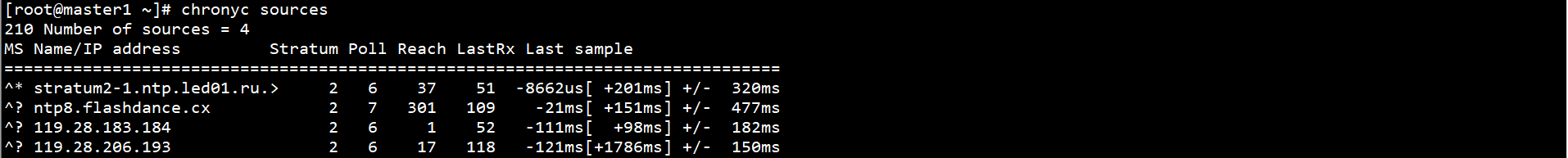

4. Disable SELinux and the firewall

systemctl stop firewalld
systemctl disable firewalld
setenforce 0
sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

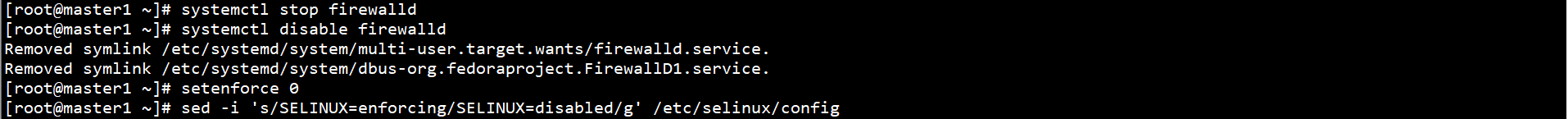

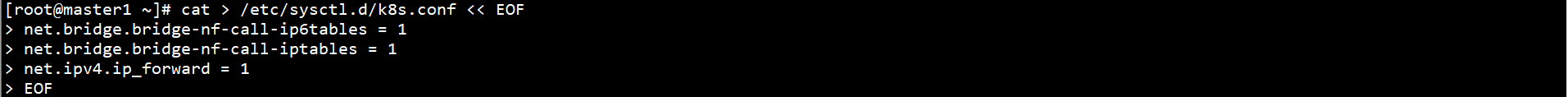

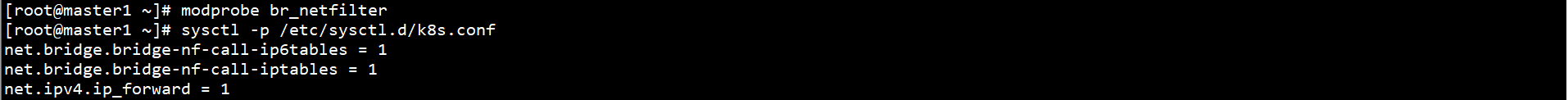

5. Set up bridge filtering

Make bridged (L2) traffic pass through the iptables FORWARD rules when packets are forwarded. CNI plug-ins require this setting.

Create the /etc/sysctl.d/k8s.conf file with the following content:

cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

Load the br_netfilter module and apply the settings:

modprobe br_netfilter
sysctl -p /etc/sysctl.d/k8s.conf

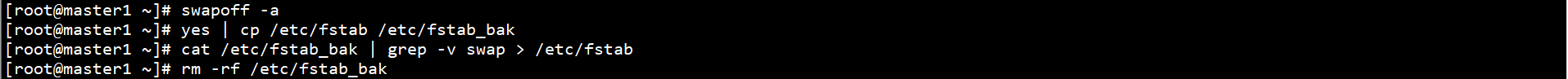
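To confirm that the settings took effect, you can read the kernel values back from /proc/sys. The sketch below uses hypothetical helpers (check_sysctl and check_k8s_sysctls are not standard tools). It maps a dotted key such as net.ipv4.ip_forward to /proc/sys/net/ipv4/ip_forward and reports any value that is missing or different. The bridge keys only exist after br_netfilter has been loaded.

```shell
#!/usr/bin/env bash
# Compare one kernel parameter with its expected value by reading the file
# under /proc/sys (or another root, for testing) that matches the dotted key.
check_sysctl() {
  local key="$1" expected="$2" root="${3:-/proc/sys}"
  local path actual
  path="${root}/$(echo "$key" | tr . /)"   # net.ipv4.ip_forward -> net/ipv4/ip_forward
  if [ ! -r "$path" ]; then
    echo "MISSING ${key} (is br_netfilter loaded?)"
    return 1
  fi
  actual=$(cat "$path")
  if [ "$actual" = "$expected" ]; then
    echo "OK      ${key} = ${actual}"
  else
    echo "WRONG   ${key} = ${actual}, expected ${expected}"
    return 1
  fi
}

# Check every setting written to /etc/sysctl.d/k8s.conf in this step.
check_k8s_sysctls() {
  local rc=0 key
  for key in net.bridge.bridge-nf-call-ip6tables net.bridge.bridge-nf-call-iptables net.ipv4.ip_forward; do
    check_sysctl "$key" 1 "$@" || rc=1
  done
  return "$rc"
}
```

On a node, run check_k8s_sysctls with no arguments. It returns non-zero if any value is missing or wrong.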

6. Disable swap

Turn off the system swap partition and remove its entry from /etc/fstab:

swapoff -a
yes | cp /etc/fstab /etc/fstab_bak
cat /etc/fstab_bak | grep -v swap > /etc/fstab
rm -rf /etc/fstab_bak

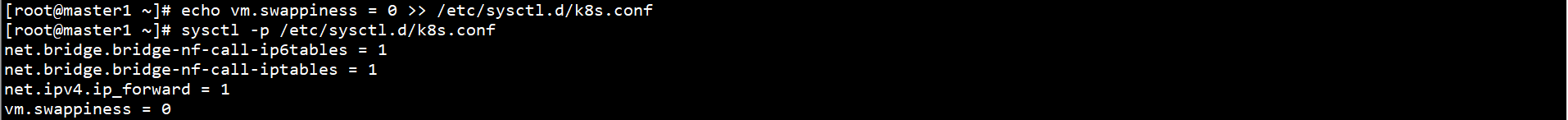

echo vm.swappiness = 0 >> /etc/sysctl.d/k8s.conf
sysctl -p /etc/sysctl.d/k8s.conf

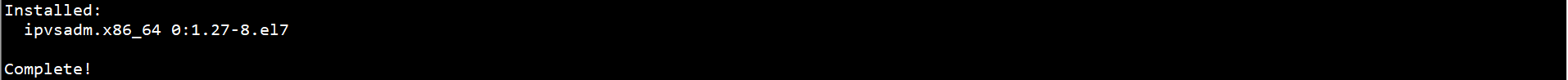
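The grep -v swap command above drops every line that contains the word swap, including comments, and no backup is kept once /etc/fstab_bak is removed. Below is an alternative sketch with hypothetical function names. It comments out only the entries whose filesystem type is swap and keeps a copy at /etc/fstab.bak. A second helper checks /proc/swaps, which contains only its header line when no swap is active.

```shell
#!/usr/bin/env bash
# Comment out active swap entries in an fstab-style file instead of deleting them,
# keeping an untouched copy at FILE.bak.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  cp "$fstab" "${fstab}.bak"
  # Field 3 of an fstab entry is the filesystem type; prefix uncommented swap entries with '#'
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' "${fstab}.bak" > "$fstab"
}

# Succeed when no swap device is active (the swaps file holds only its header line).
swap_is_off() {
  local swaps="${1:-/proc/swaps}"
  [ "$(wc -l < "$swaps")" -le 1 ]
}
```

Typical use is swapoff -a; disable_swap_in_fstab; swap_is_off && echo "swap is off".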

7. Set ipvs

Install ipvsadm ipset:

yum -y install ipvsadm ipset

To create an ipvs setup script:

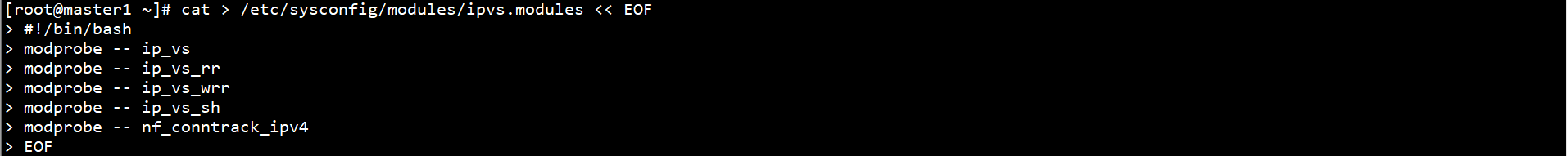

cat > /etc/sysconfig/modules/ipvs.modules << EOF #!/bin/bash modprobe -- ip_vs modprobe -- ip_vs_rr modprobe -- ip_vs_wrr modprobe -- ip_vs_sh modprobe -- nf_conntrack_ipv4 EOF

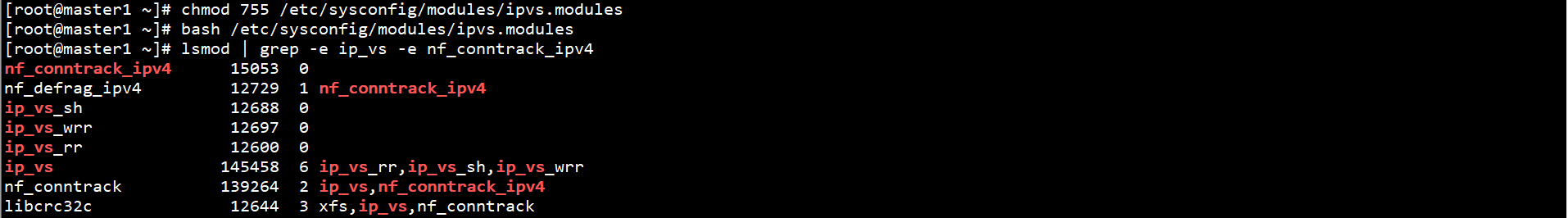

Run the script and check that the modules are loaded:

chmod 755 /etc/sysconfig/modules/ipvs.modules
bash /etc/sysconfig/modules/ipvs.modules
lsmod | grep -e ip_vs -e nf_conntrack_ipv4
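On CentOS 7 (kernel 3.10), the connection-tracking module is nf_conntrack_ipv4. Kernels from 4.19 onward merged it into nf_conntrack, so on newer kernels the modprobe of nf_conntrack_ipv4 in the script above fails. The minimal sketch below picks whichever module the running kernel provides. It assumes modinfo (from kmod) is installed, and pick_conntrack_module and write_ipvs_modules are hypothetical names.

```shell
#!/usr/bin/env bash
# Print the conntrack module this kernel provides: nf_conntrack_ipv4 if it
# exists (e.g. CentOS 7), otherwise the merged nf_conntrack module.
pick_conntrack_module() {
  if modinfo nf_conntrack_ipv4 >/dev/null 2>&1; then
    echo nf_conntrack_ipv4
  else
    echo nf_conntrack
  fi
}

# Write the IPVS module-loading script with the detected conntrack module.
write_ipvs_modules() {
  local target="${1:-/etc/sysconfig/modules/ipvs.modules}" ct
  ct=$(pick_conntrack_module)
  cat > "$target" << EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- ${ct}
EOF
  chmod 755 "$target"
}
```

After running write_ipvs_modules, point the lsmod check at the module it selected, for example lsmod | grep -e ip_vs -e "$(pick_conntrack_module)".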

3, Load balancing configuration

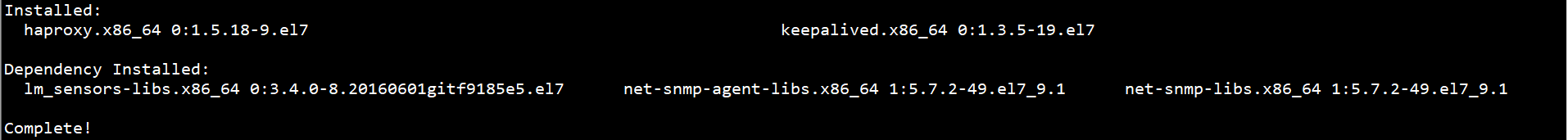

1. Install HAProxy and Keepalived

Install HAProxy and Keepalived on all Master nodes:

yum -y install haproxy keepalived

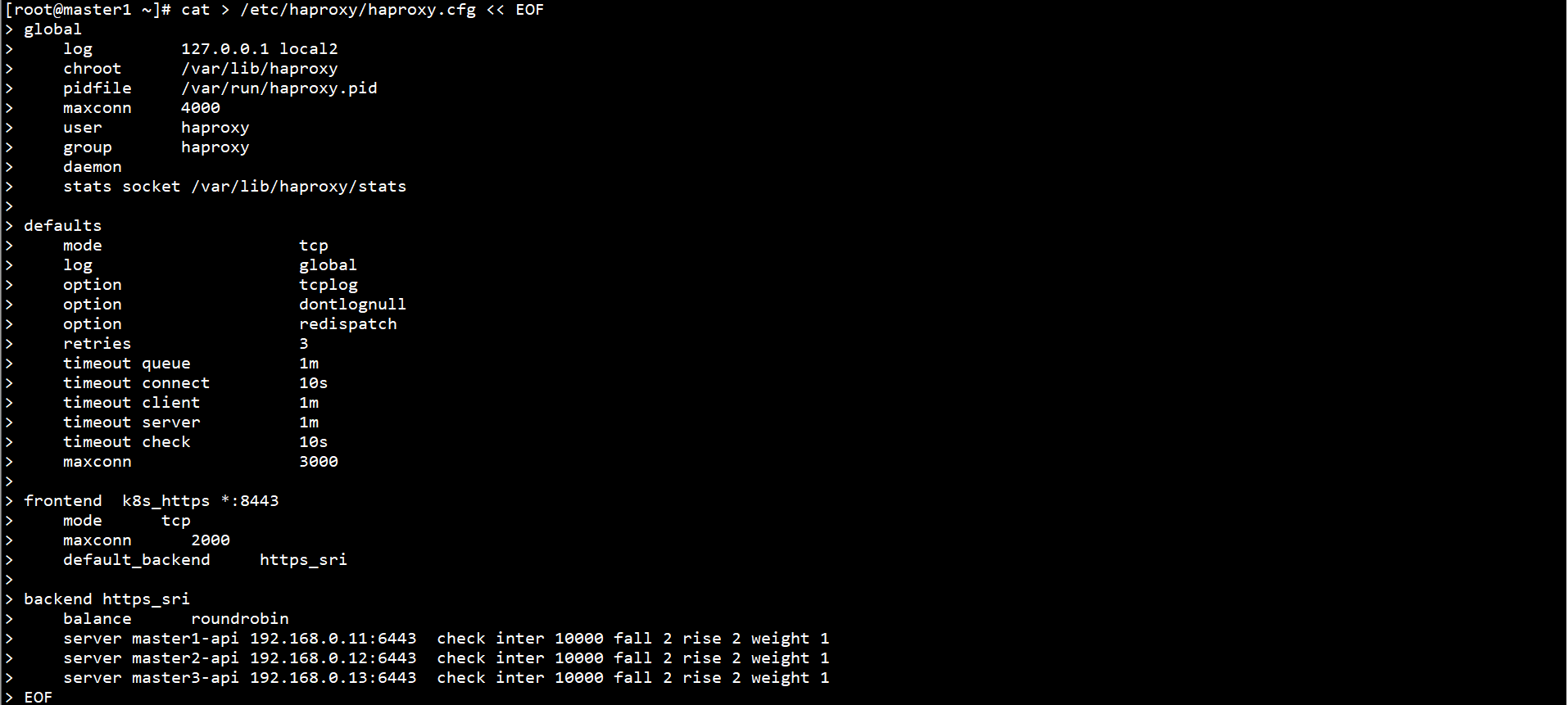

Create an HAProxy configuration file on all Master nodes:

cat > /etc/haproxy/haproxy.cfg << EOF
global
    log 127.0.0.1 local2
    chroot /var/lib/haproxy
    pidfile /var/run/haproxy.pid
    maxconn 4000
    user haproxy
    group haproxy
    daemon
    stats socket /var/lib/haproxy/stats

defaults
    mode tcp
    log global
    option tcplog
    option dontlognull
    option redispatch
    retries 3
    timeout queue 1m
    timeout connect 10s
    timeout client 1m
    timeout server 1m
    timeout check 10s
    maxconn 3000

frontend k8s_https
    bind *:8443
    mode tcp
    maxconn 2000
    default_backend https_sri

backend https_sri
    balance roundrobin
    server master1-api 192.168.0.11:6443 check inter 10000 fall 2 rise 2 weight 1
    server master2-api 192.168.0.12:6443 check inter 10000 fall 2 rise 2 weight 1
    server master3-api 192.168.0.13:6443 check inter 10000 fall 2 rise 2 weight 1
EOF

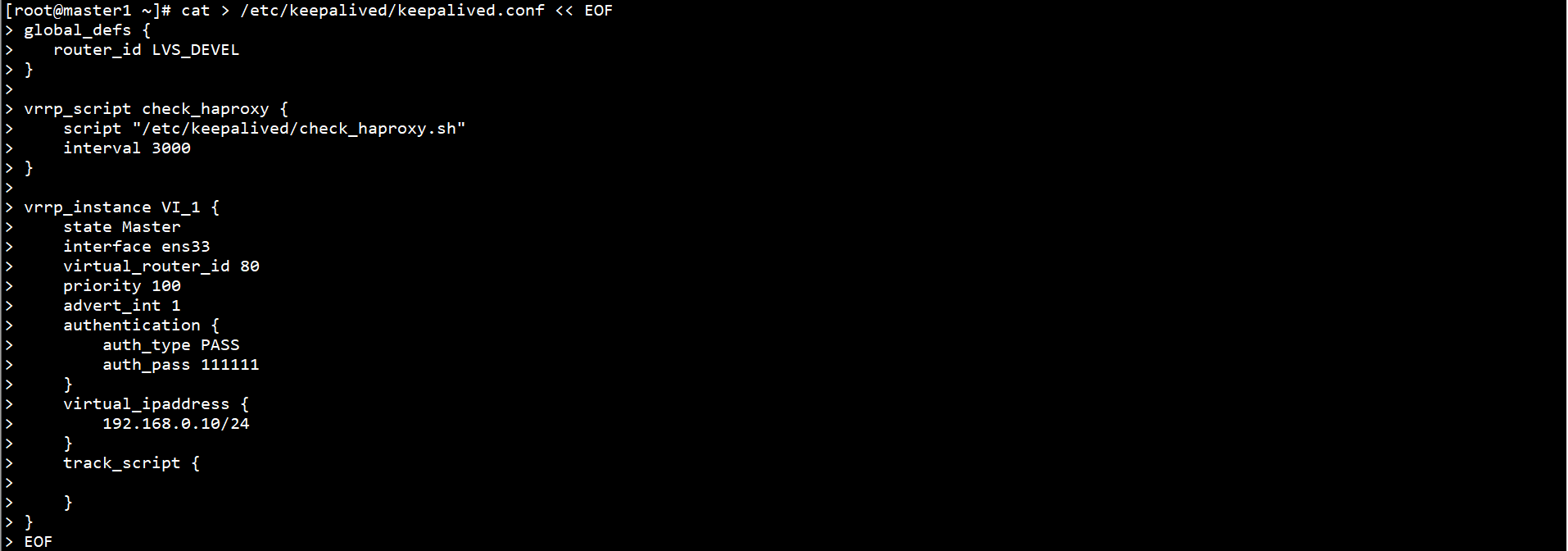

Create a Keepalived configuration file on the Master1 node:

cat > /etc/keepalived/keepalived.conf << EOF
global_defs {
    router_id LVS_DEVEL
}

vrrp_script check_haproxy {
    script "/etc/keepalived/check_haproxy.sh"
    interval 3
}

vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 80
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 111111
    }
    virtual_ipaddress {
        192.168.0.10/24
    }
    track_script {
        check_haproxy
    }
}
EOF

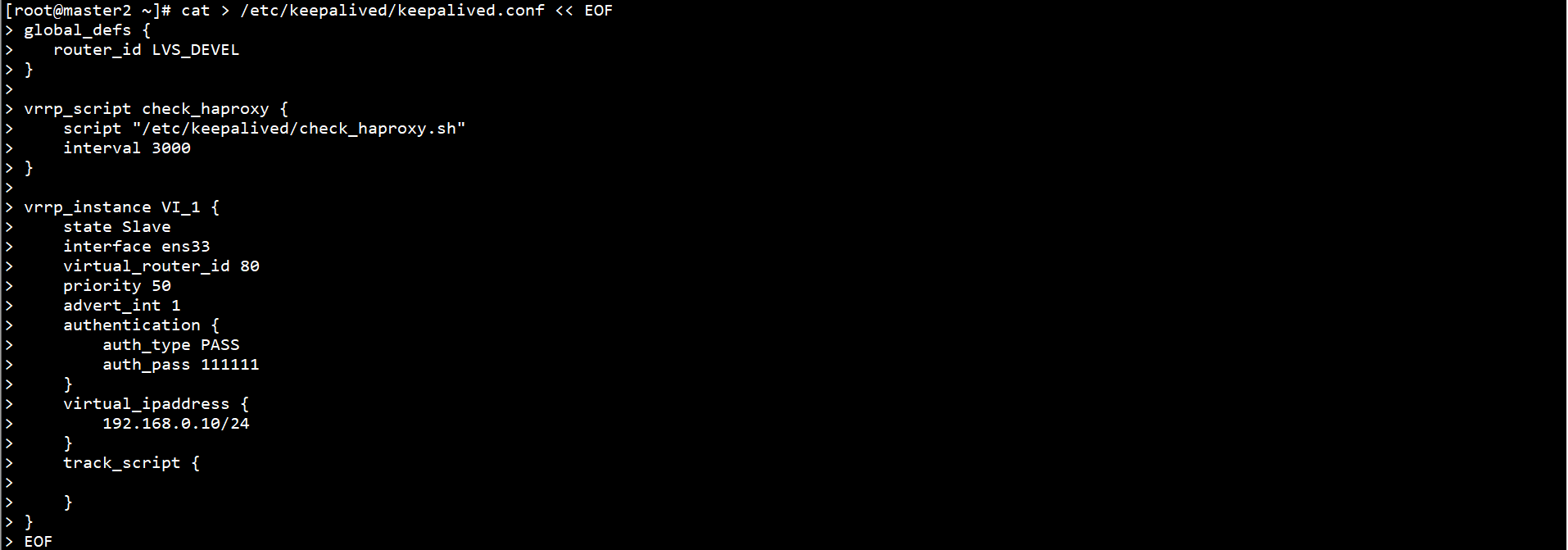

Create a Keepalived configuration file on the Master2 node:

cat > /etc/keepalived/keepalived.conf << EOF
global_defs {
    router_id LVS_DEVEL
}

vrrp_script check_haproxy {
    script "/etc/keepalived/check_haproxy.sh"
    interval 3
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    virtual_router_id 80
    priority 50
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 111111
    }
    virtual_ipaddress {
        192.168.0.10/24
    }
    track_script {
        check_haproxy
    }
}
EOF

Create a Keepalived configuration file on the Master3 node:

cat > /etc/keepalived/keepalived.conf << EOF
global_defs {
    router_id LVS_DEVEL
}

vrrp_script check_haproxy {
    script "/etc/keepalived/check_haproxy.sh"
    interval 3
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    virtual_router_id 80
    priority 30
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 111111
    }
    virtual_ipaddress {
        192.168.0.10/24
    }
    track_script {
        check_haproxy
    }
}
EOF

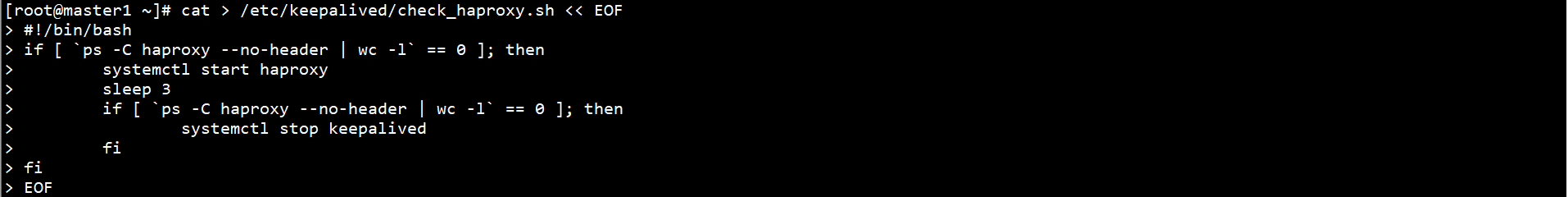

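Create the HAProxy check script on all Master nodes. Quote the EOF delimiter so that the process checks are written into the script as-is. Otherwise they would be evaluated once, while the file is being created:

cat > /etc/keepalived/check_haproxy.sh << 'EOF'
#!/bin/bash
# If HAProxy is not running, try to start it; if it is still not running,
# stop Keepalived so that the VIP fails over to another Master node.
if [ "$(ps -C haproxy --no-header | wc -l)" -eq 0 ]; then
    systemctl start haproxy
    sleep 3
    if [ "$(ps -C haproxy --no-header | wc -l)" -eq 0 ]; then
        systemctl stop keepalived
    fi
fi
EOF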

chmod +x /etc/keepalived/check_haproxy.sh

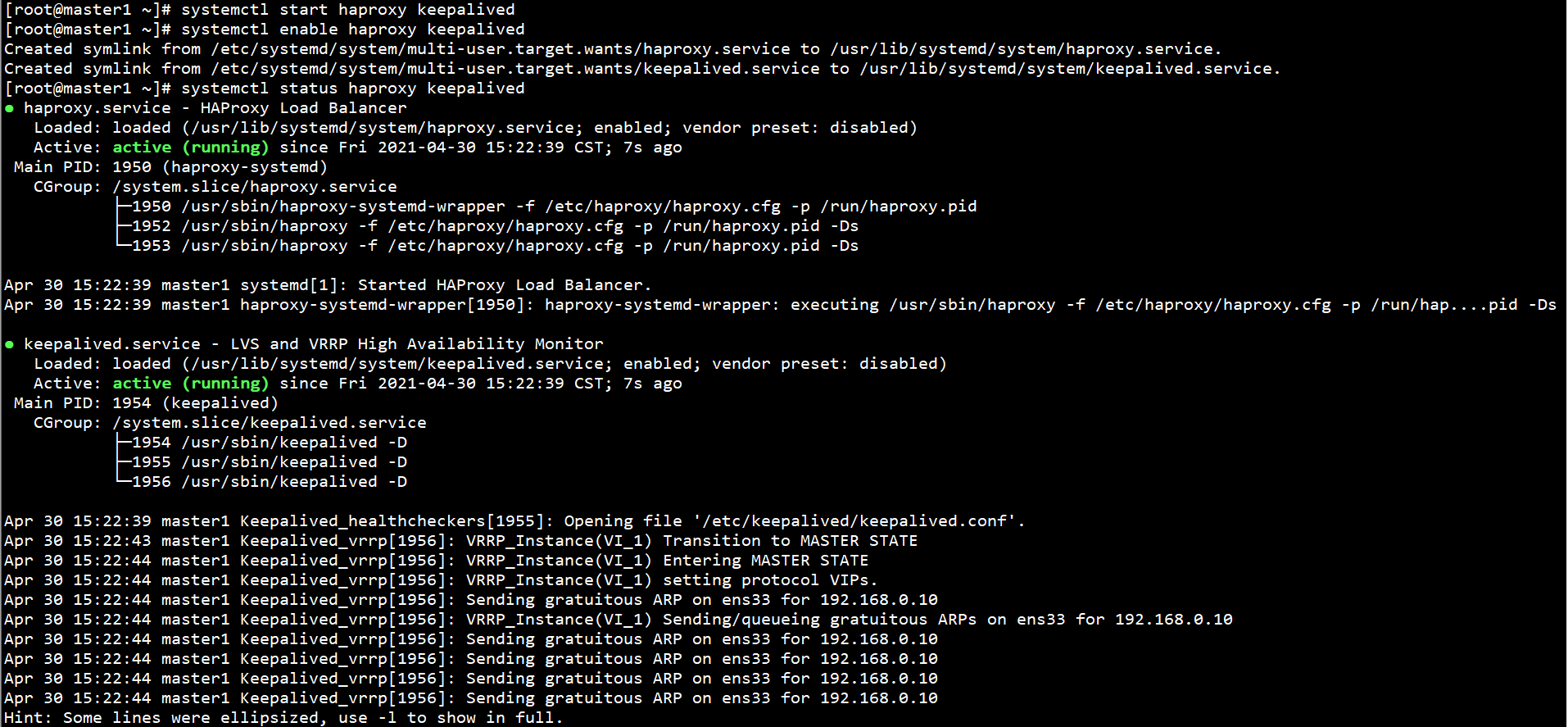
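With an unquoted delimiter (<< EOF), a heredoc expands command substitutions such as backticks and $(...) while the file is being written. With a quoted delimiter (<< 'EOF'), the text is copied literally. A script like check_haproxy.sh has to count processes each time it runs, so only the quoted form works. The small demonstration below (demo_heredoc_quoting is a hypothetical name) writes one script each way into a scratch directory.

```shell
#!/usr/bin/env bash
# Writes two tiny scripts into DIR to show when $(...) is evaluated.
demo_heredoc_quoting() {
  local dir="$1"
  # Unquoted delimiter: $(...) runs now, while the file is being written
  cat > "$dir/unquoted.sh" << EOF
echo "$(echo evaluated-at-write-time)"
EOF
  # Quoted delimiter: the text is copied literally; $(...) runs when the script runs
  cat > "$dir/quoted.sh" << 'EOF'
echo "$(echo evaluated-at-run-time)"
EOF
}
```

After running demo_heredoc_quoting /tmp, cat /tmp/unquoted.sh shows the already-substituted text, and cat /tmp/quoted.sh still contains the $(...) expression.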

Start HAProxy and Keepalived on all Master nodes and enable them at boot:

systemctl start haproxy keepalived
systemctl enable haproxy keepalived
systemctl status haproxy keepalived

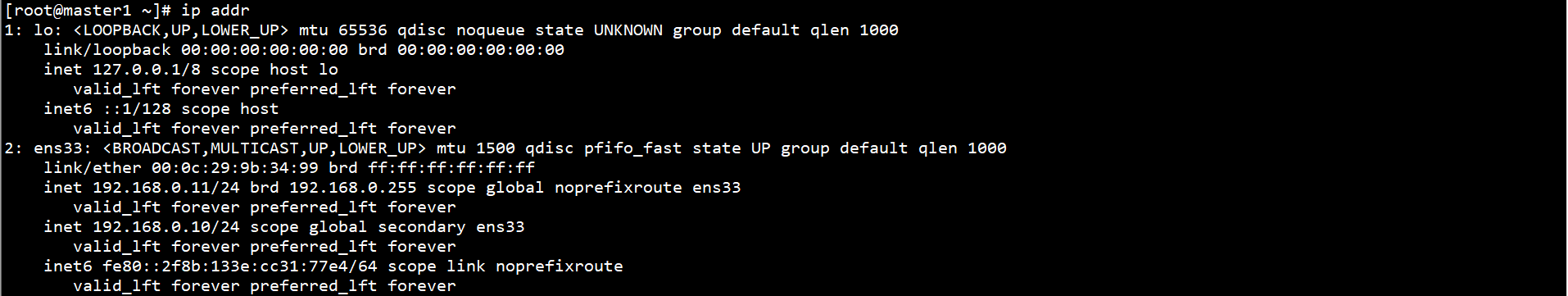

Check the Keepalived status on the Master1 node:

ip addr

The virtual IP 192.168.0.10 should be bound to the ens33 interface.
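To check the VIP from a script instead of reading the ip addr output by eye, you can use a small filter over ip -o -4 addr show. This sketch uses a hypothetical function name. It assumes the one-line (-o) output format, in which the fourth field is ADDRESS/PREFIX.

```shell
#!/usr/bin/env bash
# Succeed if the given IPv4 address appears in `ip -o -4 addr show` output on stdin.
has_vip() {
  local vip="$1"
  # Field 4 of each one-line record is "ADDRESS/PREFIX"; compare the address part
  awk -v vip="$vip" '{ split($4, a, "/"); if (a[1] == vip) found = 1 } END { exit !found }'
}
```

Example: ip -o -4 addr show dev ens33 | has_vip 192.168.0.10 && echo "VIP is on this node"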

4, Kubernetes cluster configuration

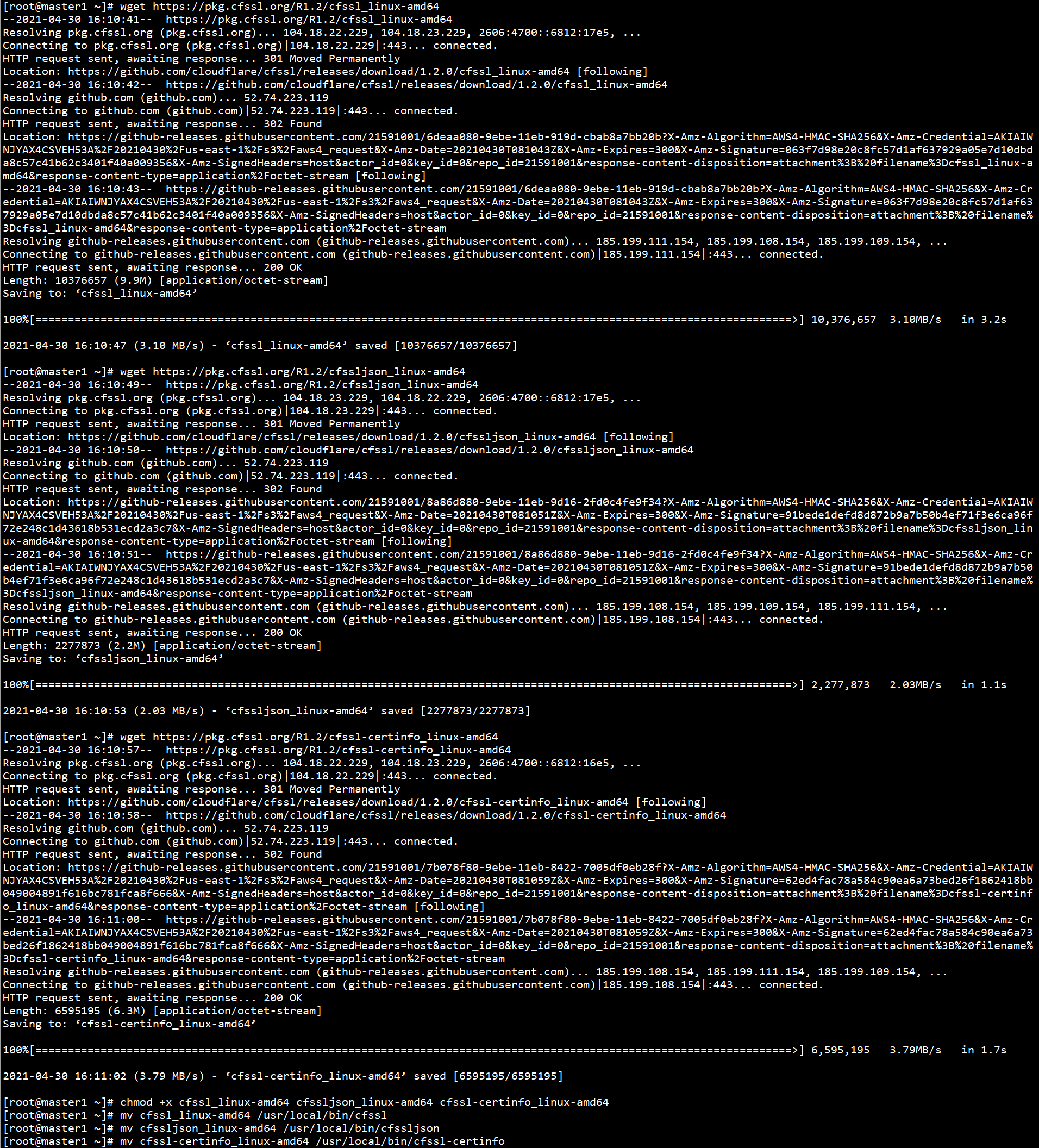

1. Deploy certificate generation tool

Download the cfssl certificate tools on the Master1 node:

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64
mv cfssl_linux-amd64 /usr/local/bin/cfssl
mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

2. Deploy the ETCD cluster

Create the configuration and certificate directories on all Master nodes:

mkdir -p /etc/etcd/ssl/

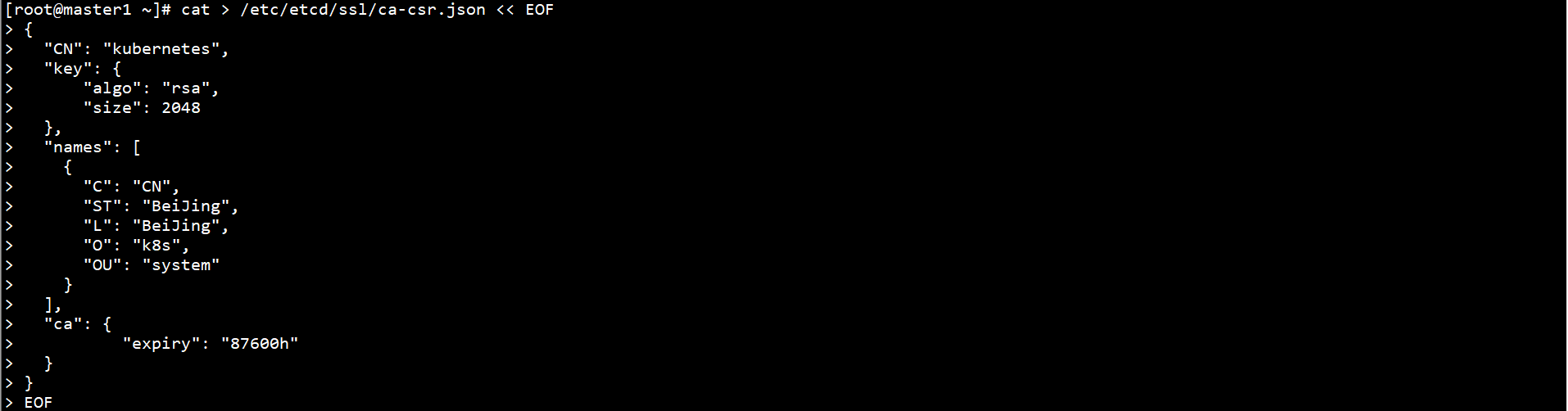

Create a CA CSR request file on the Master1 node:

cat > /etc/etcd/ssl/ca-csr.json << EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "system"
    }
  ],
  "ca": {
    "expiry": "87600h"
  }
}
EOF

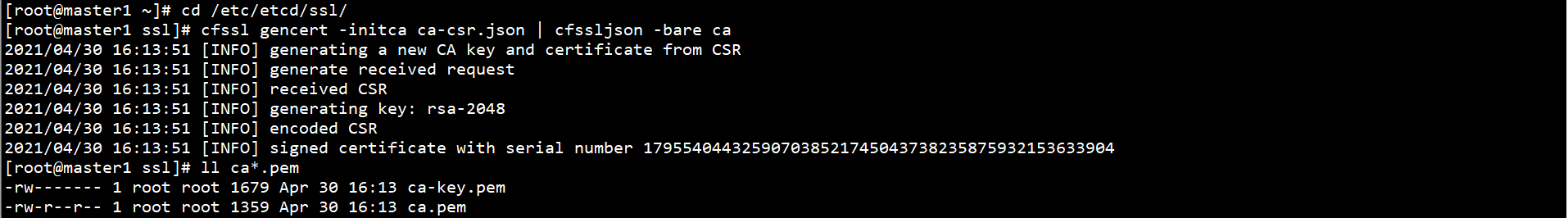

Generate CA certificate on Master1 node:

cd /etc/etcd/ssl/
cfssl gencert -initca ca-csr.json | cfssljson -bare ca
ll ca*.pem

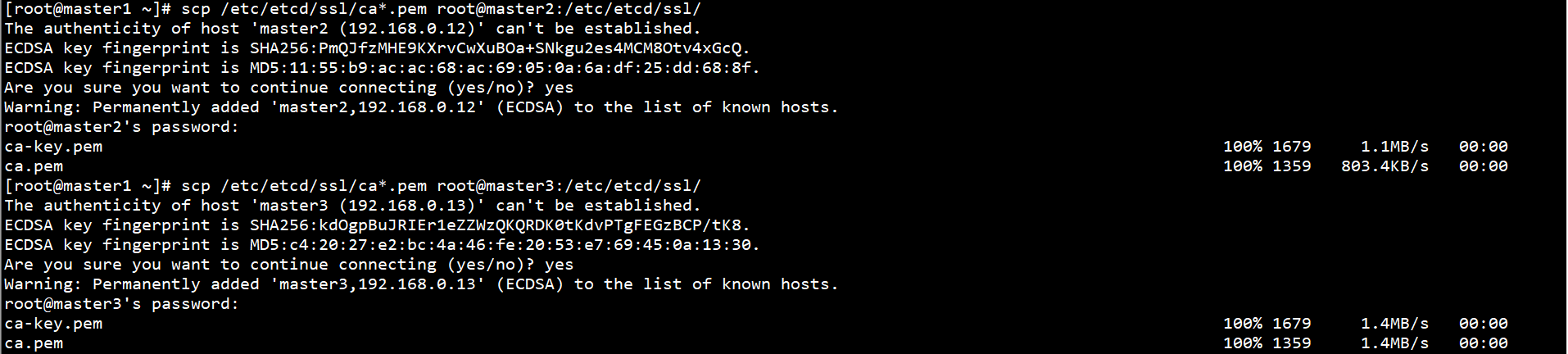

Copy the CA certificate from the Master1 node to the other Master nodes:

scp /etc/etcd/ssl/ca*.pem root@master2:/etc/etcd/ssl/
scp /etc/etcd/ssl/ca*.pem root@master3:/etc/etcd/ssl/

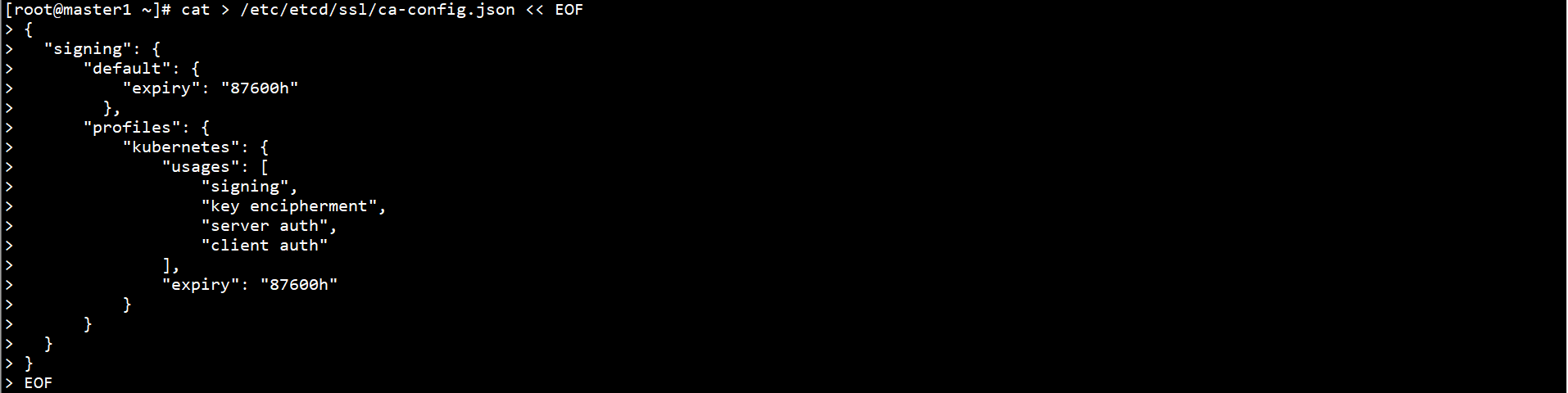

Create the certificate signing policy on the Master1 node:

cat > /etc/etcd/ssl/ca-config.json << EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "87600h"
      }
    }
  }
}
EOF

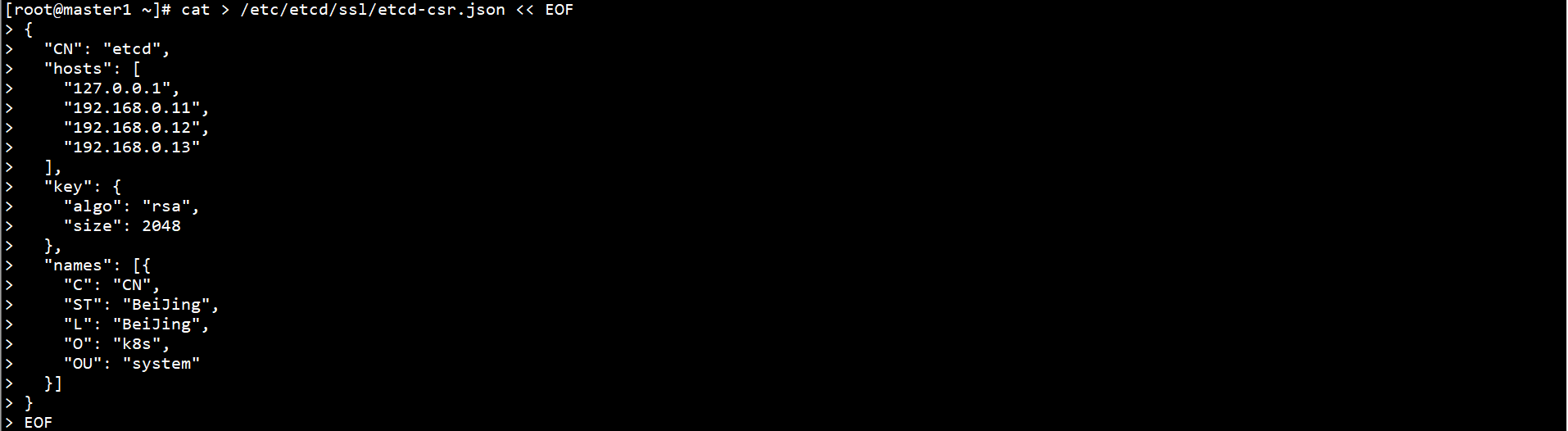

Create the ETCD CSR request file on the Master1 node:

cat > /etc/etcd/ssl/etcd-csr.json << EOF
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "192.168.0.11",
    "192.168.0.12",
    "192.168.0.13"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "system"
    }
  ]
}
EOF

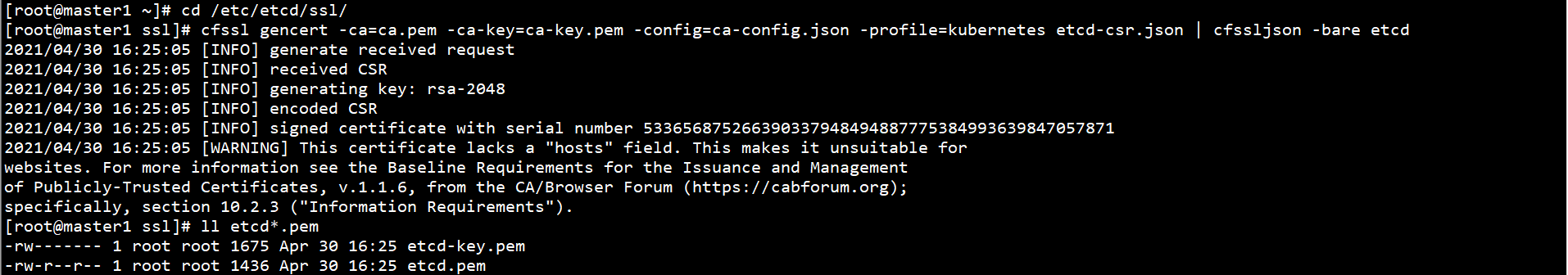

Generate the ETCD certificate on the Master1 node:

cd /etc/etcd/ssl/
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd
ll etcd*.pem

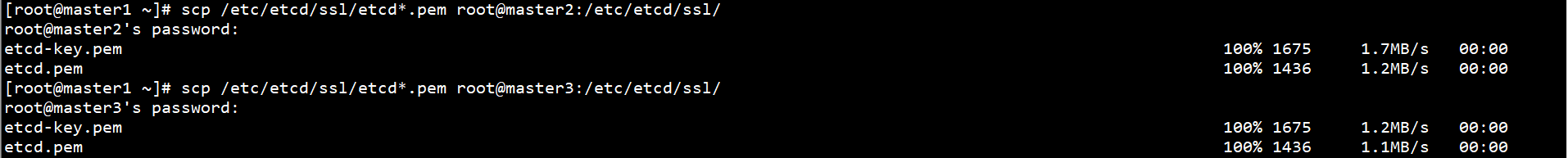

Copy the ETCD certificate from the Master1 node to the other Master nodes:

scp /etc/etcd/ssl/etcd*.pem root@master2:/etc/etcd/ssl/
scp /etc/etcd/ssl/etcd*.pem root@master3:/etc/etcd/ssl/

Download ETCD binaries:

Reference address: https://github.com/etcd-io/etcd/releases

Download address: https://github.com/etcd-io/etcd/releases/download/v3.4.15/etcd-v3.4.15-linux-amd64.tar.gz

Extract ETCD binary files on all Master nodes to the system directory:

tar -xf /root/etcd-v3.4.15-linux-amd64.tar.gz -C /root/

mv /root/etcd-v3.4.15-linux-amd64/{etcd,etcdctl} /usr/local/bin/

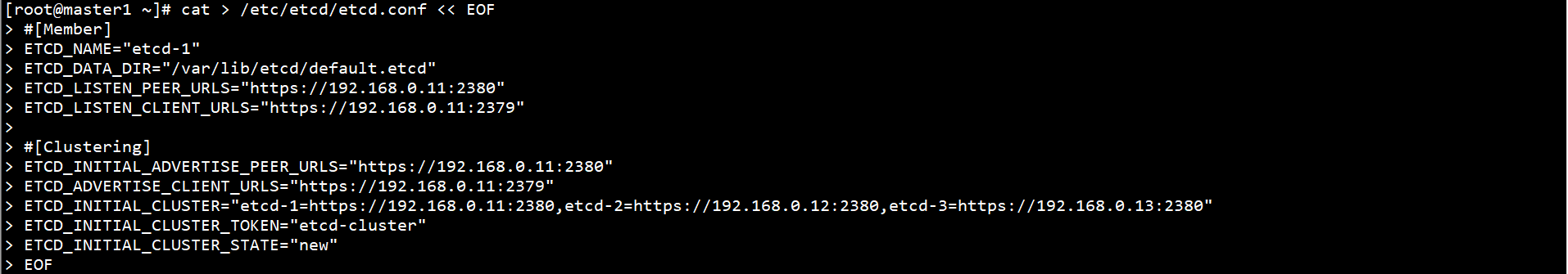

Create the ETCD configuration file on all Master nodes:

cat > /etc/etcd/etcd.conf << EOF
#[Member]
ETCD_NAME="etcd-1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.0.11:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.0.11:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.0.11:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.0.11:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.0.11:2380,etcd-2=https://192.168.0.12:2380,etcd-3=https://192.168.0.13:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF

The values above are for Master1. On Master2 and Master3, set ETCD_NAME to etcd-2 and etcd-3 respectively, and replace 192.168.0.11 in the four LISTEN and ADVERTISE URLs with that node's own IP address. ETCD_INITIAL_CLUSTER is the same on every node.

ETCD configuration file parameters:

ETCD_NAME: member name, unique within the cluster

ETCD_DATA_DIR: data directory

ETCD_LISTEN_PEER_URLS: address to listen on for peer (member-to-member) traffic

ETCD_LISTEN_CLIENT_URLS: address to listen on for client traffic

ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address this member advertises to the rest of the cluster

ETCD_ADVERTISE_CLIENT_URLS: client address this member advertises to the rest of the cluster

ETCD_INITIAL_CLUSTER: name and peer address of every member of the initial cluster

ETCD_INITIAL_CLUSTER_TOKEN: cluster token

ETCD_INITIAL_CLUSTER_STATE: state used when the member starts. Use new to create a new cluster, or existing to join an existing one

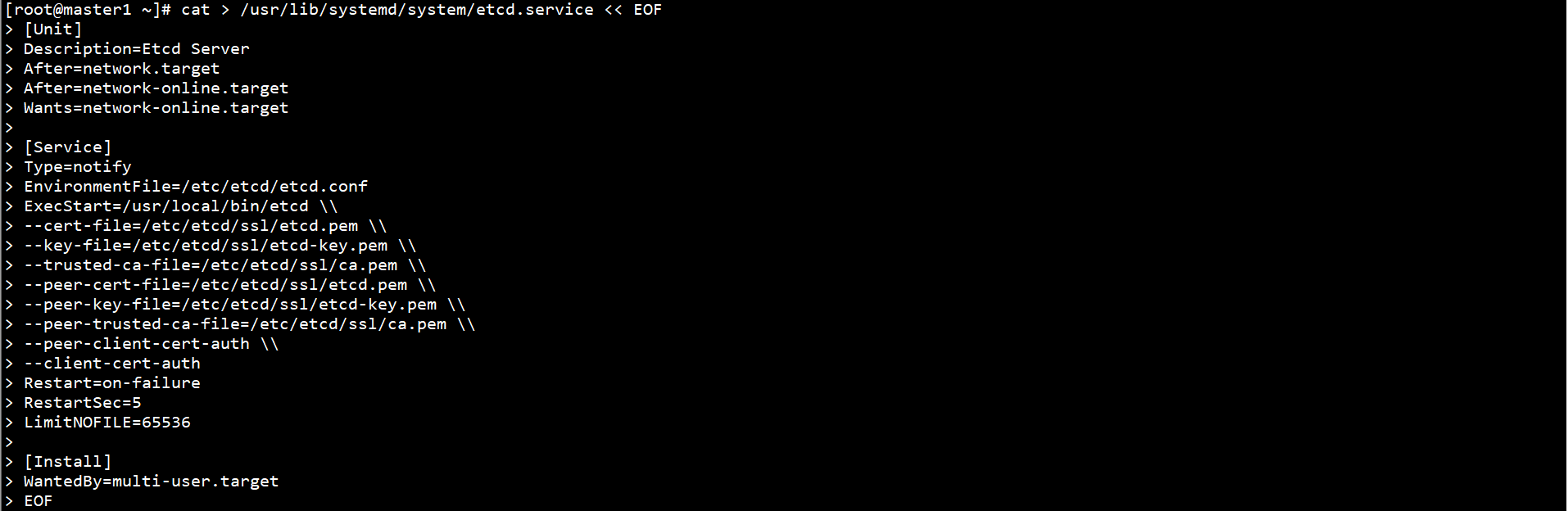
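Instead of hand-editing the node-specific values on each Master, you can generate the file from the member name and IP address. Below is a minimal sketch. write_etcd_conf is a hypothetical helper, and the member list is copied from the configuration above.

```shell
#!/usr/bin/env bash
# Write an ETCD configuration file for one member of the three-node cluster.
# Usage: write_etcd_conf NAME IP [TARGET_FILE]
write_etcd_conf() {
  local name="$1" ip="$2" target="${3:-/etc/etcd/etcd.conf}"
  cat > "$target" << EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.0.11:2380,etcd-2=https://192.168.0.12:2380,etcd-3=https://192.168.0.13:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}
```

On Master2, for example, run write_etcd_conf etcd-2 192.168.0.12. The name must match the entry for that IP in ETCD_INITIAL_CLUSTER.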

Create a systemd unit for ETCD on all Master nodes:

cat > /usr/lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/etc/etcd/etcd.conf
ExecStart=/usr/local/bin/etcd \\
  --cert-file=/etc/etcd/ssl/etcd.pem \\
  --key-file=/etc/etcd/ssl/etcd-key.pem \\
  --trusted-ca-file=/etc/etcd/ssl/ca.pem \\
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \\
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \\
  --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \\
  --peer-client-cert-auth \\
  --client-cert-auth
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

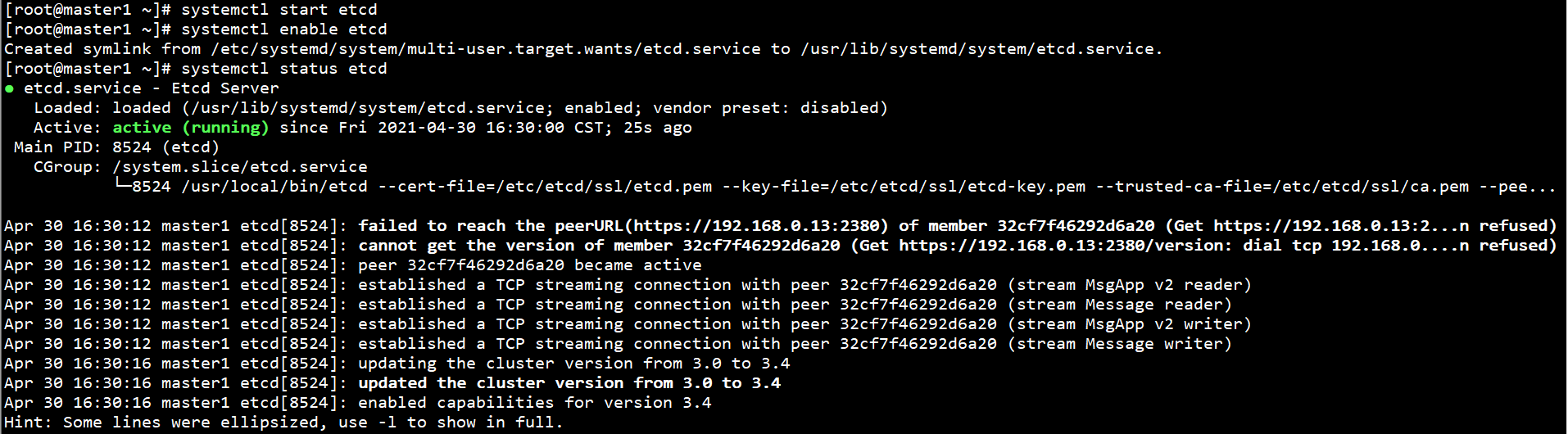

Start ETCD on all Master nodes and enable it at boot:

systemctl start etcd
systemctl enable etcd
systemctl status etcd

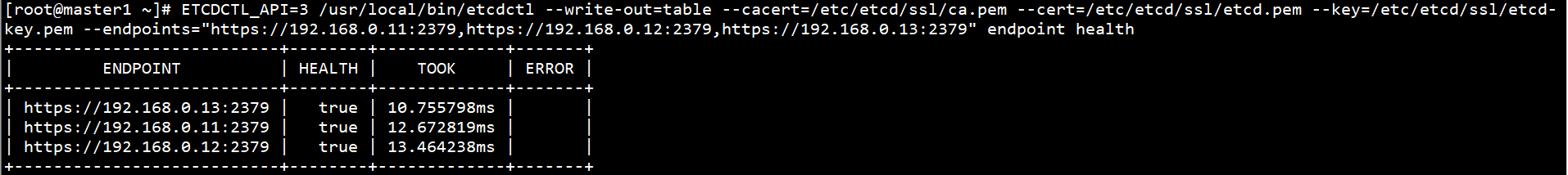

View ETCD cluster status on any Master node:

ETCDCTL_API=3 /usr/local/bin/etcdctl --write-out=table --cacert=/etc/etcd/ssl/ca.pem --cert=/etc/etcd/ssl/etcd.pem --key=/etc/etcd/ssl/etcd-key.pem --endpoints="https://192.168.0.11:2379,https://192.168.0.12:2379,https://192.168.0.13:2379" endpoint health
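etcdctl v3 also reads its global flags from ETCDCTL_* environment variables (for example, ETCDCTL_CACERT for --cacert and ETCDCTL_ENDPOINTS for --endpoints). Setting them once shortens repeated checks. The sketch below assumes the certificate paths and endpoints used in this article, and etcd_env is a hypothetical function name.

```shell
#!/usr/bin/env bash
# Export the etcdctl v3 TLS settings used in this article so later
# etcdctl commands can omit --cacert/--cert/--key/--endpoints.
etcd_env() {
  export ETCDCTL_API=3
  export ETCDCTL_CACERT=/etc/etcd/ssl/ca.pem
  export ETCDCTL_CERT=/etc/etcd/ssl/etcd.pem
  export ETCDCTL_KEY=/etc/etcd/ssl/etcd-key.pem
  export ETCDCTL_ENDPOINTS="https://192.168.0.11:2379,https://192.168.0.12:2379,https://192.168.0.13:2379"
}
```

Example: etcd_env; etcdctl --write-out=table endpoint health; etcdctl --write-out=table member list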

3. Deploy Docker

Download Docker binaries:

Reference address: https://download.docker.com/linux/static/stable/x86_64/

Download address: https://download.docker.com/linux/static/stable/x86_64/docker-20.10.5.tgz

Extract the Docker binaries to the system directory:

tar -xf /root/docker-20.10.5.tgz -C /root/
mv /root/docker/* /usr/local/bin

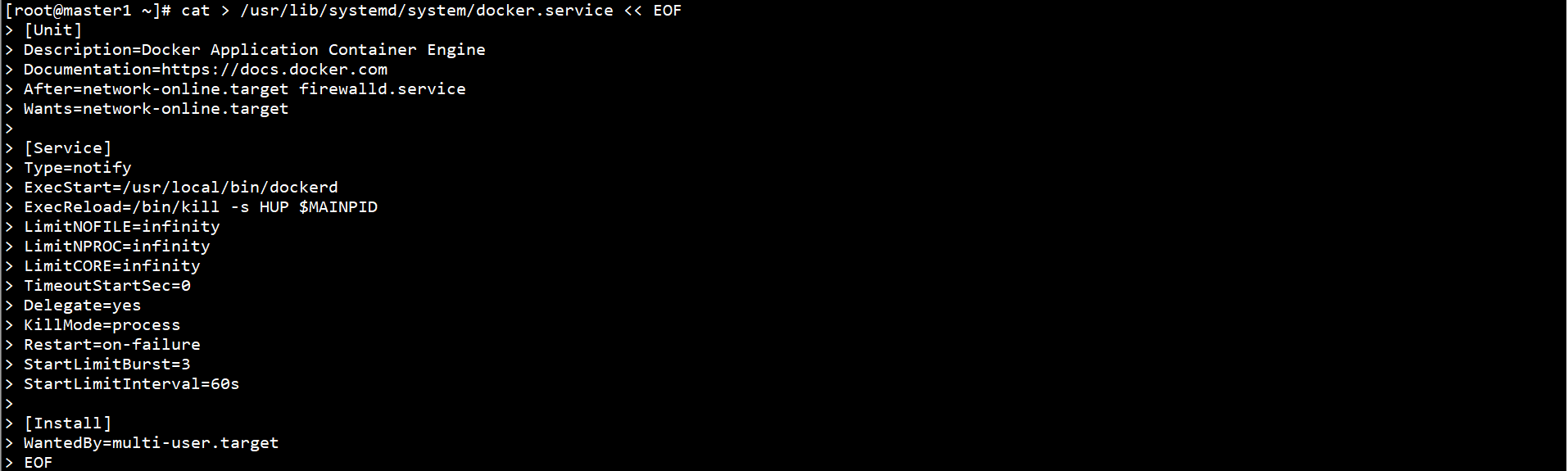

Create a systemd unit for Docker. $MAINPID is escaped as \$MAINPID so that the shell writes it literally and systemd expands it on reload:

cat > /usr/lib/systemd/system/docker.service << EOF
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/local/bin/dockerd
ExecReload=/bin/kill -s HUP \$MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
EOF

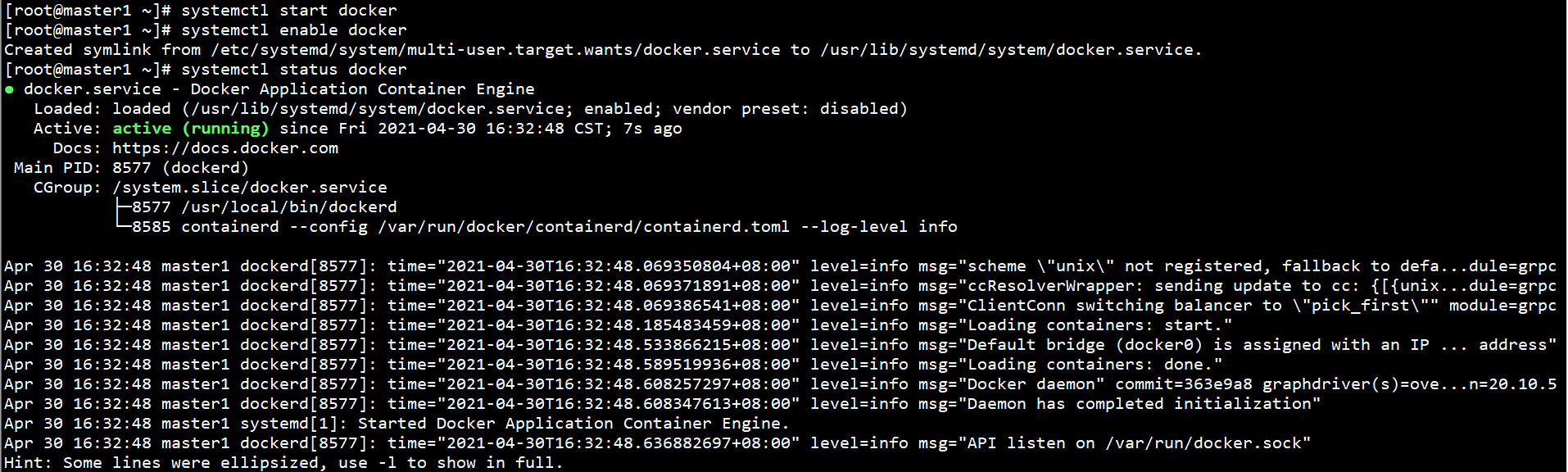

Start Docker and enable it at boot:

systemctl start docker
systemctl enable docker
systemctl status docker

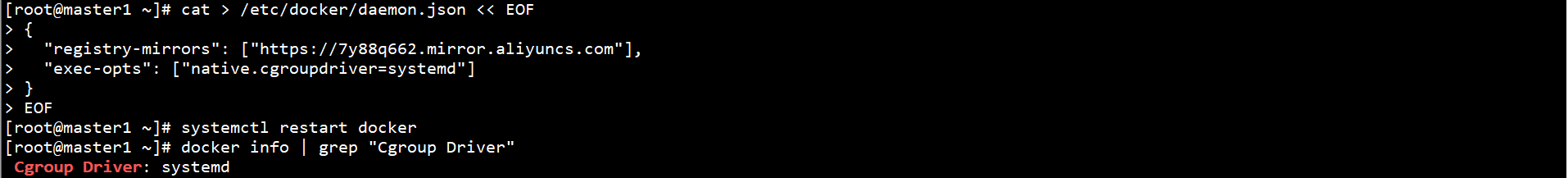

Configure the Docker registry mirror and cgroup driver:

mkdir -p /etc/docker
cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": ["https://7y88q662.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

systemctl restart docker
docker info | grep "Cgroup Driver"
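kubelet is configured later with cgroupDriver: systemd, so Docker must report the same driver. The sketch below fails when the two differ. cgroup_driver and require_systemd_driver are hypothetical names, and the parser assumes the "Cgroup Driver: ..." line format printed by docker info.

```shell
#!/usr/bin/env bash
# Print the value of the "Cgroup Driver" line from `docker info` output on stdin.
cgroup_driver() {
  awk -F': ' '/^[[:space:]]*Cgroup Driver:/ { print $2; exit }'
}

# Fail loudly if Docker's cgroup driver is not systemd (the kubelet setting used here).
require_systemd_driver() {
  local driver
  driver=$(cgroup_driver)
  if [ "$driver" != "systemd" ]; then
    echo "Docker cgroup driver is '${driver:-unknown}', expected systemd" >&2
    return 1
  fi
}
```

Example: docker info 2>/dev/null | require_systemd_driver. Alternatively, docker info --format '{{.CgroupDriver}}' prints the driver name directly.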

4. Deploy Master node

Download Kubernetes binaries on all Master nodes:

Reference address: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.20.md

Download address: https://dl.k8s.io/v1.20.5/kubernetes-server-linux-amd64.tar.gz

Unzip Kubernetes binaries to the system directory:

tar -xf /root/kubernetes-server-linux-amd64.tar.gz -C /root/

cp /root/kubernetes/server/bin/{kubectl,kube-apiserver,kube-scheduler,kube-controller-manager} /usr/local/bin/

Create configuration directory and certificate directory on all nodes:

mkdir -p /etc/kubernetes/ssl/

Create log directory on all Master nodes:

mkdir /var/log/kubernetes/

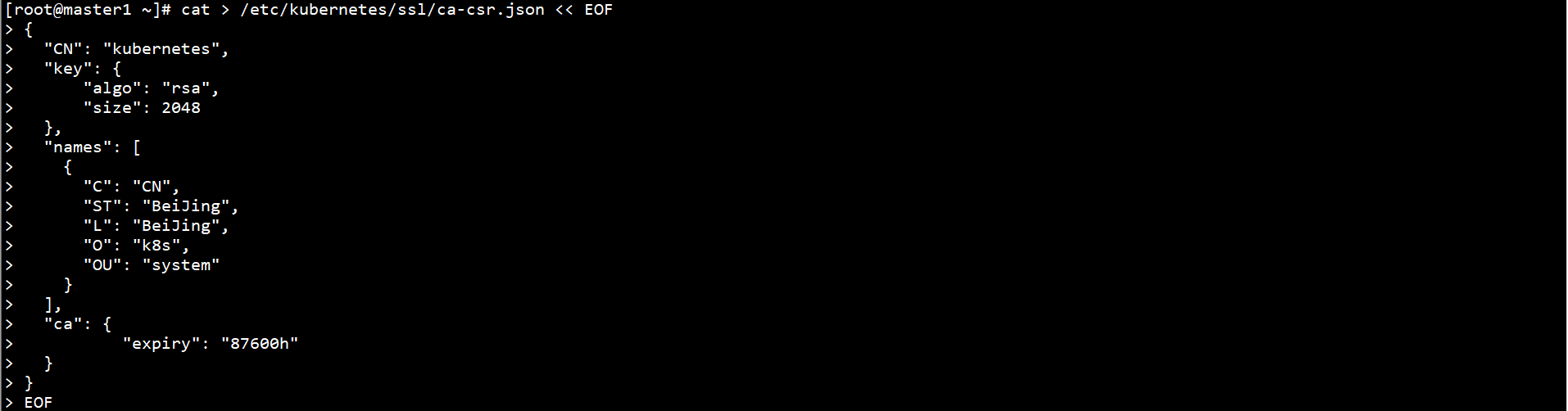

Create the CA CSR request file on the Master1 node:

cat > /etc/kubernetes/ssl/ca-csr.json << EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "system"
    }
  ],
  "ca": {
    "expiry": "87600h"
  }
}
EOF

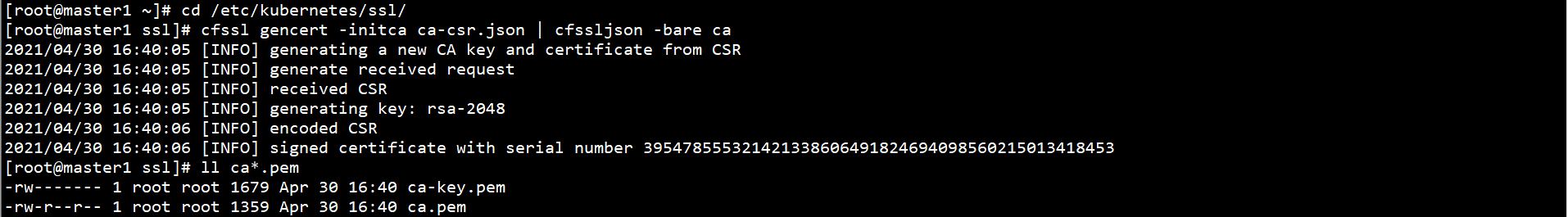

Generate CA certificate on Master1 node:

cd /etc/kubernetes/ssl/
cfssl gencert -initca ca-csr.json | cfssljson -bare ca
ll ca*.pem

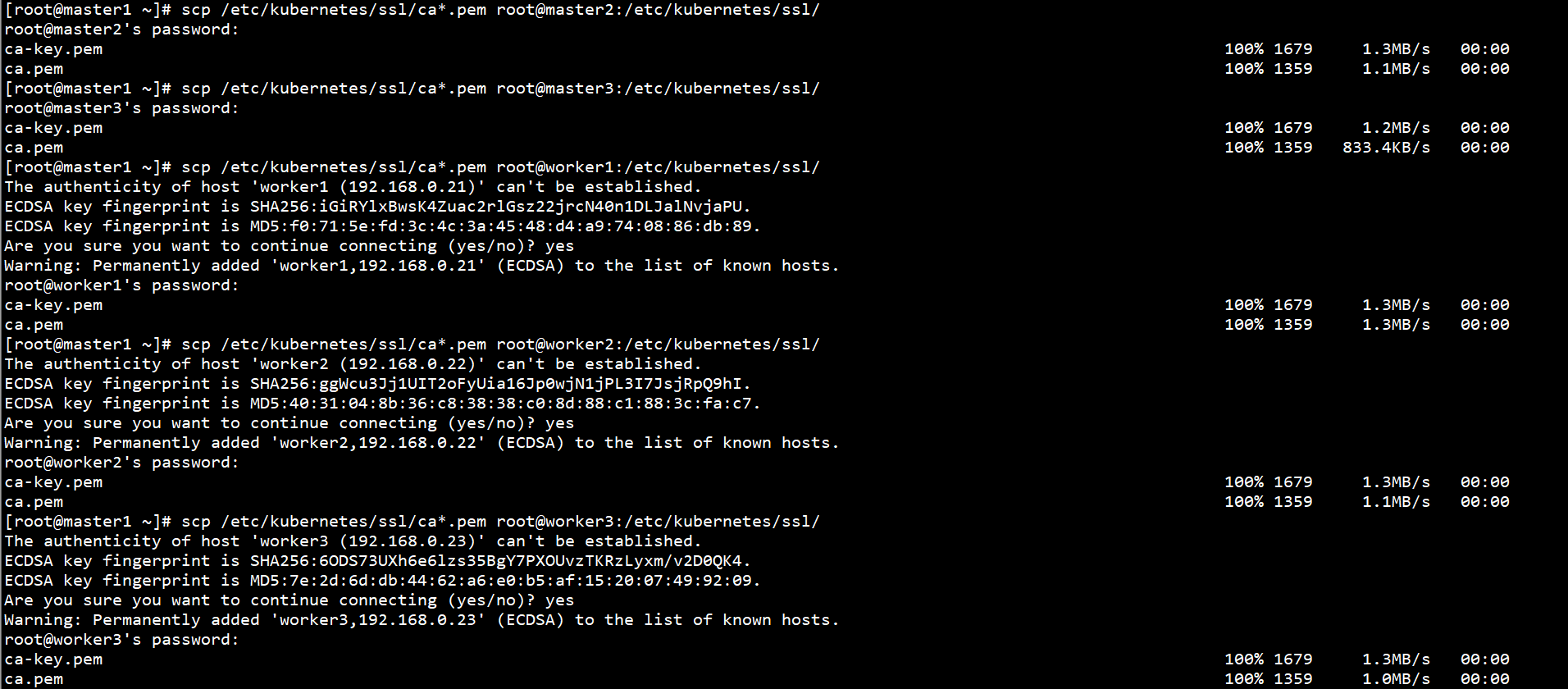

Copy the CA certificate from the Master1 node to the other Master nodes and the Worker nodes:

scp /etc/kubernetes/ssl/ca*.pem root@master2:/etc/kubernetes/ssl/
scp /etc/kubernetes/ssl/ca*.pem root@master3:/etc/kubernetes/ssl/
scp /etc/kubernetes/ssl/ca*.pem root@worker1:/etc/kubernetes/ssl/
scp /etc/kubernetes/ssl/ca*.pem root@worker2:/etc/kubernetes/ssl/
scp /etc/kubernetes/ssl/ca*.pem root@worker3:/etc/kubernetes/ssl/

Deploy kube-apiserver on the Master nodes:

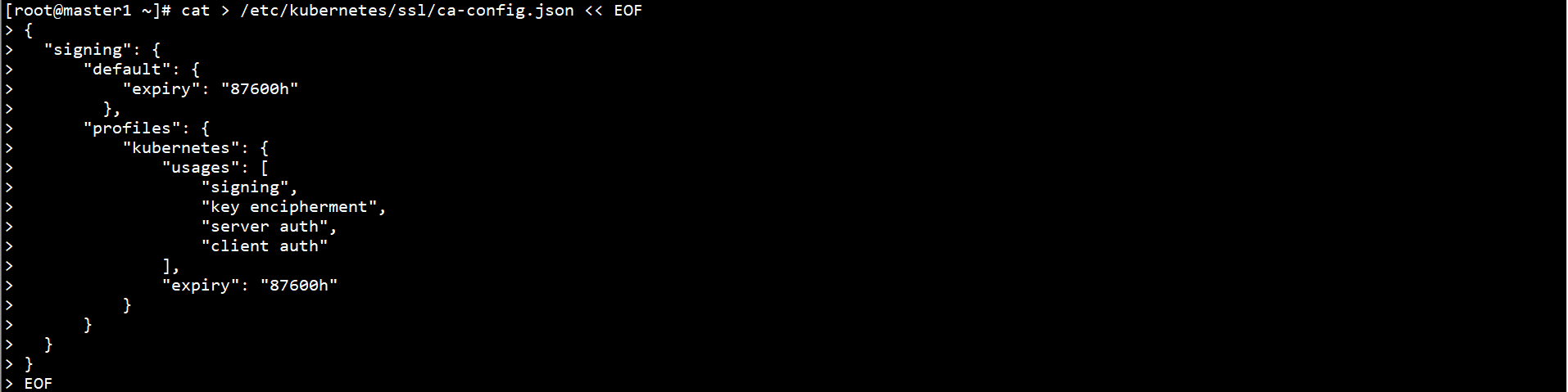

Create the certificate signing policy on the Master1 node:

cat > /etc/kubernetes/ssl/ca-config.json << EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "87600h"
      }
    }
  }
}
EOF

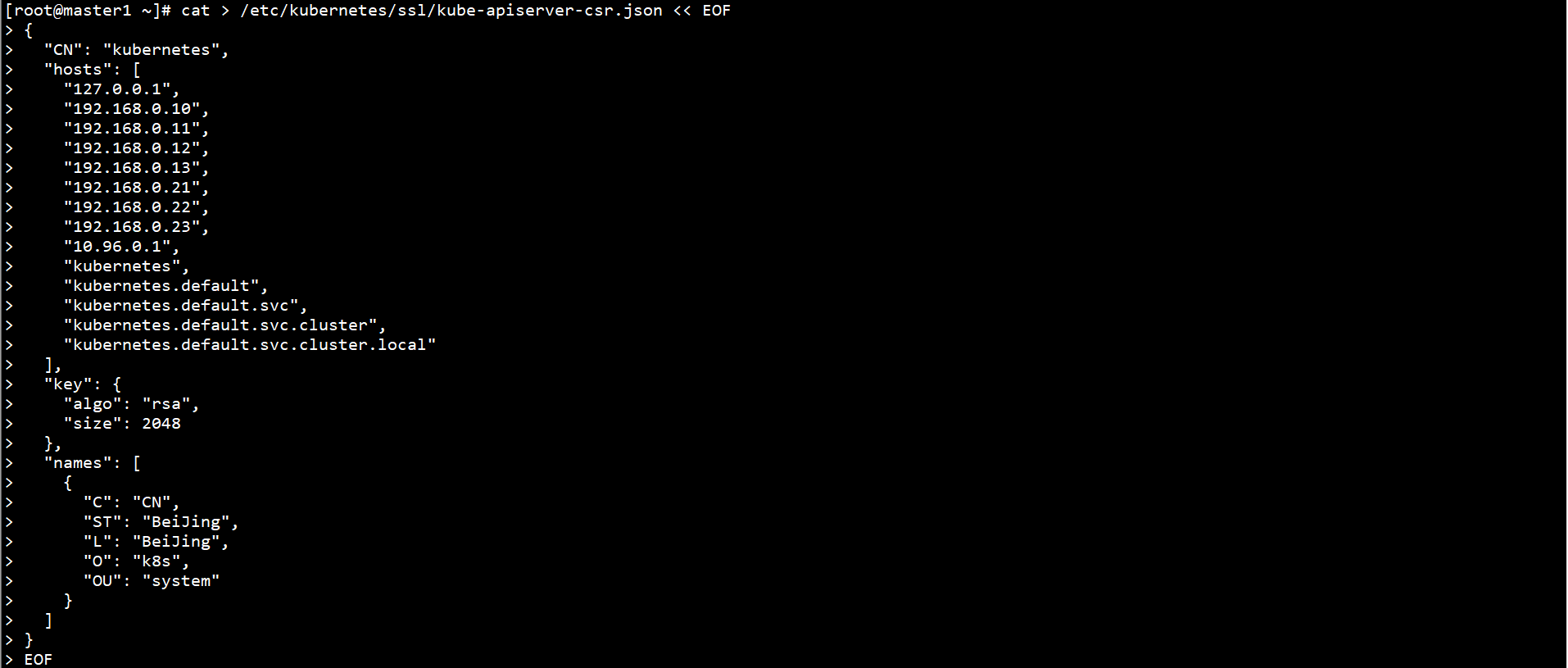

Create the kube-apiserver CSR request file on the Master1 node:

cat > /etc/kubernetes/ssl/kube-apiserver-csr.json << EOF
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "192.168.0.10",
    "192.168.0.11",
    "192.168.0.12",
    "192.168.0.13",
    "192.168.0.21",
    "192.168.0.22",
    "192.168.0.23",
    "10.96.0.1",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "system"
    }
  ]
}
EOF

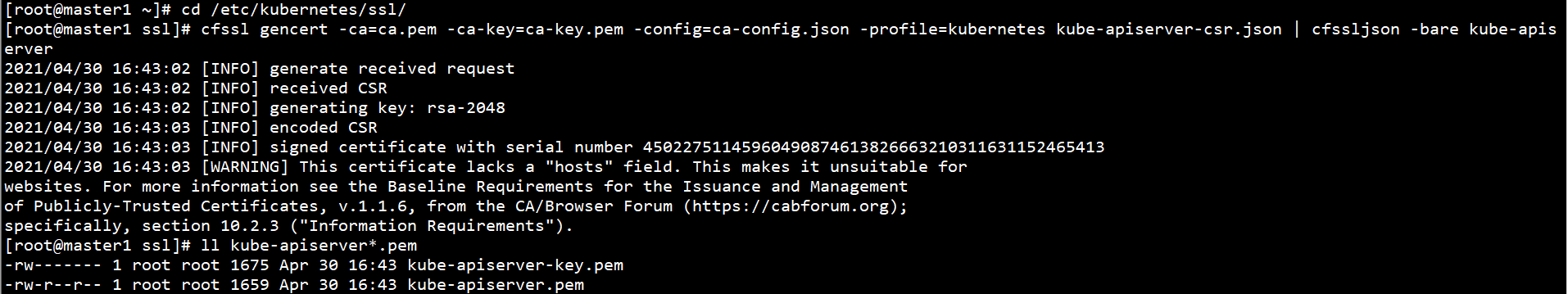

Generate the kube-apiserver certificate on the Master1 node:

cd /etc/kubernetes/ssl/
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-apiserver-csr.json | cfssljson -bare kube-apiserver
ll kube-apiserver*.pem

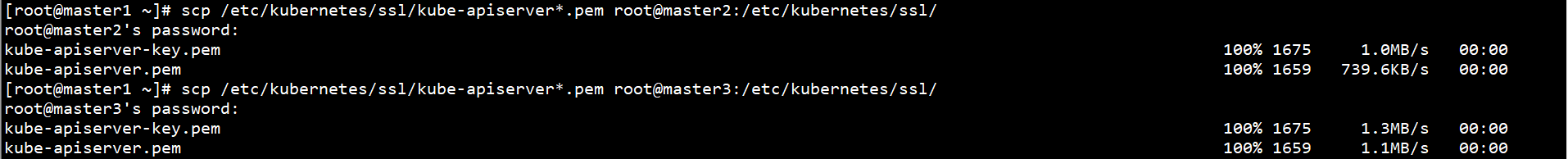

Copy the kube-apiserver certificate from the Master1 node to the other Master nodes:

scp /etc/kubernetes/ssl/kube-apiserver*.pem root@master2:/etc/kubernetes/ssl/
scp /etc/kubernetes/ssl/kube-apiserver*.pem root@master3:/etc/kubernetes/ssl/

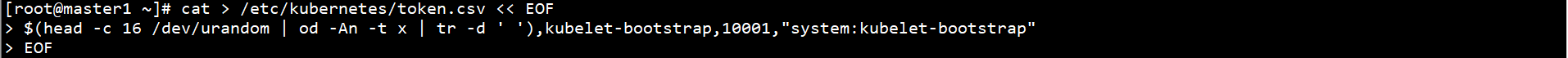

Generate a token on the Master1 node:

cat > /etc/kubernetes/token.csv << EOF
$(head -c 16 /dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF

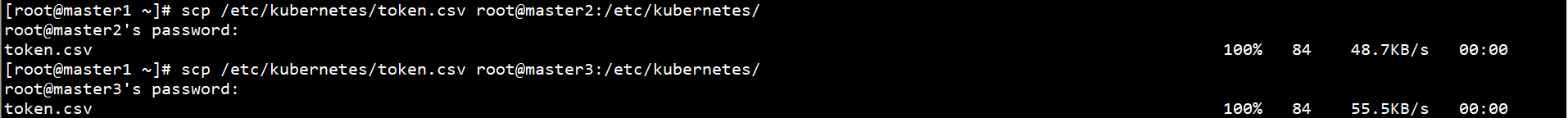
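The token.csv line follows the static token file format read by --token-auth-file: token,user,uid,"group". The sketch below writes the same line as the command above. It renders the 16 random bytes as 32 lowercase hex characters and strips all whitespace. write_token_csv is a hypothetical name.

```shell
#!/usr/bin/env bash
# Write a one-line static token file: token,user,uid,"group".
write_token_csv() {
  local target="${1:-/etc/kubernetes/token.csv}" token
  # 16 random bytes -> 32 hex characters (one byte per od field, spaces and newlines removed)
  token=$(head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n')
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token" > "$target"
}
```

Run it once on Master1 only. The other Master nodes must receive the same file via scp, because every kube-apiserver has to accept the same bootstrap token.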

Copy the token file from the Master1 node to the other Master nodes:

scp /etc/kubernetes/token.csv root@master2:/etc/kubernetes/
scp /etc/kubernetes/token.csv root@master3:/etc/kubernetes/

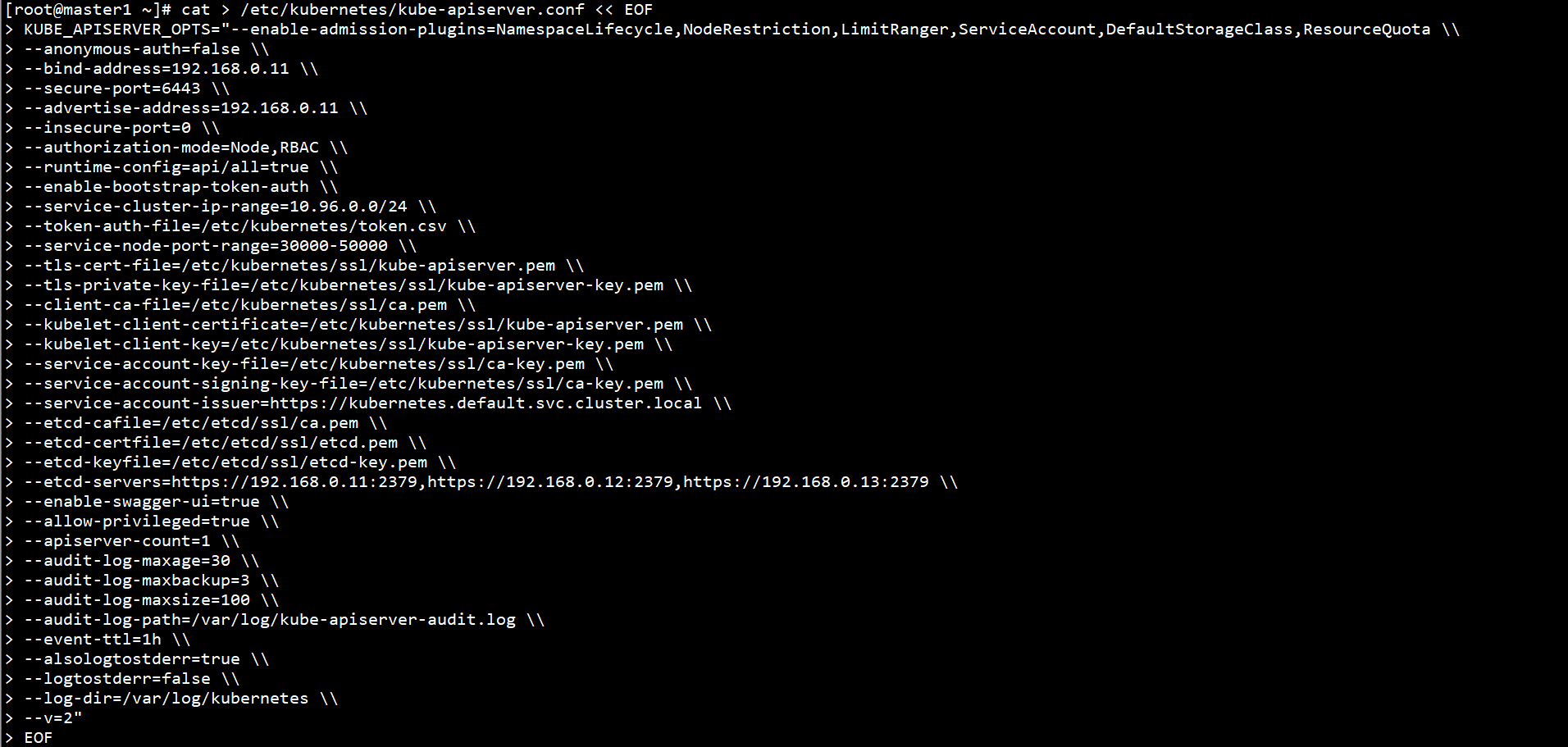

Create the kube-apiserver configuration file on all Master nodes. The values below are for Master1. On Master2 and Master3, replace 192.168.0.11 in --bind-address and --advertise-address with that node's own IP address (the --etcd-servers list stays the same):

cat > /etc/kubernetes/kube-apiserver.conf << EOF
KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \\
  --anonymous-auth=false \\
  --bind-address=192.168.0.11 \\
  --secure-port=6443 \\
  --advertise-address=192.168.0.11 \\
  --insecure-port=0 \\
  --authorization-mode=Node,RBAC \\
  --runtime-config=api/all=true \\
  --enable-bootstrap-token-auth \\
  --service-cluster-ip-range=10.96.0.0/24 \\
  --token-auth-file=/etc/kubernetes/token.csv \\
  --service-node-port-range=30000-50000 \\
  --tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \\
  --tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \\
  --client-ca-file=/etc/kubernetes/ssl/ca.pem \\
  --kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \\
  --kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \\
  --service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \\
  --service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \\
  --service-account-issuer=https://kubernetes.default.svc.cluster.local \\
  --etcd-cafile=/etc/etcd/ssl/ca.pem \\
  --etcd-certfile=/etc/etcd/ssl/etcd.pem \\
  --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \\
  --etcd-servers=https://192.168.0.11:2379,https://192.168.0.12:2379,https://192.168.0.13:2379 \\
  --enable-swagger-ui=true \\
  --allow-privileged=true \\
  --apiserver-count=1 \\
  --audit-log-maxage=30 \\
  --audit-log-maxbackup=3 \\
  --audit-log-maxsize=100 \\
  --audit-log-path=/var/log/kube-apiserver-audit.log \\
  --event-ttl=1h \\
  --alsologtostderr=true \\
  --logtostderr=false \\
  --log-dir=/var/log/kubernetes \\
  --v=2"
EOF

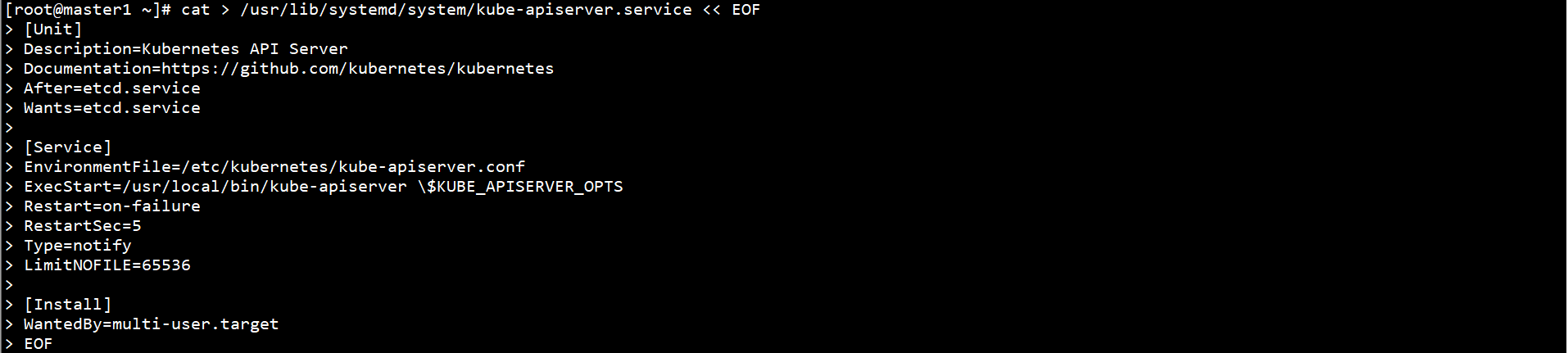
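--bind-address and --advertise-address must match each Master's own address. To avoid editing them by hand, you can use a sed substitution that leaves the rest of the file, including the --etcd-servers list, unchanged. This is a sketch: set_apiserver_address is a hypothetical helper, and GNU sed is assumed for -i and -E.

```shell
#!/usr/bin/env bash
# Point --bind-address and --advertise-address in kube-apiserver.conf at IP.
# Usage: set_apiserver_address IP [CONF_FILE]
set_apiserver_address() {
  local ip="$1" conf="${2:-/etc/kubernetes/kube-apiserver.conf}"
  # Only the two per-node flags match; --etcd-servers and other URLs are untouched
  sed -i -E "s#--(bind|advertise)-address=[0-9.]+#--\1-address=${ip}#g" "$conf"
}
```

On Master2, for example, run set_apiserver_address 192.168.0.12 after creating the file.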

Create a systemd unit for kube-apiserver on all Master nodes:

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=etcd.service
Wants=etcd.service

[Service]
EnvironmentFile=/etc/kubernetes/kube-apiserver.conf
ExecStart=/usr/local/bin/kube-apiserver \$KUBE_APISERVER_OPTS
Restart=on-failure
RestartSec=5
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

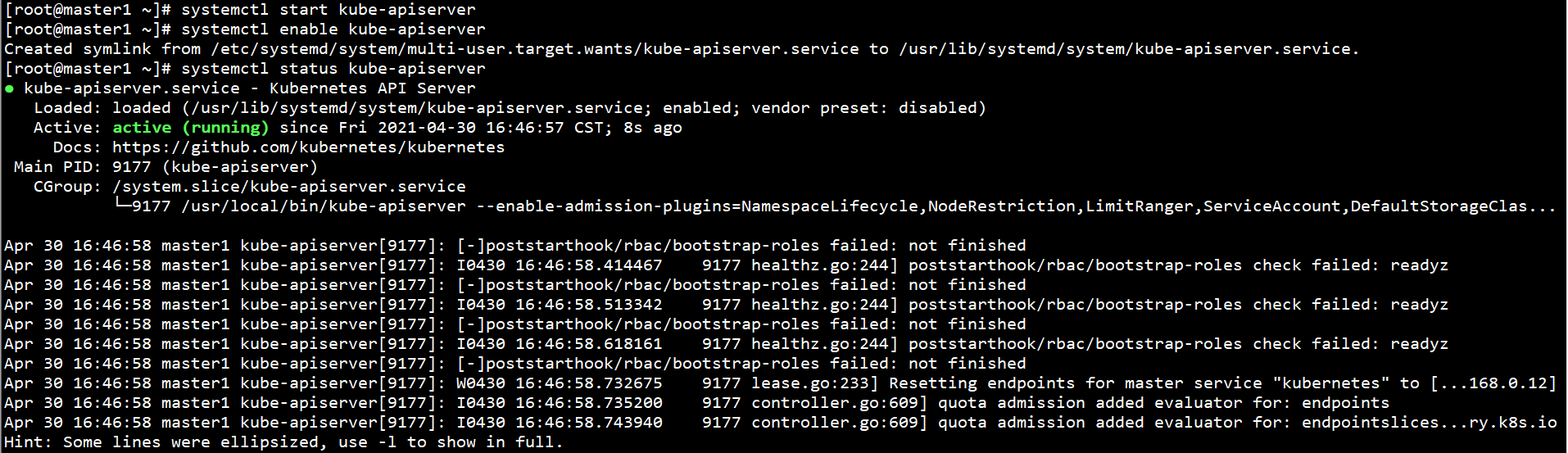

Start kube-apiserver on all Master nodes and enable it at boot:

systemctl start kube-apiserver
systemctl enable kube-apiserver
systemctl status kube-apiserver

Deploy kubectl on the Master nodes:

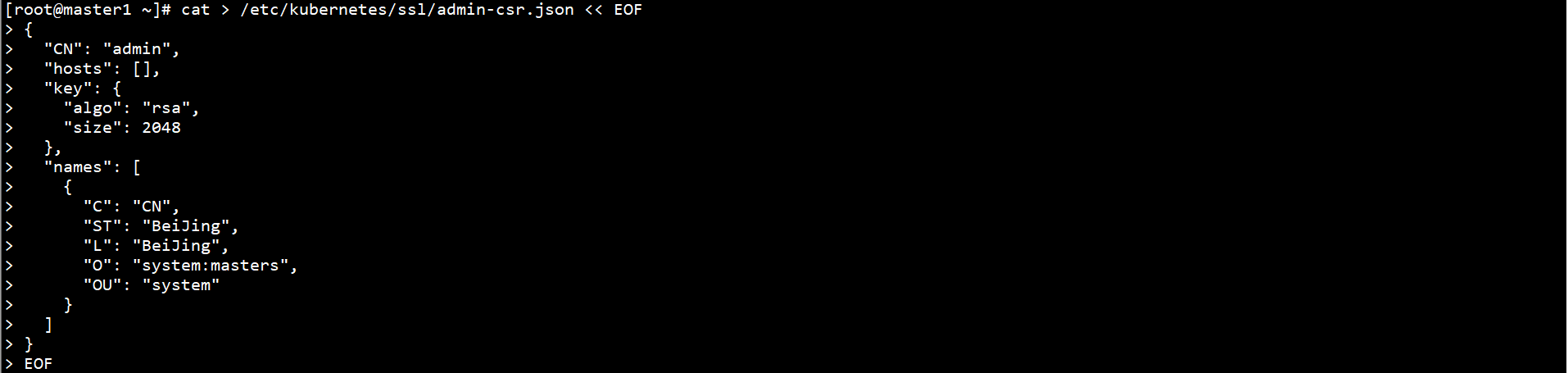

Create the kubectl (admin) CSR request file on the Master1 node:

cat > /etc/kubernetes/ssl/admin-csr.json << EOF
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "system:masters",
      "OU": "system"
    }
  ]
}
EOF

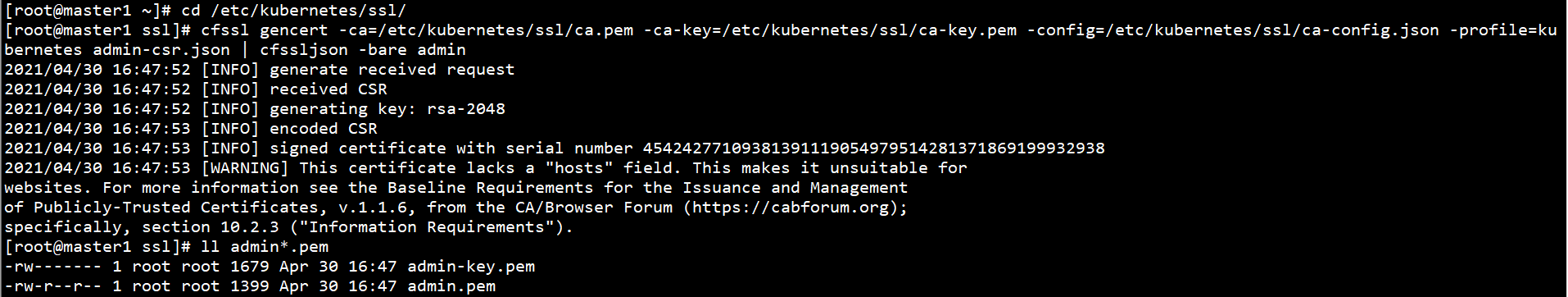

Generate the kubectl certificate on the Master1 node:

cd /etc/kubernetes/ssl/
cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=/etc/kubernetes/ssl/ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin
ll admin*.pem

Generate kube.config on the Master1 node:

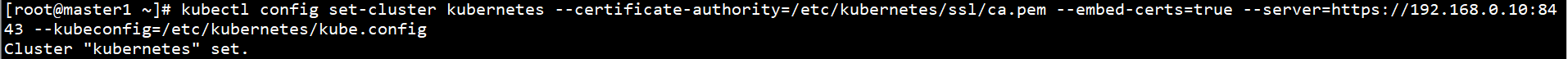

Set cluster parameters:

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.0.10:8443 --kubeconfig=/etc/kubernetes/kube.config

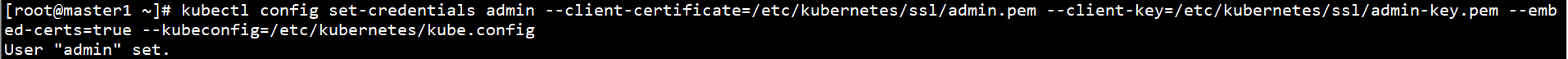

Set client authentication parameters:

kubectl config set-credentials admin --client-certificate=/etc/kubernetes/ssl/admin.pem --client-key=/etc/kubernetes/ssl/admin-key.pem --embed-certs=true --kubeconfig=/etc/kubernetes/kube.config

Set context parameters:

kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=/etc/kubernetes/kube.config

Set default context:

kubectl config use-context kubernetes --kubeconfig=/etc/kubernetes/kube.config
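The same four kubectl config steps (set-cluster, set-credentials, set-context, use-context) are repeated for every kubeconfig in this article. The sketch below wraps them in one function. make_kubeconfig is hypothetical, and the server address is the HAProxy VIP and port used here. Setting KUBECTL=echo prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Build a certificate-based kubeconfig in four kubectl config steps.
# Usage: make_kubeconfig OUT_FILE USER CERT KEY [CONTEXT]   (CONTEXT defaults to USER)
make_kubeconfig() {
  local out="$1" user="$2" cert="$3" key="$4" context="${5:-$2}"
  local server="https://192.168.0.10:8443"   # HAProxy VIP and frontend port from this article
  local k="${KUBECTL:-kubectl}"
  $k config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem \
    --embed-certs=true --server="$server" --kubeconfig="$out"
  $k config set-credentials "$user" --client-certificate="$cert" --client-key="$key" \
    --embed-certs=true --kubeconfig="$out"
  $k config set-context "$context" --cluster=kubernetes --user="$user" --kubeconfig="$out"
  $k config use-context "$context" --kubeconfig="$out"
}
```

make_kubeconfig /etc/kubernetes/kube.config admin /etc/kubernetes/ssl/admin.pem /etc/kubernetes/ssl/admin-key.pem kubernetes reproduces the steps above. For kube-controller-manager or kube-scheduler, pass the matching user and certificate paths and omit the context. It then defaults to the user name, as in those sections.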

Create the ~/.kube directory on all Master nodes:

mkdir ~/.kube

Copy kube.config to the default kubeconfig location:

cp /etc/kubernetes/kube.config ~/.kube/config

Authorize the kubernetes user (the CN of the kube-apiserver certificate) to access the kubelet API:

kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes

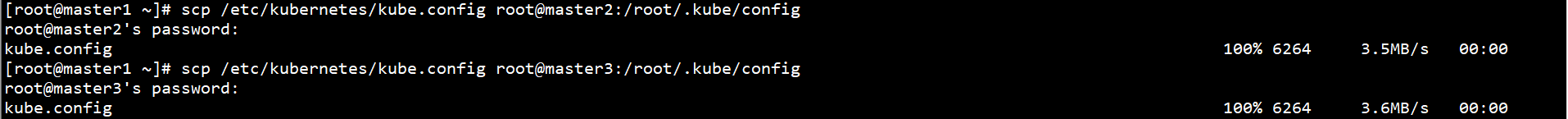

Copy kube.config from the Master1 node to the other Master nodes:

scp /etc/kubernetes/kube.config root@master2:/root/.kube/config
scp /etc/kubernetes/kube.config root@master3:/root/.kube/config

At this point, you can manage the cluster through kubectl.

Deploy kube-controller-manager on the Master nodes:

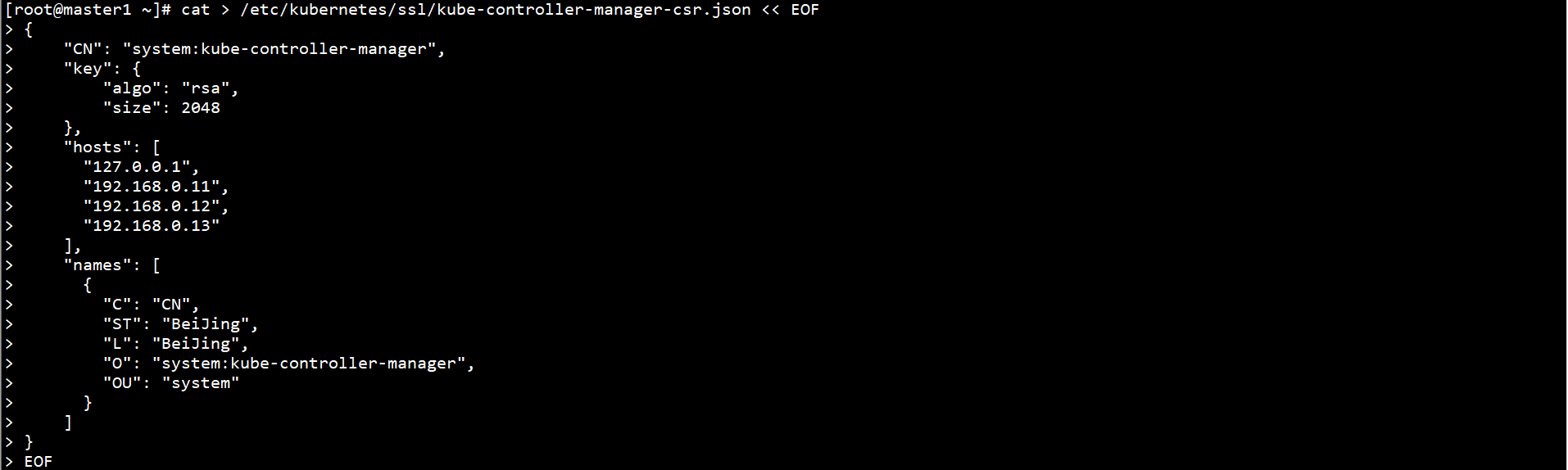

Create the kube-controller-manager CSR request file on the Master1 node:

cat > /etc/kubernetes/ssl/kube-controller-manager-csr.json << EOF
{
  "CN": "system:kube-controller-manager",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "hosts": [
    "127.0.0.1",
    "192.168.0.11",
    "192.168.0.12",
    "192.168.0.13"
  ],
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "system:kube-controller-manager",
      "OU": "system"
    }
  ]
}
EOF

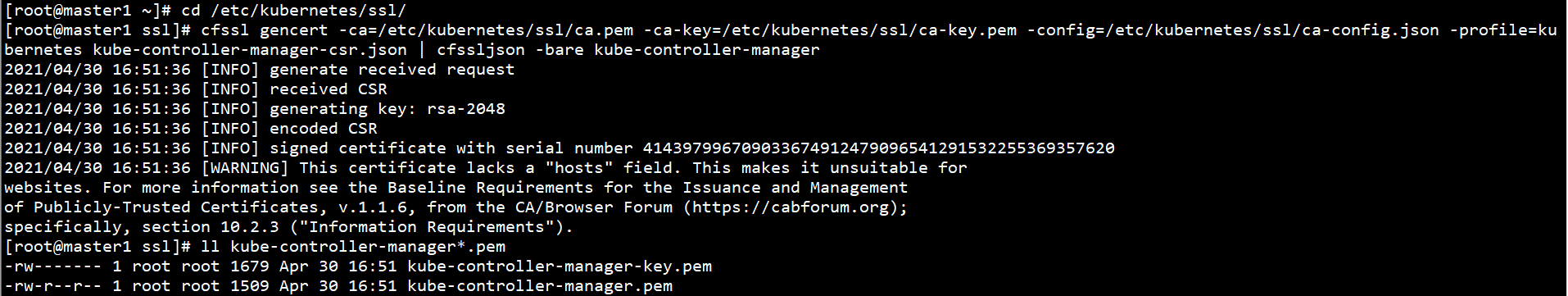

Generate the kube-controller-manager certificate on the Master1 node:

cd /etc/kubernetes/ssl/
cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=/etc/kubernetes/ssl/ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager
ll kube-controller-manager*.pem

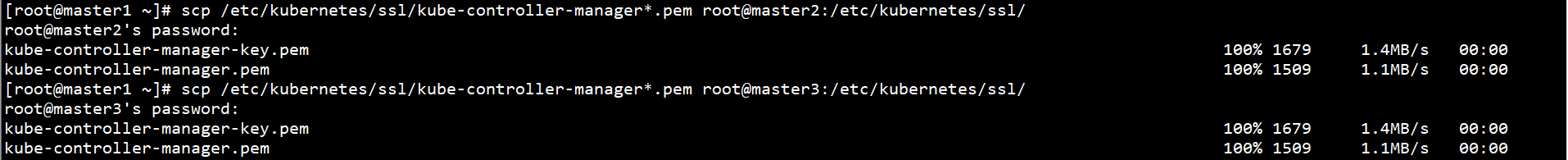

Copy the kube-controller-manager certificate from the Master1 node to the other Master nodes:

scp /etc/kubernetes/ssl/kube-controller-manager*.pem root@master2:/etc/kubernetes/ssl/
scp /etc/kubernetes/ssl/kube-controller-manager*.pem root@master3:/etc/kubernetes/ssl/

Generate the kube-controller-manager kubeconfig on the Master1 node:

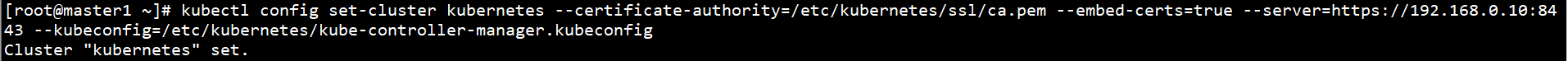

Set cluster parameters:

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.0.10:8443 --kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig

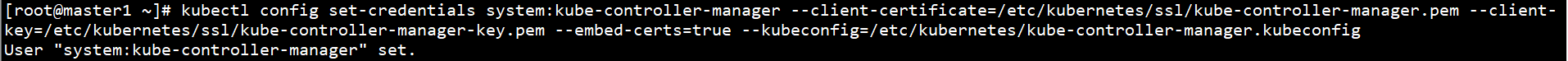

Set client authentication parameters:

kubectl config set-credentials system:kube-controller-manager --client-certificate=/etc/kubernetes/ssl/kube-controller-manager.pem --client-key=/etc/kubernetes/ssl/kube-controller-manager-key.pem --embed-certs=true --kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig

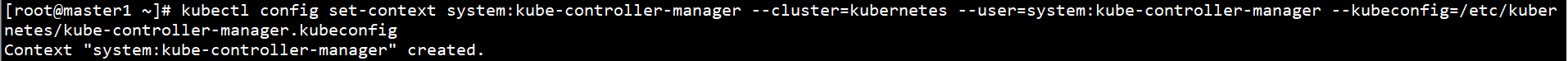

Set context parameters:

kubectl config set-context system:kube-controller-manager --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig

Set default context:

kubectl config use-context system:kube-controller-manager --kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig

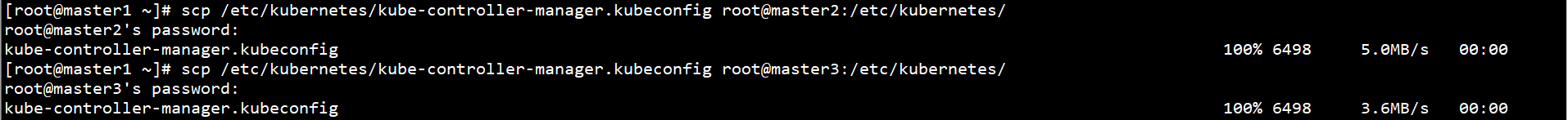

Copy the kube-controller-manager kubeconfig from the Master1 node to the other Master nodes:

scp /etc/kubernetes/kube-controller-manager.kubeconfig root@master2:/etc/kubernetes/
scp /etc/kubernetes/kube-controller-manager.kubeconfig root@master3:/etc/kubernetes/

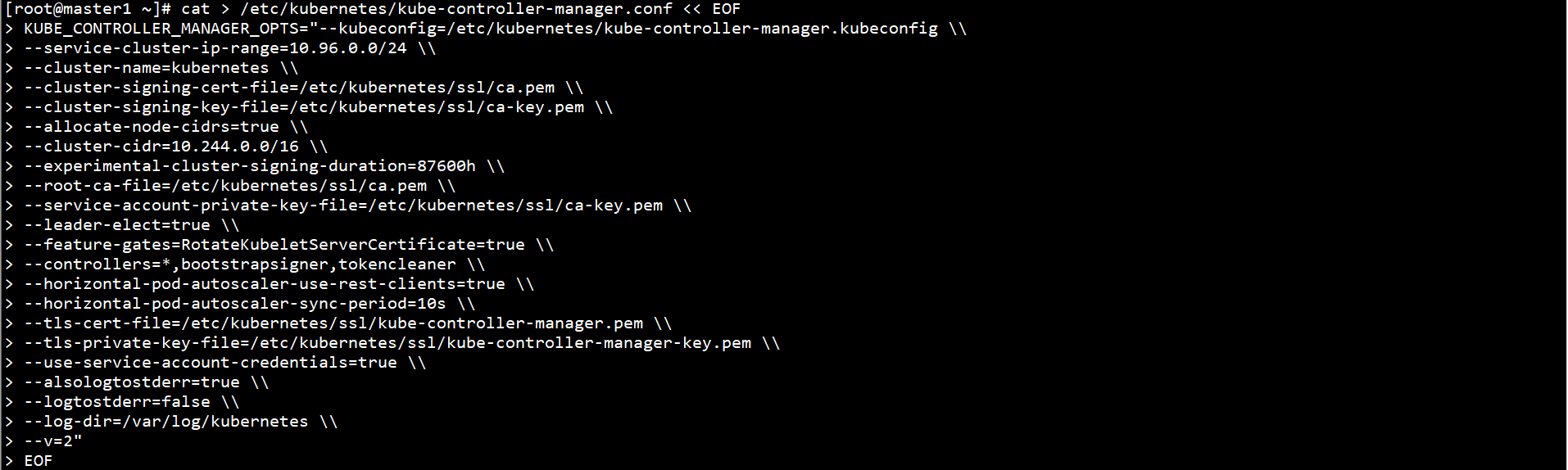

Create the kube-controller-manager configuration file on all Master nodes:

cat > /etc/kubernetes/kube-controller-manager.conf << EOF
KUBE_CONTROLLER_MANAGER_OPTS="--kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\
  --service-cluster-ip-range=10.96.0.0/24 \\
  --cluster-name=kubernetes \\
  --cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \\
  --cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \\
  --allocate-node-cidrs=true \\
  --cluster-cidr=10.244.0.0/16 \\
  --experimental-cluster-signing-duration=87600h \\
  --root-ca-file=/etc/kubernetes/ssl/ca.pem \\
  --service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \\
  --leader-elect=true \\
  --feature-gates=RotateKubeletServerCertificate=true \\
  --controllers=*,bootstrapsigner,tokencleaner \\
  --horizontal-pod-autoscaler-use-rest-clients=true \\
  --horizontal-pod-autoscaler-sync-period=10s \\
  --tls-cert-file=/etc/kubernetes/ssl/kube-controller-manager.pem \\
  --tls-private-key-file=/etc/kubernetes/ssl/kube-controller-manager-key.pem \\
  --use-service-account-credentials=true \\
  --alsologtostderr=true \\
  --logtostderr=false \\
  --log-dir=/var/log/kubernetes \\
  --v=2"
EOF

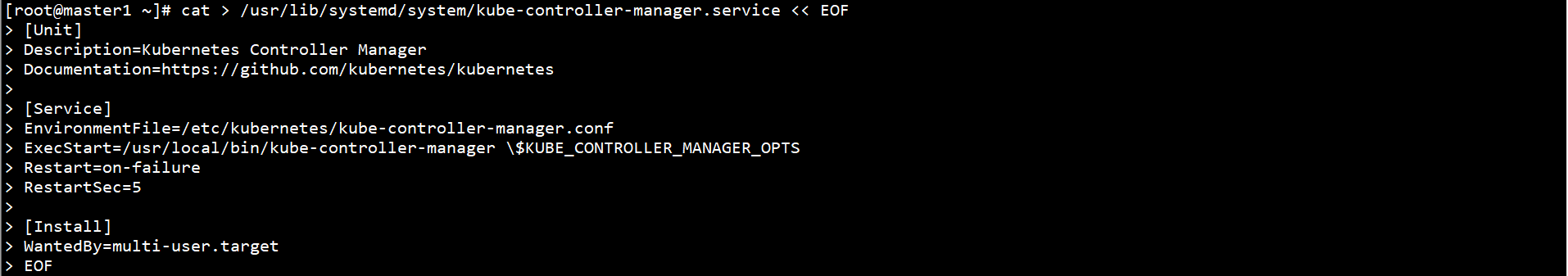

Create a systemd unit for kube-controller-manager on all Master nodes:

cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/etc/kubernetes/kube-controller-manager.conf
ExecStart=/usr/local/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

Start kube-controller-manager on all Master nodes and enable it at boot:

systemctl start kube-controller-manager
systemctl enable kube-controller-manager
systemctl status kube-controller-manager

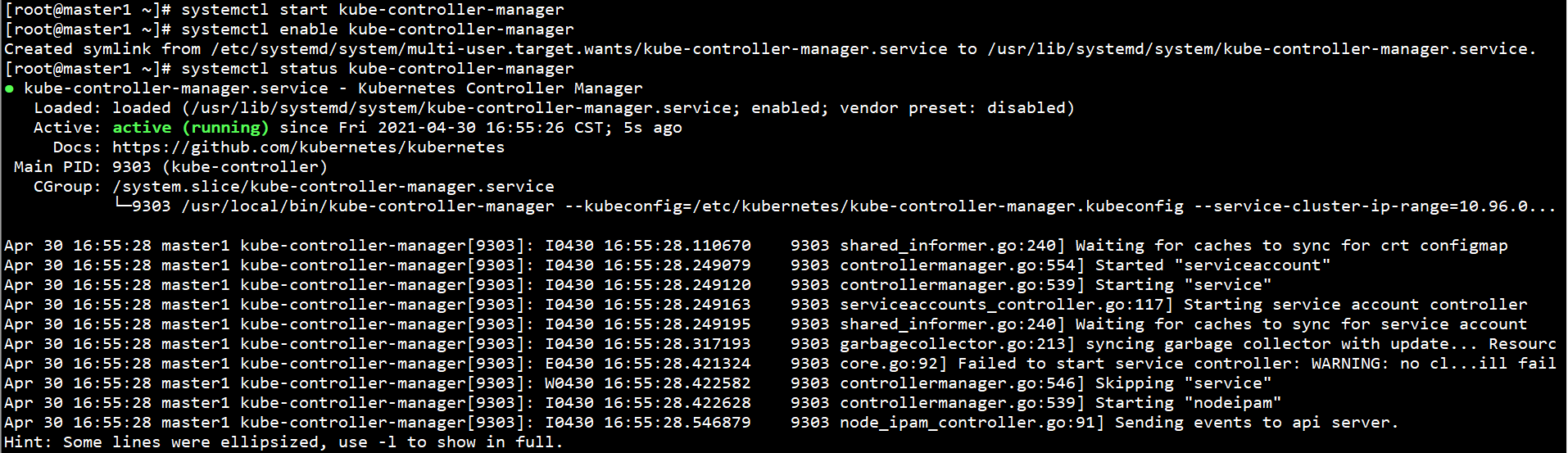

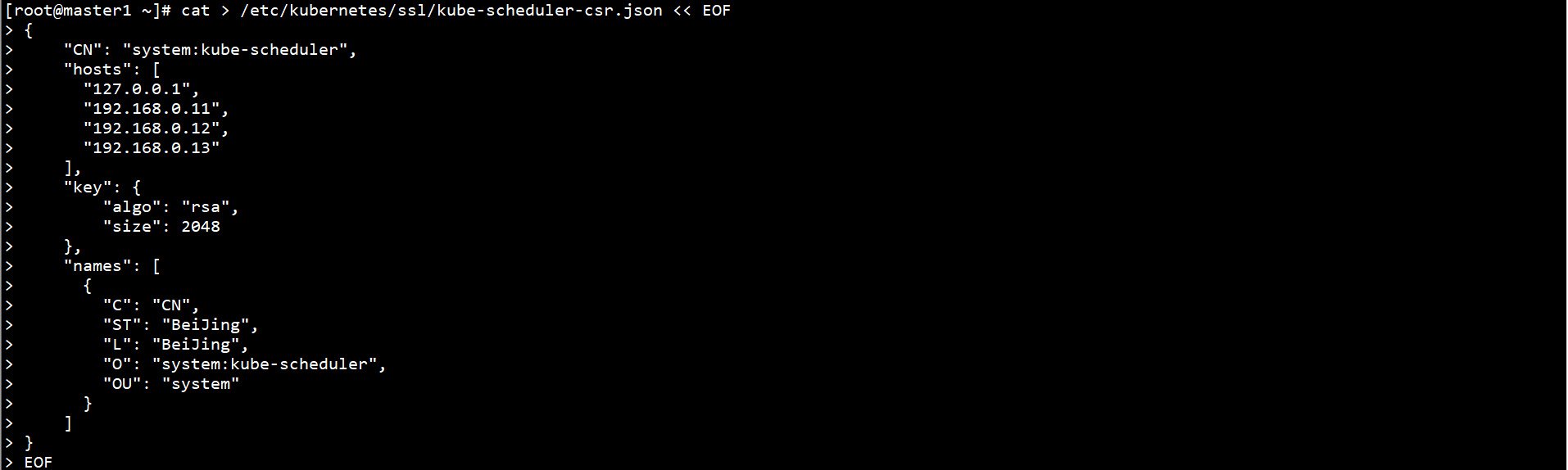

Deploy kube-scheduler on the Master nodes:

Create the kube-scheduler CSR request file on the Master1 node:

cat > /etc/kubernetes/ssl/kube-scheduler-csr.json << EOF
{
  "CN": "system:kube-scheduler",
  "hosts": [
    "127.0.0.1",
    "192.168.0.11",
    "192.168.0.12",
    "192.168.0.13"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "system:kube-scheduler",
      "OU": "system"
    }
  ]
}
EOF

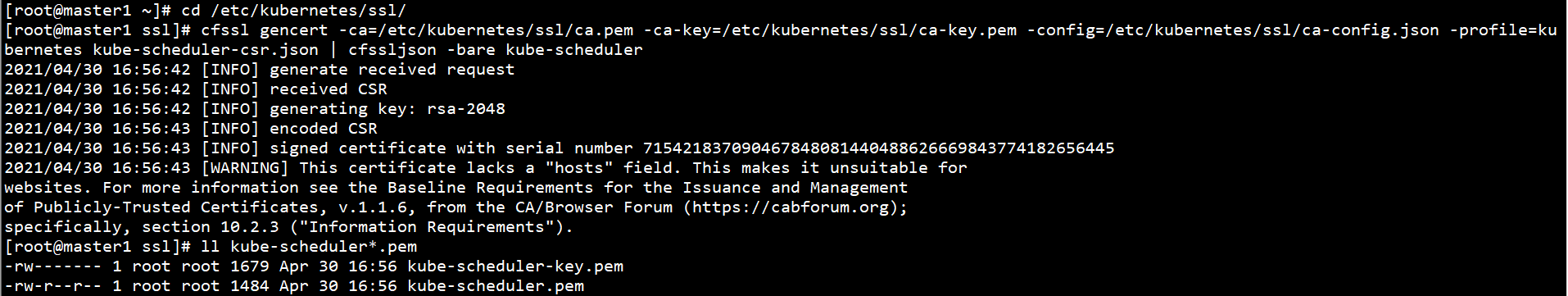

Generate the kube-scheduler certificate on the Master1 node:

cd /etc/kubernetes/ssl/
cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=/etc/kubernetes/ssl/ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler
ll kube-scheduler*.pem

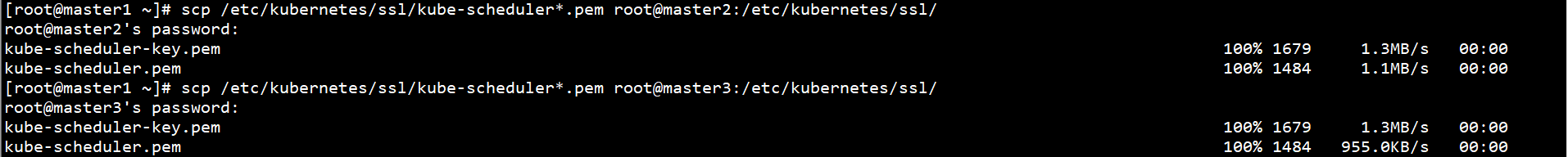

Copy the kube-scheduler certificate from the Master1 node to the other Master nodes:

scp /etc/kubernetes/ssl/kube-scheduler*.pem root@master2:/etc/kubernetes/ssl/
scp /etc/kubernetes/ssl/kube-scheduler*.pem root@master3:/etc/kubernetes/ssl/

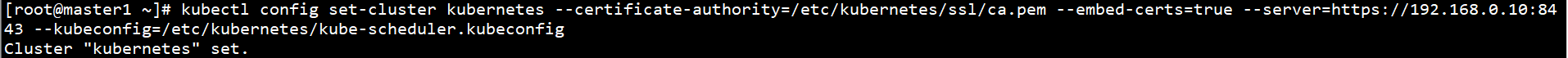

Generate the kube-scheduler kubeconfig on the Master1 node:

Set cluster parameters:

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.0.10:8443 --kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig

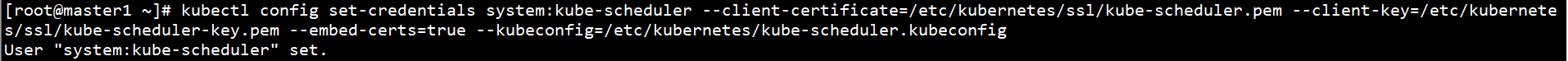

Set client authentication parameters:

kubectl config set-credentials system:kube-scheduler --client-certificate=/etc/kubernetes/ssl/kube-scheduler.pem --client-key=/etc/kubernetes/ssl/kube-scheduler-key.pem --embed-certs=true --kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig

Set context parameters:

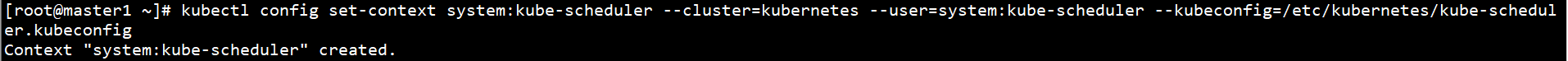

kubectl config set-context system:kube-scheduler --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig

Set default context:

kubectl config use-context system:kube-scheduler --kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig

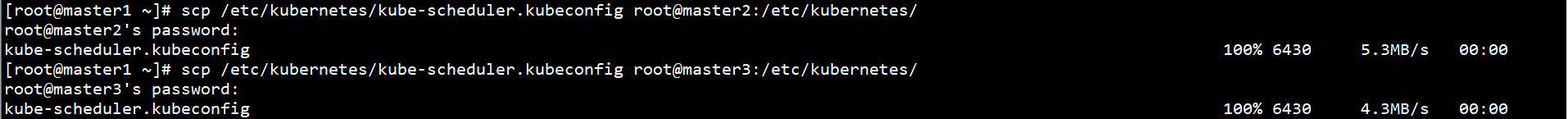

Copy the kube-scheduler kubeconfig from the Master1 node to the other Master nodes:

scp /etc/kubernetes/kube-scheduler.kubeconfig root@master2:/etc/kubernetes/
scp /etc/kubernetes/kube-scheduler.kubeconfig root@master3:/etc/kubernetes/

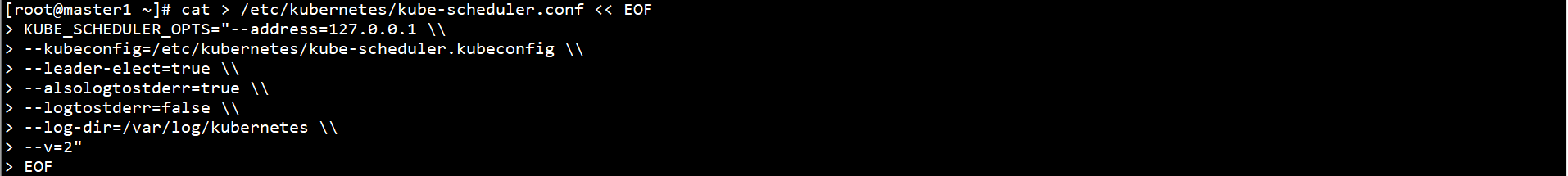

Create the kube-scheduler configuration file on all Master nodes:

cat > /etc/kubernetes/kube-scheduler.conf << EOF
KUBE_SCHEDULER_OPTS="--address=127.0.0.1 \\
  --kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \\
  --leader-elect=true \\
  --alsologtostderr=true \\
  --logtostderr=false \\
  --log-dir=/var/log/kubernetes \\
  --v=2"
EOF

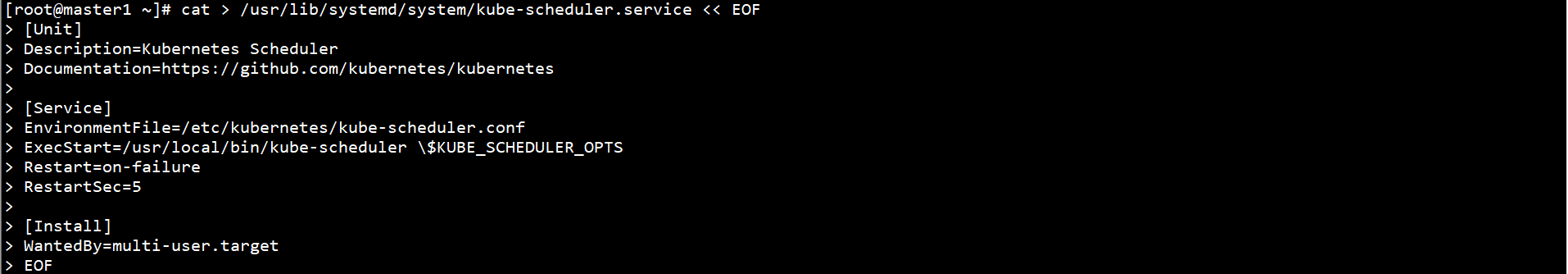

Create a systemd unit for kube-scheduler on all Master nodes:

cat > /usr/lib/systemd/system/kube-scheduler.service << EOF
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/etc/kubernetes/kube-scheduler.conf
ExecStart=/usr/local/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

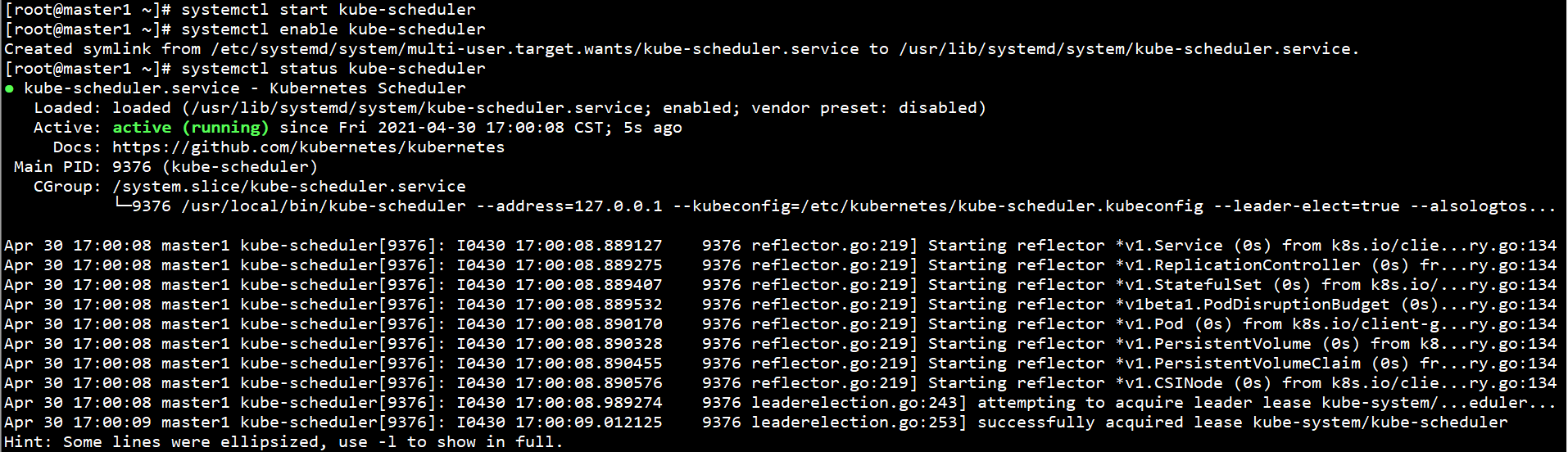

Start kube-scheduler on all Master nodes and enable it at boot:

systemctl start kube-scheduler
systemctl enable kube-scheduler
systemctl status kube-scheduler

5. Deploy the Worker nodes

In this setup, the Master nodes also act as Worker nodes, so kubelet and kube-proxy must be deployed on them as well.

Deploy kubelet on all nodes:

On all Master nodes, copy the kubelet binary to the system directory:

cp /root/kubernetes/server/bin/kubelet /usr/local/bin

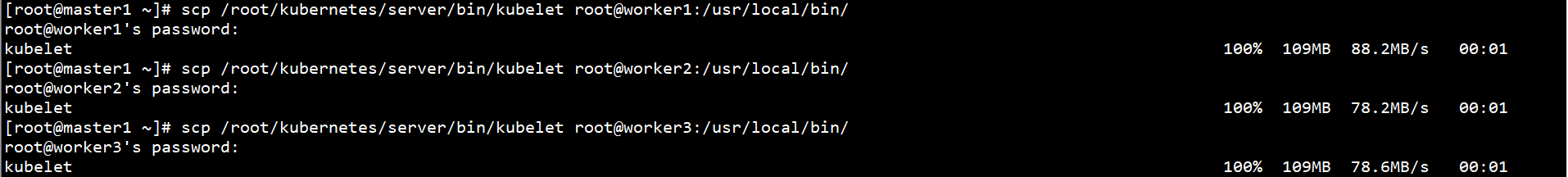

Copy the kubelet binary from the Master1 node to the Worker nodes:

scp /root/kubernetes/server/bin/kubelet root@worker1:/usr/local/bin/
scp /root/kubernetes/server/bin/kubelet root@worker2:/usr/local/bin/
scp /root/kubernetes/server/bin/kubelet root@worker3:/usr/local/bin/

Generate the kubelet-bootstrap kubeconfig on the Master1 node:

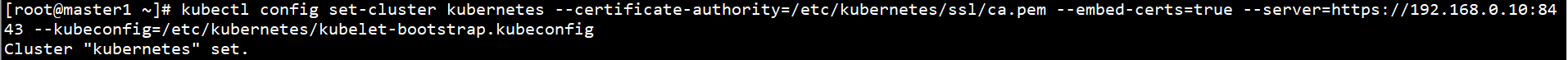

Set cluster parameters:

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.0.10:8443 --kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig

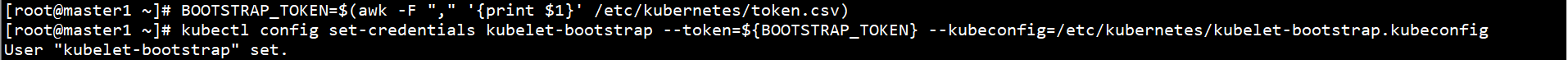

Set client authentication parameters:

BOOTSTRAP_TOKEN=$(awk -F "," '{print $1}' /etc/kubernetes/token.csv)

kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig

Set context parameters:

kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig

Set default context:

kubectl config use-context default --kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig

Create role binding:

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

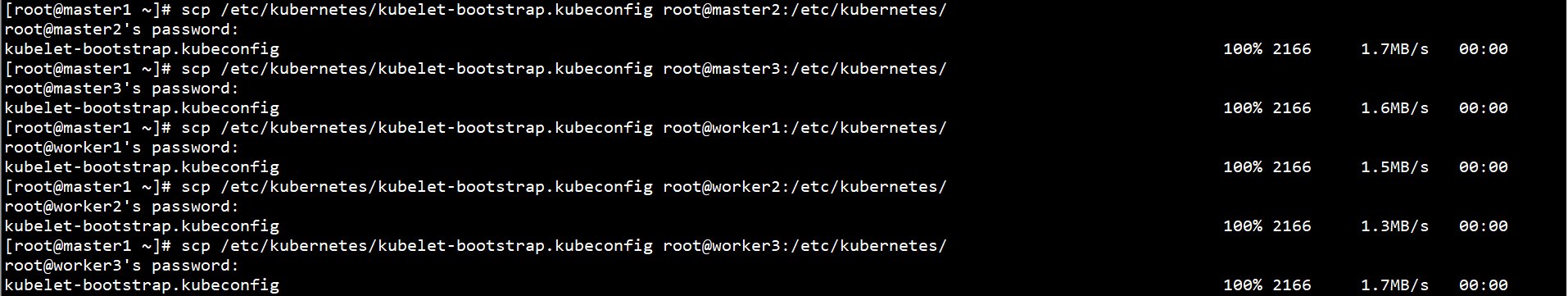

Copy the kubelet-bootstrap kubeconfig from the Master1 node to the other Master nodes and the Worker nodes:

scp /etc/kubernetes/kubelet-bootstrap.kubeconfig root@master2:/etc/kubernetes/
scp /etc/kubernetes/kubelet-bootstrap.kubeconfig root@master3:/etc/kubernetes/
scp /etc/kubernetes/kubelet-bootstrap.kubeconfig root@worker1:/etc/kubernetes/
scp /etc/kubernetes/kubelet-bootstrap.kubeconfig root@worker2:/etc/kubernetes/
scp /etc/kubernetes/kubelet-bootstrap.kubeconfig root@worker3:/etc/kubernetes/

Create log directories on all Worker nodes:

mkdir /var/log/kubernetes/

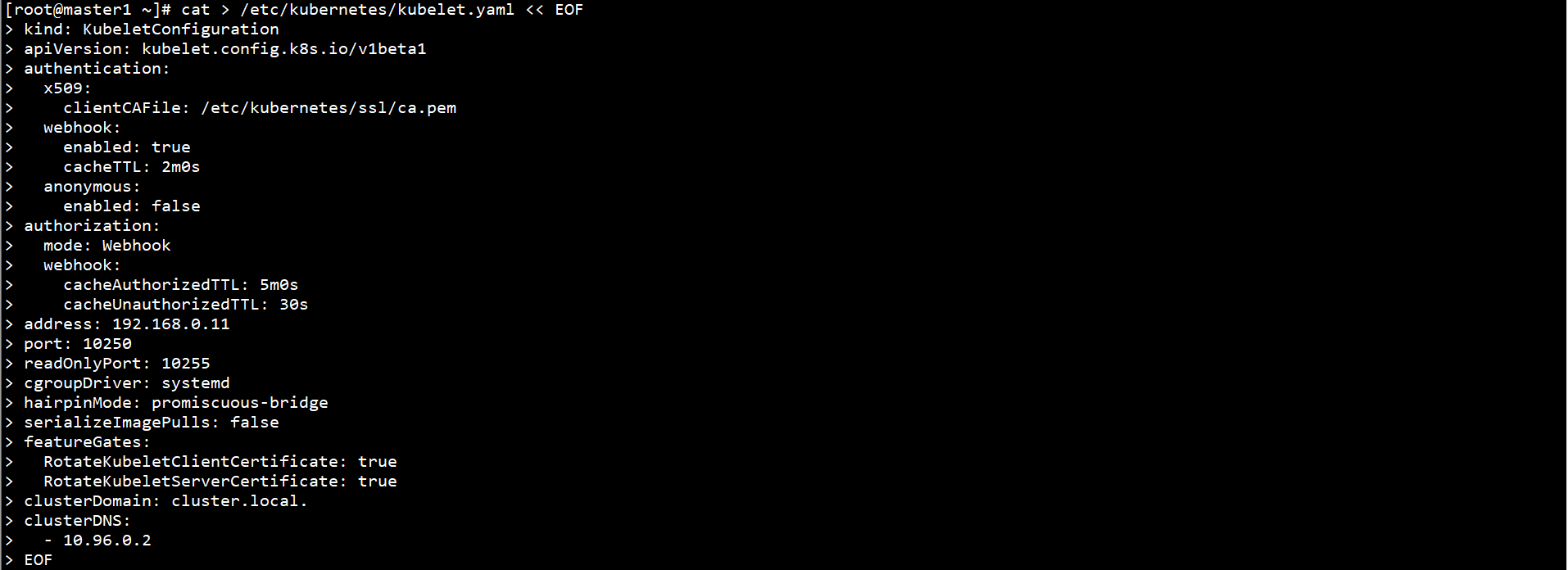

Create the kubelet configuration file on all nodes:

cat > /etc/kubernetes/kubelet.yaml << EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  x509:
    clientCAFile: /etc/kubernetes/ssl/ca.pem
  webhook:
    enabled: true
    cacheTTL: 2m0s
  anonymous:
    enabled: false
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
address: 192.168.0.11
port: 10250
readOnlyPort: 10255
cgroupDriver: systemd
hairpinMode: promiscuous-bridge
serializeImagePulls: false
featureGates:
  RotateKubeletClientCertificate: true
  RotateKubeletServerCertificate: true
clusterDomain: cluster.local.
clusterDNS:
  - 10.96.0.2
EOF

The address value above is for Master1. On every other node, change it to that node's own IP address.

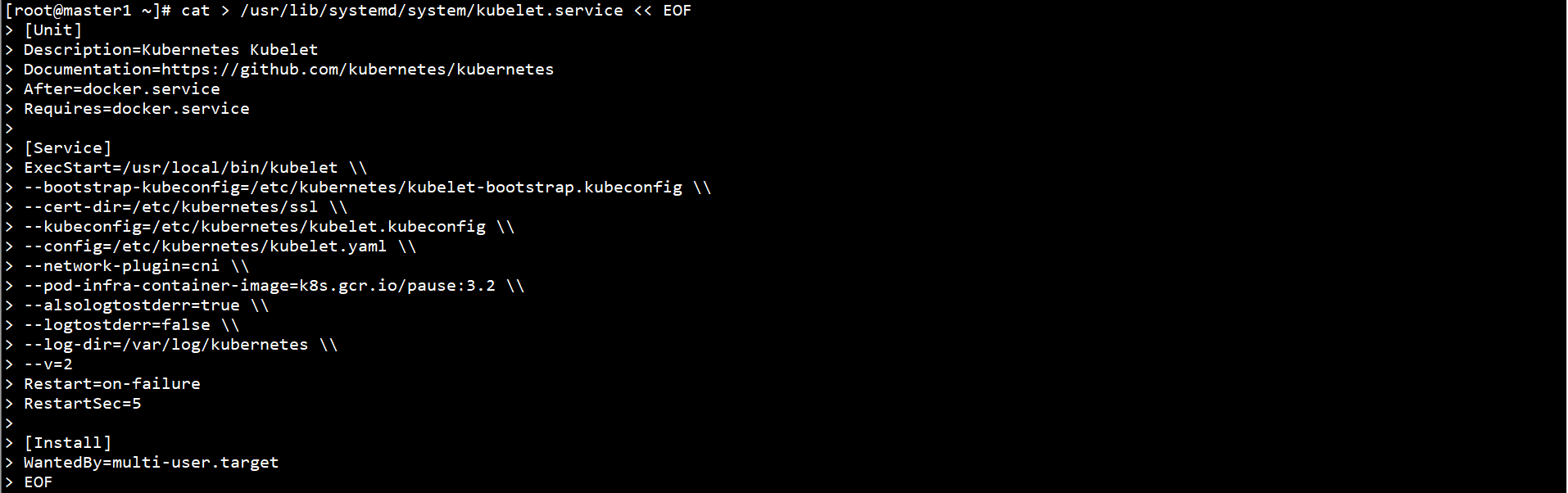
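Only the top-level address: key differs between nodes, so you can fill it in from the hosts entries added during name resolution. Below is a sketch with hypothetical helpers. It assumes each node's hostname matches its /etc/hosts entry (master1 to worker3) and that GNU sed is available.

```shell
#!/usr/bin/env bash
# Print the IP mapped to NAME in an /etc/hosts-style file (empty if not found).
node_ip_from_hosts() {
  local name="$1" hosts="${2:-/etc/hosts}"
  awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }' "$hosts"
}

# Replace the top-level "address:" key (no leading indentation) in kubelet.yaml.
# Usage: set_kubelet_address IP [YAML_FILE]
set_kubelet_address() {
  local ip="$1" yaml="${2:-/etc/kubernetes/kubelet.yaml}"
  if [ -z "$ip" ]; then
    echo "set_kubelet_address: no IP address given" >&2
    return 1
  fi
  sed -i "s/^address: .*/address: ${ip}/" "$yaml"
}
```

On each node, run set_kubelet_address "$(node_ip_from_hosts "$(hostname)")". The empty-argument guard stops the edit if the hostname is not found in /etc/hosts.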

Create a systemd unit for kubelet on all nodes:

cat > /usr/lib/systemd/system/kubelet.service << EOF
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/kubernetes/kubernetes
After=docker.service
Requires=docker.service

[Service]
ExecStart=/usr/local/bin/kubelet \\
  --bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \\
  --cert-dir=/etc/kubernetes/ssl \\
  --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \\
  --config=/etc/kubernetes/kubelet.yaml \\
  --network-plugin=cni \\
  --pod-infra-container-image=k8s.gcr.io/pause:3.2 \\
  --alsologtostderr=true \\
  --logtostderr=false \\
  --log-dir=/var/log/kubernetes \\
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

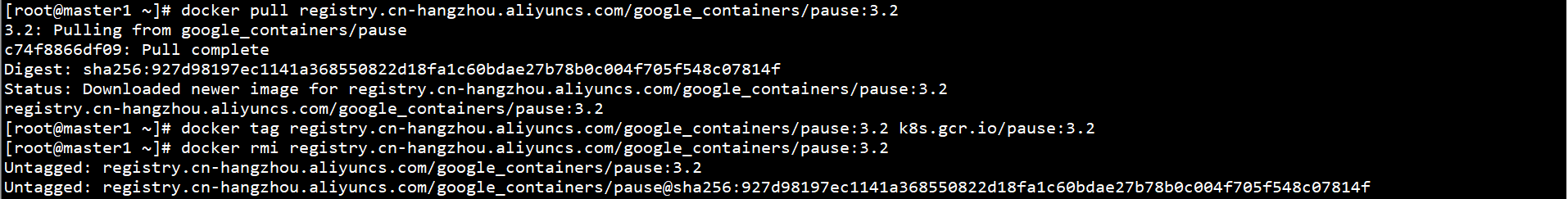

k8s.gcr.io/pause:3.2 may not be downloadable directly. On all nodes, pull it through the Alibaba Cloud image registry and retag it:

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2 k8s.gcr.io/pause:3.2
docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2
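The pull/tag/rmi sequence generalizes to any image directly under k8s.gcr.io. Below is a minimal sketch that assumes the mirror stores the image under the same name and tag, as it does for pause:3.2 above. mirror_name and pull_via_mirror are hypothetical helper names.

```shell
# Mirror path used above; assumes it keeps the upstream image name and tag.
MIRROR="registry.cn-hangzhou.aliyuncs.com/google_containers"

# Map "k8s.gcr.io/<name>:<tag>" to "<mirror>/<name>:<tag>".
mirror_name() {
  echo "$MIRROR/${1#k8s.gcr.io/}"
}

# Pull through the mirror, retag to the original name, then drop the mirror tag.
pull_via_mirror() {
  local image="$1" mirrored
  mirrored="$(mirror_name "$image")"
  docker pull "$mirrored" &&
    docker tag "$mirrored" "$image" &&
    docker rmi "$mirrored"
}
```

Usage on every node: `pull_via_mirror k8s.gcr.io/pause:3.2`. Images with an extra path component under k8s.gcr.io would need a different mapping.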

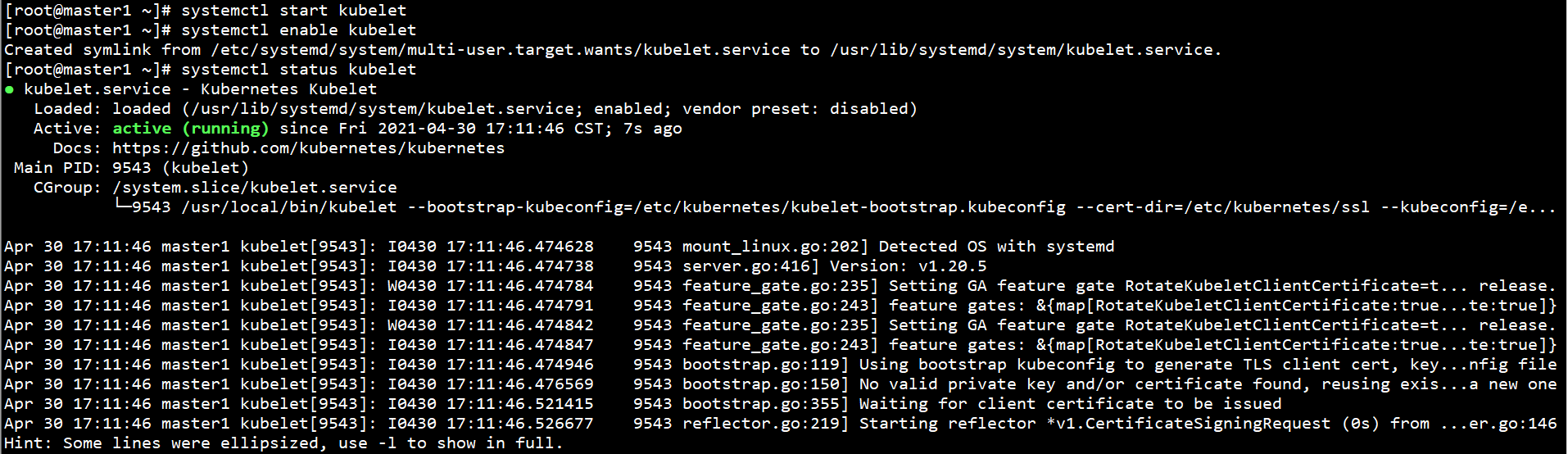

Start kubelet on all nodes and enable it at boot:

systemctl start kubelet
systemctl enable kubelet
systemctl status kubelet

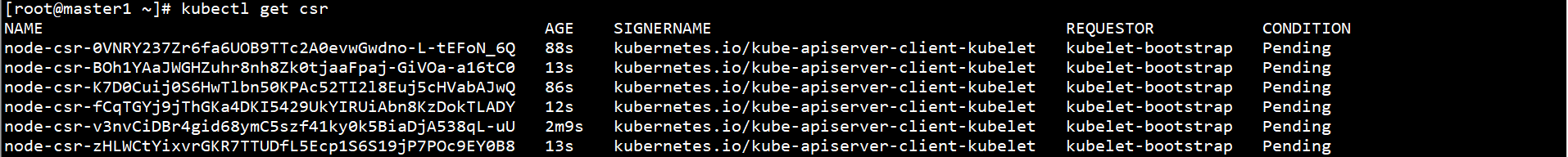

View the kubelet certificate signing requests (CSRs) on any Master node:

kubectl get csr

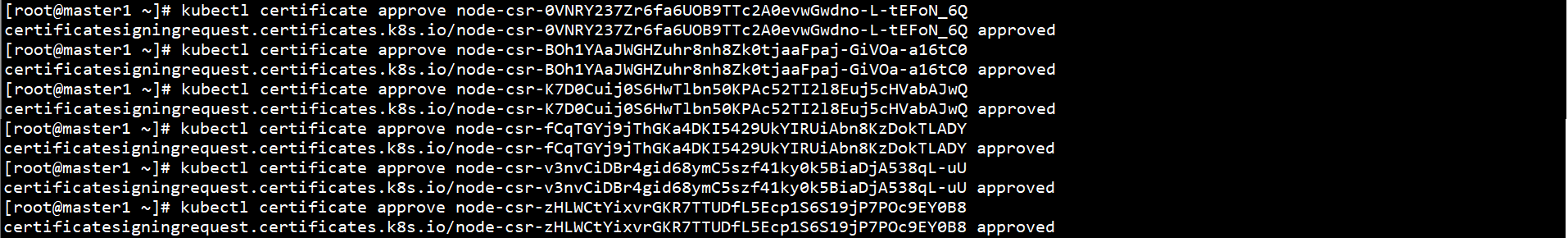

Approve the kubelet CSRs on any Master node, replacing node-csr-xxx with each pending CSR name shown by kubectl get csr:

kubectl certificate approve node-csr-xxx
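With six nodes there are several requests to approve. Below is a minimal sketch that selects the CSRs whose CONDITION column, which is the last column of kubectl get csr, is Pending. Use it only when every pending request comes from your own nodes. pending_csrs and approve_pending_csrs are hypothetical helper names.

```shell
# Print the names of CSRs whose last column (CONDITION) is "Pending".
# Reads "kubectl get csr" output on stdin; the header line is skipped.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Approve all pending CSRs at once; xargs -r (GNU) skips the call if none.
approve_pending_csrs() {
  kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
}
```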

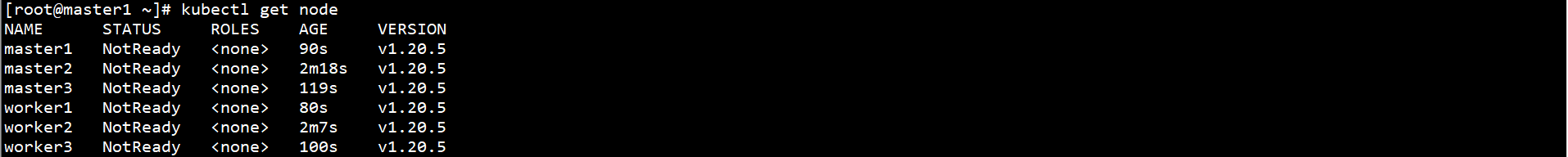

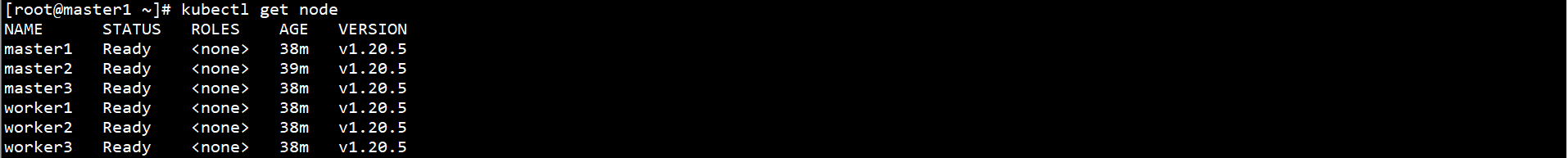

View the node status on any Master node:

kubectl get node

Deploy the kube-proxy component on all nodes:

On all Master nodes, copy the kube-proxy binary to the system directory:

cp /root/kubernetes/server/bin/kube-proxy /usr/local/bin

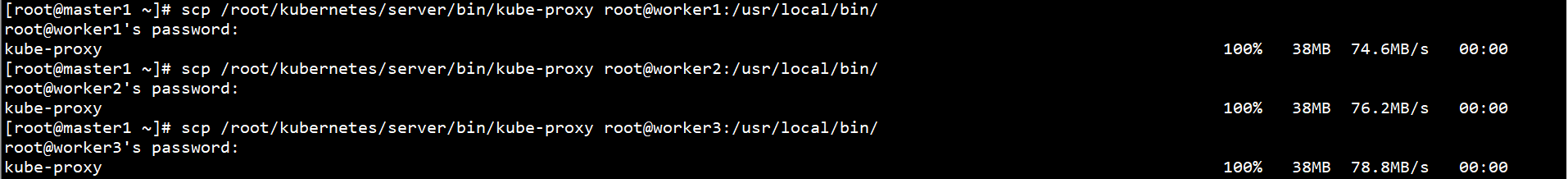

From the Master1 node, copy the kube-proxy binary to the Worker nodes:

scp /root/kubernetes/server/bin/kube-proxy root@worker1:/usr/local/bin/
scp /root/kubernetes/server/bin/kube-proxy root@worker2:/usr/local/bin/
scp /root/kubernetes/server/bin/kube-proxy root@worker3:/usr/local/bin/

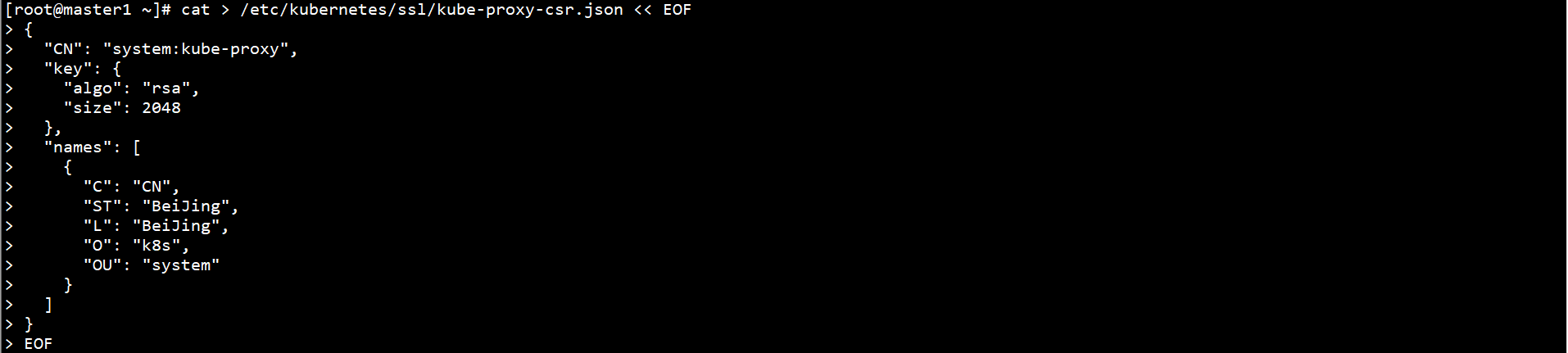

Create the kube-proxy CSR file on the Master1 node:

cat > /etc/kubernetes/ssl/kube-proxy-csr.json << EOF
{
  "CN": "system:kube-proxy",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "system"
    }
  ]
}
EOF

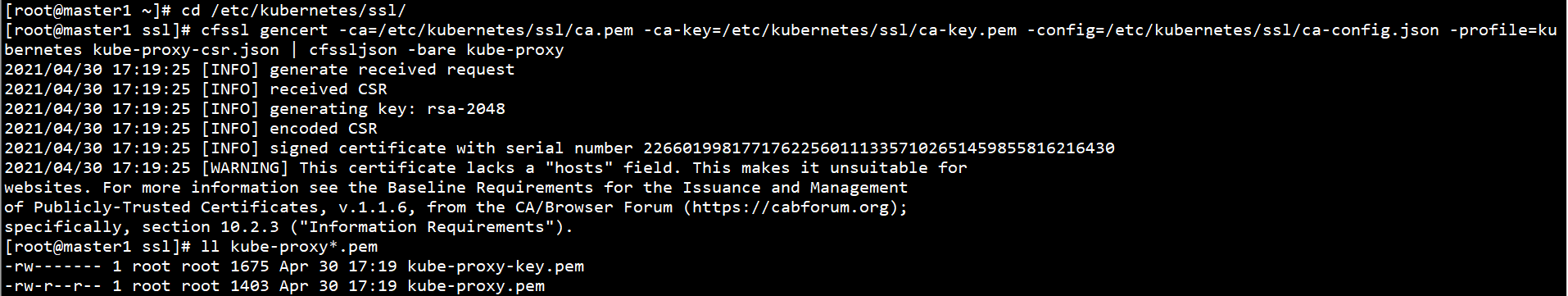

Generate the kube-proxy certificate on the Master1 node:

cd /etc/kubernetes/ssl/
cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=/etc/kubernetes/ssl/ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
ll kube-proxy*.pem
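kube-proxy authenticates as the user named in the certificate's CN. Kubernetes' default system:node-proxier ClusterRoleBinding grants permissions to the user system:kube-proxy, so the CN must be exactly that string. Below is a minimal sketch of a check with openssl. cert_has_cn and cert_dates are hypothetical helper names.

```shell
# Succeed if the certificate's subject contains CN=<expected> exactly.
# Handles both old ("/CN=x") and newer ("CN = x") openssl subject formats.
cert_has_cn() {
  local cert="$1" cn="$2"
  openssl x509 -in "$cert" -noout -subject | grep -Eq "CN *= *${cn}(,|/|$)"
}

# Print the validity window (notBefore / notAfter) of a certificate.
cert_dates() {
  openssl x509 -in "$1" -noout -startdate -enddate
}
```

Usage on Master1: `cert_has_cn /etc/kubernetes/ssl/kube-proxy.pem system:kube-proxy && cert_dates /etc/kubernetes/ssl/kube-proxy.pem`.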

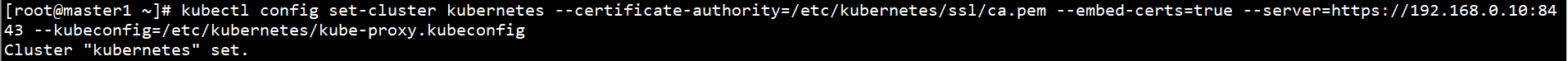

Generate the kube-proxy kubeconfig on the Master1 node:

Set cluster parameters:

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.0.10:8443 --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

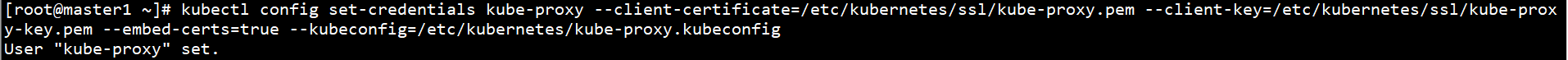

Set client authentication parameters:

kubectl config set-credentials kube-proxy --client-certificate=/etc/kubernetes/ssl/kube-proxy.pem --client-key=/etc/kubernetes/ssl/kube-proxy-key.pem --embed-certs=true --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

Set context parameters:

kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

Set default context:

kubectl config use-context default --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig
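The four kubectl config steps always follow the same shape for a certificate-authenticated component. Below is a minimal sketch that wraps them in one function. It assumes the HA endpoint https://192.168.0.10:8443 and the /etc/kubernetes/ssl layout used above. make_kubeconfig is a hypothetical helper name.

```shell
# HA API server endpoint and certificate directory used in this article.
APISERVER="https://192.168.0.10:8443"
SSL_DIR="/etc/kubernetes/ssl"

# Hypothetical helper: build a kubeconfig for <user> from <prefix>.pem and
# <prefix>-key.pem, stopping at the first failing step.
make_kubeconfig() {
  local user="$1" prefix="$2" out="$3"
  kubectl config set-cluster kubernetes \
    --certificate-authority="$SSL_DIR/ca.pem" --embed-certs=true \
    --server="$APISERVER" --kubeconfig="$out" &&
  kubectl config set-credentials "$user" \
    --client-certificate="$SSL_DIR/$prefix.pem" \
    --client-key="$SSL_DIR/$prefix-key.pem" \
    --embed-certs=true --kubeconfig="$out" &&
  kubectl config set-context default --cluster=kubernetes \
    --user="$user" --kubeconfig="$out" &&
  kubectl config use-context default --kubeconfig="$out"
}
```

Usage: `make_kubeconfig kube-proxy kube-proxy /etc/kubernetes/kube-proxy.kubeconfig` produces the same file as the four commands above.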

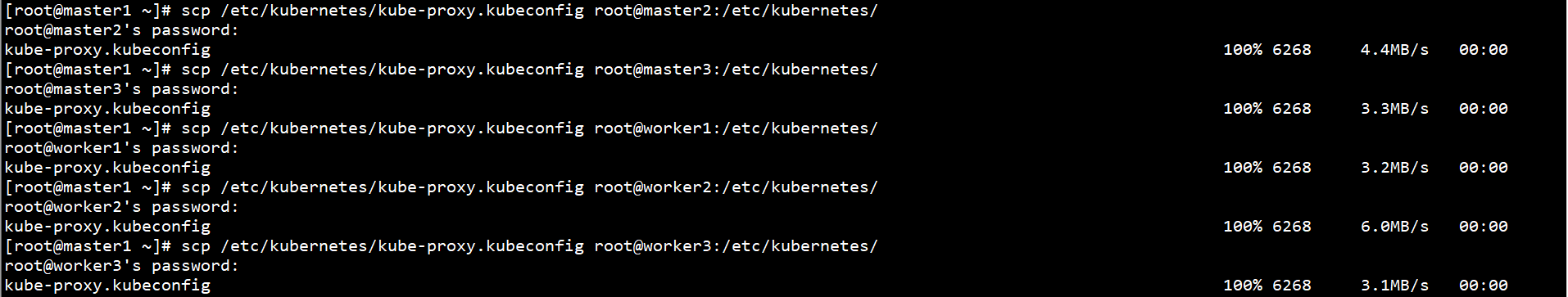

On the Master1 node, copy kube-proxy.kubeconfig to the other Master and Worker nodes:

scp /etc/kubernetes/kube-proxy.kubeconfig root@master2:/etc/kubernetes/
scp /etc/kubernetes/kube-proxy.kubeconfig root@master3:/etc/kubernetes/
scp /etc/kubernetes/kube-proxy.kubeconfig root@worker1:/etc/kubernetes/
scp /etc/kubernetes/kube-proxy.kubeconfig root@worker2:/etc/kubernetes/
scp /etc/kubernetes/kube-proxy.kubeconfig root@worker3:/etc/kubernetes/

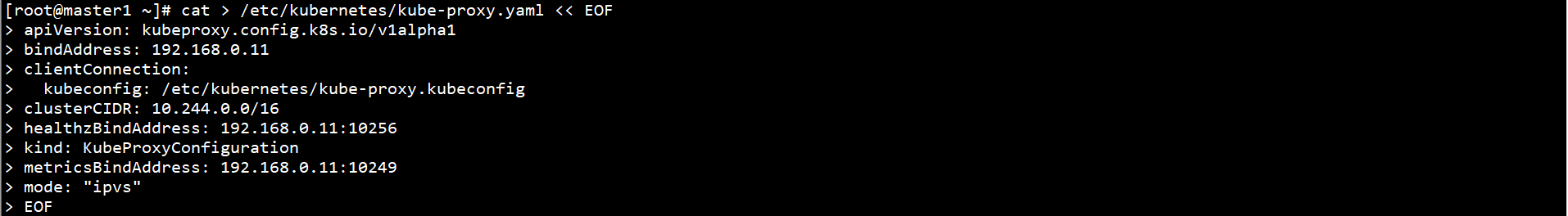

Create the kube-proxy configuration file on all nodes:

cat > /etc/kubernetes/kube-proxy.yaml << EOF
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 192.168.0.11
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 10.244.0.0/16
healthzBindAddress: 192.168.0.11:10256
kind: KubeProxyConfiguration
metricsBindAddress: 192.168.0.11:10249
mode: "ipvs"
EOF

Change bindAddress, healthzBindAddress and metricsBindAddress (192.168.0.11 above) to the actual IP address of each node.
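mode: "ipvs" relies on the IPVS kernel modules. If they are missing, kube-proxy of this version may fall back to iptables mode, so it is worth checking before starting it. Below is a minimal sketch that assumes the CentOS 7 kernel (3.10), where the conntrack module is named nf_conntrack_ipv4. On kernels 4.19 and later, the module is nf_conntrack instead. missing_ipvs_modules and load_ipvs_modules are hypothetical helper names.

```shell
# Modules IPVS mode relies on (CentOS 7 / 3.10 kernel naming).
IPVS_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4"

# Print each required module that is absent from "lsmod" output on stdin.
missing_ipvs_modules() {
  local loaded mod
  loaded="$(awk 'NR > 1 { print $1 }')"
  for mod in $IPVS_MODULES; do
    printf '%s\n' "$loaded" | grep -qx "$mod" || echo "$mod"
  done
}

# Load whatever is missing (run as root on each node; not persistent across reboots).
load_ipvs_modules() {
  local mod
  for mod in $(lsmod | missing_ipvs_modules); do
    modprobe "$mod"
  done
}
```

After kube-proxy is running, `curl http://192.168.0.11:10249/proxyMode` (with the node's own metricsBindAddress) should print ipvs.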

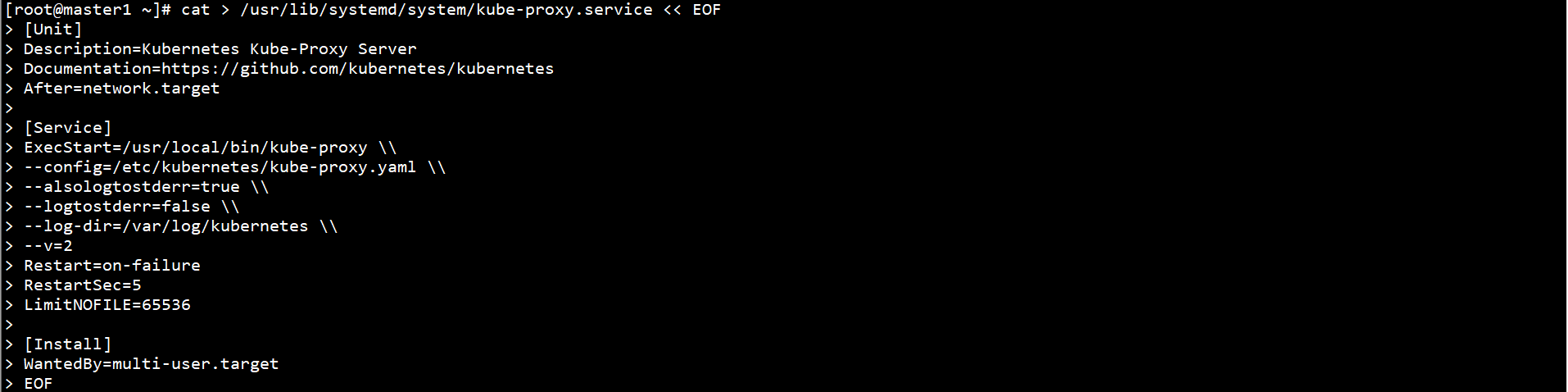

Configure systemd to manage kube-proxy on all nodes:

cat > /usr/lib/systemd/system/kube-proxy.service << EOF
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/bin/kube-proxy \\
  --config=/etc/kubernetes/kube-proxy.yaml \\
  --alsologtostderr=true \\
  --logtostderr=false \\
  --log-dir=/var/log/kubernetes \\
  --v=2
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

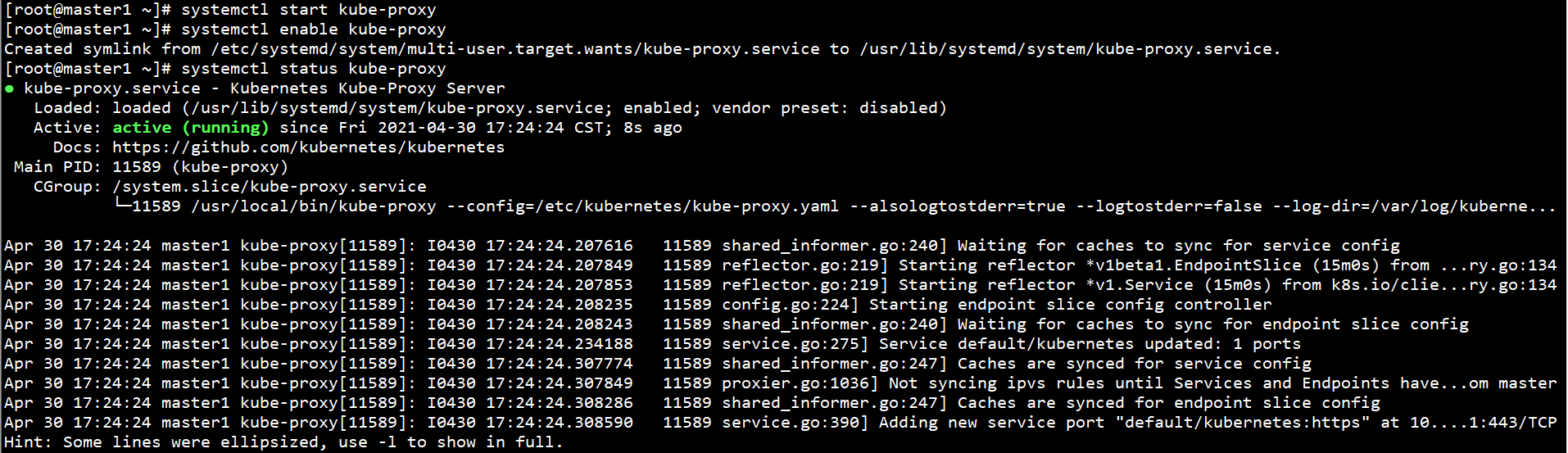

Start kube-proxy on all nodes and enable it at boot:

systemctl start kube-proxy
systemctl enable kube-proxy
systemctl status kube-proxy

6. Deploy CNI network

Deploy the CNI network (Calico) from a Master node:

Download the Calico deployment file from https://docs.projectcalico.org/manifests/calico.yaml

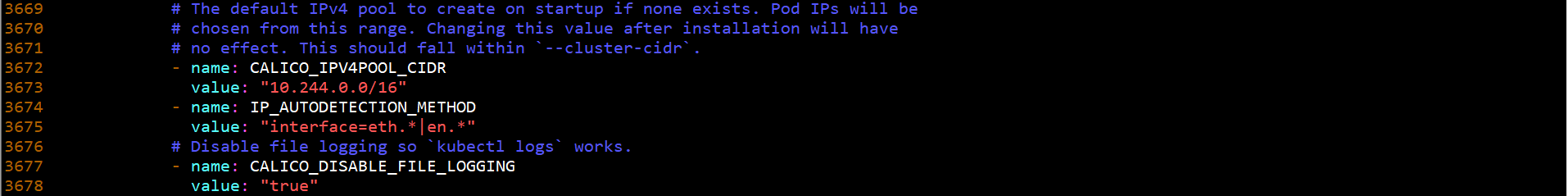

On any Master node, modify calico.yaml.

Add the following environment variables to the calico-node container:

- name: CALICO_IPV4POOL_CIDR
  value: "10.244.0.0/16"
- name: IP_AUTODETECTION_METHOD
  value: "interface=eth.*|en.*"

The CIDR in calico.yaml must match the Pod CIDR used when the cluster was initialized (10.244.0.0/16, the same value as clusterCIDR in kube-proxy.yaml).
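A mismatch here is easy to miss. Below is a minimal sketch of a check that compares the first uncommented CALICO_IPV4POOL_CIDR value in calico.yaml with clusterCIDR in kube-proxy.yaml. It assumes both files are laid out as shown above. The function names are hypothetical.

```shell
# Print the value following the first uncommented CALICO_IPV4POOL_CIDR entry.
calico_cidr() {
  awk '/^[ \t]*- name: CALICO_IPV4POOL_CIDR/ { found = 1; next }
       found && $1 == "value:" { gsub(/"/, "", $2); print $2; exit }' "$1"
}

# Print clusterCIDR from a KubeProxyConfiguration file.
proxy_cidr() {
  awk '$1 == "clusterCIDR:" { print $2; exit }' "$1"
}

# Succeed only if both files name the same, non-empty Pod CIDR.
check_cidrs() {
  local calico proxy
  calico="$(calico_cidr "$1")"
  proxy="$(proxy_cidr "$2")"
  if [ -n "$calico" ] && [ "$calico" = "$proxy" ]; then
    echo "OK: $calico"
  else
    echo "MISMATCH: calico.yaml=$calico kube-proxy.yaml=$proxy" >&2
    return 1
  fi
}
```

Usage: `check_cidrs calico.yaml /etc/kubernetes/kube-proxy.yaml`.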

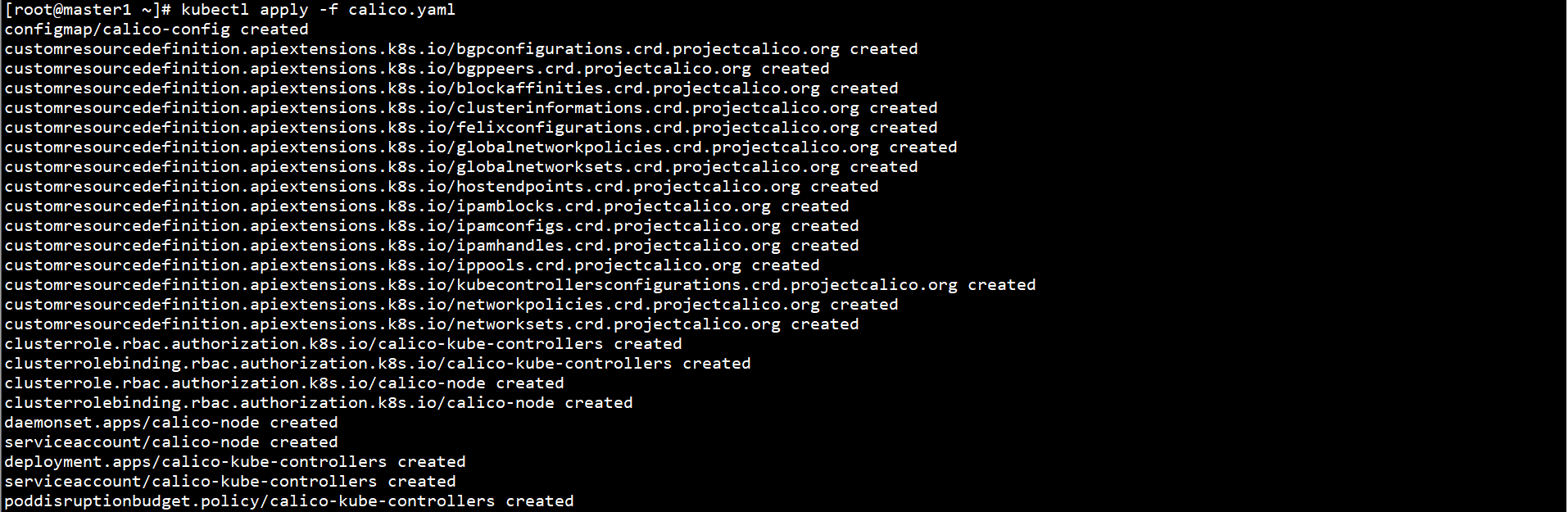

Deploy CNI network on any Master node:

kubectl apply -f calico.yaml

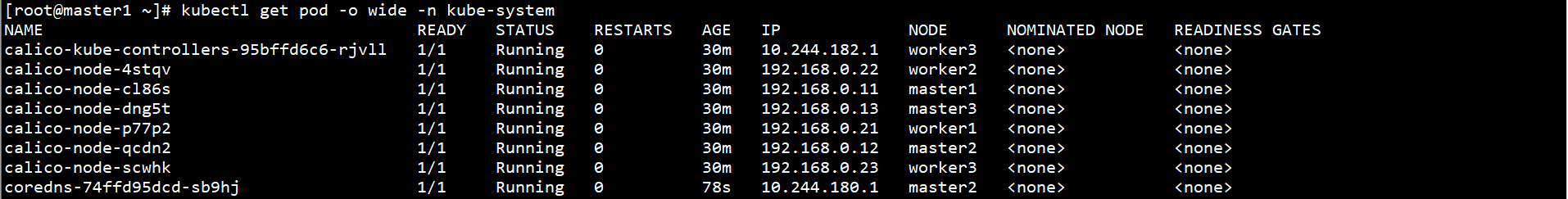

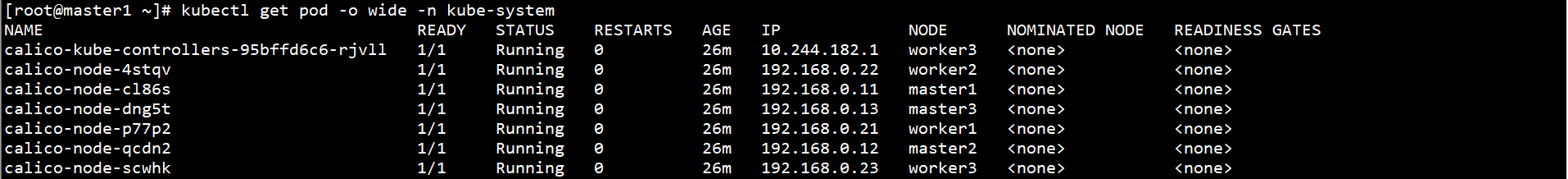

View Pod status on any Master node:

kubectl get pod -o wide -n kube-system

View the node status on any Master node:

kubectl get node
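kubelet was started with --network-plugin=cni, so nodes typically report NotReady until the Calico pods are running. Below is a minimal sketch that counts Ready nodes and polls until all six nodes from the planning table are Ready. count_ready_nodes and wait_for_nodes are hypothetical helper names.

```shell
# Count nodes whose STATUS column is "Ready" (including "Ready,SchedulingDisabled")
# in "kubectl get node" output on stdin; "NotReady" is not counted.
count_ready_nodes() {
  awk 'NR > 1 && ($2 == "Ready" || $2 ~ /^Ready,/) { n++ } END { print n + 0 }'
}

# Poll every 5 seconds until <expected> nodes are Ready or <timeout> seconds pass.
wait_for_nodes() {
  local expected="${1:-6}" timeout="${2:-300}" waited=0
  until [ "$(kubectl get node | count_ready_nodes)" -ge "$expected" ]; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out waiting for $expected Ready nodes" >&2
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
}
```

`kubectl wait --for=condition=Ready node --all --timeout=300s` is a built-in alternative when you do not need an explicit node count.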

7. Deploy CoreDNS

On any Master node, extract the kubernetes-src.tar.gz file:

tar -xf /root/kubernetes/kubernetes-src.tar.gz -C /root/kubernetes/

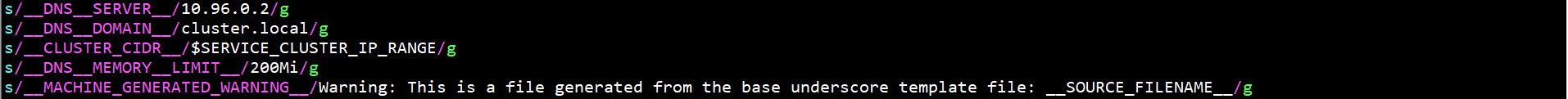

On any Master node, edit /root/kubernetes/cluster/addons/dns/coredns/transforms2sed.sed and replace the $DNS_SERVER_IP, $DNS_DOMAIN and $DNS_MEMORY_LIMIT parameters as follows:

s/__DNS__SERVER__/10.96.0.2/g
s/__DNS__DOMAIN__/cluster.local/g
s/__CLUSTER_CIDR__/$SERVICE_CLUSTER_IP_RANGE/g
s/__DNS__MEMORY__LIMIT__/200Mi/g
s/__MACHINE_GENERATED_WARNING__/Warning: This is a file generated from the base underscore template file: __SOURCE_FILENAME__/g
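The same edit can be made non-interactively. Below is a minimal sketch that assumes the unedited file contains the literal strings $DNS_SERVER_IP, $DNS_DOMAIN and $DNS_MEMORY_LIMIT, as the parameters named above suggest. The DNS IP must equal clusterDNS (10.96.0.2) in kubelet.yaml. fill_dns_transforms is a hypothetical helper name.

```shell
# Hypothetical helper: replace the three shell-style placeholders in
# transforms2sed.sed in place (GNU sed -i). Defaults match this article.
fill_dns_transforms() {
  local file="$1" dns_ip="${2:-10.96.0.2}" domain="${3:-cluster.local}" mem="${4:-200Mi}"
  sed -i \
    -e 's/\$DNS_SERVER_IP/'"$dns_ip"'/g' \
    -e 's/\$DNS_DOMAIN/'"$domain"'/g' \
    -e 's/\$DNS_MEMORY_LIMIT/'"$mem"'/g' \
    "$file"
}
```

Usage: `fill_dns_transforms /root/kubernetes/cluster/addons/dns/coredns/transforms2sed.sed`. $SERVICE_CLUSTER_IP_RANGE is left untouched, as in the listing above.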

On any Master node, use the template file to generate the CoreDNS configuration file coredns.yaml:

cd /root/kubernetes/cluster/addons/dns/coredns/

sed -f transforms2sed.sed coredns.yaml.base > coredns.yaml
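A missed substitution leaves placeholders in coredns.yaml that only fail at apply time. Below is a minimal sketch that reports uncommented lines still containing a __NAME__ token or a literal $DNS_ parameter. Comment lines are ignored because the generated warning header legitimately keeps __SOURCE_FILENAME__, per the last sed rule above. check_no_placeholders is a hypothetical helper name.

```shell
# Print (with line numbers) any uncommented line that still holds a
# __PLACEHOLDER__ token or an unfilled $DNS_ parameter; fail if any exist.
check_no_placeholders() {
  if grep -nE '^[^#]*(__[A-Z_]+__|\$DNS_)' "$1"; then
    echo "unreplaced placeholders found in $1" >&2
    return 1
  fi
}
```

Usage: `check_no_placeholders coredns.yaml`.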

On any Master node, modify the CoreDNS configuration file coredns.yaml:

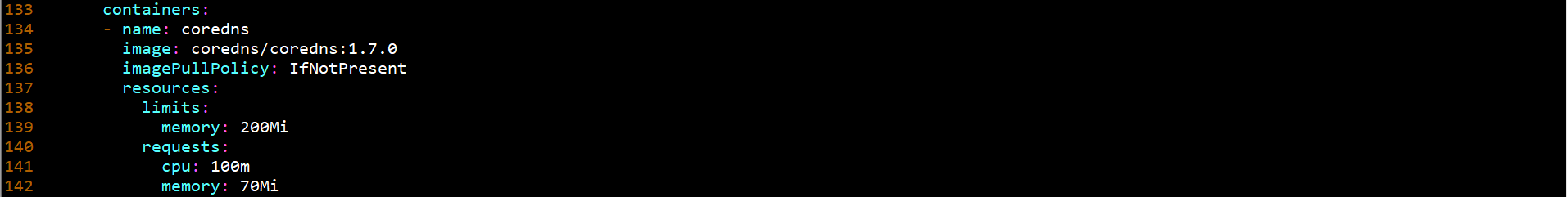

Change the image parameter to:

image: coredns/coredns:1.7.0
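This image change can also be scripted. Below is a minimal sketch that assumes the generated image line has the form image: <registry>/coredns:<tag>. It keeps the tag and points the line at the Docker Hub image coredns/coredns used above. use_dockerhub_coredns is a hypothetical helper name.

```shell
# Hypothetical helper: rewrite "image: <anything>/coredns:<tag>" to
# "image: coredns/coredns:<tag>" in place (GNU sed, extended regex).
# Running it twice leaves the line unchanged.
use_dockerhub_coredns() {
  sed -i -E 's#^([[:space:]]*image:[[:space:]]*)[^[:space:]]*/coredns:([^[:space:]]+)#\1coredns/coredns:\2#' "$1"
}
```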

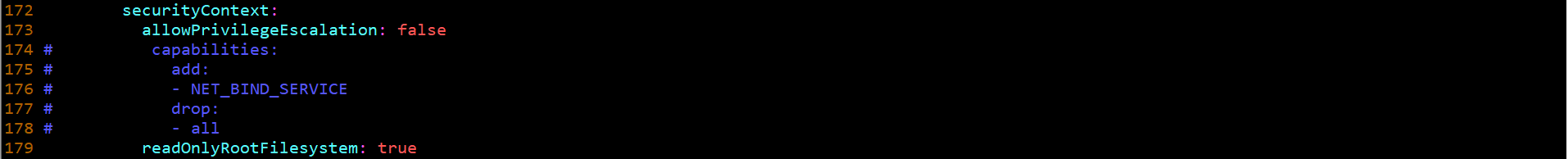

Delete the capabilities section:

capabilities:
  add:
  - NET_BIND_SERVICE
  drop:
  - all
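The deletion can be done with a sed range. Below is a minimal sketch that assumes the block starts at a capabilities: line and ends at the - all line under drop:, exactly as in the snippet above. If no such end line exists, sed would delete to the end of the file, so review the result before applying. drop_capabilities_block is a hypothetical helper name.

```shell
# Hypothetical helper: delete the lines from "capabilities:" through the
# following "- all" line, in place (GNU sed).
drop_capabilities_block() {
  sed -i '/^[[:space:]]*capabilities:[[:space:]]*$/,/^[[:space:]]*- all[[:space:]]*$/d' "$1"
}
```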

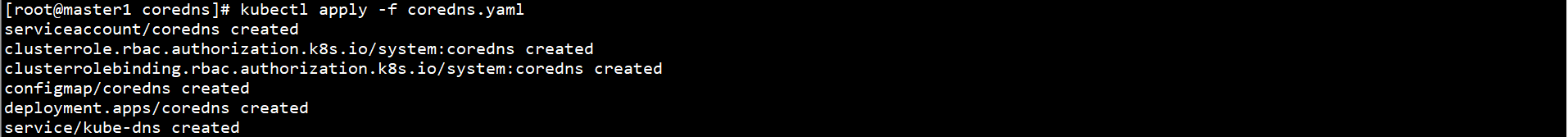

Deploy CoreDNS on any Master node:

kubectl apply -f coredns.yaml

View Pod status on any Master node:

kubectl get pod -o wide -n kube-system