MNIST Handwritten Digit Data Set

MNIST is a data set for handwritten digit recognition, built from handwriting samples collected by the U.S. National Institute of Standards and Technology (NIST). The handwritten content is the digits 0-9, and there are 70,000 image samples in total. The data can be downloaded for free from the official MNIST website as four .gz compressed files, all of which hold binary content:

train-images-idx3-ubyte.gz training set images

train-labels-idx1-ubyte.gz training set labels

t10k-images-idx3-ubyte.gz test set images

t10k-labels-idx1-ubyte.gz test set labels

The training data set contains 60,000 samples and the test data set contains 10,000 samples. Each picture in the MNIST data set consists of 28 x 28 pixels, and each pixel is represented by a single gray value.
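The binary files can also be read without any deep learning library. The following is a minimal sketch, assuming the standard IDX layout (a big-endian header followed by one unsigned byte per pixel or label); the function names `read_idx_images` and `read_idx_labels` are hypothetical helpers, not part of any library.

```python
import gzip
import struct


def read_idx_images(path):
    """Parse a gzip-compressed IDX3 image file into flat lists of pixel values."""
    with gzip.open(path, "rb") as f:
        # Header: magic number, image count, rows, columns (big-endian uint32)
        magic, count, rows, cols = struct.unpack(">IIII", f.read(16))
        if magic != 2051:
            raise ValueError("not an IDX3 image file (magic=%d)" % magic)
        size = rows * cols
        data = f.read(count * size)
    if len(data) != count * size:
        raise ValueError("file is shorter than its header claims")
    # One list of rows*cols gray values (0-255) per image
    images = [list(data[i * size:(i + 1) * size]) for i in range(count)]
    return images, rows, cols


def read_idx_labels(path):
    """Parse a gzip-compressed IDX1 label file into a list of digits."""
    with gzip.open(path, "rb") as f:
        # Header: magic number and label count (big-endian uint32)
        magic, count = struct.unpack(">II", f.read(8))
        if magic != 2049:
            raise ValueError("not an IDX1 label file (magic=%d)" % magic)
        return list(f.read(count))
```

For the real files, `read_idx_images('train-images-idx3-ubyte.gz')` would be expected to report 28 rows and 28 columns. Plain Python lists are slow for 60,000 images, so this is meant only to show the file structure.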

The following code inspects how input_data partitions the MNIST data set and displays one handwritten digit. Note that read_data_sets holds out 5,000 of the 60,000 training samples as a validation set, leaving 55,000 for training.

    import matplotlib.pyplot as plt
    from tensorflow.examples.tutorials.mnist import input_data

    # Download (if needed) and load MNIST with one-hot labels
    mnist = input_data.read_data_sets('./mnist', one_hot=True)

    print(mnist.train.images.shape)       # training images: (55000, 784)
    print(mnist.train.labels.shape)       # training labels: (55000, 10)
    print(mnist.test.images.shape)        # test images: (10000, 784)
    print(mnist.test.labels.shape)        # test labels: (10000, 10)
    print(mnist.validation.images.shape)  # validation images: (5000, 784)
    print(mnist.validation.labels.shape)  # validation labels: (5000, 10)
    print(mnist.train.labels[1])          # [0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]

    # Turn the flat 784-element array back into a 28 x 28 picture
    image = mnist.train.images[1].reshape(28, 28)
    fig = plt.figure("Picture display")
    plt.imshow(image, cmap='gray')
    plt.axis('off')  # hide the axes
    plt.show()

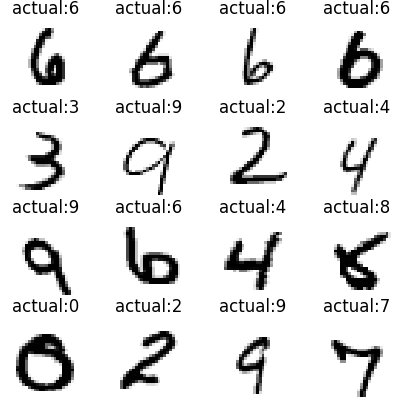

The next example draws a batch of digits and shows each digit's label as the subplot title.

    import math

    import matplotlib.pyplot as plt
    import numpy as np
    from tensorflow.examples.tutorials.mnist import input_data

    mnist = input_data.read_data_sets('./mnist', one_hot=True)


    def drawdigit(position, image, title):
        """Draw one digit into the subplot given by position."""
        plt.subplot(*position)  # unpack the (rows, columns, index) tuple
        plt.imshow(image, cmap='gray_r')
        plt.axis('off')
        plt.title(title)


    def batchDraw(batch_size):
        """Fetch one batch and draw its batch_size digits on a single canvas."""
        images, labels = mnist.train.next_batch(batch_size)
        row_num = math.ceil(batch_size ** 0.5)  # round up to a square grid
        column_num = row_num
        plt.figure(figsize=(row_num, column_num))
        for i in range(row_num):
            for j in range(column_num):
                index = i * column_num + j
                if index < batch_size:
                    position = (row_num, column_num, index + 1)
                    image = images[index].reshape(28, 28)
                    # The index of the largest value in the one-hot label is the digit
                    title = 'actual:%d' % (np.argmax(labels[index]))
                    drawdigit(position, image, title)


    if __name__ == '__main__':
        batchDraw(16)
        plt.show()

Code description:

mnist = input_data.read_data_sets("./mnist/", one_hot=True, reshape=False)

A color image is made up of three arrays (the R, G, and B channels), while a grayscale image needs only one. Each raw pixel value lies between 0 and 255. Each digit in the MNIST data set has 28*28=784 pixel values. In the code above, with reshape=True (the default) the MNIST image data has shape (?, 784); with reshape=False it has shape (?, 28, 28, 1).
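The two layouts hold exactly the same values, so one can be turned into the other with a NumPy reshape. A small sketch, using random numbers as a stand-in for real MNIST images:

```python
import numpy as np

# Stand-in for a batch of 3 flattened MNIST images (random values, not real data)
flat = np.random.rand(3, 784).astype(np.float32)

# (N, 784) -> (N, 28, 28, 1): rows, columns, and a single grayscale channel
nhwc = flat.reshape(-1, 28, 28, 1)

# Flattening again recovers the original layout exactly
restored = nhwc.reshape(-1, 784)

print(flat.shape, nhwc.shape, restored.shape)
```

Because the reshape is row-major, the pixel at row 1, column 0 of the picture is element 28 of the flat vector.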

Keras

DNN Network

    from keras.models import Model
    from keras.layers import Input, Dense, Dropout
    from keras import regularizers
    from keras.optimizers import Adam
    from tensorflow.examples.tutorials.mnist import input_data

    mnist = input_data.read_data_sets("mnist/", one_hot=True)
    x_train = mnist.train.images  # training data (55000, 784)
    y_train = mnist.train.labels  # training labels
    x_test = mnist.test.images
    y_test = mnist.test.labels

    # DNN structure: three hidden layers, each with L2 weight regularization and dropout
    inputs = Input(shape=(784,))
    h1 = Dense(64, activation='relu', kernel_regularizer=regularizers.l2(0.01))(inputs)
    h1 = Dropout(0.2)(h1)
    h2 = Dense(64, activation='relu', kernel_regularizer=regularizers.l2(0.01))(h1)
    h2 = Dropout(0.2)(h2)
    h3 = Dense(64, activation='relu', kernel_regularizer=regularizers.l2(0.01))(h2)
    h3 = Dropout(0.2)(h3)
    outputs = Dense(10, activation='softmax', kernel_regularizer=regularizers.l2(0.01))(h3)
    model = Model(inputs=inputs, outputs=outputs)

    # Compile the model
    opt = Adam(lr=0.01, beta_1=0.9, beta_2=0.999, epsilon=1e-08)  # epsilon: small constant for numerical stability
    model.compile(optimizer=opt, loss='categorical_crossentropy', metrics=['accuracy'])  # cross-entropy loss

    # Train and save the model
    model.fit(x=x_train, y=y_train, validation_split=0.1, batch_size=128, epochs=4)
    model.save('k_DNN.h5')

CNN Network

    from keras.models import Model
    from keras.layers import Input, Conv2D, MaxPooling2D, Reshape, Dense
    from keras import regularizers
    from keras.optimizers import Adam
    from tensorflow.examples.tutorials.mnist import input_data

    mnist = input_data.read_data_sets("./mnist/", one_hot=True, reshape=False)
    x_train = mnist.train.images  # training data (55000, 28, 28, 1)
    y_train = mnist.train.labels  # training labels
    x_test = mnist.test.images
    y_test = mnist.test.labels

    # Network structure
    inputs = Input(shape=(28, 28, 1))
    h1 = Conv2D(filters=64, kernel_size=(3, 3), strides=(1, 1), padding='same', activation='relu')(inputs)
    h1 = MaxPooling2D(pool_size=2, strides=2, padding='valid')(h1)
    h1 = Conv2D(filters=32, kernel_size=(3, 3), strides=(1, 1), padding='same', activation='relu')(h1)
    h1 = MaxPooling2D()(h1)
    h1 = Conv2D(filters=16, kernel_size=(3, 3), strides=(1, 1), padding='same', activation='relu')(h1)
    h1 = Reshape((16 * 7 * 7,))(h1)  # h1.shape (?, 16*7*7)
    outputs = Dense(10, activation="softmax", kernel_regularizer=regularizers.l2(0.01))(h1)
    model = Model(inputs=inputs, outputs=outputs)
    model.summary()

    # Compile the model
    opt = Adam(lr=0.01, beta_1=0.9, beta_2=0.999, epsilon=1e-08)
    model.compile(optimizer=opt, loss="categorical_crossentropy", metrics=["accuracy"])

    # Train and save the model
    model.fit(x=x_train, y=y_train, validation_split=0.1, epochs=5)
    model.save('k_CNN.h5')
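The Reshape((16 * 7 * 7,)) layer in the network above relies on the feature map being 7 x 7 after the last convolution. The sketch below checks that arithmetic with two hypothetical helpers, `conv_same` and `pool_valid`, which apply the usual output-size formulas for 'same' convolutions and 'valid' pooling along one spatial dimension.

```python
import math


def conv_same(size, stride=1):
    """Output size of a 'same'-padded convolution along one dimension."""
    return math.ceil(size / stride)


def pool_valid(size, pool=2, stride=2):
    """Output size of a 'valid' pooling layer along one dimension."""
    return (size - pool) // stride + 1


size = 28
size = conv_same(size)   # Conv2D, padding='same', stride 1
size = pool_valid(size)  # MaxPooling2D, 2 x 2 window, stride 2
size = conv_same(size)   # second Conv2D
size = pool_valid(size)  # second MaxPooling2D (default 2 x 2)
size = conv_same(size)   # third Conv2D, 16 filters

flat_units = 16 * size * size
print(size, flat_units)  # 7 784
```

Changing the padding or adding another pooling layer changes `size`, and the Reshape target would have to change with it.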

RNN Network

    from keras.models import Model
    from keras.layers import Input, LSTM, Dense
    from keras import regularizers
    from keras.optimizers import Adam
    from tensorflow.examples.tutorials.mnist import input_data

    mnist = input_data.read_data_sets("./mnist/", one_hot=True)
    x_train = mnist.train.images  # (55000, 784)
    x_train = x_train.reshape(-1, 28, 28)  # 28 time steps of 28 features each
    y_train = mnist.train.labels

    # RNN structure: two stacked LSTM layers
    inputs = Input(shape=(28, 28))
    h1 = LSTM(64, activation='relu', return_sequences=True, dropout=0.2)(inputs)
    h2 = LSTM(64, activation='relu', dropout=0.2)(h1)
    outputs = Dense(10, activation='softmax', kernel_regularizer=regularizers.l2(0.01))(h2)
    model = Model(inputs=inputs, outputs=outputs)

    # Compile the model
    opt = Adam(lr=0.003, beta_1=0.9, beta_2=0.999, epsilon=1e-08)
    model.compile(optimizer=opt, loss='categorical_crossentropy', metrics=['accuracy'])

    # Train and save the model
    model.fit(x=x_train, y=y_train, validation_split=0.1, batch_size=128, epochs=5)
    model.save('k_RNN.h5')

TensorFlow

DNN Network

    import os

    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    mnist = input_data.read_data_sets("./mnist", one_hot=True)
    # train image shape: (55000, 784)
    # train label shape: (55000, 10)
    # val image shape: (5000, 784)
    # test image shape: (10000, 784)

    epochs = 2
    output_size = 10
    input_size = 784
    hidden1_size = 512
    hidden2_size = 256
    batch_size = 1000
    unit_list = [input_size, hidden1_size, hidden2_size, output_size]
    batch_num = mnist.train.labels.shape[0] // batch_size


    def dense(x, w, b, keep_prob):
        """Fully connected layer: linear map, ReLU, then dropout."""
        linear = tf.matmul(x, w) + b
        activation = tf.nn.relu(linear)
        return tf.nn.dropout(activation, keep_prob)


    def DNNModel(image, w, b, keep_prob):
        dense1 = dense(image, w[0], b[0], keep_prob)
        dense2 = dense(dense1, w[1], b[1], keep_prob)
        return tf.matmul(dense2, w[2]) + b[2]  # logits; softmax is applied in the loss


    def gen_weights(unit_list):
        """Create one weight matrix and one bias vector per layer."""
        w = []
        b = []
        for i in range(len(unit_list) - 1):  # iterate over the layers
            sub_w = tf.Variable(tf.random_normal(shape=[unit_list[i], unit_list[i + 1]]))
            sub_b = tf.Variable(tf.random_normal(shape=[unit_list[i + 1]]))
            w.append(sub_w)
            b.append(sub_b)
        return w, b


    x = tf.placeholder(tf.float32, [None, 784])
    y_true = tf.placeholder(tf.float32, [None, 10])
    keepprob = tf.placeholder(tf.float32)
    global_step = tf.Variable(0)

    w, b = gen_weights(unit_list)
    y_pre = DNNModel(x, w, b, keepprob)

    loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(logits=y_pre, labels=y_true))
    tf.summary.scalar("loss", loss)  # record the loss as a scalar summary
    opt = tf.train.AdamOptimizer(0.001).minimize(loss, global_step=global_step)

    # Compare the index of the largest logit with the index of the true class
    predict = tf.equal(tf.argmax(y_pre, axis=1), tf.argmax(y_true, axis=1))
    acc = tf.reduce_mean(tf.cast(predict, tf.float32))
    tf.summary.scalar("acc", acc)  # record the accuracy as a scalar summary
    merged = tf.summary.merge_all()  # merge all summaries into one op

    saver = tf.train.Saver()  # saves and restores the model
    init = tf.global_variables_initializer()  # initializes all global variables

    with tf.Session() as sess:
        sess.run(init)
        writer = tf.summary.FileWriter("./logs/tensorboard", tf.get_default_graph())  # TensorBoard event file
        for i in range(batch_num * epochs):
            x_train, y_train = mnist.train.next_batch(batch_size)
            summary, _ = sess.run([merged, opt], feed_dict={x: x_train, y_true: y_train, keepprob: 0.75})
            writer.add_summary(summary, i)  # write the summaries of this iteration to the event file
            # Evaluate the model on the validation set
            if i % 50 == 0:
                feeddict = {x: mnist.validation.images, y_true: mnist.validation.labels, keepprob: 1.}
                valloss, accuracy = sess.run([loss, acc], feed_dict=feeddict)
                print(i, 'th batch val loss:', valloss, ', accuracy:', accuracy)
        os.makedirs('./checkpoints', exist_ok=True)  # make sure the target directory exists
        saver.save(sess, './checkpoints/tfdnn.ckpt')  # save the model
        print('Test Set Accuracy:', sess.run(acc, feed_dict={x: mnist.test.images, y_true: mnist.test.labels, keepprob: 1.}))
        writer.close()
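The loss used above, softmax_cross_entropy_with_logits_v2, takes raw logits and applies softmax internally. As an illustration of what it computes for a single sample, here is a plain-Python sketch; `log_softmax` and `softmax_cross_entropy` are hypothetical helpers written for this explanation, not TensorFlow functions.

```python
import math


def log_softmax(logits):
    """Numerically stable log of the softmax probabilities."""
    m = max(logits)  # subtract the maximum so exp() cannot overflow
    log_total = math.log(sum(math.exp(v - m) for v in logits))
    return [v - m - log_total for v in logits]


def softmax_cross_entropy(logits, one_hot):
    """Cross entropy between a one-hot label and softmax(logits)."""
    return -sum(t * lp for t, lp in zip(one_hot, log_softmax(logits)))


label = [0., 0., 1., 0., 0., 0., 0., 0., 0., 0.]  # one-hot label for digit 2

# An untrained network with equal logits is maximally unsure: loss = ln(10)
print(softmax_cross_entropy([0.0] * 10, label))

# A confident, correct prediction gives a loss close to zero
confident = [0.0] * 10
confident[2] = 20.0
print(softmax_cross_entropy(confident, label))
```

The training loss in the TensorFlow code is the mean of this quantity over the batch.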

CNN Network

    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    epochs = 10
    batch_size = 100
    mnist = input_data.read_data_sets("mnist/", one_hot=True, reshape=False)
    batch_nums = mnist.train.labels.shape[0] // batch_size


    def conv2d(x, w, b):
        """Convolution layer."""
        # x has shape (?, 28, 28, 1)
        # filter = [filter_height, filter_width, in_channels, out_channels]
        # data_format = [batch, height, width, channel]
        # The first and fourth stride values must be 1
        return tf.nn.conv2d(x, filter=w, strides=[1, 1, 1, 1], padding='SAME') + b


    def pool(x):
        return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')


    def cnn_net(x, keepprob):
        """Network structure: two convolution + pooling stages and one output layer."""
        # With reshape=False, x has shape (?, 28, 28, 1)
        w1 = tf.Variable(tf.random_normal([5, 5, 1, 64]))
        b1 = tf.Variable(tf.random_normal([64]))
        w2 = tf.Variable(tf.random_normal([5, 5, 64, 32]))
        b2 = tf.Variable(tf.random_normal([32]))
        w3 = tf.Variable(tf.random_normal([7 * 7 * 32, 10]))
        b3 = tf.Variable(tf.random_normal([10]))
        hidden1 = pool(conv2d(x, w1, b1))
        hidden1 = tf.nn.dropout(hidden1, keepprob)
        hidden2 = pool(conv2d(hidden1, w2, b2))
        hidden2 = tf.reshape(hidden2, [-1, 7 * 7 * 32])
        hidden2 = tf.nn.dropout(hidden2, keepprob)
        output = tf.matmul(hidden2, w3) + b3
        return output


    # Placeholders
    x = tf.placeholder(tf.float32, [None, 28, 28, 1])
    y_true = tf.placeholder(tf.float32, [None, 10])
    keepprob = tf.placeholder(tf.float32)

    # The learning rate decreases gradually during training;
    # exponential_decay returns the decayed learning rate.
    global_step = tf.Variable(0)
    learning_rate = tf.train.exponential_decay(0.01, global_step, 100, 0.96, staircase=True)

    # Loss function for training
    logits = cnn_net(x, keepprob)
    loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(logits=logits, labels=y_true))
    opt = tf.train.AdamOptimizer(learning_rate).minimize(loss, global_step=global_step)

    # Evaluation
    predict = tf.equal(tf.argmax(logits, 1), tf.argmax(y_true, 1))  # True where the prediction is correct
    accuracy = tf.reduce_mean(tf.cast(predict, tf.float32))  # fraction of correct predictions
    init = tf.global_variables_initializer()

    # Start training
    with tf.Session() as sess:
        sess.run(init)
        for k in range(epochs):
            for i in range(batch_nums):
                train_x, train_y = mnist.train.next_batch(batch_size)
                sess.run(opt, {x: train_x, y_true: train_y, keepprob: 0.75})
                # Evaluate the model on the validation set
                if i % 50 == 0:
                    acc = sess.run(accuracy, {x: mnist.validation.images[:1000], y_true: mnist.validation.labels[:1000], keepprob: 1.})
                    print(k, 'epochs, ', i, 'iters, ', ', acc :', acc)
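The call exponential_decay(0.01, global_step, 100, 0.96, staircase=True) multiplies the learning rate by 0.96 once every 100 steps. A plain-Python sketch of the same formula (the function below is a stand-alone re-implementation for illustration, not the TensorFlow op):

```python
def exponential_decay(base_rate, global_step, decay_steps, decay_rate, staircase=False):
    """Return base_rate * decay_rate ** (global_step / decay_steps)."""
    if staircase:
        # Integer division: the rate drops in discrete steps
        exponent = global_step // decay_steps
    else:
        exponent = global_step / decay_steps
    return base_rate * decay_rate ** exponent


# Learning rate seen by the optimizer at a few training steps
for step in (0, 99, 100, 250, 1000):
    print(step, exponential_decay(0.01, step, 100, 0.96, staircase=True))
```

With staircase=True the rate stays at 0.01 for steps 0 to 99, drops to 0.0096 at step 100, and so on; with staircase=False it decays smoothly at every step.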

RNN Network

    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    epochs = 10
    batch_size = 1000
    mnist = input_data.read_data_sets("mnist/", one_hot=True)
    batch_nums = mnist.train.labels.shape[0] // batch_size


    def RNN_Model(x, batch_size, keepprob):
        """Network structure: two stacked LSTM layers and one output layer."""
        def make_cell():
            cell = tf.nn.rnn_cell.LSTMCell(28)
            return tf.nn.rnn_cell.DropoutWrapper(cell, output_keep_prob=keepprob)

        # Stack two RNN cells; each layer needs its own cell object
        multi_rnn_cell = tf.nn.rnn_cell.MultiRNNCell([make_cell() for _ in range(2)])
        initial_state = multi_rnn_cell.zero_state(batch_size, tf.float32)
        # Build the recurrent network from the cell and unroll it dynamically over the inputs
        outputs, states = tf.nn.dynamic_rnn(cell=multi_rnn_cell,
                                            inputs=x,
                                            dtype=tf.float32,
                                            initial_state=initial_state)
        # outputs has shape [batch_size, max_time, 28]
        w = tf.Variable(tf.random_normal([28, 10]))
        b = tf.Variable(tf.random_normal([10]))
        output = tf.matmul(outputs[:, -1, :], w) + b  # use the output of the last time step
        return output, states


    # Placeholders
    x = tf.placeholder(tf.float32, [None, 28, 28])
    y_true = tf.placeholder(tf.float32, [None, 10])
    keepprob = tf.placeholder(tf.float32)
    global_step = tf.Variable(0)

    # The learning rate decreases gradually during training;
    # exponential_decay returns the decayed learning rate.
    learning_rate = tf.train.exponential_decay(0.01, global_step, 10, 0.96, staircase=True)

    # Loss function for training
    y_pred, states = RNN_Model(x, batch_size, keepprob)
    loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(logits=y_pred, labels=y_true))
    opt = tf.train.AdamOptimizer(learning_rate).minimize(loss, global_step=global_step)  # minimize the loss

    predict = tf.equal(tf.argmax(y_pred, 1), tf.argmax(y_true, 1))  # True where the prediction is correct
    acc = tf.reduce_mean(tf.cast(predict, tf.float32))  # accuracy
    init = tf.global_variables_initializer()

    # Start training
    with tf.Session() as sess:
        sess.run(init)
        for k in range(epochs):
            for i in range(batch_nums):
                train_x, train_y = mnist.train.next_batch(batch_size)
                sess.run(opt, {x: train_x.reshape((-1, 28, 28)), y_true: train_y, keepprob: 0.8})
                # Evaluate the model on one batch of the validation set
                if i % 50 == 0:
                    val_x, val_y = mnist.validation.next_batch(batch_size)
                    val_loss, accuracy = sess.run([loss, acc], {x: val_x.reshape((-1, 28, 28)), y_true: val_y, keepprob: 1.})
                    print('val_loss is :', val_loss, ', accuracy is :', accuracy)

Loading model

Training a deep network takes a long time, and retraining every time we need a prediction is impractical. Instead, we save the trained model (its structure and parameters) once and load it whenever we need to predict. The code below loads the k_CNN.h5 file saved by the Keras CNN example, evaluates it on the test set, and predicts a single digit.

    import numpy as np
    from keras.models import load_model
    from tensorflow.examples.tutorials.mnist import input_data

    mnist = input_data.read_data_sets("./mnist/", one_hot=True, reshape=False)  # (?, 28, 28, 1)
    x_test = mnist.test.images  # (10000, 28, 28, 1)
    y_test = mnist.test.labels  # (10000, 10)
    print(y_test[1])  # [0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]

    model = load_model('k_CNN.h5')  # load the saved model

    # Evaluate the model on the test set
    evl = model.evaluate(x=x_test, y=y_test)
    evl_name = model.metrics_names
    for i in range(len(evl)):
        print(evl_name[i], ':\t', evl[i])
    # loss : 0.19366768299341203
    # acc : 0.9691

    # Predict a single image
    test = x_test[1].reshape(1, 28, 28, 1)
    y_predict = model.predict(test)  # (1, 10)
    print(y_predict)
    # [[1.6e-06 6.0e-09 9.9e-01 5.8e-10 4.0e-07 2.5e-08 1.72e-06 1.2e-09 2.1e-07 8.5e-08]]
    y_true = 'actual:%d' % (np.argmax(y_test[1]))  # actual:2
    pre = 'predict:%d' % (np.argmax(y_predict))  # predict:2
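Both the one-hot label and the softmax output are decoded the same way: the position of the largest entry is the digit. The sketch below repeats that step in plain Python on the two rows printed above; `argmax` here is a small hypothetical helper standing in for np.argmax.

```python
def argmax(values):
    """Index of the largest element (first one on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


# One-hot label and softmax output copied from the printed results above
y_true_row = [0., 0., 1., 0., 0., 0., 0., 0., 0., 0.]
y_pred_row = [1.6e-06, 6.0e-09, 9.9e-01, 5.8e-10, 4.0e-07,
              2.5e-08, 1.72e-06, 1.2e-09, 2.1e-07, 8.5e-08]

actual = argmax(y_true_row)
predicted = argmax(y_pred_row)
print('actual:%d predict:%d correct:%s' % (actual, predicted, actual == predicted))
# actual:2 predict:2 correct:True
```

The largest probability, 0.99 at index 2, also shows how confident the model is in this prediction.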