1 Thread creation and termination

The C++11 standard introduces five header files to support multithreaded programming: <atomic>, <thread>, <mutex>, <condition_variable> and <future>.

- <atomic>: this header mainly declares two classes, std::atomic and std::atomic_flag, plus a set of C-style atomic types and C-compatible atomic operation functions.

- <thread>: this header mainly declares the std::thread class. The std::this_thread namespace is also declared here.

- <mutex>: this header mainly declares the mutex-related classes, including the std::mutex family, std::lock_guard, std::unique_lock, and other types and functions.

- <condition_variable>: this header mainly declares the classes related to condition variables, std::condition_variable and std::condition_variable_any.

- <future>: this header mainly declares two provider classes, std::promise and std::packaged_task, two future classes, std::future and std::shared_future, and some related types and functions. The std::async() function is also declared here.
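The <future> header is the only one in this list that the rest of the article does not demonstrate, so here is a minimal sketch of std::async and std::future. The function name parallel_sum is a hypothetical helper chosen for illustration, not something from the original text.

```cpp
#include <cassert>
#include <future>
#include <numeric>
#include <vector>

// Hypothetical helper: sums a vector on another thread via std::async.
int parallel_sum(const std::vector<int>& values)
{
    // std::launch::async asks for the task to run on a separate thread;
    // the returned future will eventually hold the result.
    std::future<int> result = std::async(std::launch::async, [&values] {
        return std::accumulate(values.begin(), values.end(), 0);
    });
    assert(result.valid());   // a future obtained from std::async refers to shared state

    // get() blocks until the task has finished, then returns its value.
    return result.get();
}
```

Calling parallel_sum from main() behaves like an ordinary function call from the caller's point of view; the thread creation and the join are hidden behind the future.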

#include <iostream>

#include <utility>

#include <thread>

#include <chrono>

#include <functional>

#include <atomic>

void f1(int n)

{

for (int i = 0; i < 5; ++i) {

std::cout << "Thread " << n << " executing\n";

std::this_thread::sleep_for(std::chrono::milliseconds(1000));

}

}

void f2(int& n)

{

std::cout << "thread-id:" << std::this_thread::get_id() << "\n";

for (int i = 0; i < 5; ++i) {

std::cout << "Thread 2 executing:" << n << "\n";

++n;

std::this_thread::sleep_for(std::chrono::milliseconds(1000));

}

}

int main()

{

int n = 0;

std::thread t1; // t1 is not a thread

std::thread t2(f1, n + 1); // pass by value

std::thread t3(f2, std::ref(n)); // pass by reference

std::this_thread::sleep_for(std::chrono::milliseconds(2000));

std::cout << "\nThread 4 create :\n";

std::thread t4(std::move(t3)); // t4 takes over from t3 and is now running f2(); t3 is no longer a thread

t2.join();

t4.join();

std::cout << "Final value of n is " << n << '\n';

}

Thread creation method:

- (1) Default constructor: creates an empty thread object that does not represent any thread of execution.

- (2) Initializing constructor: creates a joinable thread object. The new thread calls the function fn with the arguments given by args.

- (3) Copy constructor (deleted): a thread object cannot be copied.

- (4) Move constructor: after the call, x no longer represents any thread of execution.

- Note: a joinable thread object must be joined or detached before it is destroyed; otherwise std::terminate is called.

Constructing a std::thread object with a thread function and its arguments starts the thread automatically.
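The four constructors and the joinable state can be checked in a few lines. The following is a small sketch; double_value and thread_ownership_demo are hypothetical names used only for this illustration.

```cpp
#include <cassert>
#include <functional>
#include <thread>
#include <utility>

// Hypothetical worker: doubles the referenced value.
void double_value(int& value) { value *= 2; }

// Walks through the constructors listed above and returns the final value.
int thread_ownership_demo()
{
    int n = 21;

    std::thread empty;                              // (1) no thread of execution
    assert(!empty.joinable());

    std::thread worker(double_value, std::ref(n));  // (2) starts running immediately
    assert(worker.joinable());

    // std::thread copy(worker);                    // (3) would not compile: copying is deleted

    std::thread owner(std::move(worker));           // (4) ownership moves to 'owner'
    assert(!worker.joinable() && owner.joinable());

    owner.join();   // a joinable thread must be joined or detached before destruction
    return n;
}
```

Because join() synchronizes with the end of the thread, reading n afterwards is safe.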

End of thread:

join()

Calling join() blocks the current thread until the thread represented by the std::thread object has finished executing. For example, t.join() waits for thread t to end, after which the current thread continues to run.

detach()

After detach() is called, the target thread runs in the background as a daemon thread. The std::thread object loses its association with the target thread, so the thread can no longer be controlled or joined through that object, and its resources are reclaimed automatically when it exits. Note that when the main thread returns from main(), the whole process ends and any threads that are still running end with it.
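Since a detached thread cannot be joined, the result has to come back through some other channel. The sketch below uses a std::promise/std::future pair for that; this is one possible choice made for the illustration, and detached_result is a hypothetical name.

```cpp
#include <cassert>
#include <future>
#include <thread>
#include <utility>

// Returns the value produced by a detached thread.
int detached_result()
{
    std::promise<int> done;
    std::future<int> result = done.get_future();

    // The promise is moved into the new thread, which fulfils it.
    std::thread worker([](std::promise<int> p) { p.set_value(7); }, std::move(done));

    worker.detach();               // the thread now runs independently
    assert(!worker.joinable());    // the object no longer refers to any thread

    // join() is no longer possible, so wait through the future instead.
    return result.get();
}
```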

#include <iostream>

#include <thread>

#include <chrono>

using namespace std;

void threadHandle1(int time)

{

//Let the child thread sleep for time seconds

std::this_thread::sleep_for(std::chrono::seconds(time));

cout << "hello thread1!" << endl;

}

void threadHandle2(int time)

{

//Let the child thread sleep for time seconds; this_thread is a namespace

std::this_thread::sleep_for(std::chrono::seconds(time));

cout << "hello thread2!" << endl;

}

int main()

{

//Create thread objects, passing in a thread function (the thread entry function) and its argument.

//The new threads start running immediately; their relative order is decided by the OS scheduler

std::thread t1(threadHandle1, 2);

std::thread t2(threadHandle2, 3);

//The main thread waits here for the child threads to end before it continues

t1.join();

t2.join();

//Alternatively (instead of join), detach a child thread so that it runs independently of the main thread.

//A detached thread can no longer be joined. If the main thread ends first, the process ends

//and the output of the detached thread may never be printed

//t1.detach();

cout << "main thread done!" << endl;

//If a std::thread object is still joinable (neither joined nor detached) when it is destroyed,

//std::terminate is called and the process terminates abnormally

return 0;

}

2 Mutex

A mutex is a mutual exclusion lock. In C++11, the mutex classes (including the lock types) and the related functions are declared in the <mutex> header, so you must include <mutex> to use std::mutex.

<mutex> header file overview

Mutex series (four kinds)

- std::mutex, the most basic Mutex class.

- std::recursive_mutex, recursive mutex class.

- std::timed_mutex, timed mutex class.

- std::recursive_timed_mutex, timed recursive mutex class.

Lock classes (two types)

- std::lock_guard, an RAII wrapper that makes it convenient for a thread to lock a mutex.

- std::unique_lock, an RAII wrapper that makes it convenient for a thread to lock a mutex, with finer control over locking and unlocking.

Other types

- std::once_flag

- std::adopt_lock_t

- std::defer_lock_t

- std::try_to_lock_t

Functions

- std::try_lock, tries to lock multiple mutexes at once without blocking.

- std::lock, locks multiple mutexes at once while avoiding deadlock.

- std::call_once, if multiple threads call a function through call_once with the same once_flag, the function is executed only once.
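As a rough illustration of std::call_once, the sketch below lets several threads race to run the same initialization and counts how often it actually runs. The function name count_initialisations and the counter are assumptions made for this example.

```cpp
#include <cassert>
#include <mutex>
#include <thread>
#include <vector>

// Returns how many times the initialisation ran when 'thread_count' threads race to call it.
int count_initialisations(int thread_count)
{
    assert(thread_count >= 0);

    std::once_flag flag;
    int runs = 0;   // only written inside call_once, so no extra locking is needed

    std::vector<std::thread> threads;
    for (int i = 0; i < thread_count; ++i) {
        threads.emplace_back([&flag, &runs] {
            // Every thread calls call_once, but only one invocation executes the lambda.
            std::call_once(flag, [&runs] { ++runs; });
        });
    }
    for (std::thread& t : threads) {
        t.join();
    }
    return runs;
}
```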

std::mutex introduction

Next, take std::mutex as an example to introduce the usage of mutex in C++11.

std::mutex is the most basic mutex in C++11. A std::mutex object provides exclusive, non-recursive ownership: a thread must not lock a std::mutex that it already holds, whereas a std::recursive_mutex can be locked recursively by the same thread.

Member functions of std::mutex
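To make the difference concrete, here is a small sketch in which a recursive function locks the same std::recursive_mutex at every level of recursion. With a plain std::mutex the second lock on the same thread would be an error. The names tree_mutex and locked_factorial are hypothetical.

```cpp
#include <cassert>
#include <mutex>

std::recursive_mutex tree_mutex;   // hypothetical lock guarding a recursive computation

// Each level of recursion locks the same mutex again on the same thread.
int locked_factorial(int n)
{
    assert(n >= 0);
    std::lock_guard<std::recursive_mutex> guard(tree_mutex);
    // The nested call re-locks tree_mutex; each guard unlocks once on the way back out.
    return n <= 1 ? 1 : n * locked_factorial(n - 1);
}
```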

- Constructor: std::mutex can be neither copied nor moved. A newly constructed mutex object is in the unlocked state.

- lock(): the calling thread locks the mutex. One of three things happens: (1) if the mutex is not currently locked, the calling thread locks it and owns it until it calls unlock(); (2) if the mutex is currently locked by another thread, the calling thread blocks until the mutex becomes available; (3) if the mutex is already locked by the calling thread, the behavior is undefined, and in practice this usually results in a deadlock.

- unlock(): releases ownership of the mutex.

- try_lock(): attempts to lock the mutex without blocking. One of three things happens: (1) if the mutex is not held by another thread, the calling thread locks it and owns it until it calls unlock() (the standard also allows try_lock() to fail spuriously in this case); (2) if the mutex is locked by another thread, the call returns false immediately without blocking; (3) if the mutex is already locked by the calling thread, the behavior is undefined.

To make sure that every lock() is paired with an unlock(), a mutex is usually not used directly but through lock_guard or unique_lock.
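The non-blocking behavior of try_lock() can be sketched as follows: one thread holds the mutex while a second thread attempts try_lock(). The function name try_lock_while_held is an assumption for this illustration.

```cpp
#include <cassert>
#include <mutex>
#include <thread>

// Returns what try_lock() reports on another thread while this thread holds the mutex.
bool try_lock_while_held()
{
    std::mutex m;
    bool acquired = true;

    m.lock();                              // owned by the calling thread
    std::thread other([&m, &acquired] {
        acquired = m.try_lock();           // returns at once instead of blocking
        if (acquired) {
            m.unlock();
        }
    });
    other.join();
    assert(!acquired);                     // the caller held m for the whole attempt
    m.unlock();
    return acquired;
}
```

The opposite case is deliberately not asserted: when the mutex is free, try_lock() normally succeeds, but the standard permits a spurious failure.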

std::lock_guard

std::lock_guard is a simple RAII class template with minimal functionality.

std::lock_guard locks the mutex in its constructor and unlocks it in its destructor.

// CLASS TEMPLATE lock_guard

template<class _Mutex>

class lock_guard

{ // class with destructor that unlocks a mutex

public:

using mutex_type = _Mutex;

explicit lock_guard(_Mutex& _Mtx)

: _MyMutex(_Mtx)

{ // construct and lock

_MyMutex.lock();

}

lock_guard(_Mutex& _Mtx, adopt_lock_t)

: _MyMutex(_Mtx)

{ // construct but don't lock

}

~lock_guard() noexcept

{ // unlock

_MyMutex.unlock();

}

lock_guard(const lock_guard&) = delete;

lock_guard& operator=(const lock_guard&) = delete;

private:

_Mutex& _MyMutex;

};

As the lock_guard source code shows, it locks the mutex during construction and releases it in the destructor when the guard goes out of scope. Its copy constructor and copy assignment operator are deleted, so a lock_guard cannot be passed to or returned from a function by value. It is therefore only suitable for simple mutual exclusion around a critical section.
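One practical consequence of unlocking in the destructor is that the mutex is released even when the critical section exits through an exception. The following sketch assumes two hypothetical functions, update_or_throw and mutex_released_after_exception, to show this.

```cpp
#include <cassert>
#include <mutex>
#include <stdexcept>

std::mutex data_mutex;   // hypothetical mutex protecting some shared data

// Locks data_mutex and optionally leaves the critical section by throwing.
void update_or_throw(bool fail)
{
    std::lock_guard<std::mutex> guard(data_mutex);
    if (fail) {
        throw std::runtime_error("update failed");
    }
}

// Returns true if data_mutex can be locked again after the exception.
bool mutex_released_after_exception()
{
    try {
        update_or_throw(true);
    } catch (const std::runtime_error&) {
        // The guard's destructor already ran during stack unwinding.
    }
    // Locking succeeds only because the guard released the mutex.
    std::unique_lock<std::mutex> probe(data_mutex);
    assert(probe.owns_lock());
    return probe.owns_lock();
}
```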

std::unique_lock

The class unique_lock is a general-purpose mutex wrapper that allows deferred locking, time-limited attempts to lock, recursive locking, transfer of lock ownership, and use with condition variables.

unique_lock is more flexible and powerful than lock_guard.

That flexibility has a cost: unique_lock stores extra state (a pointer and an ownership flag), so it is slightly more expensive than lock_guard in time and space.

template<class _Mutex>

class unique_lock

{ // whizzy class with destructor that unlocks mutex

public:

typedef _Mutex mutex_type;

// CONSTRUCT, ASSIGN, AND DESTROY

unique_lock() noexcept

: _Pmtx(nullptr), _Owns(false)

{ // default construct

}

explicit unique_lock(_Mutex& _Mtx)

: _Pmtx(_STD addressof(_Mtx)), _Owns(false)

{ // construct and lock

_Pmtx->lock();

_Owns = true;

}

unique_lock(_Mutex& _Mtx, adopt_lock_t)

: _Pmtx(_STD addressof(_Mtx)), _Owns(true)

{ // construct and assume already locked

}

unique_lock(_Mutex& _Mtx, defer_lock_t) noexcept

: _Pmtx(_STD addressof(_Mtx)), _Owns(false)

{ // construct but don't lock

}

unique_lock(_Mutex& _Mtx, try_to_lock_t)

: _Pmtx(_STD addressof(_Mtx)), _Owns(_Pmtx->try_lock())

{ // construct and try to lock

}

template<class _Rep,

class _Period>

unique_lock(_Mutex& _Mtx,

const chrono::duration<_Rep, _Period>& _Rel_time)

: _Pmtx(_STD addressof(_Mtx)), _Owns(_Pmtx->try_lock_for(_Rel_time))

{ // construct and lock with timeout

}

template<class _Clock,

class _Duration>

unique_lock(_Mutex& _Mtx,

const chrono::time_point<_Clock, _Duration>& _Abs_time)

: _Pmtx(_STD addressof(_Mtx)), _Owns(_Pmtx->try_lock_until(_Abs_time))

{ // construct and lock with timeout

}

unique_lock(_Mutex& _Mtx, const xtime *_Abs_time)

: _Pmtx(_STD addressof(_Mtx)), _Owns(false)

{ // try to lock until _Abs_time

_Owns = _Pmtx->try_lock_until(_Abs_time);

}

unique_lock(unique_lock&& _Other) noexcept

: _Pmtx(_Other._Pmtx), _Owns(_Other._Owns)

{ // destructive copy

_Other._Pmtx = nullptr;

_Other._Owns = false;

}

unique_lock& operator=(unique_lock&& _Other)

{ // destructive copy

if (this != _STD addressof(_Other))

{ // different, move contents

if (_Owns)

_Pmtx->unlock();

_Pmtx = _Other._Pmtx;

_Owns = _Other._Owns;

_Other._Pmtx = nullptr;

_Other._Owns = false;

}

return (*this);

}

~unique_lock() noexcept

{ // clean up

if (_Owns)

_Pmtx->unlock();

}

unique_lock(const unique_lock&) = delete;

unique_lock& operator=(const unique_lock&) = delete;

void lock()

{ // lock the mutex

_Validate();

_Pmtx->lock();

_Owns = true;

}

_NODISCARD bool try_lock()

{ // try to lock the mutex

_Validate();

_Owns = _Pmtx->try_lock();

return (_Owns);

}

template<class _Rep,

class _Period>

_NODISCARD bool try_lock_for(const chrono::duration<_Rep, _Period>& _Rel_time)

{ // try to lock mutex for _Rel_time

_Validate();

_Owns = _Pmtx->try_lock_for(_Rel_time);

return (_Owns);

}

template<class _Clock,

class _Duration>

_NODISCARD bool try_lock_until(const chrono::time_point<_Clock, _Duration>& _Abs_time)

{ // try to lock mutex until _Abs_time

_Validate();

_Owns = _Pmtx->try_lock_until(_Abs_time);

return (_Owns);

}

_NODISCARD bool try_lock_until(const xtime *_Abs_time)

{ // try to lock the mutex until _Abs_time

_Validate();

_Owns = _Pmtx->try_lock_until(_Abs_time);

return (_Owns);

}

void unlock()

{ // try to unlock the mutex

if (!_Pmtx || !_Owns)

_THROW(system_error(

_STD make_error_code(errc::operation_not_permitted)));

_Pmtx->unlock();

_Owns = false;

}

void swap(unique_lock& _Other) noexcept

{ // swap with _Other

_STD swap(_Pmtx, _Other._Pmtx);

_STD swap(_Owns, _Other._Owns);

}

_Mutex *release() noexcept

{ // disconnect

_Mutex *_Res = _Pmtx;

_Pmtx = nullptr;

_Owns = false;

return (_Res);

}

_NODISCARD bool owns_lock() const noexcept

{ // return true if this object owns the lock

return (_Owns);

}

explicit operator bool() const noexcept

{ // return true if this object owns the lock

return (_Owns);

}

_NODISCARD _Mutex *mutex() const noexcept

{ // return pointer to managed mutex

return (_Pmtx);

}

private:

_Mutex *_Pmtx;

bool _Owns;

void _Validate() const

{ // check if the mutex can be locked

if (!_Pmtx)

_THROW(system_error(

_STD make_error_code(errc::operation_not_permitted)));

if (_Owns)

_THROW(system_error(

_STD make_error_code(errc::resource_deadlock_would_occur)));

}

};

Its members include _Mutex *_Pmtx, a pointer to the managed mutex. Copy construction and copy assignment from an lvalue are not allowed, but move construction and move assignment from an rvalue are, so a unique_lock can be passed to or returned from a function. It can also be used with condition variables, which take it as a function argument: cv.wait(lock).
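Deferred locking, ownership transfer and early unlocking can be sketched together in a few lines. The names queue_mutex, acquire_queue_lock and unique_lock_demo are hypothetical and exist only for this example.

```cpp
#include <cassert>
#include <mutex>

std::mutex queue_mutex;   // hypothetical mutex

// Returns a lock that already owns queue_mutex; ownership moves to the caller.
std::unique_lock<std::mutex> acquire_queue_lock()
{
    std::unique_lock<std::mutex> lock(queue_mutex, std::defer_lock);  // not locked yet
    assert(!lock.owns_lock());
    lock.lock();                                                      // lock on demand
    return lock;   // moved out; a lock_guard could not be returned like this
}

// Returns true if ownership survived the move and the early unlock worked.
bool unique_lock_demo()
{
    std::unique_lock<std::mutex> lock = acquire_queue_lock();
    bool owned_after_move = lock.owns_lock();
    lock.unlock();                       // early unlock, before the scope ends
    return owned_after_move && !lock.owns_lock();
}
```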

Example:

For a code segment that runs in a multithreaded environment, we must consider whether it has a race condition. If it does, the code segment is not thread safe and cannot be run directly by multiple threads. Such a code segment is called a critical section. A critical section must execute atomically in a multithreaded environment, and guaranteeing that requires a mutual exclusion lock between threads. The thread class library also provides lighter-weight atomic operation classes based on CAS operations.

Without lock:

#include <iostream>

#include <atomic> // atomic classes provided by the C++11 thread library

#include <thread> // header file of the C++ thread class library

#include <chrono> // std::chrono::milliseconds

#include <vector>

int count = 0;

//Thread function

void sumTask()

{

//Each thread adds 10 times to count

for (int i = 0; i < 10; ++i)

{

count++;

std::this_thread::sleep_for(std::chrono::milliseconds(10));

}

}

int main()

{

//Create 10 threads and put them in the container

std::vector<std::thread> vec;

for (int i = 0; i < 10; ++i)

{

vec.push_back(std::thread(sumTask));

}

//Wait for thread execution to complete

for (unsigned int i = 0; i < vec.size(); ++i)

{

vec[i].join();

}

//End of all child threads

std::cout << "count : " << count << std::endl;

return 0;

}

Multiple threads modify count at the same time, and nothing guarantees that only one thread performs the ++ operation on count at a time. This is a data race, so the final result is not necessarily 100.

Using lock_guard:

#include <iostream>

#include <atomic> // atomic classes provided by the C++11 thread library

#include <thread> // header file of the C++ thread class library

#include <chrono> // std::chrono::milliseconds

#include <mutex>

#include <vector>

int count = 0;

std::mutex mutex;

//Thread function

void sumTask()

{

//Each thread adds 10 times to count

for (int i = 0; i < 10; ++i)

{

{

std::lock_guard<std::mutex> lock(mutex);

count++;

}

std::this_thread::sleep_for(std::chrono::milliseconds(10));

}

}

int main()

{

//Create 10 threads and put them in the container

std::vector<std::thread> vec;

for (int i = 0; i < 10; ++i)

{

vec.push_back(std::thread(sumTask));

}

//Wait for thread execution to complete

for (unsigned int i = 0; i < vec.size(); ++i)

{

vec[i].join();

}

//After all child threads finish, count should be 100 every time

std::cout << "count : " << count << std::endl;

return 0;

}

Locking the count++ operation ensures that only one thread can modify count at a time, so the result is 100.

Atomic variable

The count++ operation above needs a mutex to be safe in a multithreaded environment, but a mutex is relatively heavy and costs the system more. The C++11 thread library provides atomic classes for simple types, such as std::atomic_int, std::atomic_long and std::atomic_bool. Their increments and decrements are performed with atomic hardware instructions such as CAS, which guarantees thread safety and is usually more efficient than locking.

#include <iostream>

#include <atomic> // atomic classes provided by the C++11 thread library

#include <thread> // header file of the C++ thread class library

#include <vector>

//Atomic integer: increments and decrements of count are atomic

std::atomic_int count(0);

//Thread function

void sumTask()

{

//Each thread adds 10 times to count

for (int i = 0; i < 10; ++i)

{

count++;

}

}

int main()

{

//Create 10 threads and put them in the container

std::vector<std::thread> vec;

for (int i = 0; i < 10; ++i)

{

vec.push_back(std::thread(sumTask));

}

//Wait for thread execution to complete

for (unsigned int i = 0; i < vec.size(); ++i)

{

vec[i].join();

}

//After all child threads finish, count should be 100 every time

std::cout << "count : " << count << std::endl;

return 0;

}
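The CAS (compare-and-swap) operation mentioned above can also be written out explicitly with compare_exchange_weak. The sketch below is only an illustration of the retry loop; for a plain counter, count++ or fetch_add is the simpler choice. The names cas_add and cas_counter_total are hypothetical.

```cpp
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

// Adds 'delta' to 'target' with an explicit compare-and-swap loop.
void cas_add(std::atomic<int>& target, int delta)
{
    int expected = target.load();
    // On failure, compare_exchange_weak stores the current value into 'expected',
    // so the next attempt computes the sum from fresh data.
    while (!target.compare_exchange_weak(expected, expected + delta)) {
        // retry with the refreshed 'expected'
    }
}

// Runs 'thread_count' threads that each add 1 'increments_per_thread' times.
int cas_counter_total(int thread_count, int increments_per_thread)
{
    assert(thread_count >= 0 && increments_per_thread >= 0);

    std::atomic<int> counter(0);
    std::vector<std::thread> threads;
    for (int i = 0; i < thread_count; ++i) {
        threads.emplace_back([&counter, increments_per_thread] {
            for (int j = 0; j < increments_per_thread; ++j) {
                cas_add(counter, 1);
            }
        });
    }
    for (std::thread& t : threads) {
        t.join();
    }
    return counter.load();
}
```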

Thread synchronization and communication

In a multithreaded program, each thread receives CPU time slices from the OS scheduler, so the threads run in no particular order relative to one another. In some scenarios, however, a thread must wait for the result of another thread before it can continue, which requires a mechanism for synchronization and communication between threads.

The most typical example is the producer-consumer model. After the producer thread produces a product, it notifies the consumer thread to consume it. If the consumer thread finds that no product is available, it notifies the producer thread and waits; once the producer has produced a product, the consumer can continue.

The condition variable std::condition_variable provided by the C++11 thread library plays the same role as the condition variable mechanism on the Linux platform, and it is used to solve the problem of synchronization and communication between threads. The following code demonstrates a producer-consumer thread model:

#include <iostream> //std::cout

#include <thread> //std::thread

#include <mutex> //std::mutex, std::unique_lock

#include <condition_variable> //std::condition_variable

#include <vector>

#include <chrono> //std::chrono::milliseconds

//Define mutexes (condition variables need to be used with mutexes)

std::mutex mtx;

//Define condition variables (used for synchronous communication between threads)

std::condition_variable cv;

//Define a vector container as a container shared by producers and consumers

std::vector<int> vec;

//Producer thread function

void producer()

{

//Each time the producer produces one product, it notifies the consumer to consume it

for (int i = 1; i <= 10; ++i)

{

//Get mtx mutex resource

std::unique_lock<std::mutex> lock(mtx);

//If the container is not empty, a product has not been consumed yet; wait for the consumer before producing again

while (!vec.empty())

{

//The container is not empty: wait on the condition variable, which releases the mtx lock

//so that the consumer thread can acquire the lock and consume the product

cv.wait(lock);

}

vec.push_back(i); // i is the serial number of the product produced

std::cout << "producer yield a product:" << i << std::endl;

/*

After producing the product, notify the consumer thread waiting on the condition variable cv

that it can start consuming. The lock mtx is released when lock goes out of scope at the end of this iteration

*/

cv.notify_all();

//Produce a product and sleep for 100ms

std::this_thread::sleep_for(std::chrono::milliseconds(100));

}

}

//Consumer thread function

void consumer()

{

//Each time the consumer consumes one product, it notifies the producer to produce another

for (int i = 1; i <= 10; ++i)

{

//Get mtx mutex resource

std::unique_lock<std::mutex> lock(mtx);

//If the container is empty, there is nothing to consume; wait for the producer to produce a product first

while (vec.empty())

{

//The container is empty: wait on the condition variable, which releases the mtx lock

//so that the producer thread can acquire the lock and produce a product

cv.wait(lock);

}

int data = vec.back(); // serial number of the product being consumed

vec.pop_back();

std::cout << "consumer consumes a product:" << data << std::endl;

/*

After consuming the product, notify the producer thread waiting on the condition variable cv

that it can start producing. The lock mtx is released when lock goes out of scope at the end of this iteration

*/

cv.notify_all();

//Consume a product and sleep for 100ms

std::this_thread::sleep_for(std::chrono::milliseconds(100));

}

}

int main()

{

//Create producer and consumer threads

std::thread t1(producer);

std::thread t2(consumer);

//The main thread waits for all child threads to finish executing

t1.join();

t2.join();

return 0;

}
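The explicit while loop around cv.wait(lock) can also be expressed with the predicate overload of wait, which re-checks the condition internally. The following sketch is a variation on the model above under different assumptions: it uses a std::queue, notifies after releasing the lock, and only the consumer ever waits. The name produce_and_consume is hypothetical.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <queue>
#include <thread>

// Sends 1..item_count through a shared queue and returns the sum seen by the consumer.
int produce_and_consume(int item_count)
{
    assert(item_count >= 0);

    std::mutex m;
    std::condition_variable ready;
    std::queue<int> items;
    int sum = 0;   // written only by the consumer, read after join()

    std::thread consumer([&] {
        for (int received = 0; received < item_count; ++received) {
            std::unique_lock<std::mutex> lock(m);
            // The predicate overload loops internally, which also handles spurious wakeups.
            ready.wait(lock, [&items] { return !items.empty(); });
            sum += items.front();
            items.pop();
        }
    });

    for (int i = 1; i <= item_count; ++i) {
        {
            std::lock_guard<std::mutex> lock(m);
            items.push(i);
        }   // unlock before notifying so the consumer can take the lock at once
        ready.notify_one();
    }

    consumer.join();
    return sum;
}
```

Because the predicate is evaluated while the mutex is held, a notification sent before the consumer starts waiting is not lost: the consumer sees the non-empty queue and does not block.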

Thread deadlock

Deadlock overview

Thread deadlock means that two or more threads each hold resources that the others need. Because a lock is exclusive, once a thread has acquired it, no other thread can acquire it until the holder releases it, so the threads end up waiting for each other forever.

Conditions for deadlock generation

- Mutual exclusion: a resource or lock can be held by only one thread at a time. Once a thread has acquired the lock, other threads cannot acquire it until that thread releases it.

- Hold and wait: a thread that already holds one lock requests another, and keeps the lock it holds even if the request cannot be satisfied.

- No preemption: no thread can forcibly take a lock that is held by another thread.

- Circular wait: thread A waits for a lock held by thread B, while thread B waits for a lock held by thread A.
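A deadlock needs all four conditions, so removing one of them is enough to prevent it. One common option, sketched below, is to avoid circular wait by acquiring both locks in a single step with std::lock and handing them to lock_guard with std::adopt_lock. The mutexes, the counter and the function names are hypothetical and exist only for this illustration.

```cpp
#include <cassert>
#include <mutex>
#include <thread>

std::mutex first_mutex;
std::mutex second_mutex;
int transfers = 0;   // protected by holding both mutexes

// Locks both mutexes in one step; the argument order does not matter to std::lock.
void transfer(std::mutex& a, std::mutex& b)
{
    std::lock(a, b);                                          // deadlock-avoiding acquisition
    std::lock_guard<std::mutex> guard_a(a, std::adopt_lock);  // adopt: unlock only, do not lock again
    std::lock_guard<std::mutex> guard_b(b, std::adopt_lock);
    ++transfers;
}

// Two threads request the same pair of mutexes in opposite order.
int run_opposite_order_transfers(int rounds)
{
    assert(rounds >= 0);
    transfers = 0;

    std::thread t1([rounds] {
        for (int i = 0; i < rounds; ++i) {
            transfer(first_mutex, second_mutex);
        }
    });
    std::thread t2([rounds] {
        for (int i = 0; i < rounds; ++i) {
            transfer(second_mutex, first_mutex);
        }
    });
    t1.join();
    t2.join();
    return transfers;
}
```

In C++17, std::scoped_lock combines the std::lock call and the two guards into one object. Agreeing on a fixed global lock order is another way to break the circular wait.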

Example:

When multiple threads of a program acquire multiple mutexes, a deadlock may occur. For example, thread A acquires lock 1 and thread B acquires lock 2. Thread A then needs lock 2 to continue, but lock 2 is held by thread B and has not been released, so thread A blocks waiting for lock 2. Meanwhile, thread B needs lock 1 to continue, but lock 1 is held by thread A, so thread B blocks as well.

Threads A and B each wait for the other to release a lock while refusing to release their own, so both wait forever and the process deadlocks. The following code example demonstrates this problem:

#include <iostream> //std::cout

#include <thread> //std::thread

#include <mutex> //std::mutex, std::lock_guard

#include <chrono> //std::chrono::seconds

//Lock resource 1

std::mutex mtx1;

//Lock resource 2

std::mutex mtx2;

//Function of thread A

void taskA()

{

//Ensure that thread A acquires lock 1 first

std::lock_guard<std::mutex> lockA(mtx1);

std::cout << "thread A Acquire lock 1" << std::endl;

//Thread A sleeps for 2s before acquiring lock 2, so that thread B acquires lock 2 first and the deadlock is reproduced

std::this_thread::sleep_for(std::chrono::seconds(2));

//Thread A then tries to acquire lock 2

std::lock_guard<std::mutex> lockB(mtx2);

std::cout << "thread A Acquire lock 2" << std::endl;

std::cout << "thread A Release all lock resources and end the operation!" << std::endl;

}

//Function of thread B

void taskB()

{

//Thread B sleeps for 1s to ensure that thread A obtains lock 1 first

std::this_thread::sleep_for(std::chrono::seconds(1));

std::lock_guard<std::mutex> lockB(mtx2);

std::cout << "thread B Acquire lock 2" << std::endl;

//Thread B attempted to acquire lock 1

std::lock_guard<std::mutex> lockA(mtx1);

std::cout << "thread B Acquire lock 1" << std::endl;

std::cout << "thread B Release all lock resources and end the operation!" << std::endl;

}

int main()

{

//Create threads A and B

std::thread t1(taskA);

std::thread t2(taskB);

//The main thread waits for all child threads to finish executing

t1.join();

t2.join();

return 0;

}

Output:

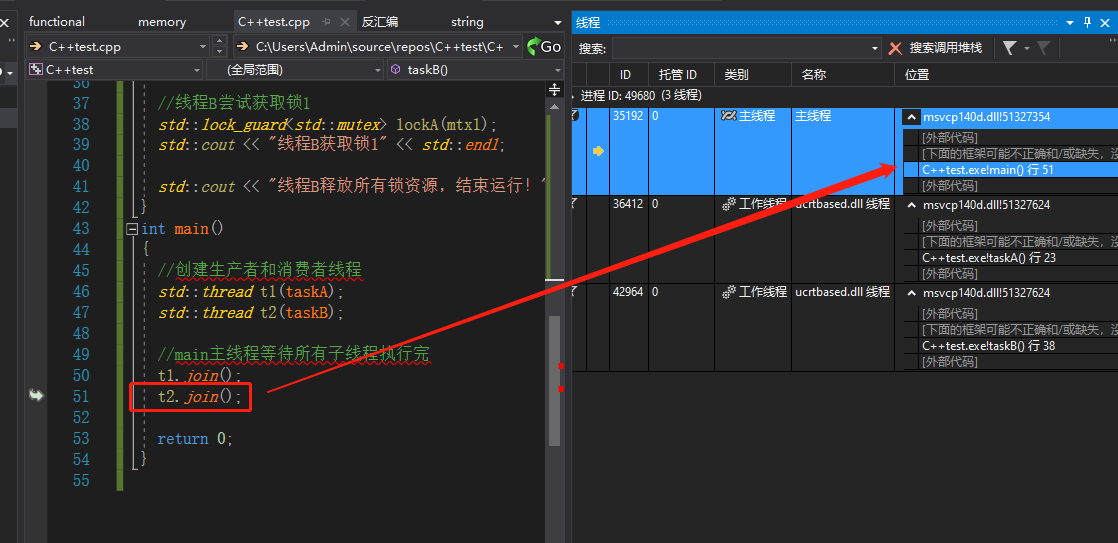

As shown, after thread A acquires lock 1 and thread B acquires lock 2, the process makes no further progress and waits forever. If we meet this situation in practice, how can we tell that it is caused by a deadlock between threads?

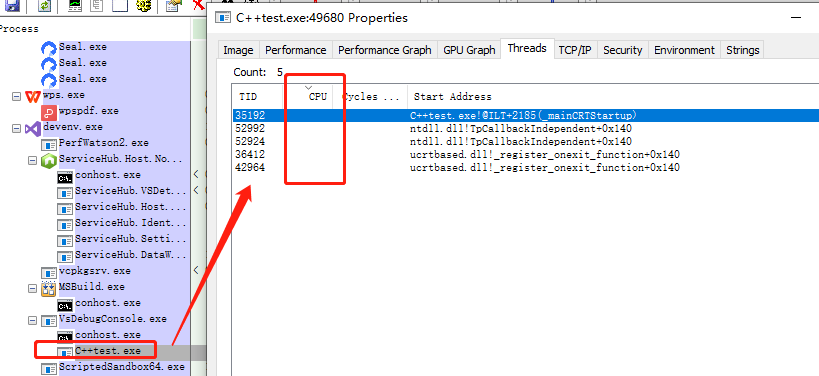

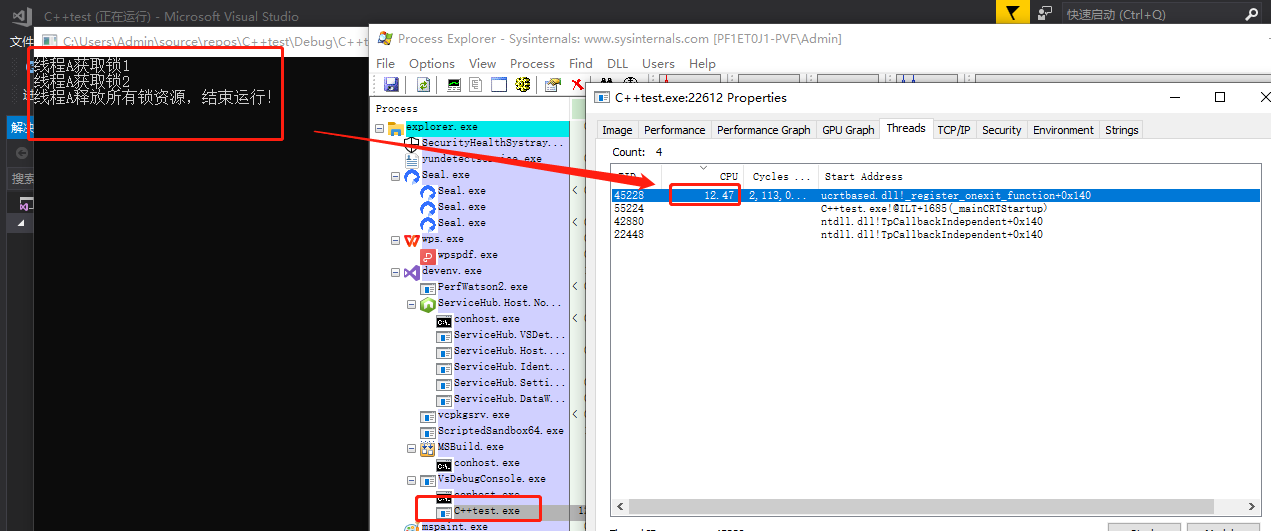

Open Process Explorer, find the process and check the thread status. The CPU utilization of the threads is 0, so this is not an endless loop but a deadlock:

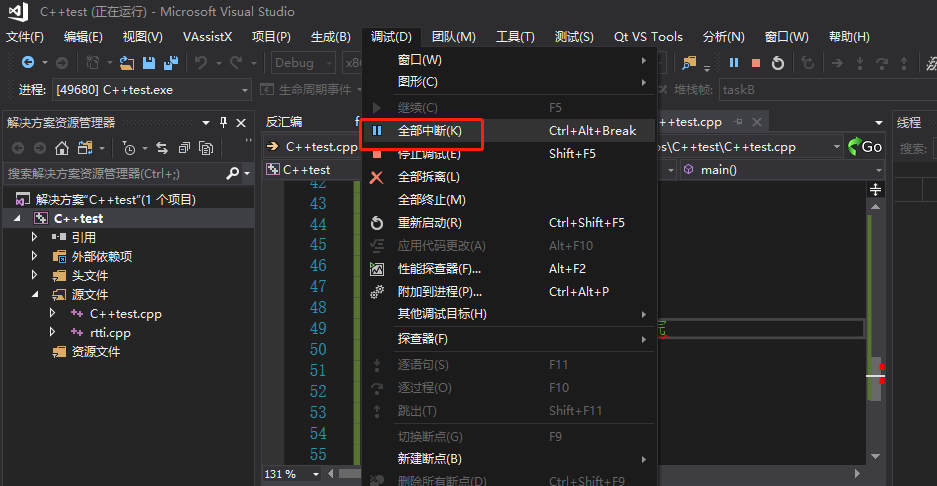

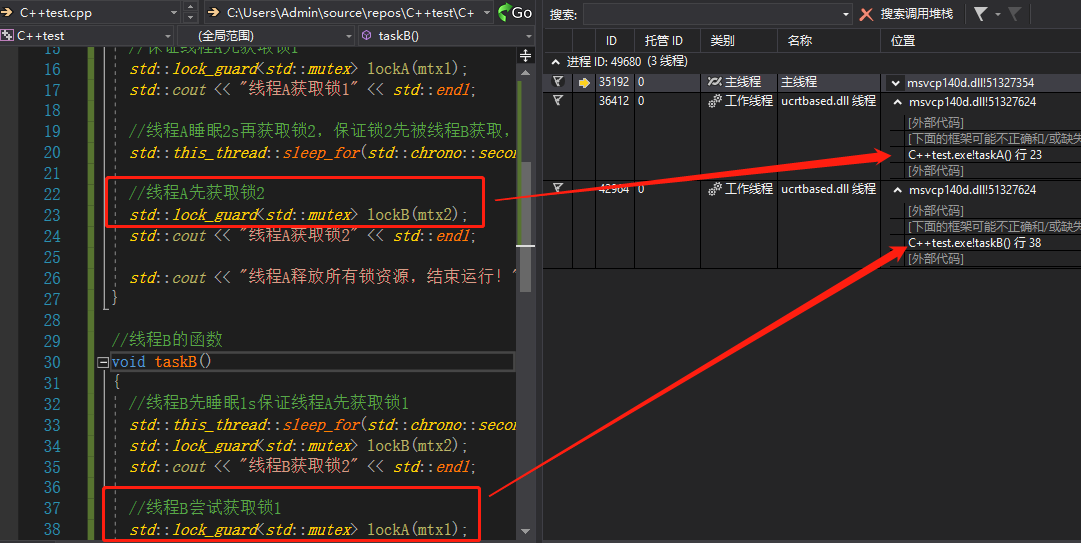

Click Break All in Visual Studio to view where each thread is executing:

Each worker thread is stopped at the point where it is requesting a lock, so the problem is a deadlock.

Meanwhile, the main thread is blocked in join(), waiting for the child threads to end.

What would an endless loop look like instead? Change thread B to spin in an endless loop:

#include <iostream> //std::cout

#include <thread> //std::thread

#include <mutex> //std::mutex, std::lock_guard

#include <chrono> //std::chrono::seconds

//Lock resource 1

std::mutex mtx1;

//Lock resource 2

std::mutex mtx2;

//Function of thread A

void taskA()

{

//Ensure that thread A acquires lock 1 first

std::lock_guard<std::mutex> lockA(mtx1);

std::cout << "thread A Acquire lock 1" << std::endl;

//Thread A sleeps for 2s and then acquires lock 2

std::this_thread::sleep_for(std::chrono::seconds(2));

//Thread A acquires lock 2 (thread B no longer takes it in this version)

std::lock_guard<std::mutex> lockB(mtx2);

std::cout << "thread A Acquire lock 2" << std::endl;

std::cout << "thread A Release all lock resources and end the operation!" << std::endl;

}

//Function of thread B

void taskB()

{

while (true)

{

}

}

int main()

{

//Create threads A and B

std::thread t1(taskA);

std::thread t2(taskB);

//The main thread waits for all child threads to finish executing

t1.join();

t2.join();

return 0;

}

Now the worker thread keeps one CPU core fully busy. My computer has 8 cores, so the process shows about 12.5% total CPU utilization (one core out of eight).