I. The meaning of concurrency

Concurrency takes extra effort to implement, but it is a powerful tool for building reliable, scalable programs.

Concurrency is usually described in two ways: "don't block the main thread" and "handle several things at the same time". The two are closely linked, but both are often misunderstood.

Don't block the main thread: the "main thread" here is really the calling thread, the thread executing the code, and is best understood as the execution context. Non-blocking behavior can be achieved in many ways, and concurrency is one of them.

Handling several things at the same time also refers to the context: it implies that the context is not blocked, so it is released in time to do other work. It can, of course, also be read as a multithreaded environment.

Note also that multithreading is only one implementation of concurrency. This article focuses on concurrency patterns, which are more general and abstract.

II. How the C# concurrency model evolved

- APM (Asynchronous Programming Model)

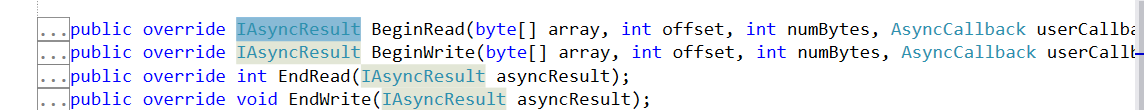

Early .NET asynchrony separated starting an operation from completing it: a BeginXxx method starts the work and an EndXxx method collects the result, with state passed through IAsyncResult. This makes asynchronous code harder to write and to read, so it is cumbersome in practice. Typical APIs come in BeginXxx/EndXxx pairs, for example Stream.BeginRead and Stream.EndRead.

- EAP (Event-based Asynchronous Pattern)

EAP pairs an XxxAsync method with an XxxCompleted event, for example WebClient.DownloadStringAsync and the DownloadStringCompleted event. Processing and completion are still separated, so EAP shares APM's readability problems. (Delegate BeginInvoke/EndInvoke, which pass data through IAsyncResult, follow the APM pattern rather than EAP.)

- TPL

The TPL introduced the Task type, and C# 5 added the async/await keywords on top of it (the Task-based Asynchronous Pattern, TAP). Processing and completion are written together, which simplifies development and greatly improves productivity.

Concurrency does not make any single piece of code run faster; it improves efficiency by making better use of resources. To see what that means, we need to look at the environment the CLR runs in.

The CLR thread pool distinguishes worker threads from I/O completion threads: worker threads execute code, and I/O threads handle I/O completion. Resource optimization therefore means that when the executing code reaches an operation that has to wait (mostly I/O), it releases the worker thread instead of waiting synchronously, so the thread can run other code while the I/O is in progress. When the I/O completes, the continuation is handed back to the scheduler (the UI/request context or the thread pool), which runs the rest of the method. Because worker threads are not blocked while waiting, overall throughput improves.

Asynchronous patterns are designed around this idea. The rest of this section looks at how async/await uses the context. Its scheduling follows these rules:

- The code before the first await (more precisely, the first await that does not complete synchronously) always runs on the calling thread.

- After that, it depends on the context. With a UI or request context, the code after each await resumes on that context by default, unless configured otherwise. Without such a context, for example on a thread-pool thread, it resumes on the thread pool.

In a UI/request context, to let the code after an await run on the thread pool instead, call ConfigureAwait(false) on the awaited Task.
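The first rule can be observed with a small console sketch. It assumes a console app, which has no SynchronizationContext, so it does not show a UI context; the helper name `RecordThreadsAsync` is hypothetical. The code before the first yielding await runs on the caller's thread, and the remainder runs on a thread-pool thread.

```csharp
using System;
using System.Threading.Tasks;

int callerThread = Environment.CurrentManagedThreadId;
Task<(int Before, int After)> pending = RecordThreadsAsync();
var (beforeId, afterId) = await pending;

Console.WriteLine($"caller={callerThread}, before await={beforeId}, after await={afterId}");

// Hypothetical helper: records which thread runs each part of the method.
static async Task<(int Before, int After)> RecordThreadsAsync()
{
    // Runs synchronously on the calling thread, before anything yields.
    int before = Environment.CurrentManagedThreadId;

    // Task.Delay does not complete synchronously, so the method yields here.
    // ConfigureAwait(false): the continuation does not need to return to a
    // captured UI/request context. In a console app it uses the thread pool anyway.
    await Task.Delay(50).ConfigureAwait(false);

    int after = Environment.CurrentManagedThreadId;
    return (before, after);
}
```

In a WPF or WinForms handler the same method without ConfigureAwait(false) would resume on the UI thread; with it, the code after the await runs on the pool and must not touch UI controls.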

III. Concurrency in C#

1. Task-based asynchronous model

Task-based async/await is the asynchronous model described in the previous section.

2. Parallel

Parallelism is also a form of concurrency. Choose the technique that fits the scenario to get the best efficiency: async/await suits I/O-intensive work, while Parallel suits compute-intensive work.

- Data parallelism and PLINQ

Data parallelism applies concurrency to data. In C# it targets collections, through Parallel.For, Parallel.ForEach and PLINQ.

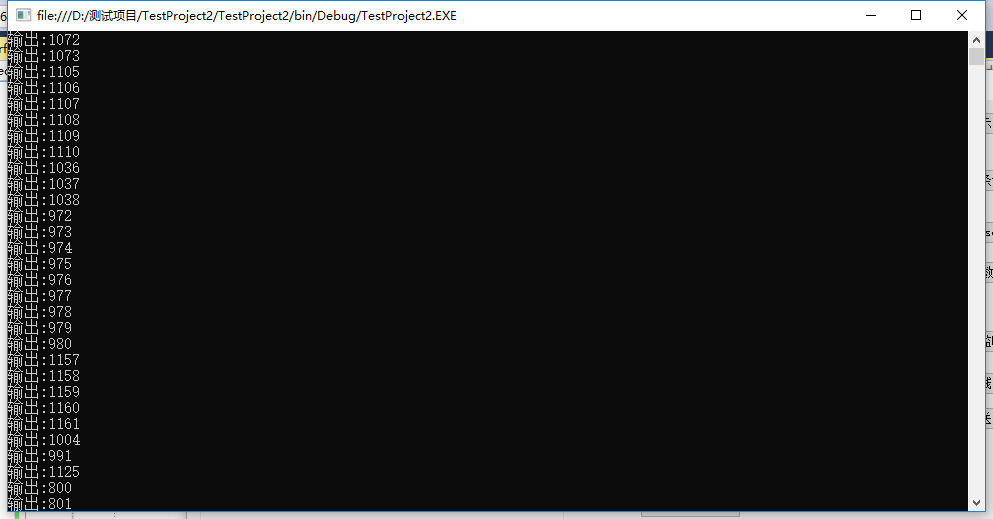

Parallel (System.Threading.Tasks.Parallel) makes full use of multiple cores to improve throughput. Note that items are processed out of order, so make sure the logic does not depend on ordering.

- Stateless scenario: each item can be processed independently

```csharp
var source = Enumerable.Range(0, 10000);
Parallel.ForEach(source, item =>
{
    Console.WriteLine($"output:{item}");
});
```

-

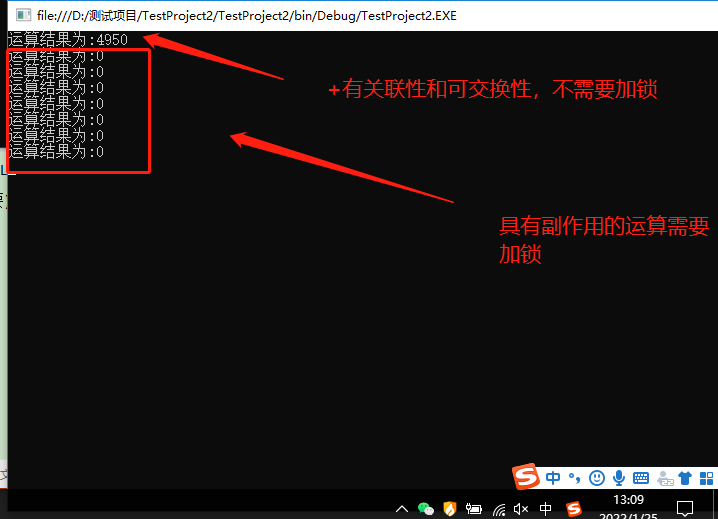

In the scenario where the data is stateful and needs to be regulated (PLINQ may be more suitable), attention should be paid to the lock of the processing result (if the processing has the property of monoid, it is not required)

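```csharp
var source = Enumerable.Range(0, 100);
long total = 0;
Parallel.ForEach<int, long>(
    source,
    () => 0L,                                        // localInit: one accumulator per partition
    (item, state, acc) => acc + item,                // body: touches only the local accumulator
    partial => Interlocked.Add(ref total, partial)); // localFinally: shared state, so synchronize
Console.WriteLine($"The operation result is: {total}");
```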

- PLINQ

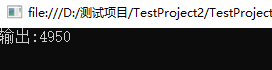

PLINQ builds on LINQ and works well for aggregation, in particular because its aggregation operators support reduction.

```csharp
var source = Enumerable.Range(0, 100);
var result = source.AsParallel().Sum(); // aggregation
Console.WriteLine($"output:{result}");
```
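The ordering caveat mentioned for Parallel applies to PLINQ as well. A minimal sketch: without AsOrdered the output order is not guaranteed, and with AsOrdered PLINQ preserves the source order at some extra cost.

```csharp
using System;
using System.Linq;

int[] source = Enumerable.Range(0, 20).ToArray();

// Default PLINQ: results may come back in any order.
int[] unordered = source.AsParallel().Select(x => x * 10).ToArray();

// AsOrdered: PLINQ keeps the output in source order.
int[] ordered = source.AsParallel().AsOrdered().Select(x => x * 10).ToArray();

Console.WriteLine(string.Join(",", ordered));
```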

FP: a Reduce operator for PLINQ

```csharp
using System;
using System.Linq;

public static class ParallelQueryExtensions // class name assumed; extension methods need a static class
{
    // Reduce without a seed: the first element is the starting value
    public static T Reduce<T>(this ParallelQuery<T> query, Func<T, T, T> func) =>
        query.Aggregate(func);

    // Reduce with a seed of the same type
    public static T Reduce<T>(this ParallelQuery<T> query, T seed, Func<T, T, T> func) =>
        query.Aggregate(seed, func);

    // Reduce into an accumulator of a different type
    public static TSeed Reduce<TSeed, TMessage>(this ParallelQuery<TMessage> query, TSeed seed, Func<TSeed, TMessage, TSeed> func) =>
        query.Aggregate(seed, func);
}
```
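A sketch of a reduction where the partitions are folded independently and then merged, using PLINQ's `Aggregate` overload that takes a seed factory and a combine function. It relies on (long, +, 0) being a monoid: the grouping of the additions does not change the result.

```csharp
using System;
using System.Linq;

var source = Enumerable.Range(1, 1000);

long total = source.AsParallel().Aggregate(
    () => 0L,                  // seedFactory: a fresh seed (the identity) per partition
    (acc, item) => acc + item, // updateAccumulatorFunc: fold items within one partition
    (a, b) => a + b,           // combineAccumulatorsFunc: merge partition results
    acc => acc);               // resultSelector: final projection

Console.WriteLine(total);
```

An operation that is not associative, such as subtraction, would give results that depend on how PLINQ happens to partition the input.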

- Task Parallel

Task parallelism means running several independent methods at the same time; they should not affect one another.

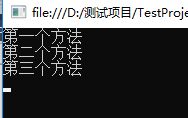

```csharp
Parallel.Invoke(
    () => { Console.WriteLine("First method"); },
    () => { Console.WriteLine("The second method"); },
    () => { Console.WriteLine("The third method"); });
```

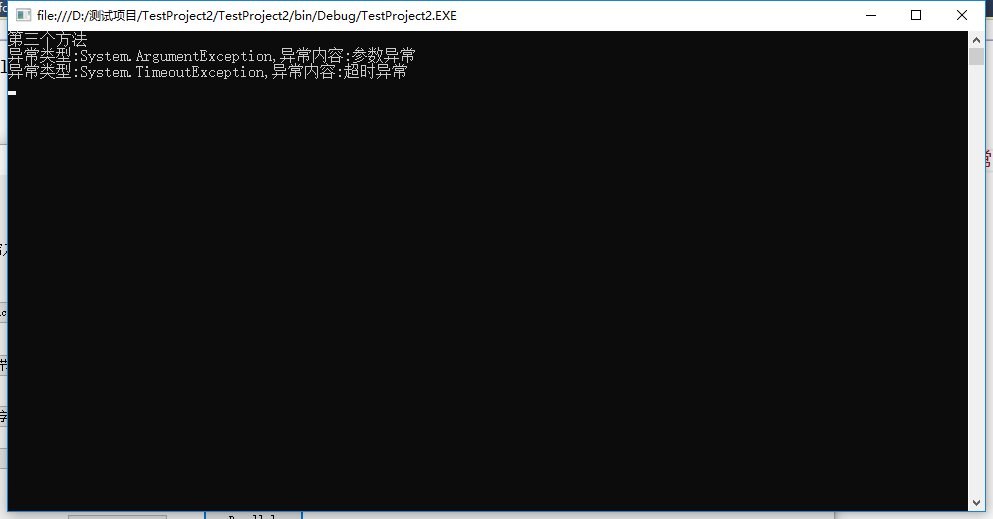

- Exception handling

When Parallel runs work in parallel, the exceptions from all branches are collected and rethrown as a single AggregateException (.NET does not silently suppress them).

```csharp
try
{
    Parallel.Invoke(
        () => throw new TimeoutException("Timeout exception"),
        () => throw new ArgumentException("Parameter exception"),
        () => Console.WriteLine("The third method"));
}
catch (AggregateException ex)
{
    // Every failure is wrapped in one AggregateException
    ex.Handle(exc =>
    {
        Console.WriteLine($"Exception type:{exc.GetType()}, message:{exc.Message}");
        return true; // mark this exception as handled
    });
}
```

3. Data structures

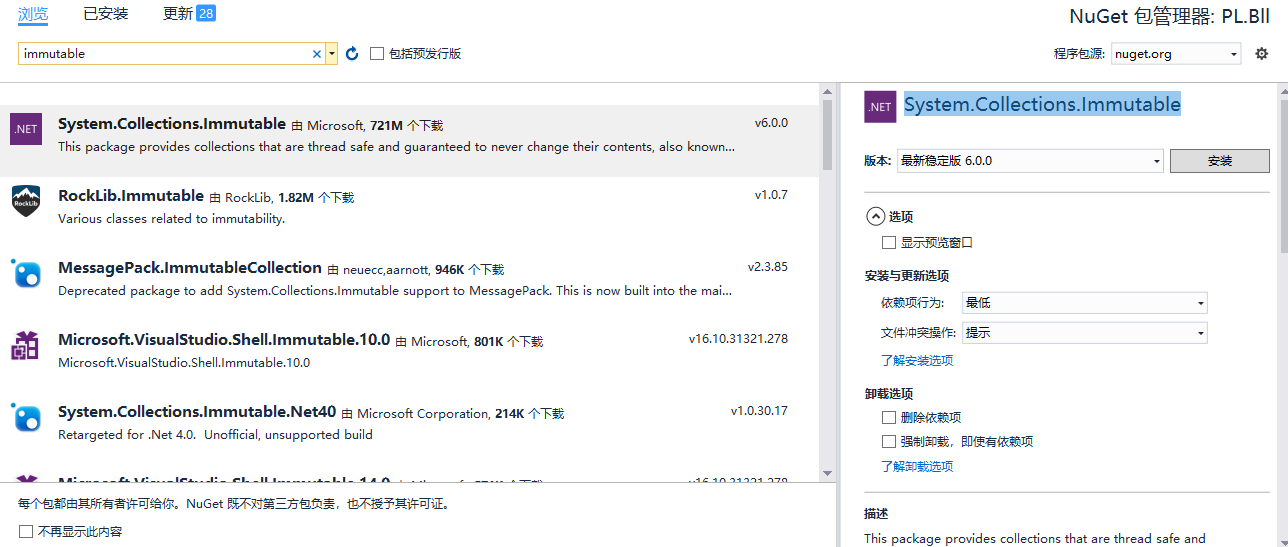

- Immutable data structures

Library: System.Collections.Immutable

Immutability is an excellent way to share data safely between concurrent tasks. Common immutable data structures include:

ImmutableQueue -- immutable queue

ImmutableStack -- immutable stack

ImmutableList -- immutable list

ImmutableHashSet -- immutable set

ImmutableDictionary -- immutable dictionary

ImmutableSortedDictionary -- immutable sorted dictionary
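A minimal sketch of why immutability helps: every "mutating" call returns a new collection and leaves the original untouched, so any thread still holding the old instance can read it without locks. System.Collections.Immutable is included in modern .NET (a NuGet package on older frameworks).

```csharp
using System;
using System.Collections.Immutable;

ImmutableList<int> empty = ImmutableList<int>.Empty;

// Each Add returns a new list; 'empty' and 'one' are never modified.
ImmutableList<int> one = empty.Add(1);
ImmutableList<int> two = one.Add(2);

// Stacks work the same way: Pop returns a new stack without the top item.
ImmutableStack<string> stack = ImmutableStack<string>.Empty.Push("a").Push("b");
string top = stack.Peek();
ImmutableStack<string> rest = stack.Pop();

Console.WriteLine($"{empty.Count} {one.Count} {two.Count} {top} {rest.Peek()}");
```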


- Thread-safe data structures

Library: System.Collections.Concurrent

Thread-safe collections largely rely on CAS (Compare-And-Swap), an optimistic-locking style of synchronization; some, such as ConcurrentDictionary, also use fine-grained locks for writes. Common thread-safe collections:

ConcurrentDictionary -- thread-safe dictionary

ConcurrentQueue -- thread-safe queue

ConcurrentStack -- thread-safe stack

ConcurrentBag -- thread-safe bag
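A minimal sketch of concurrent updates without an explicit lock: many iterations update the same dictionary keys through `AddOrUpdate`, and many threads enqueue into a `ConcurrentQueue`. Note that `AddOrUpdate` may invoke the update delegate more than once under contention, so the delegate should be side-effect free.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

var counts = new ConcurrentDictionary<string, int>();
string[] words = { "a", "b", "a", "c", "a", "b" };

// Parallel word count: AddOrUpdate retries internally if another thread wins the race.
Parallel.For(0, 1000, i =>
{
    string word = words[i % words.Length];
    counts.AddOrUpdate(word, 1, (_, old) => old + 1);
});

var queue = new ConcurrentQueue<int>();
Parallel.For(0, 100, i => queue.Enqueue(i));

Console.WriteLine($"a={counts["a"]} b={counts["b"]} c={counts["c"]} queued={queue.Count}");
```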

- Blocking data structures

Library: System.Collections.Concurrent

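Blocking collections implement the producer/consumer pattern naturally and offer operations other collections lack, such as marking the collection as complete (CompleteAdding). The pattern worth noting: when a thread polls the collection, let the collection's completion end the loop (Take throws InvalidOperationException once the collection is complete and empty) instead of a separate flag variable.

Commonly used blocking data structures:

BlockingCollection<T> -- blocking queue (backed by ConcurrentQueue<T> by default)

BlockingCollection<T> over ConcurrentStack<T> -- blocking stack

BlockingCollection<T> over ConcurrentBag<T> -- blocking bag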
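A minimal producer/consumer sketch with `BlockingCollection<T>`. The bounded capacity throttles the producer, `CompleteAdding` replaces a "stop" flag, and `GetConsumingEnumerable` ends on its own once the collection is complete and drained. The second part shows a blocking stack built by passing a `ConcurrentStack<T>` to the constructor.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Bounded to 5 items: Add blocks while the collection is full.
using var queue = new BlockingCollection<int>(boundedCapacity: 5);

Task producer = Task.Run(() =>
{
    for (int i = 1; i <= 100; i++)
        queue.Add(i);
    queue.CompleteAdding(); // "no more items", instead of a flag variable
});

long sum = 0;
Task consumer = Task.Run(() =>
{
    // Blocks while empty; the loop ends after CompleteAdding once the queue is drained.
    foreach (int item in queue.GetConsumingEnumerable())
        sum += item;
});

Task.WaitAll(producer, consumer);

// Blocking stack: same API, LIFO order from the underlying ConcurrentStack.
using var stack = new BlockingCollection<int>(new ConcurrentStack<int>());
stack.Add(1);
stack.Add(2);
int last = stack.Take();

Console.WriteLine($"sum={sum} last={last}");
```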

4. Cancellation

Cancellation is part of a reliable system, and task-based code should always consider how it will be canceled. .NET provides CancellationTokenSource, which hands out CancellationToken instances. Cancellation is cooperative: the token only signals a request, so inside a polling loop you must check it yourself. A CancellationToken supports two ways of responding:

- Check the flag: cts.Token.IsCancellationRequested

- Throw to cancel: cts.Token.ThrowIfCancellationRequested(), which raises System.OperationCanceledException

Throwing is recommended; many libraries stop work this way.
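A minimal sketch of cooperative cancellation in a polling loop. The loop checks the token on every iteration and exits by throwing; because the same token is also passed to `Task.Run`, the task ends in the Canceled state rather than Faulted. The `Poll` function is hypothetical.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

using var cts = new CancellationTokenSource();

Task worker = Task.Run(() => Poll(cts.Token), cts.Token);

cts.CancelAfter(TimeSpan.FromMilliseconds(50));

bool canceled = false;
try
{
    await worker;
}
catch (OperationCanceledException)
{
    canceled = true;
}

Console.WriteLine($"canceled={canceled}, status={worker.Status}");

// Hypothetical polling loop: nothing stops it except its own token check.
static void Poll(CancellationToken token)
{
    while (true)
    {
        token.ThrowIfCancellationRequested(); // throws OperationCanceledException
        Thread.Sleep(1);                      // stand-in for one unit of polled work
    }
}
```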

Other features of CancellationTokenSource:

1. Registering callbacks

A callback registered on the token runs when cancellation is triggered (when Cancel() is called). Usage:

```csharp
var cts = new CancellationTokenSource();
cts.Token.Register(() =>
{
    Console.WriteLine("Callback when canceling");
});
```

2. Linked cancellation tokens

CancellationTokenSources can be linked: the linked source is canceled as soon as any of the tokens it was created from is canceled. Usage:

```csharp
var cts = new CancellationTokenSource();
var cts2 = new CancellationTokenSource();
var cts3 = CancellationTokenSource.CreateLinkedTokenSource(cts.Token, cts2.Token);
```

3. Delayed cancellation

CancellationTokenSource can be configured to cancel after a delay. Usage:

```csharp
var cts = new CancellationTokenSource();
cts.CancelAfter(TimeSpan.FromSeconds(2)); // cancel after 2 seconds
```
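The linking and callback features can be combined, for example to stop work on either a user request or a timeout. In this sketch the variable names are illustrative, and `timeoutCts.Cancel()` stands in for a timeout firing. Canceling one parent cancels the linked source, runs its registered callback synchronously, and leaves the other parent untouched.

```csharp
using System;
using System.Threading;

using var userCts = new CancellationTokenSource();
using var timeoutCts = new CancellationTokenSource();

// Canceled as soon as either parent is canceled.
using var linked = CancellationTokenSource.CreateLinkedTokenSource(userCts.Token, timeoutCts.Token);

bool callbackRan = false;
linked.Token.Register(() => callbackRan = true);

timeoutCts.Cancel(); // simulate the timeout firing

Console.WriteLine($"linked={linked.IsCancellationRequested}, user={userCts.IsCancellationRequested}, callback={callbackRan}");
```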

5. Exception handling

In the task-based asynchronous pattern, .NET lets you catch exceptions with an ordinary try/catch, but a few scenarios need attention:

- Parallel.Invoke

- Task.WhenAll(), Task.WhenAny(), Task.WaitAll(), Task.WaitAny()

In these cases the work is performed by several tasks or methods, so failures are reported as an AggregateException (Wait/WaitAll throw it directly, while awaiting WhenAll rethrows only the first inner exception and the task's Exception property holds them all). Imperative exception handling tends to follow one fixed template and adds side effects, so FP encourages representing outcomes as Success and Fault states instead, which also forces callers to handle them. The Option and Result patterns are introduced in the FP section below; Hsl uses the Result pattern.

Also note the clear difference between Task.WhenXxx and Task.WaitXxx: WhenAll/WhenAny return a Task to await in asynchronous code, while WaitAll/WaitAny block the calling thread and belong in synchronous code.
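A sketch of the WhenAll behavior described above, with two already-faulted tasks standing in for real operations: the await surfaces only the first exception, while the task's `Exception` property keeps every failure.

```csharp
using System;
using System.Threading.Tasks;

Task failA = Task.FromException(new TimeoutException("A"));
Task failB = Task.FromException(new ArgumentException("B"));
Task all = Task.WhenAll(failA, failB);

Exception? first = null;
try
{
    await all; // rethrows only the first inner exception
}
catch (Exception ex)
{
    first = ex;
}

// The faulted task still holds all failures in an AggregateException.
int failureCount = all.Exception!.InnerExceptions.Count;
Console.WriteLine($"first={first?.GetType().Name}, failures={failureCount}");
```

To handle every failure after an await, inspect `all.Exception.InnerExceptions` rather than relying on the caught exception alone.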

6. Locks and CAS (atomic operations)

This article is about asynchronous patterns, so thread-synchronization techniques are not covered in depth; see the article "multithreading". Here we introduce atomic operations based on CAS.

Atomic operations can replace locks in a way similar to optimistic locking. They are mainly used when multiple threads share data, and .NET exposes them through the Interlocked class.

Scenario 1: atomic operations on numbers

```csharp
var res = 10;
Interlocked.Increment(ref res);  // atomically add 1
Interlocked.Decrement(ref res);  // atomically subtract 1
Interlocked.Add(ref res, 10);    // atomically add any value
```

Scenario 2: a custom atomic class for sharing data between threads

For data shared between threads (a situation that should be avoided where possible), Interlocked can be used to update it atomically. Below is a custom AtomUtil:

```csharp
using System;
using System.Threading;

public sealed class AtomUtil<T> where T : class
{
    public AtomUtil(T value)
    {
        this.value = value;
    }

    private volatile T value; // current value of the instance
    public T Value => value;

    // CAS loop: compute a new value from the current one and publish it only if
    // no other thread changed it in the meantime; otherwise retry.
    // Returns the value that was replaced.
    public T Swap(Func<T, T> factory)
    {
        T original, temp;
        do
        {
            original = value;
            temp = factory(original);
        }
        while (Interlocked.CompareExchange(ref value, temp, original) != original);
        return original;
    }
}
```
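The same CAS loop pairs naturally with the immutable collections from section 3. In this sketch a hypothetical `Swap` helper mirrors `AtomUtil.Swap` on a plain field, and 1000 parallel iterations append to one shared `ImmutableList` without a lock; no update is lost because a thread that loses the race simply recomputes from the newer list. The library also offers `ImmutableInterlocked.Update` for this job.

```csharp
using System;
using System.Collections.Immutable;
using System.Threading;
using System.Threading.Tasks;

ImmutableList<int> shared = ImmutableList<int>.Empty;

Parallel.For(0, 1000, i => Swap(ref shared, list => list.Add(i)));

Console.WriteLine(shared.Count);

// Hypothetical helper: optimistic update of a reference with CompareExchange.
static T Swap<T>(ref T location, Func<T, T> update) where T : class
{
    T original, updated;
    do
    {
        original = Volatile.Read(ref location);
        updated = update(original); // must be side-effect free: it may run several times
    }
    while (Interlocked.CompareExchange(ref location, updated, original) != original);
    return original;
}
```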

IV. Pattern summary

1. TDF

TDF is short for TPL Dataflow. Library: Microsoft.Tpl.Dataflow (now published as System.Threading.Tasks.Dataflow)

TDF processes data flows. Blocks fall into source blocks, transform blocks and target blocks: source blocks produce data, transform blocks process it (similar to Map/Bind in FP), and target blocks consume it.

TDF can implement the actor model, similar to F#'s MailboxProcessor. Each block consists of a message buffer, state, and processing/output.

- Basic blocks

Commonly used blocks include BufferBlock, TransformBlock and ActionBlock.

BufferBlock buffers data and can throttle producers (by setting BoundedCapacity on DataflowBlockOptions).

TransformBlock is used for data conversion processing.

ActionBlock is used to execute code.

All three blocks are used in the agent (actor model) implementation.

Chaining blocks has the same advantage as CQRS in DDD: it helps organize business processes (and event sourcing in DDD can additionally provide event traceability).

- Block completion

Completing a pipeline takes two steps:

- Link the blocks with PropagateCompletion = true, so completion and exceptions flow downstream

- Call Complete() on the first block, then wait for the last block to complete

The example code is as follows:

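```csharp
using System;
using System.Threading.Tasks;
using System.Threading.Tasks.Dataflow;

var bufferBlock = new BufferBlock<int>();
var transformBlock = new TransformBlock<int, string>(n => $"item {n}");
var actionBlock = new ActionBlock<string>(s => Console.WriteLine(s));

// 1. Configure completion propagation
var options = new DataflowLinkOptions
{
    PropagateCompletion = true // pass exceptions and completion downstream
};

// 2. Link the blocks
bufferBlock.LinkTo(transformBlock, options);
transformBlock.LinkTo(actionBlock, options);

for (int i = 0; i < 3; i++)
    bufferBlock.Post(i);

// 3. Complete the head; propagation completes the rest, so awaiting the tail is enough
bufferBlock.Complete();
await actionBlock.Completion;
```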

- Block exception handling

- Agent

An agent implements the actor model: lightweight producer/consumer processing that can be used to decouple business logic.

Agents can be stateless (processing only), stateful (reduction), or two-way (request/reply). Implementations:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;
using System.Threading.Tasks.Dataflow;

// Assumed shape of IAgent<TMessage>; the original does not show it.
public interface IAgent<TMessage>
{
    void Send(TMessage data);
    Task SendAsync(TMessage data);
}

public sealed class StatefulDataFlowAgent<TState, TMessage> : IAgent<TMessage>
{
    public StatefulDataFlowAgent(TState initial, Func<TState, TMessage, Task<TState>> action, CancellationTokenSource cts)
    {
        AccState = initial;
        var options = new ExecutionDataflowBlockOptions
        {
            CancellationToken = cts == null ? CancellationToken.None : cts.Token,
        };
        // MaxDegreeOfParallelism defaults to 1: messages are processed one at a time,
        // so AccState is never updated concurrently.
        actionBlock = new ActionBlock<TMessage>(async data => AccState = await action(AccState, data), options);
    }

    public TState AccState;                             // accumulated state
    private readonly ActionBlock<TMessage> actionBlock; // executes each message

    // Add data synchronously (queues the message and returns)
    public void Send(TMessage data) => actionBlock.Post(data);

    // Add data asynchronously
    public Task SendAsync(TMessage data) => actionBlock.SendAsync(data);
}
```

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;
using System.Threading.Tasks.Dataflow;

// Assumed shape of IReplayAgent; the original does not show it.
public interface IReplayAgent<TMessage, TReplay> : IAgent<TMessage>
{
    TReplay Ask(TMessage data);
    Task<TReplay> AskAsync(TMessage data);
}

public sealed class StatefulReplayDataFlowAgent<TState, TMessage, TReplay> : IReplayAgent<TMessage, TReplay>
{
    public StatefulReplayDataFlowAgent(
        TState initial,
        Func<TState, TMessage, Task<TState>> projection,
        Func<TState, TMessage, Task<Tuple<TState, TReplay>>> ask,
        CancellationTokenSource cts)
    {
        AccState = initial;
        var options = new ExecutionDataflowBlockOptions
        {
            CancellationToken = cts == null ? CancellationToken.None : cts.Token,
        };
        actionBlock = new ActionBlock<Tuple<TMessage, TaskCompletionSource<TReplay>>>(async data =>
        {
            if (data.Item2 == null)
            {
                // One-way message: only update the state
                AccState = await projection(AccState, data.Item1);
                return;
            }
            try
            {
                // Request/reply: update the state, then complete the caller's task
                var result = await ask(AccState, data.Item1);
                AccState = result.Item1;
                data.Item2.SetResult(result.Item2);
            }
            catch (Exception ex)
            {
                data.Item2.SetException(ex); // otherwise a failing ask leaves the caller waiting forever
            }
        }, options);
    }

    public TState AccState; // accumulated state
    private readonly ActionBlock<Tuple<TMessage, TaskCompletionSource<TReplay>>> actionBlock;

    // Add data synchronously
    public void Send(TMessage data) =>
        actionBlock.Post(Tuple.Create<TMessage, TaskCompletionSource<TReplay>>(data, null));

    // Add data asynchronously
    public Task SendAsync(TMessage data) =>
        actionBlock.SendAsync(Tuple.Create<TMessage, TaskCompletionSource<TReplay>>(data, null));

    // Synchronous request/reply (blocks the caller; avoid on UI/request threads)
    public TReplay Ask(TMessage data) => AskAsync(data).Result;

    // Asynchronous request/reply
    public Task<TReplay> AskAsync(TMessage data)
    {
        // RunContinuationsAsynchronously keeps the caller's continuation off the agent's thread
        var tcs = new TaskCompletionSource<TReplay>(TaskCreationOptions.RunContinuationsAsynchronously);
        actionBlock.Post(Tuple.Create(data, tcs));
        return tcs.Task;
    }
}
```

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;
using System.Threading.Tasks.Dataflow;

public sealed class StatelessDataFlowAgent<T> : IAgent<T>
{
    public StatelessDataFlowAgent(Func<T, Task> action, CancellationTokenSource cts)
    {
        var options = new ExecutionDataflowBlockOptions
        {
            CancellationToken = cts == null ? CancellationToken.None : cts.Token,
        };
        actionBlock = new ActionBlock<T>(action, options);
    }

    private readonly ActionBlock<T> actionBlock;

    // Add data synchronously
    public void Send(T data) => actionBlock.Post(data);

    // Add data asynchronously
    public Task SendAsync(T data) => actionBlock.SendAsync(data);
}
```

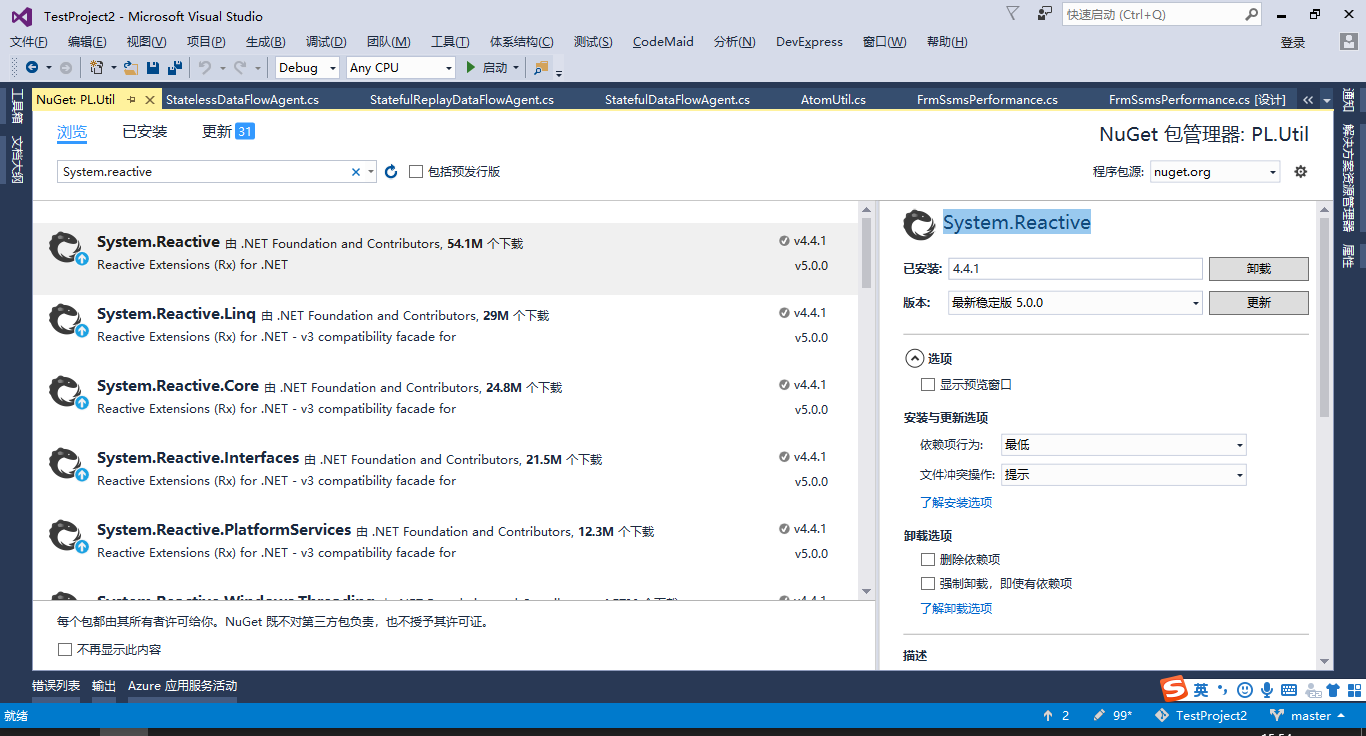

2. Rx

Library: System.Reactive

Rx (Reactive Extensions) handles continuous streams of data. It pushes data instead of pulling it and has a functional style. It is currently useful in two areas:

- UI notification: throttle updates so the UI thread does not appear frozen

- Observer pattern (Subject)

Rx uses .NET's IObservable<T> and IObserver<T>: IObservable<T> is the publisher that pushes data, and IObserver<T> is the subscriber that receives it.

This relates to LINQ in .NET; if you are interested, compare IEnumerable, IQueryable and IObservable (LINQ to Events).

- Rx conversion

Rx can convert to and from events, the TPL, and more.

```csharp
// Rx registration; FileWatcher is a System.IO.FileSystemWatcher
var changedObservable = Observable.FromEventPattern<FileSystemEventArgs>(FileWatcher, "Changed");
var createdObservable = Observable.FromEventPattern<FileSystemEventArgs>(FileWatcher, "Created");
var deletedObservable = Observable.FromEventPattern<FileSystemEventArgs>(FileWatcher, "Deleted");
```

- Rx processing

Buffering (Buffer), windowing (Window), throttling (Throttle), timeouts (Timeout), sampling (Sample)

Rx has very powerful processing capabilities and a rich API in System.Reactive.Linq, which rewards continued study.

3. Functional programming (FP)

- Pure functions

A pure function's output is determined only by its input, and it has no side effects.

- Side effects

- Throwing exceptions

- I/O

- Reading or writing global variables (state)

- Exception handling

In FP, exceptions are side effects. Instead of throwing, a function returns a state that represents success or failure, and the caller inspects that state. Option has Some and None; Result has success and failure. Result is the more complete of the two; an implementation:

```csharp
using System;

public class Result<T>
{
    // Success
    public Result(T ok)
    {
        Ok = ok;
        Error = default(Exception);
    }

    // Failure
    public Result(Exception ex)
    {
        Error = ex;
        Ok = default(T);
    }

    public T Ok { get; }                   // value on success
    public Exception Error { get; }        // exception on failure
    public bool IsFailed => Error != null; // did the operation fail?
    public bool IsOk => !IsFailed;         // did the operation succeed?

    // Map either outcome to a single result
    public R Match<R>(Func<T, R> okMap, Func<Exception, R> failureMap) =>
        IsOk ? okMap(Ok) : failureMap(Error);

    // Run an action for the actual outcome
    public void Match(Action<T> okMap, Action<Exception> failureMap)
    {
        if (IsOk) okMap?.Invoke(Ok);
        else failureMap?.Invoke(Error);
    }

    // Implicit conversions: a method can simply return a value or an exception
    public static implicit operator Result<T>(T ok) => new Result<T>(ok);
    public static implicit operator Result<T>(Exception ex) => new Result<T>(ex);
}
```

FP can also handle exceptions with functions. The following Catch function handles an exception without side effects:

```csharp
using System;
using System.Threading.Tasks;

public static class TaskCatchExtensions // class name assumed; extension methods need a static class
{
    public static Task<T> Catch<T, TError>(this Task<T> task, Func<TError, T> errorHandle)
        where TError : Exception
    {
        var tcs = new TaskCompletionSource<T>();
        task.ContinueWith(innerTask =>
        {
            if (innerTask.IsFaulted && innerTask.Exception?.InnerException is TError error)
                tcs.SetResult(errorHandle(error)); // expected error: recover with a value
            else if (innerTask.IsCanceled)
                tcs.SetCanceled();                 // propagate cancellation
            else if (innerTask.IsFaulted)          // unexpected error: pass it on
                tcs.SetException(innerTask.Exception?.InnerException ?? new InvalidOperationException());
            else
                tcs.SetResult(innerTask.Result);   // success
        });
        return tcs.Task;
    }
}
```

- Function composition

Monoids, monads and functors come from category theory.

A monoid is a binary operation on a single type that is associative and has an identity element, such as + with 0 or * with 1. Because grouping does not change the result, partial results computed concurrently can be combined safely; if the operation is also commutative, as + and * are, the order does not matter either.

A monad provides Return and Bind: Return lifts a value into the monadic context, and Bind chains functions that return monadic values, which is how functions are composed.

A functor provides Map, which applies an ordinary function to a value inside a context such as Task<T>. Breaking a multi-parameter function into a chain of single-parameter functions is currying, which also helps with function composition.
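A sketch of these ideas applied to `Task<T>`. The helper names `Return`, `Bind`, `Map` and `ParseAsync` are hypothetical local functions, not a library API: `Return` lifts a value, `Bind` chains a step that itself returns a Task (monad), and `Map` applies a plain function to the eventual value (functor).

```csharp
using System;
using System.Threading.Tasks;

Task<int> parsed = Bind(Return("21"), ParseAsync);
Task<int> doubled = Map(parsed, x => x * 2);

Console.WriteLine(await doubled);

// Return: lift a plain value into Task<T>.
static Task<T> Return<T>(T value) => Task.FromResult(value);

// Bind: run the next asynchronous step on the result of the previous one.
static async Task<R> Bind<T, R>(Task<T> task, Func<T, Task<R>> next) => await next(await task);

// Map: apply an ordinary function inside the Task.
static async Task<R> Map<T, R>(Task<T> task, Func<T, R> map) => map(await task);

// Hypothetical asynchronous step used for the demonstration.
static Task<int> ParseAsync(string text) => Task.FromResult(int.Parse(text));
```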

- Fork/Join

Fork/Join is very similar to MapReduce: it maps the data and then reduces it, supports throttling, and suits I/O-intensive work. The code:

```csharp
using PL.Util.FP.Agent;
using PL.Util.FP.Agent.Service;
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;
using System.Threading.Tasks.Dataflow;

namespace PL.Util.FP
{
    public static class ForkJoinUtil
    {
        /// <summary>
        /// IEnumerable extension implementing the Fork/Join pattern
        /// </summary>
        public static async Task<R> ForkJoin<T1, T2, R>(
            this IEnumerable<T1> source,
            Func<T1, Task<T2>> map,
            Func<R, T2, Task<R>> aggregate,
            R initialState,
            CancellationTokenSource cts,
            int partitionLevel = 8,
            int boundCapacity = 20)
        {
            cts = cts ?? new CancellationTokenSource();

            // Input buffer, bounded so the producer is throttled
            var inputBuffer = new BufferBlock<T1>(new DataflowBlockOptions
            {
                CancellationToken = cts.Token,
                BoundedCapacity = boundCapacity,
            });

            // Map step, up to partitionLevel items in parallel
            var blockOptions = new ExecutionDataflowBlockOptions
            {
                CancellationToken = cts.Token,
                BoundedCapacity = boundCapacity,
                MaxDegreeOfParallelism = partitionLevel,
            };
            var mapperBlock = new TransformBlock<T1, T2>(map, blockOptions);

            // Link buffer -> mapper
            inputBuffer.LinkTo(mapperBlock, new DataflowLinkOptions { PropagateCompletion = true });

            // Reduce step: a stateful agent (AgentUtil comes from PL.Util.FP.Agent)
            var reduceAgent = AgentUtil.Start(initialState, aggregate, cts);

            // Bridge TDF to Rx and feed each mapped item to the agent.
            // Caveat: the async lambda is fire-and-forget, so the last items may
            // still be queued in the agent when AccState is read below.
            IDisposable disposable = mapperBlock.AsObservable()
                .Subscribe(async item => await reduceAgent.SendAsync(item));

            // Fork: SendAsync waits when the buffer is full; Post would not accept the item
            foreach (var item in source)
                await inputBuffer.SendAsync(item);

            // Join: completion propagates from inputBuffer to mapperBlock
            inputBuffer.Complete();
            await mapperBlock.Completion;
            disposable.Dispose();

            // Return the reduced state
            var agent = (StatefulDataFlowAgent<R, T2>)reduceAgent;
            return agent.AccState;
        }
    }
}
```

- Object pool

An asynchronous object pool built with TDF:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;
using System.Threading.Tasks.Dataflow;

public class ObjectPoolAsyncUtil<T> : IDisposable
{
    public ObjectPoolAsyncUtil(int initialCount, Func<T> factory, CancellationToken token, int timeOut = 0)
    {
        this.timeOut = timeOut;
        bufferBlock = new BufferBlock<T>(new DataflowBlockOptions { CancellationToken = token });
        this.factory = factory;
        for (int i = 0; i < initialCount; i++)
            bufferBlock.Post(this.factory());
    }

    private readonly BufferBlock<T> bufferBlock; // holds idle objects
    private readonly Func<T> factory;            // creates new objects
    private readonly int timeOut;                // seconds to wait for an idle object

    // Return an instance to the pool
    public Task<bool> PutAsync(T t) => bufferBlock.SendAsync(t);

    // Get an instance: wait up to timeOut seconds, otherwise create a new one
    public Task<T> GetAsync()
    {
        var tcs = new TaskCompletionSource<T>();
        // CPS: continue when the receive finishes instead of blocking
        bufferBlock.ReceiveAsync(TimeSpan.FromSeconds(timeOut)).ContinueWith(t =>
        {
            if (t.IsFaulted)
            {
                if (t.Exception.InnerException is TimeoutException)
                    tcs.SetResult(factory());
                else
                    tcs.SetException(t.Exception.InnerExceptions);
            }
            else if (t.IsCanceled)
                tcs.SetCanceled();
            else
                tcs.SetResult(t.Result);
        });
        return tcs.Task;
    }

    // Stop accepting objects
    public void Dispose() => bufferBlock.Complete();
}
```

- Continuation-passing style (CPS)

Continuations provide a fully non-blocking concurrency model.

They are built with ContinueWith and TaskCompletionSource.
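A sketch of both tools. `SquareWithCallback` is a hypothetical callback-based API in which the caller passes "what to do next"; `SquareAsync` adapts it to a Task with TaskCompletionSource, and ContinueWith then attaches a further continuation. No thread blocks while waiting.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

Task<string> message = SquareAsync(7).ContinueWith(t => $"result={t.Result}");
Console.WriteLine(await message);

// Hypothetical callback-based API: success and error continuations are passed in.
static void SquareWithCallback(int input, Action<int> onSuccess, Action<Exception> onError)
{
    ThreadPool.QueueUserWorkItem(_ =>
    {
        try { onSuccess(checked(input * input)); }
        catch (Exception ex) { onError(ex); }
    });
}

// Adapter: the callbacks complete a TaskCompletionSource, turning CPS into a Task.
static Task<int> SquareAsync(int input)
{
    var tcs = new TaskCompletionSource<int>(TaskCreationOptions.RunContinuationsAsynchronously);
    SquareWithCallback(input, value => tcs.TrySetResult(value), ex => tcs.TrySetException(ex));
    return tcs.Task;
}
```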

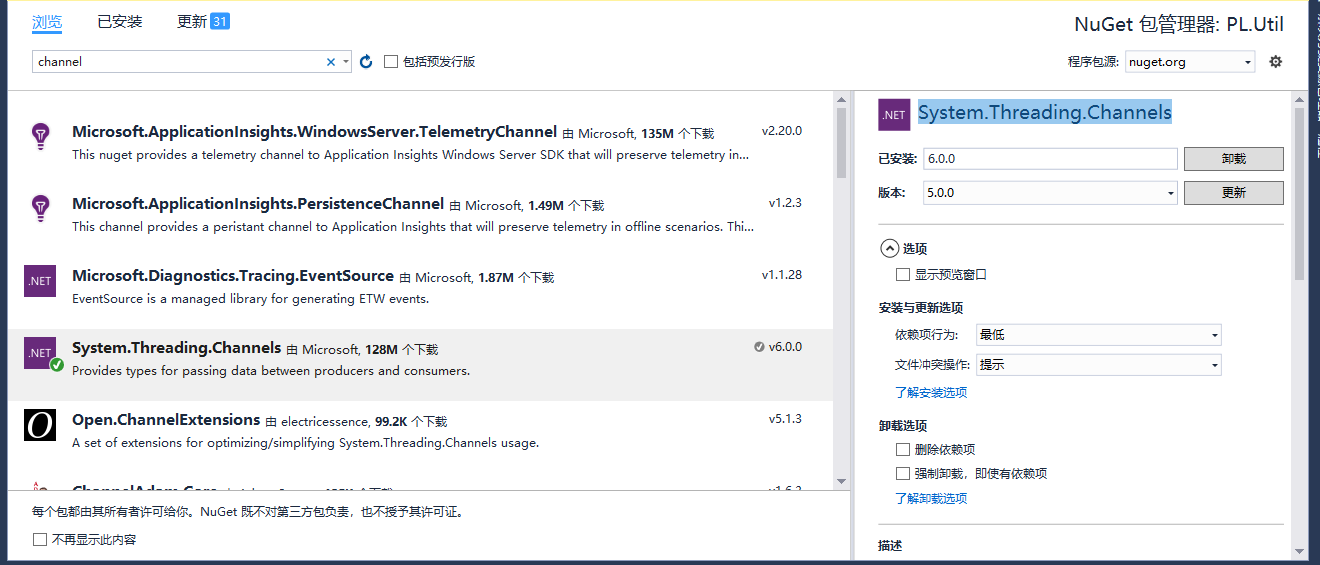

4. Producer/consumer: Channel

Library: System.Threading.Channels

It provides asynchronous bounded and unbounded queues (Channel.CreateBounded and Channel.CreateUnbounded).
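A minimal bounded-channel sketch: the writer waits asynchronously (without blocking a thread) when 10 items are buffered, `Complete` signals the end of the stream, and `ReadAllAsync` finishes once the channel is completed and drained. System.Threading.Channels is part of .NET Core 3.0 and later.

```csharp
using System;
using System.Threading.Channels;
using System.Threading.Tasks;

Channel<int> channel = Channel.CreateBounded<int>(new BoundedChannelOptions(10)
{
    FullMode = BoundedChannelFullMode.Wait, // WriteAsync waits while the channel is full
    SingleReader = true,
});

Task producer = Task.Run(async () =>
{
    for (int i = 1; i <= 100; i++)
        await channel.Writer.WriteAsync(i);
    channel.Writer.Complete(); // no more items
});

long sum = 0;
await foreach (int item in channel.Reader.ReadAllAsync())
    sum += item;

await producer;
Console.WriteLine(sum);
```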

V. Troubleshooting

1. Avoid blocking waits in a UI/request context

In a UI or request context, do not block on tasks with synchronous calls such as:

Task.Wait(), Task.WaitAll(), Task.Result, etc. The blocked thread is the one the continuation needs to resume on, so the application can deadlock and appear frozen.

2. Avoid async void

Do not use async void (except for event handlers): the caller cannot await it, and its exceptions cannot be caught by the caller.
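A sketch of the difference. With `async Task`, the exception is stored in the returned Task and rethrown at the await, so the caller's try/catch sees it. The hypothetical `FailAsync` is shown only in its `async Task` form; declared `async void`, there would be nothing to await, the exception would be raised on the SynchronizationContext (or the thread pool), and it could crash the process.

```csharp
using System;
using System.Threading.Tasks;

bool caught = false;
try
{
    await FailAsync(); // the exception surfaces here through the returned Task
}
catch (InvalidOperationException)
{
    caught = true;
}

Console.WriteLine($"caught={caught}");

// Hypothetical failing operation, correctly declared as async Task.
static async Task FailAsync()
{
    await Task.Yield();
    throw new InvalidOperationException("boom");
}
```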