Concurrent and asynchronous

Many scenarios call for concurrency. A responsive user interface, for example, must run time-consuming tasks concurrently so that the UI keeps responding to the user. Parallel programming splits a workload across multiple cores, so multi-core and multiprocessor machines can run compute-intensive code faster.

The mechanism that lets a program execute code simultaneously is called multithreading. Both the CLR and the operating system support multithreading, and it is the foundation of concurrency. Before introducing concurrent programming, we therefore need a grounding in threads, especially in how threads share state.

Threads

Each thread runs inside an operating system process, which provides an isolated environment in which the program runs. In a single-threaded program, only one thread runs in the process, so it has exclusive use of that environment. In a multithreaded program, several threads run in one process and share the same execution environment (memory in particular). This is partly why multithreading is useful: one thread can fetch data in the background while another displays the data as it arrives. Data shared in this way is called shared state.

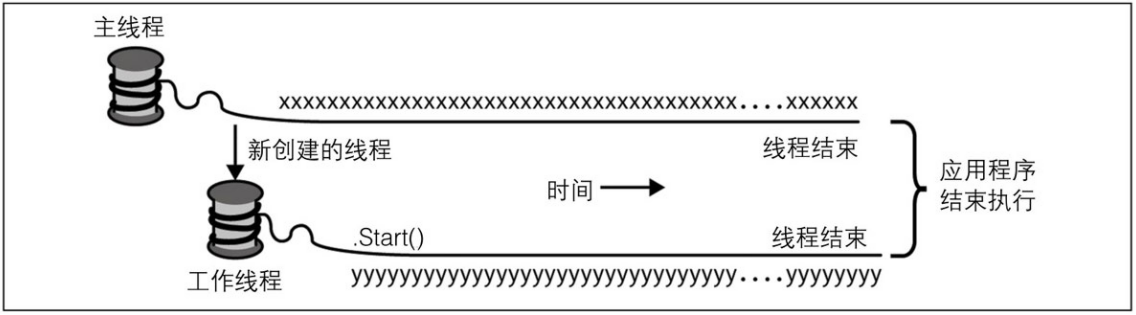

Creating a thread

To create and start a thread, instantiate a Thread object and call its Start method. The simplest Thread constructor takes a ThreadStart delegate: a parameterless method that marks where execution begins. For example:

using System;
using System.Threading;

namespace ConcurrentAndAsynchronous
{
    internal class Program
    {
        static void Main(string[] args)
        {
            Thread t = new Thread(WriteY);   // Create a new thread
            t.Start();                       // that runs WriteY()

            // Meanwhile, keep working on the main thread
            for (int i = 0; i < 1000; i++)
            {
                Console.Write("X");
            }
        }

        static void WriteY()
        {
            for (int i = 0; i < 1000; i++)
            {
                Console.Write("Y");
            }
        }
    }
}

The main thread creates a new thread t, which runs a method that repeatedly prints the character Y. Meanwhile, the main thread repeatedly prints the character X.

On a single-core computer, the operating system allocates each thread a time slice (typically 20 milliseconds on Windows) to simulate concurrent execution, so the code above produces runs of consecutive X and Y characters. On a multi-core machine, the two threads can execute genuinely in parallel (competing with other active processes on the machine). We still see runs of X and Y there, but that is a consequence of the way Console handles concurrent requests.

Once a thread has started, its IsAlive property returns true until the thread ends. A thread ends when the delegate passed to its constructor finishes executing. Once it has ended, a thread cannot be restarted.
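The lifecycle described above can be sketched as follows, assuming a console app; the class and tuple element names are illustrative. The sketch checks IsAlive before starting, while running, and after Join, then tries to restart the finished thread, which makes Thread.Start throw a ThreadStateException.

```csharp
using System;
using System.Threading;

class ThreadLifecycleDemo
{
    public static (bool BeforeStart, bool WhileRunning, bool AfterJoin, bool RestartThrew) Run()
    {
        var t = new Thread(() => Thread.Sleep(500));
        bool beforeStart = t.IsAlive;    // false: not started yet
        t.Start();
        bool whileRunning = t.IsAlive;   // true: started and still sleeping
        t.Join();                        // wait for the delegate to finish
        bool afterJoin = t.IsAlive;      // false: the thread has ended

        bool restartThrew = false;
        try
        {
            t.Start();                   // an ended thread cannot be started again
        }
        catch (ThreadStateException)
        {
            restartThrew = true;
        }
        return (beforeStart, whileRunning, afterJoin, restartThrew);
    }

    static void Main()
    {
        var r = Run();
        Console.WriteLine($"Before start: {r.BeforeStart}, while running: {r.WhileRunning}, " +
                          $"after Join: {r.AfterJoin}, restart threw: {r.RestartThrew}");
    }
}
```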

The static property Thread.CurrentThread returns the currently executing thread:

Console.WriteLine(Thread.CurrentThread.Name);
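Name is null until it is assigned, so the line above prints an empty line for an unnamed thread. A minimal sketch of naming threads, assuming a console app (class and member names are illustrative). On some runtimes, notably .NET Framework, a thread's Name can be assigned only once, hence the null check.

```csharp
using System;
using System.Threading;

class ThreadNamingDemo
{
    // Returns the names observed for the main thread and for a worker thread.
    public static (string MainName, string WorkerName) Run()
    {
        if (Thread.CurrentThread.Name == null)
            Thread.CurrentThread.Name = "main";

        string workerName = null;
        var worker = new Thread(() => workerName = Thread.CurrentThread.Name);
        worker.Name = "worker";   // set before Start so the thread sees it
        worker.Start();
        worker.Join();            // Join also makes the write to workerName visible here

        return (Thread.CurrentThread.Name, workerName);
    }

    static void Main()
    {
        var (mainName, workerName) = Run();
        Console.WriteLine($"Main thread: {mainName}, worker thread: {workerName}");
    }
}
```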

Join and Sleep

You can wait for another thread to end by calling its Join method:

using System;
using System.Threading;

namespace ConcurrentAndAsynchronous
{
    internal class Program
    {
        static void Main(string[] args)
        {
            Thread t = new Thread(Go);
            t.Start();
            t.Join();   // Block until t has finished
            Console.WriteLine("Thread t has ended!");
        }

        static void Go()
        {
            for (int i = 0; i < 1000; i++)
            {
                Console.Write("y");
            }
        }
    }
}

Thread.Sleep pauses the current thread for a specified period:

Thread.Sleep(TimeSpan.FromHours(1));   // Sleep for one hour
Thread.Sleep(500);                     // Sleep for 500 milliseconds

Thread.Sleep(0) makes the thread relinquish its current time slice immediately, voluntarily handing the CPU over to other threads. Thread.Yield() does the same, except that it yields only to threads running on the same processor.

While it waits in Sleep or Join, a thread is blocked.
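A blocked thread does not have to wait indefinitely: Join has overloads that take a timeout (in milliseconds or as a TimeSpan) and return a bool indicating whether the thread ended in time. A sketch assuming a console app; the class and tuple names are illustrative. The slow worker is marked as a background thread so it cannot keep the process alive.

```csharp
using System;
using System.Threading;

class JoinTimeoutDemo
{
    public static (bool SlowFinished, bool FastFinished) Run()
    {
        var slow = new Thread(() => Thread.Sleep(2000)) { IsBackground = true };
        slow.Start();
        // Join(int) blocks for at most 100 ms and returns false if the thread is still running
        bool slowFinished = slow.Join(100);

        var fast = new Thread(() => { /* returns immediately */ });
        fast.Start();
        // Join(TimeSpan) returns true once the thread has ended within the timeout
        bool fastFinished = fast.Join(TimeSpan.FromSeconds(5));

        return (slowFinished, fastFinished);
    }

    static void Main()
    {
        var (slow, fast) = Run();
        Console.WriteLine($"Slow worker finished within 100 ms: {slow}");
        Console.WriteLine($"Fast worker finished within 5 s: {fast}");
    }
}
```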

Blocking

You can test whether a thread is blocked through its ThreadState property.

ThreadState is a flags enum that combines three "layers" of data in a bitwise fashion. Most of its values, however, are redundant, unused, or obsolete, and in practice it can be reduced to one of four useful values: Unstarted, Running, WaitSleepJoin, and Stopped.
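A sketch of both ideas, assuming a console app: IsBlocked tests the WaitSleepJoin bit, and the Simplify extension method masks off every bit except Unstarted, WaitSleepJoin, and Stopped. Because ThreadState.Running has the value 0, Running is what remains when none of those bits is set. The names IsBlocked and Simplify are illustrative, not part of the framework.

```csharp
using System;
using System.Threading;

static class ThreadStateExtensions
{
    // Reduce ThreadState to Unstarted, Running (0), WaitSleepJoin or Stopped
    public static ThreadState Simplify(this ThreadState ts) =>
        ts & (ThreadState.Unstarted | ThreadState.WaitSleepJoin | ThreadState.Stopped);

    // True while the thread is blocked in Sleep, Join, or a wait on a synchronization object
    public static bool IsBlocked(this Thread t) =>
        (t.ThreadState & ThreadState.WaitSleepJoin) != 0;
}

class ThreadStateDemo
{
    static void Main()
    {
        var t = new Thread(() => Thread.Sleep(500));
        Console.WriteLine(t.ThreadState.Simplify());   // Unstarted
        t.Start();
        Thread.Sleep(100);                             // give t time to enter Sleep
        Console.WriteLine(t.ThreadState.Simplify());   // very likely WaitSleepJoin
        Console.WriteLine(t.IsBlocked());              // very likely True
        t.Join();
        Console.WriteLine(t.ThreadState.Simplify());   // Stopped
    }
}
```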

I/O-bound versus compute-bound

An operation that spends most of its time waiting for something to happen is called I/O-bound; examples are downloading a web page and calling Console.ReadLine. (I/O-bound operations typically involve input or output, but that is not a strict requirement: Thread.Sleep also counts as I/O-bound.) In contrast, an operation that spends most of its time performing CPU-intensive work is called compute-bound.

Blocking versus spinning

An I/O-bound operation works in one of two ways: it either waits synchronously on the current thread until the operation completes (as Console.ReadLine, Thread.Sleep, and Thread.Join do), or it operates asynchronously, firing a callback when the operation finishes or at some later time (described in detail later).
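A synchronous wait can either block, as Thread.Sleep does, or spin, repeatedly polling a condition in a loop. The sketch below contrasts the two under the assumption of a console app; the class, method, and field names are illustrative. A blocked thread consumes no CPU time, whereas a spinning thread keeps a core busy, so spinning is only sensible for very short waits.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

class BlockingVsSpinning
{
    static volatile bool _ready;   // volatile so the spinning loop re-reads it on each pass

    // Blocking: Thread.Sleep suspends the thread; it uses no CPU while waiting
    public static long BlockFor(int milliseconds)
    {
        var sw = Stopwatch.StartNew();
        Thread.Sleep(milliseconds);
        return sw.ElapsedMilliseconds;
    }

    // Spinning: the thread stays busy, polling a flag until another thread sets it
    public static long SpinUntilReady(int delayMilliseconds)
    {
        _ready = false;
        new Thread(() => { Thread.Sleep(delayMilliseconds); _ready = true; }).Start();
        long polls = 0;
        while (!_ready) polls++;   // burns CPU on every iteration
        return polls;
    }

    static void Main()
    {
        Console.WriteLine($"Blocked for about {BlockFor(100)} ms");
        Console.WriteLine($"Polled the flag {SpinUntilReady(100)} times while spinning");
    }
}
```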

Local state and shared state

The CLR assigns each thread its own memory stack so that local variables are kept separate. The following example defines a method with a local variable, then calls that method on both the main thread and a newly created thread:

static void Main(string[] args)
{
    new Thread(Go).Start();   // Call Go() on a new thread
    Go();                     // Call Go() on the main thread
}

static void Go()
{
    // Declare and use a local variable: cycles
    for (int cycles = 0; cycles < 5; cycles++)
    {
        Console.Write('?');
    }
}

Because each thread's memory stack gets its own copy of the cycles variable, the output is predictably ten question marks. Threads do share data, however, when they hold a reference to the same object instance:

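class ThreadTest
{
    bool _done;

    static void Main()
    {
        ThreadTest tt = new ThreadTest();   // Create a common instance
        new Thread(tt.Go).Start();          // Call Go() on a new thread
        tt.Go();                            // Call Go() on the main thread
    }

    // Go is an instance method, so both calls use the same _done field
    void Go()
    {
        if (!_done) { _done = true; Console.WriteLine("Done"); }
    }
}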

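Because both threads call Go() on the same ThreadTest instance, they share the _done field, so "Done" is normally printed once rather than twice. Strictly speaking this is not guaranteed: without a lock, both threads could evaluate the if condition before either of them sets _done.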

Local variables captured by a lambda expression or anonymous delegate are converted by the compiler into fields, so they too can be shared:

class ThreadTest
{
    static void Main()
    {
        bool done = false;   // captured by the lambda below, so both threads share it
        ThreadStart action = () =>
        {
            if (!done) { done = true; Console.WriteLine("Done"); }
        };
        new Thread(action).Start();
        action();
    }
}

Static fields provide another way to share variables between threads:

class ThreadTest
{
    static bool _done;   // Static fields are shared among all threads in the same application domain

    static void Main()
    {
        new Thread(Go).Start();
        Go();
    }

    static void Go()
    {
        if (!_done) { _done = true; Console.WriteLine("Done"); }
    }
}

Locking and thread safety

To prevent multiple threads from using a shared field at the same time, obtain an exclusive lock while reading and writing it. C#'s lock statement provides exactly this:

class ThreadSafe
{
    static bool _done;
    static readonly object _locker = new object();

    static void Main()
    {
        new Thread(Go).Start();
        Go();
        Console.ReadKey();
    }

    static void Go()
    {
        lock (_locker)   // Only one thread at a time can execute this block
        {
            if (!_done)
            {
                Console.WriteLine("Done");
                _done = true;
            }
        }
    }
}

When two threads contend for a lock simultaneously (the lock can be any reference-type object; here it is _locker), one thread waits, or blocks, until the lock becomes available. This guarantees that only one thread at a time can enter the locked code block, so "Done" is printed exactly once.
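The same guarantee matters for any read-modify-write sequence. Because _count++ reads, increments, and writes the field in separate steps, two threads incrementing an unprotected counter can lose updates. The sketch below (class and member names are illustrative) wraps the increment in a lock, so the final count is always twice the iteration count; removing the lock typically yields a smaller, varying total.

```csharp
using System;
using System.Threading;

class LockedCounter
{
    static readonly object _locker = new object();
    static int _count;

    // Two threads each increment the shared counter `iterations` times
    public static int Run(int iterations)
    {
        _count = 0;
        ThreadStart work = () =>
        {
            for (int i = 0; i < iterations; i++)
            {
                lock (_locker)   // only one thread at a time executes _count++
                {
                    _count++;
                }
            }
        };
        var t1 = new Thread(work);
        var t2 = new Thread(work);
        t1.Start(); t2.Start();
        t1.Join(); t2.Join();   // Join also makes the final value visible here
        return _count;
    }

    static void Main()
    {
        Console.WriteLine(Run(1_000_000));   // 2000000
    }
}
```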

Locking is not a silver bullet for thread safety. Locks bring problems of their own, deadlock among them.
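The classic deadlock arises when two threads acquire the same two locks in opposite orders: each ends up holding one lock while waiting forever for the other. One common remedy, sketched below with two illustrative lock objects, is to make every thread acquire the locks in a single agreed order. (Monitor.TryEnter with a timeout is an alternative that lets a thread back off instead of waiting forever.)

```csharp
using System;
using System.Threading;

class LockOrdering
{
    static readonly object _lockA = new object();
    static readonly object _lockB = new object();
    static int _transfers;

    // Every caller takes _lockA before _lockB, so no thread can hold B while waiting for A
    static void Transfer()
    {
        for (int i = 0; i < 1000; i++)
        {
            lock (_lockA)
            lock (_lockB)
            {
                _transfers++;
            }
        }
    }

    public static bool Run()
    {
        _transfers = 0;
        var t1 = new Thread(Transfer);
        var t2 = new Thread(Transfer);
        t1.Start(); t2.Start();
        // With a consistent order both threads finish; the timeouts only guard the demo
        return t1.Join(5000) && t2.Join(5000) && _transfers == 2000;
    }

    static void Main() => Console.WriteLine($"Completed without deadlock: {Run()}");
}
```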

Passing data to a thread

Sometimes you need to pass arguments to a thread's start method. The simplest approach is to use a lambda expression that calls the method with the desired arguments:

class ThreadTest
{
    static void Main()
    {
        Thread t = new Thread(() => Print("Hello from t"));
        t.Start();
        Console.ReadKey();
    }

    static void Print(string message) { Console.WriteLine(message); }
}
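Thread also has a constructor that takes a ParameterizedThreadStart delegate, whose single parameter is of type object; the argument is then supplied to Start and must be cast back inside the method. A sketch of that alternative, with illustrative class and member names:

```csharp
using System;
using System.Threading;

class ParameterizedStartDemo
{
    static string _received;

    // Matches the ParameterizedThreadStart signature: void (object)
    static void Print(object messageObj)
    {
        string message = (string)messageObj;   // cast back from object
        _received = message;
        Console.WriteLine(message);
    }

    public static string Run(string message)
    {
        var t = new Thread(Print);   // binds to the ParameterizedThreadStart overload
        t.Start(message);            // the argument is passed to Print
        t.Join();
        return _received;
    }

    static void Main() => Run("Hello from t");
}
```

The lambda approach is usually preferable because it is type-safe and can pass any number of arguments.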

Lambda expressions and captured variables

Lambda expressions are the most convenient way to pass data to a thread, but take care not to modify a captured variable accidentally after the thread has started. Consider the following code:

for (int i = 0; i < 10; i++)
{
    new Thread(() => Console.Write(i)).Start();
}

The output is nondeterministic. A typical run might print:

12378941055

The variable i refers to the same memory location throughout the loop's lifetime, so each thread calls Console.Write on a variable whose value may change while that thread is running. The solution is to copy the loop variable into a temporary variable inside the loop:

for (int i = 0; i < 10; i++)
{
    int temp = i;   // a fresh variable on each iteration
    new Thread(() => Console.Write(temp)).Start();
}

Each digit from 0 to 9 now appears exactly once. (The order in which they appear is still nondeterministic, because the threads may start at arbitrary times.)

Exception handling

Any try/catch/finally blocks in effect when a thread is created have no bearing on that thread once it starts executing. Consider the following program:

static void Main(string[] args)
{
    try
    {
        new Thread(Go).Start();
    }
    catch (Exception)
    {
        // The following line will never run
        Console.WriteLine("Exception!");
    }
}

static void Go() { throw null; }   // Throws a NullReferenceException

The try/catch statement here is ineffective: the newly created thread is brought down by an unhandled NullReferenceException.

The solution is to move the exception handler into the Go method:

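static void Go()
{
    try
    {
        throw null;   // The NullReferenceException is caught below
    }
    catch (Exception ex)
    {
        // Typically, log the exception and/or signal another thread that
        // this one has failed. Rethrowing here would leave it unhandled again.
        Console.WriteLine("Worker failed: " + ex.Message);
    }
}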

In production applications, every thread entry method needs an exception handler, just as the main thread does (usually at a higher level of the execution stack). An unhandled exception on any thread can bring down the whole application, often with an unfriendly error dialog.
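Because a worker's exception cannot propagate to the thread that started it, a common pattern is to catch it in the worker, store it in a shared field, and inspect or rethrow it after Join. A minimal sketch; ExceptionDispatchInfo is used so that the exception could be rethrown with its original stack trace, and the class and member names are illustrative.

```csharp
using System;
using System.Runtime.ExceptionServices;
using System.Threading;

class WorkerExceptionDemo
{
    static ExceptionDispatchInfo _error;   // written by the worker, read after Join

    static void Go()
    {
        try
        {
            throw new InvalidOperationException("Worker failed");
        }
        catch (Exception ex)
        {
            _error = ExceptionDispatchInfo.Capture(ex);   // hand the exception to the main thread
        }
    }

    public static Exception RunAndGetError()
    {
        _error = null;
        var t = new Thread(Go);
        t.Start();
        t.Join();   // Join makes the worker's write to _error visible here
        return _error?.SourceException;
    }

    static void Main()
    {
        Exception ex = RunAndGetError();
        if (ex != null)
            Console.WriteLine("Caught on main thread: " + ex.Message);
        // To rethrow on this thread instead, call _error.Throw() after Join
    }
}
```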

Centralized exception handling

WPF, UWP, and Windows Forms applications support subscribing to "global" exception-handling events, such as Application.DispatcherUnhandledException (WPF) and Application.ThreadException (Windows Forms). These events fire when an unhandled exception occurs in any code called via the message loop (which amounts to all code that runs on the main thread while the application is active). They are ideal as a backstop for logging and reporting bugs, but note that they do not fire for unhandled exceptions on non-UI threads. Handling these events prevents the application from shutting down, although you may still want to restart it to avoid the state corruption that can follow an unhandled exception.

The AppDomain.CurrentDomain.UnhandledException event fires when an unhandled exception occurs on any thread. Since CLR 2.0, however, the CLR forcibly shuts down the application once the event handler has run. In .NET Framework applications, you can prevent this shutdown by adding the following to the application configuration file:

<configuration>
  <runtime>
    <legacyUnhandledExceptionPolicy enabled="1"/>
  </runtime>
</configuration>

Foreground and background threads

By default, threads you create explicitly are foreground threads. Foreground threads keep the application alive for as long as any one of them is running; background threads do not. Once all foreground threads finish, the application ends, and any background threads still running are abruptly terminated.

static void Main(string[] args)
{
    Thread worker = new Thread(() => Console.ReadLine());
    if (args.Length > 0)
    {
        worker.IsBackground = true;   // Any argument makes the worker a background thread
    }
    worker.Start();
}

If the program is called with no arguments, the worker thread stays a foreground thread and waits at the ReadLine statement for the user to press Enter. Even after the main thread exits, the application keeps running, because a foreground thread is still alive. If the program is started with an argument, the worker becomes a background thread, so the application exits as soon as the main thread ends, terminating the ReadLine call.

Thread priority

A thread's Priority property determines how much execution time it gets relative to other active threads in the operating system. The possible values are:

public enum ThreadPriority
{
    Lowest,
    BelowNormal,
    Normal,
    AboveNormal,
    Highest
}

The default is Normal priority.
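A sketch of reading and changing a thread's priority, assuming a console app (class and tuple names are illustrative). Priority is only a scheduling hint: raising it can starve other threads, and to compete with threads in other processes you would also need to raise the process priority through System.Diagnostics.Process. The values are read before the thread finishes, because reading Priority on an ended thread can throw a ThreadStateException.

```csharp
using System;
using System.Threading;

class PriorityDemo
{
    public static (ThreadPriority Original, ThreadPriority Assigned) Run()
    {
        var t = new Thread(() => Thread.Sleep(100));
        ThreadPriority original = t.Priority;      // Normal unless changed
        t.Priority = ThreadPriority.AboveNormal;   // a hint to the OS scheduler, not a guarantee
        ThreadPriority assigned = t.Priority;
        t.Start();
        t.Join();
        return (original, assigned);
    }

    static void Main()
    {
        var (original, assigned) = Run();
        Console.WriteLine($"Default: {original}, assigned: {assigned}");
    }
}
```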

Signaling

Sometimes a thread needs to wait until it receives a notification from other threads; this is called signaling. The simplest signaling construct is ManualResetEvent. Calling WaitOne on a ManualResetEvent blocks the current thread until another thread "opens" the signal by calling Set. In the following example, a new thread starts and waits on a ManualResetEvent; it stays blocked for two seconds, until the main thread signals it:

var signal = new ManualResetEvent(false);   // Starts in the closed state

new Thread(() =>
{
    Console.WriteLine("Waiting for signal...");
    signal.WaitOne();   // Block until the signal is opened
    signal.Dispose();
    Console.WriteLine("Got the signal!");
}).Start();

Thread.Sleep(2000);
signal.Set();   // Open the signal

After Set is called, the signal remains open; you can close it again by calling Reset.
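Unlike an AutoResetEvent, which closes automatically after releasing a single waiter, a ManualResetEvent stays open until Reset is called. The sketch below (illustrative names) uses WaitOne(0), which tests the state without blocking, to observe each step.

```csharp
using System;
using System.Threading;

class ManualResetDemo
{
    // Returns the signal state observed after each step
    public static (bool Initial, bool AfterSet, bool StillOpen, bool AfterReset) Run()
    {
        using var signal = new ManualResetEvent(false);   // starts closed
        bool initial = signal.WaitOne(0);     // false: closed, returns immediately
        signal.Set();                         // open: releases any waiting threads
        bool afterSet = signal.WaitOne(0);
        bool stillOpen = signal.WaitOne(0);   // a successful wait does not close it
        signal.Reset();                       // close it again
        bool afterReset = signal.WaitOne(0);
        return (initial, afterSet, stillOpen, afterReset);
    }

    static void Main() => Console.WriteLine(Run());
}
```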

Threads for rich client applications

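In WPF, UWP, and Windows Forms applications, running a long operation on the main thread makes the application unresponsive, because the main thread also runs the message loop, which handles keyboard and mouse events and performs rendering. A common remedy is to run time-consuming work on a worker thread. However, UI elements and controls can be accessed only from the thread that created them (normally the main UI thread), so a worker that wants to update the UI must forward, or marshal, the request to the UI thread: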

- In WPF, call BeginInvoke or Invoke on the element's Dispatcher object.

- In UWP applications, call RunAsync or Invoke on the Dispatcher object.

- In Windows Forms applications, call BeginInvoke or Invoke on the control.

All of these methods accept a delegate referencing the method you want to run. BeginInvoke and RunAsync queue the delegate to the UI thread and return immediately, whereas Invoke also waits until the UI thread has processed it.

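private void nosys_btn_Click(object sender, RoutedEventArgs e)
{
    this.result1.Content = "Calculating, please wait...";
    new Thread(Work).Start();   // Run the slow work off the UI thread
}

void Work()
{
    Thread.Sleep(3000);   // Simulate a time-consuming operation
    // ExecuteTask1 is assumed to be defined elsewhere in this window class;
    // call it here, on the worker thread, so the UI thread stays free
    var result = ExecuteTask1(100);
    // result1 may only be touched on the UI thread, so marshal the update there
    Action action = () => this.result1.Content = result;
    Dispatcher.BeginInvoke(action);
}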

Tasks

A thread is a low-level tool for creating concurrency, and as such it has limitations. In particular:

- Although it is easy to pass data into a thread when you start it, there is no easy way to get a "return value" back from a thread that you Join; usually you have to set up some kind of shared field. Catching and handling exceptions thrown by an operation on a thread is also awkward.

- You cannot tell a thread to start something else when it finishes; instead you have to Join it, blocking your own thread in the process.

The Task class solves all of these problems. A task is a higher-level abstraction than a thread: it represents a concurrent operation that may or may not be backed by a thread. Tasks are compositional (you can chain them together with continuations). They can use the thread pool to reduce startup latency, and with a TaskCompletionSource they can take a callback-based approach that avoids tying up threads while waiting on I/O-bound operations.

Starting a task

Since .NET Framework 4.5, the easiest way to start a thread-based task is the static Task.Run method (the Task class lives in the System.Threading.Tasks namespace). Simply pass in an Action delegate:

Task.Run(() => Console.WriteLine("Task start..."));

By default, tasks run on pooled threads, which are background threads. This means that when the main thread ends, all tasks end too. To run these examples in a console application, you must therefore block the main thread after starting the task (for example, by calling Wait on the Task object or by calling Console.ReadLine()):

static void Main(string[] args)
{
    Task.Run(() => Console.WriteLine("Task start..."));
    Console.ReadLine();   // Keep the main (foreground) thread alive until Enter is pressed
}
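You can confirm that a task runs on a pooled background thread by inspecting Thread.CurrentThread from inside it. A sketch with illustrative names; reading Result blocks the calling thread until the task finishes, which also keeps the process alive long enough to see the answer.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

class PooledThreadDemo
{
    public static (bool IsPoolThread, bool IsBackground) Run()
    {
        // Task.Run queues the delegate to the thread pool; Result waits for it
        return Task.Run(() => (Thread.CurrentThread.IsThreadPoolThread,
                               Thread.CurrentThread.IsBackground)).Result;
    }

    static void Main()
    {
        var (pool, background) = Run();
        Console.WriteLine($"Pool thread: {pool}, background: {background}");
    }
}
```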

Wait method

Calling Wait on a task blocks the current thread until the task completes, much like calling Join on a Thread:

Task task = Task.Run(() =>
{
    Thread.Sleep(2000);
    Console.WriteLine("Task start...");
});

Console.WriteLine(task.IsCompleted);   // Almost certainly False: the task is still sleeping
task.Wait();                           // Block until the task is completed
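Wait also accepts a timeout, and a Task&lt;TResult&gt; returns a value through its Result property, which addresses the "return value" limitation of threads listed earlier. An exception thrown inside a task is rethrown to whoever calls Wait or Result, wrapped in an AggregateException. A sketch with illustrative class and method names:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

class TaskWaitDemo
{
    // Wait(int) returns false if the task has not completed within the timeout
    public static bool WaitWithTimeout()
    {
        Task slow = Task.Run(() => Thread.Sleep(2000));
        return slow.Wait(100);
    }

    // Task<TResult> carries a return value; Result blocks like Wait, then returns it
    public static int GetResult() => Task.Run(() => 6 * 7).Result;

    // Wait rethrows the task's exception wrapped in an AggregateException
    public static Exception GetWaitException()
    {
        Action fail = () => throw new InvalidOperationException("boom");
        Task failing = Task.Run(fail);
        try
        {
            failing.Wait();
            return null;
        }
        catch (AggregateException aex)
        {
            return aex.InnerException;
        }
    }

    static void Main()
    {
        Console.WriteLine(WaitWithTimeout());             // False
        Console.WriteLine(GetResult());                   // 42
        Console.WriteLine(GetWaitException()?.Message);   // boom
    }
}
```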