Introduction - Start with the simplest insertion sort

Long, long ago, you might have learned some common sorting algorithms. At that time, computer algorithms were still a bit like math.

But I often think about the same kind of questions in my mind. What's the use (the deep contempt of the costume school by the silk practitioners). It's impossible for you to write about them.

It's all packaged so well. After n years, I understand the point and learn that it's for use. What's the purpose? Some are moon set and sunrise, wind blows and clouds ~_phi(?-)/

This article lists some places where sorting is used in practice and parses the sorting routines that have been used in years. Insert sorting here first

// Insert Sort void sort_insert(int a[], int len) { int i, j; for (i = 1; i < len; ++i) { int tmp = a[i]; for (j = i; j > 0; --j) { if (tmp >= a[j - 1]) break; a[j] = a[j - 1]; } a[j] = tmp; } }

Insert Sorting is common in small data sorting! It is also the preferred sorting algorithm for chain structures. Insert Sorting Super Evolution - > Hill Sorting, O() OHA~.

unsafe code requires a test framework. Here's a simple test suite for this article

void array_rand(int a[], int len); void array_print(int a[], int len); // // ARRAY_TEST - Arrays on the convenience test stack, About sorting related aspects // #define ARRAY_TEST(a, fsort) \ array_test(a, sizeof(a) / sizeof(*(a)), fsort) inline void array_test(int a[], int len, void(* fsort)(int [], int)) { assert(a && len > 0 && fsort); array_rand(a, len); array_print(a, len); fsort(a, len); array_print(a, len); } // Insert Sort void sort_insert(int a[], int len);

#include <stdio.h> #include <assert.h> #include <stdlib.h>

#define _INT_ARRAY (64)

// // test sort base, sort is small -> big // int main(int argc, char * argv[]) { int a[_INT_ARRAY]; // Raw data + Insert Sort ARRAY_TEST(a, sort_insert); return EXIT_SUCCESS; }

#define _INT_RANDC (200) void array_rand(int a[], int len) { for (int i = 0; i < len; ++i) a[i] = rand() % _INT_RANDC; } #undef _INT_SORTC #define _INT_PRINT (26) void array_print(int a[], int len) { int i = 0; printf("now array[%d] current low:\n", len); while(i < len) { printf("%4d", a[i]); if (++i % _INT_PRINT == 0) putchar('\n'); } if (i % _INT_PRINT) putchar('\n'); } #undef _INT_PRINT

Unit testing (white box testing) guarantees the quality of the project, otherwise you are afraid of your code. Software fundamentals 2 consist in testing the power in place.

The random function rand of the system appears by the way of a little bit. To add a little more, here is the most recently written 48-bit random algorithm scrand

scrand https://github.com/wangzhione/simplec/blob/master/simplec/module/schead/scrand.c

It is a randomized algorithm that is unplugged from redis for further processing and has better performance and randomness than the system provides. The greatest requirement is platform consistency.

After all, random algorithms are the top ten most important algorithms in computer history, as well as sorting.

At the beginning, insert sorting is introduced, mainly to introduce the system's built-in hybrid sorting algorithm qsort. qsort. Most implementations are

quick sort + small insert sort. What does Quick sort look like? See an efficient implementation like this

// Quick Sort void sort_quick(int a[], int len);

// Quick Row Partition, Start with default axis static int _sort_quick_partition(int a[], int si, int ei) { int i = si, j = ei; int par = a[i]; while (i < j) { while (a[j] >= par && i < j) --j; a[i] = a[j]; while (a[i] <= par && i < j) ++i; a[j] = a[i]; } a[j] = par; return i; } // Quick Sort Core Code static void _sort_quick(int a[], int si, int ei) { if (si < ei) { int ho = _sort_quick_partition(a, si, ei); _sort_quick(a, si, ho - 1); _sort_quick(a, ho + 1, ei); } } // Quick Sort inline void sort_quick(int a[], int len) { _sort_quick(a, 0, len - 1); }

Here's why science encapsulates _sort_quick_partition separately. The main reason is that _sort_quick is a recursive function.

Occupy system function stack, separate out, system occupies a smaller stack size. Slightly improve security. See here, hope to encounter others in the future

Chat basics can also tear a few sentences. Efficient operations are mostly a combination of environments and methods. Suddenly it feels like we can flip ~

Preface-To a fantastic heap sorting

The idea of heap sorting is clever, building a binary tree'memory'to handle the ordering in the sorting process. It is a superevolution of bubble sorting.

The total routine can be seen as an array index [0, 1, 2, 3, 4, 5, 6, 7, 8] - >

0, 1, 2 a binary tree, 1, 3, 4 a binary tree, 2, 5, 6 a binary tree, 3, 7, 8 a branch. Look directly at the code and feel the will of the previous God

// Add a Father Node Index to the Top heap, Rebuild Big Top Heap static void _sort_heap_adjust(int a[], int len, int p) { int node = a[p]; int c = 2 * p + 1; // Get left subtree index first while (c < len) { // If there is a right child node, And right child node value is high, Select Right Child if (c + 1 < len && a[c] < a[c + 1]) c = c + 1; // Father's node is the biggest, So this big top heap is built if (node > a[c]) break; // Tree branch goes above next node branch a[p] = a[c]; p = c; c = 2 * c + 1; } a[p] = node; } // Heap Sorting void sort_heap(int a[], int len) { int i = len / 2; // Line initializes a big top heap out while (i >= 0) { _sort_heap_adjust(a, len, i); --i; } // n - 1 Secondary adjustment, Sort for (i = len - 1; i > 0; --i) { int tmp = a[i]; a[i] = a[0]; a[0] = tmp; // Rebuild heap data _sort_heap_adjust(a, i, 0); } }

Heap sorting is a separate section because it is widely used in the development of basic components. For example, some timers are implemented with a small top heap structure.

Quickly get the nodes that need to be executed most recently. The heap structure can also be used for out-of-process sorting. There is also a heap that is particularly effective for extreme values within the processing range.

Later, we'll use heap sorting to handle out-of-file sorting.

/*

Description of the problem:

There is a large file, data.txt, holding int\n... This format of data. It is out of order.

Now you want to sort from smallest to largest and output data to the ndata.txt file

Restrictions:

Assuming that the file contents are too large to load into memory at once.

The maximum available memory for the system is less than 600MB.

*/

Text - To an actual case of out-of-order

To solve this problem, first build the data. Assume'Big data'is data.txt.an int plus char type.

Repeat output 1< 28 times, 28 bit-> 1.41 GB (1,519,600,600 bytes) bytes.

#define _STR_DATA "data.txt" // 28 -> 1.41 GB (1,519,600,600 byte) | 29 -> 2.83 GB (3,039,201,537 byte) #define _UINT64_DATA (1ull << 28) static FILE * _data_rand_create(const char * path, uint64_t sz) { FILE * txt = fopen(path, "wb"); if (NULL == txt) { fprintf(stderr, "fopen wb path error = %s.\n", path); exit(EXIT_FAILURE); } for (uint64_t u = 0; u < sz; ++u) { int num = rand(); fprintf(txt, "%d\n", num); } fclose(txt); txt = fopen(path, "rb"); if (NULL == txt) { fprintf(stderr, "fopen rb path error = %s.\n", path); exit(EXIT_FAILURE); } return txt; }

That's the data building process. Just resize the macro. It takes a little too long. The idea to solve the problem is

1. Cut the data into suitable parts N

2. Sort within each copy, small to large, and output to a specific file

3. Use a small N-size top heap to read and output one by one and record indexes

4. That index file output, that index file input, and finally a sorted file output

The first step is to cut the data and save it in a specific sequence file

#define _INT_TXTCNT (8)

static int _data_txt_sort(FILE * txt) { char npath[255]; FILE * ntxt; // Too much data to read, Simple and direct monitoring, Data is sufficient to build snprintf(npath, sizeof npath, "%d_%s", _INT_TXTCNT, _STR_DATA); ntxt = fopen(npath, "rb"); if (ntxt == NULL) { int tl, len = (int)(_UINT64_DATA / _INT_TXTCNT); int * a = malloc(sizeof(int) * len); if (NULL == a) { fprintf(stderr, "malloc sizeof int len = %d error!\n", len); exit(EXIT_FAILURE); } tl = _data_split_sort(txt, a, len); free(a); return tl; } return _INT_TXTCNT; }

Cut it into eight parts, each nearly 200MB. The complete build code is as follows

// Heap Sorting void sort_heap(int a[], int len); // Returns the number of delimited files static int _data_split_sort(FILE * txt, int a[], int len) { int i, n, rt = 1, ti = 0; char npath[255]; FILE * ntxt; do { // Get data for (n = 0; n < len; ++n) { rt = fscanf(txt, "%d\n", a + n); if (rt != 1) { // Read has ended break; } } if (n == 0) break; // Start Sorting sort_heap(a, n); // Output to file snprintf(npath, sizeof npath, "%d_%s", ++ti, _STR_DATA); ntxt = fopen(npath, "wb"); if (NULL == ntxt) { fprintf(stderr, "fopen wb npath = %s error!\n", npath); exit(EXIT_FAILURE); } for (i = 0; i < n; ++i) fprintf(ntxt, "%d\n", a[i]); fclose(ntxt); } while (rt == 1); return ti; }

#include <stdio.h> #include <stdint.h> #include <stdlib.h> // // Large Sort Data Validation // int main(int argc, char * argv[]) { int tl; FILE * txt = fopen(_STR_DATA, "rb"); puts("Start building test data _data_rand_create"); // Start building data if (NULL == txt) txt = _data_rand_create(_STR_DATA, _UINT64_DATA); puts("Data is in place, Start sorting delimited data"); tl = _data_txt_sort(txt); fclose(txt); // The data allocated here is built, Out-of-Start Sorting Process return EXIT_SUCCESS; }

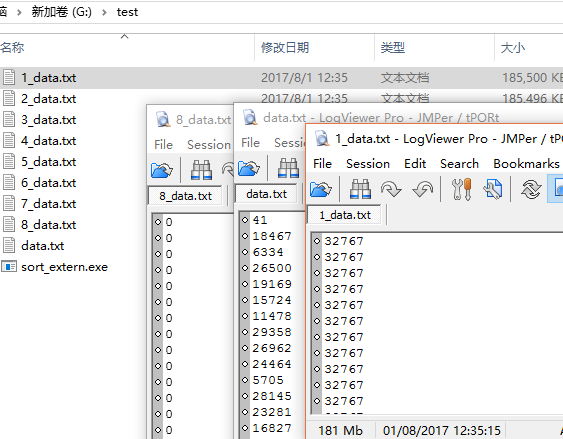

Execute the above cut code and the resulting data will be as follows

1 - 8 _data.txt data is the output data after separate sorting. Then load the start processing data and output the final result file by external sorting.

struct node { FILE * txi; // Currently is the index of that file int val; // Read Value }; // true Indicates that the reading is complete, false Read on static bool _node_read(struct node * n) { assert(n && n->txi); return 1 != fscanf(n->txi, "%d\n", &n->val); } // Build Small Top Heap static void _node_minheap(struct node a[], int len, int p) { struct node node = a[p]; int c = 2 * p + 1; // Get left subtree index first while (c < len) { // If there is a right child node, And right child node value is small, Select Right Child if (c + 1 < len && a[c].val > a[c + 1].val) c = c + 1; // The Father Node is the smallest, So this small top heap is already built if (node.val < a[c].val) break; // Tree branch goes above next node branch a[p] = a[c]; p = c; c = 2 * c + 1; } a[p] = node; } struct output { FILE * out; // Output data content int cnt; // How many file contents exist struct node a[]; }; // Data Destruction and Build Initialization void output_delete(struct output * put); struct output * output_create(int cnt, const char * path); // Start Sorting Build void output_sort(struct output * put);

#include <stdio.h> #include <assert.h> #include <stdlib.h> #include <stdbool.h> #define _INT_TXTCNT (8) #define _STR_DATA "data.txt" #define _STR_OUTDATA "output.txt" // // An external sorting attempt on the final generated data // int main(int argc, char * argv[]) { // Build Operation Content struct output * put = output_create(_INT_TXTCNT, _STR_OUTDATA); output_sort(put); // Data Destruction output_delete(put); return EXIT_SUCCESS; }

The above is the general process of processing, and the section on building and destroying is shown below

void output_delete(struct output * put) { if (put) { for (int i = 0; i < put->cnt; ++i) fclose(put->a[i].txi); free(put); } } struct output * output_create(int cnt, const char * path) { FILE * ntxt; struct output * put = malloc(sizeof(struct output) + cnt * sizeof(struct node)); if (NULL == put) { fprintf(stderr, "_output_init malloc cnt = %d error!\n", cnt); exit(EXIT_FAILURE); } put->cnt = 0; for (int i = 0; i < cnt; ++i) { char npath[255]; // Too much data to read, Simple and direct monitoring, Data is sufficient to build snprintf(npath, sizeof npath, "%d_%s", _INT_TXTCNT, _STR_DATA); ntxt = fopen(npath, "rb"); if (ntxt) { put->a[put->cnt].txi = ntxt; // And initialize the data if (_node_read(put->a + put->cnt)) fclose(ntxt); else ++put->cnt; } } // This is meaningless, Return data directly as empty if (put->cnt <= 0) { free(put); exit(EXIT_FAILURE); } // Build data ntxt = fopen(path, "wb"); if (NULL == ntxt) { output_delete(put); fprintf(stderr, "fopen path cnt = %d, = %s error!\n", cnt, path); exit(EXIT_FAILURE); } put->out = ntxt; return put; }

Core sort algorithm output_sort,

// 28 -> 1.41 GB (1,519,600,600 byte) | 29 -> 2.83 GB (3,039,201,537 byte) #define _UINT64_DATA (1ull << 28) // Start Sorting Build void output_sort(struct output * put) { int i, cnt; uint64_t u = 0; assert(put && put->cnt > 1); cnt = put->cnt; // Start building a small top heap i = cnt / 2; while (i >= 0) { _node_minheap(put->a, cnt, i); --i; } while (cnt > 1) { ++u; // output data, And rebuild the data fprintf(put->out, "%d\n", put->a[0].val); if (_node_read(put->a)) { --cnt; // Exchange data, And exclude it struct node tmp = put->a[0]; put->a[0] = put->a[cnt]; put->a[cnt] = tmp; } _node_minheap(put->a, cnt, 0); } // Output Final File Content, Output do { ++u; fprintf(put->out, "%d\n", put->a[0].val); } while (!_node_read(put->a)); printf("src = %llu, now = %llu, gap = %llu.\n", _UINT64_DATA, u, _UINT64_DATA - u); }

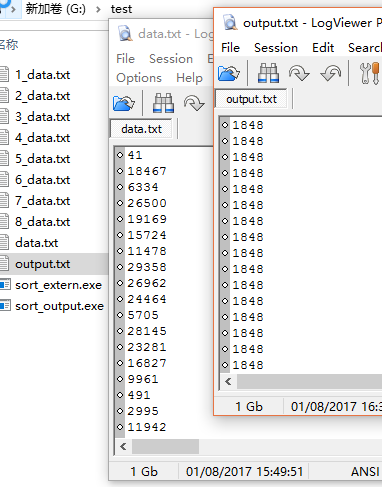

The final result is data output.txt

That's the big data fuss we're often asked during interviews, a crude solution. Of course, things are just getting started!

It's okay to blow a wave during the student stage interview ~Pull a little more NB when you're younger, and you'll only be able to watch others later ~

Postnote - Wait until I come home

Wait until I get home - http://music.163.com/#/song?id=477890886

Recently, I admire Chen Sheng Wu Guang very much. The future is unpredictable. If we are all straight male cancers, we must not forget that we had blood Fang Gang.