Cache invalidation

Cache penetration

-

Content introduction

Cache penetration refers to repeated requests for data that exists in neither the cache nor the database, such as an id of -1 or an extremely large id that does not exist. Every such request misses the cache and falls through to the database. A user who sends these requests is likely an attacker, and the attack puts excessive pressure on the database.

-

Solution

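Solutions: cache an empty object for keys that do not exist, use a Bloom filter to reject keys that cannot exist, or validate request parameters in an MVC interceptor.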
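The "cache empty object" idea can be sketched with in-memory maps standing in for the cache and the database. The class name, the `NULL_MARKER` sentinel, and the `dbHits` counter are illustrative assumptions, not part of any library; a real setup would typically also give the marker a short expiration time.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical sketch: in-memory maps stand in for Redis and the database.
class NullCachingSketch {
    static final String NULL_MARKER = "<null>"; // assumed sentinel meaning "no such row"
    final Map<String, String> cache = new ConcurrentHashMap<>();
    final Map<String, String> database = new ConcurrentHashMap<>();
    int dbHits = 0; // counts how often the database is queried

    String get(String id) {
        String cached = cache.get(id);
        if (cached != null) {
            // A cached marker means the database was already asked and had nothing.
            return NULL_MARKER.equals(cached) ? null : cached;
        }
        dbHits++;
        String value = database.get(id);
        // Cache the miss as well, so repeated requests for a bad id stop at the cache.
        cache.put(id, value == null ? NULL_MARKER : value);
        return value;
    }

    public static void main(String[] args) {
        NullCachingSketch s = new NullCachingSketch();
        s.database.put("1", "phone");
        s.get("-1");
        s.get("-1"); // served from the cached marker, no database query
        System.out.println(s.get("1") + ", database queries: " + s.dbHits);
    }
}
```

The second request for id "-1" never reaches the database, which is the point of the technique.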

Cache avalanche

-

Content introduction

A cache avalanche occurs when many keys are cached with the same expiration time and therefore expire at the same moment. All requests are then forwarded to the DB, which comes under excessive instantaneous pressure.

-

Solution

-

Avoid avalanche: add a random offset to the expiration time of cached data so that a large amount of data does not expire at the same time.

-

If the cache is distributed, spread the hot data evenly across the different cache nodes.

-

Set hotspot data to never expire.
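Randomizing the expiration time can be as small as adding jitter when the TTL is computed. The following is a minimal sketch; the method name `ttlWithJitter` and the example values (a 3600 second base with up to 300 seconds of jitter) are assumptions chosen for illustration.

```java
import java.util.concurrent.ThreadLocalRandom;

// Hypothetical helper: spreads expirations out so keys do not all expire together.
class TtlJitterSketch {
    // Returns baseSeconds plus a random offset in [0, maxJitterSeconds].
    static long ttlWithJitter(long baseSeconds, long maxJitterSeconds) {
        return baseSeconds + ThreadLocalRandom.current().nextLong(maxJitterSeconds + 1);
    }

    public static void main(String[] args) {
        for (int i = 0; i < 3; i++) {
            // Each key gets a slightly different TTL between 3600 and 3900 seconds.
            System.out.println(ttlWithJitter(3600, 300));
        }
    }
}
```

The resulting value would then be passed as the expiration when the key is written to the cache.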

-

Handling an avalanche: degradation and circuit breaking

-

Before: keep the whole Redis cluster highly available and replace failed machines as soon as possible. Choose an appropriate memory eviction policy.

-

During: use a local Ehcache cache plus Hystrix rate limiting and degradation to keep MySQL from crashing.

-

After: restore the cache as soon as possible from the data saved by the Redis persistence mechanism.

-

Cache breakdown

-

Content introduction

Cache breakdown refers to many concurrent queries for the same data when that data is missing from the cache but present in the database (typically because the cache entry has just expired). Because so many users arrive at once, none of them finds the data in the cache and all of them fetch it from the database at the same time, causing an instantaneous spike in database pressure.

-

Solution

-

Set hotspot data to never expire.

-

Add a mutex: this is common practice in the industry.

In short, when the cache misses (the value is judged to be empty), do not load from the database immediately. First set a mutex key using a cache operation that reports whether it succeeded (such as SETNX in Redis or ADD in Memcache). If the operation succeeds, load from the DB and reset the cache; otherwise, retry the whole get-cache method.

-

-

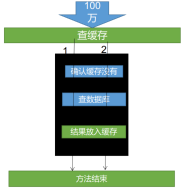

Lock

Avoid synchronized(this): it locks the whole object rather than the specific resource being loaded, so the lock is not specific enough.

Lock timing problem: if the code checks that the cache is empty and only then competes for the lock before querying the database, every thread that saw the empty cache queries the database once it obtains the lock, so the database is queried multiple times.

Solution: after acquiring the lock, check the cache again and query the database only if the entry is still missing. Release the lock only after the result has been put into the cache.
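The check, lock, check-again sequence can be sketched as follows. This is a single-JVM illustration only: a `ConcurrentHashMap` stands in for the cache, `loadFromDb` is a hypothetical placeholder for the real query, and a local lock object replaces the distributed lock a multi-instance service would need.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch of double-checked loading behind a mutex.
class DoubleCheckedLoadSketch {
    final Map<String, String> cache = new ConcurrentHashMap<>();
    final AtomicInteger dbQueries = new AtomicInteger();
    private final Object lock = new Object(); // dedicated lock object, not "this"

    String get(String key) {
        String value = cache.get(key);          // first check, without the lock
        if (value != null) {
            return value;
        }
        synchronized (lock) {
            value = cache.get(key);             // second check, after winning the lock
            if (value == null) {
                value = loadFromDb(key);
                cache.put(key, value);          // fill the cache before releasing the lock
            }
            return value;
        }
    }

    // Placeholder for the real database query.
    String loadFromDb(String key) {
        dbQueries.incrementAndGet();
        return "value-of-" + key;
    }

    // Starts the given number of concurrent readers of one key
    // and returns how many database queries were made.
    static int runConcurrently(int threads) {
        DoubleCheckedLoadSketch s = new DoubleCheckedLoadSketch();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> s.get("hot"));
            workers[i].start();
        }
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return s.dbQueries.get();
    }

    public static void main(String[] args) {
        System.out.println("database queries: " + runConcurrently(8));
    }
}
```

Without the second check inside the synchronized block, each of the waiting threads would query the database in turn.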

Distributed cache

-

Applicable scenario

Local cache problem: each microservice instance keeps its own cache. When data is updated, only the cache of the instance that handled the update changes, so the cached data becomes inconsistent across instances.

-

Solution

Use a distributed cache: all microservices share one cache middleware.

Distributed lock

-

Content introduction

In a distributed project, a local lock can only lock the current service instance, so a distributed lock is required.

-

Principle analysis

Principle of the Redis distributed lock: SETNX succeeds for only one client at a time. The key of the lock is fixed, while the value can vary.

-

Notes

-

If the lock is not obtained, block or sleep for a while before retrying.

-

If the service goes down after the lock is set and the logic that deletes the lock never runs, the result is a deadlock.

Solution: set an expiration time, in the same command that sets the lock.

-

The lock expired before the business logic finished. Another client acquired the lock, and the first client then deleted that client's lock when its own execution finished.

Solution: renew the lock (Redisson has a watchdog). When deleting a lock, make sure it is your own, for example by storing a UUID as the value.

-

The UUID check passes, but the lock expires just before it is deleted and another client sets a new value, so the other client's lock is deleted.

Solution: deleting the lock must be atomic (the check and the delete must happen as one step). Use a Redis Lua script, which executes atomically.
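The ownership check can be illustrated without a Redis server. In this sketch a `ConcurrentHashMap` stands in for Redis: `putIfAbsent` plays the role of SETNX and the two-argument `remove(key, value)` plays the role of the atomic compare-and-delete script. The class and method names are assumptions, and `expire` only simulates a timeout.

```java
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical in-memory stand-in for a Redis lock, for illustration only.
class OwnerCheckedUnlockSketch {
    private final ConcurrentHashMap<String, String> store = new ConcurrentHashMap<>();

    // Like SETNX: succeeds only if the key is absent.
    // Returns the owner token on success, or null if the lock is taken.
    String tryLock(String key) {
        String token = UUID.randomUUID().toString();
        return store.putIfAbsent(key, token) == null ? token : null;
    }

    // Like the Lua script: compare the value and delete in one atomic step.
    boolean unlock(String key, String token) {
        return store.remove(key, token);
    }

    // Simulates the lock expiring on its own.
    void expire(String key) {
        store.remove(key);
    }

    boolean isLocked(String key) {
        return store.containsKey(key);
    }

    public static void main(String[] args) {
        OwnerCheckedUnlockSketch locks = new OwnerCheckedUnlockSketch();
        String tokenA = locks.tryLock("lock");
        locks.expire("lock");                    // A's lock times out mid-work
        String tokenB = locks.tryLock("lock");   // B acquires the lock
        System.out.println("A released B's lock: " + locks.unlock("lock", tokenA));
        System.out.println("B still holds it: " + locks.isLocked("lock"));
        locks.unlock("lock", tokenB);
    }
}
```

Because the comparison and the removal are one operation, client A cannot delete a lock that now belongs to client B.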

-

-

Sample code

Reference command

http://redis.cn/commands/set.html

Delete lock lua script

    if redis.call("get", KEYS[1]) == ARGV[1] then
        return redis.call("del", KEYS[1])
    else
        return 0
    end

Unlock java code

    import java.util.Arrays;
    import java.util.List;
    import java.util.Map;
    import java.util.UUID;
    import java.util.concurrent.TimeUnit;
    import org.springframework.data.redis.core.ValueOperations;
    import org.springframework.data.redis.core.script.DefaultRedisScript;

    public Map<String, List<Catalog2Vo>> getCatalogJsonDbWithRedisLock() {
        String uuid = UUID.randomUUID().toString();
        ValueOperations<String, String> ops = stringRedisTemplate.opsForValue();
        // Set the lock value and its expiration time in one atomic command
        Boolean lock = ops.setIfAbsent("lock", uuid, 500, TimeUnit.SECONDS);
        if (Boolean.TRUE.equals(lock)) {
            try {
                return getCategoryMap();
            } finally {
                // Compare and delete atomically. Pass our own uuid, not the value
                // read back from Redis, so that only our own lock can be deleted.
                String script = "if redis.call(\"get\",KEYS[1]) == ARGV[1] then\n"
                        + "    return redis.call(\"del\",KEYS[1])\n"
                        + "else\n"
                        + "    return 0\n"
                        + "end";
                stringRedisTemplate.execute(
                        new DefaultRedisScript<Long>(script, Long.class), // script and return type
                        Arrays.asList("lock"),                            // KEYS
                        uuid);                                            // ARGV[1], the lock value
            }
        } else {
            try {
                Thread.sleep(100);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            // After sleeping for 0.1s, spin: call the method again
            return getCatalogJsonDbWithRedisLock();
        }
    }

SpringBoot integrates Redisson to implement distributed locks

-

Source address

https://github.com/redisson/redisson

-

Content introduction

Redisson is a Java in-memory data grid built on Redis. It provides a series of distributed Java objects as well as many distributed services, including BitSet, Set, Multimap, SortedSet, Map, List, Queue, BlockingQueue, Deque, BlockingDeque, Semaphore, Lock, AtomicLong, CountDownLatch, Publish / Subscribe, Bloom filter, Remote service, Spring cache, Executor service, Live Object service, and Scheduler service. Redisson provides the simplest and most convenient way to use Redis. It aims to promote Separation of Concern for Redis users, so that they can focus on business logic.

-

Official documents

https://github.com/redisson/redisson/wiki/8.- Distributed lock and synchronizer

Implementation process

-

Import dependency

    <!-- You can use redisson-spring-boot-starter later -->
    <dependency>
        <groupId>org.redisson</groupId>
        <artifactId>redisson</artifactId>
        <version>3.13.4</version>
    </dependency>

-

Open configuration

https://github.com/redisson/redisson/wiki/2.- Configuration method

Single node mode

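    import org.redisson.Redisson;
    import org.redisson.api.RedissonClient;
    import org.redisson.config.Config;
    import org.springframework.beans.factory.annotation.Value;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;

    @Configuration
    public class MyRedisConfig {

        @Value("${ipAddr}")
        private String ipAddr;

        // All use of Redisson goes through the RedissonClient object.
        // If there are multiple Redis clusters, each can be configured.
        @Bean(destroyMethod = "shutdown")
        public RedissonClient redisson() {
            Config config = new Config();
            // Create the configuration for single-server mode
            config.useSingleServer().setAddress("redis://" + ipAddr + ":6379");
            return Redisson.create(config);
        }
    }

-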

Reentrant lock

Concept introduction

A calls B, and both A and B need the same lock. With a reentrant lock, A already holds the lock, so B can acquire it again and A can call B. With a non-reentrant lock, A calling B deadlocks.
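The A-calls-B case can be tried locally with the JDK's `java.util.concurrent.locks.ReentrantLock`, which has the same reentrancy semantics within a single JVM. The class and method names below are illustrative.

```java
import java.util.concurrent.locks.ReentrantLock;

// Local illustration of reentrancy: a() and b() need the same lock.
class ReentrantSketch {
    private final ReentrantLock lock = new ReentrantLock();

    int a() {
        lock.lock();
        try {
            return b(); // re-enters the lock that a() already holds
        } finally {
            lock.unlock();
        }
    }

    int b() {
        lock.lock();
        try {
            // Number of times the current thread holds the lock:
            // 2 when called from a(), 1 when called directly.
            return lock.getHoldCount();
        } finally {
            lock.unlock();
        }
    }

    boolean isLocked() {
        return lock.isLocked();
    }

    public static void main(String[] args) {
        ReentrantSketch s = new ReentrantSketch();
        System.out.println("hold count inside b() via a(): " + s.a());
        System.out.println("still locked afterwards: " + s.isLocked());
    }
}
```

Each `lock()` must be paired with an `unlock()`; the lock is released only when the hold count returns to zero.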

Reference link

github.com/redisson/redisson/wiki/8.- Distributed lock and synchronizer

Sample code

RLock, Redisson's Redis-based distributed reentrant lock object for Java, implements the java.util.concurrent.locks.Lock interface. It also provides asynchronous, Reactive, and RxJava2 interfaces.

    // The parameter is the lock name
    RLock lock = redissonClient.getLock("CatalogJson-Lock"); // RLock implements the JUC Lock interface
    lock.lock(); // blocking wait
    try {
        // business logic
    } finally {
        // Unlock in finally. If the service goes down before this line,
        // the watchdog stops renewing and the lock expires by itself.
        lock.unlock();
    }

Lock renewal

If the Redisson node responsible for storing the distributed lock goes down while the lock is held, the lock stays locked forever. To avoid this, Redisson provides a watchdog that monitors the lock and keeps extending its validity until the Redisson instance is closed. By default, the watchdog lock timeout is 30 seconds. It can be changed through Config.lockWatchdogTimeout.
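The renewal idea can be sketched with a plain scheduled task. This is not Redisson's implementation: the class below is a hypothetical local model in which an in-memory timestamp stands in for the key's expiration in Redis, and the renewal period of one third of the lease follows the rule described in these notes.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical local model of a lock whose lease is renewed by a watchdog task.
class WatchdogSketch {
    private final long leaseMillis;
    private final AtomicLong expiresAt = new AtomicLong(); // stands in for the key TTL in Redis
    private final ScheduledExecutorService scheduler =
            Executors.newSingleThreadScheduledExecutor(r -> {
                Thread t = new Thread(r, "watchdog");
                t.setDaemon(true); // do not keep the JVM alive for the sketch
                return t;
            });
    private ScheduledFuture<?> renewal;

    WatchdogSketch(long leaseMillis) {
        this.leaseMillis = leaseMillis;
    }

    // The renewal task runs every lease / 3.
    static long renewalPeriod(long leaseMillis) {
        return leaseMillis / 3;
    }

    synchronized void lock() {
        expiresAt.set(System.currentTimeMillis() + leaseMillis);
        long period = renewalPeriod(leaseMillis);
        // Each run resets the expiration to a full lease from now.
        renewal = scheduler.scheduleAtFixedRate(
                () -> expiresAt.set(System.currentTimeMillis() + leaseMillis),
                period, period, TimeUnit.MILLISECONDS);
    }

    synchronized void unlock() {
        if (renewal != null) {
            renewal.cancel(false); // stop renewing
        }
        expiresAt.set(0);
    }

    boolean isHeld() {
        return System.currentTimeMillis() < expiresAt.get();
    }

    public static void main(String[] args) {
        WatchdogSketch lock = new WatchdogSketch(30_000);
        lock.lock();
        System.out.println("renewal period (ms): " + renewalPeriod(30_000));
        System.out.println("held: " + lock.isHeld());
        lock.unlock();
    }
}
```

If the process dies, the renewal task dies with it, so the lease simply runs out; that is why a crashed holder does not block others forever.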

    // Unlocks automatically 10 seconds after locking; the watchdog does not renew this lock
    // There is no need to call the unlock method manually
    lock.lock(10, TimeUnit.SECONDS);

    // Try to lock: wait at most 100 seconds, unlock automatically 10 seconds after locking
    boolean res = lock.tryLock(100, 10, TimeUnit.SECONDS);
    if (res) {
        try {
            ...
        } finally {
            lock.unlock();
        }
    }

    /**
     * If a lock timeout is passed, the script occupies the lock with that timeout.
     * If no lock timeout is passed, the watchdog time is used to occupy the lock.
     * When the lock is acquired successfully, future.onComplete() is called;
     * if there is no exception, scheduleExpirationRenewal(threadId) is called
     * to reset the expiration time and schedule the renewal task.
     * The watchdog is a scheduled task: it resets the expiration time of the lock
     * to the watchdog default time.
     * The task runs at a fixed period of (lock time / 3).
     */

Asynchronous execution

Redisson also provides asynchronous execution methods for distributed locks:

    RLock lock = redisson.getLock("anyLock");
    lock.lockAsync();
    lock.lockAsync(10, TimeUnit.SECONDS);
    Future<Boolean> res = lock.tryLockAsync(100, 10, TimeUnit.SECONDS);

RLock objects fully comply with the Java Lock specification. In other words, only the holder of a lock can unlock it; any other caller that tries gets an IllegalMonitorStateException. If you need another process to be able to unlock, use the distributed Semaphore object instead.

Best practice: specify the lock time yourself, and make it comfortably longer than the business logic takes.

    public Map<String, List<Catalog2Vo>> getCatalogJsonDbWithRedisson() {
        Map<String, List<Catalog2Vo>> categoryMap = null;
        RLock lock = redissonClient.getLock("CatalogJson-Lock");
        lock.lock();
        try {
            Thread.sleep(30000);
            categoryMap = getCategoryMap();
        } catch (InterruptedException e) {
            e.printStackTrace();
        } finally {
            lock.unlock();
        }
        // Return outside finally so that exceptions are not swallowed
        return categoryMap;
    }

-

Read write lock (ReadWriteLock)

RReadWriteLock, Redisson's Redis-based distributed reentrant read/write lock object for Java, implements the java.util.concurrent.locks.ReadWriteLock interface. Both the read lock and the write lock inherit the RLock interface.

A distributed reentrant read-write lock allows either multiple read locks or a single write lock to be held at the same time.
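The sharing rule can be observed locally with the JDK's `ReentrantReadWriteLock`, used here as a single-JVM stand-in for the distributed lock. The class name and the shape of the returned array are illustrative assumptions.

```java
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Local illustration: readers share the lock, a writer needs it exclusively.
class ReadWriteSketch {
    // Returns {second read acquired, write acquired while reading, write acquired after reads}.
    static boolean[] demo() {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        rw.readLock().lock();
        boolean secondRead = rw.readLock().tryLock();         // read locks can be shared
        boolean writeWhileReading = rw.writeLock().tryLock(); // refused while read locks are held
        rw.readLock().unlock();
        rw.readLock().unlock();
        boolean writeAfterReads = rw.writeLock().tryLock();   // free now, so the writer gets in
        if (writeAfterReads) {
            rw.writeLock().unlock();
        }
        return new boolean[] {secondRead, writeWhileReading, writeAfterReads};
    }

    public static void main(String[] args) {
        boolean[] r = demo();
        System.out.println("second read: " + r[0]
                + ", write while reading: " + r[1]
                + ", write after reads: " + r[2]);
    }
}
```

This is the property that makes a read-write lock suitable for data that is read often and written rarely.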

    RReadWriteLock rwlock = redisson.getReadWriteLock("anyRWLock");
    // Most common usage
    rwlock.readLock().lock();
    // or
    rwlock.writeLock().lock();

    // Automatic unlocking after 10 seconds
    // There is no need to call the unlock method to unlock manually
    rwlock.readLock().lock(10, TimeUnit.SECONDS);
    // or
    rwlock.writeLock().lock(10, TimeUnit.SECONDS);

    // Try to lock, wait for 100 seconds at most, and unlock automatically 10 seconds after locking
    boolean res = rwlock.readLock().tryLock(100, 10, TimeUnit.SECONDS);
    // or
    boolean res = rwlock.writeLock().tryLock(100, 10, TimeUnit.SECONDS);
    ...
    lock.unlock();

Status in redis when locked

    Hash Write-Lock
    key: mode                 value: read
    key: sasdsdffsdfsdf...    value: 1

-

Semaphore

Content introduction

The semaphore is a number stored in Redis. While the number is greater than 0, you can call acquire() to decrease it, and release() to increase it. If acquire() is called when there are not enough permits left, so that the number would drop below 0, the method blocks until enough permits have been released.

RSemaphore, Redisson's Redis-based distributed semaphore object for Java, has an interface and usage similar to java.util.concurrent.Semaphore. It also provides asynchronous, Reactive, and RxJava2 interfaces.
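Since the interface mirrors `java.util.concurrent.Semaphore`, the counting behaviour can be tried locally with the JDK class. The parking-lot framing and the numbers are illustrative; the non-blocking `tryAcquire` is used so the example never hangs.

```java
import java.util.concurrent.Semaphore;

// Local illustration of permit counting with the JDK semaphore.
class ParkingSketch {
    // Returns {first acquire succeeded, second acquire succeeded, permits at the end}.
    static int[] demo() {
        Semaphore park = new Semaphore(3);       // three free parking spaces
        boolean first = park.tryAcquire(2);      // takes 2 permits, 1 left
        boolean second = park.tryAcquire(2);     // only 1 left, so this fails
        park.release(2);                         // two cars leave, 3 permits again
        return new int[] {first ? 1 : 0, second ? 1 : 0, park.availablePermits()};
    }

    public static void main(String[] args) {
        int[] r = demo();
        System.out.println("first: " + r[0] + ", second: " + r[1] + ", permits left: " + r[2]);
    }
}
```

With the blocking `acquire(2)` instead of `tryAcquire(2)`, the second call would wait until another thread released permits.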

Common methods

    RSemaphore semaphore = redisson.getSemaphore("semaphore");
    semaphore.acquire();
    // or
    semaphore.acquireAsync();
    semaphore.acquire(23);
    semaphore.tryAcquire();
    // or
    semaphore.tryAcquireAsync();
    semaphore.tryAcquire(23, TimeUnit.SECONDS);
    // or
    semaphore.tryAcquireAsync(23, TimeUnit.SECONDS);
    semaphore.release(10);
    semaphore.release();
    // or
    semaphore.releaseAsync();

Sample code

    @GetMapping("/park")
    @ResponseBody
    public String park() {
        RSemaphore park = redissonClient.getSemaphore("park");
        try {
            park.acquire(2);
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        return "Stop 2";
    }

    @GetMapping("/go")
    @ResponseBody
    public String go() {
        RSemaphore park = redissonClient.getSemaphore("park");
        park.release(2);
        return "Drive away 2";
    }

-

Latch (CountDownLatch)

Content introduction

RCountDownLatch, Redisson's Redis-based distributed latch (CountDownLatch) object for Java, has an interface and usage similar to java.util.concurrent.CountDownLatch.
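The latch semantics can be checked locally with the JDK's `CountDownLatch`. In this sketch the helper name `awaitAfter` is an assumption, and a short timeout replaces the indefinite `await()` so the example cannot hang.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

// Local illustration: await() succeeds only after the count reaches zero.
class LatchSketch {
    // Counts a latch of 5 down the given number of times, then reports
    // whether a waiter would be let through within 50 ms.
    static boolean awaitAfter(int countDowns) {
        CountDownLatch latch = new CountDownLatch(5);
        for (int i = 0; i < countDowns; i++) {
            latch.countDown();
        }
        try {
            return latch.await(50, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("after 4 countDown calls: " + awaitAfter(4));
        System.out.println("after 5 countDown calls: " + awaitAfter(5));
    }
}
```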

Sample code

In the following code, the thread blocked in await() can continue only after countDown() has been called 5 times.

    RCountDownLatch latch = redisson.getCountDownLatch("anyCountDownLatch");
    latch.trySetCount(5);
    latch.await();

    // In another thread or other JVM
    RCountDownLatch latch = redisson.getCountDownLatch("anyCountDownLatch");
    latch.countDown();

Cache and database consistency

Scene analysis

-

Double write mode

Write cache after writing database

Existing problems

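Under concurrency, two writes may reach the DB in one order but update the cache in the opposite order, which leaves temporary dirty data in the cache.

-

Invalidation mode

After writing the database, delete the cache

Existing problems

A reader may load the old value from the DB before a concurrent write has been committed, and then put that old value back into the cache after the writer has deleted it.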

Solution

-

Set an expiration time on the cache so that it is refreshed regularly

-

When writing data, add a distributed read-write lock
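Invalidation mode combined with a read-write lock can be sketched as follows. This is a single-JVM model under stated assumptions: in-memory maps stand in for the database and the cache, and the JDK's `ReentrantReadWriteLock` stands in for a distributed read-write lock. The class and method names are hypothetical.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical sketch: delete the cache on write, refill it on read.
class InvalidateOnWriteSketch {
    final Map<String, String> database = new ConcurrentHashMap<>();
    final Map<String, String> cache = new ConcurrentHashMap<>();
    private final ReentrantReadWriteLock rw = new ReentrantReadWriteLock();

    void write(String key, String value) {
        rw.writeLock().lock(); // writers queue up and exclude readers
        try {
            database.put(key, value);
            cache.remove(key); // invalidate instead of updating the cache
        } finally {
            rw.writeLock().unlock();
        }
    }

    String read(String key) {
        rw.readLock().lock(); // readers run concurrently with each other
        try {
            String value = cache.get(key);
            if (value == null) {
                value = database.get(key);
                if (value != null) {
                    cache.put(key, value); // refill while no writer can interleave
                }
            }
            return value;
        } finally {
            rw.readLock().unlock();
        }
    }

    public static void main(String[] args) {
        InvalidateOnWriteSketch s = new InvalidateOnWriteSketch();
        s.write("menu", "v1");
        System.out.println(s.read("menu"));
        s.write("menu", "v2");
        System.out.println(s.read("menu"));
    }
}
```

Because a reader holds the read lock from the cache lookup until the refill, a write cannot slip in between, so a stale value is never written back after an invalidation.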

-

Solution

-

Scheme introduction

-

If the data is user-dimension data (order data, user data), concurrent updates are very unlikely and this problem does not need to be considered. Add an expiration time to the cached data so that a read triggers a refresh at regular intervals;

-

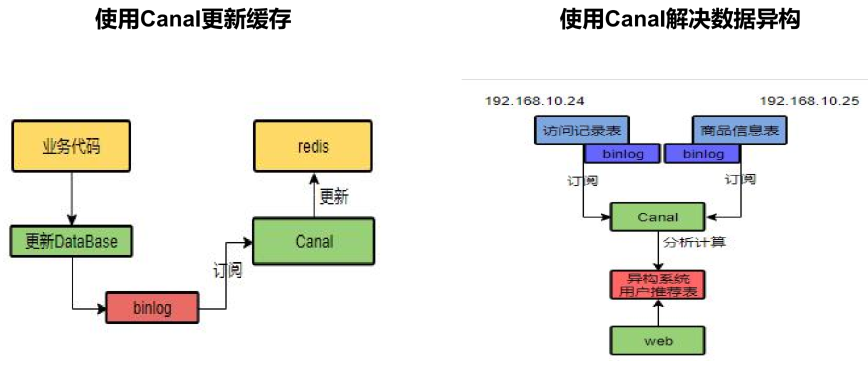

For basic data such as menus and product descriptions, you can also use Canal to subscribe to the binlog;

-

Cached data plus an expiration time is sufficient for the caching needs of most businesses;

-

Use a lock to control concurrent reads and writes: writes queue up in order, and reads are not affected. A read-write lock is therefore a good fit. (If the business does not care about it, temporary dirty data can be ignored.)

-

-

Summary

-

Data that we put into the cache should not have strict real-time or consistency requirements. Therefore, add an expiration time when caching data so that the latest data is picked up regularly (for example, every day).

-

We should not over-design and add complexity to the system.

-

For data with strict real-time and consistency requirements, query the database directly, even if it is slower.

-

SpringCache

-

Content introduction

Since version 3.1, Spring has defined the Cache and CacheManager interfaces (in org.springframework.cache) to unify different caching technologies. It also supports JCache (JSR-107) annotations to simplify development.

Implementations of the Cache interface include RedisCache, EhCacheCache, ConcurrentMapCache, etc.

Each time a method that requires caching is called, Spring checks whether the target method has already been called with the given arguments. If so, the result is taken directly from the cache. If not, the method is invoked, its result is cached and then returned to the user, and the next call is served from the cache.
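The check-then-invoke behaviour described above can be modelled in a few lines of plain Java. This is only a sketch of the idea, not how Spring implements it: the class, the `slowLookup` method, and the `invocations` counter are illustrative assumptions.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical model of "return from cache, else call the method and cache the result".
class CacheableSketch {
    private final Map<String, String> cache = new ConcurrentHashMap<>();
    int invocations = 0; // how often the real method body ran

    // Stands in for an expensive method that would be annotated for caching.
    String slowLookup(String key) {
        invocations++;
        return key.toUpperCase();
    }

    // The first call per key invokes slowLookup; later calls are served from the cache.
    String cachedLookup(String key) {
        return cache.computeIfAbsent(key, this::slowLookup);
    }

    public static void main(String[] args) {
        CacheableSketch s = new CacheableSketch();
        s.cachedLookup("menu");
        s.cachedLookup("menu");
        System.out.println("method invoked " + s.invocations + " time(s)");
    }
}
```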

-

Notes

When using the Spring cache abstraction, pay attention to the following two points:

-

Determine which methods need caching and what their caching strategy is;

-

Read previously cached data from the cache.

-

Implementation process

-

Introduce dependency

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-cache</artifactId>
    </dependency>

-

Specify the cache type and annotate the main configuration class with @EnableCaching

    spring:
      cache:
        # The specified cache type is redis
        type: redis
        redis:
          # Set the expiration time in redis to 1h (value in milliseconds)
          time-to-live: 3600000

-

Configuration class

By default, JDK serialization is used (poor readability) and the TTL is -1, meaning entries never expire. To customize the serialization, write a configuration class.

    @Configuration
    public class MyCacheConfig {

        @Bean
        public RedisCacheConfiguration redisCacheConfiguration(CacheProperties cacheProperties) {
            CacheProperties.Redis redisProperties = cacheProperties.getRedis();
            org.springframework.data.redis.cache.RedisCacheConfiguration config =
                    org.springframework.data.redis.cache.RedisCacheConfiguration.defaultCacheConfig();
            // Serialize cached values as JSON
            config = config.serializeValuesWith(
                    RedisSerializationContext.SerializationPair.fromSerializer(
                            new GenericJackson2JsonRedisSerializer()));
            // Apply the settings from the configuration file, such as the expiration time
            if (redisProperties.getTimeToLive() != null) {
                config = config.entryTtl(redisProperties.getTimeToLive());
            }
            if (redisProperties.getKeyPrefix() != null) {
                config = config.prefixKeysWith(redisProperties.getKeyPrefix());
            }
            if (!redisProperties.isCacheNullValues()) {
                config = config.disableCachingNullValues();
            }
            if (!redisProperties.isUseKeyPrefix()) {
                config = config.disableKeyPrefix();
            }
            return config;
        }
    }

Cache auto configuration

    // Cache auto configuration source code
    @Configuration(proxyBeanMethods = false)
    @ConditionalOnClass(CacheManager.class)
    @ConditionalOnBean(CacheAspectSupport.class)
    @ConditionalOnMissingBean(value = CacheManager.class, name = "cacheResolver")
    @EnableConfigurationProperties(CacheProperties.class)
    @AutoConfigureAfter({ CouchbaseAutoConfiguration.class, HazelcastAutoConfiguration.class,
            HibernateJpaAutoConfiguration.class, RedisAutoConfiguration.class })
    @Import({ CacheConfigurationImportSelector.class, // See which CacheConfiguration to import
            CacheManagerEntityManagerFactoryDependsOnPostProcessor.class })
    public class CacheAutoConfiguration {

        @Bean
        @ConditionalOnMissingBean
        public CacheManagerCustomizers cacheManagerCustomizers(
                ObjectProvider<CacheManagerCustomizer<?>> customizers) {
            return new CacheManagerCustomizers(customizers.orderedStream().collect(Collectors.toList()));
        }

        @Bean
        public CacheManagerValidator cacheAutoConfigurationValidator(CacheProperties cacheProperties,
                ObjectProvider<CacheManager> cacheManager) {
            return new CacheManagerValidator(cacheProperties, cacheManager);
        }

        @ConditionalOnClass(LocalContainerEntityManagerFactoryBean.class)
        @ConditionalOnBean(AbstractEntityManagerFactoryBean.class)
        static class CacheManagerEntityManagerFactoryDependsOnPostProcessor
                extends EntityManagerFactoryDependsOnPostProcessor {

            CacheManagerEntityManagerFactoryDependsOnPostProcessor() {
                super("cacheManager");
            }
        }
    }

    @Configuration(proxyBeanMethods = false)
    @ConditionalOnClass(RedisConnectionFactory.class)
    @AutoConfigureAfter(RedisAutoConfiguration.class)
    @ConditionalOnBean(RedisConnectionFactory.class)
    @ConditionalOnMissingBean(CacheManager.class)
    @Conditional(CacheCondition.class)
    class RedisCacheConfiguration {

        @Bean // Put into cache manager
        RedisCacheManager cacheManager(CacheProperties cacheProperties,
                CacheManagerCustomizers cacheManagerCustomizers,
                ObjectProvider<org.springframework.data.redis.cache.RedisCacheConfiguration> redisCacheConfiguration,
                ObjectProvider<RedisCacheManagerBuilderCustomizer> redisCacheManagerBuilderCustomizers,
                RedisConnectionFactory redisConnectionFactory, ResourceLoader resourceLoader) {
            RedisCacheManagerBuilder builder = RedisCacheManager.builder(redisConnectionFactory).cacheDefaults(
                    determineConfiguration(cacheProperties, redisCacheConfiguration, resourceLoader.getClassLoader()));
            List<String> cacheNames = cacheProperties.getCacheNames();
            if (!cacheNames.isEmpty()) {
                builder.initialCacheNames(new LinkedHashSet<>(cacheNames));
            }
            redisCacheManagerBuilderCustomizers.orderedStream().forEach((customizer) -> customizer.customize(builder));
            return cacheManagerCustomizers.customize(builder.build());
        }
    }

-

Cache usage

    // Store in cache
    // When this method is called, its result is cached. The cache name is category and the key is the method name.
    // sync = true means the cache read for this method is locked
    // value is equivalent to cacheNames
    // If the key is a string literal, wrap it in single quotes: "''"
    @Cacheable(value = {"category"}, key = "#root.methodName", sync = true)
    public Map<String, List<Catalog2Vo>> getCatalogJsonDbWithSpringCache() {
        return getCategoriesDb();
    }

    // Evict cache
    // Calling this method deletes every entry under the cache category.
    // To delete a specific entry, use key = ''
    @Override
    @CacheEvict(value = {"category"}, allEntries = true)
    public void updateCascade(CategoryEntity category) {
        this.updateById(category);
        if (!StringUtils.isEmpty(category.getName())) {
            categoryBrandRelationService.updateCategory(category);
        }
    }

    // To evict from multiple caches, use @Caching(evict = {@CacheEvict(value = "")})

Principle and deficiency of SpringCache

-

Read mode

Cache penetration

Querying data that does not exist. Solution: cache empty results with spring.cache.redis.cache-null-values=true

Cache breakdown

A large number of concurrent queries arrive for the same data just as it expires. Solution: lock. Spring Cache does not lock by default; set sync = true on @Cacheable to address the breakdown problem

Cache avalanche

A large number of Keys expire at the same time. Solution: add random time.

-

Write mode (keeping the cache consistent with the database)

-

Use a read-write lock.

-

Introduce Canal to detect MySQL updates and update Redis accordingly

-

For data that is both read-heavy and write-heavy, query the database directly

-

-

Summary

General data (read-heavy, write-light data with low timeliness and consistency requirements) can use Spring Cache:

Write mode: it is enough for the cached data to have an expiration time

other

- jvisualvm

Reference link

-

Distributed locks with Redis

https://redis.io/topics/distlock

-

Gulimall notes: beginner tutorial (1/4)

https://blog.csdn.net/hancoder/article/details/106922139

-

The king scheme in distributed locks - Redisson

https://mp.weixin.qq.com/s/3bhVvJsHr_t5MFxzcmC7Jw

-

Gulimall e-commerce course (video)

https://www.bilibili.com/video/BV1np4y1C7Yf?p=154

-

Springboot cache - getting started and basic use

https://blog.csdn.net/er_ving/article/details/105421572