1, Overview and installation of canal

1.1 Brief introduction

Canal simulates the MySQL slave interaction protocol: it pretends to be a MySQL slave node and sends a dump request to the MySQL master. After receiving the dump request, the master starts pushing its binary log to the slave (that is, to canal). Canal then parses the binary log objects, which arrive as raw byte streams.

1.2 installation

1.2.1 installation of canal.deployer

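(1) Enable the MySQL binlog

SHOW VARIABLES LIKE '%log_bin%';

If the value of log_bin is OFF, the binlog is disabled; if it is ON, the binlog is enabled. If it is OFF, it must be turned on before continuing (for example, set log-bin=mysql-bin, binlog-format=ROW and a server_id in my.cnf, then restart MySQL).

Then log in to MySQL, create a canal user and grant it the replication privileges:

mysql -h localhost -u root -p
CREATE USER canal@'%' IDENTIFIED BY 'canal';
GRANT SELECT, REPLICATION SLAVE, REPLICATION CLIENT, SUPER ON *.* TO 'canal'@'%';
FLUSH PRIVILEGES;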

(2) Download and decompress

wget https://github.com/alibaba/canal/releases/download/canal-1.1.4/canal.adapter-1.1.4.tar.gz
wget https://github.com/alibaba/canal/releases/download/canal-1.1.4/canal.deployer-1.1.4.tar.gz
mkdir -p /data/canal/canal.adapter-1.1.4 /data/canal/canal.deployer-1.1.4
tar -zxvf canal.adapter-1.1.4.tar.gz -C /data/canal/canal.adapter-1.1.4
tar -zxvf canal.deployer-1.1.4.tar.gz -C /data/canal/canal.deployer-1.1.4

(3) Modify the configuration file

In conf/example/instance.properties, set:

canal.instance.mysql.slaveId=2
canal.instance.master.address=<intranet IP of the MySQL host>:3306
canal.instance.dbUsername=canal
canal.instance.dbPassword=canal

Log in to MySQL and run the following statement to check the current binlog position:

show master status;

Start canal:

./bin/startup.sh

View the log:

cat /data/canal/canal.deployer-1.1.4/logs/canal/canal.log

Check that port 11111 is in use, which means canal is listening:

netstat -an | grep 11111
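The port check can also be done from Java, which is handy before the Spring Boot client in section 2 tries to connect. The following is only a minimal sketch: the class name PortCheck and the method isListening are made up for illustration, and it assumes canal runs on 127.0.0.1 with the port 11111 used in this article.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

class PortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMs. */
    static boolean isListening(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Connection refused or timed out: nothing is accepting connections there
            return false;
        }
    }

    public static void main(String[] args) {
        // 11111 is the canal server port used in this article (assumed local host)
        System.out.println("canal port open: " + isListening("127.0.0.1", 11111, 1000));
    }
}
```

A successful connection only shows that something is listening on the port; the canal log is still the place to confirm that the instance started correctly.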

1.2.2 installation of canal.adapter

The adapter forwards the MySQL binlog changes captured by canal to another destination, such as MQ, HBase or Elasticsearch.

(1) Modify application.yml

server:
  port: 8081
spring:
  jackson:
    date-format: yyyy-MM-dd HH:mm:ss
    time-zone: GMT+8
    default-property-inclusion: non_null

canal.conf:
  mode: tcp
  canalServerHost: 127.0.0.1:11111
  batchSize: 500
  syncBatchSize: 1000
  retries: 0
  timeout:
  accessKey:
  secretKey:
  srcDataSources:
    defaultDS:
      url: jdbc:mysql://127.0.0.1:3306/school-edu?useUnicode=true&characterEncoding=UTF-8&autoReconnect=true&useSSL=false&serverTimezone=GMT%2B8&zeroDateTimeBehavior=convertToNull
      username: canal
      password: canal
  canalAdapters:
  - instance: example
    groups:
    - groupId: g1
      outerAdapters:
      - name: logger
      - name: es
        hosts: 127.0.0.1:9300
        properties:
          cluster.name: elasticsearch

(2) Add a custom yml file that syncs data changes to ES

vim edu.yml

dataSourceKey: defaultDS
destination: example
groupId: g1
esMapping:
  _index: teacher
  _type: _doc
  _id: id
  upsert: true
  sql: "select t.id, t.name, t.career, t.level from edu_teacher t"
  etlCondition: "where t.c_time>={}"
  commitBatch: 3000

1.2.3 Successful display

Problems encountered:

(1) MySQL 8 and other newer MySQL versions require replacing the MySQL driver jar shipped with canal.

(2) The es adapter must be configured with cluster.name: elasticsearch; otherwise a NullPointerException (NPE) is thrown.

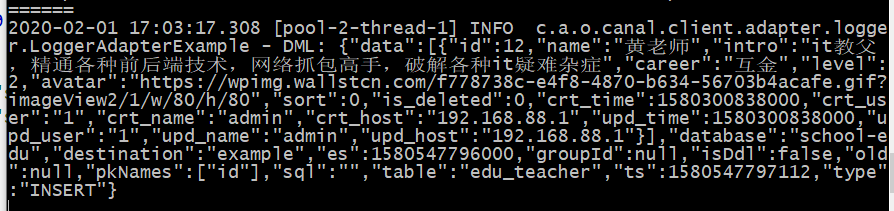

(3) A row must first be written to ES in full and only then modified. Otherwise the ES document contains only the modified fields rather than the whole document, as with id=12 in the figure.
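The effect described in (3) can be reproduced with a small in-memory model. This is only a sketch of "merge the changed fields, create the document if it is missing" semantics, under the assumption that an update event carries just the changed columns. The class UpsertDemo, the method upsert and the sample values are invented for illustration; it is not the adapter's code.

```java
import java.util.HashMap;
import java.util.Map;

class UpsertDemo {

    /** Merges the changed fields into the stored document, creating it if absent. */
    static void upsert(Map<String, Map<String, Object>> index,
                       String id, Map<String, Object> changedFields) {
        index.computeIfAbsent(id, key -> new HashMap<>()).putAll(changedFields);
    }

    public static void main(String[] args) {
        // In-memory stand-in for the "teacher" index: document id -> fields
        Map<String, Map<String, Object>> index = new HashMap<>();

        // id=11: the full row is indexed first, then one field is updated
        upsert(index, "11", Map.of("id", 11, "name", "Li", "career", "math", "level", 1));
        upsert(index, "11", Map.of("level", 2));

        // id=12: only an update arrives, the full row was never indexed
        upsert(index, "12", Map.of("level", 2));

        System.out.println("fields in 11: " + index.get("11").size()); // 4
        System.out.println("fields in 12: " + index.get("12").size()); // 1
    }
}
```

Document 11 keeps all four fields because the update was merged into an existing document, while document 12 ends up with only the modified field. Running a full import before relying on incremental updates avoids this.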

2, Canal and Spring Boot to build a real-time index

2.1 Code and approach

In general: (1) connect to canal on port 11111 to obtain the real-time stream of data modifications; (2) take each modification and analyze it (which database, which table, which fields); (3) rebuild the index according to the analysis results.
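Steps (2) and (3) amount to a routing decision: given the database, table and event type of a change, decide what to do with the index. The sketch below shows one way to express that decision on its own, without any canal or Elasticsearch dependency. The class ChangeRouter, the enum Action and the table-to-index map are hypothetical; the database, table and index names are the ones from the configuration earlier in this article, and the event type strings match the names of canal's event types (INSERT, UPDATE, DELETE).

```java
import java.util.Map;

class ChangeRouter {

    enum Action { INDEX, DELETE, IGNORE }

    // Hypothetical mapping from "database.table" to the ES index it feeds
    static final Map<String, String> TABLE_TO_INDEX =
            Map.of("school-edu.edu_teacher", "teacher");

    /** Decides what to do with the index for one row change. */
    static Action actionFor(String database, String table, String eventType) {
        if (!TABLE_TO_INDEX.containsKey(database + "." + table)) {
            return Action.IGNORE; // table is not indexed at all
        }
        switch (eventType) {
            case "INSERT":
            case "UPDATE":
                return Action.INDEX;  // (re)build the document
            case "DELETE":
                return Action.DELETE; // remove the document
            default:
                return Action.IGNORE; // DDL and other events
        }
    }

    public static void main(String[] args) {
        System.out.println(actionFor("school-edu", "edu_teacher", "UPDATE")); // INDEX
        System.out.println(actionFor("school-edu", "edu_teacher", "DELETE")); // DELETE
        System.out.println(actionFor("school-edu", "other_table", "UPDATE")); // IGNORE
    }
}
```

Keeping this decision in a plain method makes it easy to unit test separately from the canal polling loop.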

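import com.alibaba.otter.canal.client.CanalConnector;
import com.alibaba.otter.canal.protocol.CanalEntry;
import com.alibaba.otter.canal.protocol.Message;
import lombok.extern.slf4j.Slf4j;
import org.elasticsearch.client.RestHighLevelClient;
import org.springframework.beans.BeansException;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.context.ApplicationContext;
import org.springframework.context.ApplicationContextAware;
import org.springframework.scheduling.annotation.Scheduled;
import org.springframework.stereotype.Component;

import javax.annotation.Resource;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

@Component
@Slf4j
public class CanalScheduling implements Runnable, ApplicationContextAware {

    private ApplicationContext applicationContext;

    // CourseEsService is the project's own service class
    @Autowired
    private CourseEsService courseEsService;

    @Resource
    private CanalConnector canalConnector;

    @Autowired
    private RestHighLevelClient restHighLevelClient;

    @Override
    @Scheduled(fixedDelay = 100)
    public void run() {
        long batchId = -1;
        try {
            int batchSize = 1000;
            // Fetch up to 1000 entries from canal without acknowledging them yet
            Message message = canalConnector.getWithoutAck(batchSize);
            batchId = message.getId();
            List<CanalEntry.Entry> entries = message.getEntries();
            if (batchId != -1 && entries.size() > 0) {
                for (CanalEntry.Entry entry : entries) {
                    // Only row-data entries describe changed rows
                    if (entry.getEntryType() == CanalEntry.EntryType.ROWDATA) {
                        // Parse and process the change
                        publishCanalEvent(entry);
                    }
                }
            }
            // Acknowledge the batch once every entry has been processed
            canalConnector.ack(batchId);
        } catch (Exception e) {
            log.error("Failed to process canal batch {}", batchId, e);
            // Roll back so that the batch is delivered again
            canalConnector.rollback(batchId);
        }
    }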

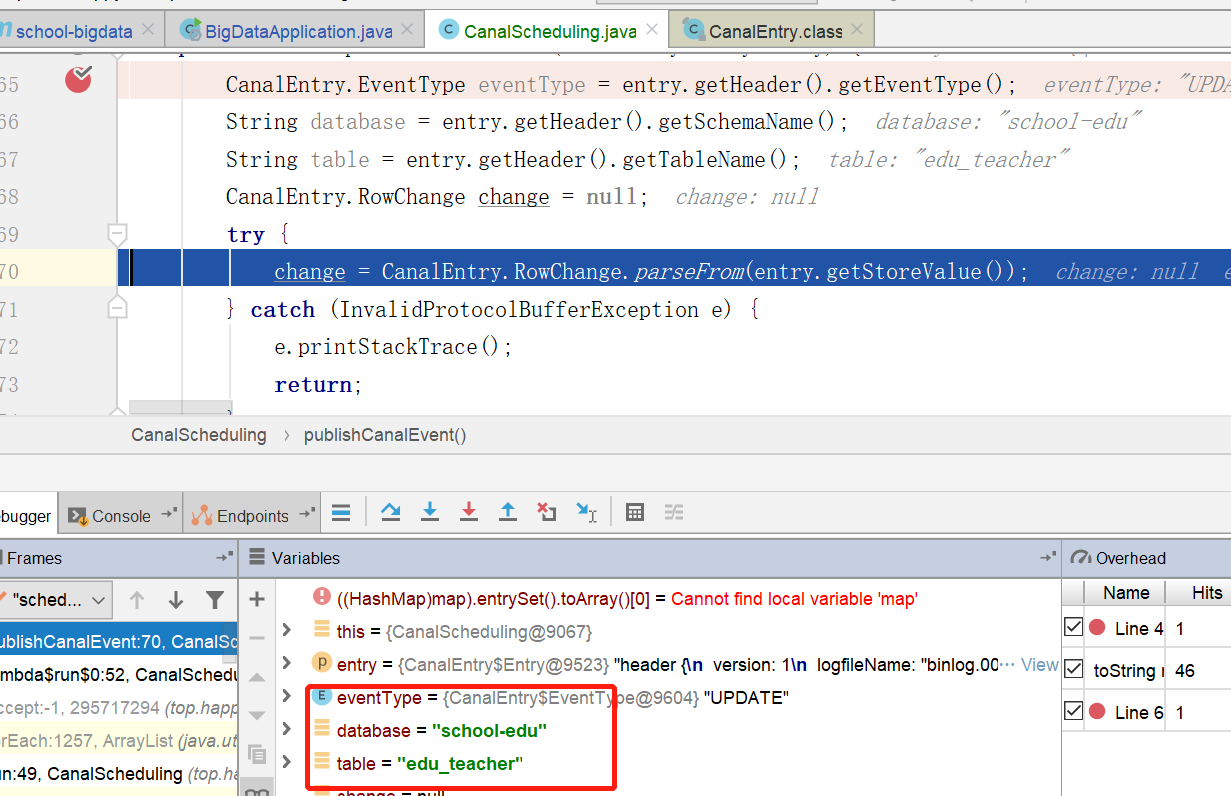

    private void publishCanalEvent(CanalEntry.Entry entry) throws Exception {
        // The binlog event type (insert, update, delete, ...) is available here
        // and can be used to branch according to the business logic
        // CanalEntry.EventType eventType = entry.getHeader().getEventType();

        // Get the database and table the change belongs to
        String database = entry.getHeader().getSchemaName();
        String table = entry.getHeader().getTableName();
        CanalEntry.RowChange change = CanalEntry.RowChange.parseFrom(entry.getStoreValue());
        for (CanalEntry.RowData rowData : change.getRowDatasList()) {
            // Column values after the change; find the primary key column "id"
            List<CanalEntry.Column> columns = rowData.getAfterColumnsList();
            String primaryKey = "id";
            CanalEntry.Column idColumn = columns.stream()
                    .filter(column -> column.getIsKey() && primaryKey.equals(column.getName()))
                    .findFirst()
                    .orElse(null);
            Map<String, Object> dataMap = parseColumnsToMap(columns);
            // Using the database, table and id, retrieve the data and rebuild the index
            reBuildEs(dataMap, database, table);
        }
    }

    Map<String, Object> parseColumnsToMap(List<CanalEntry.Column> columns) {
        Map<String, Object> jsonMap = new HashMap<>();
        columns.forEach(column -> {
            if (column == null) {
                return;
            }
            jsonMap.put(column.getName(), column.getValue());
        });
        return jsonMap;
    }

    /**
     * Rebuild the index based on business logic.
     *
     * @param dataMap  column name to value map of the changed row
     * @param database name of the database the change came from
     * @param table    name of the table the change came from
     * @throws Exception if rebuilding the index fails
     */
    private void reBuildEs(Map<String, Object> dataMap, String database, String table) throws Exception {
    }

    @Override
    public void setApplicationContext(ApplicationContext applicationContext) throws BeansException {
        this.applicationContext = applicationContext;
    }
}

2.2 Result display