BUG

Important, up front: Kerberos 1.15.1-18.el7.x86_64 has a bug. Do not install this version!

If you have already installed that version, don't worry. The solution is to upgrade Kerberos.
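To check whether a host is affected, the installed package version can be compared against the build named above. This is a minimal sketch: the function name `is_affected_krb5` is illustrative, and it assumes that only the 1.15.1-18.el7 build is affected.

```shell
# Return 0 if the given version-release string is the build flagged above.
# Assumption: only the 1.15.1-18.el7 build is affected.
is_affected_krb5() {
    case "$1" in
        1.15.1-18.el7*) return 0 ;;
        *) return 1 ;;
    esac
}

# On a real host the string could come from rpm, for example:
#   ver=$(rpm -q --qf '%{VERSION}-%{RELEASE}' krb5-libs)
ver="1.15.1-50.el7"
if is_affected_krb5 "$ver"; then
    echo "krb5 $ver is the affected build; upgrade it"
else
    echo "krb5 $ver is not the affected build"
fi
```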

1. System environment

1. Operating system: CentOS Linux release 7.5.1804 (Core)

2. CDH: 5.16.2-1.cdh5.16.2.p0.8

3. Kerberos: 1.15.1-50.el7.x86_64

4. Run all commands as the root user

2. KDC service installation and configuration

2.1. Install the KDC service

Install the KDC service on the Cloudera Manager server.

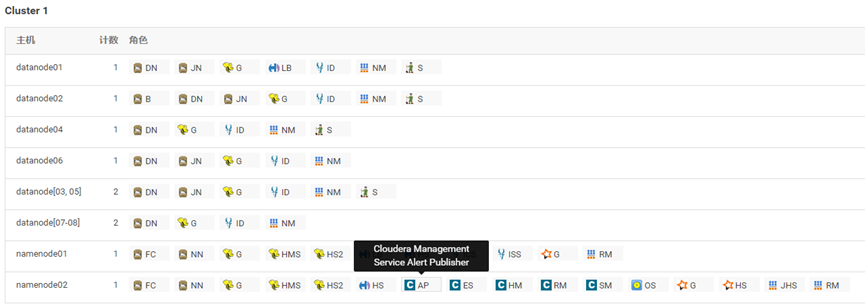

In the CM web UI (http://cmip:7180/cmf/hardware/roles#clusterId=1), check which host Cloudera Manager is installed on. In the figure below, for example, it is installed on namenode02.

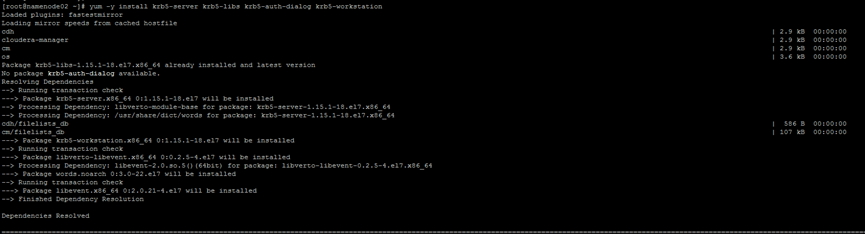

Run the following command on namenode02:

yum -y install krb5-server krb5-libs krb5-auth-dialog krb5-workstation

After installation, the following configuration files are generated on the KDC host:

• /etc/krb5.conf

• /var/kerberos/krb5kdc/kdc.conf
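A quick way to confirm that both files were generated is to test for them from the shell. The sketch below uses a hypothetical helper, `missing_files`, which simply prints any path that does not exist.

```shell
# Print every path from the argument list that does not exist as a regular file.
missing_files() {
    for f in "$@"; do
        if [ ! -f "$f" ]; then
            echo "$f"
        fi
    done
}

missing=$(missing_files /etc/krb5.conf /var/kerberos/krb5kdc/kdc.conf)
if [ -n "$missing" ]; then
    echo "missing after install:"
    echo "$missing"
else
    echo "both configuration files are present"
fi
```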

2.2. Modify the configuration files

2.2.1. Modify the krb5.conf file

2.2.1.1. File path

/etc/krb5.conf

2.2.1.2. Changes

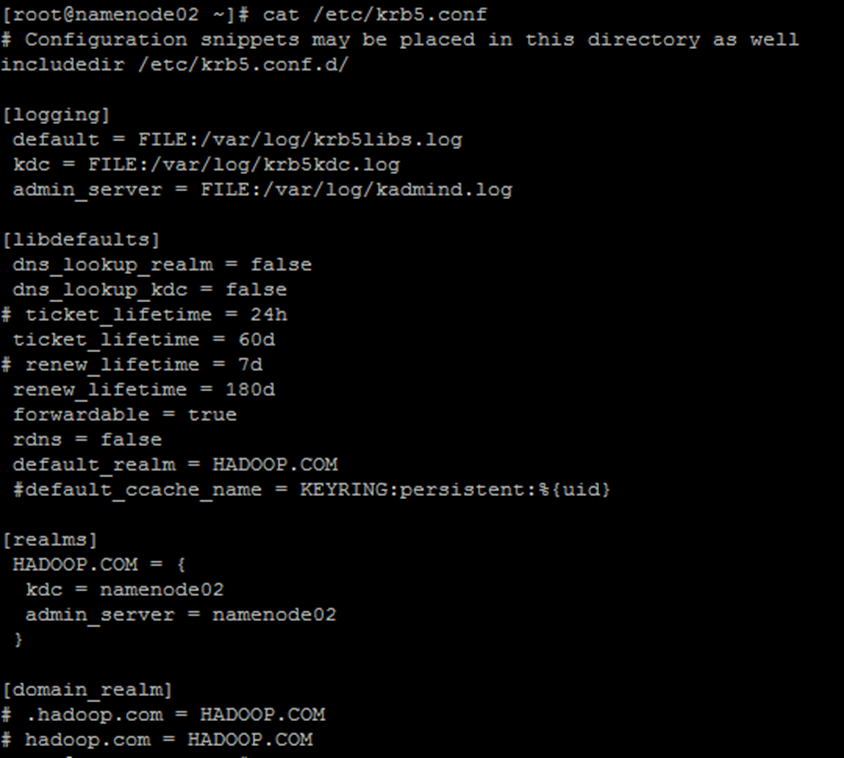

The modified file is shown below. The entries highlighted in yellow in the figure are the ones that were changed; adjust the other settings to suit your environment.

# Configuration snippets may be placed in this directory as well

includedir /etc/krb5.conf.d/

[logging]

default = FILE:/var/log/krb5libs.log

kdc = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

[libdefaults]

dns_lookup_realm = false

dns_lookup_kdc = false

# ticket_lifetime = 24h

ticket_lifetime = 60d

# renew_lifetime = 7d

renew_lifetime = 180d

forwardable = true

rdns = false

default_realm = HADOOP.COM

#default_ccache_name = KEYRING:persistent:%{uid}

[realms]

HADOOP.COM = {

kdc = namenode02

admin_server = namenode02

}

[domain_realm]

# .hadoop.com = HADOOP.COM

# hadoop.com = HADOOP.COM

2.2.1.3. Explanation

default_realm = HADOOP.COM # the default realm

HADOOP.COM = {

kdc = namenode02 # KDC server address

admin_server = namenode02 # admin server (domain controller) address

}
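To confirm which realm a host will use by default, the value can be read back from the file. The following is a minimal sketch with a hypothetical helper, `default_realm`; it runs against a temporary sample file rather than the real /etc/krb5.conf.

```shell
# Print the value of default_realm from a krb5.conf-style file.
# Commented-out entries are ignored because the line must start with the key.
default_realm() {
    awk -F= '/^[[:space:]]*default_realm[[:space:]]*=/ {
        gsub(/[[:space:]]/, "", $2); print $2; exit
    }' "$1"
}

# Sample file mirroring the settings above; on the KDC pass /etc/krb5.conf instead.
sample=$(mktemp)
cat > "$sample" <<'EOF'
[libdefaults]
 default_realm = HADOOP.COM
[realms]
 HADOOP.COM = {
  kdc = namenode02
  admin_server = namenode02
 }
EOF
echo "default realm: $(default_realm "$sample")"
rm -f "$sample"
```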

2.2.2. Modify the kadm5.acl file

2.2.2.1. File path

/var/kerberos/krb5kdc/kadm5.acl

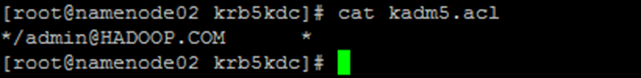

2.2.2.2. Changes

*/admin@HADOOP.COM *

2.2.2.3. Explanation

Editing kadm5.acl grants administrator ACL permissions on the Kerberos database. In the entry above, the trailing * stands for all permissions, so any principal whose name ends in /admin@HADOOP.COM has all permissions. You can add further entries to assign permissions to other users.
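The effect of the `*/admin@HADOOP.COM` pattern can be illustrated with a shell glob. This is only a rough approximation: the helper name `has_admin_acl` is hypothetical, and kadmind's own matching works on principal components, which a plain glob does not reproduce exactly.

```shell
# Rough approximation of the "*/admin@HADOOP.COM" ACL pattern using a shell glob.
# Assumption: kadmind matches principal components more strictly than this glob.
has_admin_acl() {
    case "$1" in
        */admin@HADOOP.COM) return 0 ;;
        *) return 1 ;;
    esac
}

for p in admin/admin@HADOOP.COM cloudera-scm/admin@HADOOP.COM hdfs/namenode02@HADOOP.COM; do
    if has_admin_acl "$p"; then
        echo "$p: matches, all permissions"
    else
        echo "$p: no match"
    fi
done
```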

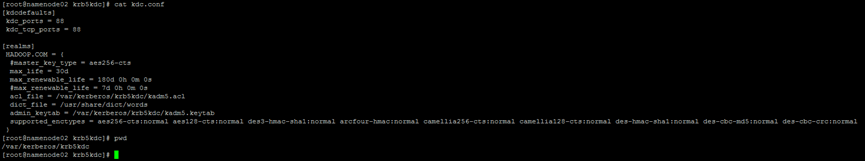

2.2.3. Modify the kdc.conf file

2.2.3.1. File path

/var/kerberos/krb5kdc/kdc.conf

2.2.3.2. Changes

The modified file is shown below. The entries highlighted in yellow in the figure are the ones that were changed; adjust the other settings to suit your environment.

[kdcdefaults]

kdc_ports = 88

kdc_tcp_ports = 88

[realms]

HADOOP.COM = {

#master_key_type = aes256-cts

max_life = 30d

max_renewable_life = 180d 0h 0m 0s

#max_renewable_life = 7d 0h 0m 0s

acl_file = /var/kerberos/krb5kdc/kadm5.acl

dict_file = /usr/share/dict/words

admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab

supported_enctypes = aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal camellia256-cts:normal camellia128-cts:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal

}

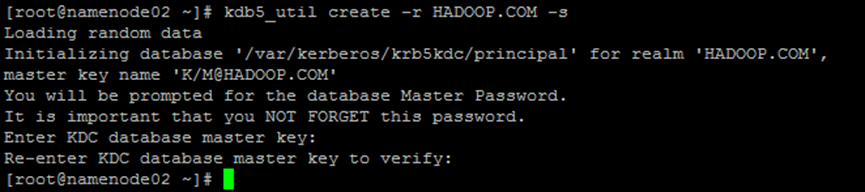

3. Create Kerberos database

kdb5_util create -r HADOOP.COM -s

You are prompted here to enter the master password for the Kerberos database.

---

Loading random data

Initializing database '/var/kerberos/krb5kdc/principal' for realm 'HADOOP.COM',

master key name 'K/M@HADOOP.COM'

You will be prompted for the database Master Password. [Input password: HADOOP.COM]

It is important that you NOT FORGET this password.

Enter KDC database master key:

Re-enter KDC database master key to verify:

---

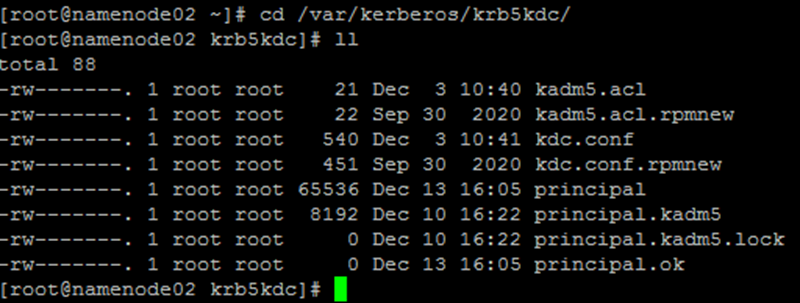

After the database is created, its files appear in the /var/kerberos/krb5kdc directory.

To rebuild the database, first delete the principal-related files under /var/kerberos/krb5kdc.

Notes:

• -s generates a stash file and stores the master key (used by krb5kdc) in it.

• -r specifies the realm name. It is needed when krb5.conf defines multiple realms.

• After the Kerberos database is created, the generated principal-related files can be seen under /var/kerberos/.

• If you are told that the database already exists, delete all principal-related files in the /var/kerberos/krb5kdc/ directory. The default database name is principal; you can use -d to specify a different database name.
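Before deleting anything for a rebuild, it can be useful to list the files that belong to the database first. The sketch below is a dry run only; `list_kdb_files` is a hypothetical helper and it never removes anything.

```shell
# Print the database files in the given directory whose names start with
# "principal" (the default database name). Nothing is deleted.
list_kdb_files() {
    for f in "$1"/principal*; do
        if [ -e "$f" ]; then
            echo "$f"
        fi
    done
}

# Prints nothing if no database exists in the directory.
list_kdb_files /var/kerberos/krb5kdc
```

If a different database name was chosen with -d, the `principal*` pattern would need to change accordingly.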

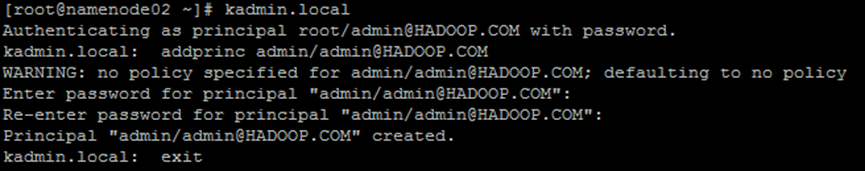

4. Create a Kerberos management account

Note: kadmin.local can be run directly on the KDC host without Kerberos authentication.

Command

kadmin.local

addprinc admin/admin@HADOOP.COM

Execution

---

[root@namenode02 ~]# kadmin.local

Authenticating as principal root/admin@HADOOP.COM with password.

kadmin.local: addprinc admin/admin@HADOOP.COM

WARNING: no policy specified for admin/admin@HADOOP.COM; defaulting to no policy

Enter password for principal "admin/admin@HADOOP.COM": [Enter password as admin]

Re-enter password for principal "admin/admin@HADOOP.COM":

Principal "admin/admin@HADOOP.COM" created.

kadmin.local: exit

---

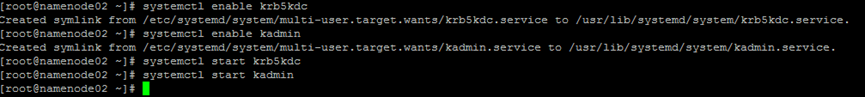

5. Start Kerberos

Enable the Kerberos services at boot, then start the krb5kdc and kadmin services.

Command

systemctl enable krb5kdc

systemctl enable kadmin

systemctl start krb5kdc

systemctl start kadmin

6. Verify the administrator account of Kerberos

Command

kinit admin/admin@HADOOP.COM

klist

Execution

---

[root@namenode02 ~]# kinit admin/admin@HADOOP.COM

Password for admin/admin@HADOOP.COM: [Input password:admin]

[root@namenode02 ~]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: admin/admin@HADOOP.COM

Valid starting Expires Service principal

12/03/2021 10:51:53 01/02/2022 10:51:53 krbtgt/HADOOP.COM@HADOOP.COM

renew until 06/01/2022 10:51:53

---
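In the klist output above, the ticket expires 30 days after its start time even though krb5.conf requests ticket_lifetime = 60d. That is consistent with max_life = 30d in kdc.conf, because the KDC caps the requested lifetime at its own maximum. A minimal sketch of that rule follows; the helper names are hypothetical, only the plain `<N>d` form is handled, and any per-principal limits are ignored.

```shell
# Strip the trailing "d" from a value such as "60d" and print the number of days.
# Assumption: only the plain "<N>d" form used in this guide is handled.
days() {
    echo "${1%d}"
}

# The KDC caps the requested lifetime at its own maximum, so the smaller value wins.
effective_days() {
    requested=$(days "$1")
    kdc_max=$(days "$2")
    if [ "$requested" -lt "$kdc_max" ]; then
        echo "$requested"
    else
        echo "$kdc_max"
    fi
}

# ticket_lifetime (krb5.conf) against max_life (kdc.conf)
echo "ticket lifetime: $(effective_days 60d 30d) days"
# renew_lifetime (krb5.conf) against max_renewable_life (kdc.conf)
echo "renewable for: $(effective_days 180d 180d) days"
```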

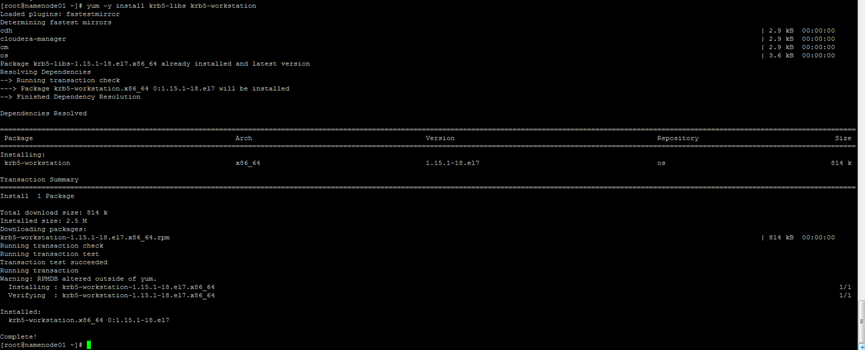

7. Install Kerberos client (all nodes)

Install the Kerberos client on every node of the cluster, including the Cloudera Manager host.

Take namenode01 as an example:

yum -y install krb5-libs krb5-workstation

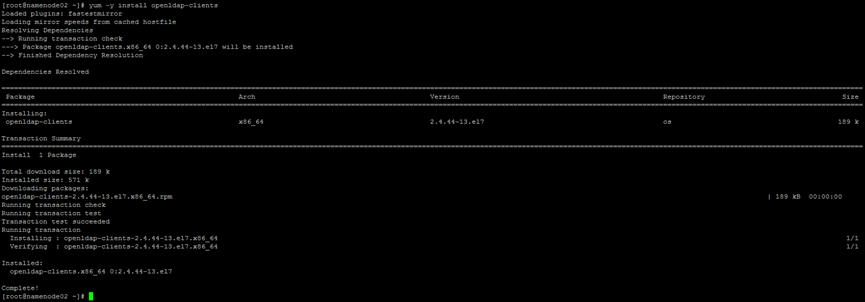

8. Install additional packages on the Cloudera Manager Server

yum -y install openldap-clients

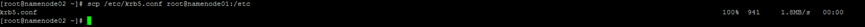

9. Copy the configuration file

Copy the krb5.conf file from the KDC server to all Kerberos clients.

Take namenode01 as an example:

scp /etc/krb5.conf root@namenode01:/etc
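With more than a few nodes, the copy can be wrapped in a loop. The sketch below is an assumption about how one might do it: `distribute_krb5_conf` and the `DRY_RUN` switch are hypothetical, the host list is a placeholder, and by default the scp commands are only printed.

```shell
# Copy krb5.conf to every host given as an argument.
# With DRY_RUN=1 (the default here) the scp commands are only printed.
DRY_RUN=${DRY_RUN:-1}

distribute_krb5_conf() {
    for host in "$@"; do
        if [ "$DRY_RUN" = "1" ]; then
            echo "scp /etc/krb5.conf root@$host:/etc"
        else
            scp /etc/krb5.conf "root@$host:/etc"
        fi
    done
}

# Placeholder host list; replace it with the real cluster nodes.
distribute_krb5_conf namenode01 datanode05
```

Setting `DRY_RUN=0` before the call performs the actual copy.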

10. Configure JCE

On CentOS 5.6 and above, AES-256 is used for encryption by default. This requires the Java Cryptography Extension (JCE) Unlimited Strength Jurisdiction Policy Files to be installed on all nodes of the CDH cluster.

Download path:

http://www.oracle.com/technetwork/java/javase/downloads/jce8-download-2133166.html

After downloading, run the following on each node to unpack the archive and copy US_export_policy.jar into the JRE security directory:

unzip jce_policy-8.zip

cp US_export_policy.jar /usr/java/jdk1.8.0_231/jre/lib/security/US_export_policy.jar

11. Add the CM administrator account

Add an administrator account for Cloudera Manager in the KDC.

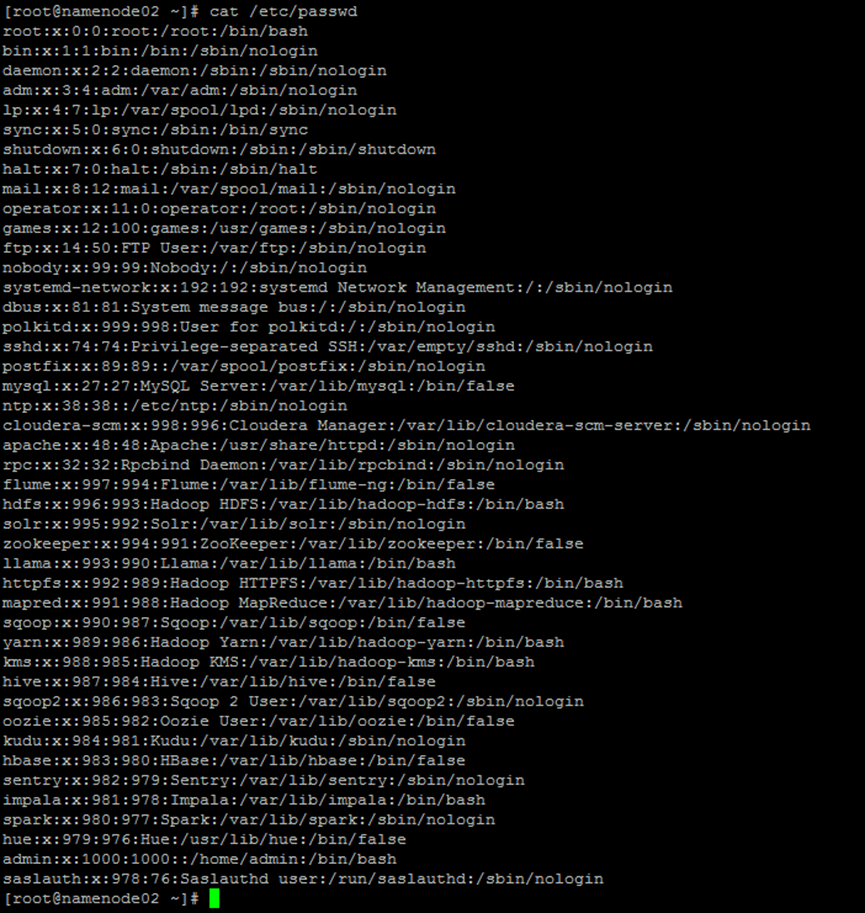

Check the user name that CM runs as, as shown in the figure below. The user name is cloudera-scm.

Add the administrator account for Cloudera Manager.

Command

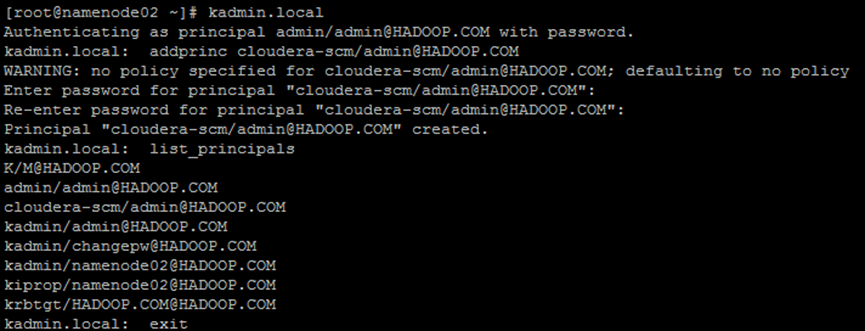

kadmin.local

addprinc cloudera-scm/admin@HADOOP.COM

list_principals

Execution

---

Authenticating as principal admin/admin@HADOOP.COM with password.

kadmin.local: addprinc cloudera-scm/admin@HADOOP.COM

WARNING: no policy specified for cloudera-scm/admin@HADOOP.COM; defaulting to no policy

Enter password for principal "cloudera-scm/admin@HADOOP.COM": [Enter password as cloudera-scm]

Re-enter password for principal "cloudera-scm/admin@HADOOP.COM":

Principal "cloudera-scm/admin@HADOOP.COM" created.

kadmin.local: list_principals

K/M@HADOOP.COM

admin/admin@HADOOP.COM

cloudera-scm/admin@HADOOP.COM

kadmin/admin@HADOOP.COM

kadmin/changepw@HADOOP.COM

kadmin/namenode02@HADOOP.COM

kiprop/namenode02@HADOOP.COM

krbtgt/HADOOP.COM@HADOOP.COM

kadmin.local: exit

---
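The presence of the new principal can also be checked non-interactively by filtering the principal list. In this sketch `has_principal` is a hypothetical helper that reads the list on standard input; the sample input is taken from the list_principals output above.

```shell
# Succeed if the exact principal name appears on a line of its own on stdin.
has_principal() {
    grep -Fxq -- "$1"
}

# On the KDC the input could instead come from:
#   kadmin.local -q "list_principals"
if printf '%s\n' 'K/M@HADOOP.COM' 'admin/admin@HADOOP.COM' 'cloudera-scm/admin@HADOOP.COM' \
    | has_principal 'cloudera-scm/admin@HADOOP.COM'; then
    echo "cloudera-scm/admin@HADOOP.COM exists"
else
    echo "cloudera-scm/admin@HADOOP.COM is missing"
fi
```

Matching whole lines (`-x`) with fixed strings (`-F`) avoids treating the dots in the realm name as wildcards.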

12. Configure Kerberos for the Hadoop-related services

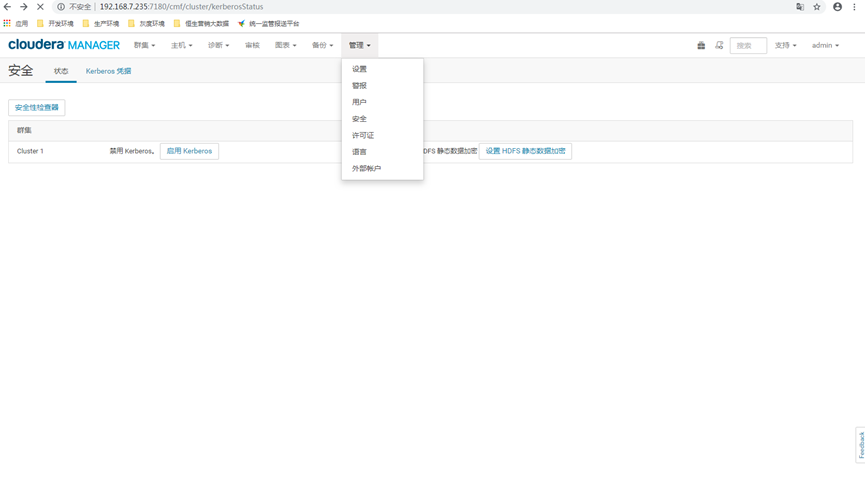

12.1. Open the CDH security management page

Log in to the CM web UI and select [Administration] -> [Security] to open the Enable Kerberos page.

12.2. Confirm that the prerequisite steps have been completed

Click the [Enable Kerberos] button to start the wizard, tick all the checkboxes, and then click [Continue].

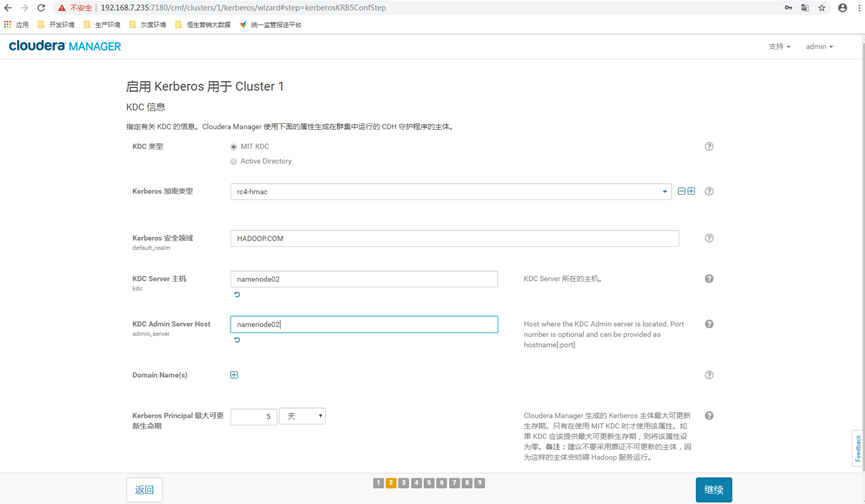

12.3. Fill in the security realm

Fill in the security realm and the host names of the KDC server and the KDC admin server, and then click [Continue].

12.4. Configuration information

If this option is ticked, Cloudera Manager can deploy krb5.conf through its management interface. In practice, however, some settings still had to be modified and synchronized manually, so letting Cloudera Manager manage krb5.conf is not recommended. Click [Continue].

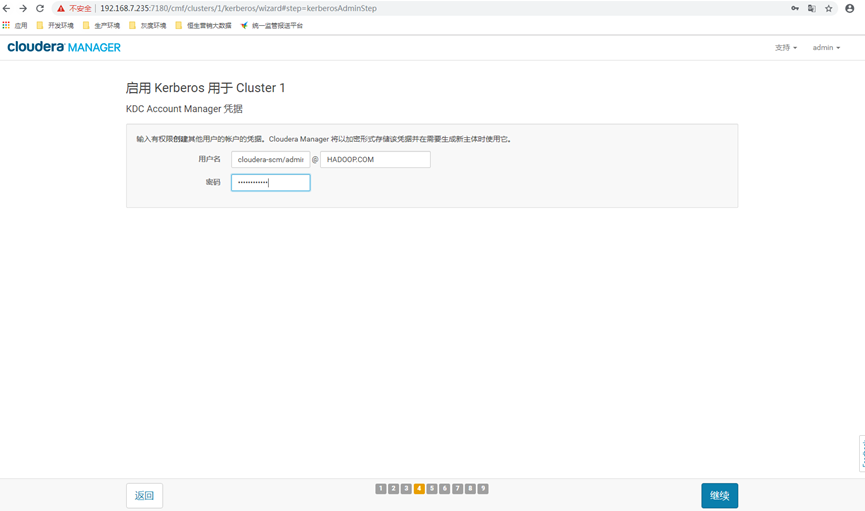

12.5. Enter the CM administrator account

Enter the Kerberos administrator account for Cloudera Manager. It must match the account created earlier:

cloudera-scm/admin@HADOOP.COM

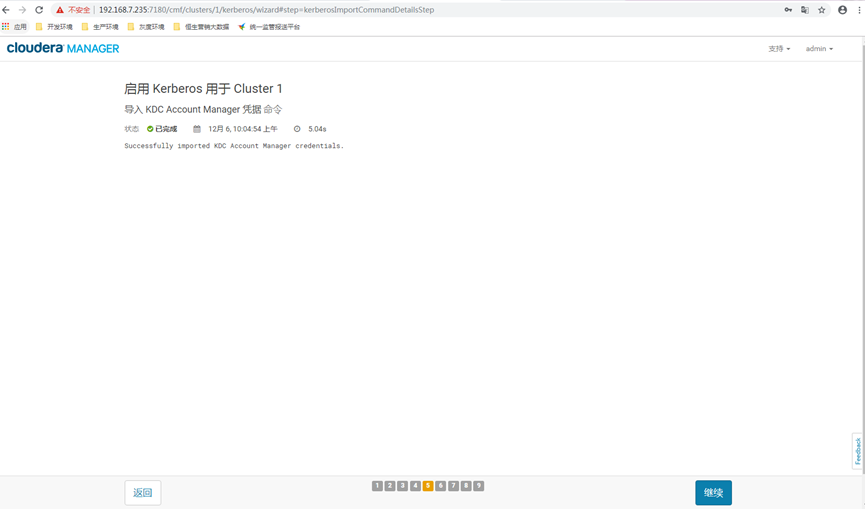

12.6. Click [Continue]

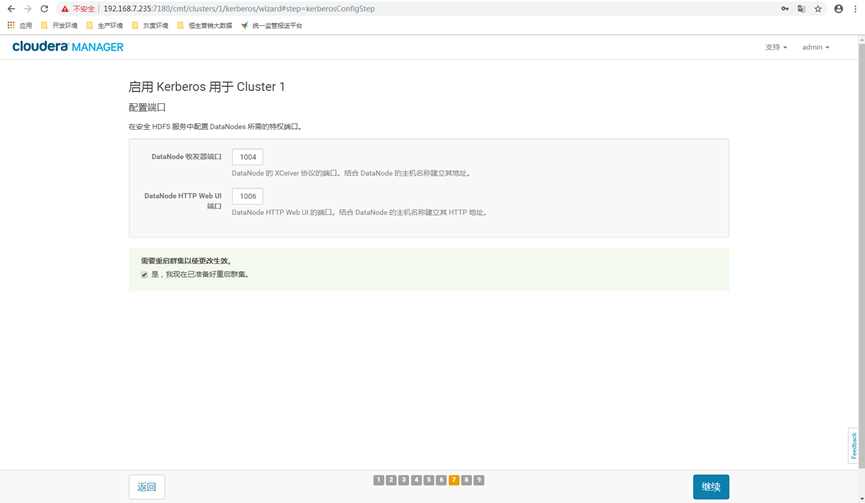

12.7. Service principals

Keep the defaults and click [Continue].

12.8. Restart option

Tick the option to restart the cluster and click [Continue].

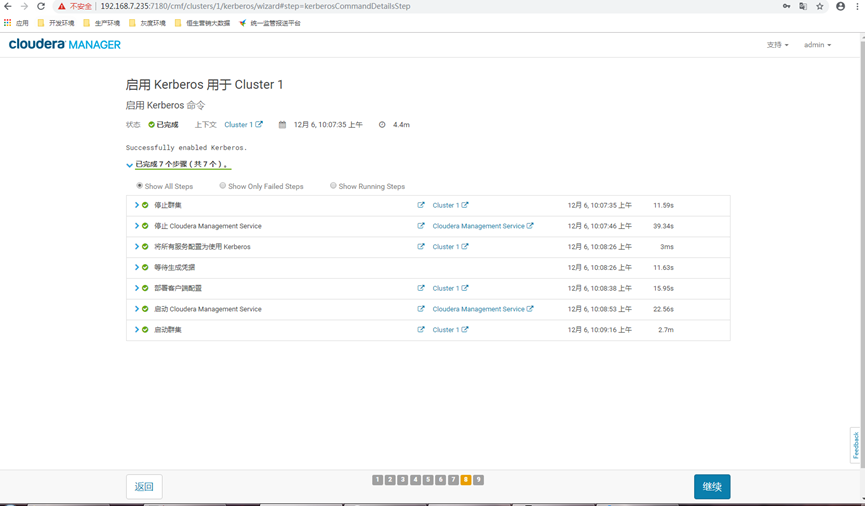

12.9. Restart

Wait for the restart to finish, as shown in the figure below, and then click [Continue].

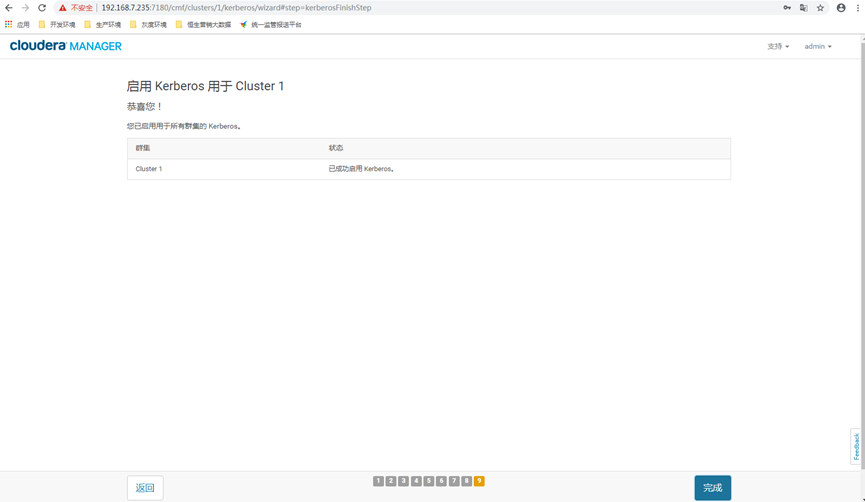

12.10. Finish

Click [Finish].

13. Appendix

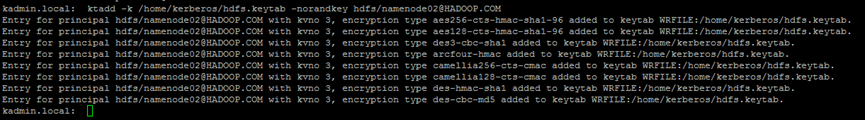

13.1. Generate a keytab file

kadmin.local

listprincs

ktadd -k /home/kerberos/hdfs.keytab -norandkey hdfs/namenode02@HADOOP.COM

13.2. Frequently asked questions

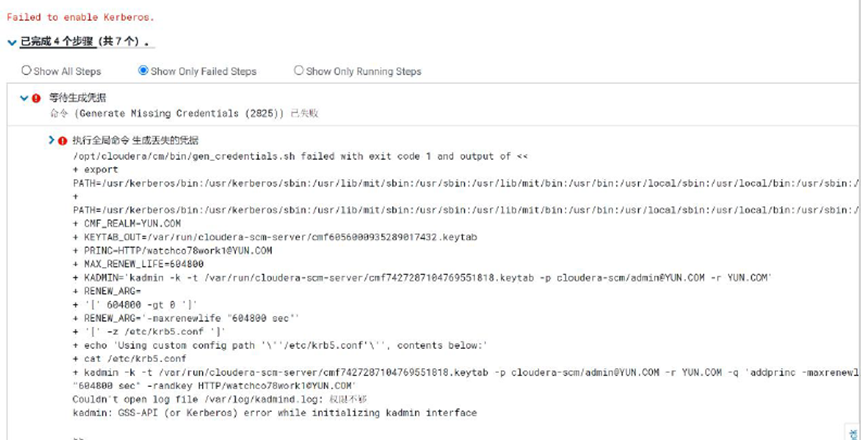

Question 1

kadmin: GSS-API (or Kerberos) error while initializing kadmin interface

Solution

Check whether the NTP service is running normally, and run ntpq -p to check whether the offset is reasonable. If the offset is too large, correct the time on each host with a command such as date -s "2021-11-03 09:49:00".
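Reading the offset by eye across many hosts is tedious, so the check can be scripted. The sketch below parses `ntpq -p` style output, where the offset is the ninth column in milliseconds, and compares it with the 300 seconds of clock skew that Kerberos tolerates by default. The helper name `max_abs_offset_ms` is hypothetical and the sample input is illustrative, not a real measurement.

```shell
# Read `ntpq -p` output on stdin and print the largest absolute offset in milliseconds.
# The offset is the ninth column; the first two lines are headers.
max_abs_offset_ms() {
    awk 'NR > 2 && NF >= 10 {
        off = $9 + 0
        if (off < 0) off = -off
        if (off > max) max = off
    }
    END { print max + 0 }'
}

# Illustrative sample. On a host: ntpq -p | max_abs_offset_ms
offset=$(max_abs_offset_ms <<'EOF'
     remote           refid      st t when poll reach   delay   offset  jitter
==============================================================================
*namenode02      LOCAL(0)         6 u   35   64  377    0.210   -1.532   0.084
EOF
)

# Kerberos tolerates 300 seconds (300000 ms) of clock skew by default.
if awk -v o="$offset" 'BEGIN { exit !(o > 300000) }'; then
    echo "offset ${offset} ms exceeds the Kerberos clock skew limit"
else
    echo "offset ${offset} ms is within the Kerberos clock skew limit"
fi
```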

Question 2

Hue authentication issues

Solution

Run the following commands:

klist -f -c /var/run/hue/hue_krb5_ccache

kadmin.local

modprinc -maxrenewlife 90day krbtgt/HADOOP.COM@HADOOP.COM

list_principals

modprinc -maxrenewlife 90day +allow_renewable hue/namenode01@HADOOP.COM

modprinc -maxrenewlife 90day +allow_renewable hue/namenode02@HADOOP.COM

Question 3

Caused by: ExitCodeException exitCode=24:

File /var/lib/yarn-ce/etc/hadoop must not be world or group writable, but is 777

Solution

chmod -R 751 /var/lib
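To confirm which directory triggers the error, and to verify the result after changing permissions, the group and world write bits can be tested directly. A minimal sketch, assuming GNU find (for `-perm /022`); the helper name is hypothetical and the demonstration uses a temporary directory rather than the real path.

```shell
# Succeed if the path itself is writable by group or others,
# which is the condition the error message complains about.
is_group_or_world_writable() {
    [ -n "$(find "$1" -maxdepth 0 -perm /022)" ]
}

# Demonstration on a temporary directory.
# On the failing host, pass /var/lib/yarn-ce/etc/hadoop instead.
dir=$(mktemp -d)
chmod 777 "$dir"
if is_group_or_world_writable "$dir"; then
    echo "mode 777: group or world writable"
fi
chmod 751 "$dir"
if ! is_group_or_world_writable "$dir"; then
    echo "mode 751: not group or world writable"
fi
rmdir "$dir"
```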

Question 4

Can't create directory /data1/yarn/nm/usercache/hive/appcache/application_1639382956195_0009 - Permission denied

Solution

On the host that reports the error, run the following command:

chown yarn:yarn /data1/yarn/nm/usercache/hive

13.3. Common commands

#Enter the kadmin shell on the KDC

kadmin.local

#Create the KDC database

kdb5_util create -r HADOOP.COM -s

#Create a principal

addprinc cloudera-scm/admin@HADOOP.COM

#Delete a principal

delprinc cloudera-scm/admin@HADOOP.COM

#Change a password

change_password admin/admin@HADOOP.COM

#View a principal's lifetime settings

getprinc zookeeper/datanode05

#List principals

listprincs

#Delete the database

rm -rf /var/kerberos/krb5kdc/principal*

#Generate a keytab file

ktadd -k /home/kerberos/hdfs.keytab -norandkey hdfs/namenode02@HADOOP.COM