
CEPH CSI source code analysis (5) - RBD driver nodeserver analysis (Part I)

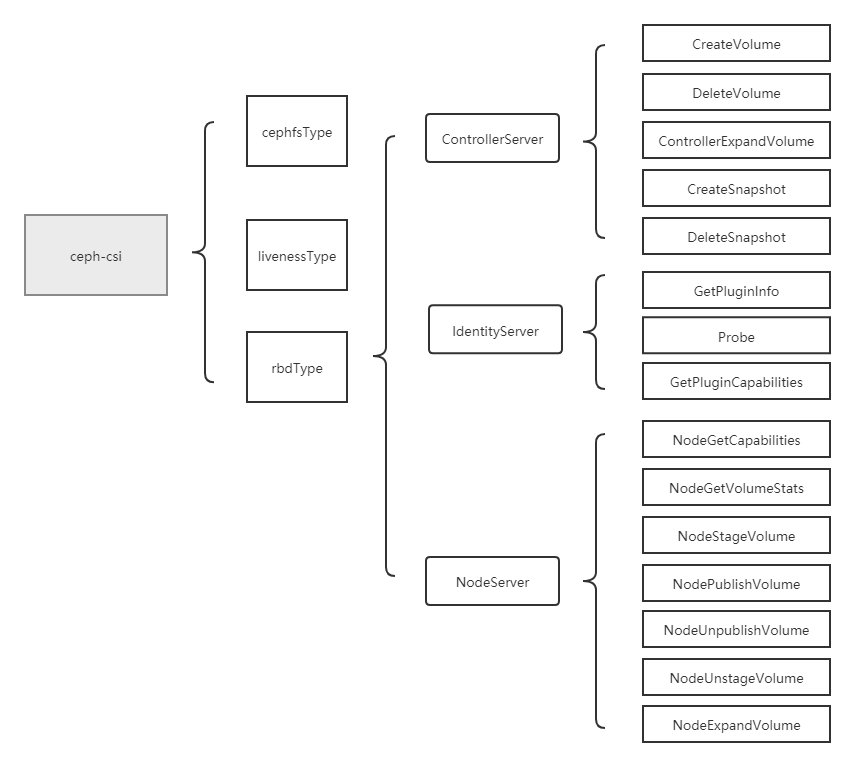

When the CEPH CSI component is started with the driver type rbd, it starts the services of the rbd driver. Depending on the controllerserver and nodeserver parameters, it then starts either the ControllerServer and IdentityServer, or the NodeServer and IdentityServer.

Based on tag v3.0.0:

https://github.com/ceph/ceph-csi/releases/tag/v3.0.0

The rbd driver analysis is divided into four parts: service entry, controllerserver, nodeserver and identityserver.

This section analyzes the nodeserver. The nodeserver mainly provides the following operations, which will be analyzed one by one:

- NodeGetCapabilities
- NodeGetVolumeStats
- NodeStageVolume (map the rbd image and mount stagingPath)
- NodePublishVolume (mount targetPath)
- NodeUnpublishVolume (umount targetPath)
- NodeUnstageVolume (umount stagingPath and unmap the rbd image)
- NodeExpandVolume

This part (Part I) covers NodeGetCapabilities, NodeGetVolumeStats and NodeExpandVolume.

nodeserver analysis

(1)NodeGetCapabilities

brief introduction

NodeGetCapabilities is mainly used to obtain the capabilities of the CEPH CSI driver.

This method is called by kubelet. Before calling NodeExpandVolume, NodeStageVolume, NodeUnstageVolume and similar methods, kubelet first calls NodeGetCapabilities to check whether the CEPH CSI driver supports them.

The kubelet code that makes this call is located in pkg/volume/csi/csi_client.go.

NodeGetCapabilities

The capabilities of the csi driver are registered in the NodeGetCapabilities method.

The code below shows that the csi component supports the following capabilities:

(1) Mounting the storage on the node and removing it from the node (STAGE_UNSTAGE_VOLUME);

(2) Obtaining volume statistics on the node (GET_VOLUME_STATS);

(3) Volume expansion (EXPAND_VOLUME).

// ceph-csi/internal/rbd/nodeserver.go

// NodeGetCapabilities returns the supported capabilities of the node server.
func (ns *NodeServer) NodeGetCapabilities(ctx context.Context, req *csi.NodeGetCapabilitiesRequest) (*csi.NodeGetCapabilitiesResponse, error) {
	return &csi.NodeGetCapabilitiesResponse{
		Capabilities: []*csi.NodeServiceCapability{
			{
				Type: &csi.NodeServiceCapability_Rpc{
					Rpc: &csi.NodeServiceCapability_RPC{
						Type: csi.NodeServiceCapability_RPC_STAGE_UNSTAGE_VOLUME,
					},
				},
			},
			{
				Type: &csi.NodeServiceCapability_Rpc{
					Rpc: &csi.NodeServiceCapability_RPC{
						Type: csi.NodeServiceCapability_RPC_GET_VOLUME_STATS,
					},
				},
			},
			{
				Type: &csi.NodeServiceCapability_Rpc{
					Rpc: &csi.NodeServiceCapability_RPC{
						Type: csi.NodeServiceCapability_RPC_EXPAND_VOLUME,
					},
				},
			},
		},
	}, nil
}
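The following sketch illustrates how a caller such as kubelet might use this response. It uses simplified, hypothetical types (capabilityType, nodeGetCapabilities, supportsCapability) instead of the real csi-spec Go bindings. It checks whether STAGE_UNSTAGE_VOLUME is advertised before deciding whether NodeStageVolume should be called. This is an illustration under those assumptions, not kubelet's actual code in csi_client.go.

```go
package main

import "fmt"

// capabilityType is a hypothetical stand-in for csi.NodeServiceCapability_RPC_Type.
type capabilityType int

const (
	stageUnstageVolume capabilityType = iota
	getVolumeStats
	expandVolume
)

// nodeGetCapabilities mimics the rbd NodeServer above: it advertises the
// same three node capabilities.
func nodeGetCapabilities() []capabilityType {
	return []capabilityType{stageUnstageVolume, getVolumeStats, expandVolume}
}

// supportsCapability reports whether want appears in the advertised list,
// the kind of check a caller performs before invoking an optional node RPC.
func supportsCapability(caps []capabilityType, want capabilityType) bool {
	for _, c := range caps {
		if c == want {
			return true
		}
	}
	return false
}

func main() {
	caps := nodeGetCapabilities()
	if supportsCapability(caps, stageUnstageVolume) {
		fmt.Println("staging supported: call NodeStageVolume before NodePublishVolume")
	} else {
		fmt.Println("staging not supported: call NodePublishVolume directly")
	}
}
```

Because the capability list is fixed in code, the driver's answer never changes at runtime. A caller can therefore query it once and reuse the result for the lifetime of the driver.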

(2)NodeGetVolumeStats

brief introduction

NodeGetVolumeStats checks the mounted volume and returns its usage metrics to kubelet.

Kubelet calls it periodically to collect volume metrics. The periodic call is driven by StartOnce() in pkg/kubelet/server/stats/volume_stat_calculator.go.

NodeGetVolumeStats

Main logic:

(1) Get the volume mount path from the request;

(2) Check whether the mount path is a mount point. This is done by comparing the device ID in the stat results of the path and of its parent directory: if they differ, the path is a mount point;

(3) Collect the usage metrics of the mount path through statfs and return them.
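Step (3) can be illustrated with a small sketch that assumes a Linux node. It calls syscall.Statfs on a path and derives byte and inode usage from the returned block and inode counts. The names volumeUsage and statfsUsage are hypothetical. The formulas are an assumption about what volume.NewMetricsStatFS computes internally, not code copied from Kubernetes.

```go
package main

import (
	"fmt"
	"syscall"
)

// volumeUsage holds the byte and inode figures that are reported to kubelet.
type volumeUsage struct {
	CapacityBytes, AvailableBytes, UsedBytes uint64
	Inodes, InodesFree, InodesUsed           uint64
}

// statfsUsage collects usage for the filesystem containing path via statfs(2).
func statfsUsage(path string) (volumeUsage, error) {
	var st syscall.Statfs_t
	if err := syscall.Statfs(path, &st); err != nil {
		return volumeUsage{}, err
	}
	bsize := uint64(st.Bsize)
	return volumeUsage{
		CapacityBytes:  st.Blocks * bsize,
		AvailableBytes: st.Bavail * bsize,              // free space usable by unprivileged users
		UsedBytes:      (st.Blocks - st.Bfree) * bsize, // blocks in use; reserved free blocks count as neither used nor available
		Inodes:         st.Files,
		InodesFree:     st.Ffree,
		InodesUsed:     st.Files - st.Ffree,
	}, nil
}

func main() {
	u, err := statfsUsage("/")
	if err != nil {
		fmt.Println("statfs failed:", err)
		return
	}
	fmt.Printf("bytes: capacity=%d available=%d used=%d\n", u.CapacityBytes, u.AvailableBytes, u.UsedBytes)
	fmt.Printf("inodes: total=%d free=%d used=%d\n", u.Inodes, u.InodesFree, u.InodesUsed)
}
```

The two groups of figures correspond to the two VolumeUsage entries (BYTES and INODES) returned by NodeGetVolumeStats.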

// internal/csi-common/nodeserver-default.go

// NodeGetVolumeStats returns volume stats.
func (ns *DefaultNodeServer) NodeGetVolumeStats(ctx context.Context, req *csi.NodeGetVolumeStatsRequest) (*csi.NodeGetVolumeStatsResponse, error) {
	// Get storage mount path
	var err error
	targetPath := req.GetVolumePath()
	if targetPath == "" {
		err = fmt.Errorf("targetpath %v is empty", targetPath)
		return nil, status.Error(codes.InvalidArgument, err.Error())
	}
	/*
		volID := req.GetVolumeId()

		TODO: Map the volumeID to the targetpath.

		CephFS:
		   we need secret to connect to the ceph cluster to get the volumeID from volume
		   Name, however `secret` field/option is not available in NodeGetVolumeStats spec,
		   Below issue covers this request and once its available, we can do the validation
		   as per the spec.
		   https://github.com/container-storage-interface/spec/issues/371

		RBD:
		   Below issue covers this request for RBD and once its available, we can do the validation
		   as per the spec.
		   https://github.com/ceph/ceph-csi/issues/511
	*/

	// Check whether the storage mount path is mountpoint
	isMnt, err := util.IsMountPoint(targetPath)
	if err != nil {
		if os.IsNotExist(err) {
			return nil, status.Errorf(codes.InvalidArgument, "targetpath %s doesnot exist", targetPath)
		}
		return nil, err
	}
	if !isMnt {
		return nil, status.Errorf(codes.InvalidArgument, "targetpath %s is not mounted", targetPath)
	}

	// Get the Metrics of the storage mount path through stat
	cephMetricsProvider := volume.NewMetricsStatFS(targetPath)
	volMetrics, volMetErr := cephMetricsProvider.GetMetrics()
	if volMetErr != nil {
		return nil, status.Error(codes.Internal, volMetErr.Error())
	}

	available, ok := (*(volMetrics.Available)).AsInt64()
	if !ok {
		klog.Errorf(util.Log(ctx, "failed to fetch available bytes"))
	}
	capacity, ok := (*(volMetrics.Capacity)).AsInt64()
	if !ok {
		klog.Errorf(util.Log(ctx, "failed to fetch capacity bytes"))
		return nil, status.Error(codes.Unknown, "failed to fetch capacity bytes")
	}
	used, ok := (*(volMetrics.Used)).AsInt64()
	if !ok {
		klog.Errorf(util.Log(ctx, "failed to fetch used bytes"))
	}
	inodes, ok := (*(volMetrics.Inodes)).AsInt64()
	if !ok {
		klog.Errorf(util.Log(ctx, "failed to fetch available inodes"))
		return nil, status.Error(codes.Unknown, "failed to fetch available inodes")
	}
	inodesFree, ok := (*(volMetrics.InodesFree)).AsInt64()
	if !ok {
		klog.Errorf(util.Log(ctx, "failed to fetch free inodes"))
	}
	inodesUsed, ok := (*(volMetrics.InodesUsed)).AsInt64()
	if !ok {
		klog.Errorf(util.Log(ctx, "failed to fetch used inodes"))
	}

	return &csi.NodeGetVolumeStatsResponse{
		Usage: []*csi.VolumeUsage{
			{
				Available: available,
				Total:     capacity,
				Used:      used,
				Unit:      csi.VolumeUsage_BYTES,
			},
			{
				Available: inodesFree,
				Total:     inodes,
				Used:      inodesUsed,
				Unit:      csi.VolumeUsage_INODES,
			},
		},
	}, nil
}

IsMountPoint

IsMountPoint determines whether the path is a mount point by calling IsLikelyNotMountPoint and negating its result.

// internal/util/util.go

// IsMountPoint checks if the given path is mountpoint or not.
func IsMountPoint(p string) (bool, error) {
	dummyMount := mount.New("")
	notMnt, err := dummyMount.IsLikelyNotMountPoint(p)
	if err != nil {
		return false, status.Error(codes.Internal, err.Error())
	}
	return !notMnt, nil
}

dummyMount.IsLikelyNotMountPoint() main logic:

(1) Perform a stat on the specified path;

(2) Perform a stat on the parent directory of the specified path;

(3) Compare the device IDs in the two stat results: if they differ, the path is a mount point.

// vendor/k8s.io/utils/mount/mount_linux.go

// IsLikelyNotMountPoint determines if a directory is not a mountpoint.
// It is fast but not necessarily ALWAYS correct. If the path is in fact
// a bind mount from one part of a mount to another it will not be detected.
// It also can not distinguish between mountpoints and symbolic links.
// mkdir /tmp/a /tmp/b; mount --bind /tmp/a /tmp/b; IsLikelyNotMountPoint("/tmp/b")
// will return true. When in fact /tmp/b is a mount point. If this situation
// is of interest to you, don't use this function...
func (mounter *Mounter) IsLikelyNotMountPoint(file string) (bool, error) {
	stat, err := os.Stat(file)
	if err != nil {
		return true, err
	}
	rootStat, err := os.Stat(filepath.Dir(strings.TrimSuffix(file, "/")))
	if err != nil {
		return true, err
	}
	// If the directory has a different device as parent, then it is a mountpoint.
	if stat.Sys().(*syscall.Stat_t).Dev != rootStat.Sys().(*syscall.Stat_t).Dev {
		return false, nil
	}
	return true, nil
}

(3)NodeExpandVolume

brief introduction

NodeExpandVolume handles the node-side part of volume expansion and resizes rbd volumes. It performs the operations on the node that make the expanded capacity visible there.

Volume expansion is actually split into two steps:

1. The CSI ControllerExpandVolume expands the underlying storage (the rbd image).
2. The CSI NodeExpandVolume completes the expansion on the node. When the volume mode is Filesystem, it synchronizes the new size of the rbd image to the rbd/nbd device and grows the xfs/ext filesystem on it. When the volume mode is Block, no node-side expansion is required.

NodeExpandVolume

Main process:

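(1) Check the request parameters (volume ID and volume path) and acquire the per-volume lock;

(2) Check whether volumePath is a mount point. As the code comment explains, volumePath is the targetPath for a block PVC and the stagingPath for a filesystem PVC. If it is a mount point, the volume is treated as a block PVC and success is returned without further work; otherwise it is treated as a filesystem PVC;

(3) Get the devicePath (the rbd/nbd device backing the volume);

(4) Call resizefs.NewResizeFs to initialize the resizer;

(5) Call resizer.Resize to grow the filesystem.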
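In the code that follows, NodeExpandVolume first takes a per-volume lock with ns.VolumeLocks.TryAcquire. It returns codes.Aborted if another operation on the same volume ID is already in progress. Such a lock can be sketched as a mutex-protected set of volume IDs. The type volumeLocks and its methods only echo the calls in the code; this simplified implementation is an illustration, not ceph-csi's util package.

```go
package main

import (
	"fmt"
	"sync"
)

// volumeLocks tracks volume IDs that currently have an operation in flight.
type volumeLocks struct {
	mu    sync.Mutex
	inUse map[string]struct{}
}

func newVolumeLocks() *volumeLocks {
	return &volumeLocks{inUse: make(map[string]struct{})}
}

// TryAcquire marks volumeID as busy and returns true, or returns false
// immediately (without blocking) if another operation already holds it.
func (vl *volumeLocks) TryAcquire(volumeID string) bool {
	vl.mu.Lock()
	defer vl.mu.Unlock()
	if _, busy := vl.inUse[volumeID]; busy {
		return false
	}
	vl.inUse[volumeID] = struct{}{}
	return true
}

// Release frees volumeID so that later operations can acquire it.
func (vl *volumeLocks) Release(volumeID string) {
	vl.mu.Lock()
	defer vl.mu.Unlock()
	delete(vl.inUse, volumeID)
}

func main() {
	locks := newVolumeLocks()
	fmt.Println(locks.TryAcquire("vol-1")) // true
	fmt.Println(locks.TryAcquire("vol-1")) // false: the RPC would return codes.Aborted
	locks.Release("vol-1")
	fmt.Println(locks.TryAcquire("vol-1")) // true
}
```

Failing fast instead of blocking lets the CO retry the RPC later, rather than piling up goroutines waiting on the same volume. "vol-1" is a made-up volume ID.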

func (ns *NodeServer) NodeExpandVolume(ctx context.Context, req *csi.NodeExpandVolumeRequest) (*csi.NodeExpandVolumeResponse, error) {
	volumeID := req.GetVolumeId()
	if volumeID == "" {
		return nil, status.Error(codes.InvalidArgument, "volume ID must be provided")
	}
	volumePath := req.GetVolumePath()
	if volumePath == "" {
		return nil, status.Error(codes.InvalidArgument, "volume path must be provided")
	}

	if acquired := ns.VolumeLocks.TryAcquire(volumeID); !acquired {
		klog.Errorf(util.Log(ctx, util.VolumeOperationAlreadyExistsFmt), volumeID)
		return nil, status.Errorf(codes.Aborted, util.VolumeOperationAlreadyExistsFmt, volumeID)
	}
	defer ns.VolumeLocks.Release(volumeID)

	// volumePath is targetPath for block PVC and stagingPath for filesystem.
	// check the path is mountpoint or not, if it is
	// mountpoint treat this as block PVC or else it is filesystem PVC
	// TODO remove this once ceph-csi supports CSI v1.2.0 spec
	notMnt, err := mount.IsNotMountPoint(ns.mounter, volumePath)
	if err != nil {
		if os.IsNotExist(err) {
			return nil, status.Error(codes.NotFound, err.Error())
		}
		return nil, status.Error(codes.Internal, err.Error())
	}
	if !notMnt {
		return &csi.NodeExpandVolumeResponse{}, nil
	}

	devicePath, err := getDevicePath(ctx, volumePath)
	if err != nil {
		return nil, status.Error(codes.Internal, err.Error())
	}

	diskMounter := &mount.SafeFormatAndMount{Interface: ns.mounter, Exec: utilexec.New()}
	// TODO check size and return success or error
	volumePath += "/" + volumeID
	resizer := resizefs.NewResizeFs(diskMounter)
	ok, err := resizer.Resize(devicePath, volumePath)
	if !ok {
		return nil, fmt.Errorf("rbd: resize failed on path %s, error: %v", req.GetVolumePath(), err)
	}
	return &csi.NodeExpandVolumeResponse{}, nil
}

resizer.Resize

Resize detects the filesystem format of the device and calls the matching resize method. If the device has no filesystem, it returns without resizing.
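The format dispatch performed by Resize can be summarized as a mapping from filesystem format to the command that grows it. The hypothetical helper resizeCommand below only builds the argument list and executes nothing. It makes explicit that ext3/ext4 are resized through the device path while xfs is resized through the mount path. The paths in main are made-up examples.

```go
package main

import "fmt"

// resizeCommand returns the command line that would grow a filesystem of the
// given format. An empty format means the device is unformatted, so nothing
// needs to be resized and (nil, nil) is returned.
func resizeCommand(format, devicePath, deviceMountPath string) ([]string, error) {
	switch format {
	case "":
		return nil, nil
	case "ext3", "ext4":
		// resize2fs works on the block device.
		return []string{"resize2fs", devicePath}, nil
	case "xfs":
		// xfs_growfs works on the mounted filesystem; -d grows the data section.
		return []string{"xfs_growfs", "-d", deviceMountPath}, nil
	}
	return nil, fmt.Errorf("resize of format %s is not supported for device %s mounted at %s",
		format, devicePath, deviceMountPath)
}

func main() {
	for _, format := range []string{"ext4", "xfs", "btrfs"} {
		cmd, err := resizeCommand(format, "/dev/rbd0", "/mnt/staging/vol")
		fmt.Println(format, cmd, err)
	}
}
```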

func (resizefs *ResizeFs) Resize(devicePath string, deviceMountPath string) (bool, error) {
	format, err := resizefs.mounter.GetDiskFormat(devicePath)
	if err != nil {
		formatErr := fmt.Errorf("ResizeFS.Resize - error checking format for device %s: %v", devicePath, err)
		return false, formatErr
	}

	// If disk has no format, there is no need to resize the disk because mkfs.*
	// by default will use whole disk anyways.
	if format == "" {
		return false, nil
	}

	klog.V(3).Infof("ResizeFS.Resize - Expanding mounted volume %s", devicePath)
	switch format {
	case "ext3", "ext4":
		return resizefs.extResize(devicePath)
	case "xfs":
		return resizefs.xfsResize(deviceMountPath)
	}
	return false, fmt.Errorf("ResizeFS.Resize - resize of format %s is not supported for device %s mounted at %s", format, devicePath, deviceMountPath)
}

xfsResize

The xfs filesystem is grown with the xfs_growfs command, which takes the mount path of the filesystem rather than the device path.

func (resizefs *ResizeFs) xfsResize(deviceMountPath string) (bool, error) {
	args := []string{"-d", deviceMountPath}
	output, err := resizefs.mounter.Exec.Command("xfs_growfs", args...).CombinedOutput()
	if err == nil {
		klog.V(2).Infof("Device %s resized successfully", deviceMountPath)
		return true, nil
	}

	resizeError := fmt.Errorf("resize of device %s failed: %v. xfs_growfs output: %s", deviceMountPath, err, string(output))
	return false, resizeError
}

extResize

The ext filesystem is grown with the resize2fs command, which takes the device path.

func (resizefs *ResizeFs) extResize(devicePath string) (bool, error) {
	output, err := resizefs.mounter.Exec.Command("resize2fs", devicePath).CombinedOutput()
	if err == nil {
		klog.V(2).Infof("Device %s resized successfully", devicePath)
		return true, nil
	}

	resizeError := fmt.Errorf("resize of device %s failed: %v. resize2fs output: %s", devicePath, err, string(output))
	return false, resizeError
}

RBD driver nodeserver analysis (Part I) - Summary

This section analyzes the NodeGetCapabilities, NodeGetVolumeStats and NodeExpandVolume methods. Their functions are as follows: