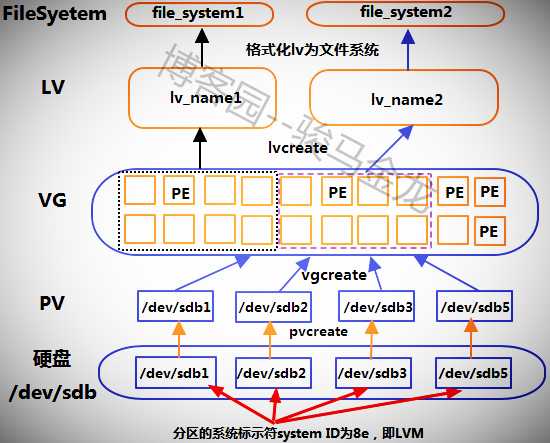

6.1 Concepts and mechanisms of LVM

LVM (Logical Volume Manager) makes partitions elastic: a partition managed by LVM can be grown or shrunk at any time.

LVM requires the lvm2 package, which is usually preinstalled on CentOS.

- PV (Physical Volume)

Partition the hard disk first (do not format the partition with a file system), then use the pvcreate command to turn the partition into a PV. The partition's system ID must be 8e, which is the LVM type identifier (8E00 on a GPT disk).

- VG (Volume Group)

Use the vgcreate command to combine several PVs into a volume group. A VG contains multiple PVs and behaves like a single disk assembled from several partitions. When the VG is created, all of its space is divided into PEs of the specified size. LVM stores data in units of PEs, much as a file system stores data in blocks.

- PE (Physical Extent)

A PE is the unit of storage in a VG. This is where data is actually stored.

- LV (Logical Volume)

If a VG is like a combined hard disk, then an LV is like a partition carved out of that VG. A VG contains many PEs. You can specify how many PEs go into an LV, or you can give the LV's size directly (for example, in megabytes). Creating an LV is equivalent to creating a partition, and you only need to format the LV to turn it into an ordinary file system.

In short, without LVM you partition the hard disk and then format each partition with a file system. With LVM, you give a partition the LVM type identifier and turn it into a PV that LVM can manage. A PV still behaves much like a partition. Several PVs are then combined into a VG, which behaves like a disk. Finally, the VG is divided into LVs. An LV is a partition managed by LVM, and it becomes a file system once you format it.

- LE (Logical Extent)

A PE is a physical storage unit. An LE is a logical storage unit, meaning the storage unit inside an LV, and it is the same size as a PE. Carving an LV out of a VG really means allocating PEs from the VG. Once those PEs belong to the LV, they are called LEs instead of PEs.

LVM can resize an LV because it can add free PEs from the VG to the LV, or release PEs from the LV back to the VG as free PEs.

6.2 How LVM writes data

An LV is carved out of a VG, so its PEs may well come from several PVs. LVM can store data in an LV in several ways. Two of them are:

- Linear mode: data fills the PEs on one PV first, then continues with the PEs on the next PV.

- Striped mode: data is split into chunks that are written in turn across all the PVs backing the LV. This is similar to RAID 0 and gives good read/write performance.

Striped mode performs well, but it is not recommended here for two reasons. First, LVM's focus is flexible capacity rather than performance, and RAID is the right tool when performance is the goal. Second, expanding or shrinking a striped LV is more cumbersome. Linear mode is the default.
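The shell sketch below shows where a given piece of an LV would land under each mode. It is a simplified model and not LVM's real allocator, and both function names are hypothetical. `linear_locate` assumes the LV's extents were allocated in order, filling every PE of the first PV before moving to the next. That stops being true once an LV has been resized, as the `pvdisplay -m` output later in this article shows. `striped_locate` models RAID 0 style round robin at the granularity of one stripe chunk.

```shell
#!/bin/sh
# Simplified model of how LV data maps onto PVs (hypothetical helpers,
# not part of LVM).

# linear_locate LE COUNT...
#   Print "pv_index pe_offset" for logical extent LE, assuming the LV was
#   allocated linearly: all COUNT PEs of PV 0 first, then PV 1, and so on.
#   Indices start at 0. Returns 1 if LE lies beyond the listed PVs.
linear_locate() {
    le=$1
    shift
    pv=0
    for count in "$@"; do
        if [ "$le" -lt "$count" ]; then
            echo "$pv $le"
            return 0
        fi
        le=$((le - count))   # skip past this PV's extents
        pv=$((pv + 1))
    done
    return 1
}

# striped_locate CHUNK NPVS
#   Print "pv_index chunk_offset" for stripe chunk CHUNK of an LV striped
#   across NPVS PVs: chunks are placed round robin, like RAID 0.
striped_locate() {
    echo "$(($1 % $2)) $(($1 / $2))"
}

linear_locate 700 610 191 91   # LE 700 -> PV 1, PE offset 90 (under this model)
striped_locate 5 2             # chunk 5 -> PV 1, chunk offset 2
```

Under this model, growing a linear LV only needs free PEs somewhere in the VG. A striped LV generally needs free space on several PVs at once, which is part of why striped LVs are more awkward to resize.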

6.3 LVM implementation diagram

6.4 Implementing LVM

The figure above serves as the example.

First, change the type of the partitions /dev/sdb{1,2,3,5} on /dev/sdb to the LVM identifier. /dev/sdb4 is kept for the expansion experiment later. The identifier is 8e on an MBR partition table and 8E00 on a GPT partition table. 8300 is the ordinary Linux file system type, which /dev/sdb4 still has in the listing below.

The following partition information comes from a disk with a GPT partition table.

```
[root@server2 ~]# gdisk -l /dev/sdb
GPT fdisk (gdisk) version 0.8.6

Number  Start (sector)    End (sector)  Size       Code
   1            2048        20000767   9.5 GiB     8E00
   2        20000768        26292223   3.0 GiB     8E00
   3        26292224        29296639   1.4 GiB     8E00
   4        29296640        33202175   1.9 GiB     8300
   5        33202176        37109759   1.9 GiB     8E00
```
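If a disk has many partitions, you can filter such a listing instead of reading it by eye. The following is a minimal sketch with a hypothetical helper name. It assumes the column layout shown above, where the type code is the sixth whitespace-separated field because the size is printed as a value plus a unit. Real gdisk output may also include a Name column after the code, which this sketch ignores.

```shell
#!/bin/sh
# lvm_partitions: read `gdisk -l` output on stdin and print the numbers of
# partitions whose type code is 8E00 (Linux LVM), one per line.
# Assumes fields: Number, Start, End, Size value, Size unit, Code, ...
lvm_partitions() {
    awk '$1 ~ /^[0-9]+$/ && toupper($6) == "8E00" { print $1 }'
}

# Example with the partition lines from the listing above; on a live
# system this would be: gdisk -l /dev/sdb | lvm_partitions
lvm_partitions <<'EOF'
Number  Start (sector)    End (sector)  Size       Code
   1            2048        20000767   9.5 GiB     8E00
   2        20000768        26292223   3.0 GiB     8E00
   3        26292224        29296639   1.4 GiB     8E00
   4        29296640        33202175   1.9 GiB     8300
   5        33202176        37109759   1.9 GiB     8E00
EOF
```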

6.4.1 Managing PVs

There are several commands for managing PVs: pvscan, pvdisplay, pvcreate, pvremove, and pvmove.

They are simple and rarely need any options.

| Function | Command |
|---|---|
| Create a PV | pvcreate |
| Scan for and list all PVs | pvscan |
| List PV attributes | pvdisplay |
| Remove a PV | pvremove |
| Move data off a PV | pvmove |

pvscan searches for the PVs that are currently available and caches the results after scanning. pvdisplay shows the details of each PV, such as its name, its size, and the VG it belongs to.

Create PVs directly from the partitions /dev/sdb{1,2,3,5} above.

```
[root@server2 ~]# pvcreate -y /dev/sdb1 /dev/sdb2 /dev/sdb3 /dev/sdb5   # the -y option answers yes automatically
Wiping ext4 signature on /dev/sdb1.
  Physical volume "/dev/sdb1" successfully created
Wiping ext2 signature on /dev/sdb2.
  Physical volume "/dev/sdb2" successfully created
  Physical volume "/dev/sdb3" successfully created
  Physical volume "/dev/sdb5" successfully created
```

Use pvscan to see which PVs exist and what their basic properties are.

```
[root@server2 ~]# pvscan
  PV /dev/sdb1                      lvm2 [9.54 GiB]
  PV /dev/sdb2                      lvm2 [3.00 GiB]
  PV /dev/sdb5                      lvm2 [1.86 GiB]
  PV /dev/sdb3                      lvm2 [1.43 GiB]
  Total: 4 [15.83 GiB] / in use: 0 [0   ] / in no VG: 4 [15.83 GiB]
```

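The last line summarizes three figures: the total capacity of all PVs, the capacity of PVs that already belong to a VG ("in use"), and the capacity of PVs that are not yet in any VG ("in no VG").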

Use pvdisplay to view the attributes of one of the PVs.

```
[root@server2 ~]# pvdisplay /dev/sdb1
  "/dev/sdb1" is a new physical volume of "9.54 GiB"
  --- NEW Physical volume ---
  PV Name               /dev/sdb1
  VG Name
  PV Size               9.54 GiB
  Allocatable           NO
  PE Size               0
  Total PE              0
  Free PE               0
  Allocated PE          0
  PV UUID               fRChUf-CL8d-2UwC-d94R-xa8a-MRYa-yvgFJ9
```

pvdisplay also has an important option, -m, which shows how the PEs on a device are used. Here is an example of its output:

```
[root@server2 ~]# pvdisplay -m /dev/sdb2
  --- Physical volume ---
  PV Name               /dev/sdb2
  VG Name               firstvg
  PV Size               3.00 GiB / not usable 16.00 MiB
  Allocatable           yes
  PE Size               16.00 MiB
  Total PE              191
  Free PE               100
  Allocated PE          91
  PV UUID               uVgv3q-ANyy-02M1-wmGf-zmFR-Y16y-qLgNMV

  --- Physical Segments ---
  Physical extent 0 to 0:          # PE 0 is in use; PE numbers within a PV start at 0
    Logical volume      /dev/firstvg/first_lv
    Logical extents     450 to 450 # this PE holds LE 450 of the LV
  Physical extent 1 to 100:        # PEs 1-100 on /dev/sdb2 are free
    FREE
  Physical extent 101 to 190:      # PEs 101-190 are in use and hold LEs 551-640 of the LV
    Logical volume      /dev/firstvg/first_lv
    Logical extents     551 to 640
```

Once you know how the PEs are distributed, you can easily move PE data between devices with the pvmove command. For details on pvmove, see section 6.6.

Next, test pvremove: remove /dev/sdb5, then create it as a PV again.

```
[root@server2 ~]# pvremove /dev/sdb5
  Labels on physical volume "/dev/sdb5" successfully wiped
[root@server2 ~]# pvcreate /dev/sdb5
  Physical volume "/dev/sdb5" successfully created
```

6.4.2 Managing VGs

Several commands are also used to manage VGs.

| Function | Command |
|---|---|
| Create a VG | vgcreate |
| Scan for and list all VGs | vgscan |
| List VG attributes | vgdisplay |
| Remove (delete) a VG | vgremove |
| Remove a PV from a VG | vgreduce |
| Add a PV to a VG | vgextend |
| Modify VG properties | vgchange |

The VG commands work as follows:

- vgscan searches for VGs and shows their basic attributes.
- vgcreate creates a VG.
- vgdisplay lists the details of a VG.
- vgremove deletes an entire VG.
- vgextend extends a VG by adding PVs to it.
- vgreduce removes PVs from a VG.
- vgchange changes VG properties, such as switching a VG between the active and inactive states.

Create a VG and add the four PVs /dev/sdb{1,2,3,5} to it. A VG must be given a name. A VG sits at roughly the same level as a disk, and disks also have names, such as /dev/sdb and /dev/sdc. When creating a VG, you can use the -s option to set the PE size. The default is 4 MiB.

```
[root@server2 ~]# vgcreate -s 16M firstvg /dev/sdb{1,2,3,5}
  Volume group "firstvg" successfully created
```

The VG created here is named firstvg and uses a 16 MiB PE size. Once a VG exists, its PE size is hard to change. vgchange -s can change it only when no extents would need to be moved, so recreating the VG is usually the better choice.

Note that in LVM1 each logical volume could hold at most 65534 extents, so the PE size set the maximum LV size. LVM2 has no such limit. Today "LVM" generally means LVM2, which is also the default version.
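The LVM1 limit works out to the extent cap multiplied by the PE size. This small sketch assumes the cap of 65534 extents applies per logical volume, which is how the vgcreate manual page describes it. The helper name is hypothetical, and the calculation only matters for legacy LVM1 metadata.

```shell
#!/bin/sh
# lvm1_max_lv_mib PE_MIB: largest LV (in MiB) under the LVM1 limit of
# 65534 extents per logical volume, for a PE size given in MiB.
lvm1_max_lv_mib() {
    echo $((65534 * $1))
}

lvm1_max_lv_mib 4    # default 4 MiB PEs: 262136 MiB, roughly 256 GiB
lvm1_max_lv_mib 16   # 16 MiB PEs (as in firstvg): 1048544 MiB, roughly 1 TiB
```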

Each VG corresponds to a directory under /dev, here /dev/firstvg, but that directory is created only when an LV is created in the VG. Each LV then gets a link in this directory that points to a /dev/dm-N device.

Now take a look at vgscan and vgdisplay.

```
[root@server2 ~]# vgscan
  Reading all physical volumes.  This may take a while...
  Found volume group "firstvg" using metadata type lvm2
```

```
[root@server2 ~]# vgdisplay firstvg
  --- Volume group ---
  VG Name               firstvg
  System ID
  Format                lvm2
  Metadata Areas        4
  Metadata Sequence No  1
  VG Access             read/write
  VG Status             resizable
  MAX LV                0
  Cur LV                0
  Open LV               0
  Max PV                0
  Cur PV                4
  Act PV                4
  VG Size               15.80 GiB
  PE Size               16.00 MiB
  Total PE              1011
  Alloc PE / Size       0 / 0
  Free  PE / Size       1011 / 15.80 GiB
  VG UUID               GLwZTC-zUj9-mKas-CJ5m-Xf91-5Vqu-oEiJGj
```

Remove one PV, such as /dev/sdb5, from the VG and run vgdisplay again. The VG now has one PV fewer, and its PE count has dropped accordingly.

```
[root@server2 ~]# vgreduce firstvg /dev/sdb5
  Removed "/dev/sdb5" from volume group "firstvg"
```

```
[root@server2 ~]# vgdisplay firstvg
  --- Volume group ---
  VG Name               firstvg
  System ID
  Format                lvm2
  Metadata Areas        3
  Metadata Sequence No  2
  VG Access             read/write
  VG Status             resizable
  MAX LV                0
  Cur LV                0
  Open LV               0
  Max PV                0
  Cur PV                3
  Act PV                3
  VG Size               13.94 GiB
  PE Size               16.00 MiB
  Total PE              892
  Alloc PE / Size       0 / 0
  Free  PE / Size       892 / 13.94 GiB
  VG UUID               GLwZTC-zUj9-mKas-CJ5m-Xf91-5Vqu-oEiJGj
```

Then add /dev/sdb5 back to the VG.

```
[root@server2 ~]# vgextend firstvg /dev/sdb5
  Volume group "firstvg" successfully extended
```

vgchange sets the activation state of a volume group with the -a option. The state mainly matters for the VG's LVs: when a VG is deactivated, its LVs are deactivated too and cannot be used until the VG is activated again.

Set firstvg to active (-a y):

shell> vgchange -a y firstvg

Set firstvg to inactive (-a n):

shell> vgchange -a n firstvg

6.4.3 Managing LVs

With a VG in place, you can partition it, which means creating LVs. The commands for managing LVs are similar.

| Function | Command |
|---|---|
| Create an LV | lvcreate |
| Scan for and list all LVs | lvscan |
| List LV attributes | lvdisplay |
| Remove (delete) an LV | lvremove |
| Reduce LV capacity | lvreduce (or lvresize) |
| Increase LV capacity | lvextend (or lvresize) |
| Change LV capacity | lvresize |

The main options of the lvcreate command are:

```
lvcreate {-L size(M/G) | -l PEnum} -n lv_name vg_name
```

Options:

- -L: create the LV by size, that is, how much space to allocate to it
- -l: create the LV by number of PEs, that is, how many PEs to allocate to it
- -n: specify the LV name

The VG created earlier has 1011 PEs with a total capacity of 15.8 GiB.

```
[root@server2 ~]# vgdisplay | grep PE
  PE Size               16.00 MiB
  Total PE              1011
  Alloc PE / Size       0 / 0
  Free  PE / Size       1011 / 15.80 GiB
```

Use -L and -l to create two LVs named first_lv and sec_lv. The first uses -L and the second uses -l.

```
[root@server2 ~]# lvcreate -L 5G -n first_lv firstvg
  Logical volume "first_lv" created.
[root@server2 ~]# lvcreate -l 160 -n sec_lv firstvg
  Logical volume "sec_lv" created.
```
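-L and -l are two views of the same allocation, converted through the PE size. The sketch below shows the arithmetic for firstvg's 16 MiB PEs. The helper names are hypothetical, and sizes are given in MiB so that shell integer arithmetic is enough. It assumes that lvcreate rounds a -L size up to a whole number of extents.

```shell
#!/bin/sh
# Convert between sizes and extents for a given PE size.
# Sizes are in MiB so the arithmetic stays in integers.

# size_to_extents SIZE_MIB PE_MIB: extents needed for SIZE_MIB, rounded
# up to a whole extent.
size_to_extents() {
    echo $((($1 + $2 - 1) / $2))
}

# extents_to_size EXTENTS PE_MIB: size in MiB of EXTENTS extents.
extents_to_size() {
    echo $(($1 * $2))
}

size_to_extents 5120 16   # -L 5G  -> 320 extents (first_lv's "Current LE 320")
extents_to_size 160 16    # -l 160 -> 2560 MiB, i.e. sec_lv's 2.50 GiB
```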

After an LV is created, a symbolic link named after the LV appears in /dev/firstvg, and another link appears in /dev/mapper. Both point to a /dev/dm-N device.

```
[root@server2 ~]# ls -l /dev/firstvg/
total 0
lrwxrwxrwx 1 root root 7 Jun  9 23:41 first_lv -> ../dm-0
lrwxrwxrwx 1 root root 7 Jun  9 23:42 sec_lv -> ../dm-1
[root@server2 ~]# ll /dev/mapper/
total 0
crw------- 1 root root 10, 236 Jun  6 02:44 control
lrwxrwxrwx 1 root root       7 Jun  9 23:41 firstvg-first_lv -> ../dm-0
lrwxrwxrwx 1 root root       7 Jun  9 23:42 firstvg-sec_lv -> ../dm-1
```

Use lvscan and lvdisplay to view LV information. To show a specific LV, lvdisplay needs the LV's path (such as /dev/firstvg/first_lv) rather than just its name. Without arguments, lvdisplay shows all LVs.

```
[root@server2 ~]# lvscan
  ACTIVE            '/dev/firstvg/first_lv' [5.00 GiB] inherit
  ACTIVE            '/dev/firstvg/sec_lv' [2.50 GiB] inherit
[root@server2 ~]# lvdisplay /dev/firstvg/first_lv
  --- Logical volume ---
  LV Path                /dev/firstvg/first_lv
  LV Name                first_lv
  VG Name                firstvg
  LV UUID                f3cRXJ-vucN-aAw3-HRbX-Fhnq-mW6c-kmL7WA
  LV Write Access        read/write
  LV Creation host, time server2.longshuai.com, 2017-06-09 23:41:42 +0800
  LV Status              available
  # open                 0
  LV Size                5.00 GiB
  Current LE             320
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     8192
  Block device           253:0
```

The other LV commands are demonstrated later, in the sections on expanding and shrinking LVM.

6.4.4 Formatting an LV with a file system

Next, format the LV with a file system and mount it.

```
[root@server2 ~]# mke2fs -t ext4 /dev/firstvg/first_lv
```

How can you tell which file system an LV has been formatted with?

One way is to mount it first and then check.

```
[root@server2 ~]# mount /dev/firstvg/first_lv /mnt
[root@server2 ~]# mount | grep /mnt
/dev/mapper/firstvg-first_lv on /mnt type ext4 (rw,relatime,data=ordered)
```

You can also use file -s. However, the entries under /dev/firstvg and /dev/mapper are symbolic links to block devices under /dev, so you must point file -s at the block device itself. Otherwise it only reports that the path is a symbolic link.

```
[root@server2 ~]# file -s /dev/dm-0
/dev/dm-0: Linux rev 1.0 ext4 filesystem data, UUID=f2a3b608-f4e9-431b-8c34-9c75eaf7d3b5 (needs journal recovery) (extents) (64bit) (large files) (huge files)
```
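You don't need to know the dm number in advance, because the link can be resolved first. This is a minimal sketch that assumes a `readlink` supporting `-f`, as GNU coreutils does. The helper name is hypothetical. The file(1) option `-L` (follow symlinks) combined with `-s` achieves the same thing in one command.

```shell
#!/bin/sh
# lv_real_device PATH: resolve an LV link such as /dev/firstvg/first_lv
# (or /dev/mapper/firstvg-first_lv) to the block device it points to.
lv_real_device() {
    readlink -f "$1"
}

# Typical use on a system with the LV from this section:
#   file -s "$(lv_real_device /dev/firstvg/first_lv)"
# or let file follow the link itself:
#   file -sL /dev/firstvg/first_lv
```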

Now take another look at /dev/sdb.

```
[root@server2 ~]# lsblk -f /dev/sdb
NAME                   FSTYPE      LABEL UUID                                   MOUNTPOINT
sdb
├─sdb1                 LVM2_member       fRChUf-CL8d-2UwC-d94R-xa8a-MRYa-yvgFJ9
│ ├─firstvg-first_lv   ext4              f2a3b608-f4e9-431b-8c34-9c75eaf7d3b5   /mnt
│ └─firstvg-sec_lv
├─sdb2                 LVM2_member       uVgv3q-ANyy-02M1-wmGf-zmFR-Y16y-qLgNMV
├─sdb3                 LVM2_member       L1byov-fbjK-M48t-Uabz-Ljn8-Q74C-ncdv8h
├─sdb4
└─sdb5                 LVM2_member       Lae2vc-VfyS-QoNS-rz2h-IXUv-xKQc-Q6YCxQ
```

6.5 Expanding LVM capacity

The biggest advantage of LVM is its flexibility, and that flexibility is mostly about expanding capacity. This is also the main reason to use LVM.

In essence, expansion adds free PEs from the VG to an LV. An LV can therefore be expanded as long as the VG has free PEs. Even when it has none, you can create a new PV and add it to the VG to supply more free PEs.

Expansion involves two key steps:

(1). Use lvextend or lvresize to add PEs (capacity) to the LV.

(2). Use resize2fs (xfs_growfs for XFS) to grow the file system to the new LV size. This step changes the file system, not the LVM configuration.
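The two steps can be written as a dry-run plan that prints the commands without running them. This is a sketch with a hypothetical helper name. It only covers ext2/3/4 and XFS, and it assumes xfs_growfs is given the mount point of the mounted file system.

```shell
#!/bin/sh
# grow_plan FSTYPE LV MOUNTPOINT DELTA
#   Print (without running) the two expansion commands for an LV.
#   Hypothetical helper; DELTA uses lvextend -L syntax such as +5G.
grow_plan() {
    fstype=$1 lv=$2 mnt=$3 delta=$4
    echo "lvextend -L $delta $lv"               # step (1): grow the LV
    case $fstype in                             # step (2): grow the file system
        ext2|ext3|ext4) echo "resize2fs $lv" ;;
        xfs)            echo "xfs_growfs $mnt" ;;   # XFS grows while mounted
        *)              echo "no grow command known for $fstype" >&2; return 1 ;;
    esac
}

grow_plan ext4 /dev/firstvg/first_lv /mnt +5G
```

lvextend also has a `-r` (`--resizefs`) option that resizes the file system together with the LV, which combines both steps into one command.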

For example, use /dev/sdb4, which has not been used so far, as the source for expanding first_lv. First create /dev/sdb4 as a PV and add it to firstvg.

```
[root@server2 ~]# parted /dev/sdb toggle 4 lvm
[root@server2 ~]# pvcreate /dev/sdb4
[root@server2 ~]# vgextend firstvg /dev/sdb4
```

Check the number of free PEs in firstvg.

```
[root@server2 ~]# vgdisplay firstvg | grep -i pe
  Open LV               1
  PE Size               16.00 MiB
  Total PE              1130
  Alloc PE / Size       480 / 7.50 GiB
  Free  PE / Size       650 / 10.16 GiB
```

The VG now has 650 free PEs, 10.16 GiB in total. Add all of them to first_lv. There are two ways to do this: by capacity or by number of PEs.

```
[root@server2 ~]# umount /dev/firstvg/first_lv
[root@server2 ~]# lvextend -L +5G /dev/firstvg/first_lv     # add by capacity
[root@server2 ~]# vgdisplay firstvg | grep -i pe
  Open LV               1
  PE Size               16.00 MiB
  Total PE              1130
  Alloc PE / Size       800 / 12.50 GiB
  Free  PE / Size       330 / 5.16 GiB
[root@server2 ~]# lvextend -l +330 /dev/firstvg/first_lv    # add by PE count
[root@server2 ~]# lvscan
  ACTIVE            '/dev/firstvg/first_lv' [15.16 GiB] inherit
  ACTIVE            '/dev/firstvg/sec_lv' [2.50 GiB] inherit
```
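You can check the PE bookkeeping in this transcript by hand. This sketch assumes firstvg's 16 MiB PEs and that first_lv started at 320 extents, its 5 GiB creation size. The function and variable names are illustrative only. The GiB figures are printed with two decimals so they can be compared with what vgdisplay and lvscan show.

```shell
#!/bin/sh
# extents_to_gib EXTENTS PE_MIB: size in GiB, printed with two decimals.
extents_to_gib() {
    awk -v n="$1" -v pe="$2" 'BEGIN { printf "%.2f\n", n * pe / 1024 }'
}

pe=16                          # firstvg uses 16 MiB PEs
free=650                       # free PEs before extending
added=$((5 * 1024 / pe))       # lvextend -L +5G takes 320 PEs
free=$((free - added))         # 330 PEs left
extents_to_gib "$free" "$pe"   # 5.16, matching "330 / 5.16 GiB"
total=$((320 + added + free))  # 320 (original) + 320 + 330 = 970 extents
extents_to_gib "$total" "$pe"  # 15.16, matching lvscan for first_lv
```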

lvresize can also grow an LV, and it is used the same way as lvextend. For example:

```
shell> lvresize -L +5G /dev/firstvg/first_lv
shell> lvresize -l +330 /dev/firstvg/first_lv
```

Mount first_lv and check the capacity of its file system.

```
[root@server2 ~]# mount /dev/mapper/firstvg-first_lv /mnt
[root@server2 ~]# df -hT /mnt
Filesystem                   Type  Size  Used Avail Use% Mounted on
/dev/mapper/firstvg-first_lv ext4  4.8G   20M  4.6G   1% /mnt
```

The capacity has not increased. Why? Only the LV has grown, and the file system on it has not. Use resize2fs to grow an ext file system, or xfs_growfs for an XFS file system.

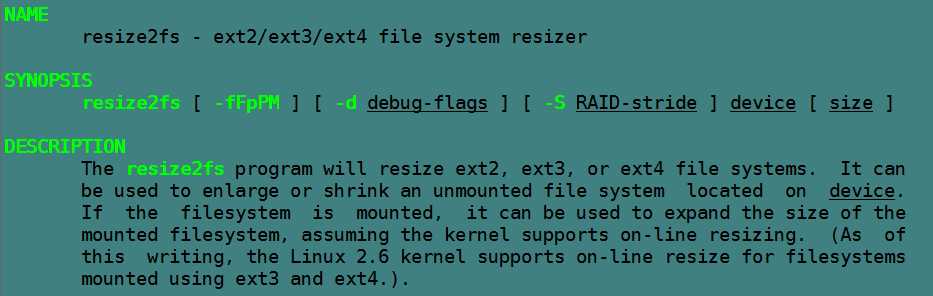

First, take a quick look at the usage of resize2fs.

As shown, resize2fs can grow or shrink the file system on an unmounted device. With Linux 2.6 and later kernels, it can also grow a mounted file system online. It cannot shrink one online, as the experiment confirms, so unmount the file system before shrinking it.

Usually no options are needed: just run resize2fs DEVICE. If that fails, try the -f option to force the resize.

```
[root@server2 ~]# resize2fs /dev/firstvg/first_lv
resize2fs 1.42.9 (28-Dec-2013)
Filesystem at /dev/firstvg/first_lv is mounted on /mnt; on-line resizing required
old_desc_blocks = 1, new_desc_blocks = 2
The filesystem on /dev/firstvg/first_lv is now 3973120 blocks long.
```

```
[root@server2 ~]# df -hT | grep -i /mnt
/dev/mapper/firstvg-first_lv ext4       15G   25M   15G   1% /mnt
```

Checking again shows that the size is now 15G.

6.6 Shrinking LVM capacity

Shrinking is rarely done in practice, and XFS does not support shrinking at all. Still, walking through a shrink deepens your understanding of LVM and shows how pvdisplay and pvmove are really used.

first_lv currently has a capacity of 15.16 GiB.

```
[root@server2 ~]# lvscan
  ACTIVE            '/dev/firstvg/first_lv' [15.16 GiB] inherit
  ACTIVE            '/dev/firstvg/sec_lv' [2.50 GiB] inherit
```

The PVs are used as follows:

```
[root@server2 ~]# pvscan
  PV /dev/sdb1   VG firstvg   lvm2 [9.53 GiB / 0    free]
  PV /dev/sdb2   VG firstvg   lvm2 [2.98 GiB / 0    free]
  PV /dev/sdb3   VG firstvg   lvm2 [1.42 GiB / 0    free]
  PV /dev/sdb5   VG firstvg   lvm2 [1.86 GiB / 0    free]
  PV /dev/sdb4   VG firstvg   lvm2 [1.86 GiB / 0    free]
  Total: 5 [17.66 GiB] / in use: 5 [17.66 GiB] / in no VG: 0 [0   ]
```

Suppose you want to reclaim the 2.98 GiB of /dev/sdb2. Shrinking reverses the steps of expanding.

(1). First, unmount the device and use resize2fs to shrink the file system to the target size.

Here 2.98 GiB is to be removed from the current 15.16 GiB, so the target file system size is 12.18 GiB, or about 12470 MiB. resize2fs does not accept sizes with a decimal point, so either convert the value to an integer in a smaller unit or simply round it to 12G. Leave some margin when calculating the size where possible. This example therefore shrinks by 3.16 GiB to a 12G target.

```
[root@server2 ~]# umount /mnt
[root@server2 ~]# resize2fs /dev/firstvg/first_lv 12G
resize2fs 1.42.9 (28-Dec-2013)
Please run 'e2fsck -f /dev/firstvg/first_lv' first.
```

resize2fs asks you to run e2fsck -f /dev/firstvg/first_lv first. This checks the file system so that the resize does not damage data.

```
[root@server2 ~]# e2fsck -f /dev/firstvg/first_lv
e2fsck 1.42.9 (28-Dec-2013)
Pass 1: Checking inodes, blocks, and sizes
Pass 2: Checking directory structure
Pass 3: Checking directory connectivity
Pass 4: Checking reference counts
Pass 5: Checking group summary information
/dev/firstvg/first_lv: 11/999424 files (0.0% non-contiguous), 101892/3973120 blocks
```

```
[root@server2 ~]# resize2fs /dev/firstvg/first_lv 12G
resize2fs 1.42.9 (28-Dec-2013)
Resizing the filesystem on /dev/firstvg/first_lv to 3145728 (4k) blocks.
The filesystem on /dev/firstvg/first_lv is now 3145728 blocks long.
```

(2). Shrink the LV. Use "-L" to specify the capacity to remove, or "-l" to specify the number of PEs to remove.

Here, -L is used.

```
[root@server2 ~]# lvreduce -L -3G /dev/firstvg/first_lv
  Rounding size to boundary between physical extents: 2.99 GiB
  WARNING: Reducing active logical volume to 12.16 GiB
  THIS MAY DESTROY YOUR DATA (filesystem etc.)
Do you really want to reduce first_lv? [y/n]: y
  Size of logical volume firstvg/first_lv changed from 15.16 GiB (970 extents) to 12.16 GiB (779 extents).
  Logical volume first_lv successfully resized.
```

The warning says the operation may destroy data. What matters is the size of the file system, not only the amount of data stored in it. If the file system extends past the new end of the LV, data will be lost. If the file system already fits within the reduced LV, nothing is lost. This is how LVM can change a partition's size without losing data. Step (1) has already shrunk the file system to 12G, which is smaller than the new 12.16 GiB LV, and this LV holds no data anyway, so answer y to confirm the reduction.
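The warning comes down to a one-line check: the file system must be no larger than the LV after it is reduced. This sketch uses a hypothetical helper with sizes in MiB and plugs in the numbers from this example, where first_lv goes from 970 to 779 extents of 16 MiB. It also shows why the margin in step (1) matters. The unrounded estimate of about 12470 MiB would have been slightly too large for a 779-extent LV of 12464 MiB.

```shell
#!/bin/sh
# fits_after_shrink FS_MIB LV_EXTENTS PE_MIB
#   Succeed only if a file system of FS_MIB fits inside an LV of
#   LV_EXTENTS extents; shrink the LV only when this holds.
fits_after_shrink() {
    [ "$1" -le $(($2 * $3)) ]
}

lv_after=$((970 - 191))   # 779 extents: first_lv minus the 191 PEs of /dev/sdb2
if fits_after_shrink $((12 * 1024)) "$lv_after" 16; then
    echo "12G file system fits in $((lv_after * 16)) MiB"
fi
if ! fits_after_shrink 12470 "$lv_after" 16; then
    echo "12470 MiB would not fit: leave more margin"
fi
```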

(3). Use pvmove to move PEs.

The previous step released about 3G worth of PEs, but which PVs did those PEs come from? Can /dev/sdb2 be removed now?

First, check which PVs have free PEs.

```
[root@server2 ~]# pvdisplay | grep 'PV Name\|Free'
  PV Name               /dev/sdb1
  Free PE               0
  PV Name               /dev/sdb2
  Free PE               0
  PV Name               /dev/sdb3
  Free PE               91
  PV Name               /dev/sdb5
  Free PE               0
  PV Name               /dev/sdb4
  Free PE               100
```

The free PEs are on /dev/sdb4 and /dev/sdb3, so /dev/sdb2 cannot be removed yet.

The next task is to move the in-use PEs on /dev/sdb2 to other devices so that /dev/sdb2 becomes completely free. The pvmove command does this. It goes through many steps, so it may take some time.

Because /dev/sdb4 has 100 free PEs, move 100 PEs from /dev/sdb2 to /dev/sdb4.

```
[root@server2 ~]# pvmove /dev/sdb2:0-99 /dev/sdb4
```

This moves the 100 PEs numbered 0-99 on /dev/sdb2 to /dev/sdb4. Without the "-99" part, only PE 0 would be moved. You can specify target PE positions on /dev/sdb4 in the same way.
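The size of a range can be checked against the destination's free PEs before moving anything. This sketch uses a hypothetical helper. It prints the pvmove command in the PV:FIRST-LAST form used above only if the destination has enough free PEs, and the free counts come from the pvdisplay output above.

```shell
#!/bin/sh
# pvmove_plan SRC FIRST LAST DEST DEST_FREE
#   Print the pvmove command for PEs FIRST..LAST of SRC, after checking
#   that DEST has at least that many free PEs (hypothetical helper).
pvmove_plan() {
    count=$(($3 - $2 + 1))
    if [ "$count" -gt "$5" ]; then
        echo "$4 has only $5 free PEs, $count needed" >&2
        return 1
    fi
    echo "pvmove $1:$2-$3 $4"
}

pvmove_plan /dev/sdb2 0 99 /dev/sdb4 100     # 100 PEs into /dev/sdb4's 100 free PEs
pvmove_plan /dev/sdb2 100 190 /dev/sdb3 91   # the remaining 91 PEs into /dev/sdb3
```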

Next, move the remaining PEs on /dev/sdb2 to /dev/sdb3. First, check which PE numbers on /dev/sdb2 are still in use.

```
[root@server2 ~]# pvdisplay -m /dev/sdb2
  --- Physical volume ---
  PV Name               /dev/sdb2
  VG Name               firstvg
  PV Size               3.00 GiB / not usable 16.00 MiB
  Allocatable           yes
  PE Size               16.00 MiB
  Total PE              191
  Free PE               100
  Allocated PE          91
  PV UUID               uVgv3q-ANyy-02M1-wmGf-zmFR-Y16y-qLgNMV

  --- Physical Segments ---
  Physical extent 0 to 99:
    FREE
  Physical extent 100 to 100:
    Logical volume      /dev/firstvg/first_lv
    Logical extents     778 to 778
  Physical extent 101 to 190:
    Logical volume      /dev/firstvg/first_lv
    Logical extents     551 to 640
```

PEs 100 to 190 are in use. These are the PEs to move to /dev/sdb3.
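Reading the ranges off `pvdisplay -m` by hand is easy here, but the step can also be scripted. This awk-based sketch has a hypothetical helper name. It assumes the segment layout shown above, where each `Physical extent A to B:` line is followed by either `FREE` or a `Logical volume` line. It prints the allocated ranges and merges ranges that touch, which turns `100 to 100` and `101 to 190` into the single range 100-190 used with pvmove below.

```shell
#!/bin/sh
# used_pe_ranges: read `pvdisplay -m` output on stdin and print the
# allocated PE ranges as "first-last", merging ranges that are adjacent.
used_pe_ranges() {
    awk '
        # Start of a segment: remember its range until the next line says
        # whether it is free or allocated.
        /Physical extent [0-9]+ to [0-9]+:/ { s = $3 + 0; e = $5 + 0; pending = 1; next }
        pending && /FREE/ { pending = 0; next }          # free segment: skip
        pending && /Logical volume/ {                    # allocated segment
            pending = 0
            if (have && s == hi + 1) { hi = e; next }    # touches previous range: merge
            if (have) out = out (out == "" ? "" : " ") lo "-" hi
            lo = s; hi = e; have = 1
        }
        END {
            if (have) out = out (out == "" ? "" : " ") lo "-" hi
            print out
        }'
}

# Segment part of the listing above; on a live system use:
#   pvdisplay -m /dev/sdb2 | used_pe_ranges
used_pe_ranges <<'EOF'
  --- Physical Segments ---
  Physical extent 0 to 99:
    FREE
  Physical extent 100 to 100:
    Logical volume      /dev/firstvg/first_lv
    Logical extents     778 to 778
  Physical extent 101 to 190:
    Logical volume      /dev/firstvg/first_lv
    Logical extents     551 to 640
EOF
```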

```
[root@server2 ~]# pvmove /dev/sdb2:100-190 /dev/sdb3
```

/dev/sdb2 is now completely free. It can be removed from the VG and then removed as a PV.

```
[root@server2 ~]# pvdisplay /dev/sdb2
  --- Physical volume ---
  PV Name               /dev/sdb2
  VG Name               firstvg
  PV Size               3.00 GiB / not usable 16.00 MiB
  Allocatable           yes
  PE Size               16.00 MiB
  Total PE              191
  Free PE               191
  Allocated PE          0
  PV UUID               uVgv3q-ANyy-02M1-wmGf-zmFR-Y16y-qLgNMV
```
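Before removing the PV from the VG, it is worth confirming that no PEs are still allocated on it, as the output above shows. This is a minimal sketch of that check with a hypothetical helper name. It assumes the `Allocated PE` line format shown above, and the guarded commands are the same vgreduce and pvremove calls used in the next two steps.

```shell
#!/bin/sh
# pv_allocated_pe: read `pvdisplay` output for one PV on stdin and print
# its "Allocated PE" count; fail if no such line is found.
pv_allocated_pe() {
    awk '/Allocated PE/ { print $3; found = 1 } END { if (!found) exit 1 }'
}

# Guarded version of steps (4) and (5); runs only when nothing is allocated:
#   if [ "$(pvdisplay /dev/sdb2 | pv_allocated_pe)" = 0 ]; then
#       vgreduce firstvg /dev/sdb2 && pvremove /dev/sdb2
#   fi
printf '  Free PE               191\n  Allocated PE          0\n' | pv_allocated_pe
```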

(4). Remove the PV from the VG.

```
[root@server2 ~]# vgreduce firstvg /dev/sdb2
```

(5). Remove the PV.

```
[root@server2 ~]# pvremove /dev/sdb2
```

/dev/sdb2 has now been completely removed.