background

Because the new environment is online, data needs to be migrated from the old environment to the new environment.

Scheme Research

Scheme 1: copy data directory

First, observe the directory structure of ClickHouse on the file system (configured in the configuration file / ECT / ClickHouse server / config. XML) ), for ease of viewing, only the data and metadata directories are retained.

- The data directory stores data, a directory for each database and a subdirectory for each internal table.

- Metadata directory stores metadata, that is, database and table structure. among

- database.sql is the DDL that creates the database (ATTACH DATABASE default ENGINE = Ordinary)

- database/table.sql is the DDL for creating tables (attach table...)

The DDL here uses the ATTACH statement. Based on this information, directly copy the data and metadata directories (excluding the system) to the new cluster to realize data migration. Test with a small table to verify that it is feasible.

Operation process

- Pack the data and metadata data of the corresponding database or table on the hard disk of the source cluster

- Copy to the directory corresponding to the target cluster

- Restart Clickhouse server

Scheme 2: use remote table function

In addition to querying regular tables, ClickHouse can also use table functions to build some special "tables", in which the remote function can be used to query the tables of another ClickHouse.

It is easy to use:

- SELECT * FROM remote('addresses_expr', db, table, 'user', 'password') LIMIT 10;

Therefore, data migration can be realized with this function: - INSERT INTO <local_database>.<local_table> SELECT * FROM remote('remote_clickhouse_addr', <remote_database>, <remote_table>, '<remote_user>', '<remote_password>')

Operation process

- In the system. Of the source cluster Tables table queries database, table, DDL, partition, table engine and other information

- On the target cluster, run DDL to create the table, and then run the above migration statement to copy the data

- Traverse all tables and execute 2

Scheme 3: Clickhouse copier (adopted)

Clickhouse copier is an official data migration tool for data migration between multiple clusters.

Official website document configuration

Introduction to Clickhouse copier workflow

There are two configuration files read, one is zk's zookeeper XML configuration, a task saved in zk for the migration task XML configuration.

- Connect to zk and receive:

- Copy job.

- Status of the replication job.

- Read the task written to zk XML execution.

- Clickhouse copier tracks / task/path/description in zk and applies them. Note: the content in description is task XML configuration

- According to each running process, the "closest" partition of the source cluster will be selected, then the data will be copied to the target cluster, and the data will be re partitioned if necessary.

to configure

zookeeper.xml

Configure zoomeeper in the custom path of the server with Clickhouse copier installed xml

The configuration file consists of log level information and configuration information of the source side cluster zk. This configuration file is used for Clickhouse copier startup.

<yandex>

<logger>

<level>trace</level>

<size>100M</size>

<count>3</count>

</logger>

<zookeeper>

<node index="1">

<host>zkip1</host>

<port>12181</port>

</node>

<node index="2">

<host>zkip2</host>

<port>12181</port>

</node>

<node index="3">

<host>zkip3</host>

<port>12181</port>

</node>

</zookeeper>

</yandex>

task.xml

This configuration file is used to write to zk node before Clickhouse copier runs.

<yandex>

<remote_servers>

<!-- Source cluster configuration information -->

<src_manyun_ch_cluster>

<!-- Slice 1 -->

<shard>

<internal_replication>true</internal_replication>

<!-- Copy 1 -->

<replica>

<host>src_ck_ip1</host>

<port>9000</port>

<user>root</user>

<password>pass</password>

</replica>

<!-- Copy 2 -->

<replica>

<host>src_ck_ip2</host>

<port>9000</port>

<user>root</user>

<password>pass</password>

</replica>

</shard>

<!-- Slice 2 -->

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>src_ck_ip3</host>

<port>9000</port>

<user>root</user>

<password>pass</password>

</replica>

<replica>

<host>src_ck_ip4</host>

<port>9000</port>

<user>root</user>

<password>pass</password>

</replica>

</shard>

</src_manyun_ch_cluster>

<!-- Target cluster configuration information -->

<des_manyun_ch_cluster>

<!-- Slice 1 -->

<shard>

<internal_replication>true</internal_replication>

<!-- Copy 1 -->

<replica>

<host>des_ck_ip1</host>

<port>9000</port>

<user>root</user>

<password>pass</password>

</replica>

<!-- Copy 2 -->

<replica>

<host>des_ck_ip2</host>

<port>9000</port>

<user>root</user>

<password>pass</password>

</replica>

</shard>

<!-- Slice 2 -->

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>des_ck_ip3</host>

<port>9000</port>

<user>root</user>

<password>pass</password>

</replica>

<replica>

<host>des_ck_ip4</host>

<port>9000</port>

<user>root</user>

<password>pass</password>

</replica>

</shard>

<!-- Slice 3 -->

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>des_ck_ip5</host>

<port>9000</port>

<user>root</user>

<password>pass</password>

</replica>

<replica>

<host>des_ck_ip6</host>

<port>9000</port>

<user>root</user>

<password>pass</password>

</replica>

</shard>

</des_manyun_ch_cluster>

</remote_servers>

<!-- copier Maximum processes -->

<max_workers>8</max_workers>

<!-- Synchronization table information -->

<tables>

<!-- table_test_job Create custom category labels for,It is only used to distinguish different table synchronization tasks -->

<table_test_job>

<!-- pull information,Source table location -->

<cluster_pull>src_manyun_ch_cluster</cluster_pull>

<database_pull>src Library name</database_pull>

<table_pull>src Table name</table_pull>

<!-- push Information, target table location -->

<cluster_push>des_manyun_ch_cluster</cluster_push>

<database_push>des Library name</database_push>

<table_push>des Table name</table_push>

<!-- Destination table engine If the target cluster does not have a table, it will be based on this information engine Configuration creation table -->

<engine> ENGINE=ReplicatedMergeTree('/clickhouse/tables/{shard}/Library name/Table name', '{replica}')

PARTITION BY toYYYYMM(time)

ORDER BY (device_guid, point_code, time, device_type)

SETTINGS index_granularity = 8192

</engine>

<!-- The partition key used to insert data into the target cluster is inserted into the specified partition of the target -->

<sharding_key>02</sharding_key>

<!-- Filter criteria that can be added when querying source data -->

<!--

<where_condition> CounterID != 0 </where_condition>

-->

<!-- Specify the specific partition to be synchronized. If this parameter is not available, all partitions will be synchronized by default,partition Value is system.part Corresponding table partition column -->

<!--

<enabled_partitions></enabled_partitions>

-->

<!--Specify 202111 partition-->

<enabled_partitions>

<partition>'202111'</partition>

</enabled_partitions>

</table_test_job>

</tables>

</yandex>

Set task XML write zk

On the corresponding zk cluster node, execute

./zkCli.sh -server localhost:12181 create /clickhouse/copier_task ./zkCli.sh -server localhost:12181 create /clickhouse/copier_task/task # Create task information ./zkCli.sh -server localhost:12181 create /clickhouse/copier_task/task/description "`cat task.xml`" # View task information ./zkCli.sh -server localhost:12181 get /clickhouse/copier_task/task/description # Update task information ./zkCli.sh -server localhost:12181 set /clickhouse/copier_task/task/description "`cat task.xml`"

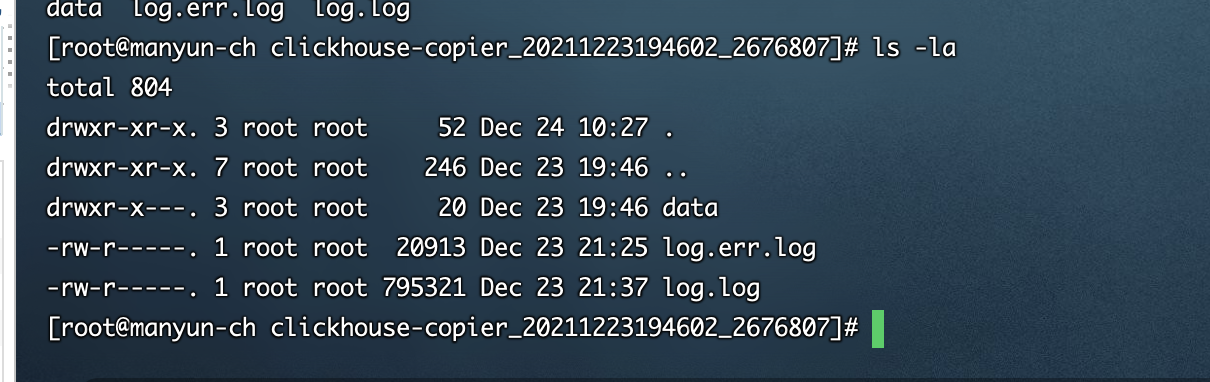

Execution process

Run in the custom path of the server with Clickhouse copier installed

If you have questions, you can Clickhouse copier -- help

The following command will read the task configured by zk cluster configuration / clickhouse/copytasks/test/description XML and save the log in logs under the current directory

Start task

Background operation

clickhouse-copier --config zookeeper.xml --task-path /clickhouse/copier_task/task --base-dir ./logs &

Log analysis

- First, the task generates a read_shard_n is used to read the data of the specified table on each shard. The task log is as follows:

2021.12.23 19:47:44.967318 [ 2676812 ] {} <Debug> ClusterCopier: Will copy 2 partitions from shard N2 (having a replica 172.16.0.122:9000, pull table ck_default.point_data_local of cluster src_manyun_ch_cluster

2021.12.23 19:50:24.851663 [ 2676811 ] {} <Debug> ClusterCopier: There are 2 destination partitions in shard N1 (having a replica 172.16.0.18:9000, pull table ck_default.point_data_local of cluster src_manyun_ch_cluster

- Then, according to the partition of the table_ The cityHash64 of all fields of key and orderby takes the result of the remaining 10 as the condition to split the data. The task log is as follows:

2021.12.23 19:50:24.855281 [ 2676811 ] {} <Debug> ClusterCopier: Checking shard N1 (having a replica 172.16.0.18:9000, pull table ck_default.point_data_local of cluster src_manyun_ch_cluster for partition 202110 piece 0 existence, executing query: SELECT 1 FROM _local.`.read_shard_0.des_manyun_ch_cluster.ck_default.point_data_test_local` WHERE (toYYYYMM(time) = (202110 AS partition_key)) AND (cityHash64(`device_guid`, `point_code`, `time`, `device_type`) % 10 = 0 ) LIMIT 1

Note: all splits here are from% 2esplit% 2edes_ manyun_ ch_ cluster%2Eck_ default%2Epoint_ data_ test_ local_ split is performed in piece and executed asynchronously. The data is streaming and no data is directly dropped.

3. Corresponding to these 10 pieces, there will be 10 tables corresponding to them at the destination. The table name is test_copy_piece_n (n is 0,1,2,..., 9), the task log is as follows:

2021.12.23 19:50:43.641097 [ 2676807 ] {} <Debug> StorageDistributed (.read_shard_0.des_manyun_ch_cluster.ck_default.point_data_test_local): Auto-increment is 0

2021.12.23 19:50:43.641393 [ 2676807 ] {} <Debug> StorageDistributed (.split.des_manyun_ch_cluster.ck_default.point_data_test_local): Auto-increment is 0

2021.12.23 19:50:43.641728 [ 2676807 ] {} <Debug> StorageDistributed (.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_0): Auto-increment is 0

2021.12.23 19:50:43.641986 [ 2676807 ] {} <Debug> StorageDistributed (.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_1): Auto-increment is 0

2021.12.23 19:50:43.642230 [ 2676807 ] {} <Debug> StorageDistributed (.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_2): Auto-increment is 0

2021.12.23 19:50:43.642467 [ 2676807 ] {} <Debug> StorageDistributed (.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_3): Auto-increment is 0

2021.12.23 19:50:43.642702 [ 2676807 ] {} <Debug> StorageDistributed (.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_4): Auto-increment is 0

2021.12.23 19:50:43.642936 [ 2676807 ] {} <Debug> StorageDistributed (.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_5): Auto-increment is 0

2021.12.23 19:50:43.643168 [ 2676807 ] {} <Debug> StorageDistributed (.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_6): Auto-increment is 0

2021.12.23 19:50:43.643398 [ 2676807 ] {} <Debug> StorageDistributed (.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_7): Auto-increment is 0

2021.12.23 19:50:43.643619 [ 2676807 ] {} <Debug> StorageDistributed (.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_8): Auto-increment is 0

2021.12.23 19:50:43.643857 [ 2676807 ] {} <Debug> StorageDistributed (.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_9): Auto-increment is 0

2021.12.23 19:50:43.648644 [ 2676807 ] {} <Debug> ClusterCopier: Processing /clickhouse/copier_task/task/tables/des_manyun_ch_cluster.ck_default.point_data_test_local/202112/piece_0/shards/1

As can be seen from the following log, clickhouse_copier also uses insert into... select for data migration.

2021.12.23 19:50:45.481535 [ 2676807 ] {} <Debug> ClusterCopier: Executing INSERT query: INSERT INTO _local.`.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_0` VALUES

2021.12.23 20:01:08.933373 [ 2676807 ] {} <Debug> ClusterCopier: Executing INSERT query: INSERT INTO _local.`.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_1` VALUES

2021.12.23 20:11:34.144447 [ 2676807 ] {} <Debug> ClusterCopier: Executing INSERT query: INSERT INTO _local.`.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_2` VALUES

2021.12.23 20:22:05.581291 [ 2676807 ] {} <Debug> ClusterCopier: Executing INSERT query: INSERT INTO _local.`.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_3` VALUES

2021.12.23 20:32:42.431329 [ 2676807 ] {} <Debug> ClusterCopier: Executing INSERT query: INSERT INTO _local.`.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_4` VALUES

2021.12.23 20:43:09.202856 [ 2676807 ] {} <Debug> ClusterCopier: Executing INSERT query: INSERT INTO _local.`.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_5` VALUES

2021.12.23 20:53:40.903325 [ 2676807 ] {} <Debug> ClusterCopier: Executing INSERT query: INSERT INTO _local.`.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_6` VALUES

2021.12.23 21:04:11.449778 [ 2676807 ] {} <Debug> ClusterCopier: Executing INSERT query: INSERT INTO _local.`.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_7` VALUES

2021.12.23 21:14:42.446846 [ 2676807 ] {} <Debug> ClusterCopier: Executing INSERT query: INSERT INTO _local.`.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_8` VALUES

2021.12.23 21:25:12.636855 [ 2676807 ] {} <Debug> ClusterCopier: Executing INSERT query: INSERT INTO _local.`.split.des_manyun_ch_cluster.ck_default.point_data_test_local_piece_9` VALUES

- After the task is completed, the test_copy_piece_n data in table attach to test_ In copy, the task log is as follows:

2021.12.23 21:35:42.672750 [ 2676807 ] {} <Debug> ClusterCopier: Trying to move partition 202109 piece 0 to original table

2021.12.23 21:35:54.482164 [ 2676807 ] {} <Information> ClusterCopier: Number of nodes that executed ALTER query successfully : 6

2021.12.23 21:35:54.482228 [ 2676807 ] {} <Debug> ClusterCopier: Trying to move partition 202109 piece 1 to original table

2021.12.23 21:36:06.144835 [ 2676807 ] {} <Information> ClusterCopier: Number of nodes that executed ALTER query successfully : 6

2021.12.23 21:36:06.144906 [ 2676807 ] {} <Debug> ClusterCopier: Trying to move partition 202109 piece 2 to original table

2021.12.23 21:36:18.104823 [ 2676807 ] {} <Information> ClusterCopier: Number of nodes that executed ALTER query successfully : 6

2021.12.23 21:36:18.104898 [ 2676807 ] {} <Debug> ClusterCopier: Trying to move partition 202109 piece 3 to original table

2021.12.23 21:36:30.188603 [ 2676807 ] {} <Information> ClusterCopier: Number of nodes that executed ALTER query successfully : 6

2021.12.23 21:36:30.188673 [ 2676807 ] {} <Debug> ClusterCopier: Trying to move partition 202109 piece 4 to original table

2021.12.23 21:36:42.229121 [ 2676807 ] {} <Information> ClusterCopier: Number of nodes that executed ALTER query successfully : 6

2021.12.23 21:36:42.229197 [ 2676807 ] {} <Debug> ClusterCopier: Trying to move partition 202109 piece 5 to original table

2021.12.23 21:36:55.973762 [ 2676807 ] {} <Information> ClusterCopier: Number of nodes that executed ALTER query successfully : 6

2021.12.23 21:36:55.973846 [ 2676807 ] {} <Debug> ClusterCopier: Trying to move partition 202109 piece 6 to original table

2021.12.23 21:36:55.973902 [ 2676807 ] {} <Debug> ClusterCopier: Executing ALTER query: ALTER TABLE ck_default.point_data_test_local ATTACH PARTITION 202109 FROM ck_default.point_data_test_local_piece_6

2021.12.23 21:37:12.261403 [ 2676807 ] {} <Information> ClusterCopier: Number of nodes that executed ALTER query successfully : 6

2021.12.23 21:37:12.261475 [ 2676807 ] {} <Debug> ClusterCopier: Trying to move partition 202109 piece 7 to original table

2021.12.23 21:37:12.261500 [ 2676807 ] {} <Debug> ClusterCopier: Executing ALTER query: ALTER TABLE ck_default.point_data_test_local ATTACH PARTITION 202109 FROM ck_default.point_data_test_local_piece_7

2021.12.23 21:37:27.623009 [ 2676807 ] {} <Information> ClusterCopier: Number of nodes that executed ALTER query successfully : 6

2021.12.23 21:37:27.623069 [ 2676807 ] {} <Debug> ClusterCopier: Trying to move partition 202109 piece 8 to original table

2021.12.23 21:37:27.623087 [ 2676807 ] {} <Debug> ClusterCopier: Executing ALTER query: ALTER TABLE ck_default.point_data_test_local ATTACH PARTITION 202109 FROM ck_default.point_data_test_local_piece_8

2021.12.23 21:37:40.992927 [ 2676807 ] {} <Information> ClusterCopier: Number of nodes that executed ALTER query successfully : 6

2021.12.23 21:37:40.992987 [ 2676807 ] {} <Debug> ClusterCopier: Trying to move partition 202109 piece 9 to original table

2021.12.23 21:37:40.993029 [ 2676807 ] {} <Debug> ClusterCopier: Executing ALTER query: ALTER TABLE ck_default.point_data_test_local ATTACH PARTITION 202109 FROM ck_default.point_data_test_local_piece_9

2021.12.23 21:37:55.531416 [ 2676807 ] {} <Information> ClusterCopier: Number of nodes that executed ALTER query successfully : 6

2021.12.23 21:37:55.536704 [ 2676807 ] {} <Information> ClusterCopier: It took 6297.173648588 seconds to copy partition 202109: 377.38 GB uncompressed bytes, 4.39 billion rows and 5356530 source blocks are copied

- Finally, the test generated by the intermediate process will be_ copy_ piece_ N tables are deleted, and the task log is as follows:

2021.12.23 21:37:55.536783 [ 2676807 ] {} <Debug> ClusterCopier: Removing helping tables

2021.12.23 21:37:55.536796 [ 2676807 ] {} <Debug> ClusterCopier: Removing helping tables piece 0

2021.12.23 21:37:55.536835 [ 2676807 ] {} <Debug> ClusterCopier: Execute distributed DROP TABLE: DROP TABLE IF EXISTS ck_default.point_data_test_local_piece_0

2021.12.23 21:37:55.538885 [ 2676807 ] {} <Information> ClusterCopier: DROP TABLE query was successfully executed on 6 nodes.

2021.12.23 21:37:55.538944 [ 2676807 ] {} <Debug> ClusterCopier: Removing helping tables piece 1

2021.12.23 21:37:55.538961 [ 2676807 ] {} <Debug> ClusterCopier: Execute distributed DROP TABLE: DROP TABLE IF EXISTS ck_default.point_data_test_local_piece_1

2021.12.23 21:37:55.540567 [ 2676807 ] {} <Information> ClusterCopier: DROP TABLE query was successfully executed on 6 nodes.

2021.12.23 21:37:55.540596 [ 2676807 ] {} <Debug> ClusterCopier: Removing helping tables piece 2

2021.12.23 21:37:55.540626 [ 2676807 ] {} <Debug> ClusterCopier: Execute distributed DROP TABLE: DROP TABLE IF EXISTS ck_default.point_data_test_local_piece_2

2021.12.23 21:37:55.542177 [ 2676807 ] {} <Information> ClusterCopier: DROP TABLE query was successfully executed on 6 nodes.

2021.12.23 21:37:55.542206 [ 2676807 ] {} <Debug> ClusterCopier: Removing helping tables piece 3

2021.12.23 21:37:55.542220 [ 2676807 ] {} <Debug> ClusterCopier: Execute distributed DROP TABLE: DROP TABLE IF EXISTS ck_default.point_data_test_local_piece_3

2021.12.23 21:37:55.543788 [ 2676807 ] {} <Information> ClusterCopier: DROP TABLE query was successfully executed on 6 nodes.

2021.12.23 21:37:55.543831 [ 2676807 ] {} <Debug> ClusterCopier: Removing helping tables piece 4

2021.12.23 21:37:55.543847 [ 2676807 ] {} <Debug> ClusterCopier: Execute distributed DROP TABLE: DROP TABLE IF EXISTS ck_default.point_data_test_local_piece_4

2021.12.23 21:37:55.545196 [ 2676807 ] {} <Information> ClusterCopier: DROP TABLE query was successfully executed on 6 nodes.

2021.12.23 21:37:55.545225 [ 2676807 ] {} <Debug> ClusterCopier: Removing helping tables piece 5

2021.12.23 21:37:55.545253 [ 2676807 ] {} <Debug> ClusterCopier: Execute distributed DROP TABLE: DROP TABLE IF EXISTS ck_default.point_data_test_local_piece_5

2021.12.23 21:37:55.546966 [ 2676807 ] {} <Information> ClusterCopier: DROP TABLE query was successfully executed on 6 nodes.

2021.12.23 21:37:55.546997 [ 2676807 ] {} <Debug> ClusterCopier: Removing helping tables piece 6

2021.12.23 21:37:55.547013 [ 2676807 ] {} <Debug> ClusterCopier: Execute distributed DROP TABLE: DROP TABLE IF EXISTS ck_default.point_data_test_local_piece_6

2021.12.23 21:37:55.548483 [ 2676807 ] {} <Information> ClusterCopier: DROP TABLE query was successfully executed on 6 nodes.

2021.12.23 21:37:55.548532 [ 2676807 ] {} <Debug> ClusterCopier: Removing helping tables piece 7

2021.12.23 21:37:55.548549 [ 2676807 ] {} <Debug> ClusterCopier: Execute distributed DROP TABLE: DROP TABLE IF EXISTS ck_default.point_data_test_local_piece_7

2021.12.23 21:37:55.550149 [ 2676807 ] {} <Information> ClusterCopier: DROP TABLE query was successfully executed on 6 nodes.

2021.12.23 21:37:55.550182 [ 2676807 ] {} <Debug> ClusterCopier: Removing helping tables piece 8

2021.12.23 21:37:55.550213 [ 2676807 ] {} <Debug> ClusterCopier: Execute distributed DROP TABLE: DROP TABLE IF EXISTS ck_default.point_data_test_local_piece_8

2021.12.23 21:37:55.551712 [ 2676807 ] {} <Information> ClusterCopier: DROP TABLE query was successfully executed on 6 nodes.

2021.12.23 21:37:55.551741 [ 2676807 ] {} <Debug> ClusterCopier: Removing helping tables piece 9

2021.12.23 21:37:55.551769 [ 2676807 ] {} <Debug> ClusterCopier: Execute distributed DROP TABLE: DROP TABLE IF EXISTS ck_default.point_data_test_local_piece_9

2021.12.23 21:37:55.581328 [ 2676807 ] {} <Information> ClusterCopier: DROP TABLE query was successfully executed on 6 nodes.

2021.12.23 21:37:55.581638 [ 2676807 ] {} <Debug> MemoryTracker: Peak memory usage (for query): 2.25 GiB.

2021.12.23 21:37:55.581696 [ 2676807 ] {} <Debug> MemoryTracker: Peak memory usage (for query): 2.25 GiB.

At this point, the migration task is completed.

Supplementary notes

clickhouse_ In order to ensure that the task can continue to execute when it fails, the copier will keep some status information on the zk.

In addition to the description just created on zk, the other three are automatically generated. Tables records the synchronization tasks of tables, as follows: for example, task XML is the same as configured

[zk: localhost:12181(CONNECTED) 7] ls /clickhouse/copier_task/task/tables [des_manyun_ch_cluster.ck_default.point_data_test_local]

task_active_workers records which worker s are performing tasks

[zk: localhost:12181(CONNECTED) 7] ls /clickhouse/copier_task/task/task_active_workers des_manyun_ch_cluster.ck_default.point_data_test_local_copy

task_active_workers_version records the stage to which the task is executed, as follows:

[zk: localhost:12181(CONNECTED) 11] get /clickhouse/copier_task/task/task_active_workers_version /clickhouse/copier_task/task/tables/des_manyun_ch_cluster.ck_default.point_data_test_local/202109/piece_9/shards/2