Source: DeepHub IMBA This article is about 2700 words. It is recommended to read it for 9 minutes. This article takes you into it Distil Details, and gives a complete code implementation. This article introduces you in detail DistilBERT,The complete code implementation is given.

Machine learning models have become larger and larger. Even with trained models, when the hardware does not meet the expectations of the model that it should run, the reasoning time and memory cost will soar. To alleviate this problem, distillation can reduce the network to a reasonable size while minimizing performance loss.

In previous articles, we introduced how DistilBERT [1] introduced a simple and effective distillation technology, which can be easily applied to any model similar to BERT, but did not give any code implementation. In this article, we will enter into details and give a complete code implementation.

Initialization of student model

Since we want to initialize a new model from an existing model, we need to access the weights of the old model. This article will use Robert [2] large provided by Hugging Face as our teacher model. In order to obtain the model weight, we must know how to access them.

Model structure of Hugging Face

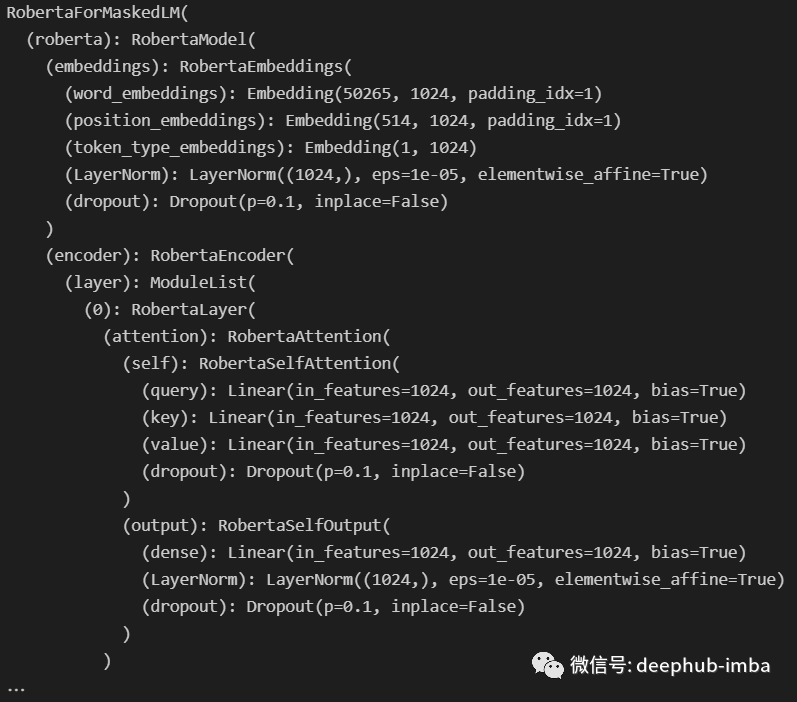

The first thing you can try is to print the model, which should give us an in-depth understanding of how it works. Of course, we can also study the Hugging Face document [3], but it's too cumbersome.

from transformers import AutoModelForMaskedLM

roberta = AutoModelForMaskedLM.from_pretrained("roberta-large")

print(roberta)After running this code, you get:

In the Hugging Face model, you can use The children () generator accesses the sub components of the module. Therefore, if we want to use the whole model, we need to call on it children() and call it on each child node. This is a recursive function. The code is as follows:

from typing import Anyfrom transformers import AutoModelForMaskedLM

roberta = AutoModelForMaskedLM.from_pretrained("roberta-large")

def visualize_children( object : Any, level : int = 0,) -> None: """ Prints the children of (object) and their children too, if there are any. Uses the current depth (level) to print things in a ordonnate manner. """ print(f"{' ' * level}{level}- {type(object).__name__}") try: for child in object.children(): visualize_children(child, level + 1) except: pass

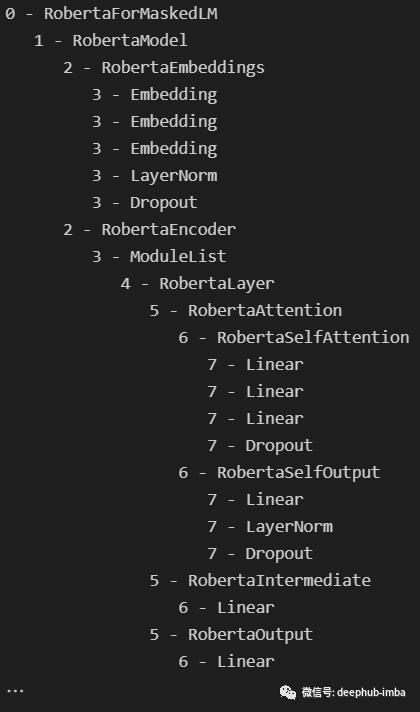

visualize_children(roberta)The following outputs are obtained:

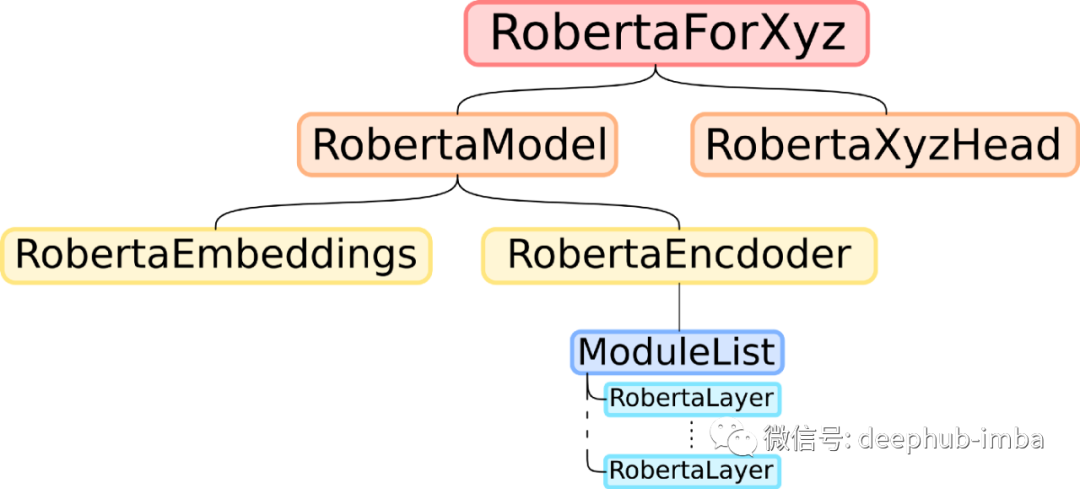

It seems that the structure of RoBERTa model is the same as that of other models similar to BERT, as shown below:

Copy the weight of the teacher model

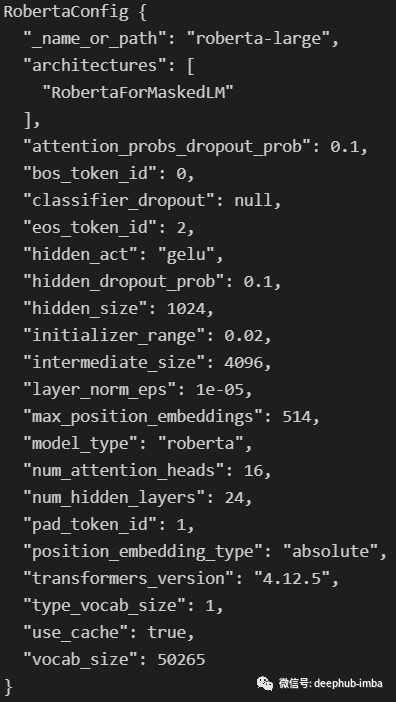

To initialize a BERT like model in the way of DistilBERT [1], we only need to copy everything except the deepest Roberta layer and delete half of it. So the steps here are as follows: first, we need to create a student model with the same architecture as the teacher model, but the number of hidden layers is halved. You only need to use the configuration of the teacher model, which is a dictionary like object that describes the architecture of the Hugging Face model. View Roberta When using the config attribute, we can see the following:

We are interested in the numhidden -layers attribute. Let's write a function to copy this configuration, change the properties by dividing it by 2, and then create a new model with the new configuration:

from transformers.models.roberta.modeling_roberta import RobertaPreTrainedModel, RobertaConfig def distill_roberta( teacher_model : RobertaPreTrainedModel,) -> RobertaPreTrainedModel: """ Distilates a RoBERTa (teacher_model) like would DistilBERT for a BERT model. The student model has the same configuration, except for the number of hidden layers, which is // by 2. The student layers are initilized by copying one out of two layers of the teacher, starting with layer 0. The head of the teacher is also copied. """ # Get teacher configuration as a dictionnary configuration = teacher_model.config.to_dict() # Half the number of hidden layer configuration['num_hidden_layers'] //= 2 # Convert the dictionnary to the student configuration configuration = RobertaConfig.from_dict(configuration) # Create uninitialized student model student_model = type(teacher_model)(configuration) # Initialize the student's weights distill_roberta_weights(teacher=teacher_model, student=student_model) # Return the student model return student_model

This function distill_ roberta_ The weights function will put half of the teacher's weight in the student layer, so it still needs to be coded. Because recursion works well in exploring the teacher model, you can use the same idea to explore and copy some parts. Here, we will iterate in the teacher's and student's models at the same time and copy them from one to another. The only thing to note is that the part of the hidden layer is copied only half.

The functions are as follows:

from transformers.models.roberta.modeling_roberta import RobertaEncoder, RobertaModelfrom torch.nn import Module

def distill_roberta_weights( teacher : Module, student : Module,) -> None: """ Recursively copies the weights of the (teacher) to the (student). This function is meant to be first called on a RobertaFor... model, but is then called on every children of that model recursively. The only part that's not fully copied is the encoder, of which only half is copied. """ # If the part is an entire RoBERTa model or a RobertaFor..., unpack and iterate if isinstance(teacher, RobertaModel) or type(teacher).__name__.startswith('RobertaFor'): for teacher_part, student_part in zip(teacher.children(), student.children()): distill_roberta_weights(teacher_part, student_part) # Else if the part is an encoder, copy one out of every layer elif isinstance(teacher, RobertaEncoder): teacher_encoding_layers = [layer for layer in next(teacher.children())] student_encoding_layers = [layer for layer in next(student.children())] for i in range(len(student_encoding_layers)): student_encoding_layers[i].load_state_dict(teacher_encoding_layers[2*i].state_dict()) # Else the part is a head or something else, copy the state_dict else: student.load_state_dict(teacher.state_dict())This function ensures that the student model is the same as the teacher security model of Roberta layer through recursion and type checking. If you only want to change the part of the for loop during initialization, you can change the part of the for loop.

Now that we have the student model, we need to train it. This part is relatively simple. The main problem is the loss function used.

Custom loss function

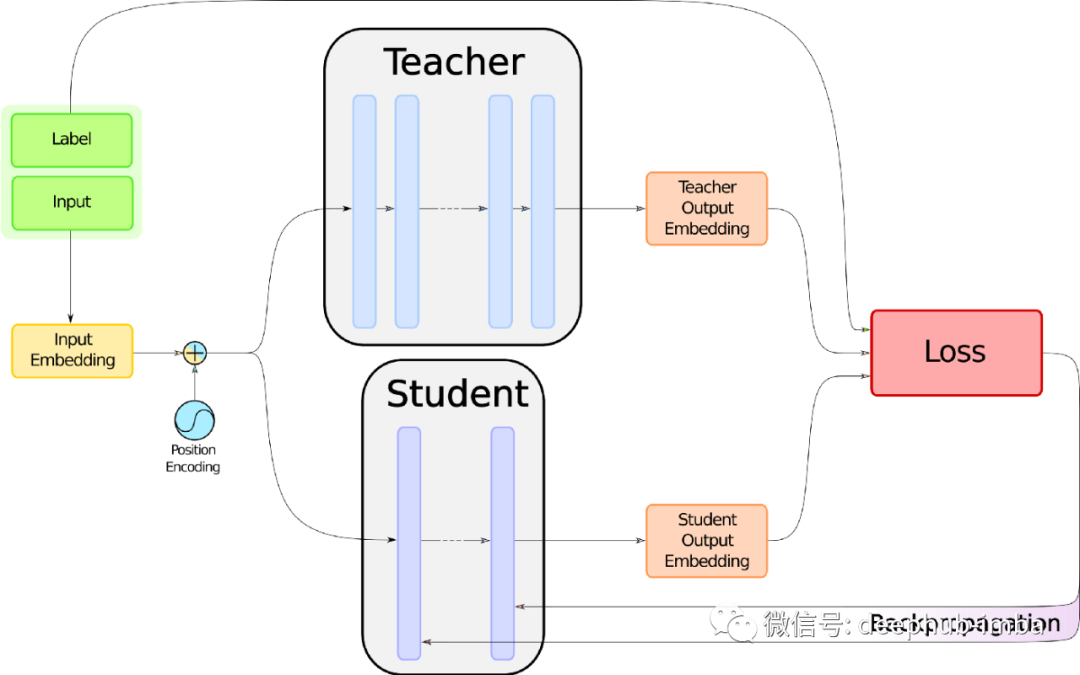

As a review of the DistilBERT training process, let's take a look at the following figure:

Please turn your attention to the big red box with "loss" on it. But before introducing what's inside in detail, we need to know how to collect what we want to feed it. In this picture, you can see that you need three things: tags, embedding of students and teachers. The label already exists because it is supervised learning. Now look at how you might get the other two.

Teacher and student input

Here, we need a function to give the input of a BERT like model, including two tensor inputs_ IDS and attention_mask and the model itself, and then the function will return logits of the model. Since we use Hugging Face, it is very simple. The only knowledge we need is to understand the following code:

from torch import Tensor def get_logits( model : RobertaPreTrainedModel, input_ids : Tensor, attention_mask : Tensor,) -> Tensor: """ Given a RoBERTa (model) for classification and the couple of (input_ids) and (attention_mask), returns the logits corresponding to the prediction. """ return model.classifier( model.roberta(input_ids, attention_mask)[0] )

Both students and teachers can use this function, but the first one has a gradient and the second one doesn't.

Code implementation of loss function

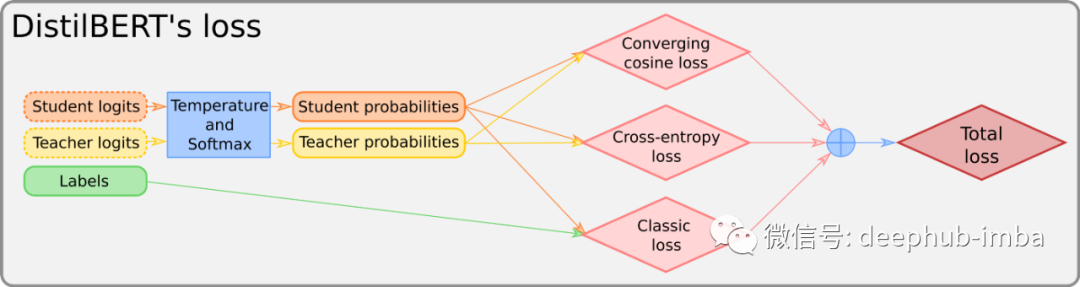

For a detailed introduction to the loss function, please refer to the article published last time. Here we use the following pictures to explain:

What we call 'converging cosine loss' is the conventional cosine loss used to align two input vectors. This is the code:

import torchfrom torch.nn import CrossEntropyLoss, CosineEmbeddingLoss def distillation_loss( teacher_logits : Tensor, student_logits : Tensor, labels : Tensor, temperature : float = 1.0,) -> Tensor: """ The distillation loss for distilating a BERT-like model. The loss takes the (teacher_logits), (student_logits) and (labels) for various losses. The (temperature) can be given, otherwise it's set to 1 by default. """ # Temperature and sotfmax student_logits, teacher_logits = (student_logits / temperature).softmax(1), (teacher_logits / temperature).softmax(1) # Classification loss (problem-specific loss) loss = CrossEntropyLoss()(student_logits, labels) # CrossEntropy teacher-student loss loss = loss + CrossEntropyLoss()(student_logits, teacher_logits) # Cosine loss loss = loss + CosineEmbeddingLoss()(teacher_logits, student_logits, torch.ones(teacher_logits.size()[0])) # Average the loss and return it loss = loss / 3 return loss

The above is the implementation of all the key ideas of DistilBERT, but there are still some things missing, such as GPU support, the whole training routine, etc., so the final complete code will be provided at the end of the article. If you need to use it in practice, it is recommended to use the final Distillator class.

result

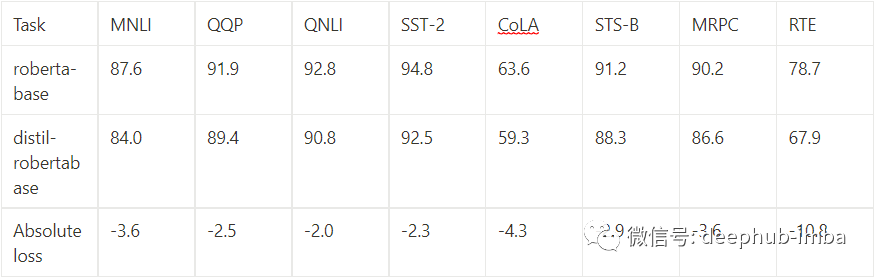

What is the final performance of the model refined in this way? For DistilBERT, you can read the original paper [1]. For RoBERTa, a distillation version similar to DistilBERT already exists on the Hugging Face. On the GLUE benchmark [4], we can compare two models:

As for time and memory costs, this model is about two-thirds the size of Roberta base and twice as fast.

summary

Through the above code, we can distill any model similar to BERT. In addition, there are many other better methods, such as TinyBERT [5] or MobileBERT [6]. If you think one of them is more suitable for your needs, you should read these articles. Even completely try a new distillation method, because this is an increasingly developing field.

The code of this article is here: https://gist.github.com/remi-or/4814577c59f4f38fcc89729ce4ba21e6

[1] Victor Sanh, lysandre debug, Julien chaumond, Thomas wolf, distilbert, a disabled version of Bert: smaller, faster, lighter and lighter (2019), hugging face [2] Yinhan Liu, myle Ott, naman Goyal, Jingfei Du, mandar Joshi, Danqi Chen, Omer levy, Mike Lewis, Luke zettlemoyer, Veselin Stoyanov, RoBERTa: A Robustly Optimized BERT Pretraining Approach (2019), arXiv[3] Hugging Face team crediting Julien Chaumond, Hugging Face’s RoBERTa documentation, Hugging Face[4] Alex WANG, Amanpreet SINGH, Julian MICHAEL, Felix HILL, Omer LEVY, Samuel R. BOWMAN, GLUE: A multi-task benchmark and analysis platform for natural language understanding (2019), arXiv[5] Xiaoqi Jiao, Yichun Yin, Lifeng Shang, Xin Jiang, Xiao Chen, Linlin Li, Fang Wang, Qun Liu, TinyBERT: Distilling BERT for Natural Language Understanding (2019), arXiv[6] Zhiqing Sun, Hongkun Yu, Xiaodan Song, Renjie Liu, Yiming Yang, Denny Zhou, Mobilebert: a compact task agnostic Bert for resource limited devices (2020), arXiv editor: Huang Jiyan proofreader: Lin Yilin