Common rate limiting schemes

I sing, and the moon lingers; I dance, and my shadow scatters.

Sober, we share our joy; drunk, we each go our own way.

1, Rate limiting approaches

There are four common ways to limit the traffic reaching a system's services: circuit breaking, service degradation, delayed processing, and privileged processing.

1. Circuit breaking

Circuit breaking is built into the system design. When the system develops a problem that cannot be fixed quickly, it automatically turns the circuit breaker on and refuses incoming traffic, so that a flood of requests does not overload the back end.

The system can also monitor the recovery of the back-end program dynamically. Once the program is stable again, it turns the circuit breaker off and restores normal service.

Common circuit breaker components include Netflix's Hystrix and Alibaba's Sentinel.
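The on/off behaviour described above can be sketched as a small state machine. This is only a minimal illustration and not how Hystrix or Sentinel work internally; the class name `SimpleCircuitBreaker`, the failure threshold, and the retry interval are assumptions made for the example, and the caller passes in the current time so that the logic stays deterministic.

```java
/**
 * Minimal circuit breaker sketch (hypothetical class, not the Hystrix or Sentinel API).
 * After failureThreshold consecutive failures the breaker opens and rejects calls;
 * once retryAfterMillis has passed it lets trial calls through, and a success closes it again.
 */
class SimpleCircuitBreaker {
    private final int failureThreshold;
    private final long retryAfterMillis;
    private int consecutiveFailures = 0;
    private boolean open = false;
    private long openedAt = 0L;

    SimpleCircuitBreaker(int failureThreshold, long retryAfterMillis) {
        this.failureThreshold = failureThreshold;
        this.retryAfterMillis = retryAfterMillis;
    }

    /** Returns true if a call may go to the back end at time nowMillis. */
    synchronized boolean allowRequest(long nowMillis) {
        if (!open) {
            return true;
        }
        // Breaker is on: only let trial requests through once the retry interval has elapsed
        return nowMillis - openedAt >= retryAfterMillis;
    }

    /** Report a successful call: the back end looks healthy, so turn the breaker off. */
    synchronized void recordSuccess() {
        consecutiveFailures = 0;
        open = false;
    }

    /** Report a failed call: turn the breaker on once the threshold is reached. */
    synchronized void recordFailure(long nowMillis) {
        consecutiveFailures++;
        if (consecutiveFailures >= failureThreshold) {
            open = true;
            openedAt = nowMillis;
        }
    }

    synchronized boolean isOpen() {
        return open;
    }
}
```

A caller would check `allowRequest(...)` before each back-end call, return a fallback response when it is false, and report the outcome with `recordSuccess()` or `recordFailure(...)`.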

2. Service degradation

Every function and service in the system is ranked by importance. When the system is in trouble and traffic must be limited urgently, the less important functions are degraded (their service is stopped) so that the core functions keep running normally.

For example, if an e-commerce platform sees a sudden surge in traffic, it can temporarily degrade non-core functions such as product reviews and reward points, stopping those services and freeing machines, CPUs, and other resources so that users can still place orders normally.

The degraded functions and services can be started again once the whole system is back to normal, with any missed work made up (compensated) afterwards.

Besides switching functions off, another temporary degradation scheme is to serve all reads from the cache and send all writes to the cache instead of operating on the database directly.
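One way to picture graded degradation is a switch that turns off every feature below a chosen importance level. The sketch below is illustrative only: the `Feature` names and importance numbers are made up to match the e-commerce example and do not come from any real platform.

```java
import java.util.EnumSet;
import java.util.Set;

/** Hypothetical features of an e-commerce site; a higher number means more important. */
enum Feature {
    ORDER(3), PAYMENT(3), RECOMMENDATIONS(2), REVIEWS(1), POINTS(1);

    final int importance;

    Feature(int importance) {
        this.importance = importance;
    }
}

/** Minimal degradation switch: features below the current level are turned off. */
class DegradationSwitch {
    // Lowest importance level that is still served; 0 means nothing is degraded
    private volatile int minImportance = 0;

    /** Degrade every feature whose importance is below the given level. */
    void degradeBelow(int level) {
        minImportance = level;
    }

    /** Restore all features once the system is back to normal. */
    void restoreAll() {
        minImportance = 0;
    }

    boolean isEnabled(Feature feature) {
        return feature.importance >= minImportance;
    }

    /** The features that are currently switched off. */
    Set<Feature> degraded() {
        Set<Feature> off = EnumSet.noneOf(Feature.class);
        for (Feature f : Feature.values()) {
            if (!isEnabled(f)) {
                off.add(f);
            }
        }
        return off;
    }
}
```

Request handlers would check `isEnabled(...)` before doing non-core work and return a placeholder response when the feature is off.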

Circuit breaking vs. degradation

Similarities:

- The goal is the same: both start from availability and reliability and aim to prevent the system from collapsing.

- The user experience is similar: in the end, users find that some functions are temporarily unavailable.

Differences:

- The triggers differ. Circuit breaking is generally triggered by the failure of a particular service (a downstream service, i.e. the service being called), whereas service degradation is generally decided from the overall load.

3. Delayed processing

Delayed processing places a traffic buffer pool at the front of the system. All requests are buffered in this pool instead of being processed immediately, and the back-end business handler takes requests out of the pool and processes them in turn. This is commonly implemented with a queue.

In effect, this relieves the pressure on the back end by making processing asynchronous. However, when traffic is heavy and back-end capacity is limited, requests in the buffer pool may not be processed in time, which results in some delay.
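The buffer pool can be sketched with a bounded queue from the Java standard library. This is a minimal illustration under stated assumptions: the class name `RequestBuffer`, the use of plain strings as requests, and the batch-style consumer are all invented for the example.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.function.Consumer;

/** Minimal buffer pool sketch: requests wait in a bounded queue until the back end takes them. */
class RequestBuffer {
    private final BlockingQueue<String> queue;

    RequestBuffer(int capacity) {
        this.queue = new ArrayBlockingQueue<>(capacity);
    }

    /** Front end: buffer the request without processing it; returns false if the pool is full. */
    boolean submit(String request) {
        return queue.offer(request);
    }

    /** Back end: take up to maxRequests out of the pool and process them in arrival order. */
    int processBatch(int maxRequests, Consumer<String> handler) {
        int processed = 0;
        String request;
        while (processed < maxRequests && (request = queue.poll()) != null) {
            handler.accept(request);
            processed++;
        }
        return processed;
    }

    /** Number of requests still waiting, i.e. the current backlog. */
    int backlog() {
        return queue.size();
    }
}
```

In a real service a worker thread would call `processBatch(...)` in a loop at whatever pace the back end can sustain; a growing `backlog()` is the delay the paragraph above warns about.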

4. Privileged processing

This mode requires users to be classified. Based on the preset classification, the system serves the user groups that need the strongest guarantees first, while requests from other user groups are delayed or simply not processed.
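A priority queue is one simple way to express "serve the privileged group first, delay or drop the rest". The following is a sketch only; the two tiers `VIP` and `NORMAL`, the class name `PriorityDispatcher`, and the rule that only non-VIP requests are refused when the backlog is full are assumptions chosen for illustration.

```java
import java.util.Comparator;
import java.util.PriorityQueue;

/** Minimal sketch of privileged processing: higher-tier users are served first, others may be refused. */
class PriorityDispatcher {
    /** Hypothetical user tiers; an earlier constant means a higher priority. */
    enum Tier { VIP, NORMAL }

    /** A pending request, tagged with its user's tier and an arrival sequence number. */
    static final class Request {
        final String user;
        final Tier tier;
        final long seq;

        Request(String user, Tier tier, long seq) {
            this.user = user;
            this.tier = tier;
            this.seq = seq;
        }
    }

    private final int maxPending;
    private long nextSeq = 0;
    // Ordered by tier first, then by arrival order within the same tier
    private final PriorityQueue<Request> pending = new PriorityQueue<>(
            Comparator.comparing((Request r) -> r.tier).thenComparingLong(r -> r.seq));

    PriorityDispatcher(int maxPending) {
        this.maxPending = maxPending;
    }

    /** VIP requests are always queued; NORMAL requests are refused once the backlog is full. */
    synchronized boolean submit(String user, Tier tier) {
        if (tier != Tier.VIP && pending.size() >= maxPending) {
            return false;
        }
        pending.add(new Request(user, tier, nextSeq++));
        return true;
    }

    /** Returns the user whose request should be processed next, or null if nothing is pending. */
    synchronized String next() {
        Request r = pending.poll();
        return r == null ? null : r.user;
    }
}
```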

2, Rate limiting algorithms

There are three common rate limiting algorithms: the counter algorithm (with the sliding window as a refinement of it), the leaky bucket algorithm, and the token bucket algorithm.

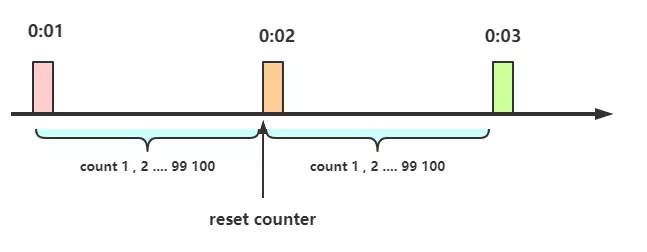

1. Counter algorithm

The counter algorithm is the simplest rate limiting algorithm and the easiest to implement. In the figure above, only 100 requests are allowed per minute: the time of the first request is startTime, and only 100 requests are allowed up to startTime + 60s.

If more than 100 requests arrive within those 60s, the extra requests are rejected; otherwise requests are allowed, and once the 60s have passed the window is reset.

```java
package com.todaytalents.rcn.parser.util;

import java.util.concurrent.atomic.AtomicInteger;

/**
 * Rate limiting with a counter (fixed window):
 * only 100 requests are allowed per minute. The time of the first request is startTime,
 * and only 100 requests are allowed within startTime + 60s.
 * Requests beyond 100 within the 60s are rejected;
 * once the 60s have elapsed, the window and the counter are reset.
 *
 * @author: Arafat
 * @date: 2021/12/29
 * @company: Australia B99999
 **/
public class CalculatorCurrentLimiting {

    /** Default maximum number of requests per window */
    private int maxCount = 100;
    /** Default window length, in seconds */
    private long specifiedTime = 60;
    /** Atomic counter of the requests seen in the current window */
    private final AtomicInteger atomicInteger = new AtomicInteger(0);
    /** Start time of the current window */
    private long startTime = System.currentTimeMillis();

    /**
     * @param maxCount      maximum number of requests per window
     * @param specifiedTime window length, in seconds
     * @return true if the request is allowed; false if it is rate limited
     */
    public synchronized boolean limit(int maxCount, int specifiedTime) {
        // synchronized: the time check and the counter update must happen as one step
        long now = System.currentTimeMillis();
        // First request ever, or the window has elapsed: start a new window
        if (atomicInteger.get() == 0 || now - startTime > specifiedTime * 1000L) {
            startTime = now;
            atomicInteger.set(1);
            return true;
        }
        // Still inside the window: count the request and check the limit
        return atomicInteger.incrementAndGet() <= maxCount;
    }

}
```

As an example of the counter algorithm, suppose an interface must not be requested more than 100 times in one minute.

Set up a counter at the start and add 1 for each request. If the counter is greater than 100 and the request arrives within 1 minute of the first request, there are too many requests, so the request is rejected (returned directly or left unprocessed); otherwise it is allowed.

If a request arrives more than 1 minute after the first request, reset the counter and start a new window.

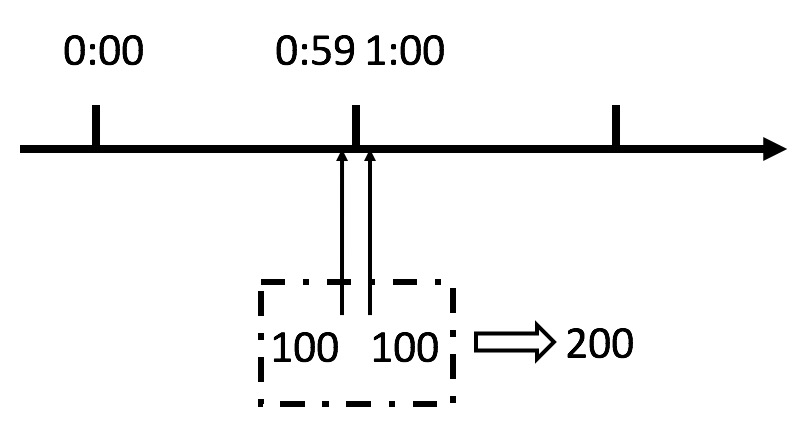

Although the counter algorithm is simple, it has a serious flaw at the window boundary, often called the critical (boundary) problem.

As the figure above shows, if a malicious user sends 100 requests in an instant at 0:59 and another 100 in an instant at 1:00, that user has actually sent 200 requests within one second.

The counter algorithm above allows at most 100 requests per minute, which is about 1.67 requests per second on average. By bursting around the moment the time window resets, a user can momentarily exceed the rate limit by a wide margin, and this loophole may overwhelm the application in an instant.

The loophole exists because the counter algorithm's statistics are too coarse. The sliding window algorithm reduces the impact of the boundary problem.

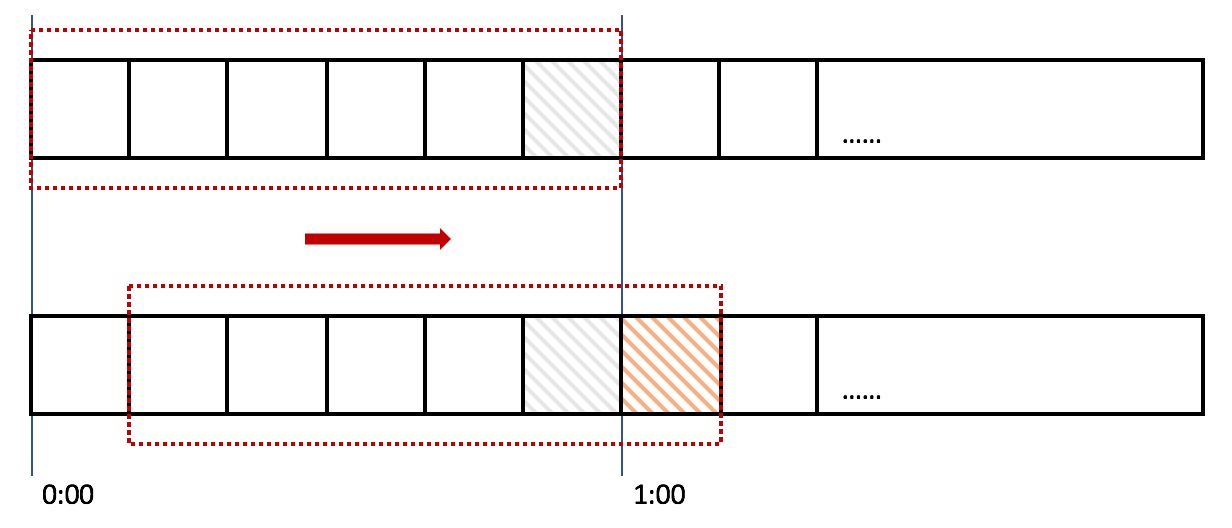

2. Sliding window

In the figure above, the whole red rectangle represents one time window; in the counter example, a time window is one minute. Here the window is subdivided: in the figure it is split into 6 cells of 10 seconds each, and the window slides one cell to the right every 10 seconds. Each cell has its own counter. For example, when a request arrives at 0:35, the counter for the 0:30 ~ 0:39 cell is incremented by 1.

So how does the sliding window solve the boundary problem described above?

In the figure above, the 100 requests arriving at 0:59 fall into the gray cell, and the requests arriving at 1:00 fall into the orange cell. When the time reaches 1:00, the window moves one cell to the right. The total number of requests in the window would then be 200, which exceeds the limit of 100, so the overrun is detected and rate limiting is triggered.

Seen this way, the counter algorithm is simply a sliding window that is not subdivided, so it has only 1 cell. The more cells the sliding window is divided into, the more smoothly it slides and the more accurate the rate limiting statistics become.
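The cell-based counting described above can be sketched as follows. This is a minimal illustration rather than a production implementation; the class name `SlidingWindowLimiter` is invented, and the current time is passed in by the caller (an assumption made so that the 0:59 / 1:00 scenario can be replayed exactly).

```java
import java.util.Arrays;

/**
 * Minimal sliding window sketch: the window is split into equal cells, each with its own counter.
 * The caller passes the current time in milliseconds so the example stays deterministic.
 */
class SlidingWindowLimiter {
    private final int limit;          // maximum requests per window
    private final long cellMillis;    // length of one cell
    private final int[] counts;       // one counter per cell
    private final long[] cellIndex;   // which absolute cell each slot currently holds

    SlidingWindowLimiter(int limit, long windowMillis, int cells) {
        this.limit = limit;
        this.cellMillis = windowMillis / cells;
        this.counts = new int[cells];
        this.cellIndex = new long[cells];
        Arrays.fill(cellIndex, -1L);
    }

    synchronized boolean tryAcquire(long nowMillis) {
        long current = nowMillis / cellMillis;        // absolute index of the cell for "now"
        int slot = (int) (current % counts.length);
        if (cellIndex[slot] != current) {
            // The slot still holds a cell that has slid out of the window: reuse it
            cellIndex[slot] = current;
            counts[slot] = 0;
        }
        // Sum the counters of the cells that are still inside the window
        int total = 0;
        for (int i = 0; i < counts.length; i++) {
            if (current - cellIndex[i] < counts.length) {
                total += counts[i];
            }
        }
        if (total >= limit) {
            return false;
        }
        counts[slot]++;
        return true;
    }
}
```

With a limit of 100 per 60 s and 6 cells, 100 calls to `tryAcquire(59_000)` all pass, and a further 100 calls to `tryAcquire(60_000)` are all rejected, because the 0:50 ~ 0:59 cell is still inside the window.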

3. Leaky bucket algorithm

The idea of the leaky bucket algorithm is very simple. Water (requests) first enters the bucket, and the bucket leaks water at a fixed rate. When water flows in faster than it leaks out and the bucket fills up, the excess overflows and is discarded. The leaky bucket algorithm can therefore enforce a hard limit on the data transmission rate.

Because the leaky bucket ensures that the interface processes requests at a constant rate, it does not suffer from the boundary problem.

Leaky bucket implementation class:

```java
import java.util.concurrent.atomic.AtomicInteger;

/**
 * Leaky bucket algorithm: treat water drops as requests
 *
 * @author: Arafat
 * @date: 2021/12/29
 **/
public class LeakyBucket {
    /** Bucket capacity */
    private int capacity = 100;
    /** Number of water drops currently in the bucket (empty on initialization) */
    private AtomicInteger water = new AtomicInteger(0);
    /** Outflow rate: number of drops leaked per 1000 milliseconds */
    private int leakRate;
    /** Time up to which leaking has already been accounted for */
    private long leakTimeStamp;

    public LeakyBucket(int leakRate) {
        this.leakRate = leakRate;
    }

    public synchronized boolean acquire() {
        long now = System.currentTimeMillis();
        // Empty bucket: the bucket starts leaking from the current time
        if (water.get() == 0) {
            leakTimeStamp = now;
            if (capacity == 0) {
                return false;
            }
            water.set(1);
            return true;
        }
        // Leak first: remove the drops that flowed out during the whole seconds elapsed
        long elapsedSeconds = (now - leakTimeStamp) / 1000;
        long waterLeft = water.get() - elapsedSeconds * leakRate;
        water.set((int) Math.max(0, waterLeft));
        // Advance leakTimeStamp only by the seconds accounted for, so that fractions
        // of a second are not lost when requests arrive more often than once a second
        leakTimeStamp += elapsedSeconds * 1000;
        // Then try to add one drop if the bucket is not full
        if (water.get() < capacity) {
            water.incrementAndGet();
            return true;
        }
        // The bucket is full: refuse the drop, it overflows
        return false;
    }

}
```

Using the leaky bucket to limit requests:

```java
import lombok.AllArgsConstructor;
import lombok.extern.slf4j.Slf4j;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

/**
 * UserService and Reply are classes from the author's project and are not shown here.
 *
 * @author Arafat
 */
@Slf4j
@RestController
@AllArgsConstructor
@RequestMapping("/test")
public class TestController {

    /**
     * Leaky bucket: water drops leak at 1 drop per second.
     * Declared final with an initializer so that @AllArgsConstructor
     * does not turn it into a constructor parameter.
     */
    private final LeakyBucket leakyBucket = new LeakyBucket(1);

    private final UserService userService;

    /**
     * Query user info, rate limited by the leaky bucket
     *
     * @return the query result, or a "try again later" reply when rate limited
     */
    @RequestMapping("/searchUserInfoByLeakyBucket")
    public Object searchUserInfoByLeakyBucket() {
        // Rate limiting check
        boolean acquire = leakyBucket.acquire();
        if (!acquire) {
            log.info("Please try again later!");
            return Reply.success("Please try again later!");
        }
        // Not rate limited: call the service directly
        return Reply.success(userService.search());
    }

}
```

Two advantages of the leaky bucket algorithm:

- Peak clipping: when a large burst of traffic arrives, the excess overflows, so rate limiting keeps the protected service available.

- Buffering: requests do not hit the server directly. The bucket absorbs the pressure, and requests are consumed at a fixed rate because the server's processing capacity is fixed.

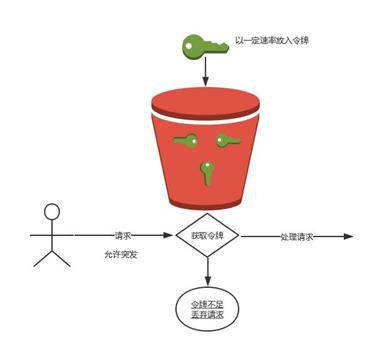

4. Token bucket algorithm

The idea of the token bucket algorithm is that tokens are generated at a fixed rate and put into a token bucket. Every user request must obtain a token; if there are not enough tokens, the request is rejected or has to wait.

In the figure above, tokens are put into the bucket at a constant rate. A request must first take a token from the bucket before it can be processed, and when the bucket has no tokens the request is denied.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

/**
 * Rate limiting with the token bucket algorithm
 *
 * @author: Arafat
 * @date: 2021/12/30
 **/
public class TokensLimiter {

    /** Time at which tokens were last issued */
    public long timeStamp = System.currentTimeMillis();
    /** Bucket capacity */
    public int capacity = 10;
    /** Token generation rate: 10 per second */
    public int rate = 10;
    /** Current number of tokens */
    public int tokens;
    /** Scheduled thread pool */
    private ScheduledExecutorService scheduledExecutorService = Executors.newScheduledThreadPool(5);

    /**
     * The thread pool sends a random number of requests every 0.5s.
     * The current number of tokens is recalculated for each batch;
     * if the number of requested tokens exceeds the current number of tokens, the batch is rate limited.
     */
    public void acquire() {
        scheduledExecutorService.scheduleWithFixedDelay(() -> {
            long now = System.currentTimeMillis();
            // Refill: add the tokens generated since timeStamp, capped at the bucket capacity
            tokens = Math.min(capacity, tokens + (int) ((now - timeStamp) * rate / 1000));
            // Every 0.5s, request a random number of tokens between 1 and 9
            int permits = (int) (Math.random() * 9) + 1;
            System.out.println("Number of requested tokens: " + permits + ", current number of tokens: " + tokens);
            timeStamp = now;
            if (tokens < permits) {
                // Not enough tokens: reject
                System.out.println("Rate limited");
            } else {
                // Enough tokens: take them
                tokens -= permits;
                System.out.println("Remaining tokens=" + tokens);
            }
        }, 1000, 500, TimeUnit.MILLISECONDS);
    }

    public static void main(String[] args) {
        TokensLimiter tokensLimiter = new TokensLimiter();
        tokensLimiter.acquire();
    }

}
```

By default, taking a token out of the bucket costs no time. If a delay is imposed on taking tokens out, the result is in effect the leaky bucket algorithm.

Consider the boundary scenario again. At 0:59 the bucket is full with 100 tokens, so the 100 requests pass at once. But because tokens are refilled at a much lower rate, the bucket cannot be back to 100 tokens at 1:00, so another 100 requests cannot pass at that moment. The token bucket algorithm therefore handles the boundary problem well.
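The boundary scenario can be replayed with a small token bucket that refills lazily, that is, it tops itself up whenever a request arrives instead of using a timer. This is a sketch under stated assumptions: the class name `TokenBucketSketch` is invented, one request costs one token, and the caller supplies the current time.

```java
/**
 * Minimal token bucket sketch with lazy refill: tokens are added when a request arrives,
 * based on the time elapsed since the last refill. The caller supplies the current time.
 */
class TokenBucketSketch {
    private final long capacity;        // maximum number of tokens the bucket can hold
    private final double tokensPerMs;   // refill rate
    private double tokens;              // current number of tokens (may be fractional)
    private long lastRefill;            // time of the last refill

    TokenBucketSketch(long capacity, double tokensPerSecond, long nowMillis) {
        this.capacity = capacity;
        this.tokensPerMs = tokensPerSecond / 1000.0;
        this.tokens = capacity;         // start with a full bucket
        this.lastRefill = nowMillis;
    }

    synchronized boolean tryAcquire(long nowMillis) {
        // Refill for the elapsed time, never beyond the capacity
        tokens = Math.min(capacity, tokens + (nowMillis - lastRefill) * tokensPerMs);
        lastRefill = nowMillis;
        if (tokens < 1.0) {
            return false;
        }
        tokens -= 1.0;
        return true;
    }
}
```

With a capacity of 100 and a refill rate of 100 tokens per 60 s, the 100 requests at 0:59 all pass, but only about 1.67 tokens have been refilled by 1:00, so just one of the next 100 requests gets through.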

Differences between the leaky bucket and token bucket algorithms

- The main difference is that the leaky bucket algorithm enforces a hard limit on the data transmission rate, while the token bucket algorithm limits the average transmission rate and also allows a certain degree of burst transmission.

- With the token bucket algorithm, as long as there are tokens in the bucket, data may be sent in bursts until the user-configured threshold is reached, so it suits traffic with bursty characteristics.

- The token bucket algorithm is widely used in industry because it is simple to implement, tolerates some traffic bursts, and is friendly to users.

- Each situation should be analyzed on its own terms: there is no best algorithm, only the most suitable one.

Rate limiting with Google's RateLimiter

Guava, Google's open source toolkit, provides the rate limiting utility class RateLimiter. It limits traffic using the token bucket algorithm and is very easy to use. RateLimiter is often used to limit the access rate to some physical or logical resource. It offers two ways of trying to obtain a permit: tryAcquire() returns false immediately if no permit is available, and tryAcquire(long timeout, TimeUnit unit) blocks for up to the given time to see whether a permit becomes available.

```java
import com.google.common.util.concurrent.RateLimiter;
import lombok.AllArgsConstructor;
import lombok.extern.slf4j.Slf4j;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

import java.util.concurrent.TimeUnit;

/**
 * @author Arafat
 */
@Slf4j
@RestController
@AllArgsConstructor
@RequestMapping("/test")
public class TestController {

    /**
     * Put n tokens into the bucket per second, which allows roughly n requests per second.
     * n = 1 in the commented-out line, n = 5 in the active one.
     */
    //private static final RateLimiter RATE_LIMITER = RateLimiter.create(1);
    private static final RateLimiter RATE_LIMITER = RateLimiter.create(5);

    public static void main(String[] args) {
        // A timeout of 0 means no waiting: the request is rejected immediately if no token is available
        boolean tryAcquire = RATE_LIMITER.tryAcquire(0, TimeUnit.SECONDS);
        // false means no token was obtained
        if (!tryAcquire) {
            System.out.println("There are too many people buying now. Please wait a minute!");
        }

        // tryAcquire: simulate 20 requests
        for (int i = 0; i < 20; i++) {
            // Try to get a token from the bucket;
            // if none is available, wait up to 300 milliseconds for one
            boolean request = RATE_LIMITER.tryAcquire(300, TimeUnit.MILLISECONDS);
            if (request) {
                // Token obtained: execute the corresponding logic
                handle(i);
            }
        }

        // acquire: simulate 20 requests
        for (int i = 0; i < 20; i++) {
            // Get a token from the bucket, blocking until one is available, so every request is executed
            RATE_LIMITER.acquire();
            // Token obtained: execute the corresponding logic
            handle(i);
        }
    }

    private static void handle(int i) {
        System.out.println("Request " + i + " OK~~~");
    }

}
```

3, Cluster rate limiting

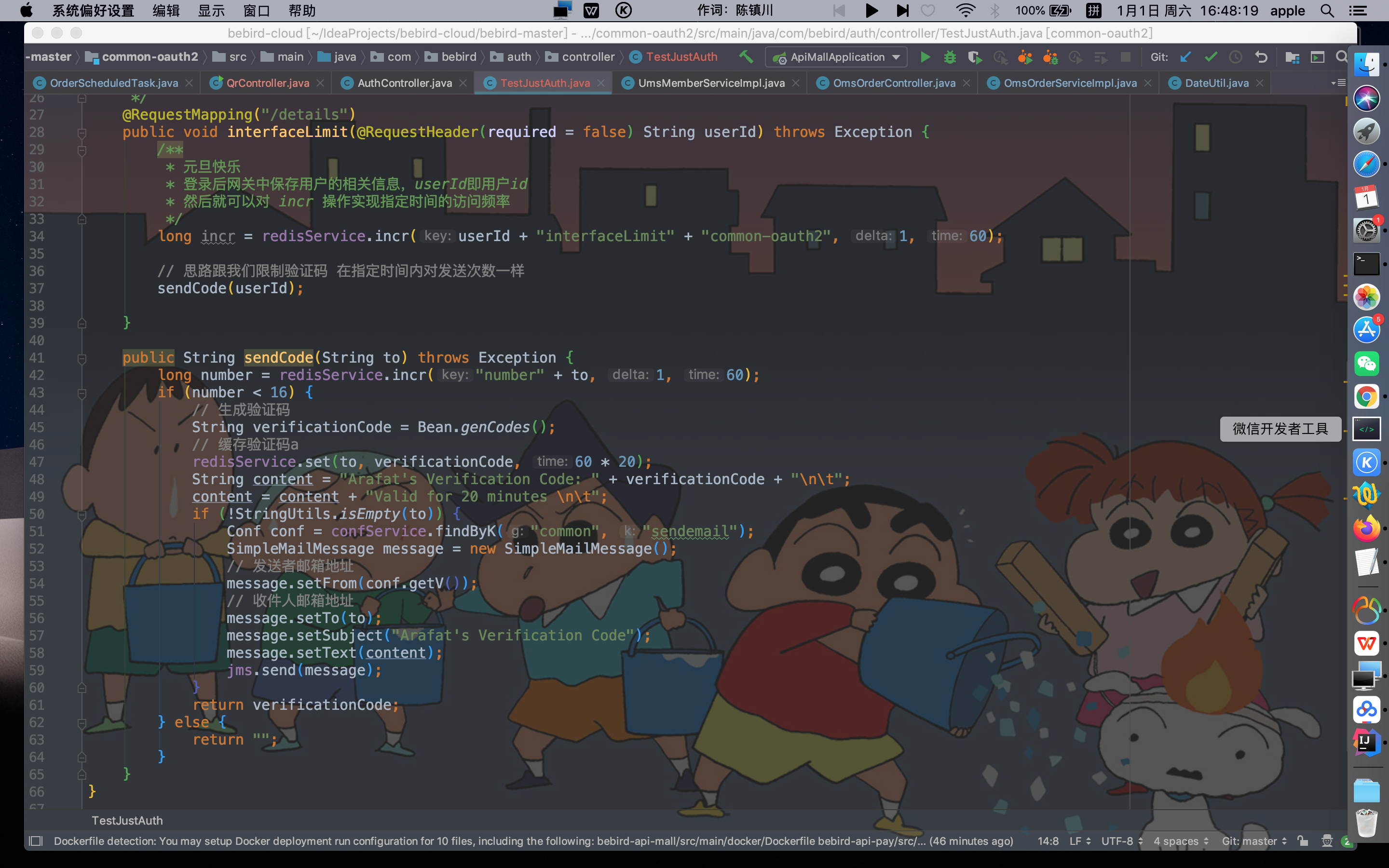

The algorithms above are all forms of single-machine rate limiting, but single-machine rate limiting alone cannot cover more complex scenarios. For example, to limit how often each user or merchant can access a resource, such as only twice in 5s or only 1000 times a day, single-machine rate limiting is not enough, and cluster rate limiting is needed.

Redis can be used to implement cluster rate limiting. The general idea is to send an INCR command to the Redis server every time a relevant operation occurs.

redisOperations.opsForValue().increment(key)

For example, to limit the number of times a user accesses a details interface, concatenate the user id and the interface name, prefix them with the current service name, and use the result as the Redis key. Each time the user accesses the interface, execute INCR on that key, and set an expiration time on the key when it is first created; together these limit the access frequency within the specified period.
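The key scheme above can be sketched without a Redis server by hiding the two commands behind a small interface. Everything named here is an assumption made for illustration: `CounterStore`, `InMemoryCounterStore`, and `ClusterRateLimiter` are hypothetical, and the in-memory store only imitates INCR and EXPIRE inside one process with an explicit clock. A real deployment would back the interface with Redis so that all machines share the same counters.

```java
import java.util.HashMap;
import java.util.Map;

/** The two Redis operations the scheme relies on (hypothetical abstraction). */
interface CounterStore {
    /** Like INCR: add 1 to the key (creating it at 0 first) and return the new value. */
    long incr(String key, long nowMillis);

    /** Like EXPIRE: make the key disappear ttlMillis after nowMillis. */
    void expire(String key, long ttlMillis, long nowMillis);
}

/** In-memory stand-in for Redis, for illustration only (single process, explicit clock). */
class InMemoryCounterStore implements CounterStore {
    private final Map<String, long[]> entries = new HashMap<>(); // key -> {value, expiresAt}

    public synchronized long incr(String key, long nowMillis) {
        long[] entry = entries.get(key);
        if (entry == null || nowMillis >= entry[1]) {
            entry = new long[] {0L, Long.MAX_VALUE};  // missing or expired: start again from 0
            entries.put(key, entry);
        }
        return ++entry[0];
    }

    public synchronized void expire(String key, long ttlMillis, long nowMillis) {
        long[] entry = entries.get(key);
        if (entry != null) {
            entry[1] = nowMillis + ttlMillis;
        }
    }
}

/** Limits each user to maxCalls per window on one interface, using a shared counter store. */
class ClusterRateLimiter {
    private final CounterStore store;
    private final String serviceName;
    private final int maxCalls;
    private final long windowMillis;

    ClusterRateLimiter(CounterStore store, String serviceName, int maxCalls, long windowMillis) {
        this.store = store;
        this.serviceName = serviceName;
        this.maxCalls = maxCalls;
        this.windowMillis = windowMillis;
    }

    boolean tryAccess(String userId, String interfaceName, long nowMillis) {
        // Key = service name prefix + user id + interface name
        String key = serviceName + ":" + userId + ":" + interfaceName;
        long count = store.incr(key, nowMillis);
        if (count == 1) {
            // First hit in this window: start the countdown for the key
            store.expire(key, windowMillis, nowMillis);
        }
        return count <= maxCalls;
    }
}
```

Against a real Redis server the increment and the expiration are two separate commands, so they are usually combined into one atomic step (for example with a Lua script) to avoid a key that is incremented but never expires.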
