When developing high-concurrency systems, there are four main tools for protecting the system: traffic splitting, caching, degradation, and rate limiting. Based on the author's experience, this article introduces the concepts, algorithms, and common implementations of rate limiting.

Key concepts

Traffic splitting

Traffic splitting is the most commonly used approach: scale out capacity, then implement the desired splitting strategy through load balancing. Load balancing can be divided into hardware load balancing, such as F5, and software load balancing, such as Nginx, Apache, HAProxy, and LVS. It can also be divided into client-side load balancing, such as Spring Cloud's Ribbon, and server-side load balancing, such as Nginx.
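To make client-side load balancing concrete, here is a minimal round-robin selector. It is a hypothetical sketch, not Ribbon's API: the class name `RoundRobinBalancer` and the server addresses are assumptions, and real balancers also handle health checks, weights, and server-list updates.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical client-side load balancer that hands out servers in round-robin order
class RoundRobinBalancer {
    private final List<String> servers;
    private final AtomicInteger next = new AtomicInteger(0);

    RoundRobinBalancer(List<String> servers) {
        if (servers.isEmpty()) {
            throw new IllegalArgumentException("at least one server is required");
        }
        this.servers = new ArrayList<>(servers); // defensive copy
    }

    // Thread-safe: getAndIncrement is atomic; floorMod keeps the index valid after int overflow
    String choose() {
        int index = Math.floorMod(next.getAndIncrement(), servers.size());
        return servers.get(index);
    }
}
```

Each call to `choose()` returns the next server in the list, wrapping around at the end.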

Cache

Caching not only improves access speed and concurrency but also protects the database and the system. A cache is typically used when some of a website's data is accessed frequently, read far more often than it is written, and has loose consistency requirements. Caching also helps in "write" scenarios, for example by accumulating data for batch writes or by using in-memory cache queues (producer-consumer); these also raise throughput or serve as system protection. When using a cache, however, you must guard against the problems caching brings, such as cache penetration, cache avalanche, and cache breakdown.
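The "accumulate data and write it in batches" idea can be sketched as an in-memory buffer. `BatchWriteBuffer` and its batch-writer callback are hypothetical names; in a real system the callback would be something like a bulk database insert, and you would also flush on a timer and on shutdown, accepting that buffered records are lost if the process crashes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical write buffer: collects records in memory and hands them to a
// batch writer once batchSize records have accumulated
class BatchWriteBuffer<T> {
    private final int batchSize;
    private final Consumer<List<T>> batchWriter;
    private final List<T> buffer = new ArrayList<>();

    BatchWriteBuffer(int batchSize, Consumer<List<T>> batchWriter) {
        this.batchSize = batchSize;
        this.batchWriter = batchWriter;
    }

    synchronized void add(T record) {
        buffer.add(record);
        if (buffer.size() >= batchSize) {
            flush();
        }
    }

    // Writes whatever is buffered; also call it from a timer or on shutdown
    synchronized void flush() {
        if (buffer.isEmpty()) {
            return;
        }
        batchWriter.accept(new ArrayList<>(buffer)); // hand over a copy
        buffer.clear();
    }
}
```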

Degradation

Service degradation means that when server load rises sharply or dependent services fail, some services and pages are strategically downgraded according to the current business situation and traffic, or failures are answered with a lightweight response, in order to free server resources and keep core tasks running normally. Degradation usually defines several levels, and different handling is applied at different exception levels.

By service mode: you can reject the service, delay the service, or sometimes serve randomly.

By service scope: you can cut off a single function or some modules.

In short, service degradation needs different strategies for different business requirements. The main idea is that a degraded service is better than no service at all.
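A minimal sketch of two degradation styles (switching a function off, and falling back when a dependency fails) might look like this. `Degradable` is a hypothetical helper, not a specific framework's API; production systems usually drive the switch from a configuration center and add timeouts.

```java
import java.util.function.Supplier;

// Hypothetical degradation wrapper around one non-core function
class Degradable<T> {
    private final Supplier<T> primary;   // the normal, full-featured call
    private final Supplier<T> fallback;  // lightweight response, e.g. a default value
    private volatile boolean degraded = false;

    Degradable(Supplier<T> primary, Supplier<T> fallback) {
        this.primary = primary;
        this.fallback = fallback;
    }

    // Manual switch: cut this function off entirely under heavy load
    void setDegraded(boolean degraded) {
        this.degraded = degraded;
    }

    T call() {
        if (degraded) {
            return fallback.get(); // function switched off: skip the real call
        }
        try {
            return primary.get();
        } catch (RuntimeException e) {
            return fallback.get(); // dependency failed: respond with the fallback instead
        }
    }
}
```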

Rate limiting

Rate limiting can be considered a kind of service degradation. It limits the system's input and output traffic in order to protect the system. Because a system's throughput can generally be measured, once traffic reaches the threshold that must be enforced, measures are taken to limit it and keep the system running stably, for example delaying processing, rejecting requests, or rejecting part of them.

Rate limiting scenarios

Rate limiting is typically applied in three places:

Proxy layer

For example, SLB, Nginx, and business gateways all support rate limiting, usually based on the number of connections (concurrency) or the number of requests. The limiting dimension is usually the IP address, resource location, user ID, and so on. Furthermore, the limiting policy (baseline threshold) can be adjusted dynamically according to the component's own load.
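To illustrate limiting by a dimension such as IP address or user, here is a hypothetical per-key fixed-window limiter. `PerKeyLimiter` is an assumed name, not part of SLB or Nginx, and the clock is passed in as `nowMillis` so the behavior is easy to test.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical per-key limiter: each key (client IP, user ID, ...) gets its own
// fixed-window counter, so one noisy client cannot use up everyone else's quota
class PerKeyLimiter {
    private final int maxPerWindow;
    private final long windowMillis;
    // key -> {window start time, requests counted in that window}
    private final Map<String, long[]> windows = new HashMap<>();

    PerKeyLimiter(int maxPerWindow, long windowMillis) {
        this.maxPerWindow = maxPerWindow;
        this.windowMillis = windowMillis;
    }

    synchronized boolean allow(String key, long nowMillis) {
        long[] w = windows.computeIfAbsent(key, k -> new long[] {nowMillis, 0});
        if (nowMillis - w[0] >= windowMillis) {
            // This key's window has expired: start a new one
            w[0] = nowMillis;
            w[1] = 0;
        }
        if (w[1] < maxPerWindow) {
            w[1]++;
            return true;
        }
        return false;
    }
}
```

This sketch never evicts old keys, so a real implementation would need expiry (for example a cache with a TTL) to bound memory.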

Service caller

Rate limiting on the service caller side, also known as local rate limiting, limits the rate at which a remote service is called. When the threshold is exceeded, the call can be blocked or rejected directly. The caller side plays a supporting role in rate limiting.

Service receiver

Basically the same as above: when traffic exceeds the system's capacity, requests are rejected directly. This is usually done to ensure the reliability of the application itself, and it is the main side of rate limiting; when people talk about rate limiting, they usually mean this side. This article mainly discusses rate limiting with Guava's RateLimiter; other tools work similarly.

Rate limiting methods

The three most commonly used rate limiting methods are counter-based rate limiting, the leaky bucket algorithm, and the token bucket algorithm.

Counter-based rate limiting

We can use an atomic counter (AtomicInteger) or a semaphore (Semaphore) to implement simple rate limiting.

```java
import java.util.concurrent.atomic.AtomicInteger;

public class CurrentLimiting {

    // Atomic counter: number of requests in the current window
    private final AtomicInteger atomicInteger = new AtomicInteger(0);

    // Start time of the current window
    private long startTime = System.currentTimeMillis();

    public static void main(String[] args) {
        CurrentLimiting c = new CurrentLimiting();
        // Maximum number of requests allowed in one window
        int maxCount = 10;
        // Window length, in seconds
        long interval = 60;
        System.out.println(c.limit(maxCount, interval));
    }

    // Returns true if the request is allowed, false if it is rejected.
    // synchronized so that the window reset and the count check happen atomically.
    public synchronized boolean limit(int maxCount, long interval) {
        long now = System.currentTimeMillis();
        // First request, or the interval has elapsed: start a new window from now
        if (atomicInteger.get() == 0 || now - startTime > interval * 1000) {
            startTime = now;
            atomicInteger.set(0);
        }
        // Count this request and check whether the limit for this window is exceeded
        return atomicInteger.incrementAndGet() <= maxCount;
    }
}
```

The above is only a single-machine implementation. To implement distributed rate limiting, you can use Redis.
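Semaphore is mentioned alongside AtomicInteger, but the counter example uses only AtomicInteger. A semaphore limits how many requests are in progress at the same time rather than how many arrive per window. The wrapper below is a hypothetical sketch (`SemaphoreLimiter` is an assumed name) built on the real `java.util.concurrent.Semaphore` methods `tryAcquire()`, `tryAcquire(long, TimeUnit)`, and `release()`; it shows both rejecting immediately and waiting briefly.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

// Caps how many requests run concurrently (not how many arrive per second)
class SemaphoreLimiter {
    private final Semaphore permits;

    SemaphoreLimiter(int maxConcurrent) {
        this.permits = new Semaphore(maxConcurrent);
    }

    // Reject immediately if all permits are in use
    boolean tryEnter() {
        return permits.tryAcquire();
    }

    // Block for at most timeoutMillis waiting for a permit, then give up
    boolean tryEnter(long timeoutMillis) throws InterruptedException {
        return permits.tryAcquire(timeoutMillis, TimeUnit.MILLISECONDS);
    }

    // Must be called once for every successful tryEnter, typically in a finally block
    void exit() {
        permits.release();
    }

    // Run the task if a permit is free, otherwise return the "rejected" value
    <T> T callOrReject(Supplier<T> task, T rejected) {
        if (!tryEnter()) {
            return rejected;
        }
        try {
            return task.get();
        } finally {
            exit();
        }
    }
}
```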

Counter-based rate limiting has the following problems:

- First, suppose requests reach the limit in the first second of the window. If the limit is set small, traffic fluctuates heavily; if it is set large, traffic concentrated around the boundary between two windows can hit the server with up to twice the limit in a short period (the critical-point problem).

- Second, if the limit is reached in the first second of a time window, every request arriving during the rest of that window is rejected.
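The boundary (critical-point) problem can be reproduced with a small fixed-window counter. In this hypothetical sketch the clock is passed in and windows are aligned to multiples of the window length; with a limit of 10 requests per minute, a burst just before the minute boundary and another just after it both pass.

```java
// Hypothetical fixed-window counter with an injected clock, used to show the boundary problem
class FixedWindowCounter {
    private final int maxCount;
    private final long windowMillis;
    private long windowStart = 0;
    private int count = 0;

    FixedWindowCounter(int maxCount, long windowMillis) {
        this.maxCount = maxCount;
        this.windowMillis = windowMillis;
    }

    synchronized boolean tryAcquire(long nowMillis) {
        // Windows are aligned to multiples of windowMillis (e.g. each whole minute)
        long start = nowMillis - nowMillis % windowMillis;
        if (start != windowStart) {
            windowStart = start;
            count = 0;
        }
        if (count < maxCount) {
            count++;
            return true;
        }
        return false;
    }
}
```

With `new FixedWindowCounter(10, 60_000)`, ten requests at 59,900 ms and ten more at 60,100 ms all pass: twenty requests within 200 ms, twice the nominal limit.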

Every kind of rate limiter has its disadvantages, but each also has its own use cases. For example, to limit the number of requests or calls to an interface or service per minute or per day, a counter works well. WeChat's access_token interface, with a daily limit of 2000 calls, is a good example.

To overcome the defects of counter-based rate limiting, use the leaky bucket or token bucket algorithm to smooth the traffic.

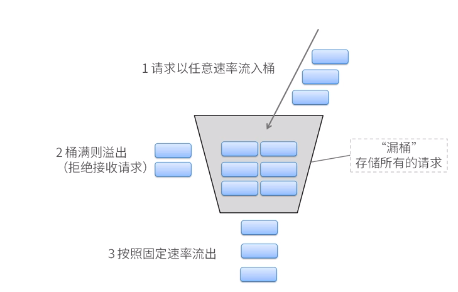

Leaky bucket algorithm

With the counter method described above, for example, only 10 requests are allowed in 60 seconds. If requests reach the limit in the first second, all requests in the remaining 59 seconds must be rejected. That is the first problem. The second problem is that traffic surges in the first second of every minute and stays flat for the remaining 59 seconds, producing traffic spikes. The leaky bucket algorithm solves this problem.

The leaky bucket algorithm is modeled on an everyday funnel, so think of it as a funnel. No matter how large the inflow is, that is, no matter how many requests arrive, water flows out at a constant rate. When the inflow rate exceeds the outflow rate, the funnel gradually fills up, and requests are discarded once it is full; when the inflow rate is lower than the outflow rate, the funnel never fills up and water keeps flowing out.

The leaky bucket algorithm works as follows:

- A leaky bucket with a fixed capacity lets water drops flow out at a constant, fixed rate;

- If the bucket is empty, no water drops flow out;

- Water can flow into the bucket at any rate;

- If incoming water exceeds the bucket's capacity, the excess overflows (is discarded), and the bucket's capacity stays unchanged.

Code implementation

```java
import java.util.concurrent.atomic.AtomicInteger;

public class LeakyBucket1 {

    // Bucket capacity
    private final int capacity = 100;

    // Amount of water left in the bucket (empty at initialization)
    private final AtomicInteger water = new AtomicInteger(0);

    // Leak rate: number of water drops that flow out per second
    private final int leakRate;

    // Time from which the next leak is calculated
    private long leakTimeStamp;

    public LeakyBucket1(int leakRate) {
        this.leakRate = leakRate;
    }

    // synchronized so that leaking and adding water happen atomically
    public synchronized boolean acquire() {
        long now = System.currentTimeMillis();
        // Leak first: whole drops that have flowed out since leakTimeStamp
        long leaked = (now - leakTimeStamp) * leakRate / 1000;
        if (leaked >= water.get()) {
            // Bucket is (or has become) empty: it starts leaking again from now
            water.set(0);
            leakTimeStamp = now;
        } else if (leaked > 0) {
            water.addAndGet((int) -leaked);
            // Advance only by the time those drops took, so fractions of a second
            // are not lost when requests arrive more often than once per drop
            leakTimeStamp += leaked * 1000 / leakRate;
        }
        // Try adding water if the bucket is not full
        if (water.get() < capacity) {
            water.incrementAndGet();
            return true;
        }
        // Water full: refuse to add water
        return false;
    }
}
```

```java
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class LeakyBucketController {

    // Leaky bucket: water drops leak out at 1 drop per second
    private final LeakyBucket1 leakyBucket = new LeakyBucket1(1);

    // Rate limiting with the leaky bucket
    @RequestMapping("/searchCustomerInfoByLeakyBucket")
    public Object searchCustomerInfoByLeakyBucket() {
        // 1. Rate limiting check
        boolean acquire = leakyBucket.acquire();
        if (!acquire) {
            System.out.println("Try again later!");
            return "Try again later!";
        }
        // 2. Not rate-limited: call the query interface directly
        System.out.println("Call interface query directly");
        return "";
    }
}
```

Defects of the leaky bucket algorithm:

- It is inefficient for bursty traffic. Because the leak rate is a fixed parameter, even when there is no resource contention (no congestion), the leaky bucket cannot let a burst go out at the full available rate. For example, suppose the leak rate is 2 requests per second and there are 10 servers, each able to handle one request per second. When burst traffic arrives, only two requests are released per second, so only two servers are working while the other eight sit idle, which wastes resources.

Applications of the leaky bucket algorithm:

- The main scenario is calling a third-party system that has its own rate limit or no protection mechanism at all. Our call rate must not exceed its limit, so we have to control it on the calling side: even when our traffic bursts, it must be throttled, because the consumption capacity is determined by the third party.
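When calling a third party, the leaky bucket is often used as a queue: requests are accepted as long as there is room, but they leave at a constant rate. The sketch below computes a send time for each request instead of actually sleeping, so it is deterministic; `LeakyBucketScheduler` is a hypothetical name, and the caller is assumed to wait until the returned time before making the call.

```java
// Hypothetical leaky bucket used as a queue in front of a third-party API.
// Accepted requests get send times spaced exactly intervalMillis apart, so any burst
// leaves at a constant rate; requests are rejected only when the bucket is full.
class LeakyBucketScheduler {
    private final long intervalMillis; // time between two outgoing calls (1000 / calls per second)
    private final int capacity;        // how many requests may wait in the bucket
    private long lastSendTime;
    private boolean hasScheduled = false;

    LeakyBucketScheduler(long intervalMillis, int capacity) {
        this.intervalMillis = intervalMillis;
        this.capacity = capacity;
    }

    // Returns the time at which the request may be sent, or -1 if the bucket is full
    synchronized long schedule(long nowMillis) {
        long sendTime = hasScheduled
                ? Math.max(nowMillis, lastSendTime + intervalMillis)
                : nowMillis;
        // Roughly how many accepted requests are still ahead of this one
        long waiting = (sendTime - nowMillis) / intervalMillis;
        if (waiting >= capacity) {
            return -1; // overflow: this drop is discarded
        }
        lastSendTime = sendTime;
        hasScheduled = true;
        return sendTime;
    }
}
```

With a 500 ms interval and capacity 3, four requests arriving at time 0 get send times 0, 500, and 1000, and the fourth is rejected.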

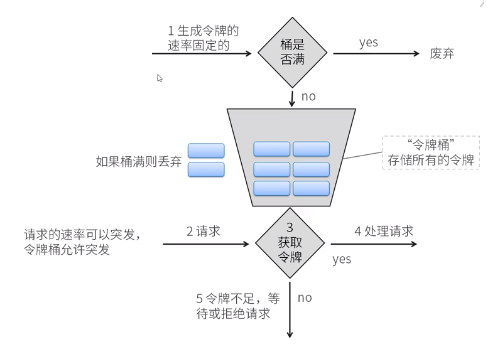

Token Bucket

The token bucket algorithm has the same effect as the leaky bucket but works in the opposite direction, and is arguably easier to understand. As time passes, the system adds tokens to the bucket at a constant interval of 1/QPS (if QPS = 100, the interval is 10 ms); imagine a faucet constantly adding water instead of a leak draining it. If the bucket is full, no more tokens are added. Each new request takes one token; if no token is available, the request is blocked or rejected.
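The description above can be turned into a minimal token bucket. This is a sketch under stated assumptions: the bucket starts full, tokens are refilled lazily from the elapsed time on each call rather than by a background thread, and the current time is passed in for testability. It rejects rather than blocks when no token is available; `TokenBucket` is a hypothetical name.

```java
// Minimal token bucket: tokens accrue continuously at ratePerSecond up to capacity;
// each request takes one token or is rejected
class TokenBucket {
    private final double capacity;
    private final double ratePerMilli;
    private double tokens;
    private long lastRefill;

    TokenBucket(double capacity, double ratePerSecond, long nowMillis) {
        this.capacity = capacity;
        this.ratePerMilli = ratePerSecond / 1000.0;
        this.tokens = capacity; // assumption: the bucket starts full
        this.lastRefill = nowMillis;
    }

    synchronized boolean tryAcquire(long nowMillis) {
        // Lazily add the tokens produced since the last call, never beyond capacity
        long elapsed = Math.max(0, nowMillis - lastRefill);
        tokens = Math.min(capacity, tokens + elapsed * ratePerMilli);
        lastRefill = Math.max(lastRefill, nowMillis);
        if (tokens >= 1) {
            tokens -= 1;
            return true;
        }
        return false; // no token: reject (a blocking variant would wait instead)
    }
}
```

Because up to `capacity` tokens can pile up while traffic is quiet, a burst of that size passes at once, which is exactly what the leaky bucket does not allow.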

Guava RateLimiter: a handy rate limiting tool

Google's open-source toolkit Guava provides RateLimiter, a rate limiting utility class based on the token bucket algorithm that is very easy to use. RateLimiter is also thread-safe, so it can be used directly in concurrent environments without additional locking or synchronization.

RateLimiter provides two implementations of the token bucket algorithm: smooth bursty limiting (SmoothBursty) and smooth warm-up limiting (SmoothWarmingUp).

Smooth bursty rate limiting

This limiter supports burst traffic. While the limiter is not being used, it stores some tokens for later bursts, so that when a burst arrives the resources can be used more quickly and fully. The key point is that tokens accumulate while the limiter is idle, and when a sudden burst arrives the accumulated tokens can be consumed without any waiting. It is like a person who has rested after a run: when they start again, they can go faster.
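The wait times printed in the SmoothBursty examples of this section can be reproduced with a simplified model. It assumes two behaviors of Guava's SmoothBursty: at most one second's worth of permits is stored, and a request is granted immediately even if it needs more permits than are available, with the shortfall charged to the next request. `BurstyModel` is a hypothetical class, not Guava code, and time is passed in as seconds from creation.

```java
// Simplified, hypothetical model of a smooth-bursty token bucket (not Guava's code)
class BurstyModel {
    private final double intervalSec;   // seconds per permit (1 / rate)
    private final double maxPermits;    // one second's worth of permits
    private double storedPermits = 0;   // nothing is stored when the limiter is created
    private double nextFreeSec = 0;     // earliest time the next request can be served

    BurstyModel(double permitsPerSecond) {
        this.intervalSec = 1.0 / permitsPerSecond;
        this.maxPermits = permitsPerSecond;
    }

    // Returns how many seconds a caller arriving at nowSec waits for n permits
    double acquire(int n, double nowSec) {
        if (nowSec > nextFreeSec) {
            // The limiter was idle: bank permits for the idle time, up to maxPermits
            storedPermits = Math.min(maxPermits, storedPermits + (nowSec - nextFreeSec) / intervalSec);
            nextFreeSec = nowSec;
        }
        // This caller only waits for the debt left by earlier requests
        double wait = nextFreeSec - nowSec;
        double fromStore = Math.min(n, storedPermits);
        double fresh = n - fromStore;
        storedPermits -= fromStore;
        // Stored permits are free; fresh permits push the next caller's start time back
        nextFreeSec += fresh * intervalSec;
        return wait;
    }
}
```

For a rate of 5, a caller that waits the returned time before its next request sees 0.0, 1.0, 0.2, 0.2 for `acquire(5)` followed by three `acquire(1)` calls, matching the lag effect in the printed results.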

```java
import com.google.common.util.concurrent.RateLimiter;

public class SmoothBurstyDemo {

    /**
     * Five tokens are generated per second, i.e. roughly one every 0.2 seconds, so tokens
     * are issued at a fixed rate and the output is smooth, without fluctuation.
     *
     * Print results:
     * get 1 tokens:0.0s
     * get 1 tokens:0.199556s
     * get 1 tokens:0.187896s
     * get 1 tokens:0.199865s
     * get 1 tokens:0.199369s
     * get 1 tokens:0.200024s
     * get 1 tokens:0.19999s
     * get 1 tokens:0.199315s
     * get 1 tokens:0.200035s
     */
    public void testSmothBursty1() {
        // 5 permits per second
        RateLimiter r = RateLimiter.create(5);
        while (true) {
            // acquire() obtains one token (called a "permit" in the source code). If a permit is
            // available it returns at once; otherwise it blocks until one can be granted, which at
            // a steady rate is about 1/QPS seconds. It returns the time spent sleeping, in seconds.
            System.out.println("get 1 tokens:" + r.acquire() + "s");
        }
    }

    /**
     * Supports bursts.
     * RateLimiter uses a token bucket, so unused tokens accumulate. If tokens are requested
     * infrequently, requests do not wait and get tokens directly.
     *
     * Print results:
     * get 1 tokens:0.0s
     * get 1 tokens:0.0s
     * get 1 tokens:0.0s
     * get 1 tokens:0.0s
     * end
     * get 1 tokens:0.499305s
     * get 1 tokens:0.0s
     * get 1 tokens:0.0s
     * get 1 tokens:0.0s
     * end
     */
    public void testSmothBursty2() {
        RateLimiter r = RateLimiter.create(2);
        while (true) {
            System.out.println("get 1 tokens:" + r.acquire(1) + "s");
            try {
                // Stay idle for 2 seconds so that tokens accumulate
                Thread.sleep(2000);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
            System.out.println("get 1 tokens:" + r.acquire(1) + "s");
            System.out.println("get 1 tokens:" + r.acquire(1) + "s");
            System.out.println("get 1 tokens:" + r.acquire(1) + "s");
            System.out.println("end");
        }
    }

    /**
     * Supports lag (deferred) processing.
     * Because tokens can accumulate, RateLimiter can handle burst traffic. Here a single
     * request asks for five tokens at once and is served immediately, even on the first call
     * when no tokens have been stored yet.
     * When there are not enough tokens, RateLimiter defers the cost: the waiting time the
     * current request would have needed is paid by the next request, which waits in its place.
     *
     * Results printing:
     * get 5 tokens:0.0s
     * get 1 tokens:0.999468s ---- Lag effect: waits for the previous request
     * get 1 tokens:0.196924s
     * get 1 tokens:0.200056s
     * end
     * get 5 tokens:0.199583s
     * get 1 tokens:0.999156s ---- Lag effect: waits for the previous request
     * get 1 tokens:0.199329s
     * get 1 tokens:0.199907s
     * end
     */
    public void testSmothBursty3() {
        RateLimiter r = RateLimiter.create(5);
        while (true) {
            System.out.println("get 5 tokens:" + r.acquire(5) + "s");
            System.out.println("get 1 tokens:" + r.acquire(1) + "s");
            System.out.println("get 1 tokens:" + r.acquire(1) + "s");
            System.out.println("get 1 tokens:" + r.acquire(1) + "s");
            System.out.println("end");
        }
    }
}
```

Smooth warm-up rate limiting

This is a smooth rate limiter with a warm-up period. After it starts, there is a warm-up period during which the issuing rate gradually increases to the configured rate. That is, when burst traffic arrives, the maximum rate is not reached immediately; it rises gradually over the specified warm-up time until it finally reaches the threshold.

In contrast to SmoothBursty, its design philosophy is to use resources slowly and gradually, in a controlled way, when sudden traffic arrives (up to the highest rate), and to hold the rate steady once traffic is stable. For example, `RateLimiter.create(2, 3, TimeUnit.SECONDS)` creates a limiter with an average rate of 2 tokens per second and a 3-second warm-up period. Because of the warm-up, it does not issue one token every 0.5 seconds from the start; instead, the interval between tokens starts longer and shrinks along a smooth linear slope, so the rate keeps rising until it reaches the configured rate within 3 seconds, after which tokens are issued at that fixed rate. This suits systems that have just started and need a little time to "warm up".
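The waits printed by the warm-up example can be checked with a short calculation. The sketch below assumes the formulas described in Guava's SmoothWarmingUp source: a cold factor of 3, warm-up ending when stored permits fall to `0.5 * warmupPeriod / stableInterval`, and an interval that rises linearly above that point. It models back-to-back single-permit requests on a cold limiter with no idle time in between; `WarmUpModel` is a hypothetical helper, not Guava code.

```java
// Hypothetical helper reproducing the warm-up cost curve (assumes cold factor 3)
class WarmUpModel {
    static double[] costs(double permitsPerSecond, double warmupSec, int count) {
        double stable = 1.0 / permitsPerSecond;          // interval once warmed up
        double cold = stable * 3.0;                       // interval when completely cold
        double threshold = 0.5 * warmupSec / stable;      // stored permits where the slope starts
        double maxPermits = threshold + 2.0 * warmupSec / (stable + cold);
        double slope = (cold - stable) / (maxPermits - threshold);
        double stored = maxPermits;                       // a new limiter starts cold (full)
        double[] result = new double[count];
        for (int i = 0; i < count; i++) {
            // Portion of this permit taken from the sloped region above the threshold
            double above = Math.min(1.0, Math.max(0.0, stored - threshold));
            double top = stable + (stored - threshold) * slope;
            double bottom = stable + (stored - above - threshold) * slope;
            // Area under the interval curve: trapezoid above the threshold, flat below it
            result[i] = above * (top + bottom) / 2 + (1.0 - above) * stable;
            stored = Math.max(0.0, stored - 1.0);
        }
        return result;
    }
}
```

For 2 permits per second and a 3-second warm-up, the costs come out to about 1.33 s, 1.0 s, 0.67 s, and then 0.5 s per permit. Because RateLimiter makes the next request pay for the current one, these costs appear in the printed output shifted by one request, after the initial 0.0s.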

```java
import com.google.common.util.concurrent.RateLimiter;
import java.util.concurrent.TimeUnit;

public class SmoothWarmingUpDemo {

    /**
     * Average rate of 2 tokens per second with a 3-second warm-up period.
     *
     * Print results:
     * get 1 tokens: 0.0s
     * get 1 tokens: 1.332745s
     * get 1 tokens: 0.997318s
     * get 1 tokens: 0.666174s --- The three waits above add up to about 3 seconds, the warm-up period
     * end
     * get 1 tokens: 0.5005s --- Normal rate: one token every 0.5 seconds
     * get 1 tokens: 0.499127s
     * get 1 tokens: 0.499298s
     * get 1 tokens: 0.500019s
     * end
     */
    public void testSmoothwarmingUp() {
        RateLimiter r = RateLimiter.create(2, 3, TimeUnit.SECONDS);
        while (true) {
            System.out.println("get 1 tokens: " + r.acquire(1) + "s");
            System.out.println("get 1 tokens: " + r.acquire(1) + "s");
            System.out.println("get 1 tokens: " + r.acquire(1) + "s");
            System.out.println("get 1 tokens: " + r.acquire(1) + "s");
            System.out.println("end");
        }
    }
}
```

Applications of token bucket rate limiting:

- The token bucket protects our own system. If our interface is called by others, we can limit how often callers invoke it so that our service is not overwhelmed. When traffic bursts, the tokens accumulated earlier let the actual processing rate briefly exceed the configured rate.

Differences between the leaky bucket and the token bucket:

- The leaky bucket enforces a fixed data transmission rate.

- The token bucket limits the average data rate while still allowing burst traffic.

Summary

In a word: if you want to keep your own system from being overwhelmed, use the token bucket; if you want to make sure you do not overwhelm someone else's system (for example, a third-party service you call), use the leaky bucket.

Guava's RateLimiter only works for rate limiting on a single machine. In distributed Internet projects, other middleware such as Redis can be used to implement rate limiting.

Redis + Lua can implement distributed rate limiting. For details, see this expert's article: Redis + Lua current limiting implementation