Table of Contents

Implementation of ConcurrentHashMap

Conversion to Red-Black Trees

HashMap is a collection we use very frequently, but it is not thread-safe. Concurrent put operations, which may trigger a resize, can corrupt its internal linked lists. In JDK 1.7 and earlier this can even create a cycle in a bucket's list, so later operations loop forever and drive CPU utilization close to 100%. The JDK offers two thread-safe alternatives, Hashtable and Collections.synchronizedMap(hashMap). Both guard every read and every write with a single exclusive lock, so while one thread is using the map, all other threads must wait, even if they only want to read. Throughput and performance are therefore low. To solve this, Doug Lea provided a high-performance, thread-safe HashMap: ConcurrentHashMap.

Implementation of ConcurrentHashMap

ConcurrentHashMap belongs to the java.util.concurrent package and supports efficient concurrent operations. Under contention it performs far better than Hashtable.

Before version 1.8, ConcurrentHashMap used segment locks to make locking finer-grained. Version 1.8 abandons this design and uses CAS plus synchronized to keep concurrent updates safe. The underlying storage is an array of bins, and each bin holds either a linked list or a red-black tree.

For the differences between 1.7 and 1.8, see Zhanwolf's blog post on the different implementations of ConcurrentHashMap in 1.7 and 1.8: http://www.jianshu.com/p/e694f1e868ec

The following sections give a comprehensive look at how ConcurrentHashMap is implemented in 1.8:

- Important concepts

- Important internal classes

- Initialization of ConcurrentHashMap

- put operation

- get operation

- size operation

- Capacity expansion

- Red-Black Tree Conversion

Important concepts

ConcurrentHashMap defines the following constants:

```java
// Maximum capacity: 2^30 = 1073741824
private static final int MAXIMUM_CAPACITY = 1 << 30;
// Default initial capacity; must be a power of 2
private static final int DEFAULT_CAPACITY = 16;
// Largest possible (non-power-of-2) array size, used by toArray and related methods
static final int MAX_ARRAY_SIZE = Integer.MAX_VALUE - 8;
// Default concurrency level; unused in 1.8, kept for compatibility with earlier versions
private static final int DEFAULT_CONCURRENCY_LEVEL = 16;
// Load factor; a constructor argument only affects the initial capacity,
// resize thresholds are always computed as n - (n >>> 2), i.e. 0.75 * n
private static final float LOAD_FACTOR = 0.75f;
// A list bin is converted to a red-black tree once putVal counts at least this many nodes
static final int TREEIFY_THRESHOLD = 8;
// During transfer, lc and hc count the TreeNodes that go to the low and high halves of a
// split tree bin; a half with <= UNTREEIFY_THRESHOLD nodes is turned back into a list (untreeify)
static final int UNTREEIFY_THRESHOLD = 6;
// Smallest table length for which bins may be treeified; below it, the table is resized instead
static final int MIN_TREEIFY_CAPACITY = 64;
// Minimum number of bins a thread claims per transfer step
private static final int MIN_TRANSFER_STRIDE = 16;
// Number of bits of sizeCtl used for the resize stamp
private static int RESIZE_STAMP_BITS = 16;
// 2^16 - 1: maximum number of threads that can help resize
private static final int MAX_RESIZERS = (1 << (32 - RESIZE_STAMP_BITS)) - 1;
// 32 - 16 = 16: bit shift of the resize stamp recorded in sizeCtl
private static final int RESIZE_STAMP_SHIFT = 32 - RESIZE_STAMP_BITS;
// Hash value of forwarding nodes
static final int MOVED = -1;
// Hash value of TreeBin nodes (roots of tree bins)
static final int TREEBIN = -2;
// Hash value of ReservationNode (placeholder used by computeIfAbsent and compute)
static final int RESERVED = -3;
// Number of available processors
static final int NCPU = Runtime.getRuntime().availableProcessors();
```

These constants are mostly self-explanatory, so we will not elaborate on them. Next are some important concepts in ConcurrentHashMap.

- table: The array that stores Node data. It is null by default and is created lazily on the first insertion, with a default length of 16. Its length is always a power of 2, and each resize doubles it.

- nextTable: The new array used during a resize, twice the length of table. It is non-null only while a resize is in progress.

- Node: The node class that holds a key-value pair.

- ForwardingNode: A special Node with hash -1 (MOVED) that holds a reference to nextTable. It exists only during a resize: it is placed in the old table as a placeholder to indicate that the bin was empty or has already been moved to nextTable.

- sizeCtl: A control field for table initialization and resizing. Its meaning depends on its value:

  - Negative: initialization or a resize is in progress.

    - -1: the table is being initialized.

    - Any other negative value: a resize is running. The JDK 8 Javadoc describes it as -(1 + the number of active resizing threads), so -N means N - 1 threads are resizing. More precisely, the high 16 bits hold a resize stamp derived from the table length, and the low 16 bits hold 1 + the number of active resizing threads.

  - Zero or positive: before the table is initialized, the initial table size to use (0 means the default, 16); after initialization, the element count at which the next resize is triggered.
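The resize-time encoding can be checked with a little arithmetic. The sketch below uses a hypothetical helper class, `SizeCtlDemo`. Its `resizeStamp` copies the formula of the private JDK 8 helper of the same name. The other methods only restate the updates that addCount, helpTransfer, and transfer perform on sizeCtl: `(rs << RESIZE_STAMP_SHIFT) + 2` to start, `+ 1` when a helper joins, and `- 1` when a thread leaves.

```java
/** Hypothetical helper that replays the JDK 8 sizeCtl arithmetic used during a resize. */
final class SizeCtlDemo {
    static final int RESIZE_STAMP_BITS = 16;
    static final int RESIZE_STAMP_SHIFT = 32 - RESIZE_STAMP_BITS;          // 16
    static final int MAX_RESIZERS = (1 << (32 - RESIZE_STAMP_BITS)) - 1;   // 65535

    // Stamp for a table of length n; bit 15 is always set, so (stamp << 16) is negative.
    static int resizeStamp(int n) {
        return Integer.numberOfLeadingZeros(n) | (1 << (RESIZE_STAMP_BITS - 1));
    }

    // Value written by the first thread that starts resizing a table of length n.
    static int startResize(int n) {
        return (resizeStamp(n) << RESIZE_STAMP_SHIFT) + 2;
    }

    // The low 16 bits hold 1 + the number of threads currently copying bins.
    static int activeResizers(int sc) {
        return (sc & MAX_RESIZERS) - 1;
    }

    // The test transfer() applies to the value read just before its own "sc - 1":
    // only the last remaining resizer sees (sc - 2) == stamp << 16.
    static boolean isLastResizer(int sc, int n) {
        return (sc - 2) == resizeStamp(n) << RESIZE_STAMP_SHIFT;
    }

    public static void main(String[] args) {
        int n = 16;
        int sc = startResize(n);
        System.out.println(sc < 0);                 // true: negative means "resizing"
        System.out.println(activeResizers(sc));     // 1
        sc += 1;                                    // a helper joins
        System.out.println(activeResizers(sc));     // 2
        System.out.println(isLastResizer(sc, n));   // false: another thread is still working
        sc -= 1;                                    // the helper leaves
        System.out.println(isLastResizer(sc, n));   // true: only one resizer remains
    }
}
```

The stamp in the high bits is what lets a thread tell whether a negative sizeCtl belongs to a resize of its own table length.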

Important internal classes

To implement ConcurrentHashMap, Doug Lea wrote many internal helper classes, such as Node, TreeNode, and TreeBin. Let's look at several of the most important ones.

Node

Node is the core internal class of ConcurrentHashMap: it holds a key-value pair, and all data inserted into ConcurrentHashMap is wrapped in Nodes. It is defined as follows:

```java
static class Node<K,V> implements Map.Entry<K,V> {
    final int hash;
    final K key;
    volatile V val;           // volatile guarantees visibility
    volatile Node<K,V> next;  // next node in the bin

    Node(int hash, K key, V val, Node<K,V> next) {
        this.hash = hash;
        this.key = key;
        this.val = val;
        this.next = next;
    }

    public final K getKey()       { return key; }
    public final V getValue()     { return val; }
    public final int hashCode()   { return key.hashCode() ^ val.hashCode(); }
    public final String toString(){ return key + "=" + val; }

    /** Modifying the value through setValue is not allowed */
    public final V setValue(V value) {
        throw new UnsupportedOperationException();
    }

    public final boolean equals(Object o) {
        Object k, v, u; Map.Entry<?,?> e;
        return ((o instanceof Map.Entry) &&
                (k = (e = (Map.Entry<?,?>)o).getKey()) != null &&
                (v = e.getValue()) != null &&
                (k == key || k.equals(key)) &&
                (v == (u = val) || v.equals(u)));
    }

    /** Supports map.get(); overridden in subclasses */
    Node<K,V> find(int h, Object k) {
        Node<K,V> e = this;
        if (k != null) {
            do {
                K ek;
                if (e.hash == h &&
                    ((ek = e.key) == k || (ek != null && k.equals(ek))))
                    return e;
            } while ((e = e.next) != null);
        }
        return null;
    }
}
```

In Node, the fields val and next are volatile. setValue is deliberately disabled: calling it throws UnsupportedOperationException, so the value cannot be modified through it directly. Finally, Node provides a find method that supports map.get().

TreeNode

In HashMap, each bucket is a linked list. In ConcurrentHashMap, a list that grows too long is converted to a red-black tree. The conversion is not direct: the list nodes are wrapped as TreeNodes and placed in a TreeBin object, and the TreeBin builds the red-black tree. TreeNode is therefore also a core class of ConcurrentHashMap. It is the tree node class, defined as follows:

```java
static final class TreeNode<K,V> extends Node<K,V> {
    TreeNode<K,V> parent;  // red-black tree links
    TreeNode<K,V> left;
    TreeNode<K,V> right;
    TreeNode<K,V> prev;    // needed to unlink next upon deletion
    boolean red;

    TreeNode(int hash, K key, V val, Node<K,V> next,
             TreeNode<K,V> parent) {
        super(hash, key, val, next);
        this.parent = parent;
    }

    Node<K,V> find(int h, Object k) {
        return findTreeNode(h, k, null);
    }

    // Find the node with hash h and key k in the subtree rooted at this node
    final TreeNode<K,V> findTreeNode(int h, Object k, Class<?> kc) {
        if (k != null) {
            TreeNode<K,V> p = this;
            do {
                int ph, dir; K pk; TreeNode<K,V> q;
                TreeNode<K,V> pl = p.left, pr = p.right;
                if ((ph = p.hash) > h)
                    p = pl;
                else if (ph < h)
                    p = pr;
                else if ((pk = p.key) == k || (pk != null && k.equals(pk)))
                    return p;
                else if (pl == null)
                    p = pr;
                else if (pr == null)
                    p = pl;
                else if ((kc != null ||
                          (kc = comparableClassFor(k)) != null) &&
                         (dir = compareComparables(kc, k, pk)) != 0)
                    p = (dir < 0) ? pl : pr;
                else if ((q = pr.findTreeNode(h, k, kc)) != null)
                    return q;
                else
                    p = pl;
            } while (p != null);
        }
        return null;
    }
}
```

The source shows that TreeNode extends Node and provides findTreeNode to look up the node whose hash is h and whose key is k.

TreeBin

TreeBin does not wrap key-value pairs itself. It wraps the TreeNodes when a linked list is converted to a red-black tree. In other words, the table slot of a ConcurrentHashMap tree bin holds a TreeBin, not a TreeNode. TreeBin also maintains a lightweight read-write lock in its lockState field (the WRITER, WAITER, and READER flags below). It encapsulates a set of methods, including putTreeVal, lockRoot, unlockRoot, removeTreeNode, balanceInsertion, and balanceDeletion. Because TreeBin's code is long, only the constructor is shown here. The constructor is where the red-black tree is built:

```java
static final class TreeBin<K,V> extends Node<K,V> {
    TreeNode<K,V> root;
    volatile TreeNode<K,V> first;
    volatile Thread waiter;
    volatile int lockState;
    // values for lockState
    static final int WRITER = 1; // set while holding write lock
    static final int WAITER = 2; // set when waiting for write lock
    static final int READER = 4; // increment value for setting read lock

    TreeBin(TreeNode<K,V> b) {
        super(TREEBIN, null, null, null);
        this.first = b;
        TreeNode<K,V> r = null;
        for (TreeNode<K,V> x = b, next; x != null; x = next) {
            next = (TreeNode<K,V>) x.next;
            x.left = x.right = null;
            if (r == null) {
                // the first node becomes the black root
                x.parent = null;
                x.red = false;
                r = x;
            } else {
                K k = x.key;
                int h = x.hash;
                Class<?> kc = null;
                // walk down from the root: order by hash, then Comparable, then tie-breaker
                for (TreeNode<K,V> p = r; ; ) {
                    int dir, ph;
                    K pk = p.key;
                    if ((ph = p.hash) > h)
                        dir = -1;
                    else if (ph < h)
                        dir = 1;
                    else if ((kc == null &&
                              (kc = comparableClassFor(k)) == null) ||
                             (dir = compareComparables(kc, k, pk)) == 0)
                        dir = tieBreakOrder(k, pk);
                    TreeNode<K,V> xp = p;
                    if ((p = (dir <= 0) ? p.left : p.right) == null) {
                        x.parent = xp;
                        if (dir <= 0)
                            xp.left = x;
                        else
                            xp.right = x;
                        r = balanceInsertion(r, x);  // restore red-black properties
                        break;
                    }
                }
            }
        }
        this.root = r;
        assert checkInvariants(root);
    }

    /** A lot of code is omitted */
}
```

Each node of the list is inserted into the tree in turn, and balanceInsertion rebalances the tree after every insertion.

ForwardingNode

ForwardingNode is a pure helper class that exists only while ConcurrentHashMap is resizing. It is just a marker node: it points to nextTable and provides a find method that searches nextTable. It also extends Node, with hash -1 (MOVED) and key, value, and next all null:

```java
static final class ForwardingNode<K,V> extends Node<K,V> {
    final Node<K,V>[] nextTable;

    ForwardingNode(Node<K,V>[] tab) {
        super(MOVED, null, null, null);
        this.nextTable = tab;
    }

    Node<K,V> find(int h, Object k) {
        // loop to avoid arbitrarily deep recursion on forwarding nodes
        outer: for (Node<K,V>[] tab = nextTable;;) {
            Node<K,V> e; int n;
            if (k == null || tab == null || (n = tab.length) == 0 ||
                (e = tabAt(tab, (n - 1) & h)) == null)
                return null;
            for (;;) {
                int eh; K ek;
                if ((eh = e.hash) == h &&
                    ((ek = e.key) == k || (ek != null && k.equals(ek))))
                    return e;
                if (eh < 0) {
                    if (e instanceof ForwardingNode) {
                        // the bin has moved again: continue in that node's nextTable
                        tab = ((ForwardingNode<K,V>)e).nextTable;
                        continue outer;
                    }
                    else
                        return e.find(h, k);  // TreeBin or ReservationNode
                }
                if ((e = e.next) == null)
                    return null;
            }
        }
    }
}
```

Constructor

ConcurrentHashMap provides several constructors:

```java
public ConcurrentHashMap() {
}

public ConcurrentHashMap(int initialCapacity) {
    if (initialCapacity < 0)
        throw new IllegalArgumentException();
    int cap = ((initialCapacity >= (MAXIMUM_CAPACITY >>> 1)) ?
               MAXIMUM_CAPACITY :
               tableSizeFor(initialCapacity + (initialCapacity >>> 1) + 1));
    this.sizeCtl = cap;
}

public ConcurrentHashMap(Map<? extends K, ? extends V> m) {
    this.sizeCtl = DEFAULT_CAPACITY;
    putAll(m);
}

public ConcurrentHashMap(int initialCapacity, float loadFactor) {
    this(initialCapacity, loadFactor, 1);
}

public ConcurrentHashMap(int initialCapacity,
                         float loadFactor, int concurrencyLevel) {
    if (!(loadFactor > 0.0f) || initialCapacity < 0 || concurrencyLevel <= 0)
        throw new IllegalArgumentException();
    if (initialCapacity < concurrencyLevel)   // Use at least as many bins
        initialCapacity = concurrencyLevel;   // as estimated threads
    long size = (long)(1.0 + (long)initialCapacity / loadFactor);
    int cap = (size >= (long)MAXIMUM_CAPACITY) ?
        MAXIMUM_CAPACITY : tableSizeFor((int)size);
    this.sizeCtl = cap;
}
```

Initialization: initTable()

Initialization of ConcurrentHashMap is implemented mainly by initTable(). As the constructors above show, the constructors do no real work beyond setting a few parameters, mainly sizeCtl. The table is actually created at insertion time, by operations such as put, merge, compute, computeIfAbsent, and computeIfPresent. The method is defined as follows:

```java
private final Node<K,V>[] initTable() {
    Node<K,V>[] tab; int sc;
    while ((tab = table) == null || tab.length == 0) {
        // sizeCtl < 0: another thread is initializing, so yield the CPU and spin
        if ((sc = sizeCtl) < 0)
            Thread.yield();
        // This thread wins the right to initialize: CAS sizeCtl to -1 to mark it
        else if (U.compareAndSwapInt(this, SIZECTL, sc, -1)) {
            try {
                // re-check: another thread may have finished initializing meanwhile
                if ((tab = table) == null || tab.length == 0) {
                    int n = (sc > 0) ? sc : DEFAULT_CAPACITY;
                    @SuppressWarnings("unchecked")
                    Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n];
                    table = tab = nt;
                    // threshold for the next resize: n - n/4, i.e. 0.75 * n
                    sc = n - (n >>> 2);
                }
            } finally {
                sizeCtl = sc;
            }
            break;
        }
    }
    return tab;
}
```

The key to initTable() is sizeCtl. It defaults to 0; if a capacity is passed to the constructor, it holds that capacity rounded up to a power of 2. If sizeCtl < 0, another thread is initializing the table, so the current thread yields and spins until the table appears. Otherwise the thread tries to CAS sizeCtl to -1, which keeps every other thread out while it creates the table. Finally, sizeCtl is set to 0.75 * n, the threshold for the next resize.
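The protocol above can be reproduced outside the JDK: spin while sizeCtl is negative, CAS it to -1, re-check, publish the array, then store the 0.75 * n threshold. `LazyTable` below is a hypothetical sketch. It uses an `AtomicInteger` in place of the Unsafe CAS on sizeCtl, and a plain `Object[]` in place of `Node[]`. Its one-argument constructor follows the JDK 8 constructor shown earlier, and `tableSizeFor` mirrors the JDK 8 helper of the same name, which is not listed above.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical lazily created table that follows the initTable() protocol. */
final class LazyTable {
    static final int MAXIMUM_CAPACITY = 1 << 30;
    static final int DEFAULT_CAPACITY = 16;

    private volatile Object[] table;
    // Stand-in for sizeCtl: 0 = default size, > 0 = initial size (before init)
    // or resize threshold (after init), -1 = initialization in progress.
    private final AtomicInteger sizeCtl = new AtomicInteger();

    LazyTable() { }

    // Same sizing rule as the JDK 8 ConcurrentHashMap(int) constructor.
    LazyTable(int initialCapacity) {
        if (initialCapacity < 0)
            throw new IllegalArgumentException();
        int cap = (initialCapacity >= (MAXIMUM_CAPACITY >>> 1))
                ? MAXIMUM_CAPACITY
                : tableSizeFor(initialCapacity + (initialCapacity >>> 1) + 1);
        sizeCtl.set(cap);
    }

    // Smallest power of two >= c, capped at MAXIMUM_CAPACITY.
    static int tableSizeFor(int c) {
        int n = c - 1;
        n |= n >>> 1; n |= n >>> 2; n |= n >>> 4; n |= n >>> 8; n |= n >>> 16;
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    Object[] initTable() {
        Object[] tab;
        while ((tab = table) == null || tab.length == 0) {
            int sc = sizeCtl.get();
            if (sc < 0) {
                Thread.yield();                          // another thread is initializing
            } else if (sizeCtl.compareAndSet(sc, -1)) {  // won the right to initialize
                try {
                    if ((tab = table) == null || tab.length == 0) {  // re-check
                        int n = (sc > 0) ? sc : DEFAULT_CAPACITY;
                        table = tab = new Object[n];
                        sc = n - (n >>> 2);              // next resize threshold, 0.75 * n
                    }
                } finally {
                    sizeCtl.set(sc);                     // publish the threshold (or restore)
                }
                break;
            }
        }
        return tab;
    }

    int sizeCtl() {
        return sizeCtl.get();
    }

    /** Races several threads on initTable() and returns how many distinct arrays they got. */
    static long distinctTablesSeen(LazyTable t, int threads) {
        Object[][] seen = new Object[threads][];
        List<Thread> workers = new ArrayList<>();
        for (int k = 0; k < threads; k++) {
            final int id = k;
            Thread w = new Thread(() -> seen[id] = t.initTable());
            workers.add(w);
            w.start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                throw new IllegalStateException(e);
            }
        }
        return Arrays.stream(seen).distinct().count();
    }

    public static void main(String[] args) {
        LazyTable t = new LazyTable(16);
        System.out.println(t.sizeCtl());               // 32 = tableSizeFor(16 + 8 + 1)
        System.out.println(distinctTablesSeen(t, 8));  // 1: all threads share one array
        System.out.println(t.sizeCtl());               // 24 = 32 - (32 >>> 2)
    }
}
```

Note that `new LazyTable(16)` ends up with 32 slots, not 16, because the one-argument constructor rounds up 16 + 8 + 1. The JDK 8 ConcurrentHashMap(int) constructor uses the same formula.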

put operation

put and get are the most commonly used operations of ConcurrentHashMap. Its put is not very different from HashMap's: the core idea is still to compute the node's position in the table from the hash value, insert directly if that position is empty, and otherwise insert into the list or tree. But ConcurrentHashMap must cope with multiple threads, which makes it much more complicated. Let's look at the source first and then analyze it step by step:

```java
public V put(K key, V value) {
    return putVal(key, value, false);
}

final V putVal(K key, V value, boolean onlyIfAbsent) {
    // Neither key nor value can be null
    if (key == null || value == null) throw new NullPointerException();
    // Compute the spread hash
    int hash = spread(key.hashCode());
    int binCount = 0;
    for (Node<K,V>[] tab = table;;) {
        Node<K,V> f; int n, i, fh;
        // Table not created yet: initialize it
        if (tab == null || (n = tab.length) == 0)
            tab = initTable();
        // No node at index i: insert with CAS, without locking
        else if ((f = tabAt(tab, i = (n - 1) & hash)) == null) {
            if (casTabAt(tab, i, null,
                         new Node<K,V>(hash, key, value, null)))
                break;                   // no lock when adding to empty bin
        }
        // Head is a ForwardingNode: a resize is in progress, help move bins first
        else if ((fh = f.hash) == MOVED)
            tab = helpTransfer(tab, f);
        else {
            V oldVal = null;
            // Lock only the head node of this bin; other bins stay available
            synchronized (f) {
                // Re-check that the head has not changed since it was read
                if (tabAt(tab, i) == f) {
                    // fh >= 0: a linked-list bin; walk it and insert at the tail
                    if (fh >= 0) {
                        binCount = 1;
                        for (Node<K,V> e = f;; ++binCount) {
                            K ek;
                            // Same hash and key: replace the value
                            if (e.hash == hash &&
                                ((ek = e.key) == key ||
                                 (ek != null && key.equals(ek)))) {
                                oldVal = e.val;
                                // putIfAbsent() passes onlyIfAbsent = true
                                if (!onlyIfAbsent)
                                    e.val = value;
                                break;
                            }
                            Node<K,V> pred = e;
                            // Reached the tail: append the new node
                            if ((e = e.next) == null) {
                                pred.next = new Node<K,V>(hash, key,
                                                          value, null);
                                break;
                            }
                        }
                    }
                    // TreeBin: insert through the red-black tree
                    else if (f instanceof TreeBin) {
                        Node<K,V> p;
                        binCount = 2;
                        if ((p = ((TreeBin<K,V>)f).putTreeVal(hash, key,
                                                              value)) != null) {
                            oldVal = p.val;
                            if (!onlyIfAbsent)
                                p.val = value;
                        }
                    }
                }
            }
            if (binCount != 0) {
                // binCount reached TREEIFY_THRESHOLD (8): convert the bin to a tree
                if (binCount >= TREEIFY_THRESHOLD)
                    treeifyBin(tab, i);
                if (oldVal != null)
                    return oldVal;
                break;
            }
        }
    }
    // size + 1, and check whether a resize is needed
    addCount(1L, binCount);
    return null;
}
```

Based on the source above, the whole put process is as follows:

- Neither the key nor the value of a ConcurrentHashMap may be null.

- Compute the hash with spread(), which XORs the high 16 bits into the low 16 bits and clears the sign bit:

```java
static final int spread(int h) {
    return (h ^ (h >>> 16)) & HASH_BITS;
}
```

- Loop over the table and insert the node:

  - If the table is empty, the ConcurrentHashMap has not been initialized yet, so initialize it with initTable().

  - Compute the bin index i = (n - 1) & hash and read the head node f. If the bin is empty, insert the new node with CAS; no lock is needed.

  - If fh = f.hash == -1 (MOVED), f is a ForwardingNode, meaning another thread is resizing. The current thread helps with the resize (helpTransfer) and then retries.

  - Otherwise, lock f. If fh >= 0, the bin is a linked list: traverse it, replace the value if the key already exists, or append a new node at the tail. If f is a TreeBin, update or add the node through the red-black tree.

  - If binCount reached TREEIFY_THRESHOLD (8), which for an append means the list now holds more than 8 nodes, call treeifyBin. It converts the list to a red-black tree, or resizes instead if the table has fewer than 64 bins.

- Call addCount to increase the ConcurrentHashMap's size by 1 (this may also trigger a resize).

Here the entire put operation has been completed.
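To see the locking scheme of putVal in isolation, here is a heavily simplified, hypothetical map, `MiniBinMap`, with a fixed-size table. An empty bin is filled with a CAS, and a non-empty bin is updated while holding the lock on its head node. get reads the volatile fields without locking. The sketch deliberately leaves out resizing, ForwardingNode, tree bins, and size counting, and it uses AtomicReferenceArray instead of Unsafe's tabAt/casTabAt.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;
import java.util.concurrent.atomic.AtomicReferenceArray;

/** Hypothetical fixed-capacity map: CAS into empty bins, lock only the bin head otherwise. */
final class MiniBinMap<K, V> {
    static final class Node<K, V> {
        final int hash;
        final K key;
        volatile V val;
        volatile Node<K, V> next;
        Node(int hash, K key, V val, Node<K, V> next) {
            this.hash = hash; this.key = key; this.val = val; this.next = next;
        }
    }

    private final AtomicReferenceArray<Node<K, V>> table;

    MiniBinMap(int powerOfTwoCapacity) {             // assumed to be a power of two
        table = new AtomicReferenceArray<>(powerOfTwoCapacity);
    }

    static int spread(int h) { return (h ^ (h >>> 16)) & 0x7fffffff; }

    V put(K key, V value) {
        Objects.requireNonNull(key);
        Objects.requireNonNull(value);                // nulls rejected, as in ConcurrentHashMap
        int hash = spread(key.hashCode());
        int i = (table.length() - 1) & hash;
        while (true) {
            Node<K, V> f = table.get(i);
            if (f == null) {
                // Empty bin: publish the first node with a CAS, no lock
                if (table.compareAndSet(i, null, new Node<>(hash, key, value, null)))
                    return null;
            } else {
                synchronized (f) {                    // lock only this bin's head
                    if (table.get(i) == f) {          // head unchanged since we read it
                        for (Node<K, V> e = f;; e = e.next) {
                            if (e.hash == hash && (e.key == key || key.equals(e.key))) {
                                V old = e.val;
                                e.val = value;        // existing key: replace the value
                                return old;
                            }
                            if (e.next == null) {     // reached the tail: append
                                e.next = new Node<>(hash, key, value, null);
                                return null;
                            }
                        }
                    }
                }
            }
            // CAS lost (or, in the real map, the head changed): retry like putVal's loop
        }
    }

    V get(Object key) {                               // lock-free read via volatile fields
        int hash = spread(key.hashCode());
        for (Node<K, V> e = table.get((table.length() - 1) & hash); e != null; e = e.next)
            if (e.hash == hash && (e.key == key || key.equals(e.key)))
                return e.val;
        return null;
    }

    /** Fills a map from several threads and counts how many keys can be read back. */
    static int concurrentFill(int threads, int perThread) {
        MiniBinMap<Integer, Integer> m = new MiniBinMap<>(64);
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            final int base = t * perThread;
            Thread w = new Thread(() -> {
                for (int k = base; k < base + perThread; k++) m.put(k, k);
            });
            workers.add(w);
            w.start();
        }
        for (Thread w : workers) {
            try { w.join(); } catch (InterruptedException e) { throw new IllegalStateException(e); }
        }
        int found = 0;
        for (int k = 0; k < threads * perThread; k++)
            if (Integer.valueOf(k).equals(m.get(k))) found++;
        return found;
    }
}
```

Calling `MiniBinMap.concurrentFill(4, 1000)` writes 4000 distinct keys from four threads and reads all 4000 back. Because only a bin's head is locked, threads writing to different bins never block each other.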

get operation

ConcurrentHashMap's get operation is fairly simple and takes no locks. It uses the hash to find the node with the same key, distinguishing between linked-list bins and special nodes such as tree bins.

```java
public V get(Object key) {
    Node<K,V>[] tab; Node<K,V> e, p; int n, eh; K ek;
    // Compute the spread hash
    int h = spread(key.hashCode());
    if ((tab = table) != null && (n = tab.length) > 0 &&
        (e = tabAt(tab, (n - 1) & h)) != null) {
        // The head node itself matches: return its value directly
        if ((eh = e.hash) == h) {
            if ((ek = e.key) == key || (ek != null && key.equals(ek)))
                return e.val;
        }
        // eh < 0: special node (TreeBin, ForwardingNode or ReservationNode); use its find()
        else if (eh < 0)
            return (p = e.find(h, key)) != null ? p.val : null;
        // Linked list: traverse it
        while ((e = e.next) != null) {
            if (e.hash == h &&
                ((ek = e.key) == key || (ek != null && key.equals(ek))))
                return e.val;
        }
    }
    return null;
}
```

The logic of get is clear:

- Calculate hash value

- Determine whether the table is empty, and if it is empty, return null directly

- Otherwise, read the bin's head node with tabAt(tab, (n - 1) & h), search the linked list or tree for the matching key, and return its value.
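Both get and putVal locate a bin with (n - 1) & spread(key.hashCode()). The small hypothetical class below reproduces that computation (HASH_BITS is 0x7fffffff in JDK 8). It also shows why the high bits are folded in: two hash codes that differ only above bit 15 would otherwise always share a bin in a 16-slot table. Clearing the sign bit keeps normal hashes non-negative, so they never clash with MOVED, TREEBIN, or RESERVED.

```java
/** Hypothetical class reproducing the JDK 8 bin-index computation. */
final class SpreadDemo {
    static final int HASH_BITS = 0x7fffffff; // usable bits of a normal node hash

    // Fold the high 16 bits into the low 16 bits and clear the sign bit.
    static int spread(int h) {
        return (h ^ (h >>> 16)) & HASH_BITS;
    }

    // Bin index for a table of length n (n must be a power of two).
    static int binIndex(int hash, int n) {
        return (n - 1) & hash;
    }

    public static void main(String[] args) {
        int a = 0x10000, b = 0x20000;   // differ only in bits 16 and 17
        System.out.println(binIndex(a, 16) + " " + binIndex(b, 16));                 // 0 0
        System.out.println(binIndex(spread(a), 16) + " " + binIndex(spread(b), 16)); // 1 2
        System.out.println(spread(-1)); // 2147418112 (0x7fff0000): non-negative
    }
}
```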

size operation

The size() method of ConcurrentHashMap is not used very often, but it is worth understanding. Its result is not exact, because other threads may be inserting and removing entries while the count is being summed, so size() returns an estimate. ConcurrentHashMap accepts this inaccuracy rather than locking the whole table to count.

To count the size efficiently, ConcurrentHashMap uses two auxiliary fields, baseCount and counterCells, together with the helper inner class CounterCell:

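```java
@sun.misc.Contended static final class CounterCell {
    volatile long value;
    CounterCell(long x) { value = x; }
}

// Base element count, updated by CAS when there is no contention.
// It is not necessarily the actual number of elements in the map.
private transient volatile long baseCount;

// Counter cells used under contention; when non-null, its length is a power of 2
private transient volatile CounterCell[] counterCells;
```

CounterCell is a padded counter: the @sun.misc.Contended annotation keeps each cell on its own cache line to avoid false sharing. When the CAS on baseCount fails because of contention, threads add to different cells instead of all retrying on the same field.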

The size() method is defined as follows:

```java
public int size() {
    long n = sumCount();
    return ((n < 0L) ? 0 :
            (n > (long)Integer.MAX_VALUE) ? Integer.MAX_VALUE :
            (int)n);
}
```

Internally, size() calls sumCount():

```java
final long sumCount() {
    CounterCell[] as = counterCells; CounterCell a;
    long sum = baseCount;
    if (as != null) {
        for (int i = 0; i < as.length; ++i) {
            // Traverse the cells and add up all counters
            if ((a = as[i]) != null)
                sum += a.value;
        }
    }
    return sum;
}
```

sumCount() adds baseCount and the values of all non-null CounterCells. Since size() is affected by concurrent put operations, let's look at how ConcurrentHashMap keeps these counters up to date.

At the end of putVal(), addCount() is called. This method does two things: it updates the count (baseCount or a CounterCell), and it checks whether a resize is needed. Here we only look at the counting part:

```java
private final void addCount(long x, int check) {
    CounterCell[] as; long b, s;
    // Fast path: no cells yet and the CAS baseCount = b + x succeeds
    if ((as = counterCells) != null ||
        !U.compareAndSwapLong(this, BASECOUNT, b = baseCount, s = b + x)) {
        CounterCell a; long v; int m;
        boolean uncontended = true;
        if (as == null || (m = as.length - 1) < 0 ||
            (a = as[ThreadLocalRandom.getProbe() & m]) == null ||
            !(uncontended =
              U.compareAndSwapLong(a, CELLVALUE, v = a.value, v + x))) {
            // No usable cell, or the CAS on the cell also failed
            fullAddCount(x, uncontended);
            return;
        }
        if (check <= 1)
            return;
        s = sumCount();
    }
    // ... resize check omitted here, see the capacity expansion section
}
```

For put, x = 1. If counterCells is null, addCount first tries U.compareAndSwapLong(this, BASECOUNT, b = baseCount, s = b + x). Under heavy contention this CAS can fail. In that case, or when counterCells already exists, the thread picks a cell using its ThreadLocalRandom probe and tries to CAS that cell instead (uncontended = U.compareAndSwapLong(a, CELLVALUE, v = a.value, v + x)). If the cell array or the chosen slot does not exist yet, or that CAS also fails, fullAddCount() is called; it creates or expands the cells and retries. If the cell update succeeds and a resize check is needed (check > 1), sumCount() is called to compute s. This design avoids endless CAS retries on a single baseCount field under high concurrency. It is the same striping idea as the Striped64 class introduced in JDK 8, on which LongAdder and DoubleAdder are built.
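The idea behind baseCount and CounterCell can be sketched in a few lines. `StripedCounter` below is hypothetical and deliberately simplified. The cell array has a fixed size, and a thread's cell is chosen with `System.identityHashCode(Thread.currentThread())` instead of the ThreadLocalRandom probe, which is not accessible outside java.util.concurrent. The real fullAddCount also creates cells lazily, grows the array, and rehashes the probe on contention. For application code, java.util.concurrent.atomic.LongAdder already provides this behavior.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.atomic.AtomicLongArray;

/** Hypothetical simplified counter in the spirit of baseCount + CounterCell[]. */
final class StripedCounter {
    private final AtomicLong base = new AtomicLong();   // plays the role of baseCount
    private final AtomicLongArray cells;                // plays the role of counterCells

    StripedCounter(int cellCount) {                     // assumed to be a power of two
        cells = new AtomicLongArray(cellCount);
    }

    void add(long x) {
        long b = base.get();
        if (base.compareAndSet(b, b + x))               // fast path: one CAS on the base
            return;
        // Contended: spread threads over cells instead of retrying on the base
        int idx = System.identityHashCode(Thread.currentThread()) & (cells.length() - 1);
        cells.addAndGet(idx, x);
    }

    long sum() {                                        // like sumCount(): not an atomic snapshot
        long s = base.get();
        for (int i = 0; i < cells.length(); i++)
            s += cells.get(i);
        return s;
    }

    /** Increments one shared counter from several threads and returns the final sum. */
    static long countFromThreads(int threads, int perThread) {
        StripedCounter c = new StripedCounter(8);
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread w = new Thread(() -> {
                for (int k = 0; k < perThread; k++) c.add(1);
            });
            workers.add(w);
            w.start();
        }
        for (Thread w : workers) {
            try { w.join(); } catch (InterruptedException e) { throw new IllegalStateException(e); }
        }
        return c.sum();
    }
}
```

Every add lands either in the base or in exactly one cell, so once all writers have finished, `countFromThreads(4, 10000)` returns 40000. While writers are still running, sum() can only give an estimate, which is exactly the situation described for size().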

In 1.8, the Javadoc recommends mappingCount() instead of size(), because a ConcurrentHashMap may contain more mappings than can be represented as an int. Its implementation is almost the same as size(), but it returns a long:

```java
public long mappingCount() {
    long n = sumCount();
    return (n < 0L) ? 0L : n; // ignore transient negative values
}
```

Capacity expansion operation

When the number of elements in ConcurrentHashMap reaches the resize threshold (sizeCtl), the table must be expanded. At the end of put, addCount(long x, int check) is called. As mentioned above, it does two things: (1) it updates the count, and (2) it checks whether a resize is needed. The resize-check part is:

```java
private final void addCount(long x, int check) {
    CounterCell[] as; long b, s;
    // ... update baseCount / counterCells (shown above) ...
    // check >= 0: check whether a resize is needed
    if (check >= 0) {
        Node<K,V>[] tab, nt; int n, sc;
        // the element count s has reached the threshold and the table can still grow
        while (s >= (long)(sc = sizeCtl) && (tab = table) != null &&
               (n = tab.length) < MAXIMUM_CAPACITY) {
            int rs = resizeStamp(n);
            // sc < 0: a resize is already in progress
            if (sc < 0) {
                // give up if this resize is for another table length, is finishing,
                // already has the maximum number of helpers, or has no bins left to claim
                if ((sc >>> RESIZE_STAMP_SHIFT) != rs || sc == rs + 1 ||
                    sc == rs + MAX_RESIZERS || (nt = nextTable) == null ||
                    transferIndex <= 0)
                    break;
                // join the resize as one more resizing thread
                if (U.compareAndSwapInt(this, SIZECTL, sc, sc + 1))
                    transfer(tab, nt);
            }
            // No resize in progress: this thread starts it, so nextTable is still null
            else if (U.compareAndSwapInt(this, SIZECTL, sc,
                                         (rs << RESIZE_STAMP_SHIFT) + 2))
                transfer(tab, null);
            s = sumCount();
        }
    }
}
```

transfer() is the core method of resizing. ConcurrentHashMap lets multiple threads cooperate on a resize without a global lock (only individual bins are locked), which makes the implementation fairly complicated. The resize has two steps:

- Create nextTable, twice the size of the original table. This is done by a single thread, the one that starts the resize.

- Copy the contents of the original table into nextTable. Multiple threads may do this concurrently, which improves performance and shortens the resize.
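The second step is parallelized by letting each thread claim a range of bins from transferIndex. transferIndex starts at n and moves down by stride with every successful CAS. The following hypothetical simulation, `StrideClaimDemo`, uses an AtomicInteger in place of the transferIndex field and only counts visits instead of moving nodes. The stride rule is the one at the top of transfer().

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicIntegerArray;

/** Hypothetical simulation of resizing threads claiming bin ranges from transferIndex. */
final class StrideClaimDemo {
    static final int MIN_TRANSFER_STRIDE = 16;

    // Same rule as transfer(): (n / 8) / NCPU bins per range, but never fewer than 16.
    static int stride(int n, int ncpu) {
        int s = (ncpu > 1) ? (n >>> 3) / ncpu : n;
        return (s < MIN_TRANSFER_STRIDE) ? MIN_TRANSFER_STRIDE : s;
    }

    /** Each thread repeatedly claims [nextBound, nextIndex) by CAS; returns per-bin visit counts. */
    static AtomicIntegerArray simulate(int n, int ncpu, int threads) {
        final int strideLen = stride(n, ncpu);
        AtomicInteger transferIndex = new AtomicInteger(n);   // starts at n, counts down
        AtomicIntegerArray visits = new AtomicIntegerArray(n);
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread w = new Thread(() -> {
                while (true) {
                    int nextIndex = transferIndex.get();
                    if (nextIndex <= 0) return;                // nothing left to claim
                    int nextBound = (nextIndex > strideLen) ? nextIndex - strideLen : 0;
                    if (transferIndex.compareAndSet(nextIndex, nextBound)) {
                        for (int i = nextIndex - 1; i >= nextBound; i--)  // high to low
                            visits.incrementAndGet(i);
                    }
                }
            });
            workers.add(w);
            w.start();
        }
        for (Thread w : workers) {
            try { w.join(); } catch (InterruptedException e) { throw new IllegalStateException(e); }
        }
        return visits;
    }

    /** True if every bin was handled by exactly one thread. */
    static boolean everyBinOnce(int n, int ncpu, int threads) {
        AtomicIntegerArray v = simulate(n, ncpu, threads);
        for (int i = 0; i < n; i++)
            if (v.get(i) != 1) return false;
        return true;
    }
}
```

Because a range is only processed after its CAS succeeds, no two threads ever work on the same bins, and a thread that finds transferIndex at 0 knows there is nothing left to claim.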

Let's look at the source first and then analyze it step by step:

```java
private final void transfer(Node<K,V>[] tab, Node<K,V>[] nextTab) {
    int n = tab.length, stride;
    // Bins per claimed range: (n / 8) / NCPU on multi-core machines, but at least 16
    if ((stride = (NCPU > 1) ? (n >>> 3) / NCPU : n) < MIN_TRANSFER_STRIDE)
        stride = MIN_TRANSFER_STRIDE; // subdivide range
    if (nextTab == null) {            // initiating
        try {
            // Build nextTable with twice the original capacity
            @SuppressWarnings("unchecked")
            Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n << 1];
            nextTab = nt;
        } catch (Throwable ex) {      // try to cope with OOME
            sizeCtl = Integer.MAX_VALUE;
            return;
        }
        nextTable = nextTab;
        transferIndex = n;
    }
    int nextn = nextTab.length;
    // Marker node for processed bins (hash MOVED = -1, fwd.nextTable = nextTab)
    ForwardingNode<K,V> fwd = new ForwardingNode<K,V>(nextTab);
    // advance == true: the current bin is done, move on to the next index
    boolean advance = true;
    boolean finishing = false; // to ensure sweep before committing nextTab
    for (int i = 0, bound = 0;;) {
        Node<K,V> f; int fh;
        // Step i downwards through the claimed range; claim a new range when it runs out
        while (advance) {
            int nextIndex, nextBound;
            if (--i >= bound || finishing)
                advance = false;
            else if ((nextIndex = transferIndex) <= 0) {
                // no ranges left to claim
                i = -1;
                advance = false;
            }
            // Claim the range [nextBound, nextIndex) by CAS on transferIndex
            else if (U.compareAndSwapInt
                     (this, TRANSFERINDEX, nextIndex,
                      nextBound = (nextIndex > stride ?
                                   nextIndex - stride : 0))) {
                bound = nextBound;
                i = nextIndex - 1;
                advance = false;
            }
        }
        if (i < 0 || i >= n || i + n >= nextn) {
            int sc;
            // All bins have been copied
            if (finishing) {
                nextTable = null;
                table = nextTab;                 // table now points to the new array
                sizeCtl = (n << 1) - (n >>> 1);  // 2n - n/2 = 1.5n, i.e. 0.75 * new capacity
                return;                          // leave the loop
            }
            // This thread has no more work: CAS sizeCtl - 1 to leave the resize
            if (U.compareAndSwapInt(this, SIZECTL, sc = sizeCtl, sc - 1)) {
                // not the last resizing thread: simply return
                if ((sc - 2) != resizeStamp(n) << RESIZE_STAMP_SHIFT)
                    return;
                // last thread: sweep the whole table once more before committing
                finishing = advance = true;
                i = n; // recheck before commit
            }
        }
        // Empty bin: CAS a ForwardingNode into it
        else if ((f = tabAt(tab, i)) == null)
            advance = casTabAt(tab, i, null, fwd);
        // f.hash == MOVED: the bin already holds a ForwardingNode, so it has been processed.
        // This is the core of concurrent resize control.
        else if ((fh = f.hash) == MOVED)
            advance = true; // already processed
        else {
            // Lock the bin head
            synchronized (f) {
                // Copy the bin, after re-checking that the head is unchanged
                if (tabAt(tab, i) == f) {
                    Node<K,V> ln, hn;
                    // fh >= 0: linked-list bin
                    if (fh >= 0) {
                        // Split into a low list (hash & n == 0, stays at i) and a high list
                        // (moves to i + n). lastRun starts the trailing run of nodes that all
                        // go to the same side; that run is reused without copying.
                        int runBit = fh & n;
                        Node<K,V> lastRun = f;
                        for (Node<K,V> p = f.next; p != null; p = p.next) {
                            int b = p.hash & n;
                            if (b != runBit) {
                                runBit = b;
                                lastRun = p;
                            }
                        }
                        if (runBit == 0) {
                            ln = lastRun;
                            hn = null;
                        }
                        else {
                            hn = lastRun;
                            ln = null;
                        }
                        // The remaining nodes are copied and prepended, so their order is reversed
                        for (Node<K,V> p = f; p != lastRun; p = p.next) {
                            int ph = p.hash; K pk = p.key; V pv = p.val;
                            if ((ph & n) == 0)
                                ln = new Node<K,V>(ph, pk, pv, ln);
                            else
                                hn = new Node<K,V>(ph, pk, pv, hn);
                        }
                        // Put the low list at index i of nextTable
                        setTabAt(nextTab, i, ln);
                        // Put the high list at index i + n of nextTable
                        setTabAt(nextTab, i + n, hn);
                        // Put the ForwardingNode at index i of the old table to mark it processed
                        setTabAt(tab, i, fwd);
                        // advance = true lets the loop perform --i and move on
                        advance = true;
                    }
                    // TreeBin: split into lo and hi TreeNode lists in the same way
                    else if (f instanceof TreeBin) {
                        TreeBin<K,V> t = (TreeBin<K,V>)f;
                        TreeNode<K,V> lo = null, loTail = null;
                        TreeNode<K,V> hi = null, hiTail = null;
                        int lc = 0, hc = 0;
                        for (Node<K,V> e = t.first; e != null; e = e.next) {
                            int h = e.hash;
                            TreeNode<K,V> p = new TreeNode<K,V>
                                (h, e.key, e.val, null, null);
                            if ((h & n) == 0) {
                                if ((p.prev = loTail) == null)
                                    lo = p;
                                else
                                    loTail.next = p;
                                loTail = p;
                                ++lc;
                            }
                            else {
                                if ((p.prev = hiTail) == null)
                                    hi = p;
                                else
                                    hiTail.next = p;
                                hiTail = p;
                                ++hc;
                            }
                        }
                        // A half with <= UNTREEIFY_THRESHOLD (6) nodes becomes a plain list;
                        // otherwise build a new TreeBin, or reuse t if the other half is empty
                        ln = (lc <= UNTREEIFY_THRESHOLD) ? untreeify(lo) :
                            (hc != 0) ? new TreeBin<K,V>(lo) : t;
                        hn = (hc <= UNTREEIFY_THRESHOLD) ? untreeify(hi) :
                            (lc != 0) ? new TreeBin<K,V>(hi) : t;
                        setTabAt(nextTab, i, ln);
                        setTabAt(nextTab, i + n, hn);
                        setTabAt(tab, i, fwd);
                        advance = true;
                    }
                }
            }
        }
    }
}
```

The code above is long and fairly complex. Setting multithreading aside for a moment, from a single thread's point of view it works as follows:

- Compute the stride, the number of bins handled per claimed range, and make sure it is at least 16.

- If nextTable is null, create it with twice the capacity of table.

- Loop over the bins until finished, copying nodes from table to nextTable (this part supports concurrency):

  - If bin f is null, CAS a ForwardingNode into it (casTabAt, implemented with Unsafe.compareAndSwapObject). This marker is what lets other threads see the bin as handled during a concurrent resize.

  - If f is the head of a linked list (fh >= 0), split the list into a low list and a high list according to hash & n, place them at positions i and i + n of nextTable, and put a ForwardingNode at position i of the old table to mark it as processed.

  - If f is a TreeBin, split it the same way into lo and hi lists, decide for each half whether to untreeify it back into a plain list, place the results at positions i and i + n of nextTable, and put a ForwardingNode in the old bin.

- When all bins have been copied, point table to nextTable and set sizeCtl to 0.75 times the new capacity, completing the resize.
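The split works because doubling the table adds exactly one bit, n, to the index mask. A node in bin i goes to index i if hash & n == 0, and to index i + n otherwise. The hypothetical class below checks this on a few hash values. Unlike transfer(), it preserves the original order. The JDK code reuses the lastRun tail and prepends the remaining nodes, so part of a list bin ends up reversed.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical illustration of how one bin is split when the table doubles from n to 2n. */
final class LoHiSplitDemo {
    /** Returns [lo, hi]: lo stays at index i of nextTable, hi moves to index i + n. */
    static List<List<Integer>> split(List<Integer> binHashes, int n) {
        List<Integer> lo = new ArrayList<>();
        List<Integer> hi = new ArrayList<>();
        for (int h : binHashes) {
            if ((h & n) == 0) lo.add(h);   // the newly significant bit is 0
            else hi.add(h);                // the newly significant bit is 1
        }
        return Arrays.asList(lo, hi);
    }

    /** Checks the split against a direct index computation in the doubled table. */
    static boolean matchesNewIndex(List<Integer> binHashes, int i, int n) {
        List<List<Integer>> parts = split(binHashes, n);
        int mask = 2 * n - 1;
        for (int h : parts.get(0)) if ((h & mask) != i) return false;
        for (int h : parts.get(1)) if ((h & mask) != i + n) return false;
        return true;
    }

    public static void main(String[] args) {
        List<Integer> bin5 = Arrays.asList(5, 21, 37, 53);  // all in bin 5 when n = 16
        System.out.println(split(bin5, 16));                 // [[5, 37], [21, 53]]
        System.out.println(matchesNewIndex(bin5, 5, 16));    // true
    }
}
```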

In a multithreaded environment, ConcurrentHashMap relies on two things for correctness: ForwardingNode and synchronized. When a thread reaches a bin that already holds a ForwardingNode, it skips it and moves on. Otherwise it locks the bin's head node so that no other thread can modify it. After copying, it installs a ForwardingNode, so other threads can see that the bin has been processed and skip it. This is both efficient and safe.

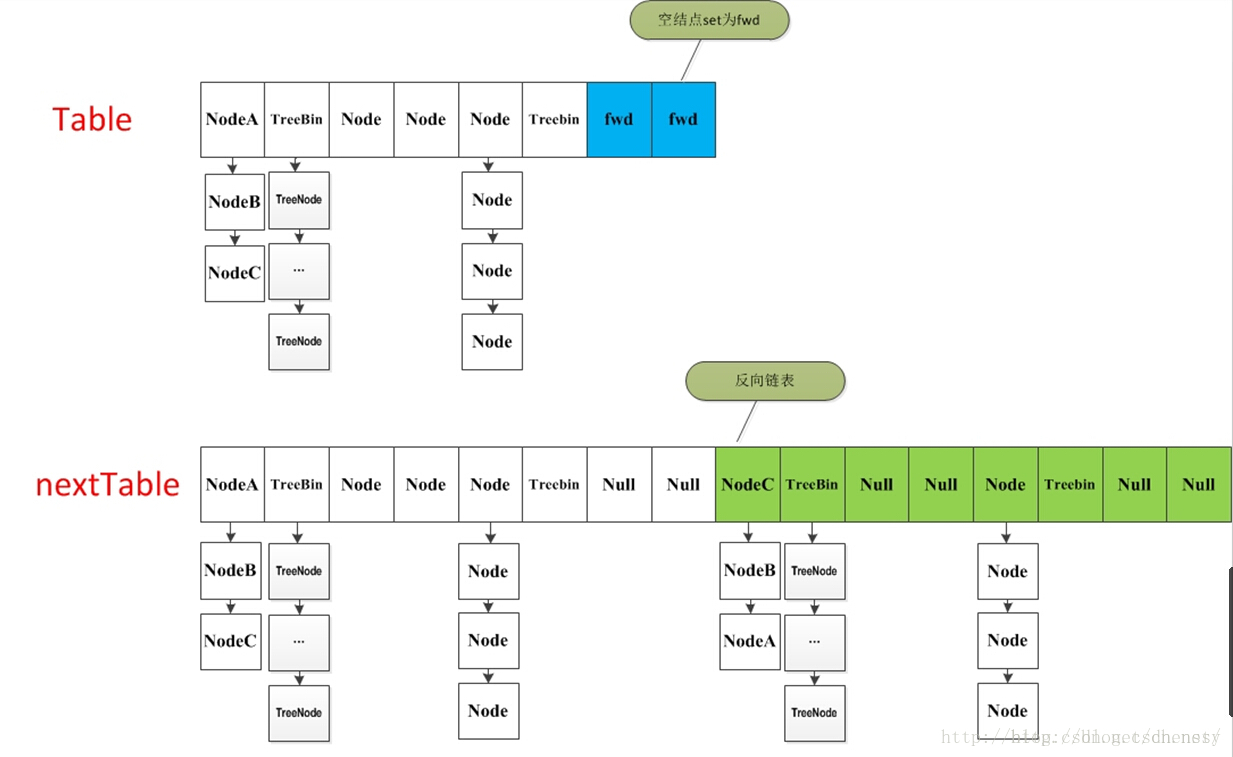

The following is a process of expansion (from: http://blog.csdn.net/u010723709/article/details/48007881):

If put finds that f.hash == -1 (MOVED), a resize is in progress, and the current thread helps with it:

```java
else if ((fh = f.hash) == MOVED)
    tab = helpTransfer(tab, f);
```

helpTransfer() assists with the resize. When it is called, nextTable has already been created, so its job is mainly copying bins:

```java
final Node<K,V>[] helpTransfer(Node<K,V>[] tab, Node<K,V> f) {
    Node<K,V>[] nextTab; int sc;
    // Only help if f is a ForwardingNode whose nextTable exists
    if (tab != null && (f instanceof ForwardingNode) &&
        (nextTab = ((ForwardingNode<K,V>)f).nextTable) != null) {
        int rs = resizeStamp(tab.length);
        // Keep trying while this same resize is still in progress
        while (nextTab == nextTable && table == tab &&
               (sc = sizeCtl) < 0) {
            if ((sc >>> RESIZE_STAMP_SHIFT) != rs || sc == rs + 1 ||
                sc == rs + MAX_RESIZERS || transferIndex <= 0)
                break;
            // Register as one more resizing thread, then copy bins
            if (U.compareAndSwapInt(this, SIZECTL, sc, sc + 1)) {
                transfer(tab, nextTab);
                break;
            }
        }
        return nextTab;
    }
    return table;
}
```

Conversion to Red-Black Trees

In put, if a linked-list bin grows beyond TREEIFY_THRESHOLD (default 8), the list is converted to a red-black tree to speed up lookups:

```java
if (binCount >= TREEIFY_THRESHOLD)
    treeifyBin(tab, i);
```

The treeifyBin method converts the linked list into a red-black tree:

```java
private final void treeifyBin(Node<K,V>[] tab, int index) {
    Node<K,V> b; int n, sc;
    if (tab != null) {
        // Table shorter than 64: resize (double) instead of treeifying
        if ((n = tab.length) < MIN_TREEIFY_CAPACITY)
            tryPresize(n << 1);
        else if ((b = tabAt(tab, index)) != null && b.hash >= 0) {
            synchronized (b) {
                if (tabAt(tab, index) == b) {
                    TreeNode<K,V> hd = null, tl = null;
                    // Wrap every Node as a TreeNode, linked through next/prev as a list;
                    // the tree links (parent, left, right) are built later by TreeBin
                    for (Node<K,V> e = b; e != null; e = e.next) {
                        TreeNode<K,V> p =
                            new TreeNode<K,V>(e.hash, e.key, e.val,
                                              null, null);
                        if ((p.prev = tl) == null)
                            hd = p;
                        else
                            tl.next = p;
                        tl = p;
                    }
                    // Replace the original list at this index with a TreeBin
                    setTabAt(tab, index, new TreeBin<K,V>(hd));
                }
            }
        }
    }
}
```

As the source shows, the tree is built only if the table has at least MIN_TREEIFY_CAPACITY (64) bins; a smaller table is resized instead. The conversion itself runs while holding the lock on the bin's head node (synchronized). Inside the lock, the process is:

- Wrap each node of the linked list at the given index as a TreeNode, producing a TreeNode list headed by hd.

- Build a TreeBin, and with it the red-black tree, from hd, then replace the original list at that index with the TreeBin.
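The thresholds that decide between list bins and tree bins can be summarized as two small functions. `TreeifyPolicy` is a hypothetical helper. `afterPut` restates what putVal and treeifyBin do with binCount: treeify only when the table has at least MIN_TREEIFY_CAPACITY bins, and otherwise call tryPresize. `untreeifyAfterSplit` restates the lc/hc check in transfer().

```java
/** Hypothetical summary of the JDK 8 list/tree thresholds. */
final class TreeifyPolicy {
    static final int TREEIFY_THRESHOLD = 8;
    static final int UNTREEIFY_THRESHOLD = 6;
    static final int MIN_TREEIFY_CAPACITY = 64;

    enum Action { NONE, RESIZE, TREEIFY }

    // binCount: nodes counted by putVal while walking a list bin
    // (for an append, the list length before the new node was added).
    static Action afterPut(int binCount, int tableLength) {
        if (binCount < TREEIFY_THRESHOLD)
            return Action.NONE;
        // treeifyBin: small tables are resized (tryPresize) instead of treeified
        return (tableLength < MIN_TREEIFY_CAPACITY) ? Action.RESIZE : Action.TREEIFY;
    }

    // transfer(): a split half (lc or hc nodes) that is small enough becomes a plain list again
    static boolean untreeifyAfterSplit(int nodesInHalf) {
        return nodesInHalf <= UNTREEIFY_THRESHOLD;
    }

    public static void main(String[] args) {
        System.out.println(afterPut(7, 64));         // NONE
        System.out.println(afterPut(8, 16));         // RESIZE
        System.out.println(afterPut(8, 64));         // TREEIFY
        System.out.println(untreeifyAfterSplit(6));  // true
    }
}
```

The gap between 8 and 6 keeps a bin whose size hovers around the threshold from flipping between list and tree on every resize.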

The whole process of building the red-black tree is somewhat complex. We will analyze how ConcurrentHashMap builds its red-black tree in a follow-up.

[Note] ConcurrentHashMap's resizing and its conversion of linked lists to red-black trees are fairly complex, and they will be analyzed in a subsequent blog post.