appendix

In 2014 I compiled a set of interview questions on Java concurrent programming. They are reorganized here as an appendix.

1.There are three threads: T1, T2 and T3. How can I ensure that T2 executes after T1 and T3 executes after T2?

Use the join method.

The join method makes asynchronous threads execute in sequence. When the start method of a thread instance is called, it returns immediately while the new thread runs in the background. If the code that follows start needs a value computed by that thread, it must call join first. Without join, there is no guarantee that the thread has finished by the time the statement after start runs. A call to join blocks the caller until the joined thread exits.
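A minimal sketch of the ordering described above, assuming three trivial tasks that only record their names. The class name JoinOrderDemo and the helper joinQuietly are illustrative and not part of the original material. Each thread is started before the thread that joins on it, because join returns immediately for a thread that has not been started yet.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

public class JoinOrderDemo {

    /** Runs T1, T2 and T3 one after another and returns the order in which they ran. */
    static List<String> runInOrder() {
        List<String> order = Collections.synchronizedList(new ArrayList<>());

        Thread t1 = new Thread(() -> order.add("T1"), "T1");
        Thread t2 = new Thread(() -> {
            joinQuietly(t1); // T2 does its work only after T1 has exited
            order.add("T2");
        }, "T2");
        Thread t3 = new Thread(() -> {
            joinQuietly(t2); // T3 does its work only after T2 has exited
            order.add("T3");
        }, "T3");

        // Start each thread before the one that joins on it.
        t1.start();
        t2.start();
        t3.start();
        joinQuietly(t3); // wait for the whole chain to finish
        return order;
    }

    /** join() without the checked exception, restoring the interrupt flag if interrupted. */
    private static void joinQuietly(Thread t) {
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(runInOrder());
    }
}
```

All three threads are alive at the same time here, but the join calls force their work to happen strictly in the order T1, T2, T3.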

2.What are the advantages of the Lock interface in Java over synchronized?

If you need to implement an efficient cache that allows multiple users to read but only one user to write, so that its integrity is maintained, how would you do it?

The biggest advantage of the lock API in java.util.concurrent.locks is that it can provide separate locks for reading and writing.

This is done through ReadWriteLock, which splits a lock into a read lock and a write lock.

The read lock allows multiple threads to read at the same time but excludes writers. The write lock admits only one writing thread, and no other thread (reader or writer) can enter while it is held. This matches the requirement in the question: many readers, one writer.

Note that every read lock and write lock has a lock operation and a matching unlock operation. Call lock before a try block and unlock in its finally block, so that an exception cannot leave the lock held forever and block every other thread.

Here is a sample program

ReadWriteLockTest.java

import java.util.Random;

public class ReadWriteLockTest {

public static void main(String[] args) {

// This is shared data among threads

final TheData myData = new TheData();

// Open 3 read threads

for (int i = 0; i < 3; i++) {

new Thread(

() -> {

while (true) {

myData.get();

}

})

.start();

}

// Open 3 Write Threads

for (int i = 0; i < 3; i++) {

new Thread(

() -> {

while (true) {

myData.put(new Random().nextInt(10000));

}

})

.start();

}

}

}

TheData.java

import java.util.Random;

import java.util.concurrent.locks.ReadWriteLock;

import java.util.concurrent.locks.ReentrantReadWriteLock;

import lombok.extern.slf4j.Slf4j;

import org.apache.commons.lang3.exception.ExceptionUtils;

@Slf4j

public class TheData {

private final ReadWriteLock rwl = new ReentrantReadWriteLock();

private Object data = null;

public void get() {

rwl.readLock().lock(); // Read lock open, read threads can enter

try { // Use try finally to prevent deadlocks caused by exceptions

log.info("{} is ready to read", Thread.currentThread().getName());

Thread.sleep(new Random().nextInt(100));

log.info("{} have read data {}", Thread.currentThread().getName(), data);

} catch (InterruptedException e) {

log.info(ExceptionUtils.getStackTrace(e));

} finally {

rwl.readLock().unlock(); // Read Lock Unlock

}

}

public void put(Object data) {

rwl.writeLock().lock(); // Write lock is on and only one write thread enters

try {

log.info("{} is ready to write", Thread.currentThread().getName());

Thread.sleep(new Random().nextInt(100));

this.data = data;

log.info("{} have write data {}", Thread.currentThread().getName(), data);

} catch (InterruptedException e) {

log.error(ExceptionUtils.getStackTrace(e));

} finally {

rwl.writeLock().unlock(); // Write Lock Unlock

}

}

}

3.How are wait and sleep methods different in java?

The biggest difference is that wait releases the object's lock while it waits, whereas sleep keeps every lock the thread holds. wait is typically used for communication between threads, and sleep is used to pause execution.

Other differences are

- sleep is a static method of the Thread class, and wait is a method of Object.

- wait, notify, and notifyAll can only be called inside a synchronized method or synchronized block, while sleep can be called anywhere

- Both sleep and wait throw the checked InterruptedException, so callers must catch or declare it; notify and notifyAll throw no checked exception
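The first difference can be observed directly. The following is a small sketch (the class and method names are made up for illustration): a waiter thread enters a synchronized block and calls wait, and the main thread then enters a synchronized block on the same object while the waiter is still inside its own block. That is only possible because wait released the monitor.

```java
import java.util.concurrent.CountDownLatch;

public class WaitReleasesLockDemo {

    /** Returns true if main acquired the monitor while the waiter was still inside its block. */
    static boolean mainEntersWhileWaiterWaits() {
        final Object lock = new Object();
        final boolean[] done = {false};          // guarded by lock
        CountDownLatch inside = new CountDownLatch(1);

        Thread waiter = new Thread(() -> {
            synchronized (lock) {
                inside.countDown();              // the waiter now owns the monitor
                while (!done[0]) {
                    try {
                        lock.wait();             // gives up the monitor while waiting
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                        return;
                    }
                }
            }
        });
        waiter.start();

        try {
            inside.await();                      // waiter is inside its synchronized block
            boolean entered;
            synchronized (lock) {                // succeeds only once wait() has released the monitor
                entered = waiter.isAlive();      // waiter has not left its block yet
                done[0] = true;
                lock.notifyAll();                // let the waiter finish
            }
            waiter.join();
            return entered;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("entered while waiter waits: " + mainEntersWhileWaiterWaits());
    }
}
```

If lock.wait() were replaced by Thread.sleep(...), the main thread would stay blocked at its synchronized block until the waiter left its own block, because sleep does not release the monitor.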

4.How do you implement a blocking queue in Java?

First, we want to clarify the definition of the blocking queue:

A blocking queue is a queue that supports two additional operations. When the queue is empty, a thread that tries to take an element waits until the queue becomes non-empty. When the queue is full, a thread that tries to store an element waits until space becomes available. Blocking queues are often used in producer-consumer scenarios, where the producer is the thread that adds elements to the queue and the consumer is the thread that takes elements from it. The blocking queue is the container in which producers store elements and from which consumers take them.

Note: For the producer-consumer problem, see [Wikipedia](https://zh-wikipedia.mirror.wit.im/w/index.php?search=%E7%94%9F%E4%BA%A7%E8%80%85%E6%B6%88%E8%B4%B9%E8%80%85%E9%97%AE%E9%A2%98&title=Special:%E6%90%9C%E7%B4%A2) and [Baidu Encyclopedia](https://baike.baidu.com/item/%E7%94%9F%E4%BA%A7%E8%80%85%E6%B6%88%E8%B4%B9%E8%80%85%E9%97%AE%E9%A2%98)

A simple implementation of blocking queues

BlockingQueue.java

import java.util.LinkedList;

import java.util.Queue;

public class BlockingQueue {

private final Queue<Object> queue = new LinkedList<>();

private int limit = 10;

public BlockingQueue(int limit) {

this.limit = limit;

}

public synchronized void enqueue(Object item) throws InterruptedException {

while (this.queue.size() == this.limit) {

wait();

}

if (this.queue.size() == 0) {

notifyAll();

}

this.queue.add(item);

}

public synchronized Object dequeue() throws InterruptedException {

while (this.queue.size() == 0) {

wait();

}

if (this.queue.size() == this.limit) {

notifyAll();

}

return this.queue.remove(); // remove and return the head of the queue

}

}

Within the enqueue and dequeue methods, the notifyAll method is called only if the size of the queue equals either the upper limit or the lower limit (0). If the size of the queue is neither equal to the upper limit nor the lower limit, no thread will block when calling the enqueue or dequeue method, and elements can be added to or removed from the queue normally.
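For comparison, the JDK ships this behaviour in java.util.concurrent.BlockingQueue. The sketch below uses ArrayBlockingQueue with one producer and one consumer; the class name, the method name and the choice of summing integers are assumptions made for illustration only.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

public class BlockingQueueStorageDemo {

    /** Produces 1..count on one thread, consumes them on another, and returns their sum. */
    static long produceAndConsume(int count, int capacity) {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(capacity);
        long[] sum = {0};

        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= count; i++) {
                    queue.put(i);               // blocks while the queue is full
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        Thread consumer = new Thread(() -> {
            try {
                for (int i = 0; i < count; i++) {
                    sum[0] += queue.take();     // blocks while the queue is empty
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        producer.start();
        consumer.start();
        try {
            producer.join();
            consumer.join();                    // join makes sum[0] visible to the caller
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return sum[0];
    }

    public static void main(String[] args) {
        System.out.println(produceAndConsume(100, 10));
    }
}
```

put and take hide the wait/notifyAll bookkeeping of the hand-written version, so application code does not need any explicit synchronization.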

5.Write Java code to solve producer-consumer problems

The producer-consumer problem is one of the classic problems that can't be bypassed when studying multithreaded programs. It describes a buffer as a warehouse, where a producer can put a product into a warehouse, and a consumer can take the product out of the warehouse.

Use the blocking queue implementation code from Question 4 to solve. This is not the only solution.

There are two types of solutions to producer/consumer problems:

- Use a mechanism to protect synchronization between producers and consumers

- Create a pipeline between producers and consumers

The first approach is more efficient, easier to implement, and keeps the code under better control, so it is the common choice. With the second approach the pipe buffer is hard to control and the transmitted data objects are hard to encapsulate. The first approach is therefore recommended.

The core issue of synchronization is how to preserve the integrity of a resource that multiple threads access concurrently.

The usual way is a signal or lock mechanism that ensures the resource is accessed by at most one thread at any time. Java builds multithreading into its object model and provides good support for synchronization.

Java offers four ways to implement this. The first three are synchronization mechanisms and the last one is the pipe approach, which is not recommended. The blocking queue approach was covered in question 4, so only the first two are given here.

- wait()/notify() method

- await()/signal() method

- BlockingQueue Blocking Queue Method

- PipedInputStream/PipedOutputStream

Producer class (Producer.java)

import com.wujunshen.thread.producerandconsumer.notify.Storage;

import lombok.Data;

import lombok.EqualsAndHashCode;

@EqualsAndHashCode(callSuper = true)

@Data

public class Producer extends Thread {

/** Number of products per production */

private int num;

/** The warehouse where it is placed */

private Storage storage;

/**

* Constructor, set warehouse

*

* @param storage Warehouse

*/

public Producer(Storage storage) {

this.storage = storage;

}

/** Thread run function */

@Override

public void run() {

produce(num);

}

/**

* Call the warehouse's produce method

*

* @param num Production Quantity

*/

public void produce(int num) {

storage.produce(num);

}

}

Consumer class (Consumer.java)

import com.wujunshen.thread.producerandconsumer.notify.Storage;

import lombok.Data;

import lombok.EqualsAndHashCode;

@EqualsAndHashCode(callSuper = true)

@Data

public class Consumer extends Thread {

/** Number of products consumed per consumption */

private int num;

/** The warehouse where it is placed */

private Storage storage;

/**

* Constructor, set warehouse

*

* @param storage Warehouse

*/

public Consumer(Storage storage) {

this.storage = storage;

}

/** Thread run function */

@Override

public void run() {

consume(num);

}

/**

* Call the warehouse's consume method

*

* @param num Consumption Quantity

*/

public void consume(int num) {

storage.consume(num);

}

}

Warehouse class: (wait/notify method)

import java.util.LinkedList;

import java.util.Queue;

import lombok.Data;

import lombok.extern.slf4j.Slf4j;

import org.apache.commons.lang3.exception.ExceptionUtils;

@Data

@Slf4j

public class Storage {

/** Warehouse Maximum Storage */

private static final int MAX_SIZE = 100;

/** Carrier for warehouse storage */

private final Queue<Object> list = new LinkedList<>();

/**

* Produce num products

*

* @param num Production Quantity

*/

public void produce(int num) {

// Synchronize Code Snippets

synchronized (list) {

// If warehouse capacity is insufficient

while (list.size() + num > MAX_SIZE) {

log.info("[Number of products to produce]: {}", num);

log.info("[Inventory]: {}. The production task cannot run for now!", list.size());

try {

list.wait(); // Production blocked due to unsatisfactory conditions

} catch (InterruptedException e) {

log.error(ExceptionUtils.getStackTrace(e));

}

}

// num products produced when production conditions are met

for (int i = 1; i <= num; ++i) {

list.add(new Object());

}

log.info("[Number of products produced]: {}", num);

log.info("[Current inventory]: {}", list.size());

list.notifyAll();

}

}

/**

* Consuming num products

*

* @param num Consumption Quantity

*/

public void consume(int num) {

// Synchronize Code Snippets

synchronized (list) {

// If warehouse storage is insufficient

while (list.size() < num) {

log.info("[Number of products to consume]: {}", num);

log.info("[Inventory]: {}. The consumption task cannot run for now!", list.size());

try {

// Consumer congestion due to unsatisfactory conditions

list.wait();

} catch (InterruptedException e) {

log.error(ExceptionUtils.getStackTrace(e));

}

}

// num products consumed when consumption conditions are met

for (int i = 1; i <= num; ++i) {

list.remove();

}

log.info("[Number of products consumed]: {}", num);

log.info("[Current inventory]: {}", list.size());

list.notifyAll();

}

}

}

Warehouse class: (await/signal method)

import java.util.LinkedList;

import java.util.Queue;

import java.util.concurrent.locks.Condition;

import java.util.concurrent.locks.Lock;

import java.util.concurrent.locks.ReentrantLock;

import lombok.Data;

import lombok.extern.slf4j.Slf4j;

import org.apache.commons.lang3.exception.ExceptionUtils;

@Data

@Slf4j

public class Storage {

/** Warehouse Maximum Storage */

private static final int MAX_SIZE = 100;

/** lock */

private final Lock lock = new ReentrantLock();

/** Conditional variable with full warehouse */

private final Condition full = lock.newCondition();

/** Conditional variable with empty warehouse */

private final Condition empty = lock.newCondition();

/** Carrier for warehouse storage */

private Queue<Object> list = new LinkedList<>();

/**

* Produce num products

*

* @param num Production Quantity

*/

public void produce(int num) {

// Acquire the lock

lock.lock();

try { // try/finally guarantees the lock is released even if an exception is thrown

// If warehouse capacity is insufficient

while (list.size() + num > MAX_SIZE) {

log.info("[Number of products to produce]: {}", num);

log.info("[Inventory]: {}. The production task cannot run for now!", list.size());

try {

// Block production because the condition is not met

full.await();

} catch (InterruptedException e) {

log.error(ExceptionUtils.getStackTrace(e));

}

}

// Produce num products once the condition is met

for (int i = 1; i <= num; ++i) {

list.add(new Object());

}

log.info("[Number of products produced]: {}", num);

log.info("[Current inventory]: {}", list.size());

// Wake up all waiting threads

full.signalAll();

empty.signalAll();

} finally {

// Release the lock

lock.unlock();

}

}

/**

* Consuming num products

*

* @param num Consumption Quantity

*/

public void consume(int num) {

// Acquire the lock

lock.lock();

try { // try/finally guarantees the lock is released even if an exception is thrown

// If warehouse storage is insufficient

while (list.size() < num) {

log.info("[Number of products to consume]: {}", num);

log.info("[Inventory]: {}. The consumption task cannot run for now!", list.size());

try {

// Block consumption because the condition is not met

empty.await();

} catch (InterruptedException e) {

log.error(ExceptionUtils.getStackTrace(e));

}

}

// Consume num products once the condition is met

for (int i = 1; i <= num; ++i) {

list.remove();

}

log.info("[Number of products consumed]: {}", num);

log.info("[Current inventory]: {}", list.size());

// Wake up all waiting threads

full.signalAll();

empty.signalAll();

} finally {

// Release the lock

lock.unlock();

}

}

}

6.How do you fix a Java program that deadlocks?

Thread deadlock in Java is often illustrated with the dining philosophers problem.

Note: For the dining philosophers problem, see the Wikipedia website

https://zh-wikipedia.mirror.wit.im/w/index.php?search=Philosopher's Dining Question

and the Baidu Encyclopedia website

https://baike.baidu.com/item/Philosopher's Dining Question

The root cause of the deadlock is inappropriate use of the "synchronized" keyword to manage thread access to specific objects.

The purpose of the "synchronized" keyword is to ensure that only one thread at a time executes a specific block of code, so the thread that is allowed to execute must first gain exclusive access to a variable or object. When a thread accesses such an object it locks the object, and every other thread that wants to access the same object is blocked until the first thread releases the lock it placed on the object. For this reason, when "synchronized" is used it is easy for two threads to end up waiting for each other to act.

Deadlock program example

import lombok.extern.slf4j.Slf4j;

import org.apache.commons.lang3.exception.ExceptionUtils;

@Slf4j

public class DeadLock implements Runnable {

static final Object O_1 = new Object();

static final Object O_2 = new Object();

int flag = 1; // instance field: with a static field both objects would share one flag and no deadlock would occur

public static void main(String[] args) {

DeadLock td1 = new DeadLock();

DeadLock td2 = new DeadLock();

td1.flag = 1;

td2.flag = 0;

Thread t1 = new Thread(td1);

Thread t2 = new Thread(td2);

t1.start();

t2.start();

}

@Override

public void run() {

log.info("flag={}", flag);

if (flag == 1) {

synchronized (O_1) {

try {

Thread.sleep(500);

} catch (Exception e) {

log.error(ExceptionUtils.getStackTrace(e));

}

synchronized (O_2) {

log.info("1");

}

}

}

if (flag == 0) {

synchronized (O_2) {

try {

Thread.sleep(500);

} catch (Exception e) {

log.error(ExceptionUtils.getStackTrace(e));

}

synchronized (O_1) {

log.info("0");

}

}

}

}

}

Explanation

When the object with flag=1 runs (thread T1), it locks O_1 first, sleeps for 500 milliseconds, and then tries to lock O_2. While T1 is sleeping, the object with flag=0 starts (thread T2); it locks O_2 first, sleeps for 500 milliseconds, and then tries to lock O_1. After its sleep T1 needs O_2 to continue, but O_2 is already locked by T2. After its sleep T2 needs O_1 to continue, but O_1 is already locked by T1. T1 and T2 wait for each other, each needing a resource the other holds, so a deadlock occurs.

A common rule of thumb for avoiding deadlocks is to ensure that when several threads access shared resources A, B, and C, each thread acquires them in the same order, such as A first, then B, then C.

In the example above, changing Thread t2 = new Thread(td2); to Thread t2 = new Thread(td1); makes both threads run the same object, so both take the same branch, lock O_1 and O_2 in the same order, and the deadlock disappears.
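The lock-ordering rule can be sketched as follows. This is an illustrative example rather than the original program: the class name, the lock names A and B, and the pause helper are assumptions. Both threads always take A before B, so neither can hold B while waiting for A.

```java
import java.util.concurrent.atomic.AtomicInteger;

public class LockOrderingDemo {

    private static final Object A = new Object();
    private static final Object B = new Object();

    /** Runs two threads that both lock A, then B, and returns how many of them finished. */
    static int runBoth() {
        AtomicInteger finished = new AtomicInteger();

        Runnable task = () -> {
            synchronized (A) {              // every thread takes A first
                pause(50);
                synchronized (B) {          // and B second, never the other way round
                    finished.incrementAndGet();
                }
            }
        };

        Thread t1 = new Thread(task, "t1");
        Thread t2 = new Thread(task, "t2");
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return finished.get();
    }

    private static void pause(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        System.out.println("threads finished: " + runBoth());
    }
}
```

With a fixed acquisition order the second thread simply waits at A until the first has released both locks, so both threads finish.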

Another method is to coarsen the lock: synchronize on the current object instead of locking the two objects O_1 and O_2 one after the other. Each thread then holds only a single lock while it runs, including while it sleeps, so it never waits for a second lock while holding the first, and the circular wait cannot form. Note that td1 and td2 are different objects, so in this example t1 and t2 lock different monitors and do not block each other at all.

The code is as follows

import lombok.extern.slf4j.Slf4j;

import org.apache.commons.lang3.exception.ExceptionUtils;

@Slf4j

public class DeadLock implements Runnable {

public int flag = 1;

public static void main(String[] args) {

DeadLock td1 = new DeadLock();

DeadLock td2 = new DeadLock();

td1.flag = 1;

td2.flag = 0;

Thread t1 = new Thread(td1);

Thread t2 = new Thread(td2);

t1.start();

t2.start();

}

@Override

public synchronized void run() {

log.info("flag={}", flag);

if (flag == 1) {

try {

Thread.sleep(500);

} catch (Exception e) {

log.error(ExceptionUtils.getStackTrace(e));

}

log.info("1");

}

if (flag == 0) {

try {

Thread.sleep(500);

} catch (Exception e) {

log.error(ExceptionUtils.getStackTrace(e));

}

log.info("0");

}

}

}

Explanation

The code is changed to public synchronized void run(){..} and the locks on O_1 and O_2 are removed. Adding the synchronized keyword to a member method locks the object that the method belongs to. In this case those are the two DeadLock objects td1 and td2.

The third way to resolve deadlocks is to use the ReentrantLock class, which implements the Lock interface, with the following code

import java.util.concurrent.locks.Lock;

import java.util.concurrent.locks.ReentrantLock;

import lombok.extern.slf4j.Slf4j;

import org.apache.commons.lang3.exception.ExceptionUtils;

@Slf4j

public class DeadLock implements Runnable {

private final Lock lock = new ReentrantLock();

private int flag = 1;

public static void main(String[] args) {

DeadLock td1 = new DeadLock();

DeadLock td2 = new DeadLock();

td1.flag = 1;

td2.flag = 0;

Thread t1 = new Thread(td1);

Thread t2 = new Thread(td2);

t1.start();

t2.start();

}

public boolean checkLock() {

return lock.tryLock();

}

@Override

public void run() {

if (checkLock()) {

try {

log.info("flag={}", flag);

if (flag == 1) {

try {

Thread.sleep(500);

} catch (Exception e) {

log.error(ExceptionUtils.getStackTrace(e));

}

log.info("1");

}

if (flag == 0) {

try {

Thread.sleep(500);

} catch (Exception e) {

log.error(ExceptionUtils.getStackTrace(e));

}

log.info("0");

}

} finally {

lock.unlock();

}

}

}

}

Explanation

The call lock.tryLock() tests whether the lock is already held by another thread. If it is, the call returns false immediately instead of blocking, so the thread can skip the operation rather than wait for the lock.
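In the program above each DeadLock object has its own lock, so tryLock always succeeds there. The following sketch (class and method names are illustrative) shares one ReentrantLock between two threads to show both outcomes: tryLock returns false while another thread holds the lock and true once it has been released.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

public class TryLockDemo {

    /** Returns {tryLock result while the lock is held elsewhere, result after it was released}. */
    static boolean[] tryLockTwice() {
        Lock lock = new ReentrantLock();
        CountDownLatch held = new CountDownLatch(1);     // signals: holder owns the lock
        CountDownLatch release = new CountDownLatch(1);  // signals: holder may unlock

        Thread holder = new Thread(() -> {
            lock.lock();
            try {
                held.countDown();
                release.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            } finally {
                lock.unlock();
            }
        });
        holder.start();

        boolean[] results = new boolean[2];
        try {
            held.await();
            results[0] = lock.tryLock();   // holder owns the lock: returns false without blocking
            release.countDown();
            holder.join();
            results[1] = lock.tryLock();   // lock is free again: returns true
            if (results[1]) {
                lock.unlock();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return results;
    }

    public static void main(String[] args) {
        boolean[] r = tryLockTwice();
        System.out.println("while held: " + r[0] + ", after release: " + r[1]);
    }
}
```

Because a failed tryLock never blocks, a thread that cannot get a second lock can release the first one and retry later, which is how tryLock helps break the circular wait.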

7. What is an atomic operation, and what atomic operations does Java provide?

An atomic operation is one that cannot be interrupted by the thread scheduler: once it starts, it runs to completion without switching to another thread in the middle.

Introduction to atomic operations in Java

JDK 1.5 added the package java.util.concurrent.atomic.

This package provides a set of atomic classes. Their basic property is that, in a multithreaded environment, when several threads call methods on the same instance at the same time, each method takes effect as if it had run exclusively.

That is, once a thread starts executing one of these methods, other threads cannot interfere with it until the method completes, much as if it were protected by a lock. This is only a logical picture. In practice the classes rely on hardware instructions such as compare-and-swap and do not block threads, whereas synchronized suspends the threads that are waiting.

The classes can be grouped into four groups

- AtomicBoolean,AtomicInteger,AtomicLong,AtomicReference

- AtomicIntegerArray, AtomicLongArray, AtomicReferenceArray

- AtomicLongFieldUpdater, AtomicIntegerFieldUpdater, AtomicReferenceFieldUpdater

- AtomicMarkableReference, AtomicStampedReference

The role of Atomic classes

- Make operations on a single piece of data atomic

- Build complex, non-blocking code

- Accessing two or more atomic variables (or performing two or more operations on a single atomic variable) generally still requires synchronization if these operations are to act as one atomic unit

The four basic types AtomicBoolean, AtomicInteger, AtomicLong and AtomicReference handle four kinds of data: boolean, int, long and object references

- Constructors (two constructors)

- Default constructor: the initial value is false, 0, 0 or null respectively

- Parameterized constructor: the initial value is the given argument

- set() and get() methods: set and read the value atomically. As with volatile, the value is guaranteed to be written to and read from main memory

- getAndSet() method

- Atomically sets the variable to the new value and returns the old value.

- Logically it is a get() followed by a set(). Calling get() and set() separately would not be atomic as a pair, even though each call is atomic on its own, and plain Java code could only combine them by using synchronized. getAndSet() performs both steps as a single atomic operation, relying on native, hardware-supported instructions.

- compareAndSet() and weakCompareAndSet() methods

- Both are conditional update methods. Each takes two parameters, the expected value and the new value. If the current value equals the expected value, the new value is stored and the method returns true to indicate success; otherwise nothing is stored and it returns false.

- AtomicInteger and AtomicLong also provide the special methods getAndIncrement(), incrementAndGet(), getAndDecrement(), decrementAndGet(), addAndGet() and getAndAdd() for atomic addition and subtraction. (Note that --i and ++i are not atomic operations. Each consists of three steps: read i, add or subtract 1, and write the result back to memory.)
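The methods listed above can be tried out with a short sketch. The class and method names are illustrative; the thread count and iteration count are arbitrary. incrementAndGet is used as the atomic replacement for ++i, and compareAndSet shows the success and failure cases.

```java
import java.util.concurrent.atomic.AtomicInteger;

public class AtomicCounterDemo {

    /** Increments a shared AtomicInteger from several threads and returns the final value. */
    static int countWithThreads(int threads, int perThread) {
        AtomicInteger counter = new AtomicInteger();   // default constructor: starts at 0
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    counter.incrementAndGet();         // atomic replacement for ++i
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return counter.get();
    }

    /** Shows one successful and one failed compareAndSet and returns the final value. */
    static int compareAndSetExample() {
        AtomicInteger value = new AtomicInteger(5);
        boolean first = value.compareAndSet(5, 6);     // current value is 5: succeeds
        boolean second = value.compareAndSet(5, 7);    // current value is now 6: fails
        System.out.println("first=" + first + ", second=" + second);
        return value.get();
    }

    public static void main(String[] args) {
        System.out.println(countWithThreads(4, 1000));
        System.out.println(compareAndSetExample());
    }
}
```

No increment is lost even though no lock is used, because each incrementAndGet call is a single atomic read-modify-write.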

Example - Create a thread-safe stack using AtomicReference

import java.util.concurrent.atomic.AtomicReference;

import lombok.AccessLevel;

import lombok.AllArgsConstructor;

public class LinkedStack<T> {

private final AtomicReference<Node<T>> stacks = new AtomicReference<>();

public T push(T e) {

Node<T> oldNode;

Node<T> newNode;

// A CAS retry loop is required: if another thread changed the top in the meantime, try again

while (true) {

oldNode = stacks.get();

newNode = new Node<>(e, oldNode);

if (stacks.compareAndSet(oldNode, newNode)) {

return e;

}

}

}

public T pop() {

Node<T> oldNode;

Node<T> newNode;

while (true) {

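oldNode = stacks.get();

if (oldNode == null) {

return null; // empty stack: nothing to pop, avoids a NullPointerException

}

newNode = oldNode.next;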

if (stacks.compareAndSet(oldNode, newNode)) {

return oldNode.object;

}

}

}

@AllArgsConstructor(access = AccessLevel.PRIVATE)

private static final class Node<T> {

private final T object;

private final Node<T> next;

}

}

8. What does the volatile keyword do in Java and how do you use it? How does it differ from the synchronized method in Java?

volatile is used to keep a variable consistent across multiple threads. To improve efficiency, a thread may copy a member variable (call it A) into a local copy (call it B), after which its accesses to A actually go to B. A and B are synchronized only at certain points, so A and B can disagree.

volatile exists to avoid this situation. It tells the JVM not to keep a copy of the variable and to access the variable (A) directly in main memory.

Declaring a variable volatile means that it may be modified by other threads at any time and therefore must not be cached in thread-local memory. The following example shows the role of volatile

public class StoppableTask extends Thread {

private volatile boolean pleaseStop;

@Override

public void run() {

while (!pleaseStop) {

// do some stuff...

}

}

public void tellMeToStop() {

pleaseStop = true;

}

}

If pleaseStop is not declared volatile, the thread may check its own copy while running and so may not learn in time that another thread has called tellMeToStop() and changed the value of pleaseStop.

volatile generally cannot replace synchronized because volatile does not guarantee the atomicity of an operation. Even something as small as i++ actually consists of several steps:

read i; inc; write i

If multiple threads execute i++, volatile only guarantees that they all operate on the same i in memory; updates can still be lost. The atomic wrapper classes added in Java 5 make operations such as increment safe without synchronized.

The difference between volatile and synchronized is easy to explain. volatile is a variable modifier, while synchronized applies to a block of code or a method. Consider the following three getters

int i1;

volatile int i2;

int i3;

int geti1() {

return i1;

}

int geti2() {

return i2;

}

synchronized int geti3() {

return i3;

}

geti1() returns the value of i1 as seen by the current thread. Multiple threads can each hold a copy of i1, and these copies can differ from one another. In other words, another thread may have changed i1 in its own copy, and that value can differ from the one the current thread sees. Java has the notion of a main memory area that stores the "accurate" value of a variable. Each thread can have its own copy of a variable, and the copy can differ from what is stored in main memory. So it is possible for i1 to be 1 in main memory, 2 in thread 1, and 3 in thread 2. This happens when thread 1 and thread 2 have both changed i1 and the changes have not yet been propagated to main memory or to the other thread.

geti2() returns the value of i2 from main memory. A volatile variable is not allowed to have thread-local copies that differ from main memory. In other words, a volatile variable is kept in sync across all threads: when any thread changes its value, all other threads immediately see the new value. Access to a volatile variable costs a little more than access to a regular variable, because working on a thread-local copy is more efficient.

If volatile already synchronizes data between threads, what is synchronized for? There are two differences. First, synchronized acquires and releases a monitor: if two threads use the same object lock, the monitor forces the code block to be executed by only one thread at a time, which is well known. Second, synchronized also synchronizes memory: it synchronizes the thread's entire memory with main memory. The geti3() method therefore executes as follows:

- The thread requests the lock on this object's monitor (if it is already locked, the thread waits until the lock is released)

- The thread's cached data is discarded and re-read from main memory

- The code block is executed

- Any changes to variables are now safely written back to main memory (although geti3() itself changes nothing)

- The thread releases the lock on this object's monitor

So volatile only synchronizes the value of a single variable between thread memory and main memory, while synchronized synchronizes the values of all variables by locking and unlocking a monitor. Consequently synchronized costs more than volatile.

9.What are race conditions, and how do you find and resolve them?

When two threads operate on the same object concurrently and the final state of the object depends on the order in which they happen to run, the situation is called a race condition. A race condition can occur anywhere the programmer should have guaranteed an atomic operation but forgot to use synchronized.

The usual solution is locking.

Java has two kinds of lock to choose from

- Object or class locks. Every object and every class has a lock, acquired with the synchronized keyword. synchronized uses the class lock on a static method and the object lock on an instance method. synchronized can also guard a critical section: name an object (the carrier of the lock) after synchronized and wrap the critical code in curly braces, as in synchronized(this){// critical code}

- Explicit locks (java.util.concurrent.locks.Lock): call the lock's lock method to protect the critical code and its unlock method to release it
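Both kinds of lock can be sketched on a shared counter. The class SafeCounterDemo and its members are hypothetical names chosen for this illustration: one counter is protected by a synchronized method, the other by an explicit ReentrantLock with unlock in a finally block.

```java
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

public class SafeCounterDemo {

    private final Lock lock = new ReentrantLock();
    private int syncCount;   // guarded by this object's monitor
    private int lockCount;   // guarded by the explicit lock

    /** Object lock: synchronized on an instance method locks this. */
    synchronized void incrementSynchronized() {
        syncCount++;
    }

    /** Explicit lock: lock before try, unlock in finally. */
    void incrementWithLock() {
        lock.lock();
        try {
            lockCount++;
        } finally {
            lock.unlock();
        }
    }

    /** Runs both increments from several threads and returns {syncCount, lockCount}. */
    static int[] run(int threads, int perThread) {
        SafeCounterDemo counter = new SafeCounterDemo();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    counter.incrementSynchronized();
                    counter.incrementWithLock();
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();   // join also makes the final counts visible here
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return new int[] {counter.syncCount, counter.lockCount};
    }

    public static void main(String[] args) {
        int[] result = run(4, 1000);
        System.out.println(result[0] + " " + result[1]);
    }
}
```

Without either lock the two count++ statements would be unprotected read-modify-write sequences, and the totals could come out lower than expected.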

10.How do I use thread dump? How do I analyze Thread dump?

A thread dump is a very useful tool for diagnosing problems in Java applications. Every Java virtual machine can generate a thread dump that shows the state of all threads at a given moment. The output format differs slightly between virtual machines, but a thread dump always lists each thread with its state, its identifier and its call stack. The call stack contains the fully qualified class name, the method being executed and, where available, the source line number.

Generating a thread dump on the Sun JVM

- Solaris: press <ctrl>-'\' (Control-Backslash) in the console, or run kill -QUIT <PID>

- HP-UX/UNIX/Linux: kill -3 <PID>

- Windows: press Ctrl-Break in the console window that runs the program

Some Java application servers, such as WebLogic, run in a console. To make the thread dump easy to capture, redirect WebLogic's standard output to a file when it starts, for example with the command "nohup ./startWebLogic.sh > log.out &". After "kill -3 <PID>" is executed, the thread dump is written to log.out.

Tomcat writes its thread dump to the command-line console or to the catalina.out file in the logs directory. To capture how thread states change over time, take at least three thread dumps in succession, 10-20 seconds apart.

Generating a thread dump on the IBM JVM

With IBM's JVM on AIX, a javacore file (about the cpu) and a heapdump file (about memory) are generated by default when an out-of-memory error occurs. If they are not, use the following steps

- Set the following environment variables before the server starts (they can be added to the startup script)

export IBM_HEAPDUMP=true

export IBM_HEAP_DUMP=true

export IBM_HEAPDUMP_OUTOFMEMORY=true

export IBM_HEAPDUMPDIR=<directory path>

- Check the settings with the set command, make sure DISABLE_JAVADUMP is not set, and then start the server

- Run kill -3 <PID> to generate the javacore and heapdump files

Once you have the Java thread dump, look for threads in the "waiting for monitor entry" state. If a large number of threads are waiting to lock the same address (in Java each object has exactly one lock), a deadlock has probably occurred. For example

"service-j2ee" prio=5 tid=0x024f1c28 nid=0x125 waiting for monitor entry [62a3e000..62a3f690]

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at com.sun.enterprise.resource.IASNonSharedResourcePool.internalGetResource(IASNonSharedResourcePool.java:625)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: - waiting to lock <0x965d8110> (a com.sun.enterprise.resource.IASNonSharedResourcePool)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at com.sun.enterprise.resource.IASNonSharedResourcePool.getResource(IASNonSharedResourcePool.java:520)

................

To confirm the problem, it is usually necessary to collect another thread dump about two minutes later. If the output is the same and a large number of threads are still waiting to lock the same address, the application is almost certainly deadlocked.

How to find the thread currently holding the lock is the key to solving the problem. Search thread dump, find "locked <0x965d8110>", and find the thread holding the lock

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: "Thread-20" daemon prio=5 tid=0x01394f18 nid=0x109 runnable [6716f000..6716fc28]

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at java.net.SocketInputStream.socketRead0(Native Method)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at java.net.SocketInputStream.read(SocketInputStream.java:129)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at oracle.net.ns.Packet.receive(Unknown Source)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at oracle.net.ns.DataPacket.receive(Unknown Source)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at oracle.net.ns.NetInputStream.getNextPacket(Unknown Source)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at oracle.net.ns.NetInputStream.read(Unknown Source)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at oracle.net.ns.NetInputStream.read(Unknown Source)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at oracle.net.ns.NetInputStream.read(Unknown Source)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at oracle.jdbc.ttc7.MAREngine.unmarshalUB1(MAREngine.java:929)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at oracle.jdbc.ttc7.MAREngine.unmarshalSB1(MAREngine.java:893)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at oracle.jdbc.ttc7.Ocommoncall.receive(Ocommoncall.java:106)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at oracle.jdbc.ttc7.TTC7Protocol.logoff(TTC7Protocol.java:396)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: - locked <0x954f47a0> (a oracle.jdbc.ttc7.TTC7Protocol)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at oracle.jdbc.driver.OracleConnection.close(OracleConnection.java:1518)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: - locked <0x954f4520> (a oracle.jdbc.driver.OracleConnection)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at com.sun.enterprise.resource.JdbcUrlAllocator.destroyResource(JdbcUrlAllocator.java:122)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at com.sun.enterprise.resource.IASNonSharedResourcePool.destroyResource(IASNonSharedResourcePool.java:872)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at com.sun.enterprise.resource.IASNonSharedResourcePool.resizePool(IASNonSharedResourcePool.java:1086)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: - locked <0x965d8110> (a com.sun.enterprise.resource.IASNonSharedResourcePool)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at com.sun.enterprise.resource.IASNonSharedResourcePool$Resizer.run(IASNonSharedResourcePool.java:1178)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at java.util.TimerThread.mainLoop(Timer.java:432)

[27/Jun/2006:10:03:08] WARNING (26140): CORE3283: stderr: at java.util.TimerThread.run(Timer.java:382)

In this example, the thread holding the lock is waiting for Oracle to return a result but never receives a response, so every thread waiting for that lock is stuck and the application appears deadlocked.

If the thread holding the lock is itself waiting to lock another object, follow the same path until the root of the deadlock is found. In addition, threads like the following are often seen in a thread dump. They are waiting for a condition and have voluntarily released the lock. For example

"Thread-1" daemon prio=5 tid=0x014e97a8 nid=0x80 in Object.wait() [68c6f000..68c6fc28]

at java.lang.Object.wait(Native Method)

- waiting on <0x95b07178> (a java.util.LinkedList)

at com.iplanet.ias.util.collection.BlockingQueue.remove(BlockingQueue.java:258)

- locked <0x95b07178> (a java.util.LinkedList)

at com.iplanet.ias.util.threadpool.FastThreadPool$ThreadPoolThread.run(FastThreadPool.java:241)

at java.lang.Thread.run(Thread.java:534)

Sometimes it is necessary to analyze such threads, especially the conditions under which they are waiting.

In fact, a Java thread dump is not only useful for analyzing deadlocks; it can also be used to analyze other strange runtime behavior of Java applications

Java SE 5 added the jstack tool, which can also produce a thread dump. In Java SE 6, the graphical jconsole tool makes it easy to find deadlocks involving object monitors and java.util.concurrent.locks
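The same deadlock check that jconsole performs can also be run from inside the application. The following is a minimal sketch, assuming a JVM that supports monitoring of ownable synchronizers (HotSpot does); the class name DeadlockCheck and its method are hypothetical names chosen for illustration.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper class, not part of the article's sample sources.
class DeadlockCheck {

    /** Returns one description per thread that the JVM currently reports as deadlocked. */
    static List<String> deadlockedThreads() {
        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        // Covers object monitors and java.util.concurrent locks;
        // returns null when no deadlock is detected.
        long[] ids = mx.findDeadlockedThreads();
        List<String> result = new ArrayList<>();
        if (ids == null) {
            return result;
        }
        for (ThreadInfo info : mx.getThreadInfo(ids)) {
            if (info != null) { // the thread may have ended in the meantime
                result.add(info.getThreadName() + " is waiting for " + info.getLockName());
            }
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println("Deadlocked threads: " + deadlockedThreads());
    }
}
```

Calling such a check periodically, or from an admin endpoint, gives an early warning without having to collect and read a full thread dump.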

Reference article: http://www.cnblogs.com/zhengyun_ustc/archive/2013/01/06/dumpanalysis.html

11.Why do we call the start() method, which in turn executes run(), instead of calling the run() method directly?

When the start() method is called, a new thread is created and the code in the run() method is executed in that new thread. If the run() method is called directly, no new thread is created; the code simply runs in the calling thread, like any other method call.
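The difference can be observed by recording which thread actually executes the task. This is a small sketch; the class name StartVsRun and the thread name "worker" are illustrative assumptions.

```java
// Hypothetical demo class: records the name of the thread that executes the task.
class StartVsRun {

    /** Calls run() directly and returns the name of the thread that executed the task. */
    static String executedByAfterRun() {
        String[] executedBy = new String[1];
        Thread t = new Thread(() -> executedBy[0] = Thread.currentThread().getName(), "worker");
        t.run(); // plain method call: no new thread is started
        return executedBy[0];
    }

    /** Calls start() and returns the name of the thread that executed the task. */
    static String executedByAfterStart() {
        String[] executedBy = new String[1];
        Thread t = new Thread(() -> executedBy[0] = Thread.currentThread().getName(), "worker");
        t.start(); // a new thread named "worker" executes run()
        try {
            t.join(); // wait for the worker; join() also makes its write visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return executedBy[0];
    }

    public static void main(String[] args) {
        System.out.println("run():   executed by " + executedByAfterRun());
        System.out.println("start(): executed by " + executedByAfterStart());
    }
}
```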

12.How do I wake up a blocked thread in Java?

If the thread is blocked on IO, set a threshold on the number of threads when creating them, beyond which no more threads are created. Alternatively, give each thread a flag variable that marks whether the thread has ended, or put the threads into a thread group and manage them together

If a thread is blocked in a call to wait(), sleep(), or join(), you can call interrupt() on it; the blocked method then throws InterruptedException and the thread wakes up
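As a minimal sketch of the interrupt case, the following hypothetical InterruptDemo class puts a thread to sleep for a minute and wakes it immediately with interrupt().

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical demo class for waking a thread that is blocked in sleep().
class InterruptDemo {

    /** Returns true if the sleeping thread was woken by an InterruptedException. */
    static boolean wakeSleepingThread() {
        AtomicBoolean woken = new AtomicBoolean(false);
        Thread sleeper = new Thread(() -> {
            try {
                Thread.sleep(60_000); // blocks for up to a minute
            } catch (InterruptedException e) {
                woken.set(true); // interrupt() ended the sleep early
            }
        });
        sleeper.start();
        sleeper.interrupt(); // sets the interrupt flag; sleep() throws InterruptedException
        try {
            sleeper.join(5_000); // the sleeper should finish almost immediately
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return woken.get();
    }

    public static void main(String[] args) {
        System.out.println("Woken by interrupt: " + wakeSleepingThread());
    }
}
```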

13.What is the difference between CyclicBarrier and CountDownLatch in Java?

CountDownLatch: One thread (or several threads) waits for another N threads to complete something before it proceeds.

CyclicBarrier: N threads wait for each other; until every one of them has reached the barrier, all of them must wait.

It should be clear that for CountDownLatch, the focus is on the "one thread" that is waiting, while each of the other N threads can either carry on or terminate after doing its part.

For CyclicBarrier, the focus is on the N threads: if any one of them has not arrived, all of them have to wait.

- CyclicBarrier can be used multiple times; CountDownLatch can only be used once (its count cannot be reset once it reaches 0)

- Barrier waits for a specified number of threads to arrive before continuing processing; Latch waits for a specified event to change to a specified state before continuing processing. For CountDownLatch, that event is the count reaching zero, but you can implement or use other latches instead.

- Barrier waits for a specified number of tasks to complete, Latch waits for other tasks to complete a change in the specified state before continuing
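The two classes can be compared side by side in a short sketch. The class and method names below are hypothetical; the barrier action simply counts how often the barrier trips, to show that a CyclicBarrier is reusable.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.CyclicBarrier;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class contrasting the two synchronizers.
class LatchVsBarrier {

    /** The calling thread waits until n workers have each counted down once. */
    static int latchDemo(int n) {
        CountDownLatch done = new CountDownLatch(n);
        AtomicInteger finished = new AtomicInteger();
        for (int i = 0; i < n; i++) {
            new Thread(() -> {
                finished.incrementAndGet();
                done.countDown(); // the worker itself does not wait
            }).start();
        }
        try {
            done.await(); // only the caller blocks, until the count reaches zero
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return finished.get();
    }

    /** n threads meet at the same barrier for several rounds; returns how often it tripped. */
    static int barrierDemo(int n, int rounds) {
        AtomicInteger trips = new AtomicInteger();
        CyclicBarrier barrier = new CyclicBarrier(n, trips::incrementAndGet);
        Thread[] threads = new Thread[n];
        for (int i = 0; i < n; i++) {
            threads[i] = new Thread(() -> {
                try {
                    for (int r = 0; r < rounds; r++) {
                        barrier.await(); // every thread waits for all the others
                    }
                } catch (InterruptedException | BrokenBarrierException e) {
                    Thread.currentThread().interrupt();
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return trips.get();
    }

    public static void main(String[] args) {
        System.out.println("Workers finished before the latch opened: " + latchDemo(3));
        System.out.println("Barrier trips: " + barrierDemo(3, 2));
    }
}
```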

14.What is an immutable object and how does it help write concurrent applications?

An immutable object is an object whose state cannot be changed after it is created.

Because its state cannot change, an immutable object can be shared between threads without additional synchronization, so it usually performs better than approaches such as lock-based synchronization.
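A minimal sketch of an immutable class follows. The name ImmutableRoute and its fields are assumptions made for illustration; the pattern itself (final class, final fields, defensive copy, no setters) is the usual recipe.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical immutable value class; safe to share between threads without locking.
final class ImmutableRoute {
    private final String name;
    private final List<String> stops;

    ImmutableRoute(String name, List<String> stops) {
        this.name = name;
        // Defensive copy: later changes to the caller's list do not leak in.
        this.stops = Collections.unmodifiableList(new ArrayList<>(stops));
    }

    String getName() {
        return name;
    }

    List<String> getStops() {
        return stops; // unmodifiable view, so callers cannot change the state
    }

    /** "Modification" returns a new object and leaves this one untouched. */
    ImmutableRoute withName(String newName) {
        return new ImmutableRoute(newName, stops);
    }
}
```

Because every field is final and nothing can mutate the list, any thread that obtains a reference to an ImmutableRoute always sees the same state.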

Derived Question: Why is String immutable?

- String Constant Pool Requirements

The string pool (also called the string intern pool or string constant pool) is a special storage area in Java heap memory. When a String literal is created, if the same string value already exists in the pool, no new object is created; a reference to the existing object is returned instead.

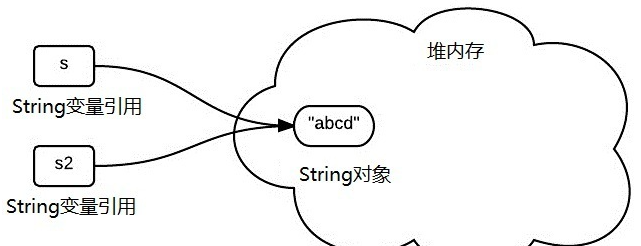

As shown in the code below, only one actual String object will be created in heap memory

String s1 = "abcd"; String s2 = "abcd";

The diagram is as follows

If String objects could be changed, various logic errors would result, such as a change made through one reference affecting another, supposedly independent, reference. Strictly speaking, the constant pool is an optimization.

Consider: if the code looks like this, do s1 and s2 point to the same actual String object?

String s1 = "ab" + "cd";

String s2 = "abc" + "d";

The answer may violate a novice's intuition, but both expressions are compile-time constants, so the compiler folds each of them into "abcd" and both variables point to the same object in the constant pool. You can confirm this by viewing the compiled class file with a tool such as jd-gui
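The behavior can also be checked at run time with a reference comparison. In this sketch (the class name StringPoolDemo is hypothetical), the third method shows the contrasting case where one operand is an ordinary variable, so the concatenation happens at run time and produces a new object.

```java
// Hypothetical demo class; == compares references here on purpose.
class StringPoolDemo {

    /** Both sides are compile-time constants, folded to the same pooled "abcd". */
    static boolean constantsShareObject() {
        String s1 = "ab" + "cd";
        String s2 = "abc" + "d";
        return s1 == s2;
    }

    /** A non-final variable is not a constant, so the result is a new object. */
    static boolean runtimeConcatSharesObject() {
        String part = "ab";
        String s3 = part + "cd"; // concatenated at run time
        return s3 == "abcd";
    }

    /** intern() returns the pooled instance for the same character sequence. */
    static boolean internedSharesObject() {
        String part = "ab";
        String s3 = (part + "cd").intern();
        return s3 == "abcd";
    }

    public static void main(String[] args) {
        System.out.println(constantsShareObject());      // true
        System.out.println(runtimeConcatSharesObject()); // false
        System.out.println(internedSharesObject());      // true
    }
}
```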

- Allow String objects to cache HashCode

Hash codes of String objects are used frequently in Java, for example in containers such as HashMap.

String immutability guarantees that a string's hash code never changes, so it can be safely cached. This is also a performance optimization, because the hash code does not have to be recomputed every time. The definition of the String class contains the following code

private int hash;//Used to cache HashCode

- Security

String is used as a parameter by many Java classes (libraries), for example for network connection URLs, file paths, and the String parameters required by the reflection mechanism. If String were mutable, this would cause various security risks.

If you have the following code

void connect(String s) {

if (!isSecure(s)) {

throw new SecurityException();

}

// If you can modify the String elsewhere, this can cause unexpected problems/errors

causeProblem(s);

}

15.What are the common problems encountered in a multithreaded environment and how can they be solved?

Memory interference, race conditions, deadlocks, livelocks, and starvation are common problems in multithreaded and concurrent programs.

Memory interference

(No material yet)

Race conditions

See 9

Deadlock

See 6

Livelock

- Concept

Party 1 can use the resource, but it lets others use it first; party 2 can use the resource too, but it also lets others go first. Both keep yielding to each other, so neither ever uses the resource. A livelock has a certain chance of resolving itself; a deadlock does not.

- Solution

A simple way to avoid livelock is a first-come, first-served strategy. When multiple transactions request a lock on the same data object, the locking subsystem queues them in the order of their requests. Once the lock on the data object is released, the first transaction in the request queue is granted the lock.

Starvation

- Concept

Suppose transaction T1 has locked data item R and transaction T2 requests a lock on R, so T2 waits. T3 also requests a lock on R. When T1 releases its lock on R, the system grants T3's request first, and T2 keeps waiting. Then T4 requests a lock on R. When T3 releases its lock on R, the system grants T4's request. T2 may wait forever, which is starvation.

- Solution

With a fair lock, each thread calling lock() enters a queue. When the lock is released, only the first thread in the queue is allowed to lock the FairLock instance; all other threads keep waiting until they reach the head of the queue

Code Samples

FairLock class (FairLock.java)

import java.util.ArrayList;

import java.util.List;

public class FairLock {

private final List<QueueObject> waitingThreads = new ArrayList<>();

private boolean isLocked = false;

private Thread lockingThread = null;

public void lock() throws InterruptedException {

QueueObject queueObject = new QueueObject();

boolean isLockedForThisThread = true;

synchronized (this) {

waitingThreads.add(queueObject);

}

while (isLockedForThisThread) {

synchronized (this) {

isLockedForThisThread = isLocked || waitingThreads.get(0) != queueObject;

if (!isLockedForThisThread) {

isLocked = true;

waitingThreads.remove(queueObject);

lockingThread = Thread.currentThread();

return;

}

}

try {

queueObject.doWait();

} catch (InterruptedException e) {

synchronized (this) {

waitingThreads.remove(queueObject);

}

throw e;

}

}

}

public synchronized void unlock() {

if (this.lockingThread != Thread.currentThread()) {

throw new IllegalMonitorStateException("Calling thread has not locked this lock");

}

isLocked = false;

lockingThread = null;

if (waitingThreads.size() > 0) {

waitingThreads.get(0).doNotify();

}

}

}

Queue Object Class (QueueObject.java)

public class QueueObject {

private boolean isNotified = false;

public synchronized void doWait() throws InterruptedException {

while (!isNotified) {

this.wait();

}

this.isNotified = false;

}

public synchronized void doNotify() {

this.isNotified = true;

this.notify();

}

@Override

public boolean equals(Object o) {

return this == o;

}

}

Explanation

First, note that lock() is no longer declared synchronized; instead, only the code that must be synchronized is nested inside synchronized blocks. FairLock creates a new QueueObject instance for each thread that calls lock() and puts it in the queue. The thread that calls unlock() takes the QueueObject at the head of the queue and calls doNotify() on it to wake up the thread waiting on that object. This way only one waiting thread is woken at a time, rather than all waiting threads. This is how FairLock achieves fairness. Note that the lock state is still checked and set inside the same synchronized block, to avoid slipped conditions. Also, QueueObject is actually a semaphore: doWait() and doNotify() store the signal inside the QueueObject. This prevents a missed signal when a thread is preempted just before calling queueObject.doWait() and another thread calls unlock(), and thereby queueObject.doNotify(), in the meantime. The queueObject.doWait() call is placed outside the synchronized(this) block to avoid nested monitor lockout, so another thread can call unlock() as long as no thread is executing inside the synchronized(this) block of lock(). Finally, note that queueObject.doWait() is called inside a try/catch block. If an InterruptedException is thrown, the thread leaves lock(), so its QueueObject must be removed from the queue
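For comparison, the standard library already offers a fair lock: a java.util.concurrent.locks.ReentrantLock constructed with true favors the longest-waiting thread. The following sketch uses it in place of the hand-written FairLock; the FairCounter class and its methods are hypothetical names for illustration.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical counter guarded by a fair ReentrantLock.
class FairCounter {
    // true = fair mode: under contention, the longest-waiting thread gets the lock.
    private final ReentrantLock lock = new ReentrantLock(true);
    private int value;

    int increment() {
        lock.lock();
        try {
            return ++value;
        } finally {
            lock.unlock(); // always release in finally
        }
    }

    int get() {
        lock.lock();
        try {
            return value;
        } finally {
            lock.unlock();
        }
    }

    boolean isFair() {
        return lock.isFair();
    }

    /** Runs several threads against one counter and returns the final value. */
    static int runWorkers(int threads, int incrementsPerThread) {
        FairCounter counter = new FairCounter();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < incrementsPerThread; j++) {
                    counter.increment();
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return counter.get();
    }
}
```

Fair mode usually costs some throughput compared with the default non-fair mode, so it is worth enabling only where starvation is a real concern.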

16.What is the difference between a green thread and a native thread in Java?

Green threads are user-level threads that are scheduled by the JVM and use only one OS thread at a time. Native threads use the operating system's threading support, with one OS thread per Java thread. On JVMs that supported both models, you could choose between them at launch with the -green or -native flag

17.What is the difference between a thread and a process?

A thread is an execution unit within a process and is also the schedulable entity within that process.

Differences from a process

- Address space

A thread is an execution unit within a process, and a process has at least one thread. Threads share the address space of their process, whereas each process has its own address space

- Resource ownership

A process is the unit of resource allocation and ownership, and threads within the same process share the process's resources

- Threads are the basic unit of processor scheduling, but processes are not

- Both can be executed concurrently

Processes and threads are the basic units that the operating system understands when a program runs, and they are used by the system to achieve concurrency of applications.

In short, a program has at least one process and a process has at least one thread. Threads are divided at a smaller scale than processes, which makes multithreaded programs more concurrent.

In addition, each process has its own separate memory during execution, while multiple threads share memory, which greatly improves the efficiency of the program. Threads also differ from processes in how they execute. Each thread has an entry point, a sequential execution sequence, and an exit point. However, a thread cannot execute on its own; it must live inside an application, which provides the execution control for its threads.

Logically, the meaning of multithreading is that in an application, multiple execution parts can be executed simultaneously. However, the operating system does not treat multiple threads as separate applications to achieve process scheduling and management, as well as resource allocation. This is an important difference between processes and threads.

A process is one run of a program with some independent function over a set of data. A process is an independent unit of the system for resource allocation and scheduling.

A thread is an entity within a process and the basic unit of CPU scheduling and dispatching. It is a unit smaller than a process that can run independently. A thread owns almost no system resources of its own, only the few that are essential for running (such as a program counter, a set of registers, and a stack), but it shares all the resources owned by the process with the other threads of that process.

One thread can create and terminate another thread; multiple threads in the same process can execute concurrently

18.What is context switching in multithreading?

The operating system manages the execution of many processes. Some are separate processes from various programs, systems, and applications, and some come from applications or programs that have been broken down into many processes. When one process is removed from a CPU core and another process becomes active, a context switch takes place between the two. The operating system must record all the information needed to restart the first process and to start the new one. This information is called the context and describes the current state of the process. When a process becomes active again, it continues executing from the point where it was preempted.

A context switch between threads occurs when a thread is preempted. If the threads belong to the same process, they share the same address space, because threads are contained within the address space of the process they belong to. Much of the information that must be restored for a process is therefore unnecessary for a thread. Although a process and its threads share a great deal, most importantly the address space and resources, some information is local and unique to each thread, while other aspects of the thread are contained within the segments of the process

19.What is the difference between deadlock and livelock, and between deadlock and starvation?

Deadlock: A situation in which two or more processes wait for each other because they compete for resources during execution. Without outside intervention, none of them can move forward. The system is then said to be in a deadlock state, and the processes that wait for each other forever are called deadlocked processes. Because resources are used exclusively, a process that requests a resource held by another process cannot obtain it without outside help and therefore cannot continue running.

Deadlocks may occur while processes run, but only under certain conditions. A deadlock requires all four of the following necessary conditions

- Mutual exclusion

A process uses the resources allocated to it exclusively, that is, a resource is held by only one process at a time. If another process requests the resource, the requester must wait until the holder releases it.

- Hold and wait

A process already holds at least one resource and requests another one that is held by a different process. The requesting process blocks, but keeps holding the resources it has already acquired

- No preemption

A resource acquired by a process cannot be taken away before the process has finished with it; it can only be released by the process itself

- Circular wait

When a deadlock occurs, there must be a circular chain of processes and resources: in the process set {P0, P1, P2, ..., Pn}, P0 is waiting for a resource held by P1, P1 is waiting for a resource held by P2, ..., and Pn is waiting for a resource held by P0
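Since all four conditions must hold at once, breaking any one of them prevents deadlock. A common technique in Java is to break the circular wait by always acquiring locks in one global order. The sketch below is an illustration under that assumption; the Account class, its id field, and the method names are hypothetical.

```java
// Hypothetical demo: two threads transfer in opposite directions without deadlocking.
class OrderedTransfer {

    static final class Account {
        final int id; // used only to define a global lock order
        long balance;

        Account(int id, long balance) {
            this.id = id;
            this.balance = balance;
        }
    }

    /** Always locks the account with the smaller id first, so no cycle can form. */
    static void transfer(Account from, Account to, long amount) {
        Account first = from.id < to.id ? from : to;
        Account second = first == from ? to : from;
        synchronized (first) {
            synchronized (second) {
                from.balance -= amount;
                to.balance += amount;
            }
        }
    }

    /** Runs opposing transfers concurrently and returns the combined balance. */
    static long runOpposingTransfers(int rounds) {
        Account a = new Account(1, 1000);
        Account b = new Account(2, 1000);
        Thread t1 = new Thread(() -> {
            for (int i = 0; i < rounds; i++) {
                transfer(a, b, 1);
            }
        });
        Thread t2 = new Thread(() -> {
            for (int i = 0; i < rounds; i++) {
                transfer(b, a, 1);
            }
        });
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return a.balance + b.balance;
    }
}
```

If transfer() locked from and then to in argument order, the two threads could each hold one account and wait for the other, which is exactly the circular wait described above.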

Livelock

Party 1 can use the resource, but it lets others use it first; party 2 can use the resource too, but it also lets others go first, so neither ever uses the resource.

A livelock has a certain chance of resolving itself; a deadlock does not.

A simple way to avoid livelock is a first-come, first-served strategy. When multiple transactions request a lock on the same data object, the locking subsystem queues them in the order of their requests. Once the lock on the data object is released, the first transaction in the request queue is granted the lock.

The difference between deadlock and starvation?

See 15

20.What is the thread scheduling algorithm used in Java?

On a machine with a single CPU, only one machine instruction can execute at any moment, and a thread can execute instructions only while it holds the CPU. So-called concurrent multithreaded execution means that, seen from a macro perspective, the threads take turns using the CPU to perform their own tasks. The runnable pool may contain many threads in the ready state waiting for the CPU. One task of the Java virtual machine is thread scheduling, which means assigning the right to use the CPU to multiple threads according to a specific mechanism.

The java virtual machine uses a preemptive scheduling model, which means that threads with the highest priority in the runnable pool occupy the CPU first. If the threads in the runnable pool have the same priority, then a thread is randomly selected to occupy the CPU. A running thread runs until it has to abandon the CPU.

A thread gives up the CPU for the following reasons

- The Java virtual machine makes the current thread temporarily give up the CPU and return to the ready state, so that other threads get a chance to run

- The current thread is blocked for some reason

- The thread finishes running

It is important to note that thread scheduling is not cross-platform; it depends not only on the Java virtual machine but also on the operating system. On some operating systems, a running thread keeps the CPU as long as it does not block.

On other operating systems, a running thread gives other threads a chance to run after a period of time even if it does not block. Java's thread scheduling is not time-sliced: once multiple threads are started, there is no guarantee that they take turns and get equal CPU time slices. If you want a thread to explicitly give another thread the opportunity to run, you can do one of the following.

- Adjust the priority of each thread

- Have a running thread call the Thread.sleep() method

- Have a running thread call the Thread.yield() method

- Have a running thread call another thread's join() method
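Of these options, only join() gives an ordering guarantee; priorities and yield() are hints that the scheduler may ignore. The following sketch (with a hypothetical SchedulingHints class) shows the three calls together.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical demo: priority and yield() are hints, join() is a guarantee.
class SchedulingHints {

    /** Returns the order in which the worker and the caller logged their entries. */
    static List<String> runInOrder() {
        List<String> log = Collections.synchronizedList(new ArrayList<>());
        Thread worker = new Thread(() -> {
            Thread.yield(); // hint only: offers the CPU to other runnable threads
            log.add("worker");
        });
        worker.setPriority(Thread.MAX_PRIORITY); // hint only: effect depends on the OS
        worker.start();
        try {
            worker.join(); // the caller blocks until the worker has finished
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        log.add("caller");
        return log;
    }

    public static void main(String[] args) {
        System.out.println(runInOrder());
    }
}
```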

21.What is thread scheduling in Java?

See 20

22.How do I handle uncaught exceptions in a thread?

There are two ways to handle such exceptions

- Catch the thread's exception and rethrow it to the caller

- Handle exceptions through the thread factory of the thread pool: it installs an UncaughtExceptionHandler whose uncaughtException method writes the error to the log

Sample Code

public class TestThread implements Runnable {

@Override

public void run() {

throw new RuntimeException("throwing runtimeException.....");

}

}

When the thread's code throws a runtime exception, the thread terminates. The main thread is not affected: it does not handle the exception, cannot catch it at all, and simply continues executing its own code

Method 1 code example

import com.wujunshen.thread.lock.exception.TestThread;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Executors;

import java.util.concurrent.Future;

import lombok.extern.slf4j.Slf4j;

import org.apache.commons.lang3.exception.ExceptionUtils;

@Slf4j

public class TestMain {

public static void main(String[] args) {

try {

TestThread t = new TestThread();

ExecutorService exec = Executors.newCachedThreadPool();

Future<?> future = exec.submit(t);

exec.shutdown();

future.get(); // The key statement: get() rethrows the task's exception, wrapped in an ExecutionException

} catch (Exception e) { // The thread's exception can be handled or rethrown here

log.error(ExceptionUtils.getStackTrace(e));

}

}

}

Method 2 code example

HandlerThreadFactory.java

import java.util.concurrent.ThreadFactory;

import org.jetbrains.annotations.NotNull;

public class HandlerThreadFactory implements ThreadFactory {

@Override

public Thread newThread(@NotNull Runnable runnable) {

Thread t = new Thread(runnable);

MyUncaughtExceptionHandler myUncaughtExceptionHandler = new MyUncaughtExceptionHandler();

t.setUncaughtExceptionHandler(myUncaughtExceptionHandler);

return t;

}

}

MyUncaughtExceptionHandler.java

import lombok.extern.slf4j.Slf4j;

@Slf4j

public class MyUncaughtExceptionHandler implements Thread.UncaughtExceptionHandler {

@Override

public void uncaughtException(Thread t, Throwable e) {

log.error("Uncaught exception in thread {}", t.getName(), e);

}

}

TestMain.java

import com.wujunshen.thread.lock.exception.TestThread;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Executors;

import lombok.extern.slf4j.Slf4j;

import org.apache.commons.lang3.exception.ExceptionUtils;

@Slf4j

public class TestMain {

public static void main(String[] args) {

try {

TestThread t = new TestThread();

ExecutorService exec = Executors.newCachedThreadPool(new HandlerThreadFactory());

exec.execute(t);

exec.shutdown(); // Lets the pool terminate once the task has finished

} catch (Exception e) {

log.error(ExceptionUtils.getStackTrace(e));

}

}

}

23.What are thread groups and why are they not recommended in Java?

A ThreadGroup represents a collection of threads. A thread group can also contain other thread groups. Thread groups form a tree in which every thread group except the initial one has a parent thread group.

A thread is allowed to access information about its own thread group, but not about its parent thread group or any other thread group. The purpose of a thread group is to manage threads

Why Thread Groups Are Not Recommended

A thread group only organizes threads for management. A thread pool additionally saves the overhead of frequently creating and destroying threads and improves thread efficiency, so thread pools are generally used instead.

Derived Question: What is the difference between a thread group and a thread pool?

A thread's life cycle has three phases: creation, running, and destruction. To handle a task, a thread is first created, then runs the task, and is destroyed when the task finishes. Only while running is the thread actually doing the work we gave it; this phase is the effective run time. So the fewer resources spent on creating and destroying threads, the better. If a thread is not destroyed but cannot be reused by other tasks, resources are wasted as well. To improve efficiency and reduce the time and space wasted on creating and destroying threads, thread pools emerged. A pool creates a certain number of threads up front and keeps them waiting for tasks to arrive. When a task finishes, its thread can go on to perform other tasks, and threads are destroyed only when they are no longer needed. This saves the cost of frequently creating and destroying threads.

24.Why is it better to use the Executor framework than to have the application create and manage threads itself?

Most concurrent applications are organized around tasks as the basic unit. Usually, we create a separate thread to execute each task.

This creates two problems

- A large number of threads (>100) consume system resources, increasing the overhead of thread scheduling and causing performance degradation

- Frequently creating and destroying threads is not a wise choice for tasks with short life cycles, because the overhead of creating and destroying threads may outweigh the performance benefit of using multiple threads

A more reasonable way to use multithreading is a thread pool. java.util.concurrent provides a flexible thread pool implementation: the Executor framework. This framework can be used for asynchronous task execution and supports many different types of task execution strategies. It also provides a standard way to decouple task submission from task execution and a common way to describe tasks using Runnable. Executor implementations also provide lifecycle support and hook methods, which can be used to add extensions such as statistics collection, application management mechanisms, and monitors.

Executing tasks in a thread pool reuses existing threads instead of creating new ones. This reduces the overhead of thread creation and destruction when many tasks are processed. In addition, worker threads usually already exist when a task arrives, so the task is not delayed by waiting for a thread to be created, which improves responsiveness. By tuning the size of the thread pool appropriately, you can have enough threads to keep the processor busy while preventing too many threads from competing for resources and making the application spend too many resources on thread management
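Thread reuse can be made visible by counting how many distinct worker threads execute a batch of tasks. This is a small sketch with hypothetical names (PoolReuseDemo, distinctWorkers); it uses a fixed-size pool from the Executors factory class.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical demo: many tasks, few threads.
class PoolReuseDemo {

    /** Runs the given number of tasks on a fixed pool and counts the threads used. */
    static int distinctWorkers(int poolSize, int tasks) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        Set<String> workerNames = ConcurrentHashMap.newKeySet();
        try {
            List<Future<?>> futures = new ArrayList<>();
            for (int i = 0; i < tasks; i++) {
                // Submission is decoupled from execution: the pool picks the thread.
                futures.add(pool.submit(() -> {
                    workerNames.add(Thread.currentThread().getName());
                }));
            }
            for (Future<?> f : futures) {
                f.get(); // wait for each task to complete
            }
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown(); // no new tasks; idle workers terminate
        }
        return workerNames.size();
    }

    public static void main(String[] args) {
        System.out.println("50 tasks ran on " + distinctWorkers(2, 50) + " thread(s)");
    }
}
```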

25.What is the difference between Executor and Executors in Java?

Executor is an interface used to perform Runnable tasks; it defines only one method, execute(Runnable command); it performs tasks of type Runnable.

Executors is a utility class that provides a series of factory methods for creating thread pools; the pools it returns implement the ExecutorService interface.

Several important Executors methods

- callable(Runnable task)

Converts a Runnable task into a Callable task

- newSingleThreadExecutor()

Produces an ExecutorService object that has only one thread available to perform tasks. If there is more than one task, the tasks are executed sequentially

- newCachedThreadPool()

Produces an ExecutorService object with a thread pool whose size adjusts as needed; a thread that finishes a task returns to the pool to run the next task

- newFixedThreadPool(int poolSize)

Produces an ExecutorService object with a thread pool of size poolSize. If the number of tasks is greater than poolSize, the extra tasks wait in a queue and are executed in order

- newSingleThreadScheduledExecutor()

Produces a ScheduledExecutorService object with a thread pool size of 1. If there is more than one task, the tasks are executed in sequence

- newScheduledThreadPool(int poolSize)

Produces a ScheduledExecutorService object whose thread pool size is poolSize. If the number of tasks is greater than poolSize, the extra tasks wait in a queue for execution
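A few of these factory methods can be combined in a short sketch. The class name ExecutorsFactoryDemo and the result strings are assumptions for illustration; the two-argument Executors.callable(Runnable, T) variant is used so that the converted task returns a value.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Hypothetical demo of some Executors factory methods.
class ExecutorsFactoryDemo {

    /** Wraps a Runnable as a Callable and runs it on a single-thread executor. */
    static String runOnSingleThread() {
        Runnable task = () -> System.out.println("running on " + Thread.currentThread().getName());
        Callable<String> callable = Executors.callable(task, "done"); // result returned after run()
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            return executor.submit(callable).get();
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            executor.shutdown();
        }
    }

    /** Runs a task once after the given delay on a scheduled executor. */
    static String runScheduled(long delayMillis) {
        Callable<String> callable = Executors.callable(() -> { }, "scheduled");
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        try {
            return scheduler.schedule(callable, delayMillis, TimeUnit.MILLISECONDS).get();
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            scheduler.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(runOnSingleThread());
        System.out.println(runScheduled(10));
    }
}
```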

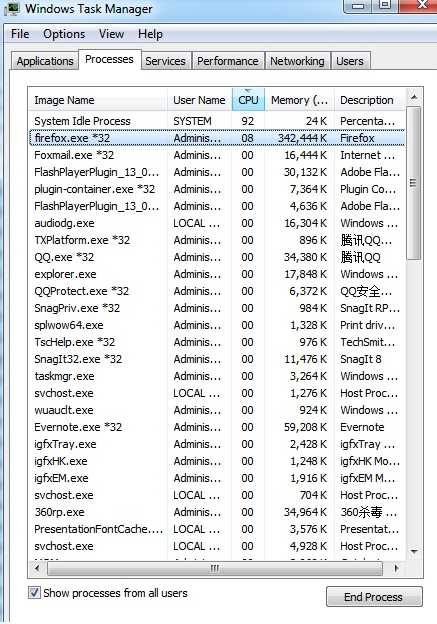

26.How do I find which thread is using the longest CPU time on Windows and Linux?

It's actually looking for the thread with the highest CPU share

Windows

Inside Task Manager, see the following

Linux

Use the following command to find out which threads are cpu-intensive

$ ps H -eo user,pid,ppid,tid,time,%cpu,cmd --sort=%cpu

The 'H' parameter makes ps list threads, and -eo selects all processes and sets the output format to

user,pid,ppid,tid,time,%cpu,cmd

The output is then sorted by the %cpu field in ascending order, so the threads that occupy the processor the most appear at the end of the list
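Once ps has identified a busy thread id (tid), the usual next step is to find that thread in a Java thread dump. HotSpot thread dumps, like the ones quoted earlier in this appendix, print the native thread id in hexadecimal as the nid field (for example nid=0x125), so the decimal tid has to be converted first. A minimal sketch, with a hypothetical NidConverter class:

```java
// Hypothetical helper for matching ps output against thread dump entries.
class NidConverter {

    /** Converts a decimal tid from ps into the hexadecimal form used by nid=... */
    static String toNid(long tid) {
        return "0x" + Long.toHexString(tid);
    }

    /** Converts a nid value such as "0x125" back into a decimal tid. */
    static long fromNid(String nid) {
        return Long.decode(nid); // Long.decode understands the 0x prefix
    }

    public static void main(String[] args) {
        System.out.println(toNid(293));       // 0x125
        System.out.println(fromNid("0x125")); // 293
    }
}
```

Searching the thread dump for the resulting nid value leads directly to the stack trace of the thread that is consuming the CPU.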

Reference material

- Java Multithreaded Interview Question Summary

- Producer-Consumer Problem (Wikipedia Chinese)

- Producer-Consumer Problem (Baidu Encyclopedia)

- Dining Philosophers Problem (Wikipedia Chinese)

- Dining Philosophers Problem (Baidu Encyclopedia)

- Three examples demonstrate Java Thread Dump log analysis: http://www.cnblogs.com/zhengyun_ustc/archive/2013/01/06/dumpanalysis.html

- All sample source addresses in this article: https://gitee.com/darkranger/beating-interviewer/tree/master/src/main/java/com/wujunshen/thread