Concurrent programming

History of operating system

# The history of concurrent programming is essentially the history of the operating system (its underlying logic).

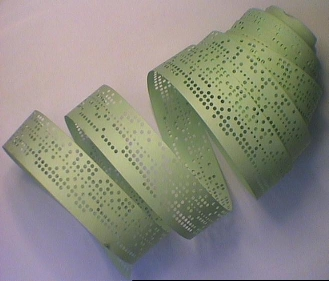

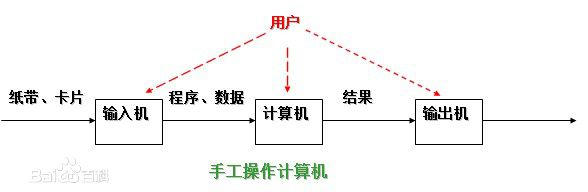

1. Punch card era

CPU utilization was extremely low.

The programmer loads the punched paper tape (or cards) holding the program and data into the input machine, starts the input machine to read them into memory, and then starts the program from the console switches. When the calculation finishes, the printer outputs the results. Only after the user has collected the results and removed the tape (or cards) can the next user take the machine.

Manual operation has two characteristics:

(1) The user owns the whole machine. Nobody waits because a resource is held by another user, but resource utilization is low.

(2) The CPU waits for manual operation, so the CPU is underused.

In the late 1950s a man-machine contradiction emerged: manual operation was slow, while the computer was fast. Manual operation dragged resource utilization down to a few percent or even lower, which was intolerable. The only way out was to remove manual operation and make the transition between jobs automatic. This led to batch processing.

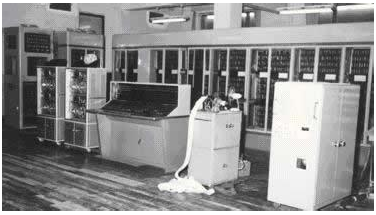

2. Online batch processing system

A storage device, magnetic tape, is added between the host and the input machine. Under the automatic control of a supervisor program running on the host, the computer reads user jobs from the input machine onto the tape in batches, reads the jobs from the tape into host memory and executes them one by one, and sends the results to the output machine. When a batch is finished, the supervisor program reads the next batch from the input machine, saves it on tape, and repeats the same steps. Because the supervisor program processes jobs continuously, the transition from job to job is automatic: job setup time and manual operation time shrink, the man-machine contradiction is largely overcome, and machine utilization improves. However, during job input and result output the fast host CPU still sits idle, waiting for the slow input/output devices to finish: the host is in a "busy waiting" state.

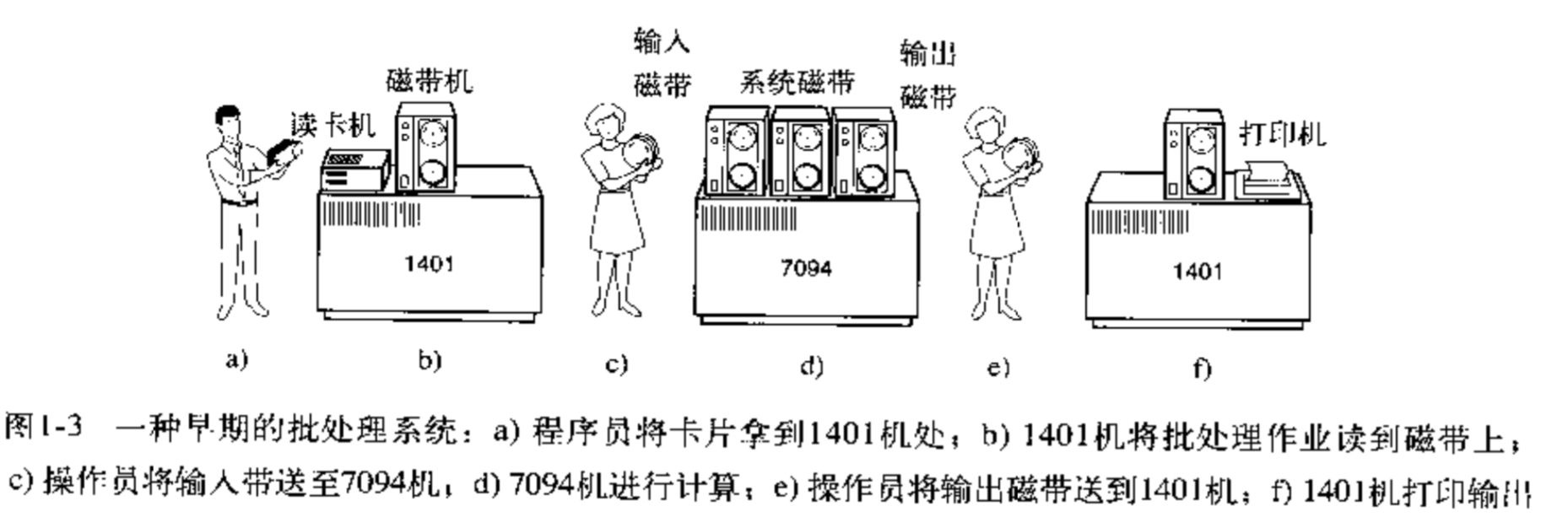

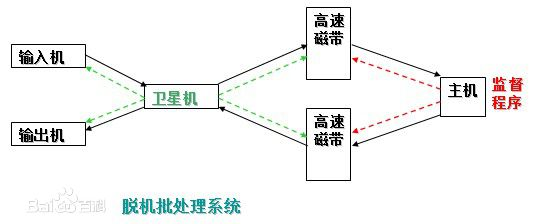

3. Offline batch processing system

To ease the mismatch between the fast host and the slow peripherals and to improve CPU utilization, the offline batch processing system was introduced: input/output is taken out of the host's direct control.

Satellite machine: a machine that is not directly connected to the host but is specially used to deal with input / output equipment.

Its functions are:

(1) read the user job from the input machine and put it on the input tape.

(2) read the execution result from the output tape and transmit it to the output machine.

In this way, the host does not directly deal with slow input / output devices, but with relatively fast tape drives, which effectively alleviates the contradiction between the host and devices. The host computer and satellite computer can work in parallel, and their division of labor is clear, which can give full play to the high-speed computing ability of the host computer.

Offline batch processing was widely used in the 1960s. It greatly eased both the man-machine contradiction and the contradiction between host and peripherals.

Shortcoming: only one job is held in host memory at a time. Whenever that job issues an input/output (I/O) request while running, the fast CPU waits for the slow I/O to complete and sits idle.

In order to improve the utilization of CPU, a multiprogramming system is introduced.

Multichannel technology

# Premise of multichannel technology (multiprogramming): a single-core CPU (one core acts as one CPU; a 4-core chip acts as 4 CPUs)

Multichannel technology boils down to: switching + saving state.

When the first program starts: this program takes a long time to run. While the CPU is still serving it, a second program needs to start.

When the second program starts (it takes only a short time to run): the CPU switches to it and saves the execution point of the first program. After the second program finishes, the CPU goes back to the saved execution point of the first program and continues from there.

"""

How the CPU is scheduled

1. When a program enters the I/O state, the operating system automatically takes away its right to execute on the CPU.

2. When a program occupies the CPU for a long time, the operating system also takes away its right to execute on the CPU.

"""

Multiprogramming means allowing several programs to reside in memory and be in progress at the same time: the programs are loaded into memory together, take turns on the CPU, and share the hardware and software resources of the system. When one program is suspended because of an I/O request, the CPU immediately switches to another program.
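The "switch + save state" idea can be sketched with Python generators. This is only a toy model: the names `program` and `run_all` are invented for illustration, each `yield` stands in for the moment a program gives up the CPU, and the generator object plays the role of the saved execution point. A real operating system saves registers and switches inside the kernel.

```python
from collections import deque

def program(name, steps, log):
    """A toy 'program' that gives up the CPU after every step."""
    for step in range(1, steps + 1):
        log.append(f"{name} step {step}")
        yield  # give up the CPU; the generator remembers where it stopped

def run_all(programs):
    """Switch between programs until every one of them has finished."""
    ready = deque(programs)
    while ready:
        current = ready.popleft()
        try:
            next(current)          # resume from the saved execution point
            ready.append(current)  # not finished yet: back of the queue
        except StopIteration:
            pass                   # this program has finished

log = []
run_all([program("A", 3, log), program("B", 2, log)])
print(log)
```

The log shows A and B alternating step by step, even though only one of them is executing at any instant.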

# Metaphor: a waiter serves five tables. He hands a menu to the first table, then moves on to the next table and hands over a menu there, and so on; then he returns to the first table and takes the orders in turn. The CPU does the same, only so fast that users cannot notice. This is multichannel technology: it appears to serve multiple programs at the same time.

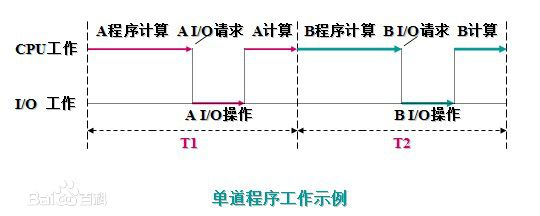

The I/O devices are idle while program A computes, and the CPU is idle while program A performs I/O (the same is true for program B). Only after A has finished can B enter memory and start working. The two run serially, so the total time to complete both is T1 + T2.

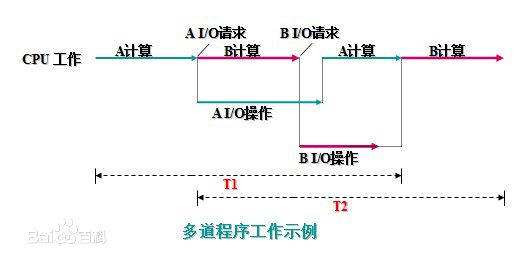

Store programs A and B in memory at the same time and let them take turns on the CPU under the control of the system. When program A gives up the CPU because it requests an I/O operation, program B can use the CPU, so the CPU is no longer idle, and the I/O device serving A is not idle either. Both the CPU and the I/O devices are kept busy, which greatly improves resource utilization and system efficiency. The time needed to complete both A and B is well below T1 + T2 (<< T1 + T2).
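A small tick-based simulation makes the saving concrete. It is a sketch under stated assumptions, not a model of any real system: each program is a hypothetical string of one-tick bursts (`C` for CPU, `I` for I/O), there is a single CPU, and every program is assumed to have its own I/O device so that I/O never has to queue.

```python
def serial_time(programs):
    """Total ticks when programs run strictly one after another."""
    return sum(len(p) for p in programs)

def multiprogrammed_time(programs):
    """Total ticks when programs share one CPU and overlap their I/O."""
    pos = [0] * len(programs)   # next burst of each program
    ticks = 0
    while any(pos[i] < len(p) for i, p in enumerate(programs)):
        cpu_taken = False
        for i, p in enumerate(programs):
            if pos[i] >= len(p):
                continue            # this program has finished
            if p[pos[i]] == "I":
                pos[i] += 1         # I/O proceeds without the CPU
            elif not cpu_taken:
                pos[i] += 1         # this program gets the CPU this tick
                cpu_taken = True
        ticks += 1
    return ticks

A = "CCIIIICC"   # 2 ticks of CPU, 4 of I/O, 2 of CPU
B = "CIICCC"     # 1 tick of CPU, 2 of I/O, 3 of CPU
print(serial_time([A, B]), multiprogrammed_time([A, B]))
```

With these made-up workloads the serial run takes 14 ticks and the multiprogrammed run takes 10, because B computes while A waits for I/O.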

Multiprogramming not only makes full use of the CPU but also improves the utilization of I/O devices and memory. This raises the resource utilization and throughput of the whole system (the number of jobs (programs) processed per unit time) and ultimately its overall efficiency.

Features of multiprogramming in single processor system:

(1) Multiple programs: several independent programs are held in memory at the same time;

(2) Macroscopically parallel: several programs that have entered the system are all in progress, that is, each has started running but none has finished;

(3) Microscopically serial: in fact the programs take turns on the CPU and run alternately.

The emergence of multiprogramming systems marks the gradual maturing of the operating system, which now provides job scheduling, processor management, memory management, external device management, file system management, and so on.

Because several programs are in memory at the same time, the concept of space isolation appears: only when memory spaces are isolated from each other are the data safe and stable.

Besides space isolation, multichannel technology also shows space-time multiplexing for the first time: when a program hits an I/O operation, the CPU switches to another program, which improves CPU utilization and the working efficiency of the computer.

Concurrency and parallelism

Parallel: multiple programs are executed at the same instant (this requires more than one CPU).

Concurrent: multiple programs only need to look as if they are running at the same time (the switching is so fast that users cannot tell).

# A single-core CPU cannot achieve parallelism; it can only achieve concurrency.

# For example, 12306 can support hundreds of millions of users buying tickets at the same time. Is that parallel or concurrent? It must be concurrent, and highly concurrent, because there cannot be hundreds of millions of CPUs serving hundreds of millions of users at once. "Star derailment": how many celebrity scandals breaking at the same time Weibo can withstand. Weibo has said it can handle eight. # So "star derailment" is also used as a measure of high concurrency.

Process theory

Difference between process and program

Program: a pile of code lying on disk (dead).

Process: a running program (alive).

# For example, when you open KuGou Music and listen to songs, that is a process. If you never open it, it is at most a program stored on the hard disk.

Process scheduling in the case of single core

Evolution of process scheduling algorithms

1. FCFS (first come, first served): unfriendly to short jobs

If the first process takes 24 hours and the following processes need only 1 s or 2 s, the following processes are treated very unfairly: they must wait for the 24-hour job to finish.

2. Short job first scheduling: unfriendly to long jobs

If a long job is waiting and many short jobs keep arriving, the short jobs always go first and the long job may have to wait indefinitely.
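The 24-hour example can be put into numbers. The sketch below assumes all three jobs arrive at time 0 and run without preemption; `waiting_times` is a helper name invented for illustration.

```python
def waiting_times(bursts):
    """Waiting time of each job when jobs run in the given order."""
    waits, clock = [], 0
    for burst in bursts:
        waits.append(clock)   # a job waits until all earlier jobs are done
        clock += burst
    return waits

jobs = [86400, 1, 2]                 # seconds; the 24-hour job arrives first
fcfs = waiting_times(jobs)           # run in arrival order
sjf = waiting_times(sorted(jobs))    # run the shortest job first

print(fcfs, sum(fcfs) / len(fcfs))
print(sjf, sum(sjf) / len(sjf))
```

Under FCFS the two short jobs wait a full day. Under short job first they wait 0 s and 1 s, and only the long job waits (3 s here, but without bound if short jobs keep arriving).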

3. Time-slice round-robin + multilevel feedback queue (the most widely used approach at present)

Time-slice round-robin: give every process a time slice of the same length and let each one run for a while, so the user feels that every program is running. After each process has used its time slice, the scheduler comes back around and classifies the process by how many time slices it has consumed; long jobs are moved down to a lower level (this is the multilevel feedback queue).

Multilevel feedback queue: processes are divided into levels according to the time slices they have consumed. If the user opens a new process while a long job is running, the long job is paused and the time slice goes to the newly opened process first, so that the user sees the new program start promptly.
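Round-robin on its own can be sketched in a few lines. This is a simplified illustration: the job names, burst lengths, and the quantum of 2 are made up, all jobs arrive at time 0, and the multilevel feedback part (demoting long jobs to lower-priority queues) is deliberately left out.

```python
from collections import deque

def round_robin(bursts, quantum):
    """Return the completion time of each job under round-robin."""
    remaining = dict(bursts)   # time each job still needs
    ready = deque(bursts)      # job names in arrival order
    clock = 0
    finished = {}
    while ready:
        name = ready.popleft()
        run = min(quantum, remaining[name])   # run for at most one time slice
        clock += run
        remaining[name] -= run
        if remaining[name] == 0:
            finished[name] = clock
        else:
            ready.append(name)                # back to the end of the queue
    return finished

result = round_robin({"long": 10, "short1": 1, "short2": 2}, quantum=2)
print(result)
```

The short jobs finish at times 3 and 5 instead of waiting behind the long job, while the long job still completes at time 13.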

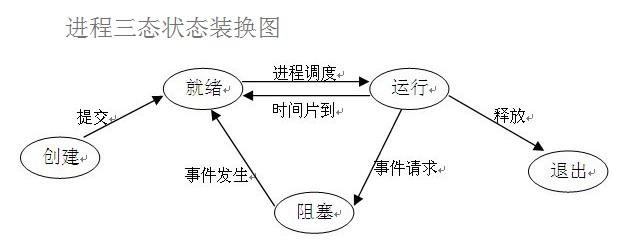

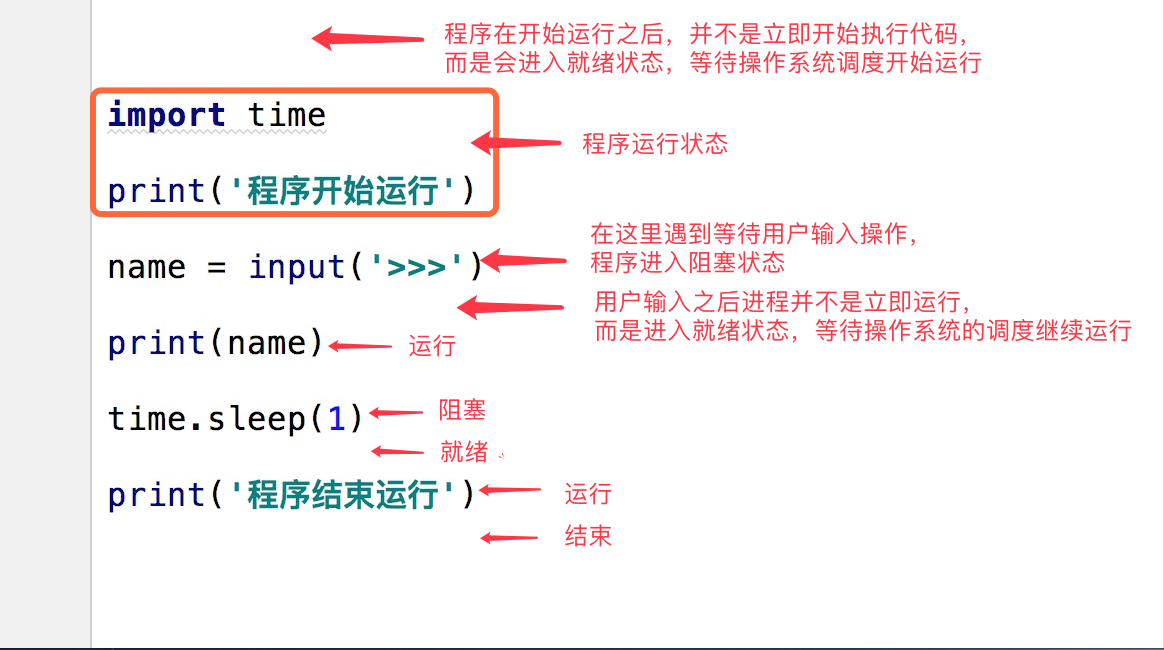

Process three state diagram

To enter the running state, a process must first pass through the ready state.

(1) Ready state: the process has been allocated every resource it needs except the CPU and can execute as soon as it obtains the processor.

(2) Running state: the process has obtained the processor and its program is executing on it.

(3) Blocked state: a running process cannot continue because it is waiting for some event, so it gives up the processor. Many kinds of events can block a process, for example waiting for I/O to complete, a buffer request that cannot be satisfied, or waiting for a signal.
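The three states can be written down as a small transition table. The set of allowed transitions below is the usual textbook one and is assumed to match the diagram; `State`, `ALLOWED`, and `can_move` are names invented for this sketch.

```python
from enum import Enum

class State(Enum):
    READY = "ready"
    RUNNING = "running"
    BLOCKED = "blocked"

# Allowed (from, to) pairs in the three-state model
ALLOWED = {
    (State.READY, State.RUNNING),    # the scheduler gives the process the CPU
    (State.RUNNING, State.READY),    # the time slice is used up
    (State.RUNNING, State.BLOCKED),  # the process waits for an event such as I/O
    (State.BLOCKED, State.READY),    # the awaited event has occurred
}

def can_move(src, dst):
    """Return True if the three-state model allows this transition."""
    return (src, dst) in ALLOWED

print(can_move(State.BLOCKED, State.RUNNING))
```

A blocked process cannot jump straight to running: once its event arrives it goes back to ready and waits for the scheduler.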

Synchronous and asynchronous

## Describes how a task is submitted

Synchronous: after submitting the task, wait in place for its result and do nothing else in the meantime.

Asynchronous: after submitting the task, do not wait for its result; go on to other things. The result is delivered automatically by a feedback (callback) mechanism.

Example: washing clothes, cooking, and sweeping the floor (with a robot) at the same time. Put the clothes into the washing machine, start the sweeping robot, and then cook. # You would not stand next to the washing machine until it finishes, then start the sweeping robot and wait for it to finish, and only then cook.
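The two ways of submitting a task can be sketched with a thread pool from the standard library. This is an illustration only: `wash` is a made-up stand-in for a slow task, and a thread pool is just one of several ways to submit work asynchronously in Python.

```python
from concurrent.futures import ThreadPoolExecutor
import time

def wash(item):
    time.sleep(0.1)   # stands in for a slow task
    return f"{item} washed"

results = []

with ThreadPoolExecutor(max_workers=2) as pool:
    # Synchronous style: submit, then wait right here for the result.
    sync_result = pool.submit(wash, "shirt").result()

    # Asynchronous style: submit, register a callback, and carry on.
    future = pool.submit(wash, "socks")
    future.add_done_callback(lambda f: results.append(f.result()))
    other_work = "cooking while the washing runs"
# Leaving the with-block waits for any task that is still running.

print(sync_result, results, other_work)
```

In the asynchronous half the caller never asks for the result; the callback puts it into `results` when the task completes.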

Blocking and non blocking

Blocking and non-blocking describe the state of the program (thread) while it waits for a message notification; whether the notification is synchronous or asynchronous does not matter. In other words, they are about what the waiting program (thread) is doing.

Take handling business at a bank as an example. Whether you stand in line or take a number and wait to be called, if you can do nothing else while waiting for the notification, the mechanism is blocking. In a program, this means the program is stuck in the function call and cannot continue. By contrast, some people like to make phone calls and send text messages while they wait at the bank. That state is non-blocking, because the waiting person is not stuck on the notification but does other things while waiting.

Note: the synchronous non-blocking form is actually inefficient. Imagine having to look up from your phone call again and again to check whether your turn has come. If making the call and checking the queue are treated as two operations of a program, the program has to switch back and forth between them, and the efficiency is correspondingly low. The asynchronous non-blocking form has no such problem: making the call is your business (the waiting person), and notifying you is the counter's business (the message trigger mechanism), so the program does not switch back and forth between two operations.
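In Python, the difference shows up directly in how a call behaves when nothing is available yet. The sketch below uses the standard `queue` module as a stand-in for "waiting for a notification"; the message text is made up, and the timeout is there only so the blocking call cannot hang forever.

```python
import queue

messages = queue.Queue()

# Non-blocking: ask once and return immediately, even if nothing is there.
try:
    messages.get(block=False)
    got_message = True
except queue.Empty:
    got_message = False   # nothing yet; the caller is free to do other things

# Blocking: the call does not return until a message arrives (or the timeout expires).
messages.put("your number is called")
notice = messages.get(block=True, timeout=1)

print(got_message, notice)
```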

Synchronous / asynchronous and blocking / non blocking

- Synchronous blocking form

The least efficient. In the example above, you concentrate on queuing and do nothing else.

- Asynchronous blocking form

Suppose the person waiting at the bank waits for the notification asynchronously, that is, he takes a numbered ticket. If he still cannot leave the bank to do other things in the meantime, he is clearly blocked on the waiting operation.

An asynchronous operation can block; it blocks not while processing the message but while waiting for the notification.

- Synchronous non blocking form

It is actually inefficient.

Imagine that while you are on the phone you still have to look up to check whether the queue has reached you. If making the call and checking the queue are treated as two operations of a program, the program has to switch back and forth between them, and the efficiency is correspondingly low.

- Asynchronous non blocking form

More efficient.

Making the call is your business (the waiting person) and notifying you is the counter's business (the message trigger mechanism), so the program does not switch back and forth between two operations.

For example, the person suddenly craves a cigarette and needs to step outside, so he asks the lobby manager to come out and tell him when his number is called. He is then not blocked on the waiting operation. This is the asynchronous + non-blocking form.

Many people confuse synchronous with blocking because synchronous operations usually show up in blocking form. Likewise, many people confuse asynchronous with non-blocking because asynchronous operations generally do not block at the actual I/O operation.
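The synchronous non-blocking form (looking up from the phone to check the queue) corresponds to polling. A minimal sketch, assuming a made-up `serve_customer` task and a thread pool as the "counter":

```python
from concurrent.futures import ThreadPoolExecutor
import time

def serve_customer():
    time.sleep(0.2)   # the counter is busy with earlier customers
    return "your turn"

polls = 0
with ThreadPoolExecutor(max_workers=1) as pool:
    future = pool.submit(serve_customer)
    while not future.done():   # non-blocking check: "is it my turn yet?"
        polls += 1             # a bit of other work (the phone call)
        time.sleep(0.05)
    answer = future.result()   # already finished, so this returns at once

print(answer, polls)
```

The caller is never stuck, but it pays for that with repeated checks. Registering a callback on the future instead would remove the polling loop, which is the asynchronous non-blocking form.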

Create process

    # Creating a process in code
    from multiprocessing import Process
    import time
    import os


    def test(name):
        print(os.getpid())   # process ID of this (child) process
        print(os.getppid())  # process ID of the parent process
        print(f'{name} is running')
        time.sleep(2)
        print(f'{name} has finished')


    if __name__ == '__main__':
        p = Process(target=test, args=('jason',))  # create a process object
        p.start()  # ask the operating system to create the process (asynchronous submission)
        print(os.getpid())
        print('Main process')

"""

On Windows, creating a process is similar to importing a module:

the file is executed again from top to bottom,

so the code that starts the process must be placed under the if __name__ == '__main__' check.

On Linux (where the parent process is forked), a complete copy of the code is made for execution,

so the __main__ check is not strictly required.

"""

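    # Second way: subclass Process and override run()
    from multiprocessing import Process
    import time


    class MyProcess(Process):
        def __init__(self, name):
            super().__init__()
            self.name = name  # set after super().__init__() so it is not overwritten

        def run(self):
            print('%s is running' % self.name)
            time.sleep(3)
            print('%s is over' % self.name)


    if __name__ == '__main__':
        p = MyProcess('jason')
        p.start()
        print('main')

    # Typical execution result ('main' usually appears first,
    # because starting the child process takes time):
    # main
    # jason is running
    # jason is over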
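The difference between Windows and Linux described above comes from the start method that `multiprocessing` uses: "spawn" starts a fresh interpreter and re-imports the main module, while "fork" copies the parent process. The default depends on the platform and the Python version, so rather than assuming it, it can be checked:

```python
import multiprocessing

# The start method in use on this machine, e.g. 'fork', 'spawn' or 'forkserver'
current = multiprocessing.get_start_method()

# Every start method this platform supports
available = multiprocessing.get_all_start_methods()

print(current, available)
```

Keeping the `if __name__ == '__main__'` check in every script is the safe choice, because it works under all start methods.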

join method of process

join method: the main process waits for the child process to finish before it continues.

    from multiprocessing import Process
    import time


    def test(name, n):
        print('%s is running' % name)
        time.sleep(n)
        print('%s is over' % name)


    if __name__ == '__main__':
        p_list = []
        start_time = time.time()
        for i in range(1, 4):
            p = Process(target=test, args=(i, i))
            p.start()
            p_list.append(p)
            # p.join()  # joining here makes the children run serially: 1 + 2 + 3 = 6s+
        for p in p_list:
            p.join()  # wait for every child; the total is about the longest sleep (3s+)
        print(time.time() - start_time)
        print('Main process')

    # Execution result (the order of the "is running" lines may vary):
    # 1 is running
    # 3 is running
    # 2 is running
    # 1 is over
    # 2 is over
    # 3 is over
    # 3.190359592437744
    # Main process

Processes cannot interact by default

    # Data in different processes is isolated
    from multiprocessing import Process

    money = 100


    def test():
        global money
        money = 999  # changes only the child process's own copy


    if __name__ == '__main__':
        p = Process(target=test)
        p.start()
        p.join()  # make sure the child process has finished before printing
        print(money)

    # Execution result: 100

Object method

1. current_process().pid  # view the process ID (current_process is imported from multiprocessing)

2. os.getpid()  # view the process ID; os.getppid()  # view the parent process ID

3. p.name  # the name of the process; it has a default value, or pass name='...' as a keyword argument when creating the process object

4. p.terminate()  # kill the child process

5. p.is_alive()  # check whether the process is still alive

# Calling 4 and then 5 immediately may not show the expected result, because the operating system needs time to react. The effect is visible if the main process sleeps for 0.1 s in between.
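The timing issue with `terminate()` and `is_alive()` can be observed with a short sketch. `terminate_demo` is a name invented for illustration, and the child simply runs `time.sleep(10)` as a stand-in for real work. The second value depends on how quickly the operating system reacts, so no fixed output is claimed for it.

```python
from multiprocessing import Process
import time

def terminate_demo():
    """Start a sleeping child, kill it, and report is_alive() over time."""
    p = Process(target=time.sleep, args=(10,))
    p.start()
    alive_at_start = p.is_alive()
    p.terminate()                       # ask the OS to kill the child
    alive_right_after = p.is_alive()    # may still be True for a moment
    time.sleep(0.1)                     # give the OS time to react
    alive_after_pause = p.is_alive()
    p.join()                            # clean up the child process
    return alive_at_start, alive_right_after, alive_after_pause

if __name__ == '__main__':
    print(terminate_demo())
```

Calling `p.join()` after `p.terminate()` is a more reliable way to wait for the child to disappear than a fixed sleep.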