Making computer programs run concurrently is a topic that is often discussed. Today I want to discuss various concurrency methods under Python.

Concurrent mode

Thread ([thread])

Multithreading is almost every program ape. When using each language, he will first think of tools to solve concurrency (JS programmers, please avoid). Using multithreading can effectively use CPU resources (Python exception). However, the complexity of programs brought by multithreading is inevitable, especially the synchronization of competing resources.

However, due to the use of global interpretation lock (GIL) in Python, the code can not run concurrently on multiple cores at the same time, that is, python multithreading can not be concurrent. Many people will find that after using multithreading to improve their Python code, the running efficiency of the program decreases. What a painful thing! In fact, it is very difficult to use multithreaded programming model. Programmers are easy to make mistakes. This is not programmers' fault, because parallel thinking is anti-human. Most of us think serially (schizophrenia is not discussed), and the computer architecture designed by von Neumann is also based on sequential execution. So if you can't get your multithreaded program done, Congratulations, you're a normal thinking program Ape:)

Python provides two groups of thread interfaces. One is the thread module, which provides a basic, low-level interface and uses Function as the running body of the thread. Another group is the threading module, which provides an easier to use object-based interface (similar to Java). It can inherit the thread object to implement threads. It also provides other thread related objects, such as Timer and Lock

Example of using thread module

import thread

def worker():

"""thread worker function"""

print 'Worker'

thread.start_new_thread(worker)Example of using threading module

import threading

def worker():

"""thread worker function"""

print 'Worker'

t = threading.Thread(target=worker)

t.start()Or Java Style

import threading

class worker(threading.Thread):

def __init__(self):

pass

def run():

"""thread worker function"""

print 'Worker'

t = worker()

t.start()Process

Due to the problem of global interpretation lock mentioned earlier, a better parallel method in Python is to use multiple processes, which can make very effective use of CPU resources and achieve real concurrency. Of course, the cost of processes is greater than threads, that is, if you want to create an amazing number of concurrent processes, you need to consider whether your machine has a strong heart.

Python's mutlipprocess module has a similar interface to threading.

from multiprocessing import Process

def worker():

"""thread worker function"""

print 'Worker'

p = Process(target=worker)

p.start()

p.join()Because threads share the same address space and memory, the communication between threads is very easy, but the communication between processes is more complex. Common interprocess communications include, pipes, message queues, Socket interfaces (TCP/IP), and so on.

Python's mutlipprocess module provides encapsulated pipes and queues, which can easily transfer messages between processes.

The synchronization between Python processes uses locks, which is the same as threads.

In addition, Python also provides a process Pool object, which can easily manage and control threads.

Remote Distributed Node

With the advent of the era of big data, Moore's theorem seems to have lost its effect on a single machine. The calculation and processing of data need a distributed computer network to run, and the parallel operation of programs on multiple host nodes has become a problem that must be considered by the current software architecture.

There are several common ways of interprocess communication between remote hosts

TCP/IP

TCP / IP is the basis of all remote communications. However, the API is relatively low-level and cumbersome to use, so it is generally not considered

Remote method call Remote Function Call

[RPC]

Remote objectremote object

Remote object is a higher-level encapsulation. Programs can operate a remote object's local proxy like local objects. CORBA, the most widely used specification for remote objects, has the greatest advantage that it can communicate in different languages and platforms. When different languages and platforms have their own remote object implementations, such as Java RMI and MS DCOM

The open source implementation of Python has a lot of support for remote objects

- Dopy]

- Fnorb (CORBA)

- ICE

- omniORB (CORBA)

- Pyro

- YAMI

Message queuemessage queue

Compared with RPC or remote object, message is a more flexible means of communication. Common message mechanisms supporting Python interface include

- RabbitMQ

- ZeroMQ

- Kafka

- AWS SQS + BOTO

There is no great difference between executing concurrent processes on remote hosts and local multi processes. The problem of inter process communication needs to be solved. Of course, the management and coordination of remote processes are more complex than local processes.

There are many open source frameworks under Python to support distributed concurrency and provide effective management methods, including:

Celery

Celery is a very mature Python distributed framework, which can perform tasks asynchronously in distributed systems, and provide effective management and scheduling functions.

SCOOP

SCOOP (Scalable COncurrent Operations in Python) provides a simple and easy-to-use distributed call interface, and uses the Future interface for concurrency.

Dispy

Compared with Celery and SCOP, Dispy provides more lightweight distributed parallel services

PP

PP (Parallel Python) is another lightweight Python parallel service

Asyncoro

Asyncoro is another Python framework that uses Generator to implement distributed concurrency,

Of course, there are many other systems that I don't list

In addition, many distributed systems provide support for Python interfaces, such as Spark

Pseudo Thread

Another concurrency method is not common. We can call it pseudo thread, that is, it looks like a thread and uses an interface similar to a thread interface, but the actual non thread method does not save the corresponding thread overhead.

greenlet

greenlet provides lightweight coroutines to support intra process concurrency.

greenlet is a by-product of Stackless. tasklet is used to support a technology called micro thread. Here is an example of pseudo thread using greenlet

from greenlet import greenlet

def test1():

print 12

gr2.switch()

print 34

def test2():

print 56

gr1.switch()

print 78

gr1 = greenlet(test1)

gr2 = greenlet(test2)

gr1.switch()Run the above procedure and get the following results:

12 56 34

The pseudo thread gr1 switch will print 12, then call gr2 switch to get 56, then switch return to gr1, print 34, then the pseudo thread gr1 ends, the program exits, so 78 will never be printed. Through this example, we can see that using pseudo threads, we can effectively control the execution process of programs, but pseudo threads do not have real concurrency.

eventlet, gevent and concurrence are all based on greenlet to provide concurrency.

- eventlet http://eventlet.net/

eventlet is a Python library that provides network call concurrency. Users can call blocked IO operations in a non blocking way.

import eventlet

from eventlet.green import urllib2

urls = ['http://www.google.com', 'http://www.example.com', 'http://www.python.org']

def fetch(url):

return urllib2.urlopen(url).read()

pool = eventlet.GreenPool()

for body in pool.imap(fetch, urls):

print("got body", len(body))The results are as follows

('got body', 17629)

('got body', 1270)

('got body', 46949)In order to support the operation of the generator, the eventlet has modified urlib2, and the interface is consistent with urlib2. GreenPool here is consistent with Python's Pool interface.

- gevent

gevent is similar to eventlet,

import gevent from gevent import socket urls = ['www.google.com', 'www.example.com', 'www.python.org'] jobs = [gevent.spawn(socket.gethostbyname, url) for url in urls] gevent.joinall(jobs, timeout=2) print [job.value for job in jobs]

The results are as follows:

['206.169.145.226', '93.184.216.34', '23.235.39.223']

- concurence

https://github.com/concurrenc...

Concurrence is another open source library that uses greenlet to provide network concurrency. I haven't used it. You can try it yourself.

Practical application

There are usually two situations where concurrency needs to be used. One is computing intensive, that is, your program needs a lot of CPU resources; The other is IO intensive. The program may have a large number of read and write operations, including reading and writing files, sending and receiving network requests, etc.

Compute intensive

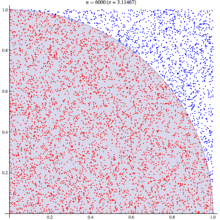

For computing intensive applications, we choose the famous Monte Carlo algorithm to calculate the PI value. The basic principle is as follows

Monte Carlo algorithm uses the principle of statistics to simulate and calculate the Pi. In a square, the probability of a random point falling on the area of 1 / 4 circle (red point) is directly proportional to its area. This probability p = Pi * r * r / 4: R * r, where R is the side length of the square and the radius of the circle. In other words, the probability is 1 / 4 of the Pi. Using this conclusion, we can know the Pi as long as we simulate the probability that the point falls on the quarter circle. In order to obtain this probability, we can generate a large number of points through a large number of experiments, that is, to see which region the point is in, and then count the results.

The basic algorithm is as follows:

from math import hypot

from random import random

def test(tries):

return sum(hypot(random(), random()) < 1 for _ in range(tries))Here, the test method makes n (tries) tests and returns the number of points falling in the quarter circle. The judgment method is to check the distance from the point to the center of the circle. If it is less than R, it is on the circle.

Through a large number of concurrent tests, we can quickly run multiple tests. The more tests, the closer the results are to the real PI.

The program codes of different concurrency methods are given here

Non concurrent

Let's start with a single thread, but the process runs to see how the performance is

from math import hypot

from random import random

import eventlet

import time

def test(tries):

return sum(hypot(random(), random()) < 1 for _ in range(tries))

def calcPi(nbFutures, tries):

ts = time.time()

result = map(test, [tries] * nbFutures)

ret = 4. * sum(result) / float(nbFutures * tries)

span = time.time() - ts

print "time spend ", span

return ret

print calcPi(3000,4000)Multithreaded thread

In order to use the thread pool, we use the dummy package of multiprocessing, which is an encapsulation of multithreading. Note that although the code here does not mention threads in a word, it is truly multithreaded.

Through the test, we found that as expected, when the thread pool is 1, its running result is the same as that when there is no concurrency. When we set the thread pool number to 5, the time-consuming is almost twice that when there is no concurrency. My test data is from 5 seconds to 9 seconds. So for computing intensive tasks, let's give up multithreading.

from multiprocessing.dummy import Pool

from math import hypot

from random import random

import time

def test(tries):

return sum(hypot(random(), random()) < 1 for _ in range(tries))

def calcPi(nbFutures, tries):

ts = time.time()

p = Pool(1)

result = p.map(test, [tries] * nbFutures)

ret = 4. * sum(result) / float(nbFutures * tries)

span = time.time() - ts

print "time spend ", span

return ret

if __name__ == '__main__':

p = Pool()

print("pi = {}".format(calcPi(3000, 4000)))multiprocess

Theoretically, it is more appropriate to use multi process concurrency for computing intensive tasks. In the following example, the size of the process pool is set to 5, and the impact on the results can be seen by modifying the size of the process pool. When the process pool is set to 1, the time required for multi-threaded results is similar, because there is no concurrency at this time; When set to 2, the response time has been significantly improved, which is half of that without concurrency before; However, continuing to expand the process pool has little impact on performance, or even decreased. Maybe my Apple Air CPU has only two cores?

Be careful. If you set up a very large process pool, you will encounter the error of Resource temporarily unavailable. The system cannot support the creation of too many processes. After all, resources are limited.

from multiprocessing import Pool

from math import hypot

from random import random

import time

def test(tries):

return sum(hypot(random(), random()) < 1 for _ in range(tries))

def calcPi(nbFutures, tries):

ts = time.time()

p = Pool(5)

result = p.map(test, [tries] * nbFutures)

ret = 4. * sum(result) / float(nbFutures * tries)

span = time.time() - ts

print "time spend ", span

return ret

if __name__ == '__main__':

print("pi = {}".format(calcPi(3000, 4000)))gevent (pseudo thread)

No matter gevent or eventlet, because there is no actual concurrency, there is little difference between response time and no concurrency. This is consistent with the test results.

import gevent

from math import hypot

from random import random

import time

def test(tries):

return sum(hypot(random(), random()) < 1 for _ in range(tries))

def calcPi(nbFutures, tries):

ts = time.time()

jobs = [gevent.spawn(test, t) for t in [tries] * nbFutures]

gevent.joinall(jobs, timeout=2)

ret = 4. * sum([job.value for job in jobs]) / float(nbFutures * tries)

span = time.time() - ts

print "time spend ", span

return ret

print calcPi(3000,4000)- eventlet (thread)

from math import hypot

from random import random

import eventlet

import time

def test(tries):

return sum(hypot(random(), random()) < 1 for _ in range(tries))

def calcPi(nbFutures, tries):

ts = time.time()

pool = eventlet.GreenPool()

result = pool.imap(test, [tries] * nbFutures)

ret = 4. * sum(result) / float(nbFutures * tries)

span = time.time() - ts

print "time spend ", span

return ret

print calcPi(3000,4000)- SCOOP

The Future interface in SCOP conforms to the definition of PEP-3148, that is, the Future interface provided in Python 3.

In the default SCOP configuration environment (single machine, four workers), the concurrent performance is improved, but it is not as good as the multi process configuration of two process pools.

from math import hypot

from random import random

from scoop import futures

import time

def test(tries):

return sum(hypot(random(), random()) < 1 for _ in range(tries))

def calcPi(nbFutures, tries):

ts = time.time()

expr = futures.map(test, [tries] * nbFutures)

ret = 4. * sum(expr) / float(nbFutures * tries)

span = time.time() - ts

print "time spend ", span

return ret

if __name__ == "__main__":

print("pi = {}".format(calcPi(3000, 4000)))- Celery

Task code

from celery import Celery

from math import hypot

from random import random

app = Celery('tasks', backend='amqp', broker='amqp://guest@localhost//')

app.conf.CELERY_RESULT_BACKEND = 'db+sqlite:///results.sqlite'

@app.task

def test(tries):

return sum(hypot(random(), random()) < 1 for _ in range(tries))Client code

from celery import group

from tasks import test

import time

def calcPi(nbFutures, tries):

ts = time.time()

result = group(test.s(tries) for i in xrange(nbFutures))().get()

ret = 4. * sum(result) / float(nbFutures * tries)

span = time.time() - ts

print "time spend ", span

return ret

print calcPi(3000, 4000)The concurrent test results using Celery are unexpected (the environment is single machine, 4freefork is concurrent, and the message broker is rabbitMQ), which is the worst of all test cases, and the response time is 5 ~ 6 times that without concurrency. This may be because the cost of control and coordination is too high. For such computing tasks, Celery may not be a good choice.

asyncoro

Asyncoro's test results are consistent with non concurrency.

import asyncoro

from math import hypot

from random import random

import time

def test(tries):

yield sum(hypot(random(), random()) < 1 for _ in range(tries))

def calcPi(nbFutures, tries):

ts = time.time()

coros = [ asyncoro.Coro(test,t) for t in [tries] * nbFutures]

ret = 4. * sum([job.value() for job in coros]) / float(nbFutures * tries)

span = time.time() - ts

print "time spend ", span

return ret

print calcPi(3000,4000)IO intensive

IO intensive tasks are another common use case. For example, the network WEB server is an example. How many requests can be processed per second is an important indicator of the WEB server.

Let's take web page reading as the simplest example

from math import hypot

import time

import urllib2

urls = ['http://www.google.com', 'http://www.example.com', 'http://www.python.org']

def test(url):

return urllib2.urlopen(url).read()

def testIO(nbFutures):

ts = time.time()

map(test, urls * nbFutures)

span = time.time() - ts

print "time spend ", span

testIO(10)Since the codes under different concurrent libraries are similar, I will not list them one by one. You can refer to the code in computing intensive for reference.

Through the test, we can find that for IO intensive tasks, using multithreading or multi processes can effectively improve the efficiency of the program, while using pseudo threads can significantly improve the performance. Compared with the case without concurrency, the response time of eventlets is improved from 9 seconds to 0.03 seconds. At the same time, eventlet / gevent provides a non blocking asynchronous call mode, which is very convenient. Threads or pseudo threads are recommended here because threads and pseudo threads consume less resources when the response time is similar.

summary

Python provides different concurrency modes. Corresponding to different scenarios, we need to choose different modes for concurrency. To choose an appropriate method, you should not only understand the principle of the method, but also do some tests and experiments. The data is the best reference for you to make your choice.