Project background

Besides using common third-party image hosting services, a team can also build its own private image hosting service as part of its front-end infrastructure. This article reviews and summarizes how we implemented the back end of our self-built image hosting service, in the hope that it offers a useful reference to readers with similar needs. Because this is front-end infrastructure, we implemented all the required back-end services in Node.js, the language the team knows best.

Solution

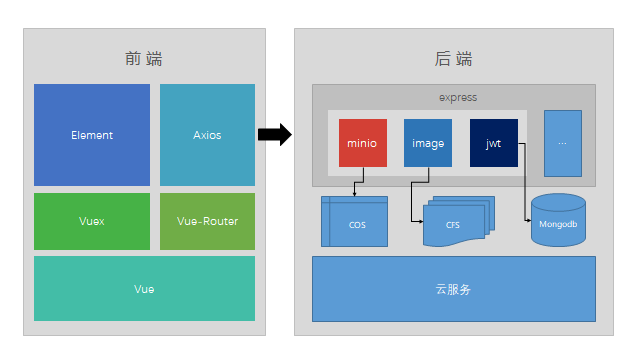

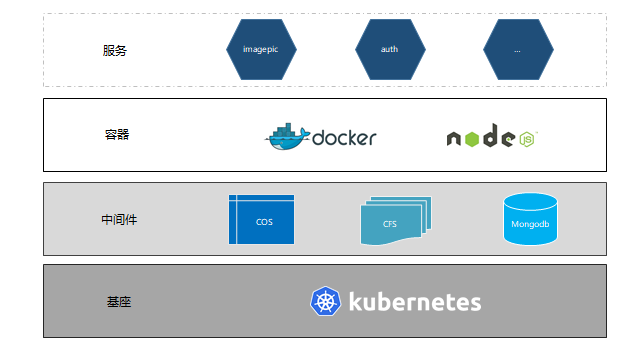

The back-end architecture was chosen mainly to provide infrastructure services for front-end developers. The group platform already offers a range of cloud services, and high-concurrency scenarios are rare. We therefore chose Node.js, which front-end developers already know, and our team builds on the Express framework. Across the wider private-network technology team the back end is still mainly Java, and Node.js serves mostly as a BFF (backend-for-frontend) middle layer that develops and aggregates interfaces.

As a result, the service is a monolith. Where microservices are needed, we work with the Java developers through the cloud's service mesh product (for example, Istio) rather than adopting a Node.js microservice framework (for example, the microservice support in NestJS, or Seneca). Because it is a single application, Express's middleware mechanism is used to separate modules by route, and all image hosting endpoints are isolated under the `imagepic` module.

For the database, only an authentication mechanism is needed and there is no other persistence requirement, so MongoDB was chosen for persistence. (Note: the MongoDB instance provided by the cloud middleware had access problems, so the fallback implementation uses CFS, a cloud file storage system, plus FaaS.)

Because of how image hosting works, uploaded pictures arrive as streams and pass through a temporary storage step: they are written to the cloud product's CFS file system, and the temporary data is deleted periodically. The actual pictures are stored in COS (object storage). Unlike CFS, which exposes a file interface, COS follows Amazon's S3 specification, which makes it a better storage carrier for images.
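The periodic deletion of temporary uploads is not shown in the source. A minimal sketch, assuming the temporary files sit in one flat directory (such as `db/__temp__`) and that anything older than a chosen age can be removed; the helper name `cleanupTempDir` and the age threshold are hypothetical:

```javascript
const fs = require('fs');
const path = require('path');

// Hypothetical helper: delete regular files in `dir` whose last modification
// is older than `maxAgeMs`. Resolves with the names of the removed files.
async function cleanupTempDir(dir, maxAgeMs, now = Date.now()) {
  const removed = [];
  const entries = await fs.promises.readdir(dir, { withFileTypes: true });
  for (const entry of entries) {
    if (!entry.isFile()) continue; // skip sub-directories and other entry types
    const filePath = path.join(dir, entry.name);
    const { mtimeMs } = await fs.promises.stat(filePath);
    if (now - mtimeMs > maxAgeMs) {
      await fs.promises.unlink(filePath);
      removed.push(entry.name);
    }
  }
  return removed;
}

module.exports = { cleanupTempDir };
```

In a long-running service this could be scheduled with something like `setInterval(() => cleanupTempDir(dir, 60 * 60 * 1000).catch(console.error), 10 * 60 * 1000)`, or by a timer-triggered cloud function; the one-hour age is an arbitrary example value.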

Directory structure

- db
  - \_\_temp\_\_
  - imagepic
- deploy
  - dev
    - Dockerfile
    - pv.yaml
    - pvc.yaml
    - server.yaml
  - production
    - Dockerfile
    - pv.yaml
    - pvc.yaml
    - server.yaml
  - build.sh
- faas
  - index.js
  - model.js
  - operator.js
  - read.js
  - utils.js
  - write.js
- server
  - api
    - openapi.yaml
  - lib
    - index.js
    - cloud.js
    - jwt.js
    - mongodb.js
  - routes
    - imagepic
      - auth
      - bucket
      - notification
      - object
      - policy
      - index.js
      - minio.js
    - router.js
  - utils
    - index.js
    - is.js
    - pagination.js
    - reg.js
    - uuid.js
  - app.js
  - config.js
  - index.js
  - main.js

Implementation

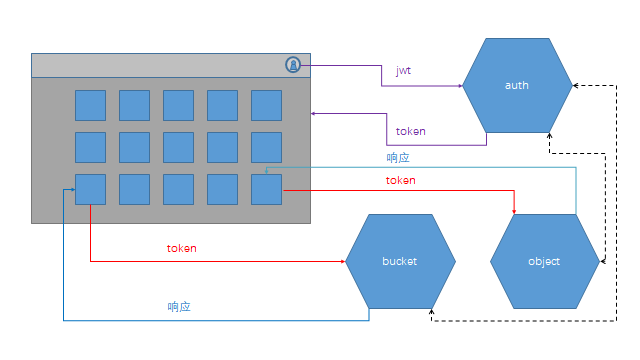

Some interfaces require an authentication check; JWT is used for this permission verification.
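The source relies on the `jsonwebtoken` package for this. To show what signing and verification actually check, here is a minimal HS256 sketch built only on Node's `crypto` module. `signHS256` and `verifyHS256` are hypothetical helpers, not part of `jsonwebtoken`, and a real service should keep using a maintained library. Note that a JWT payload is only base64url-encoded, not encrypted, so anything placed in it (the login route below signs `phone` and `upwd`) is readable by whoever holds the token.

```javascript
const crypto = require('crypto');

// base64url encoding without padding, as used by JWTs
const b64url = (input) =>
  Buffer.from(input).toString('base64').replace(/=+$/, '').replace(/\+/g, '-').replace(/\//g, '_');
const fromB64url = (str) => Buffer.from(str.replace(/-/g, '+').replace(/_/g, '/'), 'base64');
const hmac = (data, secret) => b64url(crypto.createHmac('sha256', secret).update(data).digest());

// Hypothetical helper: sign `payload` with HS256, expiring after `expiresInSec` seconds.
function signHS256(payload, secret, expiresInSec, nowSec = Math.floor(Date.now() / 1000)) {
  const header = b64url(JSON.stringify({ alg: 'HS256', typ: 'JWT' }));
  const body = b64url(JSON.stringify({ ...payload, iat: nowSec, exp: nowSec + expiresInSec }));
  return `${header}.${body}.${hmac(`${header}.${body}`, secret)}`;
}

// Hypothetical helper: check the signature (always HS256, never trusting the header's alg),
// then the exp claim. Returns { ok: true, payload } or { ok: false, reason }.
function verifyHS256(token, secret, nowSec = Math.floor(Date.now() / 1000)) {
  const parts = String(token).split('.');
  if (parts.length !== 3) return { ok: false, reason: 'malformed' };
  const [header, body, signature] = parts;
  const expected = Buffer.from(hmac(`${header}.${body}`, secret));
  const actual = Buffer.from(signature);
  if (expected.length !== actual.length || !crypto.timingSafeEqual(expected, actual)) {
    return { ok: false, reason: 'bad signature' };
  }
  const payload = JSON.parse(fromB64url(body).toString('utf8'));
  if (typeof payload.exp === 'number' && nowSec >= payload.exp) {
    return { ok: false, reason: 'expired' };
  }
  return { ok: true, payload };
}
```

The signature is compared with `crypto.timingSafeEqual` so that the comparison time does not leak how many leading characters matched.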

Source code

faas

The cloud functions are abstracted here to provide capabilities to the back-end services and to simulate some MongoDB-like database operations.
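To make the semantics of these simulated operations concrete, here is a minimal in-memory analogue; it is an illustration, not part of the source. It assumes the same layout the faas code uses on disk (one document per `dir/file_phone` key, holding `_name` and a `_collections` array whose entries carry a generated `_id`) and the same return shapes (`{ flag, data }` for `find`, a boolean for writes). `createMemoryStore` is hypothetical, and `crypto.randomUUID` (Node.js 14.17+) stands in for the `uuid` package.

```javascript
const crypto = require('crypto');

// Hypothetical in-memory stand-in for the faas find/add/update/remove helpers.
// Keys mirror the on-disk file layout: `${dir}/${file}_${phone}`.
function createMemoryStore() {
  const docs = new Map();
  const keyOf = (dir, file, params) => `${dir}/${file}_${params.phone}`;
  const newDoc = (file, params) => ({
    _name: file,
    _collections: [{ _id: crypto.randomUUID(), ...params }],
  });

  return {
    // Same shape as read.js: data is the JSON string of the stored document
    async find(dir, file, params) {
      const doc = docs.get(keyOf(dir, file, params));
      return doc ? { flag: true, data: JSON.stringify(doc) } : { flag: false, data: {} };
    },
    // Like write.js, add and update both store a fresh document holding only the new record
    async add(dir, file, params) {
      docs.set(keyOf(dir, file, params), newDoc(file, params));
      return true;
    },
    async update(dir, file, params) {
      docs.set(keyOf(dir, file, params), newDoc(file, params));
      return true;
    },
    // Unlike write.js, which rewrites the file with an empty _collections list, this deletes the entry
    async remove(dir, file, params) {
      return docs.delete(keyOf(dir, file, params));
    },
  };
}
```

A store like this can be swapped in for the file-based helpers when unit-testing the `cloud_*` functions without touching the disk.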

model.js

Defines the data formats used by the model.
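The schema objects in model.js use constructors (`String`, `Array`, `Number`) as type markers, but the source only logs them. A minimal sketch of how such a schema could be checked at runtime; `conformsTo` is a hypothetical helper, and it treats every schema key as required while allowing extra keys:

```javascript
// Map a constructor used as a type marker to a runtime check.
function matchesType(value, Type) {
  if (Type === Array) return Array.isArray(value);
  if (Type === String) return typeof value === 'string';
  if (Type === Number) return typeof value === 'number' && !Number.isNaN(value);
  return value instanceof Type;
}

// Hypothetical helper: true when every key in `schema` exists on `obj`
// with a value of the declared type.
function conformsTo(obj, schema) {
  return Object.entries(schema).every(([key, Type]) =>
    Object.prototype.hasOwnProperty.call(obj, key) && matchesType(obj[key], Type));
}

// Same shape as DOCUMENTS_SCHEMA in model.js
const DOCUMENTS_SCHEMA = { _name: String, _collections: Array };
```

Primitives need `typeof` checks because `'auth' instanceof String` is `false`; only object types fall through to `instanceof`.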

```javascript
/**
 * documents data structure
 * @property {String} _name        Name of the file (document)
 * @property {Array}  _collections Collections stored in the file
 * @example
 * const documents = {
 *   "_name": String,
 *   "_collections": Array
 * }
 */
exports.DOCUMENTS_SCHEMA = {
  "_name": String,
  "_collections": Array
};

/**
 * collections data structure
 * @property {String} _id Default id of the collection
 * @property {Number} _v  Self-incrementing sequence number of the collection
 *                        (documented here, but genCollection in utils.js does not set it)
 * @example
 * const collections = {
 *   "_id": String,
 *   "_v": Number,
 * }
 */
exports.COLLECTIONS_SCHEMA = {
  "_id": String
};
```

read.js

File read operations, using Node's `fs` module.
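read.js checks `fs.existsSync` first and then reads the file, which leaves a small window in which the file can change between the two calls. A common alternative, sketched here as a suggestion rather than the author's code, is to attempt the read and treat `ENOENT` as "not found". `readUserDoc` and its `dbRoot` parameter are hypothetical; the file naming follows the `<dir>/<file>_<phone>.json` layout used by the source.

```javascript
const fs = require('fs');
const path = require('path');

// Hypothetical helper: returns { flag, data } in the same shape as read.js.
async function readUserDoc(dbRoot, dir, file, phone) {
  const filePath = path.join(dbRoot, dir, `${file}_${phone}.json`);
  try {
    const data = await fs.promises.readFile(filePath, { encoding: 'utf-8' });
    return { flag: true, data };
  } catch (err) {
    if (err.code === 'ENOENT') return { flag: false, data: {} }; // user not registered yet
    throw err; // other I/O errors should not be reported as "user not found"
  }
}
```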

```javascript
const {
  isExit,
  genCollection,
  findLog,
  stringify,
  fs,
  compose,
  path
} = require('./utils');

// read(method, dir, file, params): reads db/<dir>/<file>_<params.phone>.json
exports.read = async (method, ...args) => {
  let log = '';
  const filePath = path.resolve(__dirname, `../db/${args.slice(0, 2).join('/')}_${args[2]['phone']}.json`);
  const isFileExit = isExit(args[0], `${args[1]}_${args[2]['phone']}.json`);
  console.log('isFileExit', isFileExit);

  switch (method) {
    case 'FIND':
      log = compose(stringify, findLog, genCollection)(...args);
      break;
  }

  if (isFileExit) {
    const res = await fs.promises.readFile(filePath, { encoding: 'utf-8' });
    console.log('res', res);
    console.log(log);
    // data is the raw JSON string; callers JSON.parse it
    return { flag: true, data: res };
  }
  return { flag: false, data: {} };
};
```

write.js

File write operations, using Node's `fs` module.
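write.js handles a missing database directory with two nearly identical branches. Since Node.js 10.12, `fs.promises.mkdir` accepts `{ recursive: true }` and does not fail when the directory already exists, so the two branches can collapse into one. A minimal sketch; `writeUserDoc` and `dbRoot` are hypothetical names:

```javascript
const fs = require('fs');
const path = require('path');

// Hypothetical helper: ensure `<dbRoot>/<dir>` exists, then write the document.
// Resolves to true on success and false on failure, which is all the callers check.
async function writeUserDoc(dbRoot, dir, file, phone, doc) {
  const dirPath = path.join(dbRoot, dir);
  try {
    await fs.promises.mkdir(dirPath, { recursive: true }); // no error if it already exists
    await fs.promises.writeFile(path.join(dirPath, `${file}_${phone}.json`), JSON.stringify(doc));
    return true;
  } catch (err) {
    console.error(err);
    return false;
  }
}
```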

```javascript
const {
  isExit,
  fs,
  path,
  stringify,
  compose,
  genCollection,
  addCollection,
  addLog,
  updateCollection,
  updateLog,
  removeCollection,
  removeLog,
  genDocument
} = require('./utils');

// write(method, dir, file, params): writes db/<dir>/<file>_<params.phone>.json.
// Every call starts from a fresh document (genDocument), so ADD and UPDATE store
// only the new record, and REMOVE writes a document with no collections.
exports.write = async (method, ...args) => {
  console.log('write args', args, typeof args[2]);
  const dirPath = path.resolve(__dirname, `../db/${args[0]}`);
  const filePath = path.resolve(__dirname, `../db/${args.slice(0, 2).join('/')}_${args[2]['phone']}.json`);
  const isDirExit = isExit(args[0]);
  const doc = genDocument(...args);
  let col = '', log = '';

  switch (method) {
    case 'ADD':
      col = compose(stringify, addCollection)(doc, genCollection(...args));
      log = compose(stringify, addLog, genCollection)(...args);
      break;
    case 'REMOVE':
      col = compose(stringify, removeCollection)(doc, genCollection(...args));
      log = compose(stringify, removeLog, genCollection)(...args);
      break;
    case 'UPDATE':
      col = compose(stringify, updateCollection)(doc, genCollection(...args));
      log = compose(stringify, updateLog, genCollection)(...args);
      break;
  }

  try {
    if (!isDirExit) {
      await fs.promises.mkdir(dirPath);
      console.log(`Created database ${args[0]}`);
    }
    await fs.promises.writeFile(filePath, col);
    console.log(log);
    return true;
  } catch (err) {
    console.error(err);
    return false;
  }
};
```

operator.js

```javascript
const { read } = require('./read');
const { write } = require('./write');

exports.find = async (...args) => await read('FIND', ...args);
exports.remove = async (...args) => await write('REMOVE', ...args);
exports.add = async (...args) => await write('ADD', ...args);
exports.update = async (...args) => await write('UPDATE', ...args);
```

utils.js

Common helper functions
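Two details of this toolkit are easy to misread. `compose` applies its functions right to left, so `compose(stringify, addCollection)(doc, col)` means `stringify(addCollection(doc, col))`. And removing entries with `splice` inside a forward `for` loop skips the element that slides into the removed slot. With uuid-based `_id`s duplicates are unlikely, so the second point is latent, but building a new array with `filter` is simpler either way. A small self-contained check of both; `removeWithSplice` reproduces the original loop, and `removeById` is a hypothetical name for the filter-based variant:

```javascript
// Same compose as in utils.js: right-to-left function composition.
const compose = (...funcs) =>
  funcs.length === 0 ? (arg) => arg : funcs.reduce((a, b) => (...args) => a(b(...args)));

const stringify = (arg) => JSON.stringify(arg);
const addCollection = (doc, col) => { doc._collections.push(col); return doc; };

// The original loop: splice while iterating forward.
const removeWithSplice = (doc, id) => {
  for (let i = 0; i < doc._collections.length; i++) {
    if (doc._collections[i]._id == id) doc._collections.splice(i, 1);
  }
  return doc;
};

// Filter-based removal: every entry with a matching _id is dropped.
const removeById = (doc, id) => ({ ...doc, _collections: doc._collections.filter((c) => c._id !== id) });
```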

```javascript
const { DOCUMENTS_SCHEMA, COLLECTIONS_SCHEMA } = require('./model');
const { v4: uuidv4 } = require('uuid');
const path = require('path');
const fs = require('fs');

exports.path = path;
exports.uuid = uuidv4;
exports.fs = fs;

// Right-to-left function composition: compose(f, g)(x) === f(g(x))
exports.compose = (...funcs) => {
  if (funcs.length === 0) {
    return arg => arg;
  }
  if (funcs.length === 1) {
    return funcs[0];
  }
  return funcs.reduce((a, b) => (...args) => a(b(...args)));
};

exports.stringify = arg => JSON.stringify(arg);

// Whether db/<args joined by "/"> exists ("isExit" means "exists")
exports.isExit = (...args) => fs.existsSync(path.resolve(__dirname, `../db/${args.join('/')}`));

console.log('DOCUMENTS_SCHEMA', DOCUMENTS_SCHEMA);

// A new, empty document named after the file argument
exports.genDocument = (...args) => {
  return {
    _name: args[1],
    _collections: []
  };
};

console.log('COLLECTIONS_SCHEMA', COLLECTIONS_SCHEMA);

// A new collection entry: a fresh uuid plus the request params
exports.genCollection = (...args) => {
  return {
    _id: uuidv4(),
    ...args[2]
  };
};

exports.addCollection = (doc, col) => {
  doc._collections.push(col);
  return doc;
};

// filter instead of splice inside a forward loop, which skips the element after each removal
exports.removeCollection = (doc, col) => {
  doc._collections = doc._collections.filter(item => item._id !== col._id);
  return doc;
};

exports.findCollection = (doc, col) => {
  return doc._collections.find(item => item._id === col._id);
};

// Replaces all existing entries with the single new one
exports.updateCollection = (doc, col) => {
  doc._collections = [col];
  return doc;
};

exports.addLog = (arg) => {
  return `Added collection ${JSON.stringify(arg)}`;
};

exports.removeLog = () => {
  return 'Collection removed successfully';
};

exports.findLog = () => {
  return 'Collection query succeeded';
};

exports.updateLog = (arg) => {
  return `Updated collection ${JSON.stringify(arg)}`;
};
```

lib

cloud.js

Business operations built on the cloud functions
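`cloud_login` compares the stored `upwd` with the submitted password directly, which means passwords are kept in plain text in the JSON files. A common hardening step, not part of the source, is to store a salted hash and compare in constant time. A minimal sketch using Node's built-in `crypto.scryptSync`; `hashPassword`, `verifyPassword`, and the `salt:hash` string format are assumptions:

```javascript
const crypto = require('crypto');

const KEY_LEN = 64; // length of the derived key in bytes

// Hypothetical helper: returns "salt:hash", both hex-encoded.
function hashPassword(password) {
  const salt = crypto.randomBytes(16).toString('hex');
  const hash = crypto.scryptSync(password, salt, KEY_LEN).toString('hex');
  return `${salt}:${hash}`;
}

// Hypothetical helper: re-derive the key with the stored salt and compare in constant time.
function verifyPassword(password, stored) {
  const [salt, hashHex] = String(stored).split(':');
  if (!salt || !hashHex) return false;
  const expected = Buffer.from(hashHex, 'hex');
  const actual = crypto.scryptSync(password, salt, KEY_LEN);
  return expected.length === actual.length && crypto.timingSafeEqual(expected, actual);
}
```

If this were adopted, the JWT payload signed at login should also stop carrying `upwd`, since the token would no longer need it to re-check the password.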

```javascript
const {
  find,
  update,
  add
} = require('../../faas');

// Register a user unless a record for this phone number already exists
exports.cloud_register = async (dir, file, params) => {
  const findResponse = await find(dir, file, params);
  if (findResponse.flag) {
    return { flag: false, msg: 'Already registered' };
  }
  const r = await add(dir, file, params);
  console.log('cloud_register', r);
  return r ? { flag: true, msg: 'success' } : { flag: false, msg: 'fail' };
};

// Log in by comparing the stored password with the submitted one
exports.cloud_login = async (dir, file, params) => {
  const r = await find(dir, file, params);
  console.log('cloud_read', r);
  if (!r.flag) {
    return { flag: false, msg: 'fail' };
  }
  // _collections can be empty after a REMOVE, so guard before reading upwd
  const record = JSON.parse(r.data)._collections[0];
  if (record && record.upwd === params.upwd) {
    return { flag: true, msg: 'Login successful' };
  }
  return { flag: false, msg: 'Incorrect password' };
};

// Overwrite the stored record with the new params (see write.js)
exports.cloud_change = async (dir, file, params) => {
  const r = await update(dir, file, params);
  console.log('cloud_change', r);
  return r
    ? { flag: true, msg: 'Password modified successfully' }
    : { flag: false, msg: 'fail' };
};
```

jwt.js

JWT signing and verification
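The routes pass `req.headers.authorization` straight to `verifyToken`, so clients must send the bare token. Many clients send `Authorization: Bearer <token>` instead. A small tolerant parser, offered as an assumption rather than the source's behavior; `extractToken` is hypothetical:

```javascript
// Hypothetical helper: accept either "Bearer <token>" or a bare token.
// Returns null when the header is missing or empty.
function extractToken(authorizationHeader) {
  if (typeof authorizationHeader !== 'string') return null;
  const value = authorizationHeader.trim();
  if (value === '') return null;
  const match = /^Bearer\s+(\S+)$/i.exec(value);
  return match ? match[1] : value;
}
```

A route would then call `verifyToken(extractToken(req.headers.authorization), 'imagepic')`.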

```javascript
const jwt = require('jsonwebtoken');
const { find } = require('../../faas');

exports.jwt = jwt;

const expireTime = 60 * 60; // tokens expire after one hour (in seconds)

exports.signToken = (rawData, secret) => {
  return jwt.sign(rawData, secret, {
    expiresIn: expireTime
  });
};

// Verify the token, then check its phone/upwd claims against the stored record.
// Without a callback, jwt.verify is synchronous: it returns the payload or throws.
exports.verifyToken = async (token, secret) => {
  let decoded;
  try {
    decoded = jwt.verify(token, secret);
  } catch (err) {
    console.error(err);
    return { flag: false, msg: err.message };
  }
  console.log('decoded', decoded, typeof decoded);
  const { phone, upwd } = decoded;
  const r = await find('imagepic', 'auth', { phone, upwd });
  console.log('r', r);
  if (!r.flag) {
    return { flag: false, msg: 'Login user not found' };
  }
  const record = JSON.parse(r.data)._collections[0];
  if (record && record.upwd === upwd) {
    return { flag: true, msg: 'Verification successful' };
  }
  return { flag: false, msg: 'The login password is incorrect' };
};
```

auth

Registration, login, and password change
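The actual `PWD_REG`, `EMAIL_REG`, and `PHONE_REG` live in `utils/reg.js`, which is not shown, and each route tests every field twice (once for `flag`, once for the error list). A minimal sketch that collects the errors in a single pass. The three patterns here are placeholders, not the author's: a password of 8 to 20 letters and digits containing at least one of each, a simple email shape, and an 11-digit mobile number starting with 1. `collectErrors` is hypothetical.

```javascript
// Placeholder patterns; the real ones live in utils/reg.js and may differ.
const PWD_REG = /^(?=.*[A-Za-z])(?=.*\d)[A-Za-z\d]{8,20}$/;
const EMAIL_REG = /^[^\s@]+@[^\s@]+\.[^\s@]+$/;
const PHONE_REG = /^1\d{10}$/;

// Hypothetical helper: test each [regex, value, message] rule once and
// return the messages of the rules that failed (missing values count as empty).
function collectErrors(rules) {
  return rules
    .filter(([reg, value]) => !reg.test(String(value ?? '')))
    .map(([, , msg]) => msg);
}
```

A route can then use `const err = collectErrors([...]);` and treat `err.length === 0` as the old `flag`.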

```javascript
const router = require('../../router');
const {
  PWD_REG,
  EMAIL_REG,
  PHONE_REG
} = require('../../../utils');
const {
  // mongoose,
  cloud_register,
  cloud_login,
  cloud_change,
  signToken
} = require('../../../lib');

// const Schema = mongoose.Schema;

/**
 * @openapi
 * /imagepic/auth/register:
 *   post:
 *     summary: Register
 *     tags:
 *       - auth
 *     requestBody:
 *       required: true
 *       content:
 *         application/json:
 *           schema:
 *             $ref: '#/components/schemas/register'
 *     responses:
 *       '200':
 *         description: Success
 *         content:
 *           application/json:
 *             example:
 *               code: "0"
 *               data: {}
 *               msg: "success"
 *               success: true
 */
router.post('/register', async function (req, res) {
  const params = req.body;
  console.log('params', params);
  const err = [];
  const { name, tfs, email, phone, upwd } = params;

  if (!PWD_REG.test(upwd)) err.push('The password does not meet the specification');
  if (!EMAIL_REG.test(email)) err.push('The email address does not meet the specification');
  if (!PHONE_REG.test(phone)) err.push('The mobile phone number does not meet the specification');

  // const registerSchema = new Schema({
  //   name: String,
  //   tfs: String,
  //   email: String,
  //   phone: String,
  //   upwd: String
  // });
  // const Register = mongoose.model('Register', registerSchema);

  if (err.length === 0) {
    // MongoDB variant, kept for reference (the cloud MongoDB had access problems):
    // const register = new Register({ name, tfs, email, phone, upwd });
    // register.save().then((result) => {
    //   console.log("successful callback", result);
    //   res.json({ code: "0", data: {}, msg: 'success', success: true });
    // }, (err) => {
    //   console.log("failed callback", err);
    //   res.json({ code: "-1", data: { err: err }, msg: 'failed', success: false });
    // });
    const r = await cloud_register('imagepic', 'auth', { name, tfs, email, phone, upwd });
    if (r.flag) {
      res.json({ code: "0", data: {}, msg: 'success', success: true });
    } else {
      res.json({ code: "-1", data: { err: r.msg }, msg: 'fail', success: false });
    }
  } else {
    res.json({ code: "-1", data: { err: err.join(',') }, msg: 'fail', success: false });
  }
});

/**
 * @openapi
 * /imagepic/auth/login:
 *   post:
 *     summary: Sign in
 *     tags:
 *       - auth
 *     requestBody:
 *       required: true
 *       content:
 *         application/json:
 *           schema:
 *             $ref: '#/components/schemas/login'
 *     responses:
 *       '200':
 *         description: Success
 *         content:
 *           application/json:
 *             example:
 *               code: "0"
 *               data: {token: 'xxx'}
 *               msg: "success"
 *               success: true
 */
router.post('/login', async function (req, res) {
  const params = req.body;
  console.log('params', params);
  const err = [];
  const { phone, upwd } = params;

  if (!PWD_REG.test(upwd)) err.push('The password does not meet the specification');
  if (!PHONE_REG.test(phone)) err.push('The mobile phone number does not meet the specification');

  if (err.length === 0) {
    const r = await cloud_login('imagepic', 'auth', { phone, upwd });
    if (r.flag) {
      // The token payload carries phone and upwd; it is signed, not encrypted
      const token = signToken({ phone, upwd }, 'imagepic');
      res.json({ code: "0", data: { token: token }, msg: 'success', success: true });
    } else {
      res.json({ code: "-1", data: { err: r.msg }, msg: 'fail', success: false });
    }
  } else {
    res.json({ code: "-1", data: { err: err.join(',') }, msg: 'fail', success: false });
  }
});

/**
 * @openapi
 * /imagepic/auth/change:
 *   post:
 *     summary: Change password
 *     tags:
 *       - auth
 *     requestBody:
 *       required: true
 *       content:
 *         application/json:
 *           schema:
 *             $ref: '#/components/schemas/change'
 *     responses:
 *       '200':
 *         description: Success
 *         content:
 *           application/json:
 *             example:
 *               code: "0"
 *               data: {}
 *               msg: "success"
 *               success: true
 */
router.post('/change', async function (req, res) {
  const params = req.body;
  console.log('params', params);
  const err = [];
  const { phone, opwd, npwd } = params;

  if (!PWD_REG.test(opwd)) err.push('The old password does not meet the specification');
  if (!PWD_REG.test(npwd)) err.push('The new password does not meet the specification');
  if (!PHONE_REG.test(phone)) err.push('The mobile phone number does not meet the specification');

  if (err.length === 0) {
    // Check the old password first, then overwrite the record with the new one
    const r = await cloud_login('imagepic', 'auth', { phone: phone, upwd: opwd });
    if (r.flag) {
      const changeResponse = await cloud_change('imagepic', 'auth', { phone: phone, upwd: npwd });
      if (changeResponse.flag) {
        res.json({ code: "0", data: {}, msg: 'success', success: true });
      } else {
        res.json({ code: "-1", data: { err: changeResponse.msg }, msg: 'fail', success: false });
      }
    } else {
      res.json({ code: "-1", data: { err: r.msg }, msg: 'fail', success: false });
    }
  } else {
    res.json({ code: "-1", data: { err: err.join(',') }, msg: 'fail', success: false });
  }
});

module.exports = router;
```

bucket

Interfaces for bucket operations
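`pagination` comes from `utils/pagination.js`, which is not shown. Query-string values arrive as strings (`url.parse(req.url, true).query` yields `'10'`, not `10`), so a pagination helper should coerce them. A minimal sketch of what such a helper might look like, assuming 1-based page numbers; `paginate` is a hypothetical stand-in for the real `pagination`:

```javascript
// Hypothetical stand-in for utils/pagination.js (1-based pageNum).
// Non-numeric or non-positive sizes and page numbers yield an empty page.
function paginate(list, pageSize, pageNum) {
  const size = Number.parseInt(pageSize, 10);
  const page = Number.parseInt(pageNum, 10);
  if (!Array.isArray(list) || !(size > 0) || !(page > 0)) return [];
  const start = (page - 1) * size;
  return list.slice(start, start + size);
}
```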

```javascript
const minio = require('../minio');
const router = require('../../router');
const url = require('url');
const {
  pagination,
  isEmpty,
  isArray
} = require('../../../utils');

/**
 * @openapi
 * /imagepic/bucket/listBuckets:
 *   get:
 *     summary: Query all buckets
 *     tags:
 *       - List
 *     parameters:
 *       - name: pageSize
 *         in: query
 *         description: Number of buckets per page.
 *         required: false
 *         schema:
 *           type: integer
 *       - name: pageNum
 *         in: query
 *         description: Page number.
 *         required: false
 *         schema:
 *           type: integer
 *     responses:
 *       '200':
 *         description: Success
 *         content:
 *           application/json:
 *             example:
 *               code: "0"
 *               data:
 *                 - name: "5g-fe-file"
 *                   creationDate: "2021-06-04T10:01:42.664Z"
 *                 - name: "5g-fe-image"
 *                   creationDate: "2021-05-28T01:34:50.375Z"
 *               msg: "success"
 *               success: true
 */
router.get('/listBuckets', function (req, res) {
  const params = url.parse(req.url, true).query;
  console.log('params', params);
  minio.listBuckets(function (err, buckets) {
    if (err) {
      console.log(err);
      // Respond instead of leaving the request hanging
      return res.json({ code: "-1", data: err, msg: 'fail', success: false });
    }
    // Paging: no query returns all buckets; pageSize and pageNum return one page; otherwise []
    let data = [];
    if (isEmpty(params)) {
      data = buckets;
    } else if (isArray(buckets) && params.pageSize && params.pageNum) {
      data = pagination(buckets, params.pageSize, params.pageNum);
    }
    res.json({ code: "0", data, msg: 'success', success: true });
  });
});

module.exports = router;
```

object

Interfaces for image objects
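`minio.listObjects` returns a readable stream, and the route builds its response from the stream's `data`, `error`, and `end` events. In Node.js streams an `'error'` event is generally not followed by `'end'`, so a response that is only sent from `'end'` can leave the request hanging. A minimal sketch of collecting any object stream into a promise; `collectStream` is hypothetical, and `stream.Readable.from` stands in for the MinIO stream in the usage line:

```javascript
const { Readable } = require('stream');

// Hypothetical helper: resolve with every item the stream emits,
// or reject on the first 'error' event.
function collectStream(stream) {
  return new Promise((resolve, reject) => {
    const items = [];
    stream.on('data', (item) => items.push(item));
    stream.once('error', reject);
    stream.once('end', () => resolve(items));
  });
}

// Usage: an object-mode stream standing in for minio.listObjects(...)
collectStream(Readable.from([{ name: 'a.png' }, { name: 'b.png' }]))
  .then((objects) => console.log(`listed ${objects.length} objects`));
```

With a promise, an empty bucket simply resolves to `[]` and can be reported as `total: 0`, rather than being treated as a failure.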

```javascript
const minio = require('../minio');
const router = require('../../router');
const multer = require('multer');
const path = require('path');
const fs = require('fs');
const {
  pagination
} = require('../../../utils');
const {
  verifyToken
} = require('../../../lib');

// Temporary upload directory (db/__temp__ at the project root)
const TEMP_DIR = path.resolve(__dirname, '../../../../db/__temp__');

// Remove a temporary upload; failures are only logged
const removeTempFile = (fileName) => {
  fs.unlink(path.join(TEMP_DIR, fileName), function (err) {
    if (err) {
      return console.error(`Failed to delete file ${fileName}: ${err}`);
    }
    console.log(`Deleted file ${fileName}`);
  });
};

/**
 * @openapi
 * /imagepic/object/listObjects:
 *   post:
 *     summary: Get all objects in the bucket
 *     tags:
 *       - listObjects
 *     requestBody:
 *       required: true
 *       content:
 *         application/json:
 *           schema:
 *             $ref: '#/components/schemas/listObjects'
 *     responses:
 *       '200':
 *         description: Success
 *         content:
 *           application/json:
 *             example:
 *               code: "0"
 *               data:
 *                 total: 0
 *                 lists: []
 *               msg: "success"
 *               success: true
 */
router.post('/listObjects', function (req, res) {
  const { bucketName, prefix, pageSize, pageNum } = req.body;
  const stream = minio.listObjects(bucketName, prefix || '', false);
  const data = [];
  let replied = false;
  // Respond at most once, whichever of 'error' or 'end' comes first
  const reply = (body) => {
    if (replied) return;
    replied = true;
    res.json(body);
  };
  stream.on('data', function (obj) {
    data.push(obj);
  });
  stream.on('error', function (err) {
    console.log(err);
    reply({ code: "-1", data: err, msg: 'fail', success: false });
  });
  stream.on('end', function () {
    // pageNum == -1 returns every object; otherwise page with defaults 10 and 1
    reply({
      code: "0",
      data: {
        total: data.length,
        lists: pageNum == -1 ? data : pagination(data, pageSize || 10, pageNum || 1)
      },
      msg: 'success',
      success: true
    });
  });
});

/**
 * @openapi
 * /imagepic/object/getObject:
 *   post:
 *     summary: Download object
 *     tags:
 *       - getObject
 *     requestBody:
 *       required: true
 *       content:
 *         application/json:
 *           schema:
 *             $ref: '#/components/schemas/getObject'
 *     responses:
 *       '200':
 *         description: Success (data is the object size in bytes)
 *         content:
 *           application/json:
 *             example:
 *               code: "0"
 *               data: 49000
 *               msg: "success"
 *               success: true
 */
router.post('/getObject', function (req, res) {
  const { bucketName, objectName } = req.body;
  minio.getObject(bucketName, objectName, function (err, dataStream) {
    if (err) {
      console.log(err);
      return res.json({ code: "-1", data: err, msg: 'fail', success: false });
    }
    // Only the total size is reported; the object body is not sent to the client
    let size = 0;
    dataStream.on('data', function (chunk) {
      size += chunk.length;
    });
    dataStream.on('end', function () {
      res.json({ code: "0", data: size, msg: 'success', success: true });
    });
    dataStream.on('error', function (err) {
      res.json({ code: "-1", data: err, msg: 'fail', success: false });
    });
  });
});

/**
 * @openapi
 * /imagepic/object/statObject:
 *   post:
 *     summary: Get object metadata
 *     tags:
 *       - statObject
 *     requestBody:
 *       required: true
 *       content:
 *         application/json:
 *           schema:
 *             $ref: '#/components/schemas/statObject'
 *     responses:
 *       '200':
 *         description: Success
 *         content:
 *           application/json:
 *             example:
 *               code: "0"
 *               data:
 *                 size: 47900
 *                 metaData:
 *                   content-type: "image/png"
 *                 lastModified: "2021-10-14T07:24:59.000Z"
 *                 versionId: null
 *                 etag: "c8a447108f1a3cebe649165b86b7c997"
 *               msg: "success"
 *               success: true
 */
router.post('/statObject', function (req, res) {
  const { bucketName, objectName } = req.body;
  minio.statObject(bucketName, objectName, function (err, stat) {
    if (err) {
      console.log(err);
      return res.json({ code: "-1", data: err, msg: 'fail', success: false });
    }
    res.json({ code: "0", data: stat, msg: 'success', success: true });
  });
});

/**
 * @openapi
 * /imagepic/object/presignedGetObject:
 *   post:
 *     summary: Get a temporary (presigned) URL for the object
 *     tags:
 *       - presignedGetObject
 *     requestBody:
 *       required: true
 *       content:
 *         application/json:
 *           schema:
 *             $ref: '#/components/schemas/presignedGetObject'
 *     responses:
 *       '200':
 *         description: Success
 *         content:
 *           application/json:
 *             example:
 *               code: "0"
 *               data: "http://172.24.128.7/epnoss-antd-fe/b-ability-close.png?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=7RGX0TJQE5OX9BS030X6%2F20211126%2Fdefault%2Fs3%2Faws4_request&X-Amz-Date=20211126T031946Z&X-Amz-Expires=604800&X-Amz-SignedHeaders=host&X-Amz-Signature=27644907283beee2b5d6f468ba793db06cd704e7b3fb1c334f14665e0a8b6ae4"
 *               msg: "success"
 *               success: true
 */
router.post('/presignedGetObject', function (req, res) {
  const { bucketName, objectName, expiry } = req.body;
  // expiry is in seconds; the default 7 * 24 * 60 * 60 = 604800 (7 days)
  minio.presignedGetObject(bucketName, objectName, expiry || 7 * 24 * 60 * 60, function (err, presignedUrl) {
    if (err) {
      console.log(err);
      return res.json({ code: "-1", data: err, msg: 'fail', success: false });
    }
    res.json({ code: "0", data: presignedUrl, msg: 'success', success: true });
  });
});

/**
 * @openapi
 * /imagepic/object/putObject:
 *   post:
 *     summary: Upload pictures
 *     tags:
 *       - putObject
 *     requestBody:
 *       required: true
 *       content:
 *         multipart/form-data:
 *           schema:
 *             $ref: '#/components/schemas/putObject'
 *     responses:
 *       '200':
 *         description: Success
 *         content:
 *           application/json:
 *             example:
 *               code: "0"
 *               data: ""
 *               msg: "success"
 *               success: true
 */
router.post('/putObject', multer({
  dest: TEMP_DIR
}).single('file'), async function (req, res) {
  console.log('/putObject', req.file, req.headers);
  const verifyResponse = await verifyToken(req.headers.authorization, 'imagepic');
  console.log('verifyResponse', verifyResponse);
  if (!req.file) {
    return res.json({ code: "-1", data: 'No file uploaded', msg: 'fail', success: false });
  }

  const bucketName = req.headers.bucket,
    folder = req.headers.folder,
    originName = req.file['originalname'],
    file = req.file['path'],
    // extname includes the leading dot (".png"), so strip it for the MIME subtype
    ext = path.extname(originName).slice(1).toLowerCase(),
    fileName = req.file['filename'];
  console.log('folder', folder);

  if (!verifyResponse.flag) {
    removeTempFile(fileName);
    return res.json({ code: "-1", data: verifyResponse.msg, msg: 'Permission not met', success: false });
  }

  const fullName = folder ? `${folder}/${originName}` : `${originName}`;
  fs.stat(file, function (err, stats) {
    if (err) {
      console.log(err);
      removeTempFile(fileName);
      return res.json({ code: "-1", data: err, msg: 'fail', success: false });
    }
    minio.putObject(bucketName, fullName, fs.createReadStream(file), stats.size, {
      // "jpg" maps to the registered "image/jpeg"; other extensions (e.g. svg) may need their own mapping
      'Content-Type': `image/${ext === 'jpg' ? 'jpeg' : ext}`
    }, function (err, etag) {
      removeTempFile(fileName);
      if (err) {
        return res.json({ code: "-1", data: err, msg: 'fail', success: false });
      }
      return res.json({ code: "0", data: etag, msg: 'success', success: true });
    });
  });
});

/**
 * @openapi
 * /imagepic/object/removeObject:
 *   post:
 *     summary: Delete picture
 *     tags:
 *       - removeObject
 *     requestBody:
 *       required: true
 *       content:
 *         application/json:
 *           schema:
 *             $ref: '#/components/schemas/removeObject'
 *     responses:
 *       '200':
 *         description: Success
 *         content:
 *           application/json:
 *             example:
 *               code: "0"
 *               data: {}
 *               msg: "success"
 *               success: true
 */
router.post('/removeObject', async function (req, res) {
  console.log('/removeObject', req.body, req.headers);
  const verifyResponse = await verifyToken(req.headers.authorization, 'imagepic');
  if (!verifyResponse.flag) {
    return res.json({ code: "-1", data: verifyResponse.msg, msg: 'Permission not met', success: false });
  }
  const { bucketName, objectName } = req.body;
  minio.removeObject(bucketName, objectName, function (err) {
    if (err) {
      return res.json({ code: "-1", data: err, msg: 'fail', success: false });
    }
    return res.json({ code: "0", data: {}, msg: 'success', success: true });
  });
});

module.exports = router;
```

Summary

While developing the back-end interfaces for this front-end image hosting service, I came to appreciate how simple it is to build the data side with Serverless. For Node.js, using FaaS to develop business logic at the granularity of individual functions may fit more existing scenarios, because Node.js can hardly shake the position of Java, Go, C++, and other traditional back-end languages in the back-end market. I therefore think that for some scenarios, such as IO-heavy, event-driven business, Serverless on Node.js may gain momentum, and multi-language back-end services, with other languages handling compute-heavy work, may be one form this takes in the future. (Note: only the FaaS concept is used here. Real Serverless is not just about writing code in the form of functions; it also depends on the scheduling provided by the BaaS layer. Whether something counts as Serverless matters less than focusing on services rather than resources.)