Series of articles:

Container & Service: opening, pressure and resources

Container & Service: Docker construction practice of a Java application

Container & Service: Jenkins construction of Docker application

Container & Service: Jenkins construction of Docker application (II)

Container & Service: K8s and Docker application cluster (I)

Container & Service: K8s and Docker application cluster (II)

Container & Service: Kubernetes component and Deployment operation

Container & Service: ClickHouse and k8s architecture

Container & Service: capacity expansion

Container & Service: metrics server exploration

Container & Service: Helm Charts (I)

I. local environment

macOS Big Sur 11.2.3, Apple M1 chip, 8 GB memory.

II. Helm installation

2.1 installation under mac

Searching online, we found a relatively high-quality introductory document, the Helm Chinese documentation. It covers installation and usage, the developer's guide, deployment to Kubernetes, and Kubernetes CI/CD.

This chapter focuses on installation and usage. Homebrew on the Mac supports downloading and installing Helm: members of the Kubernetes community have contributed a Helm formula to Homebrew.

brew install kubernetes-helm

Execute the installation locally. The command and output are as follows:

brew install kubernetes-helm
Updating Homebrew...
Warning: helm 3.5.4 is already installed and up-to-date.
To reinstall 3.5.4, run:
  brew reinstall helm

The version currently installed through brew is 3.5.4.

2.2 windows

On Windows, we can use Chocolatey to install Helm. Briefly, Chocolatey is a NuGet-based package manager developed specifically for Windows. It is similar to npm for Node.js, brew for macOS and apt-get for Ubuntu, and is called choco for short. Chocolatey's design goal is to be a decentralized framework that lets developers quickly install applications and tools on demand.

Put simply, Chocolatey is to Windows what yum or apt-get is to Linux and Homebrew is to the Mac.

Members of the Kubernetes community have contributed a Helm package to Chocolatey. The command is:

choco install kubernetes-helm

Besides Chocolatey, Scoop is another easy-to-use and powerful command-line package manager for Windows.

You can install the Helm binary through the Scoop command-line installer with scoop install helm.

2.3 other methods

We can also build and install Helm from source for the specific system.

III. premise of Helm use

3.1 premise

The following are prerequisites for the successful and safe use of Helm.

- A Kubernetes cluster

- Decide which security configurations to apply to the installation, if any

- Install and configure Helm and Tiller (the cluster-side service)

3.2 installation of Kubernetes

3.2.1 installation method reference

For installing Docker on a Mac, see Container & Service: Jenkins local and docker installation and deployment. Kubernetes is included in recent versions of Docker Desktop, but you may still need to install it from within Docker or adjust some configuration. For the relevant operations, see: Construction of docker MAC environment.

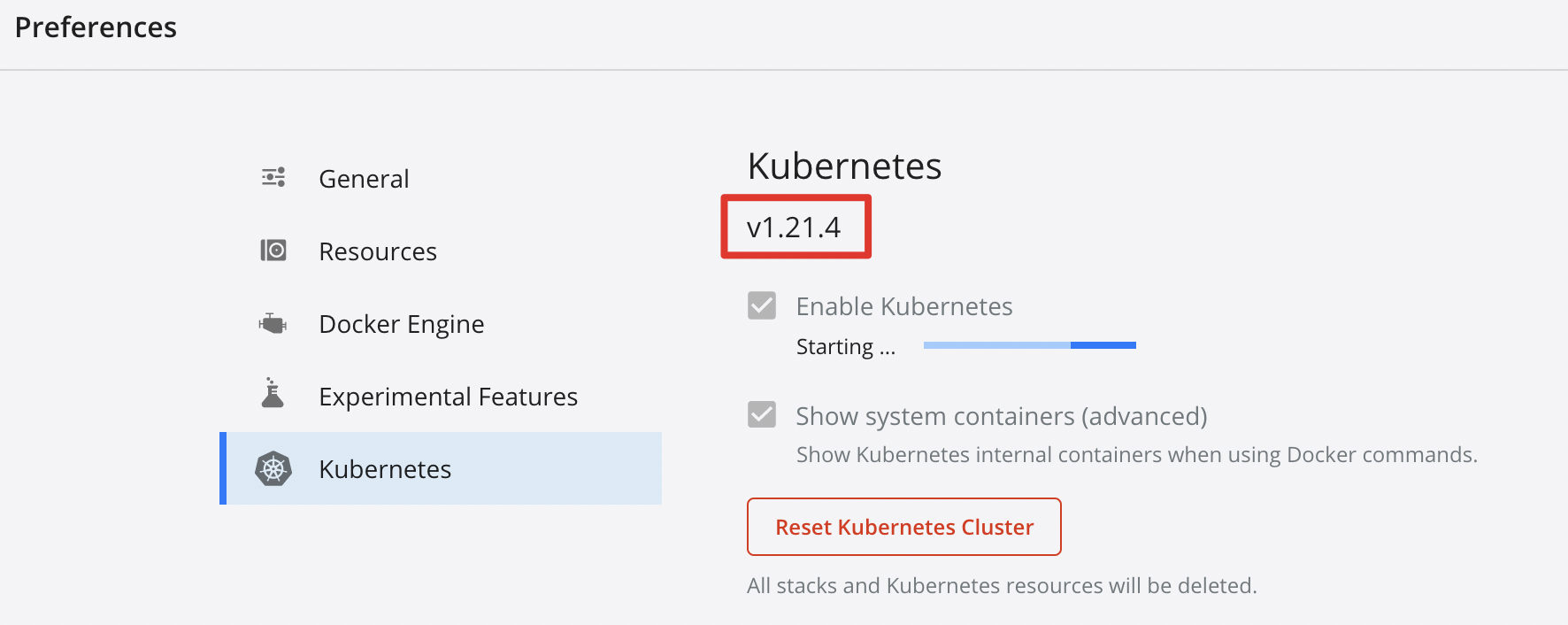

Kubernetes installed in Docker can be seen in the figure below:

The problem is that Kubernetes stays in the "starting" state after installation.

3.2.2 problem handling process

In practice, the setup differs slightly from the previous articles, mainly in the Kubernetes version. The Docker version used in this article is:

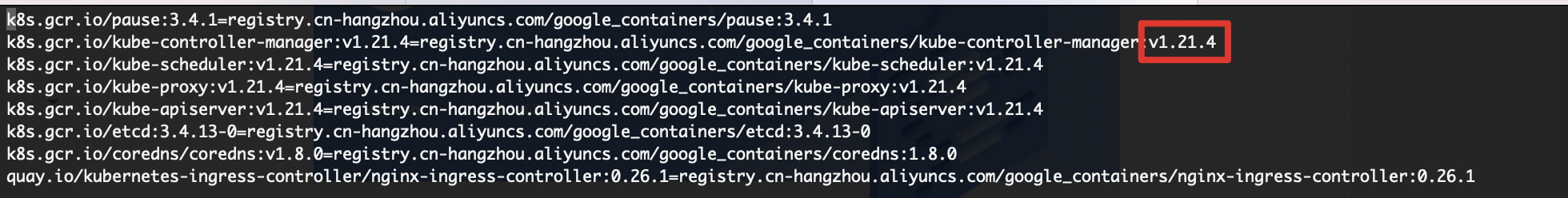

Docker Desktop is version 4.0.1, with Kubernetes v1.21.4. The k8s-for-docker-desktop branch we downloaded only supports v1.21.3, so we need to manually change the versions in the file images.properties to v1.21.4.

The modified file below can be used directly:

k8s.gcr.io/pause:3.4.1=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.4.1
k8s.gcr.io/kube-controller-manager:v1.21.4=registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.21.4
k8s.gcr.io/kube-scheduler:v1.21.4=registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.21.4
k8s.gcr.io/kube-proxy:v1.21.4=registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.21.4
k8s.gcr.io/kube-apiserver:v1.21.4=registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.21.4
k8s.gcr.io/etcd:3.4.13-0=registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0
k8s.gcr.io/coredns/coredns:v1.8.0=registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.8.0
quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.26.1=registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:0.26.1

3.2.3 execute image loading and restart k8s

After the above modifications are completed, we run ./load_images.sh again. The output is as follows (to save space, only the beginning and end are kept):

k8s-for-docker-desktop % ./load_images.sh
images.properties found.
3.4.1: Pulling from google_containers/pause
Digest: sha256:6c3835cab3980f11b83277305d0d736051c32b17606f5ec59f1dda67c9ba3810
Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.4.1
registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.4.1
Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.4.1
Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/pause@sha256:6c3835cab3980f11b83277305d0d736051c32b17606f5ec59f1dda67c9ba3810
v1.21.4: Pulling from google_containers/kube-controller-manager
(middle part omitted...)
Digest: sha256:d0b22f715fcea5598ef7f869d308b55289a3daaa12922fa52a1abf17703c88e7
Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:0.26.1
registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:0.26.1
Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:0.26.1
Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller@sha256:d0b22f715fcea5598ef7f869d308b55289a3daaa12922fa52a1abf17703c88e7
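The output pattern above (a pull from the mirror followed by "Untagged" lines) suggests that each line of images.properties is handled as pull, re-tag, remove. The following is only a dry-run sketch of that idea, not the actual contents of load_images.sh: the function name plan_image_commands is made up for illustration, and it prints the docker commands instead of running them.

```shell
#!/bin/sh
# Dry-run sketch (assumption, not the real load_images.sh): print the docker
# commands implied by a properties file whose lines have the form
#   <original-image>=<mirror-image>
# plan_image_commands is a hypothetical name; nothing is executed here.
plan_image_commands() {
  # Split each line at the first "=": left side is the original name,
  # right side is the mirror. The "|| [ -n ... ]" part keeps a last line
  # that has no trailing newline.
  while IFS='=' read -r original mirror || [ -n "$original" ]; do
    # Skip blank lines and comment lines.
    case "$original" in
      ''|'#'*) continue ;;
    esac
    echo "docker pull $mirror"            # fetch from the reachable mirror
    echo "docker tag $mirror $original"   # give it the name Kubernetes expects
    echo "docker rmi $mirror"             # drop the mirror tag again
  done < "$1"
}
```

Calling plan_image_commands images.properties lists the commands for review; piping that output to sh would execute them, assuming Docker is running.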

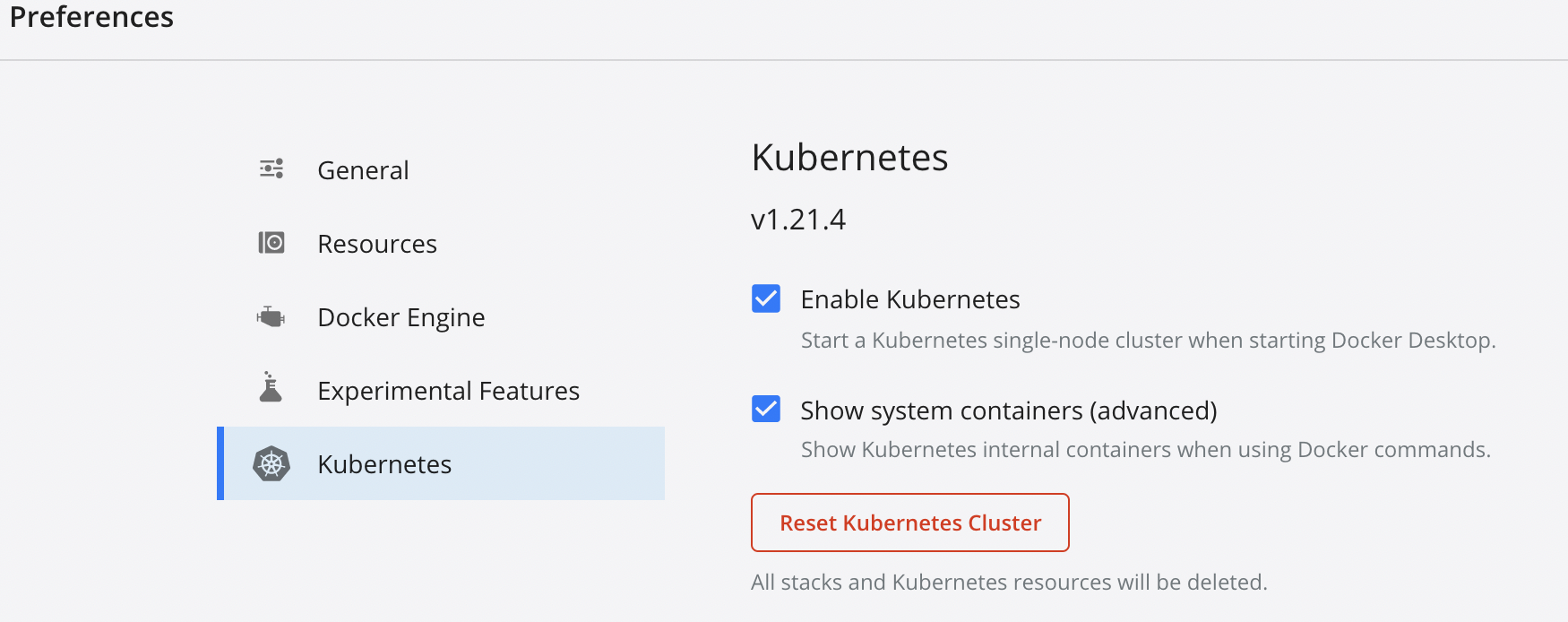

After the script finishes, start Kubernetes again. After a while, it shows that startup is complete.

3.3 kubectl

In addition to a working Kubernetes cluster, we also need a locally configured kubectl.

Helm will find the location where Tiller is installed by reading the Kubernetes configuration file (usually $HOME/.kube/config). This is the same file used by kubectl.

To find out which cluster Tiller will be installed in, you can run kubectl config current-context or kubectl cluster-info.

$ kubectl config current-context
docker-desktop
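Since Helm and kubectl read the same configuration file, the current context can also be checked directly in that file. The sketch below is a simplification under stated assumptions: the function name kubeconfig_current_context is hypothetical, the current-context key is expected on its own line with an unquoted value, and the KUBECONFIG variable and multi-file merging that kubectl supports are ignored.

```shell
#!/bin/sh
# Sketch (assumption): print the value of the "current-context" key from a
# kubeconfig file. kubeconfig_current_context is a hypothetical helper name.
# Defaults to $HOME/.kube/config when no path is given.
kubeconfig_current_context() {
  # Match the line whose first field is "current-context:" and print the
  # second field, stopping at the first match.
  awk '$1 == "current-context:" { print $2; exit }' "${1:-$HOME/.kube/config}"
}
```

With the Docker Desktop setup described here, kubeconfig_current_context would be expected to print the same name as kubectl config current-context.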

IV. Helm initialization and Tiller

4.1 what is Tiller

Tiller is the server part of Helm and usually runs inside the Kubernetes cluster. But for development, it can also run locally and be configured to communicate with a remote Kubernetes cluster.

4.2 Helm initialization

4.2.1 official execution order

According to the official documentation, after we install Helm, we can use the helm init command to initialize the local CLI and install Tiller to our Kubernetes cluster:

$ helm init --history-max 200

But in fact, it is not so simple. An error is reported after execution:

k8s-for-docker-desktop % helm init --history-max 200
Error: unknown command "init" for "helm"
Did you mean this?
        lint
Run 'helm --help' for usage.

4.2.2 problem analysis

Helm version information:

helm version

version.BuildInfo{Version:"v3.5.4", GitCommit:"1b5edb69df3d3a08df77c9902dc17af864ff05d1", GitTreeState:"dirty", GoVersion:"go1.16.3"}

The locally installed helm version is 3.5.4, while init is a Helm 2 command that was removed in Helm 3. Tiller was also removed in Helm 3, so there is no cluster-side component left to install. You can use helm env to view the environment configuration information.

The output of helm env is as follows:

HELM_BIN="helm"
HELM_CACHE_HOME="/Users/lijingyong/Library/Caches/helm"
HELM_CONFIG_HOME="/Users/lijingyong/Library/Preferences/helm"
HELM_DATA_HOME="/Users/lijingyong/Library/helm"
HELM_DEBUG="false"
HELM_KUBEAPISERVER=""
HELM_KUBEASGROUPS=""
HELM_KUBEASUSER=""
HELM_KUBECAFILE=""
HELM_KUBECONTEXT=""
HELM_KUBETOKEN=""
HELM_MAX_HISTORY="10"
HELM_NAMESPACE="default"
HELM_PLUGINS="/Users/lijingyong/Library/helm/plugins"
HELM_REGISTRY_CONFIG="/Users/lijingyong/Library/Preferences/helm/registry.json"
HELM_REPOSITORY_CACHE="/Users/lijingyong/Library/Caches/helm/repository"
HELM_REPOSITORY_CONFIG="/Users/lijingyong/Library/Preferences/helm/repositories.yaml"

So with the current Helm 3 version, there is no need to run helm init or any related command. Let's continue with the next steps.
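A setup script that has to work with both Helm 2 and Helm 3 could decide from the version string whether helm init applies at all. This is a minimal sketch; the function names helm_major and needs_helm_init are hypothetical, and the version string is assumed to contain a "v" directly before the major number, as in the v3.5.4 shown above.

```shell
#!/bin/sh
# Sketch (assumption): derive the major version from a Helm version string
# such as "v3.5.4". helm_major and needs_helm_init are hypothetical helpers.
helm_major() {
  rest="${1#*v}"               # drop everything up to and including the first "v"
  printf '%s\n' "${rest%%.*}"  # keep only the text before the first "."
}

# Succeeds (exit status 0) only for Helm 2, the major version that has `helm init`.
needs_helm_init() {
  [ "$(helm_major "$1")" = "2" ]
}
```

With the Helm 3.5.4 used here, needs_helm_init "$(helm version --short)" should fail, so a script guarded this way would skip helm init --history-max 200 instead of hitting the unknown command error.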

4.3 installing a sample chart

4.3.1 perform repo update

helm repo update
Error: no repositories found. You must add one before updating

The command fails and prompts us to add a repository first. OK, let's add one:

helm repo add stable https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

Then we execute the update, and the result is ok:

% helm repo update
Hang tight while we grab the latest from your chart repositories...
...Successfully got an update from the "stable" chart repository
Update Complete. ⎈Happy Helming!⎈
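To make the add-then-update sequence safe to repeat, a script can first check whether the repository is already configured. The sketch below assumes that helm repo list prints a NAME/URL header line followed by one line per repository; repo_is_listed is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch (assumption): read `helm repo list` output on stdin and succeed if a
# repository with the given name is present. repo_is_listed is a hypothetical
# helper; the first input line is treated as the NAME/URL header and skipped.
repo_is_listed() {
  awk -v name="$1" 'NR > 1 && $1 == name { found = 1 } END { exit !found }'
}
```

It could be used as helm repo list 2>/dev/null | repo_is_listed stable || helm repo add stable https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts, followed by helm repo update.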

4.3.2 installing chart

helm install mysql stable/mysql
Error: Kubernetes cluster unreachable: Get "https://kubernetes.docker.internal:6443/version?timeout=32s": x509: certificate signed by unknown authority (possibly because of "crypto/rsa: verification error" while trying to verify candidate authority certificate "kubernetes")

Another error, um... This time Helm reports that the Kubernetes cluster is unreachable, and the message points to a CA certificate verification problem. Refer to the following article:

ca authentication debugging record during kubernetes binary deployment