Blog direct in the last section!

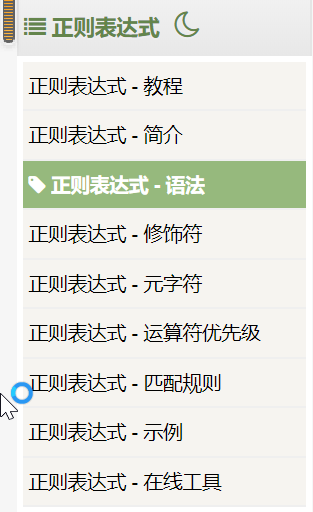

After obtaining the web page, the next step is to analyze the data. We need to be familiar with the basic operation of regular expressions. Here is a tutorial entrance. Students without knowledge reserve can go to this channel first

You can only look at the simplest "grammar" section

OK, assuming that everyone is basically familiar with the basic syntax of regular expressions, let's start this section!

Start with the regular expression code in the above code:

#Find links

findLink=re.compile(r'<a href="(.*?)">')

#Find pictures

findImage=re.compile(r'<img.*src="(.*?)"',re.S)

#Film title

findTitle=re.compile(r'<span class="title">(.*)</span>')

#Film rating

findRating=re.compile(r'<span class="rating_num" property="v:average">(.*)</span>')

#Number of evaluators

findJudge=re.compile(r'<span>(\d*)Human evaluation</span>') #Notice the backslash

#brief introduction

findInq=re.compile(r'<span class="inq">(.*)</span>') #Note that regular expressions use backslashes, but browser tags use a general division sign/

#Find the relevant content of the film (director, actor, etc.) and just climb the first < p > tag. We note that regular expressions can be used, and then change it again after the corresponding error occurs

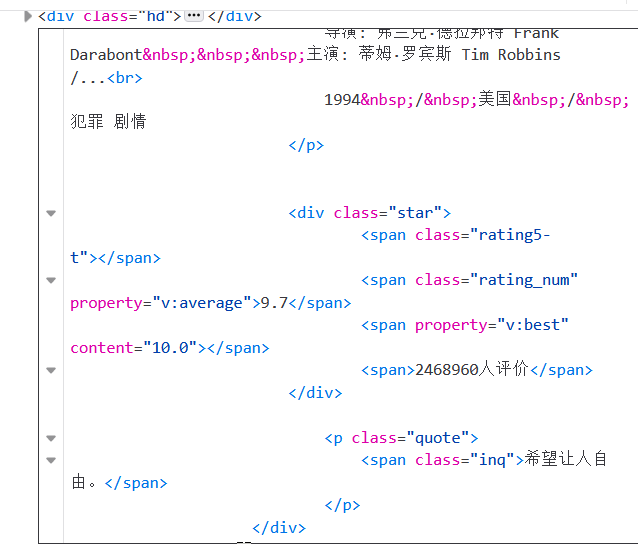

findBd=re.compile(r'<p class="">(.*?)</p>',re.S) #We found that the column of Firefox is different from that of chrome</p>

#So do we have to add re.S to this bd? Using re.S, you can match all strings. If you remove it, it will only match the first line. Of course, there is nothing

#'?' in regular expression Indicates that the previous' < p class = "" > 'appears 0 ~ 1 times

#'r' here means that there is no need to add additional transfer characters, otherwise we have to do additional escape operations when matching strings

We should pay attention to several points

1)re.compile(r ''), we know that we should pay special attention to the occurrence of line break '\' in string matching, but if we add it every time, it will be inefficient and easy to miss and make errors. Therefore, python provides this r function to automatically add '\' to our string. For our programmers, this operation is transparent, as if it were the original string, But Python actually helped us to do the conversion (it took me a while to wonder how this was used)

2) For matching multiple rows of data, not adding re.S is a serious consequence. Without re.S, the matching text only occurs in the first row! We want to search in the whole HTML, but you just found the first line and found a lonely

3) < p class = "" > (. *?) < / P > here '?' What string does it represent 0 ~ 1?

We found that there are two < p > tags in the DIV in the figure below, but in fact, we only need the actor and director information in the first < p > tag. If '?' is not added, The second < p > tag also meets the conditions, so it still needs to be added

4) Regular expressions are equivalent to intercepting a piece of code for us. For internal information, we still need to use code logic in the part of 'parsing function code' to obtain the data we really want

The function code is parsed on the following table:

def getData(baseurl):

datalist=[] #For example, for a 250 movie, we crawl 25 movies at a time, so we need to call it 10 times in total

for i in range(0,1): #From page 0 to 10, close the section on the left and open the section on the right

url=baseurl+str(25*i) #String splicing requires str(int) function for conversion,

html=askURL(url)

datalist.append(html)

#2. Analyze data

soup=BeautifulSoup(html,"html.parser")

# count=0

data=[]

for item in soup.find_all('div',class_="item"): #Underline means that there is a class attribute in div. when class is an attribute, underline it

#print(item) test to view all the information of the movie

item=str(item)

link=re.findall(findLink,item)[0] #re library is used to find the specified string through regular expression and select the first item

data.append(link)

image=re.findall(findImage,item)

data.append(image)

titles=re.findall(findTitle,item)

if(len(titles)>=2):

ctitle=titles[0]

otitle=titles[1]

data.append(ctitle)

data.append(otitle)

else:

data.append(titles[0])

data.append(list('It only has a Chinese name')) #No foreign name left blank

inq=re.findall(findInq,item)

data.append(inq)

rating=re.findall(findRating,item)[0] #The data retrieved from the list is of type string

rating+=str('branch')

data.append(rating)

bd=re.findall(findBd,item)[0]

bd=re.sub(r'<br(\s+)?/>(\s+)?',"",bd)

print('Above is bd')

data.append(bd.strip())

judge=re.findall(findJudge,item)

data.append(list('Number of evaluators:')+judge)

print(data)

datalist.append(data)

return datalist1) Name, we only keep two names for convenience, Chinese and others

2) < / BR > tags, spaces and other data we don't want to see may remain in the code. How to remove them?

Or use regular expressions to replace everything we don't want to see with "" An empty string is sufficient

Or use regular expressions to replace everything we don't want to see with "" An empty string is sufficient

bd=re.sub(r'<br(\s+)?/>(\s+)?',"",bd)

3) To splice URL addresses, we need 250 movies, but there are only 25 movies on each page, so we need to access the corresponding page by observing the parameter information carried by the URL, which requires the use of previous functions and string splicing operations

for i in range(0,10): # from page 0 to 10, the interval is closed on the left and opened on the right

The splicing of url=baseurl+str(25*i) # string needs to be transformed by str(int) function,

html=askURL(url)