In the previous article, we covered the Python Requests library, submitting a basic form, HTML form controls, and so on.

This article continues from there. It covers submitting files and images with Python Requests, handling login cookies, HTTP basic access authentication, and other form-related issues.

Submitting files and images

Although file uploads are very common on the web, they are rarely needed for web scraping. However, if you want to write a test for the file-upload feature of your own website, you can do it with the Python Requests library. In any case, it is always useful to understand how it works.

Below is an example of the HTML for a file-upload control:

```html
<label class="UploadPicture-wrapper">
  <input type="file" accept="image/png,image/jpeg" class="UploadPicture-input">
  <button type="button" class="Button UserCoverEditor-simpleEditButton DynamicColorButton DynamicColorButton--dark">
    <span style="display: inline-flex; align-items: center;"></span>
    Edit cover image
  </button>
</label>
```

Apart from the `<input>` tag having its type attribute set to `file`, a file-upload form looks no different from the text fields in the previous article. In fact, the Python Requests library handles this form in much the same way as before:

```python
import requests


def upload_image():
    # The dict key must match the name attribute of the form's file <input>;
    # the value is a file object opened in binary mode ('rb')
    with open('files/2fe7243c7c113fad443b375a021801eb6277169d.png', 'rb') as f:
        files = {'uploadFile': f}
        r = requests.post("http://pythonscraping.com/pages/processing2.php", files=files)
    print(r.text)


if __name__ == '__main__':
    upload_image()
```

Note that the value submitted for the form field `uploadFile` is not a simple string but a Python file object returned by the `open` function. In this example, we submit an image file saved on our computer. A relative file path like this one is resolved against the current working directory (the directory you run the script from), which is not necessarily the directory containing the script.
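To see what Requests actually sends for an upload, the sketch below builds the request without sending it. It assumes a hypothetical file named `cover.png`, supplied as in-memory bytes, and uses the `(filename, file object, content type)` tuple form of the `files` argument, which lets you set the file name and MIME type explicitly. The helper name `build_upload_request` is ours, not part of Requests.

```python
import io

import requests


def build_upload_request(url, field_name, filename, data, content_type):
    # Hypothetical helper: the (filename, file object, MIME type) tuple lets us
    # control the file name and Content-Type the server will see
    files = {field_name: (filename, io.BytesIO(data), content_type)}
    # Prepare the request without sending it so it can be inspected offline
    return requests.Request('POST', url, files=files).prepare()


if __name__ == '__main__':
    req = build_upload_request('http://pythonscraping.com/pages/processing2.php',
                               'uploadFile', 'cover.png', b'fake image bytes', 'image/png')
    print(req.headers['Content-Type'])  # multipart/form-data with a random boundary
    print(req.body[:200])               # start of the multipart body
```

The body contains one part per field, each with a `Content-Disposition` header carrying the field name and file name, which is exactly what a browser sends for the form above.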

Handling logins and cookies

So far, most of the forms we have looked at let you submit information to a website, or show you the page you want immediately after you submit the form. How do those forms differ from a login form, which keeps you "logged in" as you browse the site?

Most modern websites use cookies to track whether a user is logged in. Once the website has verified your login credentials, it stores a cookie in your browser. The cookie usually contains a token generated by the server, an expiry time, and information used to track your login state. Your browser sends this cookie back to the server with every page you request on the site, and the server uses it as proof of who you are. Before cookies came into widespread use in the mid-1990s, authenticating and tracking users securely was a major problem for websites.
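To make the anatomy of such a cookie concrete, here is a small sketch that parses a hypothetical `Set-Cookie` header (the name `session_id`, its value and its attributes are invented for illustration). It uses Python's standard `http.cookies` module rather than Requests, purely to show the pieces a login cookie carries.

```python
from http.cookies import SimpleCookie


def parse_set_cookie(header_value):
    # Parse one Set-Cookie header value into a SimpleCookie (a dict of Morsels)
    cookie = SimpleCookie()
    cookie.load(header_value)
    return cookie


if __name__ == '__main__':
    # Hypothetical header a server might send after a successful login
    jar = parse_set_cookie('session_id=abc123; Max-Age=3600; Path=/; HttpOnly')
    morsel = jar['session_id']
    print(morsel.value)        # the server-generated token
    print(morsel['max-age'])   # how long the login stays valid, in seconds
    print(morsel['path'])      # which paths the browser sends it back to
    print(morsel['httponly'])  # flag: page JavaScript cannot read this cookie
```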

Cookies solve a big problem for web developers, but they create a big problem for web crawlers. You might submit the login form only once a day, but if you ignore the cookie the server sends back after the form is submitted, your login state is lost, and after a while you will have to log in again whenever you visit a new page.

Suppose we have a blog admin backend where we must log in before we can publish articles and upload images. Let's use Python Requests to simulate the login and track the cookies. Here is a code example:

```python
from requests import Session
from utils import connection_util  # the author's own helper module, not part of Requests


class GetCookie(object):
    def __init__(self):
        self._session = Session()
        self._init_connection = connection_util.ProcessConnection()

    def get_cookie_by_login(self):
        # The token is fetched through another connection, not self._session
        get_token = self.request_verification_token()
        if get_token:
            data = {'__RequestVerificationToken': get_token,
                    'Email': 'abc@pdf-lib.org',
                    'Password': 'hhgu##$dfe__e',
                    'RememberMe': True}
            r = self._session.post('https://pdf-lib.org/account/admin', data=data)
            # With a token from request_verification_token(), a 500 error appears here
            if r.status_code == 500:
                print(r.content.decode('utf-8'))
            print('Cookie is set to:')
            print(r.cookies.get_dict())
            print('--------------------------------')
            print('Going to post article page..')
            # Pass the login response's cookies explicitly to the next request
            r = self._session.get('https://pdf-lib.org/Manage/ArticleList', cookies=r.cookies)
            print(r.text)

    def request_verification_token(self):
        # The helper fetches the login page; its result is searched with .find()
        get_content = self._init_connection.init_connection('https://pdf-lib.org/account/admin')
        if get_content:
            try:
                get_token = get_content.find("input", {"name": "__RequestVerificationToken"}).get("value")
            except Exception as e:
                print(f"Got unhandled exception {e}")
                return False
            return get_token
        return False


if __name__ == '__main__':
    get_cookie = GetCookie()
    get_cookie.get_cookie_by_login()
```

The code above sends the required parameters to the login page, simulating a user typing a user name and password into the login form. It then reads the cookies from the response and prints the result of the login.
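The token step can be tried on its own, without any network access. The sketch below is a hypothetical helper, not code from the article's repository: it collects every hidden `<input>` on a login form with BeautifulSoup (which picks up `__RequestVerificationToken` along with any other hidden fields) and then merges in the credentials. The sample HTML and credentials are invented.

```python
from bs4 import BeautifulSoup


def build_login_payload(login_html, credentials):
    """Hypothetical helper: hidden form fields plus user-supplied credentials."""
    soup = BeautifulSoup(login_html, features='html.parser')
    payload = {}
    # Hidden inputs carry server-generated values (such as anti-forgery tokens)
    # that must be sent back unchanged with the login POST
    for field in soup.find_all("input", {"type": "hidden"}):
        name = field.get("name")
        if name:
            payload[name] = field.get("value", "")
    payload.update(credentials)
    return payload


if __name__ == '__main__':
    sample_html = """
    <form method="post" action="/account/admin">
      <input type="hidden" name="__RequestVerificationToken" value="tok-123">
      <input type="email" name="Email">
      <input type="password" name="Password">
    </form>
    """
    print(build_login_payload(sample_html, {'Email': 'me@example.com', 'Password': 'secret'}))
```

The resulting dict can be passed as `data=` to `session.post()`. For it to be accepted, the page containing the token should be fetched with the same session that sends the POST.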

This approach works for simple pages. But what if the website is more complex and quietly modifies cookies along the way, or we don't want to deal with cookies ourselves from the start? The Session object of the Requests library solves these problems neatly:

```python
from bs4 import BeautifulSoup
from requests import Session, exceptions


class GetCookie(object):
    def __init__(self):
        # One Session object keeps cookies and other state across all requests
        self._session = Session()

    def get_cookie_by_login(self):
        # The token now comes from the same session that sends the login POST
        get_token = self.get_request_verification_token()
        if get_token:
            data = {'__RequestVerificationToken': get_token,
                    'Email': 'abc@pdf-lib.org',
                    'Password': 'hhgu##$dfe__e',
                    'RememberMe': True}
            r = self._session.post('https://pdf-lib.org/account/admin', data=data)
            if r.status_code == 500:
                print(r.content.decode('utf-8'))
            print('Cookie is set to:')
            print(r.cookies.get_dict())
            print('--------------------------------')
            print('Going to post article page..')
            # No cookies= argument needed: the session sends its stored cookies
            r = self._session.get('https://pdf-lib.org/Manage/ArticleList')
            print(r.text)

    def get_request_verification_token(self):
        # Connect to the website with browser-like headers
        headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
                                 "(KHTML, like Gecko) Chrome/85.0.4183.121 Safari/537.36",
                   "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,"
                             "image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9"}
        try:
            html = self._session.get("https://pdf-lib.org/Account/Login", headers=headers)
        except (exceptions.ConnectionError, exceptions.HTTPError, exceptions.Timeout):
            return False
        bs_obj = BeautifulSoup(html.text, features='html.parser')
        try:
            get_token = bs_obj.find("input", {"name": "__RequestVerificationToken"}).get("value")
        except AttributeError as e:
            # find() returned None: the token input is not on the page
            print(f"Verification token not found: {e}")
            return False
        return get_token


if __name__ == '__main__':
    get_cookie = GetCookie()
    get_cookie.get_cookie_by_login()
```

In this example, the Session object (created by calling `requests.Session()`) keeps track of session information such as cookies and headers, and even information about the underlying HTTP machinery, such as the HTTPAdapter (which provides a unified interface for HTTP and HTTPS connections).
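To see the difference a Session makes without touching a real website, here is a self-contained sketch that starts a throwaway local HTTP server. The `/login` and `/profile` paths and the `session_id` cookie are invented for this demo. A plain `requests.get()` forgets the cookie between calls, while a Session stores it and sends it back automatically.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

import requests


class DemoHandler(BaseHTTPRequestHandler):
    """Hypothetical toy server: /login sets a cookie, /profile checks for it."""

    def do_GET(self):
        if self.path == '/login':
            self.send_response(200)
            self.send_header('Set-Cookie', 'session_id=abc123; Path=/')
            body = b'logged in'
        elif 'session_id=abc123' in self.headers.get('Cookie', ''):
            self.send_response(200)
            body = b'welcome back'
        else:
            self.send_response(403)
            body = b'please log in'
        self.send_header('Content-Length', str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo output quiet


def run_demo():
    server = HTTPServer(('127.0.0.1', 0), DemoHandler)  # port 0: pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base = 'http://127.0.0.1:%d' % server.server_address[1]
    try:
        # Without a session: the cookie from /login is not sent to /profile
        requests.get(base + '/login')
        plain = requests.get(base + '/profile').status_code
        # With a session: the cookie jar persists across requests
        with requests.Session() as s:
            s.get(base + '/login')
            with_session = s.get(base + '/profile').status_code
            cookies = s.cookies.get_dict()
    finally:
        server.shutdown()
        server.server_close()
    return plain, with_session, cookies


if __name__ == '__main__':
    print(run_demo())
```

`run_demo()` should return `(403, 200, {'session_id': 'abc123'})`: only the Session carries the login cookie to the second page.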

Requests is an excellent library: it lets programmers get a lot done with very little thought or code, and perhaps only Selenium does more of the work for you. Even so, when writing web crawlers you may be tempted to let Requests handle everything on its own, but it is very important to keep a close eye on the state of your cookies and to control their scope. Doing so will spare you a lot of painful debugging and hunting for the cause of a website's unexpected behavior, and save you a great deal of time.
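A quick way to keep an eye on cookie scope is to prepare a request from a Session without sending it and look at the `Cookie` header it would carry. In this offline sketch, the cookie name, the domain `example.com` and the path `/admin` are made up, and `cookie_header_for` is a hypothetical helper.

```python
import requests


def cookie_header_for(session, url):
    # Prepare (but do not send) a request to see which cookies the session would attach
    prepared = session.prepare_request(requests.Request('GET', url))
    return prepared.headers.get('Cookie')


if __name__ == '__main__':
    s = requests.Session()
    # Hypothetical cookie scoped to one domain and one path
    s.cookies.set('token', 'abc', domain='example.com', path='/admin')
    print(cookie_header_for(s, 'http://example.com/admin/posts'))  # path matches: sent
    print(cookie_header_for(s, 'http://example.com/public'))       # other path: not sent
    print(cookie_header_for(s, 'http://other.org/admin'))          # other domain: not sent
    s.cookies.clear(domain='example.com')  # drop every cookie stored for that domain
    print(cookie_header_for(s, 'http://example.com/admin/posts'))
```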

HTTP basic access authentication

Before cookies were invented, the most common way to handle website logins was HTTP basic access authentication. It is rarely used today, but you still run into it occasionally, especially on some security-sensitive websites, internal company websites, and some APIs.

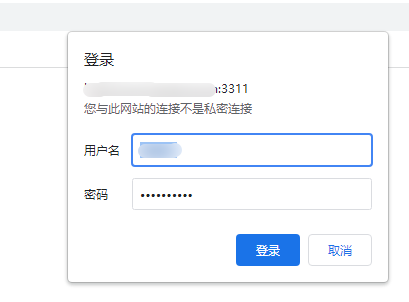

The following is a screenshot of the basic access authentication interface:

As in the previous example, you need to enter a user name and password to log in.

The Requests library has an auth module dedicated to HTTP authentication:

```python
import requests
from requests.auth import HTTPBasicAuth


def http_auth():
    # HTTPBasicAuth adds an "Authorization: Basic ..." header to the request
    auth = HTTPBasicAuth('user', '1223**%%adw')
    r = requests.post(url="http://www.test.com:3311/", auth=auth)
    print(r.text)


if __name__ == '__main__':
    http_auth()
```

Although this looks like an ordinary POST request, an HTTPBasicAuth object is passed to it as the `auth` parameter. If the user name and password are accepted, the response is the page shown after successful authentication; if verification fails, the server returns an access-denied page instead (typically with a 401 status code).
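It helps to see what HTTPBasicAuth actually puts on the wire. The sketch below prepares a request without sending it and compares the `Authorization` header with one built by hand as `"Basic " + base64("username:password")`. Both helper names are hypothetical, and the manual version is only meant for plain ASCII credentials like the ones in the example above.

```python
import base64

import requests
from requests.auth import HTTPBasicAuth


def basic_auth_header(username, password):
    # Prepare (without sending) a request to inspect the header HTTPBasicAuth adds
    req = requests.Request('GET', 'http://www.test.com:3311/',
                           auth=HTTPBasicAuth(username, password))
    return req.prepare().headers['Authorization']


def manual_basic_auth_header(username, password):
    # Same value built by hand for ASCII credentials: "Basic " + base64("user:pass")
    token = base64.b64encode(f'{username}:{password}'.encode('ascii')).decode('ascii')
    return 'Basic ' + token


if __name__ == '__main__':
    print(basic_auth_header('user', '1223**%%adw'))
    print(manual_basic_auth_header('user', '1223**%%adw'))
```

Because the credentials are only base64-encoded, not encrypted, anyone who can see the request can decode them, so basic authentication should only be used over HTTPS. Requests also accepts a plain `(username, password)` tuple as `auth`, which it treats as basic authentication.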

Other form issues

Forms are a favorite entry point for malicious bots. Naturally, site owners don't want bots creating spam accounts, eating up expensive server resources, or posting spam comments on blogs. That is why newer websites often build various security measures into their HTML to stop bots from submitting forms in bulk.

I won't go into CAPTCHAs in detail in this article (it is long enough already). In a later article, I will cover image processing and text recognition with Python, which deals with this topic in detail.

If you run into inexplicable errors when submitting a form, or the server keeps rejecting your requests, I will cover these problems in future articles as well.

All the source code for this article is hosted on GitHub: https://github.com/sycct/Scrape_1_1.git

If you have any questions, please open an issue.