1, NoSQL overview

Why use NoSQL

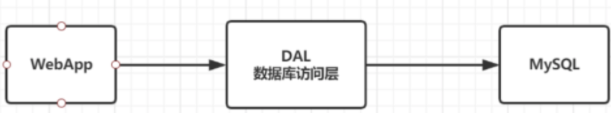

1. The standalone MySQL era

In the 1990s, website traffic was generally small, and a single database was enough. As the number of users grew, websites ran into the following problems:

- When the data volume grows past a certain point, a single machine can no longer hold it.

- The data index (a B+ tree) no longer fits in a single machine's memory.

- When traffic grows (mixed reads and writes), one server cannot handle the load.

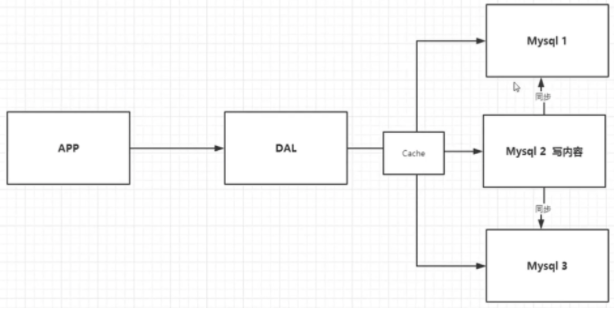

2. Memcached + MySQL + vertical split (read/write splitting)

About 80% of a website's traffic is reads, and querying the database on every request is expensive. To reduce the pressure on the database, we can add a cache to keep things efficient.

The optimization went through the following stages:

- Optimizing the database's data structures and indexes (difficult).

- File caching: reading results through IO streams is slightly more efficient than querying the database every time, but IO streams could not keep up with explosive traffic growth.

- Memcached, the most popular technology at the time, added a cache layer between the database and the data access layer. On the first access, the database is queried and the result is saved to the cache. Subsequent queries check the cache first and, on a hit, use the cached result directly, which significantly improves efficiency.
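The read path just described is commonly called the cache-aside pattern. Below is a minimal Python sketch, assuming a plain dict stands in for Memcached and a hypothetical `query_database` function stands in for MySQL; it is an illustration, not Memcached's API.

```python
from typing import Callable, Dict


class CacheAside:
    """Toy cache-aside reader: check the cache first, fall back to the database on a miss."""

    def __init__(self, query_database: Callable[[str], str]) -> None:
        self.cache: Dict[str, str] = {}       # stands in for Memcached (assumption)
        self.query_database = query_database  # stands in for MySQL (hypothetical)
        self.db_hits = 0                      # how many times the database was queried

    def get(self, key: str) -> str:
        if key in self.cache:                 # cache hit: use the cached result directly
            return self.cache[key]
        self.db_hits += 1                     # cache miss: query the database...
        value = self.query_database(key)
        self.cache[key] = value               # ...and save the result for later reads
        return value


# Usage: the second read of the same key is served from the cache.
reader = CacheAside(lambda key: f"row for {key}")
reader.get("user:1")
reader.get("user:1")
print(reader.db_hits)  # 1
```

A real deployment also needs an expiry or invalidation strategy for when the underlying row changes; the sketch leaves that out.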

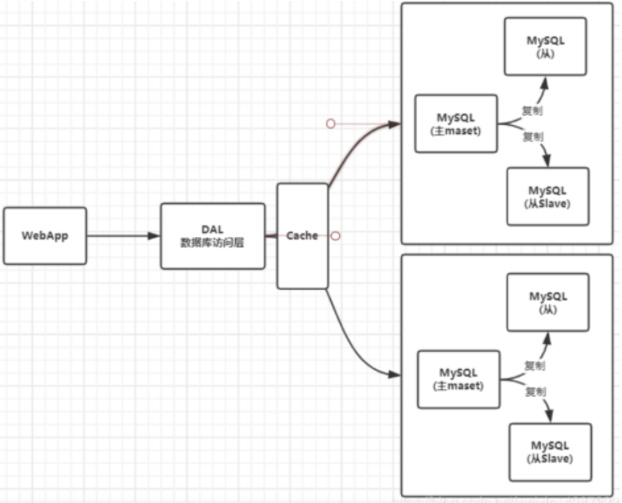

3. Database and table sharding + horizontal split + MySQL Cluster

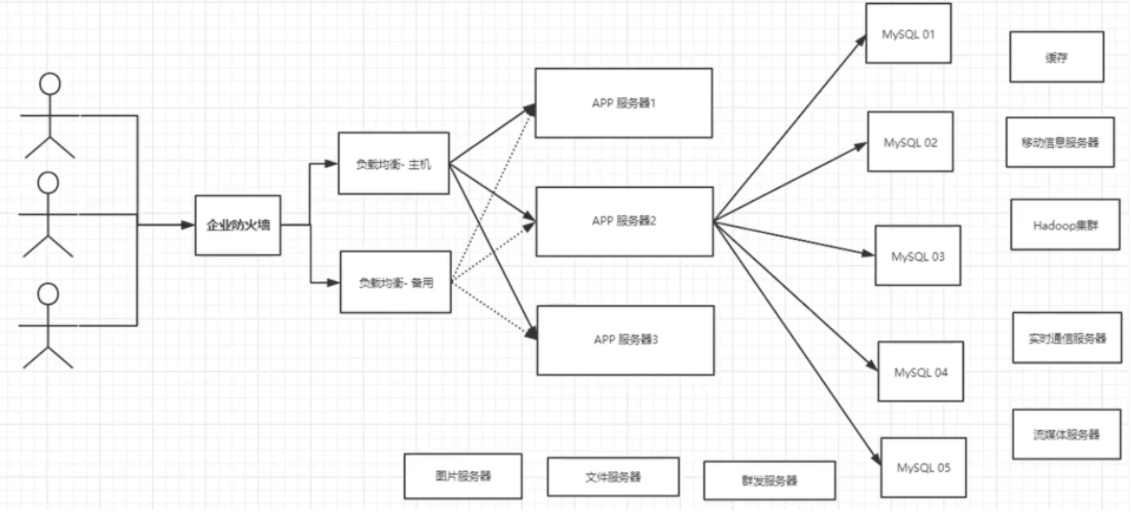

4. Today's era

Nowadays, with the rapid growth of information and the emergence of many kinds of data (user location data, image data, etc.), relational databases (RDBMS) can no longer meet the demands of massive data volumes in the big-data context. NoSQL databases are designed to handle these problems.

This is what a basic Internet project looks like today.

Why use NoSQL?

Users' personal information, social networks, geographic locations, user-generated data, user logs and so on are growing explosively!

This is where NoSQL databases come in: they handle the situations above well.

What is NoSQL

NoSQL = Not Only SQL

Not Only Structured Query Language

Relational database: rows and columns. All records in the same table share the same structure.

Non-relational database: data is stored without a fixed format and can be scaled horizontally.

NoSQL generally refers to non-relational databases. With the rise of Web 2.0, traditional relational databases struggled to keep up; large-scale, highly concurrent communities in particular exposed many problems they could not overcome. NoSQL is developing very rapidly in today's big-data environment, and Redis is the fastest-growing among them.

NoSQL features

- Easy to scale (there are no relationships between data items, so scaling out is easy)

- Large data volumes and high performance (Redis can perform about 80,000 writes and 110,000 reads per second; NoSQL caches at the record level, which is a fine-grained cache, so performance is relatively high)

- Diverse data types (no need to design the schema in advance; it can be used right away)

Traditional RDBMS vs NoSQL

Traditional RDBMS (relational database)

- Structured organization
- SQL
- Data and relationships stored in separate tables (rows and columns)
- Data manipulation and data definition languages
- Strict consistency
- Basic transactions
- ...

NoSQL

- Not just data
- No fixed query language
- Key-value storage, column storage, document storage, graph databases (social relationships)
- Eventual consistency
- CAP theorem and BASE
- High performance, high availability, high scalability
- ...

Understanding: 3V + 3 highs

The 3 Vs of the big-data era mainly describe the problem:

- Massive volume

- Variety

- Real-time velocity

The three highs of the big-data era are mainly requirements on programs:

- High concurrency

- High scalability

- High performance

In practice at companies, combining NoSQL with an RDBMS works best.

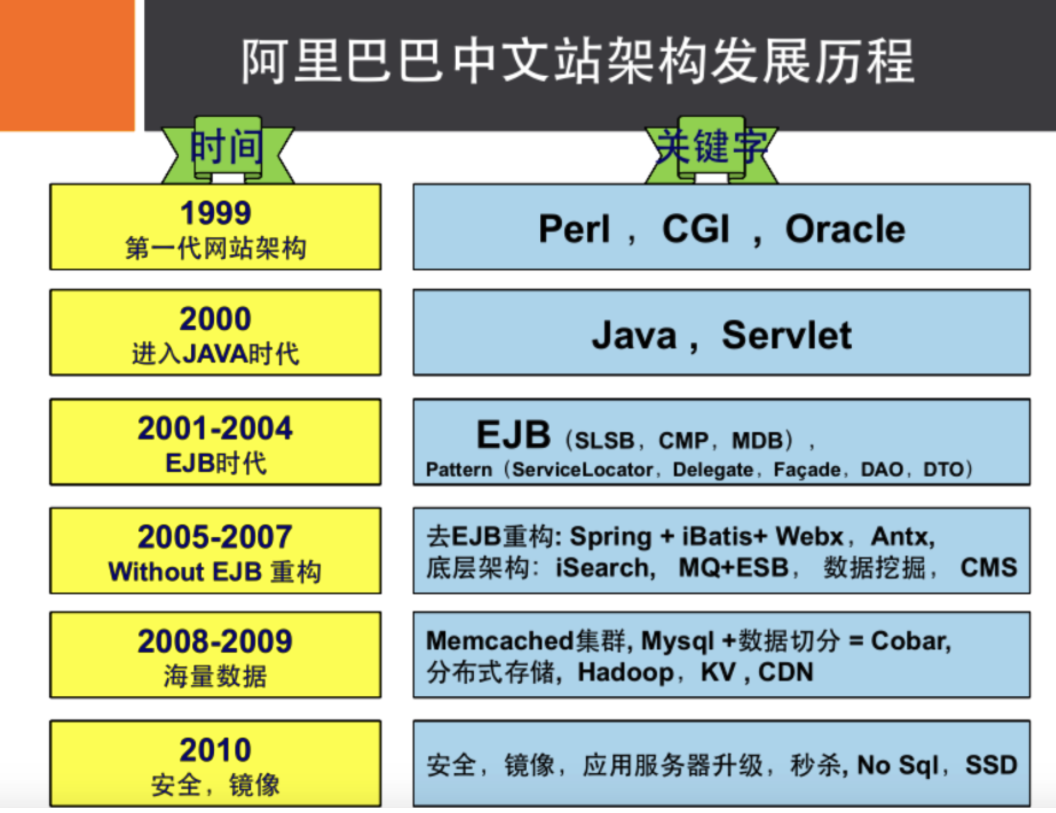

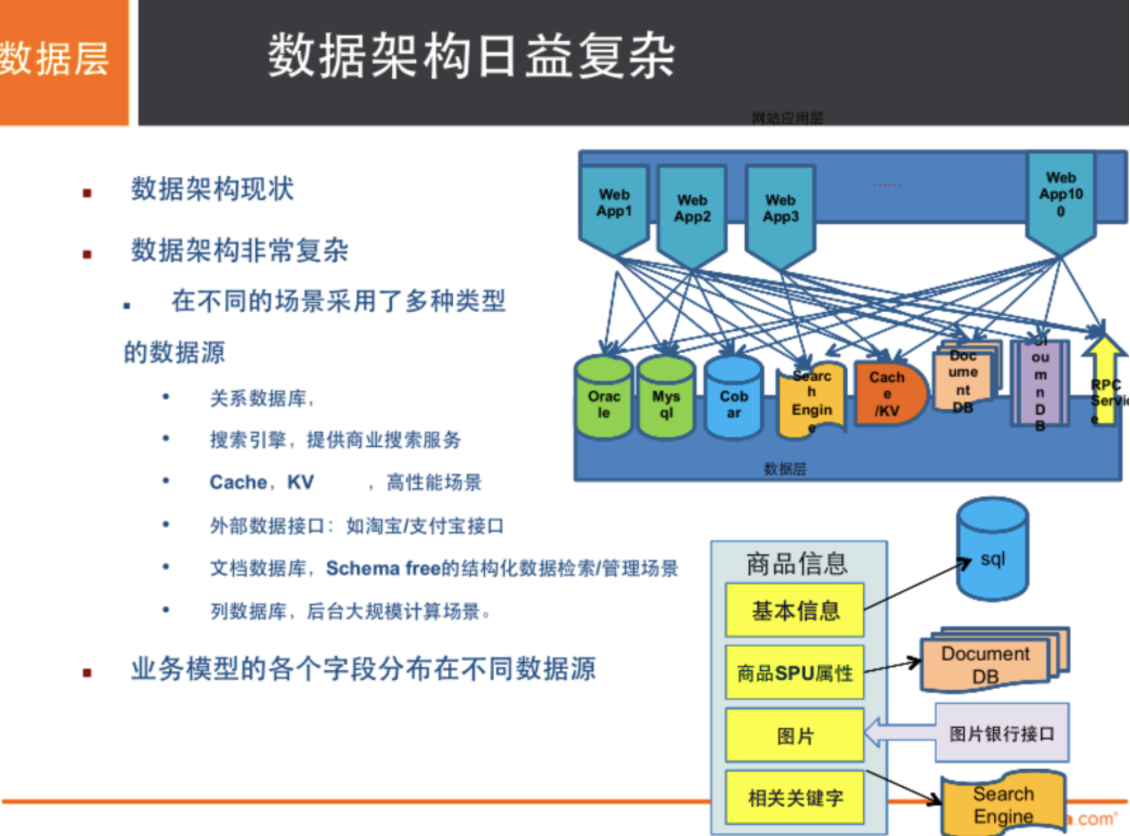

Alibaba architecture evolution analysis

Recommended reading: "These lunatics of Alibaba Cloud", https://yq.aliyun.com/articles/653511

- Product information: generally stored in a relational database, MySQL (Alibaba's MySQL is internally modified)

- Product descriptions and reviews (mostly text): document database, MongoDB

- Images: distributed file systems, e.g. FastDFS; Taobao: TFS; Google: GFS; Hadoop: HDFS; Alibaba Cloud: OSS

- Product search keywords: search engines such as Solr and Elasticsearch; Alibaba: Isearch (Doron)

- Trending product information: in-memory databases such as Redis and Memcache

- Product transactions and external payment interfaces: third-party applications

Four categories of NoSQL

KV (key-value)

- Sina: Redis

- Meituan: Redis + Tair

- Alibaba, Baidu: Redis + Memcache

Document databases (BSON format):

- MongoDB (must master)

- A database based on distributed file storage, written in C++, mainly used to handle large numbers of documents.

- MongoDB sits between RDBMS and NoSQL: it is the most feature-rich of the non-relational databases and the NoSQL database most like a relational one.

- CouchDB

Column-oriented databases

- HBase (a must-learn for big data)

- Distributed file systems

Graph databases

Used for advertising recommendations and social networking

- Neo4j, InfoGrid

| Category | Examples | Typical application scenarios | Data model | Advantages | Disadvantages |
|---|---|---|---|---|---|
| Key-value | Tokyo Cabinet/Tyrant, Redis, Voldemort, Oracle BDB | Content caching, mainly to handle high access loads on large amounts of data; also some logging systems | A mapping from key to value, usually implemented with a hash table | Fast lookups | Data is unstructured and usually treated only as strings or binary data |
| Column-oriented database | Cassandra, HBase, Riak | Distributed file systems | Data is stored in column families, keeping the same column's data together | Fast lookups, strong scalability, easier to distribute | Relatively limited functionality |
| Document database | CouchDB, MongoDB | Web applications (similar to key-value, but the value is structured and the database can understand its content) | Key-value pairs whose value is structured data | Loose data-structure requirements; the schema is flexible and need not be defined in advance as in a relational database | Query performance is not high, and there is no unified query syntax |
| Graph database | Neo4J, InfoGrid, Infinite Graph | Social networks, recommendation systems, etc.; focused on building relationship graphs | Graph structure | Can use graph algorithms, e.g. shortest-path search and N-degree relationship search | Often the whole graph must be computed to get the required information, and this structure is hard to distribute across a cluster |
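The "N-degree relationship search" listed for graph databases is essentially a breadth-first traversal limited by depth. A minimal in-memory Python sketch follows; it is not Neo4j's API, and the `friends` data is made up for illustration.

```python
from collections import deque
from typing import Dict, List, Set


def within_n_degrees(graph: Dict[str, List[str]], start: str, n: int) -> Set[str]:
    """Return every node reachable from start in at most n hops (excluding start)."""
    seen = {start}
    frontier = deque([(start, 0)])
    found: Set[str] = set()
    while frontier:
        node, depth = frontier.popleft()
        if depth == n:
            continue  # do not expand past n hops
        for neighbour in graph.get(node, []):
            if neighbour not in seen:
                seen.add(neighbour)
                found.add(neighbour)
                frontier.append((neighbour, depth + 1))
    return found


friends = {
    "alice": ["bob"],
    "bob": ["alice", "carol"],
    "carol": ["bob", "dave"],
    "dave": ["carol"],
}
print(sorted(within_n_degrees(friends, "alice", 2)))  # ['bob', 'carol']
```

The table's disadvantage shows up here too: the traversal follows edges wherever they lead, which is awkward once the graph is split across machines.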

2, Getting started with Redis

Overview

What is Redis?

Redis (Remote Dictionary Server) means "remote dictionary service".

It is an open-source, log-type key-value database written in ANSI C. It supports networking, is memory-based with optional persistence, and provides APIs in many languages.

Like Memcached, Redis caches data in memory for efficiency. The difference is that Redis periodically writes updated data to disk or appends each modification operation to a log file, and builds master-slave synchronization on top of this.
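The idea of appending each modification operation to a log and replaying it after a restart can be illustrated with a toy store. This is a hypothetical Python model of the concept only; it does not reproduce Redis's actual AOF file format, fsync policy, or rewriting.

```python
from typing import Dict, List, Tuple

Command = Tuple[str, ...]


class ToyAppendOnlyStore:
    """Toy key-value store that logs every write, loosely modelled on the AOF idea."""

    def __init__(self) -> None:
        self.data: Dict[str, str] = {}
        self.log: List[Command] = []  # stands in for the append-only file on disk

    def apply(self, command: Command) -> None:
        op, *args = command
        if op == "SET":
            key, value = args
            self.data[key] = value
        elif op == "DEL":
            self.data.pop(args[0], None)
        else:
            raise ValueError(f"unsupported command: {op}")

    def execute(self, *command: str) -> None:
        self.apply(command)
        self.log.append(command)  # append the modification after applying it

    @classmethod
    def replay(cls, log: List[Command]) -> "ToyAppendOnlyStore":
        """Rebuild in-memory state after a 'restart' by re-running the log."""
        store = cls()
        for command in log:
            store.execute(*command)
        return store


store = ToyAppendOnlyStore()
store.execute("SET", "name", "sakura")
store.execute("SET", "age", "20")
store.execute("DEL", "age")
restored = ToyAppendOnlyStore.replay(store.log)  # memory was "lost"; rebuild from the log
print(restored.data)  # {'name': 'sakura'}
```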

What can Redis do?

- In-memory storage with persistence. Data in memory is lost on power failure, so persistence (RDB, AOF) is required.

- High efficiency, so it can be used as a cache

- Publish/subscribe system

- Map (geospatial) information analysis

- Timers and counters (e.g. page views)

- ...

Features

- Diverse data types

- Persistence

- Clustering

- Transactions

...

Environment construction

Official website: https://redis.io/

It is recommended to use Linux server for learning.

The windows version of Redis has been stopped for a long time

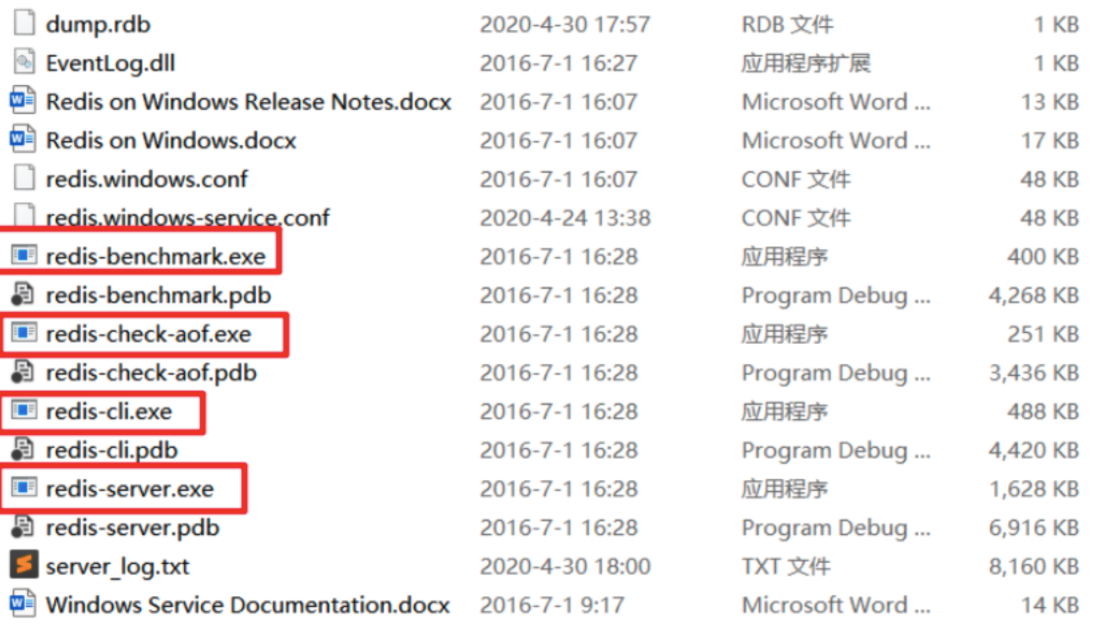

Windows setup

https://github.com/dmajkic/redis

- Unzip the installation package

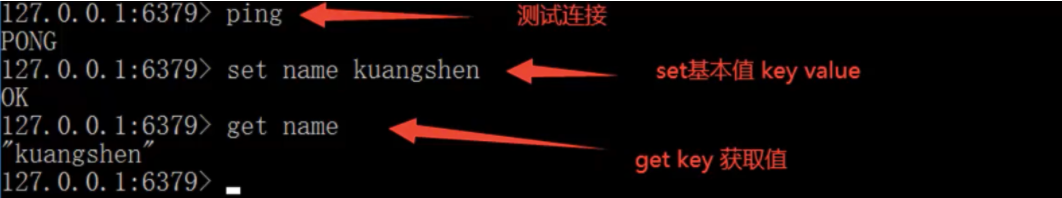

- Open redis server exe

- Start redis cli Exe test

Linux Installation

-

Download the installation package! redis-5.0.8.tar.gz

-

Unzip the Redis installation package! Programs are usually placed in the / opt directory

-

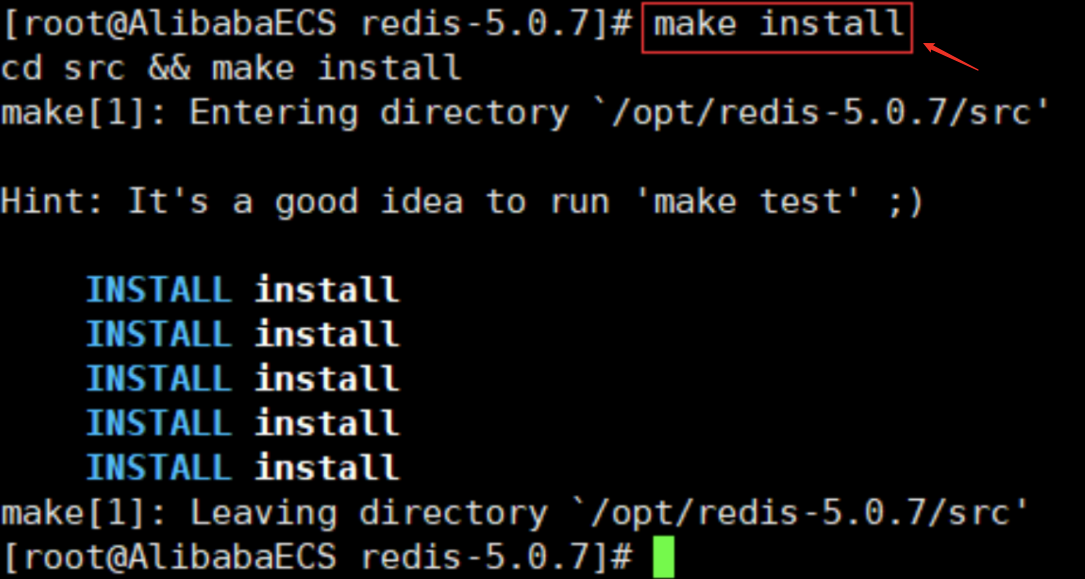

Basic environment installation

yum install gcc-c++ # Then enter the redis directory for execution make # Then execute make install

-

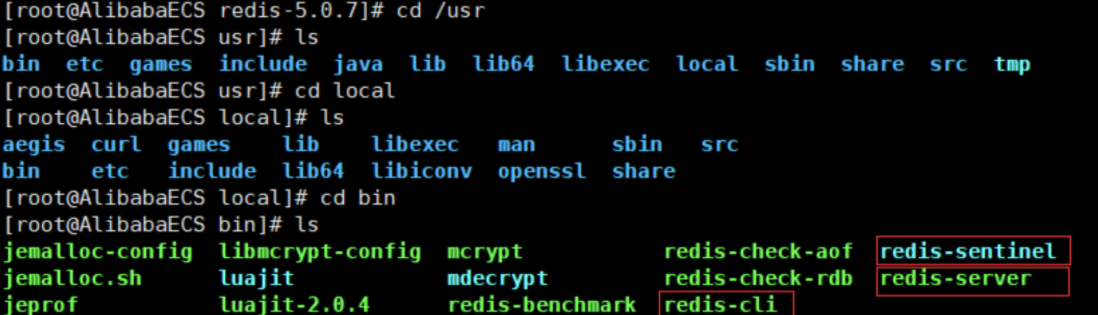

The default installation path of redis is / usr/local/bin

-

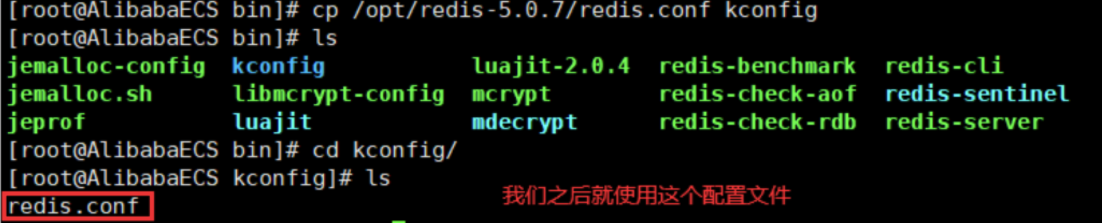

Copy the redis configuration file to the program installation directory / usr/local/bin/kconfig

-

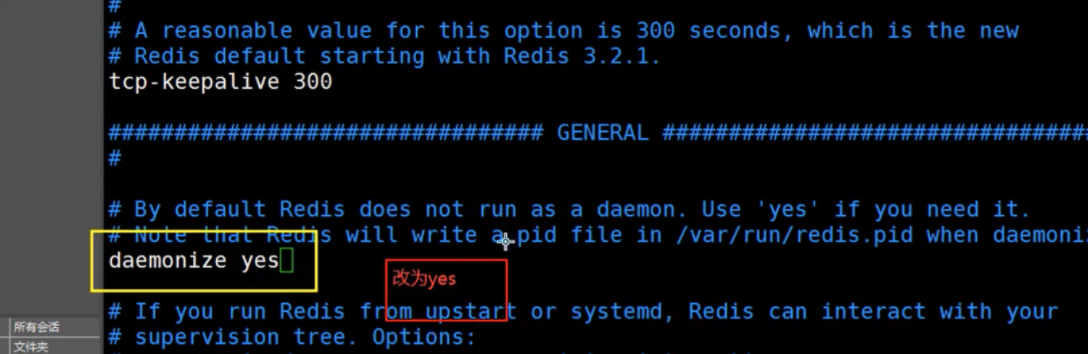

redis is not started in the background by default. You need to modify the configuration file!

-

Start the redis service through the specified configuration file

-

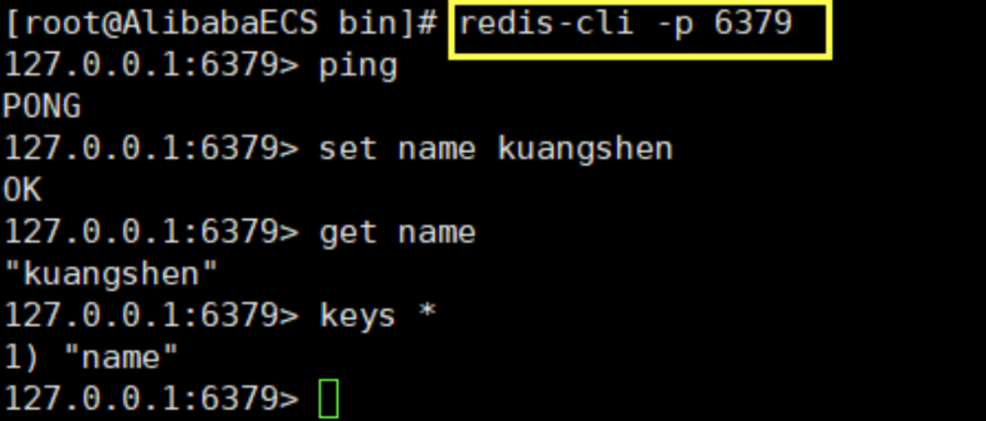

Test with the specified port number of Redis cli connection. The default port of Redis is 6379

-

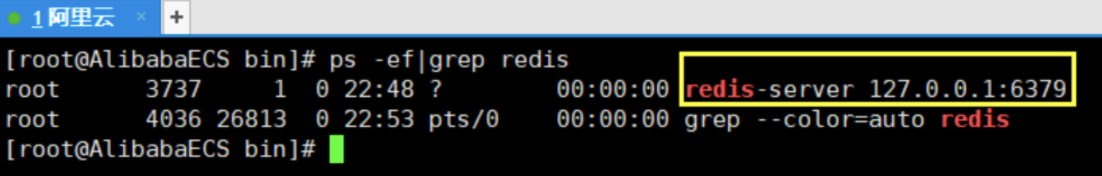

Check whether the redis process is started

-

Shut down Redis service

-

Check whether the process exists again

-

Later, we will start the cluster test using single machine multi Redis

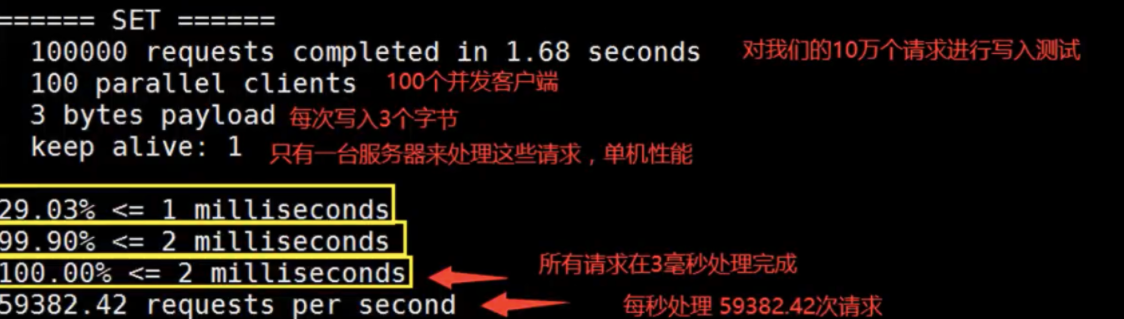

Performance testing

**redis-benchmark**: the official performance testing tool shipped with Redis. Common options include -h (host), -p (port), -c (number of concurrent connections) and -n (total number of requests).

Simple test:

redis-benchmark -h localhost -p 6379 -c 100 -n 100000 # 100 concurrent connections, 100,000 requests

Basic knowledge

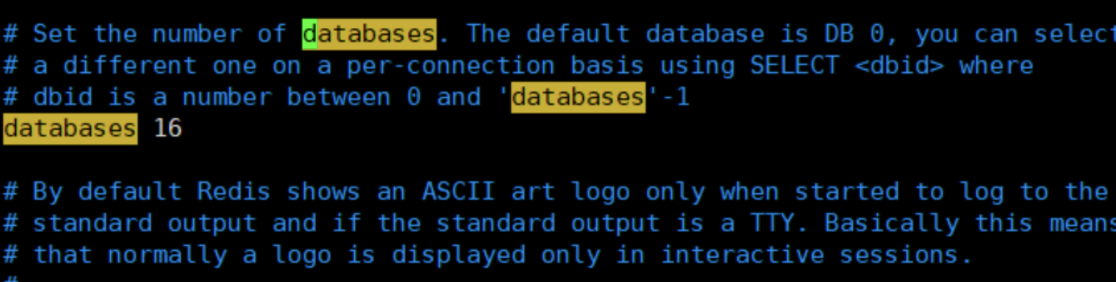

redis has 16 databases by default

The 0 used by default;

16 databases: DB 0~DB 15

DB 0 is used by default. You can use select n to switch to DB n. dbsize can view the size of the current database, which is related to the number of key s.

127.0.0.1:6379> config get databases # View the number of databases from the command line

1) "databases"

2) "16"

127.0.0.1:6379> select 8 # Switch to DB 8

OK

127.0.0.1:6379[8]> dbsize # View the database size

(integer) 0

Data cannot be shared between different databases, and dbsize counts the keys in the current database.

127.0.0.1:6379> set name sakura

OK

127.0.0.1:6379> SELECT 8

OK

127.0.0.1:6379[8]> get name # Key-value pairs in db0 cannot be read from db8

(nil)

127.0.0.1:6379[8]> DBSIZE

(integer) 0

127.0.0.1:6379[8]> SELECT 0

OK

127.0.0.1:6379> keys *

1) "counter:__rand_int__"

2) "mylist"

3) "name"

4) "key:__rand_int__"

5) "myset:__rand_int__"

127.0.0.1:6379> DBSIZE # The size is the number of keys

(integer) 5

keys *: view all keys in the current database.

flushdb: clear the key-value pairs in the current database.

flushall: clear the key-value pairs in all databases.
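The isolation between numbered databases, and the difference between flushdb and flushall, can be modelled with a list of dicts. This is a hypothetical Python toy, not how Redis stores its databases internally.

```python
from typing import Dict, List, Optional


class ToyRedisDatabases:
    """Toy model of Redis's numbered logical databases (16 by default)."""

    def __init__(self, databases: int = 16) -> None:
        self.dbs: List[Dict[str, str]] = [{} for _ in range(databases)]
        self.current = 0  # DB 0 is selected by default

    def select(self, index: int) -> None:
        if not 0 <= index < len(self.dbs):
            raise ValueError("DB index is out of range")
        self.current = index

    def set(self, key: str, value: str) -> None:
        self.dbs[self.current][key] = value

    def get(self, key: str) -> Optional[str]:
        return self.dbs[self.current].get(key)  # only sees the selected DB

    def dbsize(self) -> int:
        return len(self.dbs[self.current])  # number of keys in the selected DB

    def flushdb(self) -> None:
        self.dbs[self.current].clear()  # clears the selected DB only

    def flushall(self) -> None:
        for db in self.dbs:  # clears every DB
            db.clear()


r = ToyRedisDatabases()
r.set("name", "sakura")  # written to DB 0
r.select(8)
print(r.get("name"), r.dbsize())  # None 0
```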

Redis executes commands on a single thread, and all of its operations work on memory.

Therefore, the performance bottleneck of Redis is not the CPU but the machine's memory and network bandwidth.

So why is Redis so fast, reaching 100,000+ QPS?

Why is single-threaded Redis so fast?

- Myth 1: a high-performance server must be multi-threaded.

- Myth 2: multithreading is always more efficient than a single thread (this ignores the cost of CPU context switches).

Core reason: Redis keeps all data in memory, so operating on it with a single thread is the most efficient. Multithreading requires CPU context switches, which are time-consuming. For an in-memory system, avoiding context switches gives the highest efficiency: all reads and writes run on one CPU. When data is stored in memory, a single thread is the best solution.

3, Five data types

Redis is an open-source (BSD licensed) in-memory data structure store that can be used as a database, cache and message broker. It supports data types such as strings, hashes, lists, sets, sorted sets, bitmaps and HyperLogLogs. It has built-in replication, Lua scripting, LRU eviction, transactions and different levels of on-disk persistence, and it provides high availability through Redis Sentinel and automatic partitioning through Redis Cluster.

Redis-key

Every data type in Redis is stored in the database as a key-value pair, and operations on Redis keys are how we operate on the data in the database.

Learn the following commands:

- exists key: checks whether the key exists

- del key: deletes the key-value pair

- move key db: moves the key-value pair to the specified database

- expire key seconds: sets the expiration time of the key-value pair

- type key: shows the data type of the value

127.0.0.1:6379> keys * # View all keys in the current database

(empty list or set)

127.0.0.1:6379> set name qinjiang # Set a key

OK

127.0.0.1:6379> set age 20

OK

127.0.0.1:6379> keys *

1) "age"

2) "name"

127.0.0.1:6379> move age 1 # Move the key-value pair to the specified database

(integer) 1

127.0.0.1:6379> EXISTS age # Check whether the key exists

(integer) 0 # Does not exist

127.0.0.1:6379> EXISTS name

(integer) 1 # Exists

127.0.0.1:6379> SELECT 1

OK

127.0.0.1:6379[1]> keys *

1) "age"

127.0.0.1:6379[1]> del age # Delete the key-value pair

(integer) 1 # Number of keys deleted

127.0.0.1:6379> set age 20

OK

127.0.0.1:6379> EXPIRE age 15 # Set the expiration time of the key-value pair

(integer) 1 # Set successfully; the countdown starts

127.0.0.1:6379> ttl age # View the key's remaining time to live

(integer) 13

127.0.0.1:6379> ttl age

(integer) 11

127.0.0.1:6379> ttl age

(integer) 9

127.0.0.1:6379> ttl age

(integer) -2 # -2 means the key has expired; -1 means no expiration time is set

127.0.0.1:6379> get age # Expired keys are deleted automatically

(nil)

127.0.0.1:6379> keys *

1) "name"

127.0.0.1:6379> type name # View the data type of the value

string

About the TTL command

The TTL command returns a key's remaining time to live. There are generally three cases:

- The key has no expiration time set: -1 is returned.

- The key had an expiration time and has already expired: -2 is returned (a key that never existed also returns -2).

- The key has an expiration time and has not expired yet: the remaining time in seconds is returned.
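The three TTL outcomes can be reproduced with a toy model that takes an injectable clock, so the example does not have to wait in real time. This is a hypothetical Python sketch of the return values only; real Redis expires keys lazily and with a periodic sampler, and rounds the remaining time rather than truncating it as done here.

```python
import time
from typing import Callable, Dict, Optional


class ToyTTLStore:
    """Toy model of EXPIRE/TTL return values; not how Redis implements expiry internally."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self.clock = clock
        self.data: Dict[str, str] = {}
        self.expires_at: Dict[str, float] = {}

    def _purge_if_expired(self, key: str) -> None:
        deadline = self.expires_at.get(key)
        if deadline is not None and self.clock() >= deadline:
            self.data.pop(key, None)  # expired keys behave as if deleted
            self.expires_at.pop(key, None)

    def set(self, key: str, value: str) -> None:
        self.data[key] = value
        self.expires_at.pop(key, None)  # a plain SET clears any previous TTL

    def expire(self, key: str, seconds: int) -> int:
        self._purge_if_expired(key)
        if key not in self.data:
            return 0
        self.expires_at[key] = self.clock() + seconds
        return 1

    def ttl(self, key: str) -> int:
        self._purge_if_expired(key)
        if key not in self.data:
            return -2  # missing or already expired
        if key not in self.expires_at:
            return -1  # exists but has no expiry
        return int(self.expires_at[key] - self.clock())  # whole seconds, truncated

    def get(self, key: str) -> Optional[str]:
        self._purge_if_expired(key)
        return self.data.get(key)


now = [0.0]  # fake clock, advanced by hand
store = ToyTTLStore(clock=lambda: now[0])
store.set("name", "qinjiang")
store.set("age", "20")
store.expire("age", 15)
now[0] = 2.0
print(store.ttl("name"), store.ttl("age"))  # -1 13
now[0] = 15.0
print(store.ttl("age"), store.get("age"))   # -2 None
```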

About renaming: RENAME and RENAMENX

- RENAME key newkey: renames key to newkey

- RENAMENX key newkey: renames key to newkey only if newkey does not exist
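The only difference between the two commands is what happens when newkey already exists. A toy Python sketch over a plain dict (hypothetical helpers, not a Redis client):

```python
from typing import Dict


def rename(data: Dict[str, str], key: str, newkey: str) -> None:
    """RENAME: move key to newkey, overwriting newkey if it already exists."""
    if key not in data:
        raise KeyError("ERR no such key")
    data[newkey] = data.pop(key)


def renamenx(data: Dict[str, str], key: str, newkey: str) -> int:
    """RENAMENX: rename only if newkey does not exist; returns 1 on success, 0 otherwise."""
    if key not in data:
        raise KeyError("ERR no such key")
    if newkey in data:
        return 0
    data[newkey] = data.pop(key)
    return 1


db = {"k1": "v1", "k2": "v2"}
print(renamenx(db, "k1", "k2"))  # 0, because k2 already exists
rename(db, "k1", "k2")           # overwrites k2
print(db)                        # {'k2': 'v1'}
```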

Learn more commands: https://www.redis.net.cn/order/


String

Plain set and get are skipped here.

| Command | Description | Example |
|---|---|---|
| APPEND key value | Appends a string to the value of the specified key | 127.0.0.1:6379> set msg hello OK 127.0.0.1:6379> append msg " world" (integer) 11 127.0.0.1:6379> get msg "hello world" |
| INCR/DECR key | Increments/decrements the value of the specified key by 1 (numbers only) | 127.0.0.1:6379> set age 20 OK 127.0.0.1:6379> incr age (integer) 21 127.0.0.1:6379> decr age (integer) 20 |
| INCRBY/DECRBY key n | Adds n to or subtracts n from the value | 127.0.0.1:6379> INCRBY age 5 (integer) 25 127.0.0.1:6379> DECRBY age 10 (integer) 15 |
| INCRBYFLOAT key n | Adds a floating-point value to the value | 127.0.0.1:6379> INCRBYFLOAT age 5.2 "20.2" |
| STRLEN key | Gets the length of the string stored at key | 127.0.0.1:6379> get msg "hello world" 127.0.0.1:6379> STRLEN msg (integer) 11 |
| GETRANGE key start end | Gets the substring between start and end (a closed interval: both positions are included) | 127.0.0.1:6379> get msg "hello world" 127.0.0.1:6379> GETRANGE msg 3 9 "lo worl" |
| SETRANGE key offset value | Overwrites the value stored at key, starting at offset, with the given value | 127.0.0.1:6379> SETRANGE msg 2 hello (integer) 7 127.0.0.1:6379> get msg "tehello" |
| GETSET key value | Sets key to value and returns the old value; returns nil if the key had no value | 127.0.0.1:6379> GETSET msg test "hello world" |
| SETNX key value | Sets the key only if it does not exist | 127.0.0.1:6379> SETNX msg test (integer) 0 127.0.0.1:6379> SETNX name sakura (integer) 1 |
| SETEX key seconds value | Sets a key-value pair together with its expiration time | 127.0.0.1:6379> setex name 10 root OK 127.0.0.1:6379> get name # after 10 seconds (nil) |
| MSET key1 value1 [key2 value2..] | Sets multiple key-value pairs at once | 127.0.0.1:6379> MSET k1 v1 k2 v2 k3 v3 OK |
| MSETNX key1 value1 [key2 value2..] | Sets multiple key-value pairs only if none of the given keys exist (all or nothing) | 127.0.0.1:6379> MSETNX k1 v1 k4 v4 (integer) 0 |
| MGET key1 [key2..] | Gets the values of multiple keys at once | 127.0.0.1:6379> MGET k1 k2 k3 1) "v1" 2) "v2" 3) "v3" |
| PSETEX key milliseconds value | Like SETEX, but the expiration time is given in milliseconds | |
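The index rules of GETRANGE (inclusive end, negative indexes counted from the end) and SETRANGE (overwrite from an offset, zero-padding a value that is too short) can be checked with a small pure-Python model. It works on Python `str` rather than bytes and mirrors the clamping rules as a sketch; it is not Redis's own implementation.

```python
def getrange(value: str, start: int, end: int) -> str:
    """GETRANGE-style substring: both start and end are inclusive."""
    length = len(value)
    if start < 0:
        start += length  # negative indexes count from the end
    if end < 0:
        end += length
    start = max(start, 0)  # clamp into the string
    end = max(end, 0)
    end = min(end, length - 1)
    if length == 0 or start > end:
        return ""
    return value[start:end + 1]  # +1 because the end index is inclusive


def setrange(value: str, offset: int, patch: str) -> str:
    """SETRANGE-style overwrite from offset, padding with zero bytes if the value is too short."""
    if len(value) < offset:
        value = value + "\x00" * (offset - len(value))
    return value[:offset] + patch + value[offset + len(patch):]


print(getrange("hello world", 3, 9))   # lo worl
print(getrange("hello world", 0, -1))  # hello world
print(setrange("test", 2, "hello"))    # tehello
```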

Typical String use cases: the value can be either a string or a number. For example:

- Counters

- Counting quantities per entity, e.g. a key such as uid:123666:follow holding 0

- Follower counts

- Object storage cache
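A counter works because INCR treats a missing key as 0 and refuses values that are not integers. The toy Python model below illustrates those two rules; reading `uid:123666:follow` as "the follow count of user 123666" is an assumption, and Python's `int()` is more lenient than Redis (it accepts surrounding spaces and does not enforce the 64-bit range).

```python
from typing import Dict


class ToyCounters:
    """Toy model of INCR/INCRBY/DECR on string values (not Redis itself)."""

    def __init__(self) -> None:
        self.data: Dict[str, str] = {}  # Redis stores the counter as a string

    def incrby(self, key: str, n: int) -> int:
        current = self.data.get(key, "0")  # a missing key counts as 0
        try:
            value = int(current)
        except ValueError:
            raise ValueError("ERR value is not an integer or out of range") from None
        value += n
        self.data[key] = str(value)
        return value

    def incr(self, key: str) -> int:
        return self.incrby(key, 1)

    def decr(self, key: str) -> int:
        return self.incrby(key, -1)


counters = ToyCounters()
counters.incr("uid:123666:follow")   # user 123666 follows someone (assumed meaning)
counters.incr("uid:123666:follow")
counters.decr("uid:123666:follow")   # ...and unfollows one
print(counters.data["uid:123666:follow"])  # 1
```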

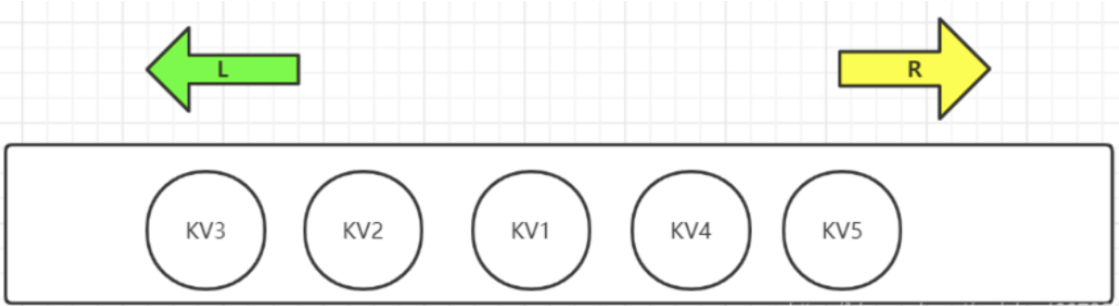

List

A Redis list is a simple list of strings, ordered by insertion. You can add elements to the head (left) or the tail (right) of the list.

A list can contain up to 2^32 - 1 elements (4,294,967,295, i.e. more than 4 billion elements per list).

By following usage rules, a list can act as a queue, a stack, a double-ended queue, and so on.

As shown in the figure, a Redis List can be operated on at both ends, so its commands come in LXXX and RXXX variants. Sometimes L also stands for List, as in LLEN.

| Command | Description |
|---|---|
| LPUSH/RPUSH key value1 [value2..] | Pushes one or more values onto the left/right end of the list |
| LRANGE key start end | Gets the elements from start to end (indexes increase from left to right; -1 is the last element) |
| LPUSHX/RPUSHX key value | Pushes one or more values onto an existing list; does nothing if the list does not exist |
| LINSERT key BEFORE\|AFTER pivot value | Inserts value before/after the specified list element |
| LLEN key | Gets the length of the list |
| LINDEX key index | Gets a list element by index |
| LSET key index value | Sets the value of the element at index |
| LPOP/RPOP key | Removes and returns the leftmost/rightmost value |
| RPOPLPUSH source destination | Pops the last (rightmost) value of source, pushes it onto the head of destination, and returns it |
| LTRIM key start end | Trims the list so that only the elements in the given index range remain |
| LREM key count value | Removes elements equal to value (lists allow duplicates). count > 0: search from the head and remove at most count matches; count < 0: search from the tail and remove at most \|count\| matches; count = 0: remove all matches |
| BLPOP/BRPOP key1 [key2..] timeout | Removes and returns the first/last element of the first non-empty list; if all the lists are empty, blocks until an element is pushed or the timeout expires |
| BRPOPLPUSH source destination timeout | Same as RPOPLPUSH, but if source is empty it blocks until an element is available or the timeout expires |
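The three `count` cases of LREM are easy to mix up, so here is a pure-Python sketch of the rule on an ordinary list. It is a toy model for checking the semantics, not Redis's implementation; the sample list (six k2, one k4, then four k2) is chosen for illustration.

```python
from typing import List


def lrem(items: List[str], count: int, value: str) -> int:
    """Remove elements equal to value in place and return how many were removed.

    count > 0: remove up to count matches, scanning from the head.
    count < 0: remove up to -count matches, scanning from the tail.
    count == 0: remove every match.
    """
    limit = abs(count) if count != 0 else len(items)
    indexes = range(len(items)) if count >= 0 else range(len(items) - 1, -1, -1)
    to_delete = []
    for i in indexes:
        if len(to_delete) == limit:
            break
        if items[i] == value:
            to_delete.append(i)
    for i in sorted(to_delete, reverse=True):  # delete from the back so indexes stay valid
        del items[i]
    return len(to_delete)


mylist = ["k2"] * 6 + ["k4"] + ["k2"] * 4
print(lrem(mylist, 3, "k2"), mylist)   # 3 ['k2', 'k2', 'k2', 'k4', 'k2', 'k2', 'k2', 'k2']
print(lrem(mylist, -2, "k2"), mylist)  # 2 ['k2', 'k2', 'k2', 'k4', 'k2', 'k2']
```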

---------------------------LPUSH---RPUSH---LRANGE--------------------------------

127.0.0.1:6379> LPUSH mylist k1 # LPUSH mylist=>{k1}

(integer) 1

127.0.0.1:6379> LPUSH mylist k2 # LPUSH mylist=>{k2,k1}

(integer) 2

127.0.0.1:6379> RPUSH mylist k3 # RPUSH mylist=>{k2,k1,k3}

(integer) 3

127.0.0.1:6379> get mylist # Ordinary get cannot get the list value

(error) WRONGTYPE Operation against a key holding the wrong kind of value

127.0.0.1:6379> LRANGE mylist 0 4 # LRANGE gets the elements within the range of start and end positions

1) "k2"

2) "k1"

3) "k3"

127.0.0.1:6379> LRANGE mylist 0 2

1) "k2"

2) "k1"

3) "k3"

127.0.0.1:6379> LRANGE mylist 0 1

1) "k2"

2) "k1"

127.0.0.1:6379> LRANGE mylist 0 -1 # Get all elements

1) "k2"

2) "k1"

3) "k3"

---------------------------LPUSHX---RPUSHX-----------------------------------

127.0.0.1:6379> LPUSHX list v1 # list does not exist, so LPUSHX fails

(integer) 0

127.0.0.1:6379> LPUSHX list v1 v2

(integer) 0

127.0.0.1:6379> LPUSHX mylist k4 k5 # Push k4 and k5 onto the left of mylist

(integer) 5

127.0.0.1:6379> LRANGE mylist 0 -1

1) "k5"

2) "k4"

3) "k2"

4) "k1"

5) "k3"

---------------------------LINSERT--LLEN--LINDEX--LSET----------------------------

127.0.0.1:6379> LINSERT mylist after k2 ins_key1 # Insert ins_key1 after the k2 element

(integer) 6

127.0.0.1:6379> LRANGE mylist 0 -1

1) "k5"

2) "k4"

3) "k2"

4) "ins_key1"

5) "k1"

6) "k3"

127.0.0.1:6379> LLEN mylist # View the length of mylist

(integer) 6

127.0.0.1:6379> LINDEX mylist 3 # Gets the element with subscript 3

"ins_key1"

127.0.0.1:6379> LINDEX mylist 0

"k5"

127.0.0.1:6379> LSET mylist 3 k6 # set the element of subscript 3 to k6

OK

127.0.0.1:6379> LRANGE mylist 0 -1

1) "k5"

2) "k4"

3) "k2"

4) "k6"

5) "k1"

6) "k3"

---------------------------LPOP--RPOP--------------------------

127.0.0.1:6379> LPOP mylist # Pop from the left (head)

"k5"

127.0.0.1:6379> RPOP mylist # Pop from the right (tail)

"k3"

---------------------------RPOPLPUSH--------------------------

127.0.0.1:6379> LRANGE mylist 0 -1

1) "k4"

2) "k2"

3) "k6"

4) "k1"

127.0.0.1:6379> RPOPLPUSH mylist newlist # Pop up the last value (k1) of mylist and add it to the head of newlist

"k1"

127.0.0.1:6379> LRANGE newlist 0 -1

1) "k1"

127.0.0.1:6379> LRANGE mylist 0 -1

1) "k4"

2) "k2"

3) "k6"

---------------------------LTRIM--------------------------

127.0.0.1:6379> LTRIM mylist 0 1 # Keep only the elements at indexes 0 to 1 of mylist

OK

127.0.0.1:6379> LRANGE mylist 0 -1

1) "k4"

2) "k2"

# Initial mylist: k2,k2,k2,k2,k2,k2,k4,k2,k2,k2,k2

---------------------------LREM--------------------------

127.0.0.1:6379> LREM mylist 3 k2 # Search from the head and delete up to 3 k2

(integer) 3

# After deletion: mylist: k2,k2,k2,k4,k2,k2,k2,k2

127.0.0.1:6379> LREM mylist -2 k2 #Search from the tail and delete up to 2 k2

(integer) 2

# After deletion: mylist: k2,k2,k2,k4,k2,k2

---------------------------BLPOP--BRPOP--------------------------

mylist: k2,k2,k2,k4,k2,k2

newlist: k1

127.0.0.1:6379> BLPOP newlist mylist 30 # Pop the first element of newlist, falling back to mylist if newlist is empty

1) "newlist" # Popped from newlist

2) "k1"

127.0.0.1:6379> BLPOP newlist mylist 30

1) "mylist" # Because the newlist is empty, it pops up from mylist

2) "k2"

127.0.0.1:6379> BLPOP newlist 30

(30.10s) # Timeout

127.0.0.1:6379> BLPOP newlist 30 # Meanwhile, another client pushed test into newlist, which unblocked this call

1) "newlist"

2) "test"

(12.54s)

Summary

- A List is actually a linked list; values can be inserted before or after a node, or at the left or right end.

- If the key does not exist, a new linked list is created.

- If the key exists, content is added to it.

- If all values are removed, the empty list no longer exists.

- Inserting or changing values at either end is most efficient; modifying elements in the middle is relatively inefficient.

Application:

Message queue (LPUSH + RPOP), stack (LPUSH + LPOP)
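The queue and stack patterns come down to which end you pop from. A toy Python model using `collections.deque` (which, like a Redis list, supports cheap pushes and pops at both ends) shows the difference; the class is hypothetical and only mimics the command names.

```python
from collections import deque
from typing import Deque, Optional


class ToyRedisList:
    """Toy model of a Redis list: a double-ended sequence with cheap pushes/pops at both ends."""

    def __init__(self) -> None:
        self.items: Deque[str] = deque()

    def lpush(self, *values: str) -> int:
        for v in values:  # each value goes to the head in turn, like LPUSH key v1 v2
            self.items.appendleft(v)
        return len(self.items)

    def rpush(self, *values: str) -> int:
        self.items.extend(values)
        return len(self.items)

    def lpop(self) -> Optional[str]:
        return self.items.popleft() if self.items else None

    def rpop(self) -> Optional[str]:
        return self.items.pop() if self.items else None


queue = ToyRedisList()
queue.lpush("job1", "job2", "job3")
print(queue.rpop())  # job1: LPUSH + RPOP is first in, first out

stack = ToyRedisList()
stack.lpush("a", "b", "c")
print(stack.lpop())  # c: LPUSH + LPOP is last in, first out
```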

Set

A Redis Set is an unordered collection of strings. Members are unique, which means duplicate data cannot appear in a set.

Sets in Redis are implemented with hash tables, so adding, deleting and looking up members are all O(1).

A set can hold at most 2^32 - 1 members (4,294,967,295, i.e. more than 4 billion members per set).

| Command | Description |
|---|---|
| SADD key member1 [member2..] | Adds one or more members to the set |
| SCARD key | Gets the number of members in the set |
| SMEMBERS key | Returns all members of the set (in no particular order) |
| SISMEMBER key member | Checks whether member belongs to the set (1 if it does, 0 otherwise) |
| SRANDMEMBER key [count] | Returns count random members of the set; count defaults to 1 |
| SPOP key [count] | Removes and returns count random members of the set; count defaults to 1 |
| SMOVE source destination member | Moves member from the source set to the destination set |
| SREM key member1 [member2..] | Removes one or more members from the set |
| SDIFF key1 [key2..] | Returns the difference key1 - key2 - ... |
| SDIFFSTORE destination key1 [key2..] | Like SDIFF, but stores the result in destination, overwriting it if it already exists |
| SINTER key1 [key2..] | Returns the intersection of all the given sets |
| SINTERSTORE destination key1 [key2..] | Like SINTER, but stores the result in destination, overwriting it |
| SUNION key1 [key2..] | Returns the union of all the given sets |
| SUNIONSTORE destination key1 [key2..] | Like SUNION, but stores the result in destination, overwriting it |
| SSCAN key cursor [MATCH pattern] [COUNT count] | Iterates over the set's members one batch at a time; useful for very large sets |

---------------SADD--SCARD--SMEMBERS--SISMEMBER--------------------

127.0.0.1:6379> SADD myset m1 m2 m3 m4 # Add members to myset m1~m4

(integer) 4

127.0.0.1:6379> SCARD myset # Gets the number of members of the collection

(integer) 4

127.0.0.1:6379> smembers myset # Get all members in the collection

1) "m4"

2) "m3"

3) "m2"

4) "m1"

127.0.0.1:6379> SISMEMBER myset m5 # Query whether m5 is a member of myset

(integer) 0 # No, return 0

127.0.0.1:6379> SISMEMBER myset m2

(integer) 1 # Yes, return 1

127.0.0.1:6379> SISMEMBER myset m3

(integer) 1

---------------------SRANDMEMBER--SPOP----------------------------------

127.0.0.1:6379> SRANDMEMBER myset 3 # Random return of 3 members

1) "m2"

2) "m3"

3) "m4"

127.0.0.1:6379> SRANDMEMBER myset # Return 1 member randomly

"m3"

127.0.0.1:6379> SPOP myset 2 # Randomly remove and return 2 members

1) "m1"

2) "m4"

# Restore set to {m1,m2,m3,m4}

---------------------SMOVE--SREM----------------------------------------

127.0.0.1:6379> SMOVE myset newset m3 # Move m3 members in myset to newset set

(integer) 1

127.0.0.1:6379> SMEMBERS myset

1) "m4"

2) "m2"

3) "m1"

127.0.0.1:6379> SMEMBERS newset

1) "m3"

127.0.0.1:6379> SREM newset m3 # Remove m3 element from newset

(integer) 1

127.0.0.1:6379> SMEMBERS newset

(empty list or set)

# The following are multi-set operations. If only one key is given, the command simply returns the members of that set

# setx=>{m1,m2,m4,m6}, sety=>{m2,m5,m6}, setz=>{m1,m3,m6}

-----------------------------SDIFF------------------------------------

127.0.0.1:6379> SDIFF setx sety setz # Equivalent to setx - sety - setz

1) "m4"

127.0.0.1:6379> SDIFF setx sety # setx - sety

1) "m4"

2) "m1"

127.0.0.1:6379> SDIFF sety setx # sety - setx

1) "m5"

-------------------------SINTER---------------------------------------

# Common follows (intersection)

127.0.0.1:6379> SINTER setx sety setz # Find the intersection of setx, sety and setz

1) "m6"

127.0.0.1:6379> SINTER setx sety # Find the intersection of setx and sety

1) "m2"

2) "m6"

-------------------------SUNION---------------------------------------

127.0.0.1:6379> SUNION setx sety setz # Union of setx sety setz

1) "m4"

2) "m6"

3) "m3"

4) "m2"

5) "m1"

6) "m5"

127.0.0.1:6379> SUNION setx sety # Union of setx and sety

1) "m4"

2) "m6"

3) "m2"

4) "m1"

5) "m5"
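The same three-set example can be checked with Python's built-in set operators. The helper names below are hypothetical and simply mirror the command names; with a single argument each one returns that set unchanged, matching the single-key behaviour.

```python
from functools import reduce
from typing import Set


def sdiff(first: Set[str], *others: Set[str]) -> Set[str]:
    """SDIFF: members of the first set that appear in none of the others."""
    return reduce(lambda acc, s: acc - s, others, set(first))


def sinter(first: Set[str], *others: Set[str]) -> Set[str]:
    """SINTER: members present in every set (e.g. mutual follows)."""
    return reduce(lambda acc, s: acc & s, others, set(first))


def sunion(first: Set[str], *others: Set[str]) -> Set[str]:
    """SUNION: members present in at least one set."""
    return reduce(lambda acc, s: acc | s, others, set(first))


setx = {"m1", "m2", "m4", "m6"}
sety = {"m2", "m5", "m6"}
setz = {"m1", "m3", "m6"}
print(sorted(sdiff(setx, sety, setz)))   # ['m4']
print(sorted(sinter(setx, sety)))        # ['m2', 'm6']
print(sorted(sunion(setx, sety, setz)))  # ['m1', 'm2', 'm3', 'm4', 'm5', 'm6']
```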

Hash

A Redis hash is a map between string fields and string values, which makes it especially suitable for storing objects.

A Set is like a simplified Hash that keeps only the keys and fills the values with a default placeholder. A Hash can represent an object, with the object's attributes stored as fields in the hash.

| Command | Description |
|---|---|
| HSET key field value | Sets field in hash key to value. Setting an existing field overwrites it and returns 0 |
| HMSET key field1 value1 [field2 value2..] | Sets multiple field-value pairs in hash key at once |
| HSETNX key field value | Sets the field only if it does not exist yet |
| HEXISTS key field | Checks whether the field exists in hash key |
| HGET key field | Gets the value of the specified field |
| HMGET key field1 [field2..] | Gets the values of all the given fields |
| HGETALL key | Gets all fields and values in hash key |
| HKEYS key | Gets all fields in hash key |
| HLEN key | Gets the number of fields in the hash |
| HVALS key | Gets all values in the hash |
| HDEL key field1 [field2..] | Deletes one or more fields from hash key |
| HINCRBY key field n | Adds n to the integer value of the field and returns the new value; only works on integer values |
| HINCRBYFLOAT key field n | Adds n to the floating-point value of the field and returns the new value |
| HSCAN key cursor [MATCH pattern] [COUNT count] | Iterates over the field-value pairs in the hash |

------------------------HSET--HMSET--HSETNX----------------

127.0.0.1:6379> HSET studentx name sakura # Treat the studentx hash as an object and set name to sakura

(integer) 1

127.0.0.1:6379> HSET studentx name gyc # Setting an existing field overwrites it and returns 0

(integer) 0

127.0.0.1:6379> HSET studentx age 20 # Set the age of studentx to 20

(integer) 1

127.0.0.1:6379> HMSET studentx sex 1 tel 15623667886 # Set sex to 1 and tel to 15623667886

OK

127.0.0.1:6379> HSETNX studentx name gyc # HSETNX on an existing field

(integer) 0 # Fails

127.0.0.1:6379> HSETNX studentx email 12345@qq.com

(integer) 1 # Succeeds

----------------------HEXISTS--------------------------------

127.0.0.1:6379> HEXISTS studentx name # Does the name field exist in studentx?

(integer) 1 # Exists

127.0.0.1:6379> HEXISTS studentx addr

(integer) 0 # Does not exist

-------------------HGET--HMGET--HGETALL-----------

127.0.0.1:6379> HGET studentx name # Get the value of the name field in studentx

"gyc"

127.0.0.1:6379> HMGET studentx name age tel # Get the values of the name, age and tel fields in studentx

1) "gyc"

2) "20"

3) "15623667886"

127.0.0.1:6379> HGETALL studentx # Get all fields and their values in studentx

1) "name"

2) "gyc"

3) "age"

4) "20"

5) "sex"

6) "1"

7) "tel"

8) "15623667886"

9) "email"

10) "12345@qq.com"

--------------------HKEYS--HLEN--HVALS--------------

127.0.0.1:6379> HKEYS studentx # View all fields in studentx

1) "name"

2) "age"

3) "sex"

4) "tel"

5) "email"

127.0.0.1:6379> HLEN studentx # View the number of fields in studentx

(integer) 5

127.0.0.1:6379> HVALS studentx # View all values in studentx

1) "gyc"

2) "20"

3) "1"

4) "15623667886"

5) "12345@qq.com"

-------------------------HDEL--------------------------

127.0.0.1:6379> HDEL studentx sex tel # Delete the sex and tel fields in studentx

(integer) 2

127.0.0.1:6379> HKEYS studentx

1) "name"

2) "age"

3) "email"

-------------HINCRBY--HINCRBYFLOAT------------------------

127.0.0.1:6379> HINCRBY studentx age 1 # Increment the age field of studentx by 1

(integer) 21

127.0.0.1:6379> HINCRBY studentx name 1 # Not allowed on non-integer fields

(error) ERR hash value is not an integer

127.0.0.1:6379> HINCRBYFLOAT studentx weight 0.6 # Increase the weight field by 0.6 (the result implies weight already held 90.2; that step is not shown)

"90.8"

Hashes suit data that changes frequently, especially user information such as names and ages. A Hash is better suited to storing objects, while a String is better suited to storing plain strings.
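One way to see why a Hash suits frequently changing objects: with a String you read, parse and rewrite the whole object to change one attribute, while a Hash lets you touch a single field. The toy Python comparison below uses dicts in place of Redis; the `user:1` key and the JSON encoding are illustrative assumptions, not something Redis requires.

```python
import json
from typing import Dict

# Option 1: the whole object serialized into one String value (like SET user:1 '{...}').
string_store: Dict[str, str] = {"user:1": json.dumps({"name": "gyc", "age": 20})}

# Option 2: the object stored as a Hash, one field per attribute (like HSET user:1 name gyc age 20).
hash_store: Dict[str, Dict[str, str]] = {"user:1": {"name": "gyc", "age": "20"}}


def bump_age_in_string(store: Dict[str, str], key: str) -> None:
    obj = json.loads(store[key])  # read and parse the whole object...
    obj["age"] += 1
    store[key] = json.dumps(obj)  # ...then write the whole object back


def hincrby(store: Dict[str, Dict[str, str]], key: str, field: str, n: int) -> int:
    fields = store.setdefault(key, {})
    # int() raises ValueError on non-integer text, much like Redis's
    # "hash value is not an integer" error; only this one field is touched.
    value = int(fields.get(field, "0")) + n
    fields[field] = str(value)
    return value


bump_age_in_string(string_store, "user:1")
hincrby(hash_store, "user:1", "age", 1)
print(json.loads(string_store["user:1"])["age"], hash_store["user:1"]["age"])  # 21 21
```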

Zset (ordered set)

Like a set, but each element is associated with a score of type double. Redis sorts the members of the set by score, from small to large.

Members with the same score are sorted in lexicographic (dictionary) order.

Members of an ordered set are unique, but scores can repeat.
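The ordering rules above are ascending score, ties broken lexicographically, and unique members. They can be modeled with a `TreeSet` plus a score map. This is a hypothetical plain-Java sketch (class `SortedSetSketch`), not how Redis implements zsets; larger zsets in Redis use a skip list plus a hash table. Java compares strings by UTF-16 code unit, which matches Redis's byte order only for ASCII members like the ones used here.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeSet;

// Hypothetical in-memory model of one sorted set; method names mirror ZADD/ZINCRBY/ZRANK/ZRANGE.
class SortedSetSketch {
    private final Map<String, Double> scores = new HashMap<>();
    // Ascending by score; equal scores fall back to lexicographic member order
    private final TreeSet<String> ordered = new TreeSet<>(
            Comparator.comparingDouble((String member) -> scores.get(member))
                      .thenComparing(Comparator.naturalOrder()));

    // Returns 1 if the member is new, 0 if only its score was updated (members stay unique)
    int zadd(double score, String member) {
        boolean isNew = !scores.containsKey(member);
        if (!isNew) {
            ordered.remove(member); // remove while the old score is still in the map
        }
        scores.put(member, score);
        ordered.add(member);
        return isNew ? 1 : 0;
    }

    double zincrby(double increment, String member) {
        double updated = scores.getOrDefault(member, 0.0) + increment;
        zadd(updated, member);
        return updated;
    }

    // 0-based rank in ascending order, or -1 if the member does not exist
    int zrank(String member) {
        return scores.containsKey(member) ? ordered.headSet(member).size() : -1;
    }

    // Inclusive index range; negative indexes count from the end, as in ZRANGE key 0 -1
    List<String> zrange(int start, int stop) {
        List<String> all = new ArrayList<>(ordered);
        int n = all.size();
        if (start < 0) start = Math.max(n + start, 0);
        if (stop < 0) stop = n + stop;
        if (stop >= n) stop = n - 1;
        if (start > stop) return new ArrayList<>();
        return new ArrayList<>(all.subList(start, stop + 1));
    }

    public static void main(String[] args) {
        SortedSetSketch myzset = new SortedSetSketch();
        myzset.zadd(1, "m1");
        myzset.zadd(2, "m2");
        myzset.zadd(3, "m3");
        myzset.zincrby(5, "m2");                  // m2 now has score 7
        System.out.println(myzset.zrange(0, -1)); // [m1, m3, m2]
        System.out.println(myzset.zrank("m2"));   // 2
    }
}
```

The usage in `main` replays the ZADD/ZINCRBY/ZRANGE transcript that follows, so the results can be compared line by line.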

| Command | Description |
|---|---|
| ZADD key score1 member1 [score2 member2 ...] | Add one or more members to an ordered set, or update the scores of existing members |
| ZCARD key | Get the number of members in an ordered set |
| ZCOUNT key min max | Count the members whose scores fall within [min, max] |
| ZINCRBY key increment member | Add increment to the score of the specified member |
| ZSCORE key member | Return the score of a member |
| ZRANK key member | Return the rank (0-based index) of a member, with scores ordered from low to high |
| ZRANGE key start stop | Return the members in the given index range, with scores ordered from low to high |
| ZRANGEBYLEX key min max | Return the members in the given lexicographic range (meaningful when all members have the same score) |
| ZRANGEBYSCORE key min max | Return the members whose scores fall within [min, max]; -inf and +inf denote the minimum and maximum, and prefixing a bound with ( makes it exclusive |
| ZLEXCOUNT key min max | Count the members in the given lexicographic range |
| ZREM key member1 [member2 ...] | Remove one or more members from an ordered set |
| ZREMRANGEBYLEX key min max | Remove all members in the given lexicographic range |
| ZREMRANGEBYRANK key start stop | Remove all members in the given rank range |
| ZREMRANGEBYSCORE key min max | Remove all members in the given score range |
| ZREVRANGE key start stop | Return the members in the given index range, with scores ordered from high to low |
| ZREVRANGEBYSCORE key max min | Return the members within the given score range, with scores ordered from high to low |
| ZREVRANGEBYLEX key max min | Return the members in the given lexicographic range, in reverse lexicographic order |
| ZREVRANK key member | Return the rank of a member, with scores ordered from high to low |
| ZINTERSTORE destination numkeys key1 [key2 ...] | Compute the intersection of the given ordered sets and store it in destination; numkeys is the number of input sets, and by default a member's scores are summed |
| ZUNIONSTORE destination numkeys key1 [key2 ...] | Compute the union of the given ordered sets and store it in destination |
| ZSCAN key cursor [MATCH pattern] [COUNT count] | Incrementally iterate over the members of an ordered set and their scores |

-------------------ZADD--ZCARD--ZCOUNT--------------

127.0.0.1:6379> ZADD myzset 1 m1 2 m2 3 m3 # Add members m1 (score 1), m2 (score 2) and m3 (score 3) to the ordered set myzset

(integer) 3

127.0.0.1:6379> ZCARD myzset # Get the number of members in the ordered set

(integer) 3

127.0.0.1:6379> ZCOUNT myzset 0 1 # Get the number of members whose score is in the [0,1] range

(integer) 1

127.0.0.1:6379> ZCOUNT myzset 0 2

(integer) 2

----------------ZINCRBY--ZSCORE--------------------------

127.0.0.1:6379> ZINCRBY myzset 5 m2 # Increase the score of member m2 by 5

"7"

127.0.0.1:6379> ZSCORE myzset m1 # Get the score of member m1

"1"

127.0.0.1:6379> ZSCORE myzset m2

"7"

--------------ZRANK--ZRANGE-----------------------------------

127.0.0.1:6379> ZRANK myzset m1 # Get the rank (0-based index) of m1 by ascending score; members with equal scores are ordered lexicographically

(integer) 0

127.0.0.1:6379> ZRANK myzset m2

(integer) 2

127.0.0.1:6379> ZRANGE myzset 0 1 # Get members with indexes between 0 and 1

1) "m1"

2) "m3"

127.0.0.1:6379> ZRANGE myzset 0 -1 # Get all members

1) "m1"

2) "m3"

3) "m2"

# testset => {abc, add, amaze, apple, back, java, redis}, all with score 0

------------------ZRANGEBYLEX---------------------------------

127.0.0.1:6379> ZRANGEBYLEX testset - + # Return all members

1) "abc"

2) "add"

3) "amaze"

4) "apple"

5) "back"

6) "java"

7) "redis"

127.0.0.1:6379> ZRANGEBYLEX testset - + LIMIT 0 3 # Paginate: return the results at offsets 0, 1 and 2

1) "abc"

2) "add"

3) "amaze"

127.0.0.1:6379> ZRANGEBYLEX testset - + LIMIT 3 3 # Display 3, 4 and 5 records

1) "apple"

2) "back"

3) "java"

127.0.0.1:6379> ZRANGEBYLEX testset - [apple # Show the members in the (-, apple] interval

1) "abc"

2) "add"

3) "amaze"

4) "apple"

127.0.0.1:6379> ZRANGEBYLEX testset [apple [java # Displays the members of the [apple,java] dictionary section

1) "apple"

2) "back"

3) "java"

-----------------------ZRANGEBYSCORE---------------------

127.0.0.1:6379> ZRANGEBYSCORE myzset 1 10 # Returns members whose score is between [1,10]

1) "m1"

2) "m3"

3) "m2"

127.0.0.1:6379> ZRANGEBYSCORE myzset 1 5

1) "m1"

2) "m3"

--------------------ZLEXCOUNT-----------------------------

127.0.0.1:6379> ZLEXCOUNT testset - +

(integer) 7

127.0.0.1:6379> ZLEXCOUNT testset [apple [java

(integer) 3

------------------ZREM--ZREMRANGEBYLEX--ZREMRANGEBYRANK--ZREMRANGEBYSCORE--------------------------------

127.0.0.1:6379> ZREM testset abc # Remove member abc

(integer) 1

127.0.0.1:6379> ZREMRANGEBYLEX testset [apple [java # Remove all members in the dictionary interval [apple,java]

(integer) 3

127.0.0.1:6379> ZREMRANGEBYRANK testset 0 1 # Remove all members ranking 0 ~ 1

(integer) 2

127.0.0.1:6379> ZREMRANGEBYSCORE myzset 0 3 # Remove member with score in [0,3]

(integer) 2

# (data reset) testset => {abc, add, amaze, apple, back, java, redis}, all with score 0

# myzset=> {(m1,1),(m2,2),(m3,3),(m4,4),(m7,7),(m9,9)}

----------------ZREVRANGE--ZREVRANGEBYSCORE--ZREVRANGEBYLEX-----------

127.0.0.1:6379> ZREVRANGE myzset 0 3 # Sort by score from high to low, then return indexes 0 to 3 of the result

1) "m9"

2) "m7"

3) "m4"

4) "m3"

127.0.0.1:6379> ZREVRANGE myzset 2 4 # Returns 2 ~ 4 of the index of the sorting result

1) "m4"

2) "m3"

3) "m2"

127.0.0.1:6379> ZREVRANGEBYSCORE myzset 6 2 # Returns the members with scores between [2,6] in the set in descending order of score

1) "m4"

2) "m3"

3) "m2"

127.0.0.1:6379> ZREVRANGEBYLEX testset [java (add # Returns the members of the (add,java] dictionary interval in the set in reverse dictionary order

1) "java"

2) "back"

3) "apple"

4) "amaze"

-------------------------ZREVRANK------------------------------

127.0.0.1:6379> ZREVRANK myzset m7 # Returns the member m7 index in descending order of score

(integer) 1

127.0.0.1:6379> ZREVRANK myzset m2

(integer) 4

# mathscore => {(xm, 90), (xh, 95), (xg, 87)}: math scores of Xiao Ming, Xiao Hong and Xiao Gang

# enscore => {(xm, 70), (xh, 93), (xg, 90)}: English scores of Xiao Ming, Xiao Hong and Xiao Gang

-------------------ZINTERSTORE--ZUNIONSTORE-----------------------------------

127.0.0.1:6379> ZINTERSTORE sumscore 2 mathscore enscore # Intersect mathscore and enscore and store the result in sumscore

(integer) 3

127.0.0.1:6379> ZRANGE sumscore 0 -1 withscores # By default, each member's score is the sum of its scores in the input sets

1) "xm"

2) "160"

3) "xg"

4) "177"

5) "xh"

6) "188"

127.0.0.1:6379> ZUNIONSTORE lowestscore 2 mathscore enscore AGGREGATE MIN # Take the minimum member score of two sets as the result

(integer) 3

127.0.0.1:6379> ZRANGE lowestscore 0 -1 withscores

1) "xm"

2) "70"

3) "xg"

4) "87"

5) "xh"

6) "93"
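The way ZINTERSTORE and ZUNIONSTORE combine scores can be reproduced in a few lines of plain Java. This is a hypothetical sketch (class `ZsetAggregateSketch`) of the AGGREGATE SUM/MIN/MAX rules only. The WEIGHTS option is left out, and nothing is written to Redis. The sample data is the mathscore/enscore example above.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.DoubleBinaryOperator;

// Hypothetical sketch of how ZINTERSTORE / ZUNIONSTORE combine scores (AGGREGATE SUM|MIN|MAX), weights omitted.
class ZsetAggregateSketch {
    static final DoubleBinaryOperator SUM = Double::sum;
    static final DoubleBinaryOperator MIN = Math::min;
    static final DoubleBinaryOperator MAX = Math::max;

    // Intersection: keep only members present in both sets
    static Map<String, Double> inter(Map<String, Double> a, Map<String, Double> b, DoubleBinaryOperator aggregate) {
        Map<String, Double> out = new HashMap<>();
        for (Map.Entry<String, Double> e : a.entrySet()) {
            Double other = b.get(e.getKey());
            if (other != null) {
                out.put(e.getKey(), aggregate.applyAsDouble(e.getValue(), other));
            }
        }
        return out;
    }

    // Union: keep members from either set; members in both get the aggregated score
    static Map<String, Double> union(Map<String, Double> a, Map<String, Double> b, DoubleBinaryOperator aggregate) {
        Map<String, Double> out = new HashMap<>(a);
        for (Map.Entry<String, Double> e : b.entrySet()) {
            out.merge(e.getKey(), e.getValue(), (x, y) -> aggregate.applyAsDouble(x, y));
        }
        return out;
    }

    public static void main(String[] args) {
        Map<String, Double> math = Map.of("xm", 90.0, "xh", 95.0, "xg", 87.0);
        Map<String, Double> en = Map.of("xm", 70.0, "xh", 93.0, "xg", 90.0);
        System.out.println(new TreeMap<>(inter(math, en, SUM))); // {xg=177.0, xh=188.0, xm=160.0}
        System.out.println(new TreeMap<>(union(math, en, MIN))); // {xg=87.0, xh=93.0, xm=70.0}
    }
}
```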

Application cases:

- Store sorted data such as class grade tables and salary tables

- Weighted messages: ordinary messages get weight 1 and important messages weight 2, and the weight decides the order

- Leaderboards: take the Top N
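A leaderboard boils down to ZINCRBY to add points and ZREVRANGE key 0 N-1 to read the Top N. The plain-Java sketch below models those two operations. The class `LeaderboardSketch` and the player names are hypothetical, and no Redis client is involved. Ties are broken in reverse lexicographic order, which is how ZREVRANGE orders members with equal scores.

```java
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical leaderboard model: ZINCRBY to add points, ZREVRANGE key 0 n-1 for the Top N.
class LeaderboardSketch {
    private final Map<String, Double> scores = new HashMap<>();

    // Like ZINCRBY: creates the player with the given points if absent
    double addPoints(String player, double points) {
        return scores.merge(player, points, Double::sum);
    }

    // Like ZREVRANGE: highest score first; equal scores in reverse lexicographic order
    List<String> topN(int n) {
        Comparator<Map.Entry<String, Double>> ascending =
                Map.Entry.<String, Double>comparingByValue()
                         .thenComparing(Map.Entry.<String, Double>comparingByKey());
        return scores.entrySet().stream()
                .sorted(ascending.reversed())
                .limit(n)
                .map(Map.Entry::getKey)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        LeaderboardSketch board = new LeaderboardSketch();
        board.addPoints("alice", 30);
        board.addPoints("bob", 20);
        board.addPoints("carol", 30);
        board.addPoints("bob", 15);        // bob now has 35
        System.out.println(board.topN(2)); // [bob, carol]
    }
}
```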

4, Three special data types

Geospatial (geographical location)

Geographic locations are stored as longitude/latitude coordinates in an ordered set (zset), so zset commands can also be used on them.

| Command | Description |
|---|---|
| GEOADD key longitude latitude member [longitude latitude member ...] | Store members with the given longitude and latitude in an ordered set |
| GEOPOS key member [member ...] | Get the coordinates of one or more members |
| GEODIST key member1 member2 [unit] | Return the distance between two members; the default unit is meters |
| GEORADIUS key longitude latitude radius m\|km\|mi\|ft [WITHCOORD] [WITHDIST] [WITHHASH] [COUNT count] | With the given longitude and latitude as the center, return the members of the set whose distance from the center does not exceed the given radius |
| GEORADIUSBYMEMBER key member radius ... | Same as GEORADIUS, except that the center is an existing member of the set rather than a longitude and latitude |
| GEOHASH key member1 [member2 ...] | Return the Geohash string of one or more members (internally, Redis stores positions as 52-bit integer geohashes) |

Valid longitude and latitude

- Valid longitudes range from -180 to 180 degrees.

- Valid latitudes range from -85.05112878 to 85.05112878 degrees.

The unit parameter must be one of the following:

- m for meters.

- km for kilometers.

- mi for miles.

- ft for feet.

About the parameters of GEORADIUS

GEORADIUS can be used to implement a "people nearby" feature.

WITHCOORD: also return the coordinates of each member

WITHDIST: also return the distance, in the same unit as the radius

COUNT n: return only the first n results (sorted by increasing distance)

----------------georadius---------------------

127.0.0.1:6379> GEORADIUS china:city 120 30 500 km withcoord withdist # Query the members within the 500km radius of longitude and latitude (120,30) coordinates

1) 1) "hangzhou"

2) "29.4151"

3) 1) "120.20000249147415"

2) "30.199999888333501"

2) 1) "shanghai"

2) "205.3611"

3) 1) "121.40000134706497"

2) "31.400000253193539"

------------geohash---------------------------

127.0.0.1:6379> geohash china:city yichang shanghai # Get the geohash representation of the longitude and latitude coordinates of the member

1) "wmrjwbr5250"

2) "wtw6ds0y300"
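GEODIST and GEORADIUS measure distances on a sphere. The plain-Java sketch below computes such a distance with the haversine formula, assuming the Earth radius of 6372797.560856 m that Redis's geo code uses; check your version's source if exact agreement matters. The class name and methods are hypothetical. The sketch also applies the coordinate limits and the unit list above. For the stored hangzhou coordinates from the GEORADIUS output, it gives about 29.415 km from (120, 30), in line with the 29.4151 reported there.

```java
import java.util.Map;

// Hypothetical sketch of a GEODIST-style great-circle distance (haversine), not Redis code.
class GeoDistanceSketch {
    // Earth radius in meters used by Redis's geo helpers (assumption stated in the text)
    static final double EARTH_RADIUS_M = 6372797.560856;

    // Conversion factors to meters for the m|km|mi|ft units
    static final Map<String, Double> UNIT_TO_METERS =
            Map.of("m", 1.0, "km", 1000.0, "mi", 1609.34, "ft", 0.3048);

    static void checkCoordinates(double lon, double lat) {
        if (lon < -180 || lon > 180 || lat < -85.05112878 || lat > 85.05112878) {
            throw new IllegalArgumentException("invalid longitude,latitude pair " + lon + "," + lat);
        }
    }

    // Haversine distance between two (longitude, latitude) points, returned in the given unit
    static double distance(double lon1, double lat1, double lon2, double lat2, String unit) {
        checkCoordinates(lon1, lat1);
        checkCoordinates(lon2, lat2);
        Double factor = UNIT_TO_METERS.get(unit);
        if (factor == null) {
            throw new IllegalArgumentException("unsupported unit: " + unit);
        }
        double phi1 = Math.toRadians(lat1);
        double phi2 = Math.toRadians(lat2);
        double u = Math.sin(Math.toRadians(lat2 - lat1) / 2);
        double v = Math.sin(Math.toRadians(lon2 - lon1) / 2);
        double meters = 2 * EARTH_RADIUS_M * Math.asin(Math.sqrt(u * u + Math.cos(phi1) * Math.cos(phi2) * v * v));
        return meters / factor;
    }

    public static void main(String[] args) {
        // Center (120, 30) and hangzhou's stored coordinates from the GEORADIUS output
        double km = distance(120, 30, 120.20000249147415, 30.199999888333501, "km");
        System.out.printf("%.4f km%n", km); // roughly 29.415 km
    }
}
```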

Hyperloglog (cardinality Statistics)

Redis HyperLogLog is an algorithm used for cardinality statistics. The advantage of HyperLogLog is that when the number or volume of input elements is very, very large, the space required to calculate the cardinality is always fixed and very small.

Each HyperLogLog key needs only 12 KB of memory to estimate the cardinality of close to 2^64 distinct elements.

Because HyperLogLog only computes the cardinality from the input elements and does not store the elements themselves, it cannot return the input elements the way a set can.

Under the hood, a HyperLogLog is stored as a String.

What is cardinality?

The number of distinct elements in a dataset. For example, the cardinality of {1, 3, 5, 7, 5, 7, 8} is 5.

Application scenario:

Unique visitors (UV) of a web page: a user who visits many times still counts as one person.

The traditional approach stores every user ID and checks each visit against them. With many users this wastes a lot of space, although all we need is a count. HyperLogLog does the job in very little space.

| Command | Description |
|---|---|
| PFADD key element1 [element2 ...] | Add the given elements to the HyperLogLog |
| PFCOUNT key [key ...] | Return the estimated cardinality of the given HyperLogLog(s) |
| PFMERGE destkey sourcekey [sourcekey ...] | Merge multiple HyperLogLogs into one |

----------PFADD--PFCOUNT---------------------

127.0.0.1:6379> PFADD myelemx a b c d e f g h i j k # Add elements

(integer) 1

127.0.0.1:6379> type myelemx # A HyperLogLog is stored as a String underneath

string

127.0.0.1:6379> PFCOUNT myelemx # Estimate the cardinality of myelemx

(integer) 11

127.0.0.1:6379> PFADD myelemy i j k z m c b v p q s

(integer) 1

127.0.0.1:6379> PFCOUNT myelemy

(integer) 11

----------------PFMERGE-----------------------

127.0.0.1:6379> PFMERGE myelemz myelemx myelemy # Merge myelemx and myelemy into myelemz

OK

127.0.0.1:6379> PFCOUNT myelemz # Estimate the cardinality

(integer) 17

If a small error is acceptable, use HyperLogLog (Redis's implementation has a standard error of 0.81%)!

If no error is acceptable, use a set or your own data type!
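To see how a fixed, small amount of memory can estimate huge cardinalities, here is a simplified HyperLogLog in plain Java. It is a sketch, not Redis's implementation. It keeps Redis's 2^14 registers, but stores each register in a full byte (16 KB rather than Redis's packed 6-bit registers). It also uses an FNV-1a hash with a MurmurHash3-style finalizer instead of Redis's hash, and applies the original HyperLogLog estimator with linear counting for small sets. The class name `SimpleHyperLogLog` is hypothetical. Merging takes the register-wise maximum, which is the idea behind PFMERGE.

```java
import java.nio.charset.StandardCharsets;

// Simplified HyperLogLog sketch: 2^14 registers, FNV-1a + fmix64 hashing,
// original HLL estimator with linear counting for small cardinalities.
class SimpleHyperLogLog {
    private static final int P = 14;      // bits used to pick a register
    private static final int M = 1 << P;  // 16384 registers
    private final byte[] registers = new byte[M];

    // 64-bit FNV-1a hash, finished with the MurmurHash3 fmix64 mixer to spread the bits
    private static long hash(String s) {
        long h = 0xcbf29ce484222325L;
        for (byte b : s.getBytes(StandardCharsets.UTF_8)) {
            h ^= (b & 0xff);
            h *= 0x100000001b3L;
        }
        h ^= h >>> 33;
        h *= 0xff51afd7ed558ccdL;
        h ^= h >>> 33;
        h *= 0xc4ceb9fe1a85ec53L;
        h ^= h >>> 33;
        return h;
    }

    // PFADD-like: returns true if an internal register changed
    boolean add(String element) {
        long h = hash(element);
        int index = (int) (h >>> (64 - P));                               // top 14 bits pick the register
        long rest = h << P;                                               // remaining 50 bits
        int rank = Math.min(Long.numberOfLeadingZeros(rest), 64 - P) + 1; // position of the first 1-bit
        if (rank > registers[index]) {
            registers[index] = (byte) rank;
            return true;
        }
        return false;
    }

    // PFCOUNT-like: estimated number of distinct elements added so far
    long count() {
        double sum = 0;
        int zeroRegisters = 0;
        for (byte r : registers) {
            sum += 1.0 / (1L << r);
            if (r == 0) zeroRegisters++;
        }
        double alpha = 0.7213 / (1 + 1.079 / M);
        double estimate = alpha * M * (double) M / sum;
        if (estimate <= 2.5 * M && zeroRegisters > 0) {
            estimate = M * Math.log((double) M / zeroRegisters); // linear counting for small sets
        }
        return Math.round(estimate);
    }

    // PFMERGE-like: register-wise maximum, so the result estimates the union
    void merge(SimpleHyperLogLog other) {
        for (int i = 0; i < M; i++) {
            registers[i] = (byte) Math.max(registers[i], other.registers[i]);
        }
    }

    public static void main(String[] args) {
        SimpleHyperLogLog a = new SimpleHyperLogLog();
        SimpleHyperLogLog b = new SimpleHyperLogLog();
        for (int i = 0; i < 120_000; i++) a.add("user-" + i);
        for (int i = 80_000; i < 200_000; i++) b.add("user-" + i);
        System.out.println(a.count()); // close to 120000
        a.merge(b);
        System.out.println(a.count()); // close to 200000 (the union), not 240000
    }
}
```

The memory use never grows with the number of elements added; only the estimate's relative error (about 1% here) is traded for the space savings.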

Bitmaps (bitmaps)

Bitmaps store information in bits: each bit has only two states, 0 and 1.

A bitmap is a sequence of consecutive binary digits (0 or 1), and the position of each bit is its offset. Bitwise operations such as AND, OR, XOR and NOT can be performed on bitmaps.

Application scenarios

Check-in statistics and other two-state statistics (for example, active or inactive users)

| Command | Description |
|---|---|
| SETBIT key offset value | Set the bit at offset in the string stored at key; returns the previous value of that bit |
| GETBIT key offset | Get the value of the bit at offset |
| BITCOUNT key [start end] | Count the bits set to 1; the optional range is given in bytes |
| BITOP operation destkey key [key ...] | Perform a bitwise operation (AND, OR, XOR, NOT) on one or more string keys and store the result in destkey |
| BITPOS key bit [start] [end] | Return the position of the first bit set to 1 or 0 in the string; start and end are byte offsets (Redis 7.0 adds a BIT option) |

------------setbit--getbit--------------

127.0.0.1:6379> setbit sign 0 1 # Set bit 0 of sign to 1

(integer) 0

127.0.0.1:6379> setbit sign 2 1 # Set bit 2 of sign to 1; bits that were never set default to 0

(integer) 0

127.0.0.1:6379> setbit sign 3 1

(integer) 0

127.0.0.1:6379> setbit sign 5 1

(integer) 0

127.0.0.1:6379> type sign

string

127.0.0.1:6379> getbit sign 2 # Get the value of bit 2

(integer) 1

127.0.0.1:6379> getbit sign 3

(integer) 1

127.0.0.1:6379> getbit sign 4 # Not set, so the default is 0

(integer) 0

-----------bitcount----------------------------

127.0.0.1:6379> BITCOUNT sign # Count the bits set to 1 in sign

(integer) 4

How bitmaps are stored

Bitmaps are stored as binary strings read from left to right: offset 0 is the most significant bit of the first byte. For example, setting bits 0, 2, 6 and 8 gives the string value \xA2\x80 (binary 10100010 10000000).
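The byte layout can be checked with a small model. The plain-Java sketch below is hypothetical (class `BitmapSketch`, not Redis code). It stores bits in a growable byte array with offset 0 as the most significant bit of the first byte, and mimics SETBIT, GETBIT and whole-string BITCOUNT. Replaying the check-in transcript above (bits 0, 2, 3 and 5) gives 4 set bits and the byte \xB4; setting bits 0, 2, 6 and 8 gives \xA2\x80.

```java
import java.util.Arrays;

// Hypothetical model of a Redis bitmap: a growable byte array where offset 0 is the
// most significant bit of the first byte, as in SETBIT/GETBIT.
class BitmapSketch {
    private byte[] bytes = new byte[0];

    // SETBIT-like: returns the previous value of the bit; the string grows with zero bytes as needed
    int setbit(int offset, int value) {
        int index = offset / 8;
        if (index >= bytes.length) {
            bytes = Arrays.copyOf(bytes, index + 1);
        }
        int mask = 0x80 >>> (offset % 8);
        int old = (bytes[index] & mask) != 0 ? 1 : 0;
        if (value == 1) {
            bytes[index] |= mask;
        } else {
            bytes[index] &= ~mask;
        }
        return old;
    }

    // GETBIT-like: bits beyond the end of the string read as 0
    int getbit(int offset) {
        int index = offset / 8;
        if (index >= bytes.length) return 0;
        return (bytes[index] & (0x80 >>> (offset % 8))) != 0 ? 1 : 0;
    }

    // BITCOUNT-like (whole string): number of bits set to 1
    int bitcount() {
        int count = 0;
        for (byte b : bytes) count += Integer.bitCount(b & 0xff);
        return count;
    }

    // Hex-escaped form of the underlying string, e.g. \xA2\x80
    String escaped() {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) sb.append(String.format("\\x%02X", b & 0xff));
        return sb.toString();
    }

    public static void main(String[] args) {
        BitmapSketch sign = new BitmapSketch();
        for (int day : new int[] {0, 2, 3, 5}) sign.setbit(day, 1); // checked in on days 0, 2, 3 and 5
        System.out.println(sign.bitcount()); // 4
        System.out.println(sign.escaped());  // \xB4 (binary 10110100)

        BitmapSketch other = new BitmapSketch();
        for (int offset : new int[] {0, 2, 6, 8}) other.setbit(offset, 1);
        System.out.println(other.escaped()); // \xA2\x80
    }
}
```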

5, Transactions

A single Redis command is atomic, but a Redis transaction does not guarantee atomicity.

The essence of a Redis transaction: a set of commands.

----------------- queue: set, set, set ... then execute -------------------

During execution, the commands of a transaction are serialized and run in order; commands from other clients cannot interrupt them. A transaction is:

- One-time: all queued commands run together

- Ordered: they run in the order they were queued

- Exclusive: nothing else runs in the middle

- Redis transactions do not have the concept of isolation levels

- A single Redis command is atomic, but a transaction is not!

Steps of a Redis transaction

- Open the transaction (MULTI)

- Queue the commands

- Execute the transaction (EXEC)

Therefore, the commands in a transaction are not executed when they are queued; they all run at once only when EXEC is issued.

127.0.0.1:6379> multi # Open a transaction

OK

127.0.0.1:6379> set k1 v1 # Queue the command

QUEUED

127.0.0.1:6379> set k2 v2 # ...

QUEUED

127.0.0.1:6379> get k1

QUEUED

127.0.0.1:6379> set k3 v3

QUEUED

127.0.0.1:6379> keys *

QUEUED

127.0.0.1:6379> exec # Execute the transaction

1) OK

2) OK

3) "v1"

4) OK

5) 1) "k3"

2) "k2"

3) "k1"

Cancel a transaction (DISCARD)

127.0.0.1:6379> multi

OK

127.0.0.1:6379> set k1 v1

QUEUED

127.0.0.1:6379> set k2 v2

QUEUED

127.0.0.1:6379> DISCARD # Abandon the transaction

OK

127.0.0.1:6379> EXEC

(error) ERR EXEC without MULTI # No transaction is currently open

127.0.0.1:6379> get k1 # The commands in the abandoned transaction were not executed

(nil)

Transaction errors

Syntax error (like a compile-time exception): none of the commands are executed

127.0.0.1:6379> multi

OK

127.0.0.1:6379> set k1 v1

QUEUED

127.0.0.1:6379> set k2 v2

QUEUED

127.0.0.1:6379> error k1 # A command with a syntax error

(error) ERR unknown command `error`, with args beginning with: `k1`, # An error is reported, but later commands can still be queued

127.0.0.1:6379> get k2

QUEUED

127.0.0.1:6379> EXEC

(error) EXECABORT Transaction discarded because of previous errors. # EXEC fails

127.0.0.1:6379> get k1

(nil) # None of the other commands were executed

Runtime error (like a runtime exception): if a queued command fails when it is executed, the other commands in the transaction still run normally; only the failing command returns an error.

127.0.0.1:6379> multi

OK

127.0.0.1:6379> set k1 v1

QUEUED

127.0.0.1:6379> set k2 v2

QUEUED

127.0.0.1:6379> INCR k1 # This command has a logical error (incrementing a non-numeric string)

QUEUED

127.0.0.1:6379> get k2

QUEUED

127.0.0.1:6379> exec

1) OK

2) OK

3) (error) ERR value is not an integer or out of range # The error is reported at run time

4) "v2" # The other commands executed normally

# Although one command in the middle failed, the commands after it still ran successfully.

# So a single Redis command is atomic, but a Redis transaction does not guarantee atomicity.
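The two error cases can be summarized in a toy model. The plain-Java sketch below is hypothetical (class `TxQueueSketch`) and supports only SET, GET and INCR. It rejects unknown commands or wrong argument counts at queue time, and that rejection makes EXEC abort the whole transaction. A command that fails only when it runs returns an error reply while the rest still execute. The real server performs more checks than this.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical toy model of MULTI/EXEC error handling; it supports only SET, GET and INCR.
class TxQueueSketch {
    private static final Map<String, Integer> ARITY = Map.of("SET", 3, "GET", 2, "INCR", 2);
    private final Map<String, String> data = new HashMap<>();
    private final List<String[]> queue = new ArrayList<>();
    private boolean inMulti = false;
    private boolean rejected = false; // a command failed the queue-time check

    void multi() {
        inMulti = true;
        rejected = false;
        queue.clear();
    }

    // Queue-time check (the "syntax error" case): unknown command or wrong argument count
    String queue(String... cmd) {
        if (!inMulti) throw new IllegalStateException("ERR not in MULTI");
        Integer arity = ARITY.get(cmd[0].toUpperCase());
        if (arity == null || arity != cmd.length) {
            rejected = true;
            return "(error) ERR unknown command or wrong number of arguments";
        }
        queue.add(cmd);
        return "QUEUED";
    }

    // EXEC: abort everything if a command was rejected while queuing; otherwise run all
    // commands in order, and a runtime error in one command does not stop the others
    List<String> exec() {
        if (!inMulti) throw new IllegalStateException("ERR EXEC without MULTI");
        inMulti = false;
        List<String[]> commands = new ArrayList<>(queue);
        queue.clear();
        if (rejected) {
            throw new IllegalStateException("EXECABORT Transaction discarded because of previous errors.");
        }
        List<String> replies = new ArrayList<>();
        for (String[] cmd : commands) replies.add(run(cmd));
        return replies;
    }

    String peek(String key) {
        return data.get(key);
    }

    private String run(String[] cmd) {
        switch (cmd[0].toUpperCase()) {
            case "SET":
                data.put(cmd[1], cmd[2]);
                return "OK";
            case "GET":
                return data.getOrDefault(cmd[1], "(nil)");
            default: // INCR
                try {
                    long next = Long.parseLong(data.getOrDefault(cmd[1], "0")) + 1;
                    data.put(cmd[1], Long.toString(next));
                    return Long.toString(next);
                } catch (NumberFormatException e) {
                    return "(error) ERR value is not an integer or out of range";
                }
        }
    }

    public static void main(String[] args) {
        TxQueueSketch tx = new TxQueueSketch();
        tx.multi();
        tx.queue("set", "k1", "v1");
        tx.queue("set", "k2", "v2");
        tx.queue("incr", "k1"); // queues fine, fails at run time
        tx.queue("get", "k2");
        System.out.println(tx.exec()); // [OK, OK, (error) ERR value is not an integer or out of range, v2]
    }
}
```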

Monitoring (WATCH)

Pessimistic lock:

- Very pessimistic: assume something can go wrong at any time, so lock before doing anything

Optimistic lock:

- Very optimistic: assume nothing will go wrong, so do not lock; when updating the data, check whether anyone modified it in the meantime

- Get the version

- Compare the version when updating

Using WATCH to monitor a key works like an optimistic lock.

Normal execution

127.0.0.1:6379> set money 100 # Set the balance to 100

OK

127.0.0.1:6379> set use 0 # Amount spent: 0

OK

127.0.0.1:6379> watch money # Watch (lock) money

OK

127.0.0.1:6379> multi

OK

127.0.0.1:6379> DECRBY money 20

QUEUED

127.0.0.1:6379> INCRBY use 20

QUEUED

127.0.0.1:6379> exec # The watched value was not modified in the meantime, so the transaction executes normally

1) (integer) 80

2) (integer) 20

Now test a concurrent modification. WATCH serves as Redis's optimistic lock (it plays the role of getting the version).

Start a second client to simulate another thread cutting in. In this run, money and use start again from 100 and 0.

Thread 1:

127.0.0.1:6379> watch money # Watch (lock) money

OK

127.0.0.1:6379> multi

OK

127.0.0.1:6379> DECRBY money 20

QUEUED

127.0.0.1:6379> INCRBY use 20

QUEUED

127.0.0.1:6379> # The transaction has not been executed yet

Thread 2 cuts in and modifies money:

127.0.0.1:6379> INCRBY money 500 # Modify money, which thread 1 is watching

(integer) 600

Return to thread 1 and execute the transaction:

127.0.0.1:6379> EXEC # Another thread modified the watched value before execution, so the transaction fails

(nil) # A nil reply means the transaction was not executed

127.0.0.1:6379> get money # Thread 2's modification took effect

"600"

127.0.0.1:6379> get use # Thread 1's transaction failed, so the value is unchanged

"0"

If the transaction fails, release the watch with UNWATCH, then WATCH again to get the latest value and retry the transaction.

Note: after EXEC, all watched keys are unwatched automatically, whether the transaction succeeded or not.
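The WATCH/retry pattern is ordinary optimistic locking. The single-threaded Java sketch below is a simulation, not Redis or Jedis code, and the class `WatchSketch` is hypothetical. Each write bumps a per-key version. "EXEC" applies the queued writes only if the watched key's version has not changed since it was read. On failure, the loop reads the latest value and tries again. With the numbers from the test above (money 100, another client adding 500), the first attempt fails and the second leaves money at 580 and use at 20.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical single-threaded model of WATCH as optimistic locking: every write bumps a
// per-key version, and "EXEC" succeeds only if the watched key's version is unchanged.
class WatchSketch {
    private final Map<String, Long> values = new HashMap<>();
    private final Map<String, Long> versions = new HashMap<>();

    long get(String key) {
        return values.getOrDefault(key, 0L);
    }

    long version(String key) {
        return versions.getOrDefault(key, 0L);
    }

    void set(String key, long value) {
        values.put(key, value);
        versions.merge(key, 1L, Long::sum);
    }

    // Like EXEC after WATCH: run the queued writes only if nobody touched the watched key
    boolean execIfUnchanged(String watchedKey, long watchedVersion, Runnable queuedWrites) {
        if (version(watchedKey) != watchedVersion) {
            return false; // the real EXEC replies (nil) here
        }
        queuedWrites.run();
        return true;
    }

    // Retry loop: WATCH, read, queue, EXEC; on failure, read the latest value and try again.
    // `interference` simulates another client writing between WATCH and EXEC on the first try.
    static int spend(WatchSketch db, long amount, Runnable interference) {
        for (int attempt = 1; ; attempt++) {
            long seenVersion = db.version("money"); // WATCH money
            long money = db.get("money");
            if (attempt == 1 && interference != null) {
                interference.run();
            }
            boolean committed = db.execIfUnchanged("money", seenVersion, () -> {
                db.set("money", money - amount);       // DECRBY money amount
                db.set("use", db.get("use") + amount); // INCRBY use amount
            });
            if (committed) {
                return attempt;
            }
        }
    }

    public static void main(String[] args) {
        WatchSketch db = new WatchSketch();
        db.set("money", 100);
        db.set("use", 0);
        int attempts = spend(db, 20, () -> db.set("money", 600)); // thread 2 cuts in once
        System.out.println(attempts + " " + db.get("money") + " " + db.get("use")); // 2 580 20
    }
}
```

In real Redis the version check and the queued writes happen atomically inside EXEC; this sketch only shows the control flow a client would follow.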

6, Jedis

Jedis lets us operate Redis from Java; it is a Java client for Redis that is officially recommended by Redis.

Import the dependencies

    <!-- Jedis client -->
    <dependency>
        <groupId>redis.clients</groupId>
        <artifactId>jedis</artifactId>
        <version>3.2.0</version>
    </dependency>
    <!-- fastjson -->
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>fastjson</artifactId>
        <version>1.2.70</version>
    </dependency>

Coding test

Connect to the database

Modify the Redis configuration file

vim /usr/local/bin/myconfig/redis.conf

Comment out the bind line, which otherwise restricts Redis to local connections

Change protected-mode to no

Allow Redis to run in the background: daemonize yes

Open port 6379

firewall-cmd --zone=public --add-port=6379/tcp --permanent

Restart the firewall service

systemctl restart firewalld.service

Configure the security group in the Alibaba Cloud server console (allow port 6379)

Restart the Redis server

[root@AlibabaECS bin]# redis-server myconfig/redis.conf

Run commands

TestPing.java

    import redis.clients.jedis.Jedis;

    public class TestPing {
        public static void main(String[] args) {
            Jedis jedis = new Jedis("192.168.xx.xxx", 6379);
            String response = jedis.ping();
            System.out.println(response); // PONG
        }
    }

Disconnect: call jedis.close() when finished

Transactions

    import com.alibaba.fastjson.JSONObject;
    import redis.clients.jedis.Jedis;
    import redis.clients.jedis.Transaction;

    public class TestTX {
        public static void main(String[] args) {
            Jedis jedis = new Jedis("39.99.xxx.xx", 6379);

            JSONObject jsonObject = new JSONObject();
            jsonObject.put("hello", "world");
            jsonObject.put("name", "kuangshen");
            String result = jsonObject.toJSONString();

            // For optimistic locking, WATCH the keys before opening the transaction, e.g.
            // jedis.watch("user1");

            // Open the transaction
            Transaction multi = jedis.multi();
            try {
                multi.set("user1", result);
                multi.set("user2", result);
                // Execute the transaction
                multi.exec();
            } catch (Exception e) {
                // Abandon the transaction
                multi.discard();
            } finally {
                System.out.println(jedis.get("user1"));
                System.out.println(jedis.get("user2"));
                // Close the connection
                jedis.close();
            }
        }
    }

7, SpringBoot integration

- Import dependency

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-data-redis</artifactId>
    </dependency>

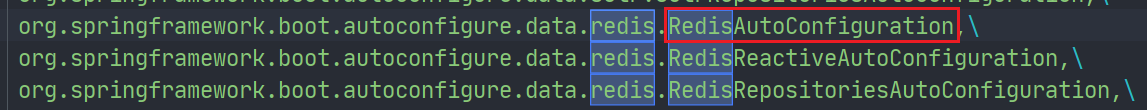

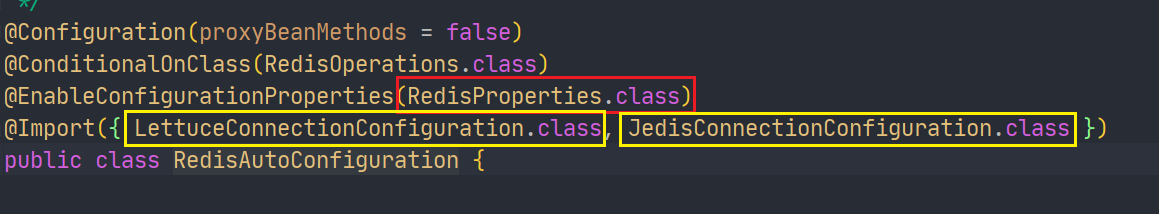

Since Spring Boot 2.x, the default client has changed from Jedis to Lettuce.

Jedis: connects directly to the server; a Jedis instance is not thread-safe when shared by multiple threads, so use a JedisPool connection pool to avoid this. It is closer to the BIO model.

Lettuce: built on Netty; an instance can be shared safely by multiple threads, so there is no thread-safety problem and fewer threads are needed. It is closer to the NIO model.

From how Spring Boot auto-configuration works: every integrated component has an auto-configuration class named xxxAutoConfiguration, whose fully qualified name is listed in spring.factories. Redis is no exception.

It also has a matching properties class, RedisProperties.

As mentioned, since Spring Boot 2.x Lettuce replaces Jedis by default. Now let's verify this.

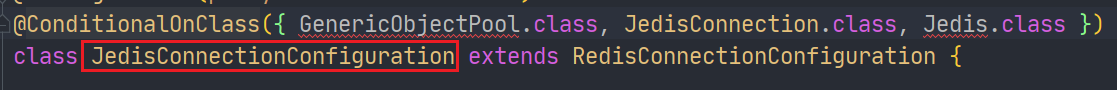

Look at Jedis first:

The two classes named in the @ConditionalOnClass annotation are not on the classpath by default, so the Jedis configuration does not take effect.

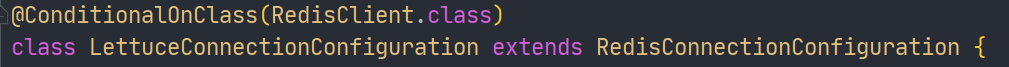

Then look at Lettuce:

Its conditions are all satisfied, so it takes effect.

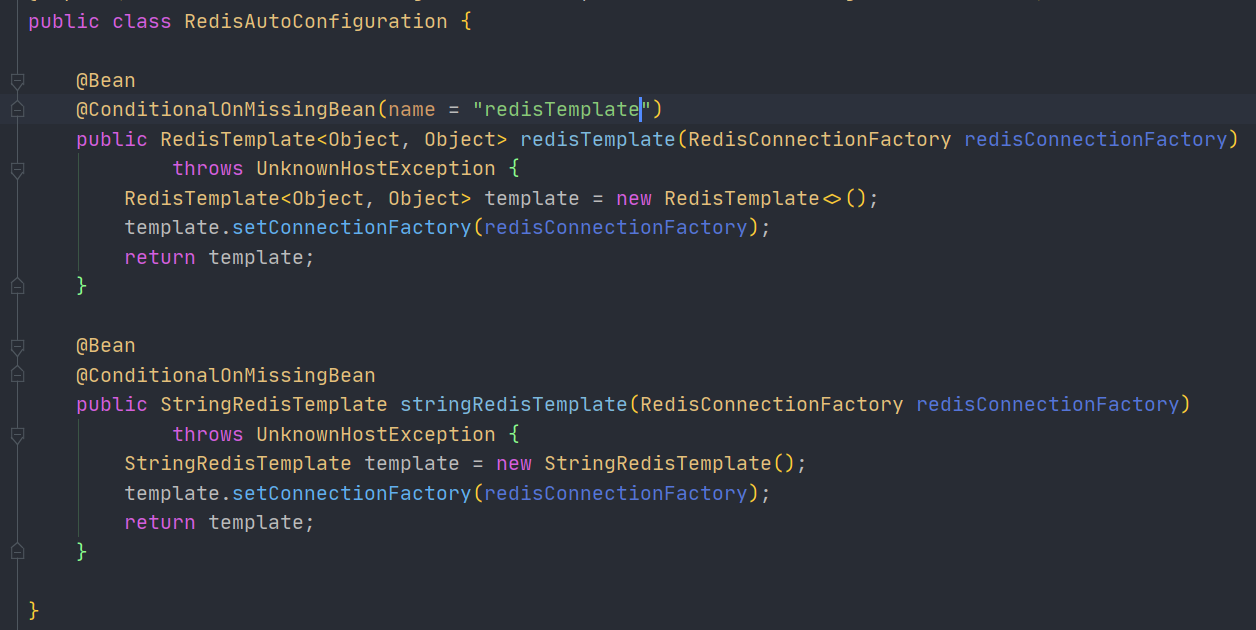

Now let's go back to RedisAutoConfiguration

There are only two simple beans

- RedisTemplate

- StringRedisTemplate

Like RestTemplate and SqlSessionTemplate, these xxTemplate classes let us operate a component indirectly. RedisTemplate operates on Redis in general, and StringRedisTemplate operates on String data in Redis.

RedisTemplate also carries a conditional annotation, @ConditionalOnMissingBean(name = "redisTemplate"), which means we can define our own to replace it.

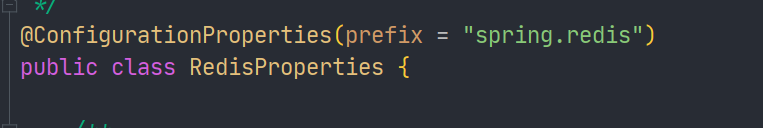

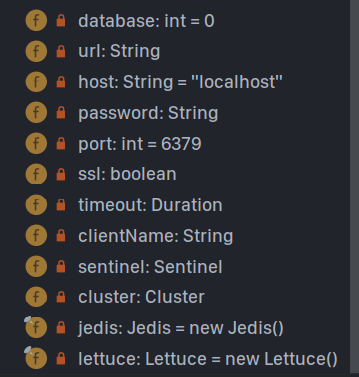

Next, to know how to write the configuration file that connects to Redis, read RedisProperties.

These are some basic configuration properties.

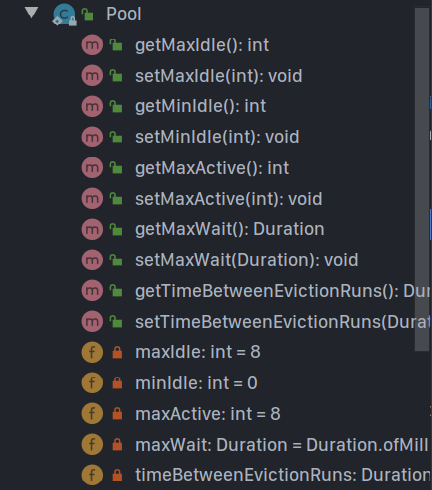

There are also some connection pool settings. Note that Lettuce's pool settings (spring.redis.lettuce.pool.*) must be used, since Lettuce is the client in effect.

Write the configuration file

    # Configure Redis
    spring.redis.host=39.99.xxx.xx
    spring.redis.port=6379

Use RedisTemplate

    import org.junit.jupiter.api.Test;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.boot.test.context.SpringBootTest;
    import org.springframework.data.redis.core.RedisTemplate;

    @SpringBootTest
    class Redis02SpringbootApplicationTests {

        @Autowired
        private RedisTemplate redisTemplate;

        @Test
        void contextLoads() {
            // redisTemplate operates on the different data types; its API mirrors the Redis commands
            // opsForValue: operates on String values
            // opsForList: operates on Lists
            // opsForHash, opsForSet, opsForZSet: operate on the other types
            // Common operations such as transactions and basic CRUD are also available directly on redisTemplate

            // Get a connection object
            // RedisConnection connection = redisTemplate.getConnectionFactory().getConnection();
            // connection.flushDb();
            // connection.flushAll();

            redisTemplate.opsForValue().set("mykey", "kuangshen");
            System.out.println(redisTemplate.opsForValue().get("mykey"));
        }
    }

test result

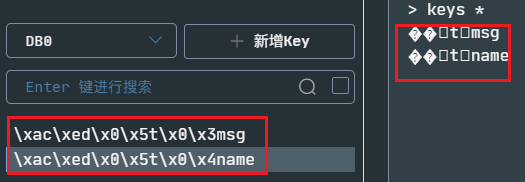

When we then check the data in Redis, however, the key looks garbled, even though the program printed the value correctly:

This comes from how stored objects are serialized: objects sent over the network must be serialized, and the serializer in use determines the bytes that end up in Redis.

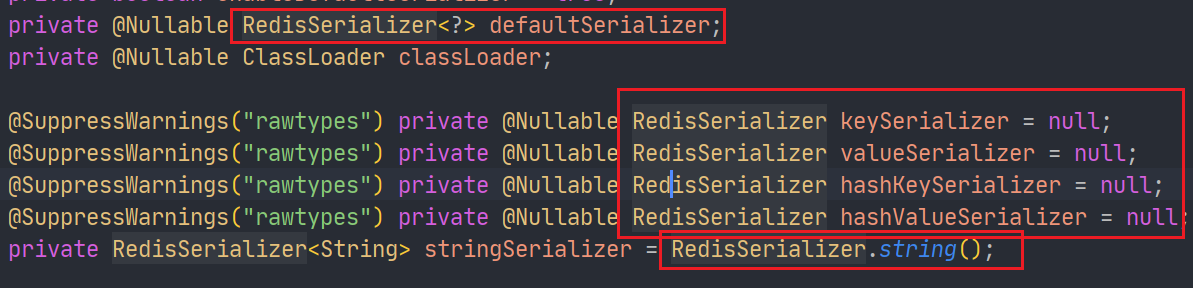

Let's go to the inside of the default RedisTemplate:

At the beginning, you can see several parameters about serialization.

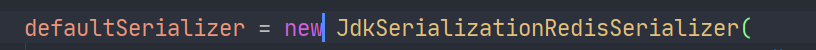

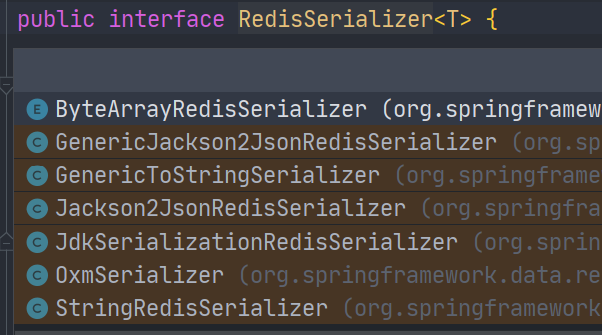

The default serializer is the JDK serializer (JdkSerializationRedisSerializer)

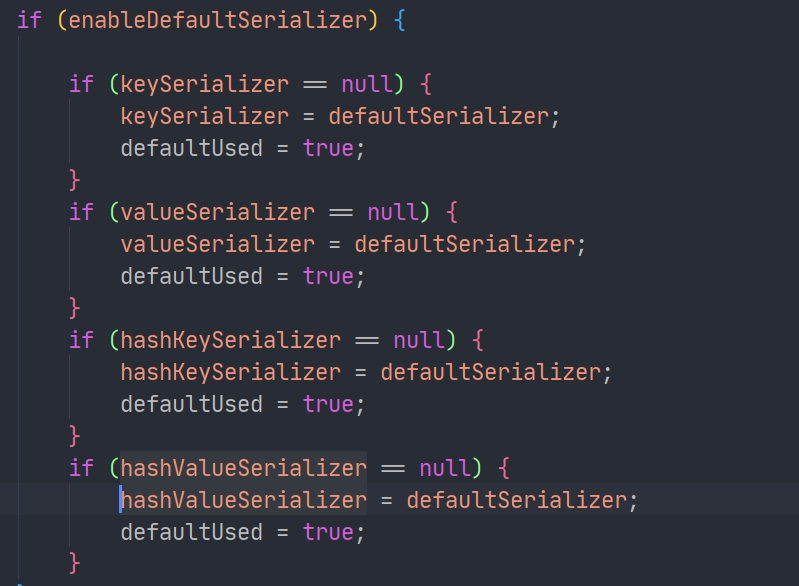

All serializers in the default RedisTemplate use this serializer:

Later, we can customize RedisTemplate to modify it.

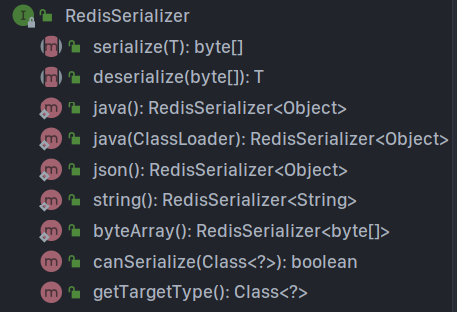
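The garbling can be reproduced without Redis. JDK serialization writes Java's stream header (bytes AC ED 00 05) and a type marker before the actual characters, and those extra bytes are what show up in redis-cli. The plain-Java sketch below (hypothetical class `JdkSerializationDemo`) serializes the key "mykey" with `ObjectOutputStream` and also encodes it as plain UTF-8 bytes. It then prints both, escaped roughly the way redis-cli shows binary data; redis-cli's exact escaping differs for quotes and some control characters.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

// Shows why keys written with Java serialization look "garbled" in redis-cli.
class JdkSerializationDemo {
    // Standard Java serialization of a single object
    static byte[] jdkSerialize(Object value) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(value);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    // Escape bytes roughly the way redis-cli prints them: printable ASCII as-is, the rest as \xNN
    static String escape(byte[] data) {
        StringBuilder sb = new StringBuilder();
        for (byte b : data) {
            int v = b & 0xff;
            if (v >= 0x20 && v < 0x7f) {
                sb.append((char) v);
            } else {
                sb.append(String.format("\\x%02x", v));
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        String key = "mykey";
        System.out.println(escape(key.getBytes(StandardCharsets.UTF_8))); // mykey
        System.out.println(escape(jdkSerialize(key)));                  // \xac\xed\x00\x05t\x00\x05mykey
    }
}
```

This is why the custom template below switches keys to String serialization: the key is then stored as the plain UTF-8 bytes on the first line of output.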

RedisSerializer provides several ways to get a serializer:

- Call a static method of RedisSerializer to obtain a serializer, then set it

- Create an instance of the corresponding implementation class yourself, then set it

Custom RedisTemplate:

When we create our own RedisTemplate bean and add it to the container, the conditional annotation on the default RedisTemplate takes effect and the default one is no longer created.

    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;
    import org.springframework.data.redis.connection.RedisConnectionFactory;
    import org.springframework.data.redis.core.RedisTemplate;
    import org.springframework.data.redis.serializer.RedisSerializer;

    @Configuration
    public class RedisConfig {

        @Bean
        public RedisTemplate<String, Object> redisTemplate(RedisConnectionFactory redisConnectionFactory) {
            // Use <String, Object> as the template's generic types
            RedisTemplate<String, Object> template = new RedisTemplate<>();
            // Connection factory: no changes needed
            template.setConnectionFactory(redisConnectionFactory);

            // Serialization settings
            // Keys and hash keys use String serialization
            template.setKeySerializer(RedisSerializer.string());
            template.setHashKeySerializer(RedisSerializer.string());
            // Values and hash values use Jackson (JSON) serialization
            template.setValueSerializer(RedisSerializer.json());
            template.setHashValueSerializer(RedisSerializer.json());

            template.afterPropertiesSet();
            return template;
        }
    }

With this template, keys are stored as plain strings and values as JSON, so the data in Redis is no longer garbled.


8, Customize Redis tool class

With RedisTemplate, every operation must first go through opsForXxx, which makes the code verbose, so it is rarely used directly in real projects. Instead, the common operations are extracted into a utility class, and Redis is operated indirectly through that class, which is both efficient and convenient.

Tool reference blog:

https://www.cnblogs.com/zeng1994/p/03303c805731afc9aa9c60dbbd32a323.html

https://www.cnblogs.com/zhzhlong/p/11434284.html

9, Redis conf

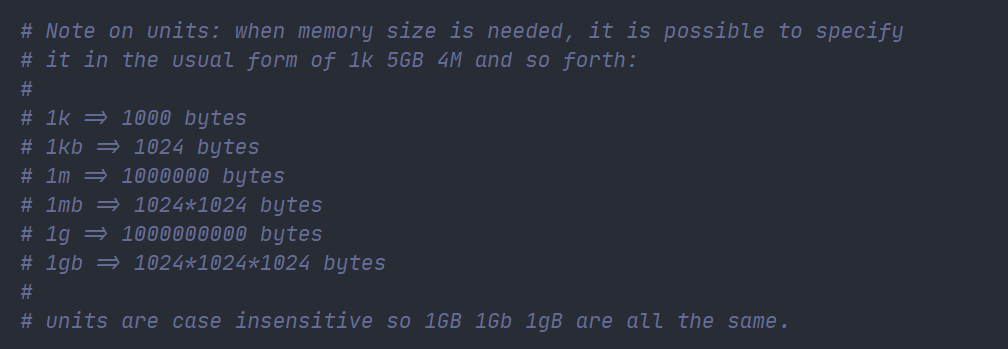

Capacity units are not case sensitive, and G and GB are different

You can use include to combine multiple configuration issues

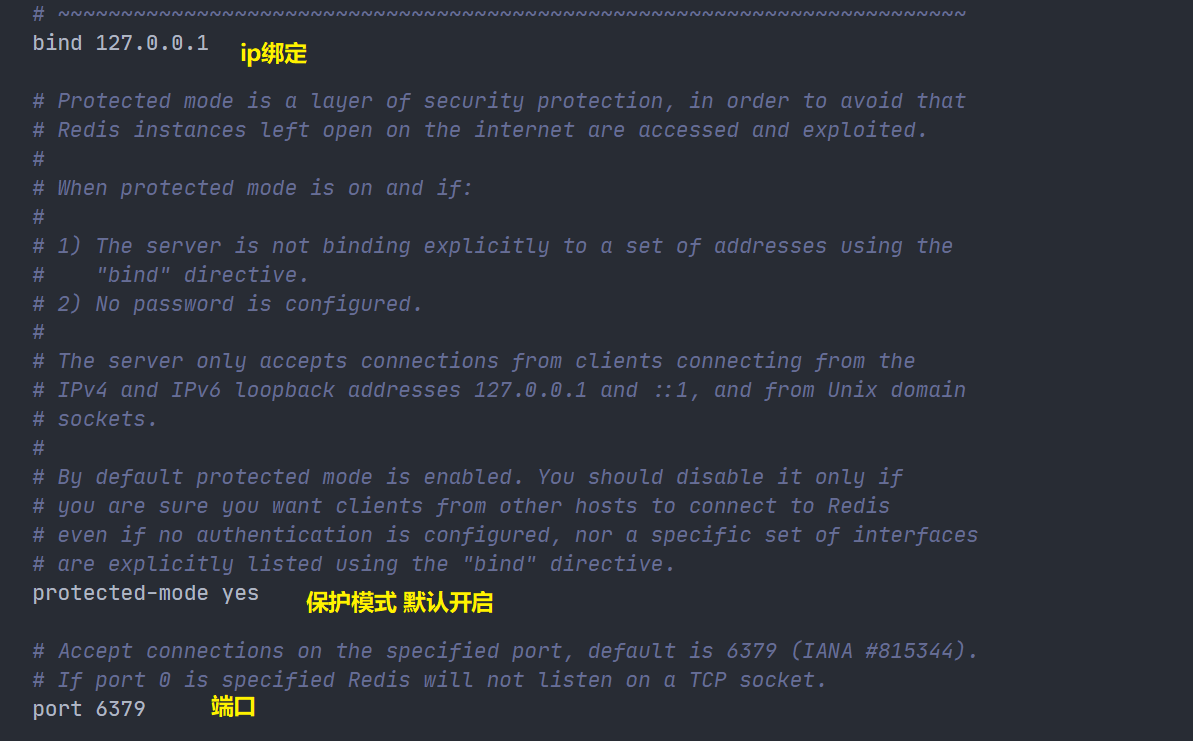

network configuration

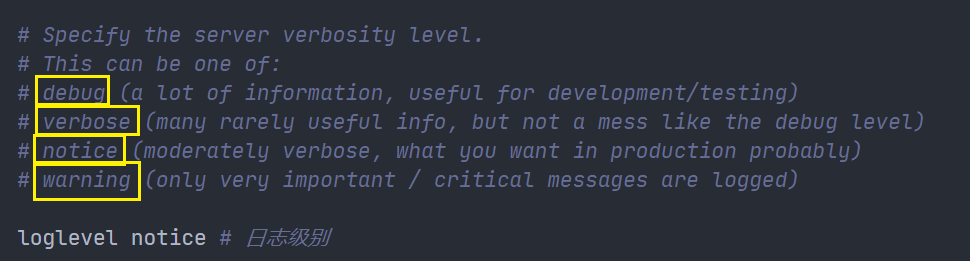

Log output level

Log output file

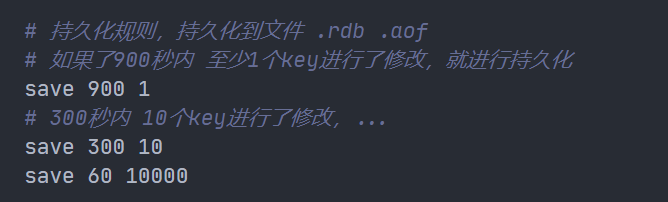

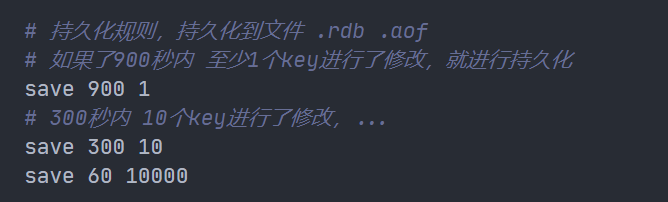

Persistence rules

Since Redis is a memory based database, it is necessary to persist the data from memory to files

Persistence mode:

- RDB

- AOF

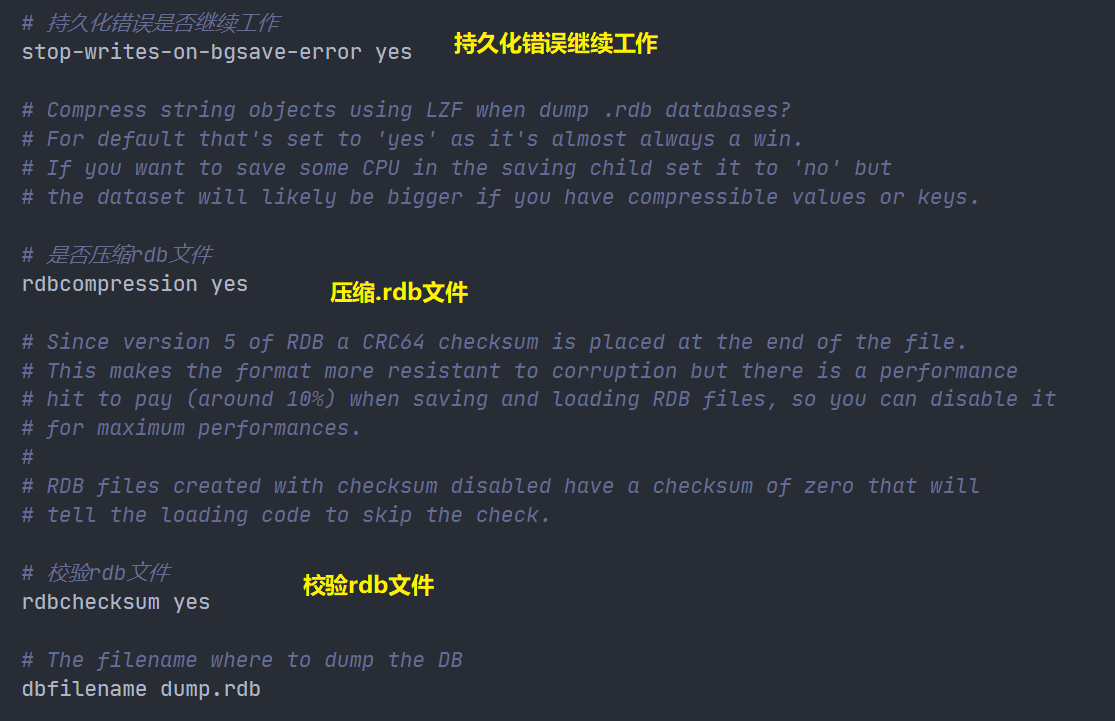

RDB file settings

Master-slave replication

Password settings in the SECURITY section

Client connection limits

    # Maximum number of clients
    maxclients 10000
    # Maximum memory limit
    maxmemory <bytes>
    # What to do when memory reaches the limit
    maxmemory-policy noeviction

The default maxmemory-policy is noeviction (since Redis 3.0; earlier versions defaulted to volatile-lru).

To change it at runtime:

config set maxmemory-policy volatile-lru

The six maxmemory-policy options (Redis 4.0 and later also add volatile-lfu and allkeys-lfu):

1. volatile-lru: evict keys that have an expiration time set, using LRU

2. allkeys-lru: evict any key, using LRU

3. volatile-random: randomly evict keys that have an expiration time set

4. allkeys-random: randomly evict any key

5. volatile-ttl: evict keys that have an expiration time set, those closest to expiring first

6. noeviction: evict nothing; writes that need more memory return an error (the default)
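The LRU idea behind volatile-lru and allkeys-lru can be illustrated with Java's `LinkedHashMap` in access order. This is only a sketch of exact LRU with a hypothetical entry limit (class `LruCacheSketch`). Redis itself approximates LRU by sampling a few keys (see maxmemory-samples) and evicts based on memory use, not on an entry count.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Exact-LRU cache sketch: evicts the least recently used entry once maxEntries is exceeded.
class LruCacheSketch<K, V> extends LinkedHashMap<K, V> {
    private final int maxEntries;

    LruCacheSketch(int maxEntries) {
        super(16, 0.75f, true); // accessOrder = true: iteration goes from least to most recently used
        this.maxEntries = maxEntries;
    }

    // Called by put(); returning true removes the eldest (least recently used) entry
    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > maxEntries;
    }

    public static void main(String[] args) {
        LruCacheSketch<String, String> cache = new LruCacheSketch<>(2);
        cache.put("a", "1");
        cache.put("b", "2");
        cache.get("a");                     // a becomes the most recently used
        cache.put("c", "3");                // over the limit: evicts b
        System.out.println(cache.keySet()); // [a, c]
    }
}
```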

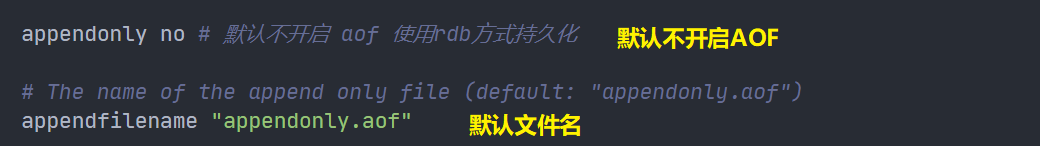

AOF settings

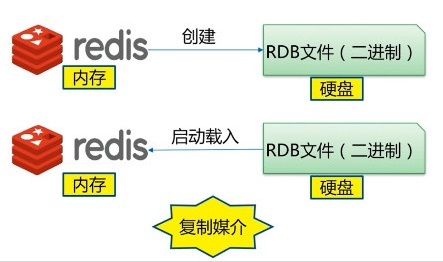

10, Persistence - RDB

RDB: Redis Databases


What is RDB

At the specified time intervals, Redis writes a snapshot of the in-memory dataset to disk; during recovery, it reads the snapshot file directly to restore the data.

By default, Redis saves the snapshot in a binary file named dump.rdb. The file name can be changed in the configuration file (dbfilename).

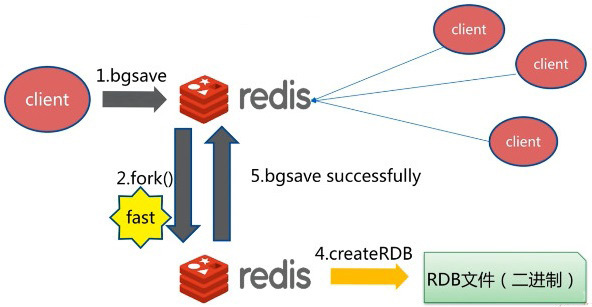

How it works

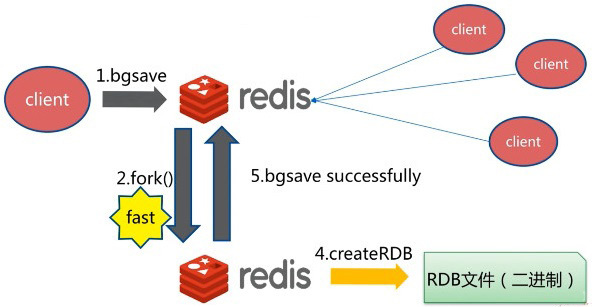

During RDB persistence the Redis main thread does no file I/O itself; it forks a child process to do the work:

- Redis calls fork(), so there is now a parent process and a child process.

- The child process writes the dataset to a temporary RDB file.

- When the child process finishes writing the new RDB file, Redis replaces the old RDB file with the new one.

This lets Redis benefit from copy-on-write: the child process does the writing while the parent process keeps serving client requests.

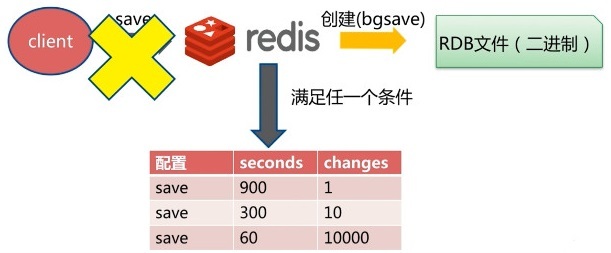

Trigger mechanism

- When a save rule in the configuration is satisfied, an RDB snapshot is triggered automatically

- Executing FLUSHALL also triggers an RDB snapshot

- Shutting down Redis (for example with SHUTDOWN) also generates an RDB file

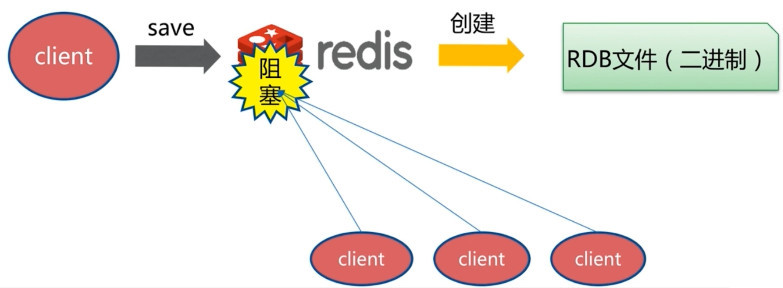

save

Using the save command will immediately persist the data in the current memory, but it will block, that is, it will not accept other operations;

Because SAVE is a synchronous command, it occupies the Redis main process. With a large dataset, SAVE runs slowly and blocks the requests of all clients.

FLUSHALL command

FLUSHALL also triggers persistence.

Save rules

A snapshot is also taken when one of the conditions in the configuration file is met.

Redis can be set through the configuration file to automatically save the dataset when the condition of "at least M changes to the dataset in N seconds" is met.
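The save-rule check can be sketched as a simple predicate. The plain-Java sketch below is a simplified model (class `SaveRuleSketch` is hypothetical, and it ignores details such as retry delays after a failed save). A snapshot is due when, for any configured rule, enough seconds have passed since the last save and enough changes have accumulated. The example rules are the classic redis.conf values save 900 1, save 300 10 and save 60 10000; newer Redis versions ship different defaults.

```java
import java.util.List;

// Hypothetical sketch of the RDB "save <seconds> <changes>" check: a snapshot is due when,
// for any rule, enough time has passed since the last save AND enough keys have changed.
class SaveRuleSketch {
    static final class SavePoint {
        final long seconds;
        final long changes;

        SavePoint(long seconds, long changes) {
            this.seconds = seconds;
            this.changes = changes;
        }
    }

    static boolean snapshotDue(List<SavePoint> rules, long secondsSinceLastSave, long changesSinceLastSave) {
        for (SavePoint rule : rules) {
            if (secondsSinceLastSave >= rule.seconds && changesSinceLastSave >= rule.changes) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // Equivalent to the redis.conf lines: save 900 1 / save 300 10 / save 60 10000
        List<SavePoint> rules = List.of(
                new SavePoint(900, 1), new SavePoint(300, 10), new SavePoint(60, 10000));
        System.out.println(snapshotDue(rules, 30, 50_000)); // false: too soon for every rule
        System.out.println(snapshotDue(rules, 301, 10));    // true: matches "save 300 10"
    }
}
```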

bgsave

bgsave is done asynchronously. redis can also continue to respond to client requests during persistence;

bgsave vs save

| Command | SAVE | BGSAVE |
|---|---|---|
| IO type | Synchronous | Asynchronous |
| Blocking? | Yes | Yes, but only during fork(), which is usually very fast |
| Complexity | O(n) | O(n) |
| Advantage | Consumes no additional memory | Does not block client commands |
| Disadvantage | Blocks client commands | Must fork a child process, which consumes memory |

Advantages and disadvantages

Advantages:

- Suitable for large-scale data recovery

- Suitable when the requirements for data integrity are not strict

Disadvantages:

- Snapshots are taken only at intervals, so if Redis goes down unexpectedly, changes made since the last snapshot are lost.

- The forked child process takes up additional memory.

11, Persistent AOF

Append Only File

AOF records every write command we execute; on recovery, Redis executes the whole file again.


Every write operation is recorded as a log entry: all write commands executed by Redis are logged (read operations are not). The file is only ever appended to; existing entries are not rewritten. When Redis starts, it reads this file to rebuild the data. In other words, after a restart Redis executes the logged write commands from front to back to recover the data.
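The append-then-replay idea can be sketched with a toy log. Assumptions: commands are stored as plain text lines (real AOF files use the Redis protocol format), only SET and DEL are supported, and `append_command` and `replay` are hypothetical helpers.

```python
import shlex


def append_command(aof: list, command: str) -> None:
    """Hypothetical helper: log a write command; existing entries are never modified."""
    aof.append(command)  # read commands such as GET are simply never logged


def replay(aof: list) -> dict:
    """Rebuild the dataset by executing the logged commands from first to last."""
    data = {}
    for line in aof:
        op, *args = shlex.split(line)  # honours quotes, e.g. "hello world"
        op = op.upper()
        if op == "SET":
            key, value = args
            data[key] = value
        elif op == "DEL":
            for key in args:
                data.pop(key, None)
        else:
            raise ValueError(f"command not supported in this sketch: {op}")
    return data


if __name__ == "__main__":
    log = []
    append_command(log, 'SET greeting "hello world"')
    append_command(log, "SET age 3")
    append_command(log, "DEL age")
    print(replay(log))  # {'greeting': 'hello world'}
```

Replaying in order matters: the DEL only makes sense after the SET it undoes, which is why the log is strictly append-only.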

What is AOF

Snapshotting (RDB) is not very durable: if Redis stops unexpectedly for any reason, the server loses the most recently written data that has not yet been saved to a snapshot. Since version 1.1, Redis has offered a fully durable alternative: AOF persistence.

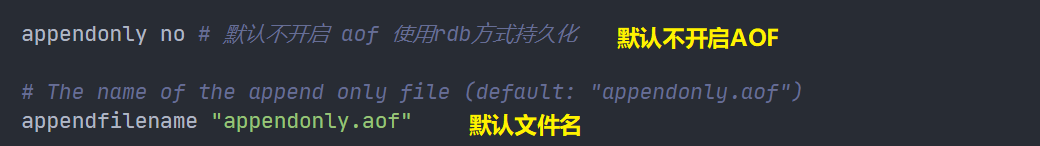

If you want to use AOF, you need to modify the configuration file:

Change `appendonly no` to `appendonly yes` to enable AOF.

AOF is not enabled by default. We need to configure it manually and restart Redis for the change to take effect.

If the AOF file is corrupted (for example, it contains malformed entries), Redis cannot start until the file is repaired.

Redis provides a tool for this: `redis-check-aof --fix appendonly.aof`.

merits and demerits

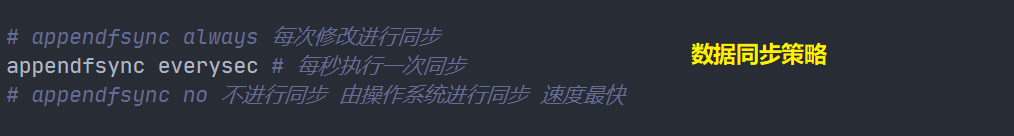

    # AOF is disabled by default; RDB is the default persistence mode and is sufficient in most cases.
    appendonly yes
    appendfilename "appendonly.aof"

    # Fsync after every write: safest, but costs performance.
    # appendfsync always

    # Fsync once per second: up to one second of data may be lost.
    appendfsync everysec

    # Never fsync explicitly; the OS flushes data on its own schedule: fastest.
    # appendfsync no
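The three `appendfsync` policies can be modeled with a small writer class. This is a sketch, not Redis code: `AofWriter` is hypothetical, and real Redis performs the `everysec` fsync in a background thread, whereas here the one-second check simply runs on each append. The clock is injectable so the timing rule can be tested.

```python
import os
import tempfile
import time


class AofWriter:
    """Hypothetical append-only writer modeling the appendfsync policies."""

    def __init__(self, path: str, policy: str = "everysec", clock=time.monotonic):
        if policy not in ("always", "everysec", "no"):
            raise ValueError(f"unknown policy: {policy}")
        self.file = open(path, "a", encoding="utf-8")  # "a": writes only ever go to the end
        self.policy = policy
        self.clock = clock
        self.last_fsync = clock()
        self.fsync_count = 0

    def _fsync(self) -> None:
        os.fsync(self.file.fileno())
        self.last_fsync = self.clock()
        self.fsync_count += 1

    def append(self, command: str) -> None:
        self.file.write(command + "\n")
        self.file.flush()  # hand the bytes to the OS; they may still sit in its cache
        if self.policy == "always":
            self._fsync()  # every write reaches the disk before we continue
        elif self.policy == "everysec" and self.clock() - self.last_fsync >= 1.0:
            self._fsync()  # at most about one second of writes is at risk
        # policy "no": never fsync here; the OS decides when to flush

    def close(self) -> None:
        self.file.close()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        writer = AofWriter(os.path.join(d, "appendonly.aof"), policy="always")
        writer.append("SET name sakura")
        writer.append("SET age 3")
        writer.close()
        print(writer.fsync_count)  # 2
```

The trade-off is visible in `fsync_count`: `always` pays one disk sync per write, `everysec` at most one per second, and `no` none at all.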

Advantages

- With `appendfsync always`, every modification is synced, so the file's integrity is best.

- With `appendfsync everysec`, data is synced once per second, so up to one second of data may be lost.

- With `appendfsync no`, Redis never syncs explicitly, which is the most efficient.

Disadvantages

- AOF files are much larger than RDB files, and recovery from AOF is slower than from RDB.

- AOF also runs slower than RDB, which is why Redis uses RDB persistence by default.

12, Choosing between RDB and AOF

RDB and AOF comparison

| | RDB | AOF |
|---|---|---|
| Startup loading priority | Low | High |
| File size | Small | Large |
| Recovery speed | Fast | Slow |
| Data safety | May lose data | Depends on the fsync policy |

How to choose which persistence method to use?

Generally speaking, if you want data safety comparable to PostgreSQL, you should use both persistence methods at the same time.

If you care a lot about your data but can still tolerate a few minutes of data loss, you can use RDB persistence alone.

Many users use only AOF persistence, but this is not recommended: periodic RDB snapshots are very convenient for database backups, and RDB restores a dataset faster than AOF does.

13, Redis publish/subscribe

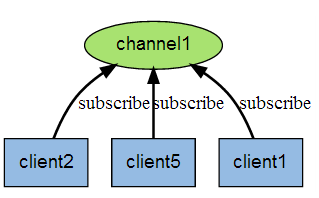

Redis publish/subscribe (pub/sub) is a messaging pattern: the sender (pub) sends messages and the subscriber (sub) receives them.


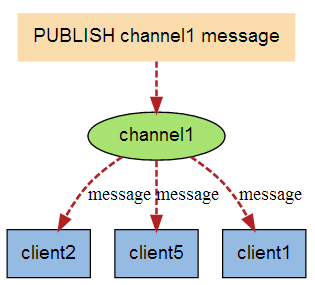

The figure shows channel 1 and the three clients subscribed to it: client2, client5 and client1.

When a new message is sent to channel 1 with the PUBLISH command, it is delivered to all three subscribed clients.

commands

| Command | Description |
|---|---|
| PSUBSCRIBE pattern [pattern ...] | Subscribe to one or more channels matching the given patterns. |
| PUNSUBSCRIBE [pattern [pattern ...]] | Unsubscribe from one or more channels matching the given patterns. |
| PUBSUB subcommand [argument [argument ...]] | Inspect the state of the pub/sub system. |
| PUBLISH channel message | Publish a message to the specified channel. |
| SUBSCRIBE channel [channel ...] | Subscribe to one or more channels. |
| UNSUBSCRIBE [channel [channel ...]] | Unsubscribe from one or more channels. |

Examples

    ------------ Subscriber ------------
    127.0.0.1:6379> SUBSCRIBE sakura             # subscribe to the sakura channel
    Reading messages... (press Ctrl-C to quit)   # waiting to receive messages
    1) "subscribe"                               # subscription succeeded
    2) "sakura"
    3) (integer) 1
    1) "message"                                 # received "hello world" from the sakura channel
    2) "sakura"
    3) "hello world"
    1) "message"                                 # received "hello i am sakura" from the sakura channel
    2) "sakura"
    3) "hello i am sakura"

    ------------ Publisher ------------
    127.0.0.1:6379> PUBLISH sakura "hello world"         # publish a message to the sakura channel
    (integer) 1
    127.0.0.1:6379> PUBLISH sakura "hello i am sakura"   # publish another message
    (integer) 1

    ------------ View active channels ------------
    127.0.0.1:6379> PUBSUB channels
    1) "sakura"

principle

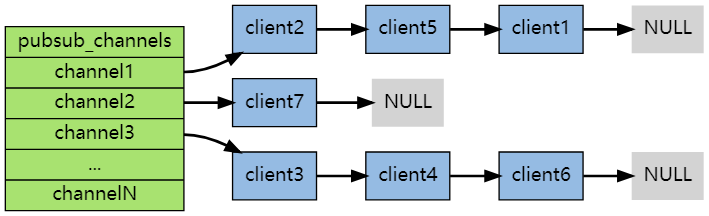

Each Redis server process maintains a `redisServer` structure (defined in `redis.h`) that represents the server's state. Its `pubsub_channels` attribute is a dictionary that stores subscribed-channel information: each key is a channel being subscribed to, and each value is a linked list of all clients subscribed to that channel.

When a client subscribes, it is appended to the tail of the corresponding channel's linked list; unsubscribing removes the client's node from that list.
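The `pubsub_channels` dictionary described above can be modeled as a toy in-process class. Assumptions: `PubSub` and its methods are hypothetical, a `deque` stands in for the linked list, and a per-client list stands in for each client's output buffer. Note that `publish` delivers only to clients subscribed at that moment, which is why a disconnected subscriber misses messages.

```python
from collections import defaultdict, deque


class PubSub:
    """Hypothetical toy model of the server-side pubsub_channels dictionary."""

    def __init__(self):
        # channel -> subscribers in subscription order (deque stands in for the linked list)
        self.pubsub_channels = defaultdict(deque)
        # per-client inbox standing in for each client's output buffer
        self.inboxes = defaultdict(list)

    def subscribe(self, client: str, channel: str) -> None:
        subscribers = self.pubsub_channels[channel]
        if client not in subscribers:
            subscribers.append(client)  # link the client onto the tail

    def unsubscribe(self, client: str, channel: str) -> None:
        subscribers = self.pubsub_channels.get(channel)
        if subscribers and client in subscribers:
            subscribers.remove(client)  # unlink the client's node
            if not subscribers:
                del self.pubsub_channels[channel]  # drop channels nobody listens to

    def publish(self, channel: str, message: str) -> int:
        """Deliver to current subscribers only; return how many received it (like PUBLISH)."""
        subscribers = self.pubsub_channels.get(channel, ())
        for client in subscribers:
            self.inboxes[client].append(("message", channel, message))
        return len(subscribers)

    def channels(self) -> list:
        """Channels with at least one subscriber (like PUBSUB CHANNELS)."""
        return sorted(self.pubsub_channels)


if __name__ == "__main__":
    ps = PubSub()
    for client in ("client2", "client5", "client1"):
        ps.subscribe(client, "channel1")
    print(ps.publish("channel1", "hello world"))  # 3
    ps.unsubscribe("client5", "channel1")
    print(ps.publish("channel1", "hello again"))  # 2: client5 misses this one
    print(ps.inboxes["client5"])  # [('message', 'channel1', 'hello world')]
```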

shortcoming

- If a client subscribes to a channel but does not read messages fast enough, the backlog of unread messages makes Redis's output buffer for that client grow larger and larger, which may slow Redis itself down or even crash it.

- Delivery is not reliable: if a subscriber disconnects, it loses every message the publisher sends while it is disconnected.

application

- Message subscription: subscribing to official accounts, following users on microblogs, and so on.

- Multiplayer online chat rooms.

For more complex scenarios, dedicated message queue (MQ) middleware is used instead.

14, Redis master-slave replication

concept

Master-slave replication copies data from one Redis server to other Redis servers. The former is called the master (leader) and the latter the slaves (followers). Replication is one-way: data is copied only from the master to the slaves (the master mainly handles writes, the slaves mainly handle reads).

By default, every Redis server is a master. A master can have zero or more slaves, but each slave can have only one master.

purpose

- Data redundancy: master-slave replication provides a hot backup of the data, a form of data redundancy in addition to persistence.

- Fault recovery: when the master fails, a slave can temporarily take over and keep serving requests, a form of service redundancy.

- Load balancing: combined with read-write separation, the master handles writes and the slaves handle reads, sharing the server load. In read-heavy, write-light scenarios especially, multiple slaves share the read load and increase concurrency.

- Cornerstone of high availability: master-slave replication is also the basis for implementing Sentinel and Cluster.
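Read-write separation on the client side can be sketched as a small router that sends writes to the master and spreads reads across the slaves round-robin. `ReadWriteRouter` and its command list are hypothetical and deliberately incomplete; unknown commands go to the master, so a write is never routed to a read-only slave.

```python
import itertools


class ReadWriteRouter:
    """Hypothetical client-side router for read-write separation."""

    # Illustrative subset of read-only commands; anything else is treated as a write.
    READ_COMMANDS = {"GET", "MGET", "EXISTS", "HGET", "LRANGE", "SMEMBERS"}

    def __init__(self, master: str, slaves: list):
        self.master = master
        # Round-robin over the slaves; fall back to the master when there are none.
        self._readers = itertools.cycle(slaves or [master])

    def route(self, command: str) -> str:
        """Return the address that should execute `command`."""
        name = command.split()[0].upper()
        if name in self.READ_COMMANDS:
            return next(self._readers)
        return self.master  # writes (and unknown commands) always go to the master


if __name__ == "__main__":
    router = ReadWriteRouter("127.0.0.1:6379", ["127.0.0.1:6380", "127.0.0.1:6381"])
    for cmd in ["set name sakura", "get name", "get name", "get name"]:
        print(cmd, "->", router.route(cmd))
```

Defaulting unknown commands to the master trades some read capacity for safety, which suits the read-heavy case described above.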

Why use a cluster

- A single server has difficulty handling a large number of requests.

- A single server has a relatively high failure rate, so a system that relies on one is likely to go down.

- The memory capacity of a single server is limited.

Environment configuration

When we went through the configuration file earlier, we noted its replication section (see item 8 in the Redis.conf section).

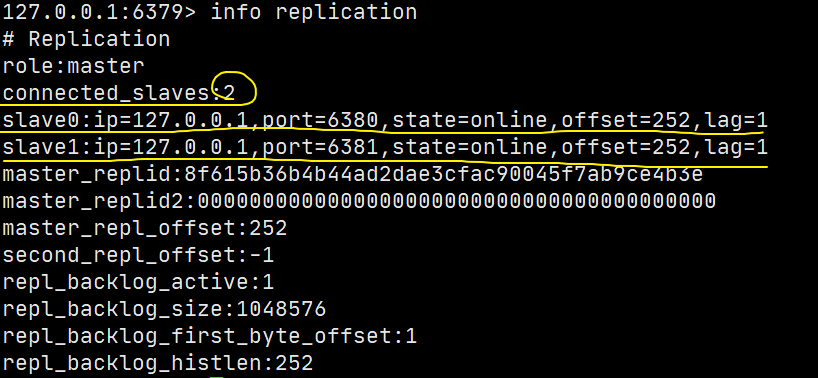

View replication information for the current instance with `info replication`:

    127.0.0.1:6379> info replication
    # Replication
    role:master                # role
    connected_slaves:0         # number of slaves
    master_replid:3b54deef5b7b7b7f7dd8acefa23be48879b4fcff
    master_replid2:0000000000000000000000000000000000000000
    master_repl_offset:0
    second_repl_offset:-1
    repl_backlog_active:0
    repl_backlog_size:1048576
    repl_backlog_first_byte_offset:0
    repl_backlog_histlen:0

Since we need to start multiple servers, we need multiple configuration files. Modify the following settings in each file:

- Port number

- pid file name

- Log file name

- rdb file name

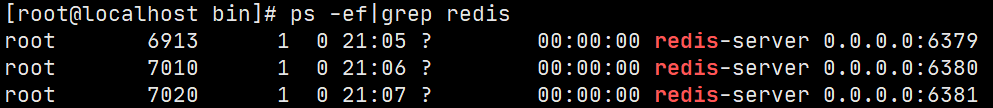

Start multiple servers on a single machine:

Configuring one master and two slaves

==By default, every Redis server is a master.== In general, we only need to configure the slaves.

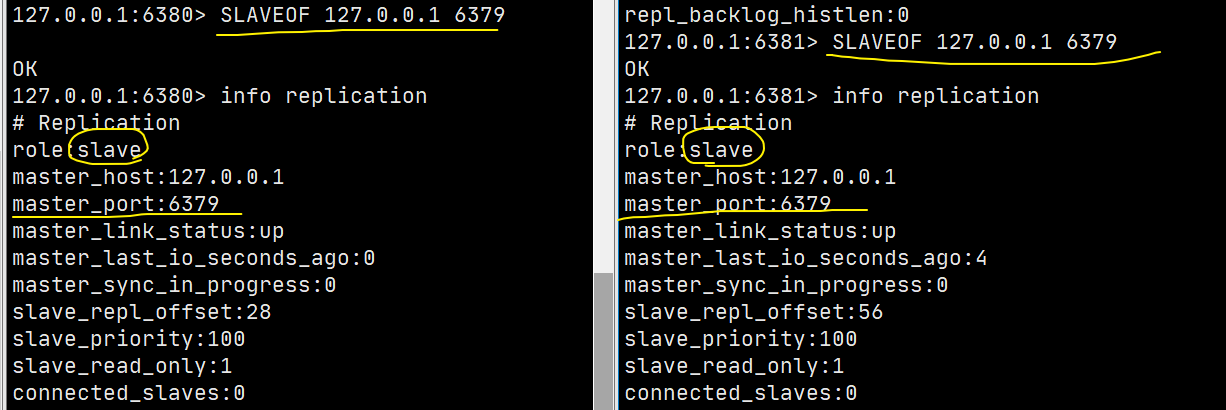

Point each slave at its master ("recognize the boss"): one master (6379) and two slaves (6380, 6381).

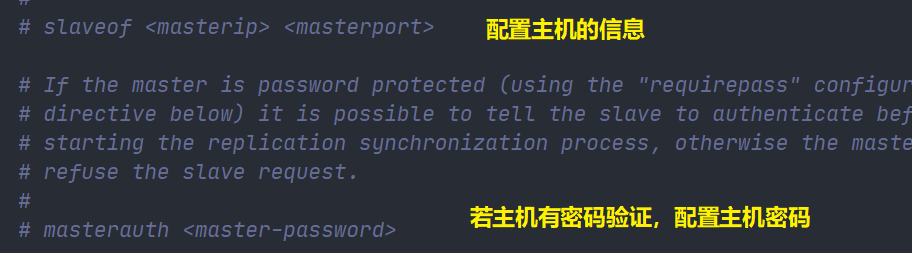

Use `SLAVEOF host port` on a slave to set its master.

The slaves' status can then also be seen on the master:

Configuring replication with commands, as here, is only temporary. ==In real deployments, configure it in each slave's configuration file (the `replicaof` directive, `slaveof` before Redis 5.0), which makes it permanent.==

Usage rules

1. A slave can only read and cannot write. The master can both read and write, but is mostly used for writing.

       127.0.0.1:6381> set name sakura   # write on slave 6381 fails
       (error) READONLY You can't write against a read only replica.
       127.0.0.1:6380> set name sakura   # write on slave 6380 fails
       (error) READONLY You can't write against a read only replica.
       127.0.0.1:6379> set name sakura   # write on the master succeeds
       OK
       127.0.0.1:6379> get name
       "sakura"

2. When the master loses power or goes down, by default the slaves keep their role; the cluster only loses the ability to write. Once the master is back, the slaves reconnect to it and everything returns to its original state.

3. When a slave loses power or goes down and its replication was not set in its configuration file, it restarts as a master and no longer receives data from its previous master. If it is configured as a slave again, it receives all of the master's data, because a slave that connects to a master is sent a full copy of the master's data.

4. As mentioned in item 2, by default no new master appears after the master fails. There are two ways to get a new master:

   - Manually run `SLAVEOF NO ONE` on a slave. After that, the slave becomes an independent master.

   - Use sentinel mode (automatic election).

If the master is gone, can we choose a new one? Yes, manually!

If the master is disconnected, we can run `SLAVEOF NO ONE` on a slave to make it a master. The other nodes can then be manually connected to this new master. If the old master is repaired afterwards, it has to be reconnected manually as well.
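The replication rules above (one-way copying, read-only slaves, a full copy on connect, manual promotion with `SLAVEOF NO ONE`) can be captured in a toy model. `Node` is a hypothetical in-memory class, not a Redis client; real replication runs over the network with full and incremental synchronization.

```python
class Node:
    """Hypothetical in-memory model of a Redis server taking part in replication."""

    def __init__(self, port: int):
        self.port = port
        self.data = {}
        self.master = None  # by default every node is a master
        self.slaves = []

    @property
    def role(self) -> str:
        return "master" if self.master is None else "slave"

    def slaveof(self, master: "Node") -> None:
        """Like SLAVEOF host port: follow `master` and take a full copy of its data."""
        self.slaveof_no_one()  # leave any previous master first
        self.master = master
        master.slaves.append(self)
        self.data = dict(master.data)  # full synchronization when connecting

    def slaveof_no_one(self) -> None:
        """Like SLAVEOF NO ONE: stop following and become an independent master."""
        if self.master is not None:
            self.master.slaves.remove(self)
            self.master = None  # the data already copied is kept

    def set(self, key: str, value: str) -> str:
        if self.master is not None:
            raise PermissionError("READONLY You can't write against a read only replica.")
        self._apply(key, value)
        return "OK"

    def _apply(self, key: str, value: str) -> None:
        self.data[key] = value
        for slave in self.slaves:  # one-way: changes flow only from master to slaves
            slave._apply(key, value)

    def get(self, key: str):
        return self.data.get(key)


if __name__ == "__main__":
    m, s1, s2 = Node(6379), Node(6380), Node(6381)
    s1.slaveof(m)
    s2.slaveof(m)
    m.set("name", "sakura")
    print(s1.get("name"), s2.get("name"))  # sakura sakura
    try:
        s1.set("name", "other")
    except PermissionError as err:
        print(err)  # READONLY You can't write against a read only replica.
    s1.slaveof_no_one()  # master 6379 is "down": promote 6380 manually
    s2.slaveof(s1)       # point the remaining slave at the new master
    s1.set("age", "3")
    print(s1.role, s2.get("age"))  # master 3
```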

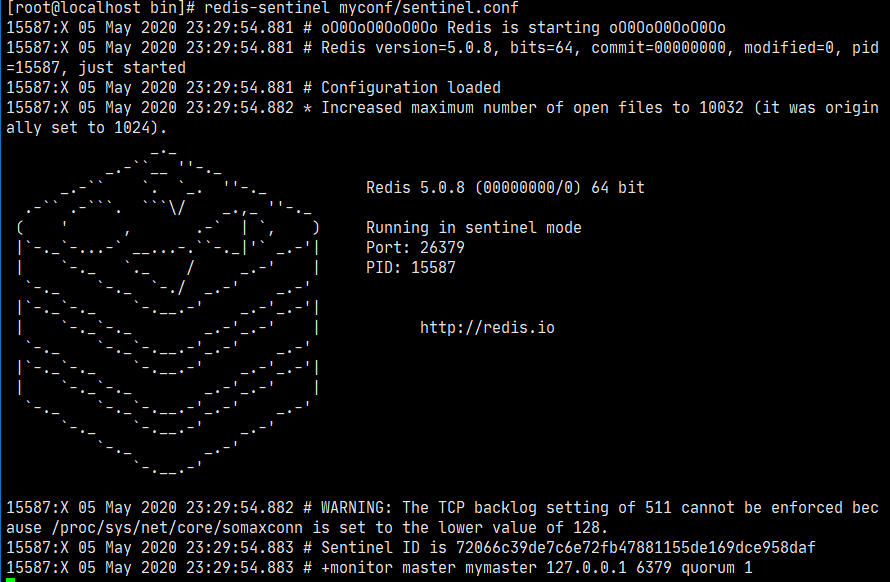

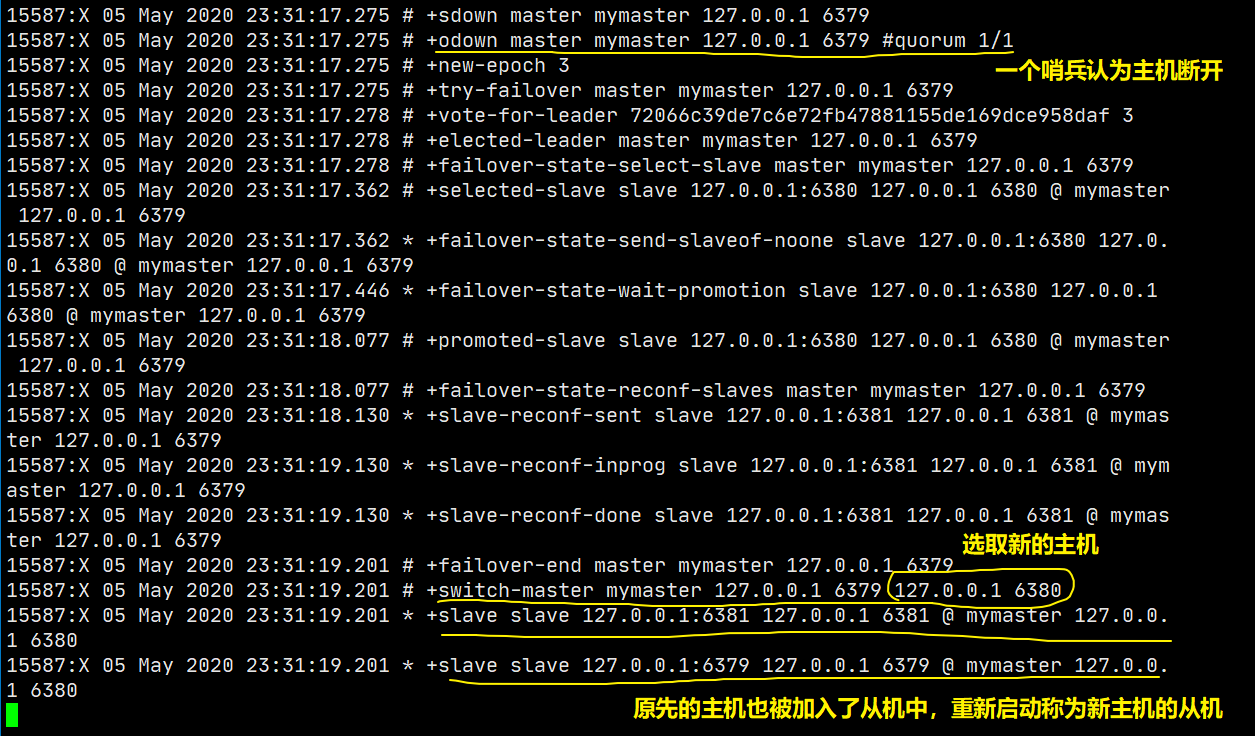

15, Sentinel mode

For more information, refer to the blog: https://www.jianshu.com/p/06ab9daf921d

With plain master-slave replication, switchover works like this: when the master goes down, a slave must be manually promoted to master. This requires manual intervention, is laborious, and leaves the service unavailable for a period of time, so it is not a recommended approach. More often, we prefer sentinel mode.

Single Sentinel


The role of sentinels:

- Send commands to the Redis servers (both the master and the slaves) and wait for their replies, to monitor their running status.