Catalogue of series articles

preface

CUDA Programming is to use the parallel computing power of GPU equipment to realize the high-speed execution of the program. The optimal setting of CUDA kernel function on the size of Grid and Block can ensure the full application of this parallel computing power of CPU devices. This paper introduces the parallelism measurement index, which can measure the Grid and module size setting of optimal performance.

I Introduction to CUDA C parallelism measurement index

Occupancy rate (achieved occupancy in nvprof):

Occupancy refers to the ratio of the active thread bundle to the maximum thread bundle. There are enough active thread bundles to ensure the full implementation of parallelism (conducive to delay concealment). If the occupancy rate reaches a certain height, further increase will not improve the performance, so the occupancy rate is not the only standard to measure the performance.

Latency hiding: the latency of a thread bundle can be hidden by the execution of other thread bundles.

Thread bundle execution efficiency (warm execution efficiency in nvprof)

Execution of threads in thread bundle

Branch efficiency in nvprof:

Branch rate refers to the ratio of undifferentiated branches to all branches. It can be understood that the higher this value is, the stronger the parallel execution ability is. The undifferentiated branch here is relative to the thread bundle differentiation. Thread bundle differentiation means that threads in the same thread bundle execute different instructions, such as conditional control statements such as if/else in kernel functions. Threads in the same thread bundle execute the same instructions, and the performance is the best. nvcc compiler can optimize the differentiation of short if/else conditional statements, that is, you may see that the branching rate of the kernel function of conditional statements is 100%, which is the credit of CUDA compiler. Of course, for long if/else conditional statements, thread bundle differentiation will occur, that is, the branching rate is less than 100%;

Method to avoid thread bundle differentiation: adjust the branch granularity to adapt to the integer multiple of thread bundle size

Number of instructions per thread bundle (instructions per warp in nvprof):

The average number of instructions executed on each thread bundle

Global memory load efficiency in nvprof:

The ratio of the requested global load throughput to the required global load throughput can measure the extent to which the loading operation of the application utilizes the memory bandwidth of the device

Global load throughput in nvprof:

Check the memory reading efficiency of the kernel. Higher load throughput does not necessarily mean higher performance.

2, Case introduction

1. Case description

Here, taking integer protocol (data accumulation and summation) as an example, three different kernel functions are implemented, and the performance of interleaving protocol is the best.

Reduceneighbore kernel function flow (the figure below refers to CUDA C programming authority Guide):

reduceNeighboredLess kernel function flow (the figure below refers to CUDA C programming authority Guide):

reduceNeighboredLess kernel function flow (the figure below refers to CUDA C programming authority Guide):

reduceInterLeave kernel function flow (the figure below refers to CUDA C programming authority Guide):

reduceInterLeave kernel function flow (the figure below refers to CUDA C programming authority Guide):

2. Case realization

#include <stdio.h>

#include <iostream>

#include <cuda.h>

#include <cuda_runtime.h>

#include <cuda_runtime_api.h>

#include <device_launch_parameters.h>

#include <device_functions.h>

#include "CudaUtils.h"

//cpu recursive reduce

int recursiveReduce(int* data, const int size)

{

if (size == 1)

{

return data[0];

}

const int stride = size / 2;

// in-place reduction

for (int i = 0; i < stride; i++)

{

data[i] += data[i + stride];

}

//call recursively

return recursiveReduce(data, stride);

}

//accumulate by neighbor elements of array

__global__ void reduceNeighbored(int* g_idata, int* g_odata, unsigned int n)

{

//set thread ID

unsigned int tid = threadIdx.x;

//convert global data pointer to the local pointer of this block

int* idata = g_idata + blockIdx.x * blockDim.x;

//boundary check

if (tid >= n)

return;

// in-place reduction in global memory

for (int stride = 1; stride < blockDim.x; stride *= 2)

{

if (tid % (2 * stride) == 0)

{

idata[tid] += idata[tid + stride];

}

//synchronize within block, wait all threads finish within block

__syncthreads();

}

//write result for this block to global mem

if (tid == 0)

g_odata[blockIdx.x] = idata[0];

}

//accumulate by neighbor elements of array

__global__ void reduceNeighboredLess(int* g_idata, int* g_odata, unsigned int n)

{

//set thread ID

unsigned int tid = threadIdx.x;

unsigned int idx = threadIdx.x + blockIdx.x * blockDim.x;

//convert global data pointer to the local pointer of this block

int* idata = g_idata + blockIdx.x * blockDim.x;

//boundary check

if (idx >= n)

return;

// in-place reduction in global memory

for (int stride = 1; stride < blockDim.x; stride *= 2)

{

int index= 2 * stride * tid;

if (index < blockDim.x)

idata[index] += idata[index + stride];

//synchronize within block, wait all threads finish within block

__syncthreads();

}

//write result for this block to global mem

if (tid == 0)

g_odata[blockIdx.x] = idata[0];

}

//accumulate by neighbor elements of array

__global__ void reduceInterLeave(int* g_idata, int* g_odata, unsigned int n)

{

//set thread ID

unsigned int tid = threadIdx.x;

//convert global data pointer to the local pointer of this block

int* idata = g_idata + blockIdx.x * blockDim.x;

//boundary check

if (tid >= n)

return;

// in-place reduction in global memory

for (int stride = blockDim.x / 2; stride > 0; stride >>= 1)

{

if (tid < stride)

idata[tid] += idata[tid + stride];

//synchronize within block, wait all threads finish within block

__syncthreads();

}

//write result for this block to global mem

if (tid == 0)

g_odata[blockIdx.x] = idata[0];

}

int main()

{

int nDevId = 0;

cudaDeviceProp stDeviceProp;

cudaGetDeviceProperties(&stDeviceProp, nDevId);

printf("device %d: %s\n", nDevId, stDeviceProp.name);

cudaSetDevice(nDevId);

bool bResult = false;

//initialization

int size = 1 << 24; //total number of elements to reduce

printf("array size: %d \n", size);

//execution configuration

int nBlockSize = 512;// initial block size

dim3 block(nBlockSize, 1);

dim3 grid((size + block.x - 1) / block.x, 1);

printf("grid: %d, block: %d\n", grid.x, block.x);

//allocate host memory

size_t bytes = size * sizeof(int);

int* h_idata = (int*)malloc(bytes);

int* h_odata = (int*)malloc(grid.x * sizeof(int));

int* tmp = (int*)malloc(bytes);

//initialize the array

for (int i = 0; i < size; i++)

{

h_idata[i] = i;

}

memcpy(tmp, h_idata, bytes);

double dElaps;

int nGpuNum = 0;

//allocate device memory

int* d_idata = NULL;

int* d_odata = NULL;

cudaMalloc(&d_idata, bytes);

cudaMalloc(&d_odata, grid.x * sizeof(int));

//cpu reducation

CudaUtils::Time::Start();

int cpu_sum = recursiveReduce(tmp, size);

CudaUtils::Time::End();

dElaps = CudaUtils::Time::Duration<CudaUtils::Time::MS>();

printf("cpu reduce: elapsed %.2f ms gpu_sum: %d\n",

dElaps, cpu_sum);

// kernel 0: warpup -- reduceNeighbored

cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice);

cudaDeviceSynchronize();

CudaUtils::Time::Start();

reduceNeighbored << <grid, block >> > (d_idata, d_odata, size);

cudaDeviceSynchronize();

CudaUtils::Time::End();

dElaps = CudaUtils::Time::Duration<CudaUtils::Time::MS>();

cudaMemcpy(h_odata, d_odata, grid.x * sizeof(int), cudaMemcpyDeviceToHost);

size_t gpu_sum = 0;

for (int i = 0; i < grid.x; i++)

gpu_sum += h_odata[i];

printf("gpu Warmup: elapsed %.2f ms gpu_sum: %lld\n",

dElaps, gpu_sum);

// kernel 1: reduceNeighbored

cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice);

cudaDeviceSynchronize();

CudaUtils::Time::Start();

reduceNeighbored << <grid, block >> > (d_idata, d_odata, size);

cudaDeviceSynchronize();

CudaUtils::Time::End();

dElaps = CudaUtils::Time::Duration<CudaUtils::Time::MS>();

cudaMemcpy(h_odata, d_odata, grid.x * sizeof(int), cudaMemcpyDeviceToHost);

gpu_sum = 0;

for (int i = 0; i < grid.x; i++)

gpu_sum += h_odata[i];

printf("gpu Neighbored: elapsed %.2f ms gpu_sum: %lld\n",

dElaps, gpu_sum);

// kernel 2: reduceNeighboredLess - reduce thread bundle differentiation

cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice);

cudaDeviceSynchronize();

CudaUtils::Time::Start();

reduceNeighboredLess << <grid, block >> > (d_idata, d_odata, size);

cudaDeviceSynchronize();

CudaUtils::Time::End();

dElaps = CudaUtils::Time::Duration<CudaUtils::Time::MS>();

cudaMemcpy(h_odata, d_odata, grid.x * sizeof(int), cudaMemcpyDeviceToHost);

gpu_sum = 0;

for (int i = 0; i < grid.x; i++)

gpu_sum += h_odata[i];

printf("gpu NeighboredLess: elapsed %.2f ms gpu_sum: %lld\n",

dElaps, gpu_sum);

// kernel 3: reduceInterLeave - reduce thread bundle differentiation

cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice);

cudaDeviceSynchronize();

CudaUtils::Time::Start();

reduceInterLeave << <grid, block >> > (d_idata, d_odata, size);

cudaDeviceSynchronize();

CudaUtils::Time::End();

dElaps = CudaUtils::Time::Duration<CudaUtils::Time::MS>();

cudaMemcpy(h_odata, d_odata, grid.x * sizeof(int), cudaMemcpyDeviceToHost);

gpu_sum = 0;

for (int i = 0; i < grid.x; i++)

gpu_sum += h_odata[i];

printf("gpu InterLeave: elapsed %.2f ms gpu_sum: %lld\n",

dElaps, gpu_sum);

//free host memory

free(h_idata);

free(h_odata);

//free device memory

cudaFree(d_idata);

cudaFree(d_odata);

system("pause");

return 0;

}

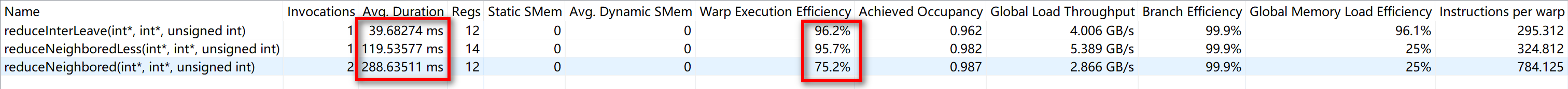

3. Result analysis

In terms of running time, the reduceneighbore kernel function is the slowest (the line bundle has the lowest execution efficiency), and the reduceInterLeave kernel function is the fastest (the line bundle has the highest execution efficiency).

In terms of running time, the reduceneighbore kernel function is the slowest (the line bundle has the lowest execution efficiency), and the reduceInterLeave kernel function is the fastest (the line bundle has the highest execution efficiency).

summary

There are many indicators to measure parallelism. In addition to those described above, there are many other indicators. By balancing multiple indicators and evaluating parallelism, we can get an approximately optimal grid and module size; Through the following cases, we can find that the optimal parallel ability is not necessarily the best in every measure.

reference material

CUDA C programming authority Guide