Catalogue of Series Articles

Preface

Here we share the third article on memory management: zero copy memory.

1. Zero Copy Memory Related Knowledge Points

In the CUDA knowledge previously learned, the host can not directly access the device variables, it needs to pass through c u d a M e m c p y cudaMemcpy The cudaMemcpy function implements data copying between the host and the device, although the device cannot directly access host variables.

The exception is zero-copy memory, which is accessible by both the host and the device.

Note that zero-copy memory is equivalent to a single block of memory that is separated from global memory, using fixed memory technology to achieve zero-copy memory.

The advantages of using zero copy memory in CUDA kernel functions are as follows:

(1) Host memory is available when the device is out of memory

(2) Avoid display data transfer between host and device

(3) Increase PCIe transfer rate

Since both the device and the host can access zero-copy memory data, attention should be paid to synchronization issues to avoid the host and the device changing zero-copy memory data at the same time, otherwise dirty data will be generated.

The technology of zero-copy memory relies mainly on fixed memory (non-paging), which maps to the device address space. A mapping to fixed memory can be created using the following functions:

c

u

d

a

E

r

r

o

r

_

t

c

u

d

a

H

o

s

t

A

l

l

o

c

(

v

o

i

d

∗

∗

p

H

o

s

t

,

s

i

z

e

_

t

c

o

u

n

t

,

u

n

s

i

g

n

e

d

i

n

t

f

l

a

g

s

)

;

cudaError\_t\ cudaHostAlloc(void\ **pHost,\ size\_t\ count,\ unsigned\ int\ flags);

cudaError_t cudaHostAlloc(void ∗∗pHost, size_t count, unsigned int flags);

Zero copy memory required c u d a F r e e H o s t cudaFreeHost The cudaFreeHost function is released.

flags that allocate zero copy memory are listed below:

(1)cudaHostAllocDefault

The behavior of the cudaHostAlloc function is consistent with that of the cudaMallocHost function, which allocates a fixed memory function.

(2)cudaHostAllocPortable

Enables a function to return fixed memory that can be used by all CUDA contexts, not just the one that performs memory allocation.

(3)cudaHostAllocWriteCombined

Make the function return to write-bound memory, which can be configured on some systems (which system configurations?) It is faster to transmit over the PCIe bus, but it cannot be read effectively on most hosts.

(4)cudaHostAllocMapped

This is the most obvious flag of zero copy memory, enabling host writes and device reads to be mapped to host memory in the device address space.

Device pointers mapped to fixed memory can be obtained through the following functions:

c

u

d

a

E

r

r

o

r

_

t

c

u

d

a

H

o

s

t

G

e

t

D

e

v

i

c

e

P

o

i

n

t

e

r

(

v

o

i

d

∗

∗

p

D

e

v

i

c

e

,

v

o

i

d

∗

p

H

o

s

t

,

u

n

s

i

g

n

e

d

i

n

t

f

l

a

g

s

)

;

cudaError\_t cudaHostGetDevicePointer(void\ **pDevice,\ void\ *pHost,\ unsigned\ int\ flags);

cudaError_tcudaHostGetDevicePointer(void ∗∗pDevice, void ∗pHost, unsigned int flags);

The above function returns a device pointer that can be referenced on the device to access the mapped fixed host memory.

Note that these functions will fail if the device does not support mapped fixed memory.

Using zero-copy memory as a supplement to device memory can significantly reduce performance during frequent read and write operations. Because each transfer mapped to memory must go through the PCIe bus. Latency is also significantly increased compared to global memory.

2. Example zero copy memory

1. Code implementation

#include <iostream>

#include <cuda.h>

#include <cuda_runtime.h>

#include <device_launch_parameters.h>

#include <device_functions.h>

void InitData(float* data, size_t nElem)

{

for (size_t i = 0; i < nElem; i++)

{

data[i] = i % 255;

}

}

void SumArraysOnHost(float* h_A, float* h_B, float* hostRef, size_t nElem)

{

for (size_t i = 0; i < nElem; i++)

{

hostRef[i] = h_A[i] + h_B[i];

}

}

void CheckResults(float* hostRef, float* gpuRef, size_t nElem)

{

bool bSame = true;

for (size_t i = 0; i < nElem; i++)

{

if (abs(gpuRef[i] - hostRef[i]) > 1e-5)

{

bSame = false;

}

}

if (bSame)

{

printf("Result is correct!\n");

}

else

{

printf("Result is error!\n");

}

}

__global__ void GpuSumArrays(float* d_A, float* d_B, float* d_C, size_t nElem)

{

int tid = blockDim.x * blockIdx.x + threadIdx.x;

if (tid < nElem)

d_C[tid] = d_A[tid] + d_B[tid];

}

int main()

{

int nDev = 0;

cudaSetDevice(nDev);

cudaDeviceProp stDeviceProp;

cudaGetDeviceProperties(&stDeviceProp, nDev);

//check whether support mapped memory

if (!stDeviceProp.canMapHostMemory)

{

printf("Device %d does not support mapping CPU host memory!\n", nDev);

goto EXIT;

}

printf("Using device %d: %s\n", nDev, stDeviceProp.name);

// set up data size of vector

int nPower = 10;

int nElem = 1 << nPower;

size_t nBytes = nElem * sizeof(float);

if (nPower < 18) {

printf("Vector size %d power %d nbytes %3.0f KB\n",

nElem, nPower, (float)nBytes / (1024.0f));

}

else {

printf("Vector size %d power %d nbytes %3.0f MB\n",

nElem, nPower, (float)nBytes / (1024.0f * 1024.0f));

}

// part 1: using device memory

// malloc host memory

float *h_A, *h_B, *hostRef, *gpuRef;

h_A = (float*)malloc(nBytes);

h_B = (float*)malloc(nBytes);

hostRef = (float*)malloc(nBytes);

gpuRef = (float*)malloc(nBytes);

// initialize data at host side

InitData(h_A, nElem);

InitData(h_B, nElem);

memset(hostRef, 0, nBytes);

memset(gpuRef, 0, nBytes);

// add vector at host side for result checks

SumArraysOnHost(h_A, h_B, hostRef, nElem);

// malloc device global memory

float* d_A, *d_B, *d_C;

cudaMalloc(&d_A, nBytes);

cudaMalloc(&d_B, nBytes);

cudaMalloc(&d_C, nBytes);

//transfer data from host to device

cudaMemcpy(d_A, h_A, nBytes, cudaMemcpyHostToDevice);

cudaMemcpy(d_B, h_B, nBytes, cudaMemcpyHostToDevice);

// set up execution configuration

int nLen = 512;

dim3 block(nLen);

dim3 grid((nElem + block.x - 1) / block.x);

//invoke kernel at host side

GpuSumArrays << <grid, block >> > (d_A, d_B, d_C, nElem);

//copy kernel result back to host side

cudaMemcpy(gpuRef, d_C, nBytes, cudaMemcpyDeviceToHost);

//check device results

CheckResults(hostRef, gpuRef, nElem);

// free device globl memory

cudaFree(d_A);

cudaFree(d_B);

free(h_A);

free(h_B);

// part2: using zerocopy memory for array A and B

// allocate zerocpy memory

unsigned int nFlags = cudaHostAllocMapped;

cudaHostAlloc(&h_A, nBytes, nFlags);

cudaHostAlloc(&h_B, nBytes, nFlags);

// initialize data at host side

InitData(h_A, nElem);

InitData(h_B, nElem);

memset(hostRef, 0, nBytes);

memset(gpuRef, 0, nBytes);

// pass the pointer to device

cudaHostGetDevicePointer(&d_A, h_A, 0);

cudaHostGetDevicePointer(&d_B, h_B, 0);

// add at host side for result checks

SumArraysOnHost(h_A, h_B, hostRef, nElem);

//execute kernle with zero copy memory

GpuSumArrays << <grid, block >> > (d_A, d_B, d_C, nElem);

//copy kernel result back to host side

cudaMemcpy(gpuRef, d_C, nBytes, cudaMemcpyDeviceToHost);

//check device results

CheckResults(hostRef, gpuRef, nElem);

// free memory

cudaFree(d_C);

cudaFreeHost(h_A);

cudaFreeHost(h_B);

free(hostRef);

free(gpuRef);

EXIT:

cudaDeviceReset();

system("pause");

return 0;

}

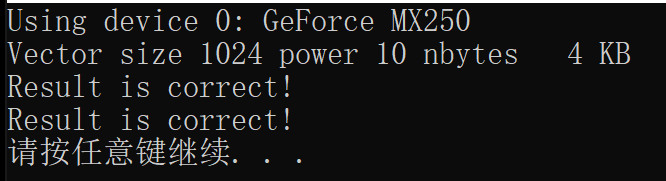

2. Running results

summary

Keep in mind that zero-copy memory is not suitable for frequent read-write memory operations and reduces performance.

Reference material

CUDA C Programming Authoritative Guide