1. Data analysis

1.1 problems

This case is a word-frequency analysis exercise:

- Use the client to create the input directory on HDFS

- Upload the *.txt files to the input directory

- Have the cluster analyze the uploaded files and find the words with the most occurrences

1.2 steps

To implement this case, follow the steps below.

Step 1: word frequency statistics

[root@hadoop1 hadoop]# ./bin/hadoop fs -ls / //view the root of the cluster file system; it is empty at first

[root@hadoop1 hadoop]# ./bin/hadoop fs -mkdir /aaa //create the aaa directory in the cluster file system

[root@hadoop1 hadoop]# ./bin/hadoop fs -ls / //check again; the aaa directory just created is listed

Found 1 items

drwxr-xr-x - root supergroup 0 2018-09-10 09:56 /aaa

[root@hadoop1 hadoop]# ./bin/hadoop fs -touchz /fa //create an empty file fa in the cluster file system

[root@hadoop1 hadoop]# ./bin/hadoop fs -put *.txt /aaa //upload the *.txt files to the aaa directory in the cluster file system

[root@hadoop1 hadoop]# ./bin/hadoop fs -ls /aaa //view the uploaded files

Found 3 items

-rw-r--r-- 2 root supergroup 86424 2018-09-10 09:58 /aaa/LICENSE.txt

-rw-r--r-- 2 root supergroup 14978 2018-09-10 09:58 /aaa/NOTICE.txt

-rw-r--r-- 2 root supergroup 1366 2018-09-10 09:58 /aaa/README.txt

[root@hadoop1 hadoop]# ./bin/hadoop fs -get /aaa //download the aaa directory from the cluster file system

[root@hadoop1 hadoop]# ./bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.7.jar wordcount /aaa /bbb //run the wordcount job on the data in /aaa and store the result in /bbb

[root@hadoop1 hadoop]# ./bin/hadoop fs -cat /bbb/* //view the result stored in the cluster
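The task asks for the words with the most occurrences, while the last command only prints the raw job output. A minimal sketch of the ranking step, assuming the wordcount result keeps its default `word<TAB>count` line format; `top_words` is a hypothetical helper name introduced here, not part of Hadoop:

```shell
# top_words N: read "word<TAB>count" lines on stdin and print the N lines
# with the highest counts (N defaults to 10).
top_words() (
    tab=$(printf '\t')
    # Sort numerically, descending, on the second tab-separated field.
    sort -t "$tab" -k2,2nr | head -n "${1:-10}"
)

# Assumed usage on hadoop1, from /usr/local/hadoop:
#   ./bin/hadoop fs -cat '/bbb/part-r-*' | top_words 10
```

The sorting is done on the client because the example job only counts; ranking a small result file locally avoids writing a second MapReduce job.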

2. Node expansion

2.1 problems

This case requires node expansion:

- Minimum configuration: 2CPU, 2G memory, 10G hard disk

- Virtual machine IP: 192.168.1.54 newnode

- Add datanode and nodemanager

2.2 scheme

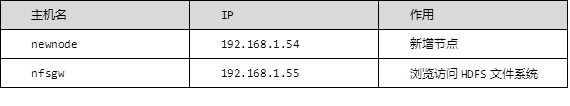

In addition, two hosts, newnode and nfsgw, are prepared as the newly added node and the NFS gateway, respectively. The specific requirements are shown in Table-2:

Table-2

2.3 steps

To implement this case, follow the steps below.

Step 1: add nodes

1) Add a new node newnode

[root@hadoop5 ~]# echo newnode > /etc/hostname //change the hostname to newnode

[root@hadoop5 ~]# hostname newnode

[root@newnode ~]# yum -y install java-1.8.0-openjdk-devel

[root@newnode ~]# mkdir /var/hadoop

[root@hadoop1 .ssh]# ssh-copy-id 192.168.1.54

[root@hadoop1 .ssh]# vim /etc/hosts

192.168.1.50 hadoop1

192.168.1.51 node-0001

192.168.1.52 node-0002

192.168.1.53 node-0003

192.168.1.54 newnode

[root@hadoop1 .ssh]# scp /etc/hosts 192.168.1.54:/etc/

[root@hadoop1 ~]# cd /usr/local/hadoop/

[root@hadoop1 hadoop]# vim ./etc/hadoop/slaves

node-0001

node-0002

node-0003

newnode

[root@hadoop1 hadoop]# for i in {51..54}; do rsync -aSH --delete /usr/local/hadoop/ 192.168.1.$i:/usr/local/hadoop/ -e 'ssh' & done //synchronize the configuration

[1] 1841

[2] 1842

[3] 1843

[4] 1844
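The loop above starts the four rsync jobs in the background and returns at once, so the next step can begin before the copies have finished. A sketch of the same loop that waits for every job and reports failures, assuming the same rsync options are wanted; `sync_config` and the `SYNC_CMD` override are hypothetical names introduced here so the loop can be dry-run:

```shell
# sync_config HOST...: push /usr/local/hadoop/ to each host in parallel,
# wait for all transfers, and succeed only if every one succeeded.
sync_config() (
    sync_cmd=${SYNC_CMD:-rsync}   # hypothetical override, e.g. SYNC_CMD=echo
    pids=""
    for host in "$@"; do
        "$sync_cmd" -aSH --delete -e ssh \
            /usr/local/hadoop/ "${host}:/usr/local/hadoop/" &
        pids="$pids $!"
    done
    failed=0
    for pid in $pids; do
        # wait returns the exit status of the given background job.
        wait "$pid" || failed=$((failed + 1))
    done
    [ "$failed" -eq 0 ] || { echo "$failed sync job(s) failed" >&2; false; }
)

# Assumed usage on hadoop1:
#   sync_config 192.168.1.51 192.168.1.52 192.168.1.53 192.168.1.54
```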

[root@newnode ~]# cd /usr/local/hadoop/

[root@newnode hadoop]# ./sbin/hadoop-daemon.sh start datanode //start the datanode

2) View status

[root@newnode hadoop]# jps

24439 Jps

24351 DataNode

3) Set synchronization bandwidth

[root@newnode hadoop]# ./bin/hdfs dfsadmin -setBalancerBandwidth 60000000

Balancer bandwidth is set to 60000000

[root@newnode hadoop]# ./sbin/start-balancer.sh
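The value passed to `-setBalancerBandwidth` is in bytes per second for each datanode, so 60000000 corresponds to 60 MB/s. A small sketch for deriving the number from a rate in MB/s, assuming decimal megabytes; `mb_per_sec_to_bytes` is a hypothetical helper introduced here:

```shell
# mb_per_sec_to_bytes MB: convert a rate in MB/s (10^6 bytes) to bytes/s.
mb_per_sec_to_bytes() {
    echo "$(( $1 * 1000000 ))"
}

# Assumed usage, from /usr/local/hadoop:
#   ./bin/hdfs dfsadmin -setBalancerBandwidth "$(mb_per_sec_to_bytes 60)"
```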

3. Reduce cluster nodes

3.1 problems

This case requires cluster reduction:

- Delete the newnode node that just joined the cluster from the cluster

- To observe the three decommission states (Normal, Decommission in progress, Decommissioned), first upload some files to HDFS

- Record the amount of data for each host, and then perform data migration

3.2 steps

To implement this case, follow the steps below.

Step 1: reduce cluster size

1) Delete node

[root@hadoop1 hadoop]# vim /usr/local/hadoop/etc/hadoop/slaves

//Remove the previously added newnode

node-0001

node-0002

node-0003

[root@hadoop1 hadoop]# vim /usr/local/hadoop/etc/hadoop/hdfs-site.xml

//Add the following four lines to this configuration file

<property>

<name>dfs.hosts.exclude</name>

<value>/usr/local/hadoop/etc/hadoop/exclude</value>

</property>

[root@hadoop1 hadoop]# vim /usr/local/hadoop/etc/hadoop/exclude

newnode

2) Migrate the data

[root@hadoop1 hadoop]# ./bin/hdfs dfsadmin -refreshNodes

Refresh nodes successful

[root@hadoop1 hadoop]# ./bin/hdfs dfsadmin -report

//Check that the new node shows Decommissioned

Name: 192.168.1.54:50010 (newnode)

Hostname: newnode

Decommission Status : Decommissioned

Configured Capacity: 2135949312 (1.99 GB)

DFS Used: 4096 (4 KB)

Non DFS Used: 1861509120 (1.73 GB)

DFS Remaining: 274436096 (261.72 MB)

DFS Used%: 0.00%

DFS Remaining%: 12.85%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Tue Mar 05 17:17:09 CST 2019
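While data is being migrated, the node's status moves from Normal through Decommission in progress to Decommissioned, and the datanode should only be stopped once the last state is reached. A sketch that extracts the status of one host from the report text, assuming the `Hostname:` line precedes the `Decommission Status` line in each block as in the output above; `decommission_status` is a hypothetical helper introduced here:

```shell
# decommission_status HOST: read `hdfs dfsadmin -report` text on stdin and
# print the "Decommission Status" value reported for HOST.
decommission_status() {
    awk -v host="$1" '
        $1 == "Hostname:" { current = $2 }
        /^Decommission Status/ && current == host {
            sub(/^[^:]*:[ \t]*/, "")   # drop the "Decommission Status : " label
            print
        }
    '
}

# Assumed usage on hadoop1: poll until the node is fully decommissioned.
#   until [ "$(./bin/hdfs dfsadmin -report | decommission_status newnode)" = "Decommissioned" ]; do
#       sleep 10
#   done
```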

[root@newnode hadoop]# ./sbin/hadoop-daemon.sh stop datanode //stop the datanode

stopping datanode

[root@newnode hadoop]# ./sbin/yarn-daemon.sh start nodemanager

//yarn adds nodemanager

[root@newnode hadoop]# ./sbin/yarn-daemon.sh stop nodemanager //stop the nodemanager

stopping nodemanager

[root@newnode hadoop]# ./bin/yarn node -list

//yarn still lists newnode right after the nodemanager is stopped; the entry disappears after a while

Total Nodes:4

Node-Id Node-State Node-Http-Address Number-of-Running-Containers

node-0003:34628 RUNNING node-0003:8042 0

node-0002:36300 RUNNING node-0002:8042 0

newnode:42459 RUNNING newnode:8042 0

node-0001:39196 RUNNING node-0001:8042

4. Create an account and authorize

4.1 problems

This case requires:

- Add user nfsuser in namenode and nfsgw

- Complete HDFS cluster authorization for nfsuser

- Minimum configuration: 1cpu, 1G memory, 10G hard disk

- Virtual machine IP: 192.168.1.55 nfsgw

4.2 steps

To implement this case, follow the steps below.

Step 1: basic preparation

1) Change the host name and configure /etc/hosts (/etc/hosts is configured on both hadoop1 and nfsgw)

[root@localhost ~]# echo nfsgw > /etc/hostname

[root@localhost ~]# hostname nfsgw

[root@hadoop1 hadoop]# vim /etc/hosts

192.168.1.50 hadoop1

192.168.1.51 node-0001

192.168.1.52 node-0002

192.168.1.53 node-0003

192.168.1.54 newnode

192.168.1.55 nfsgw

2) Create the proxy user (on both hadoop1 and nfsgw; hadoop1 is shown as the example)

[root@hadoop1 hadoop]# groupadd -g 800 nfsuser

[root@hadoop1 hadoop]# useradd -u 800 -g 800 -r -d /var/hadoop nfsuser
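The commands above pin the UID and GID to 800 so that nfsuser is identical on hadoop1 and nfsgw. A sketch of a consistency check, assuming the `id` results of each host have been collected as `host uid gid` lines; `check_ids` is a hypothetical helper, and ssh access from hadoop1 to both hosts is an assumption of the usage comment:

```shell
# check_ids UID GID: read "host uid gid" lines on stdin and succeed only if
# every host reports the expected UID and GID; mismatches go to stderr.
check_ids() {
    awk -v uid="$1" -v gid="$2" '
        $2 != uid || $3 != gid { print "mismatch on " $1 > "/dev/stderr"; bad = 1 }
        END { exit bad }
    '
}

# Assumed usage on hadoop1:
#   for h in hadoop1 nfsgw; do
#       echo "$h $(ssh "$h" id -u nfsuser) $(ssh "$h" id -g nfsuser)"
#   done | check_ids 800 800
```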

3) Configure core-site.xml

[root@hadoop1 hadoop]# ./sbin/stop-all.sh //stop all services

This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh

Stopping namenodes on [hadoop1]

hadoop1: stopping namenode

node-0002: stopping datanode

newnode: no datanode to stop

node-0003: stopping datanode

node-0001: stopping datanode

Stopping secondary namenodes [hadoop1]

hadoop1: stopping secondarynamenode

stopping yarn daemons

stopping resourcemanager

node-0002: stopping nodemanager

node-0003: stopping nodemanager

newnode: no nodemanager to stop

node-0001: stopping nodemanager

...

[root@hadoop1 hadoop]# cd etc/hadoop

[root@hadoop1 hadoop]# >exclude

[root@hadoop1 hadoop]# vim core-site.xml

<property>

<name>hadoop.proxyuser.nfsuser.groups</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.nfsuser.hosts</name>

<value>*</value>

</property>

4) Synchronize the configuration to node-0001, node-0002, node-0003

[root@hadoop1 hadoop]# for i in {51..53}; do rsync -aSH --delete /usr/local/hadoop/ 192.168.1.$i:/usr/local/hadoop/ -e 'ssh' & done

[4] 2722

[5] 2723

[6] 2724

5) Start cluster

[root@hadoop1 hadoop]# /usr/local/hadoop/sbin/start-dfs.sh

6) View status

[root@hadoop1 hadoop]# /usr/local/hadoop/bin/hdfs dfsadmin -report

5. Run the gateway service on nfsgw

5.1 problems

This case requires running the gateway service on nfsgw:

- Hadoop portmap

- Hadoop nfs3

5.2 steps

To implement this case, follow the steps below.

Step 2: NFSGW configuration

1) Uninstall rpcbind and nfs-utils

[root@nfsgw ~]# yum remove -y rpcbind nfs-utils

2) Install java-1.8.0-openjdk-devel and rsync

[root@nfsgw ~]# yum -y install java-1.8.0-openjdk-devel

[root@hadoop1 hadoop]# rsync -avSH --delete /usr/local/hadoop/ 192.168.1.55:/usr/local/hadoop/ -e 'ssh'

3) Create the data root directory /var/hadoop (on the NFSGW host)

[root@nfsgw ~]# mkdir /var/hadoop

4) Create the dump directory and give nfsuser ownership of it

[root@nfsgw ~]# mkdir /var/nfstmp

[root@nfsgw ~]# chown nfsuser:nfsuser /var/nfstmp

5) Grant nfsuser access to /usr/local/hadoop/logs (on the NFSGW host)

[root@nfsgw ~]# setfacl -m user:nfsuser:rwx /usr/local/hadoop/logs

[root@nfsgw ~]# vim /usr/local/hadoop/etc/hadoop/hdfs-site.xml

<property>

<name>nfs.exports.allowed.hosts</name>

<value>* rw</value>

</property>

<property>

<name>nfs.dump.dir</name>

<value>/var/nfstmp</value>

</property>

6) Verify that nfsuser can create and delete files in both directories

[root@nfsgw ~]# su - nfsuser

[nfsuser@nfsgw ~]$ cd /var/nfstmp/

[nfsuser@nfsgw nfstmp]$ touch 1

[nfsuser@nfsgw nfstmp]$ ls

1

[nfsuser@nfsgw nfstmp]$ rm -rf 1

[nfsuser@nfsgw nfstmp]$ ls

[nfsuser@nfsgw nfstmp]$ cd /usr/local/hadoop/logs/

[nfsuser@nfsgw logs]$ touch 1

[nfsuser@nfsgw logs]$ ls

1 hadoop-root-secondarynamenode-hadoop1.log yarn-root-resourcemanager-hadoop1.log hadoop-root-namenode-hadoop1.log hadoop-root-secondarynamenode-hadoop1.out yarn-root-resourcemanager-hadoop1.out hadoop-root-namenode-hadoop1.out hadoop-root-secondarynamenode-hadoop1.out.1 hadoop-root-namenode-hadoop1.out.1 SecurityAuth-root.audit

[nfsuser@nfsgw logs]$ rm -rf 1

[nfsuser@nfsgw logs]$ ls

7) Start service

[root@nfsgw ~]# /usr/local/hadoop/sbin/hadoop-daemon.sh --script ./bin/hdfs start portmap //the portmap service can only be started by the root user

starting portmap, logging to /usr/local/hadoop/logs/hadoop-root-portmap-nfsgw.out

[root@nfsgw ~]# jps

23714 Jps

23670 Portmap

[root@nfsgw ~]# su - nfsuser

Last login: Mon Sep 10 12:31:58 CST 2018 on pts/0

[nfsuser@nfsgw ~]$ cd /usr/local/hadoop/

[nfsuser@nfsgw hadoop]$ ./sbin/hadoop-daemon.sh --script ./bin/hdfs start nfs3 //nfs3 can only be started by the proxy user

starting nfs3, logging to /usr/local/hadoop/logs/hadoop-nfsuser-nfs3-nfsgw.out

[nfsuser@nfsgw hadoop]$ jps

1362 Jps

1309 Nfs3

[root@nfsgw hadoop]# jps //the root user can see both portmap and nfs3

1216 Portmap

1309 Nfs3

1374 Jps

6. Mount NFS

6.1 problems

This case requires:

- Mount NFS on newnode and have it mounted automatically at boot

- Think about how high availability of NFS could be achieved

6.2 steps

To implement this case, follow the steps below.

Step 2: NFSGW test

1) Mount on the client (newnode can serve as the client host)

[root@newnode ~]# rm -rf /usr/local/hadoop

[root@newnode ~]# yum -y install nfs-utils

[root@newnode ~]# mount -t nfs -o vers=3,proto=tcp,nolock,noatime,sync,noacl 192.168.1.55:/ /mnt/ //mount

[root@newnode ~]# cd /mnt/

[root@newnode mnt]# ls

aaa bbb fa system tmp

[root@newnode mnt]# touch a

[root@newnode mnt]# ls

a aaa bbb fa system tmp

[root@newnode mnt]# rm -rf a

[root@newnode mnt]# ls

aaa bbb fa system tmp

2) Mount automatically at boot

[root@newnode ~]# vim /etc/fstab

192.168.1.55:/ /mnt/ nfs vers=3,proto=tcp,nolock,noatime,sync,noacl,_netdev 0 0

[root@newnode ~]# mount -a

[root@newnode ~]# df -h

192.168.1.55:/ 64G 6.2G 58G 10% /mnt

[root@newnode ~]# rpcinfo -p 192.168.1.55

program vers proto port service

100005 3 udp 4242 mountd

100005 1 tcp 4242 mountd

100000 2 udp 111 portmapper

100000 2 tcp 111 portmapper

100005 3 tcp 4242 mountd

100005 2 tcp 4242 mountd

100003 3 tcp 2049 nfs

100005 2 udp 4242 mountd

100005 1 udp 4242 mountd
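The listing above is what a working gateway looks like: portmapper, mountd and nfs are all registered. A sketch that turns this into a pass/fail check, assuming the five-column `rpcinfo -p` layout shown above; `gateway_services_ok` is a hypothetical helper introduced here:

```shell
# gateway_services_ok: read `rpcinfo -p` output on stdin and succeed only if
# portmapper, mountd and nfs are all registered.
gateway_services_ok() {
    awk '
        $5 == "portmapper" { pm = 1 }
        $5 == "mountd"     { md = 1 }
        $5 == "nfs"        { nfs = 1 }
        END { exit !(pm && md && nfs) }
    '
}

# Assumed usage on newnode:
#   rpcinfo -p 192.168.1.55 | gateway_services_ok && echo "gateway ready"
```

If the check fails, the missing service usually points at which of the two daemons (portmap started by root, nfs3 started by the proxy user) did not come up.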

Exercise

1 How to view the root of the Hadoop cluster file system and how to create a directory in it

View

[root@nn01 hadoop]# /usr/local/hadoop/bin/hadoop fs -ls /

Create

[root@nn01 hadoop]# /usr/local/hadoop/bin/hadoop fs -mkdir /aaa

2 How to add a new node

1) Add a new node node4

[root@hadoop5 ~]# echo node4 > /etc/hostname //change the hostname to node4

[root@hadoop5 ~]# hostname node4

[root@node4 ~]# yum -y install rsync

[root@node4 ~]# yum -y install java-1.8.0-openjdk-devel

[root@node4 ~]# mkdir /var/hadoop

[root@nn01 .ssh]# ssh-copy-id 192.168.1.25

[root@nn01 .ssh]# vim /etc/hosts

192.168.1.21 nn01

192.168.1.22 node1

192.168.1.23 node2

192.168.1.24 node3

192.168.1.25 node4

[root@nn01 .ssh]# scp /etc/hosts 192.168.1.25:/etc/

[root@nn01 ~]# cd /usr/local/hadoop/

[root@nn01 hadoop]# vim ./etc/hadoop/slaves

node1

node2

node3

node4

[root@nn01 hadoop]# for i in {22..25}; do rsync -aSH --delete /usr/local/hadoop/ 192.168.1.$i:/usr/local/hadoop/ -e 'ssh' & done //synchronize the configuration

[1] 1841

[2] 1842

[3] 1843

[4] 1844

[root@node4 hadoop]# ./sbin/hadoop-daemon.sh start datanode //start the datanode

2) View status

[root@node4 hadoop]# jps

24439 Jps

24351 DataNode

3 How to set the synchronization bandwidth

[root@node4 hadoop]# ./bin/hdfs dfsadmin -setBalancerBandwidth 60000000

Balancer bandwidth is set to 60000000

[root@node4 hadoop]# ./sbin/start-balancer.sh

4 What to pay attention to when starting NFS

The portmap service can only be started by the root user, and nfs3 can only be started by the proxy user. When root runs jps, both Portmap and Nfs3 are visible; when the proxy user runs jps, Portmap is not visible.

[root@nfsgw ~]# /usr/local/hadoop/sbin/hadoop-daemon.sh --script ./bin/hdfs start portmap //the portmap service can only be started by the root user

starting portmap, logging to /usr/local/hadoop/logs/hadoop-root-portmap-nfsgw.out

[root@nfsgw ~]# jps

23714 Jps

23670 Portmap

[root@nfsgw ~]# su - nfs

Last login: Mon Sep 10 12:31:58 CST 2018 on pts/0

[nfs@nfsgw ~]$ cd /usr/local/hadoop/

[nfs@nfsgw hadoop]$ ./sbin/hadoop-daemon.sh --script ./bin/hdfs start nfs3 //nfs3 can only be started by the proxy user

starting nfs3, logging to /usr/local/hadoop/logs/hadoop-nfs-nfs3-nfsgw.out

[nfs@nfsgw hadoop]$ jps

1362 Jps

1309 Nfs3

[root@nfsgw hadoop]# jps //the root user can see both portmap and nfs3

1216 Portmap

1309 Nfs3

1374 Jps
