Construction of Credit Card Scoring Model

Background description

The available data describes each borrower: age, the total balance on credit cards and personal lines of credit, the number of times the borrower was past due in the last two years, monthly income, debt ratio, number of dependents, and other information. The goal is to use this information to build a risk-control credit scoring model that predicts whether a borrower will become overdue.

I. Importing Libraries and Data

Import the required libraries

import datetime

import pandas as pd

import numpy as np

import os

import seaborn as sns

import re

import matplotlib.pyplot as plt

import warnings

warnings.filterwarnings('ignore')  # suppress warnings for the rest of the notebook

sns.set(style="darkgrid")

plt.rcParams['font.sans-serif'] = ['SimHei']

plt.rcParams['axes.unicode_minus'] = False

time: 1.57 s

Import data

train = pd.read_csv('/home/kesci/input/kaggle4396/cs-training.csv')

test = pd.read_csv('/home/kesci/input/kaggle4396/cs-test.csv')

time: 248 ms

train.drop(columns=["Unnamed: 0"], inplace=True)

test.drop(columns=["Unnamed: 0"], inplace=True)

time: 9.83 ms

Data dimension

train.shape

(150000, 11)

time: 3.97 ms

Are there missing values?

train.isnull().sum()

SeriousDlqin2yrs 0

RevolvingUtilizationOfUnsecuredLines 0

age 0

NumberOfTime30-59DaysPastDueNotWorse 0

DebtRatio 0

MonthlyIncome 29731

NumberOfOpenCreditLinesAndLoans 0

NumberOfTimes90DaysLate 0

NumberRealEstateLoansOrLines 0

NumberOfTime60-89DaysPastDueNotWorse 0

NumberOfDependents 3924

dtype: int64

time: 30.9 ms
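Raw counts are easier to judge as a share of the rows: in the output above, 29731 of 150000 rows (about 19.8%) lack MonthlyIncome and 3924 (about 2.6%) lack NumberOfDependents. A minimal sketch of computing both views at once, assuming a small hypothetical frame `demo` in place of `train`:

```python
import numpy as np
import pandas as pd

# Hypothetical toy frame standing in for `train`
demo = pd.DataFrame({
    "MonthlyIncome": [9120.0, np.nan, 3042.0, np.nan],
    "NumberOfDependents": [2.0, 1.0, np.nan, 0.0],
    "age": [45, 40, 38, 30],
})

# Count and percentage of missing values per column, largest first
missing = pd.DataFrame({
    "n_missing": demo.isnull().sum(),
    "pct_missing": demo.isnull().mean() * 100,
}).sort_values("n_missing", ascending=False)
print(missing)
```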

Are there duplicate rows?

train.duplicated().sum()

609

time: 61.2 ms

Overall distribution

train.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 150000 entries, 0 to 149999

Data columns (total 11 columns):

SeriousDlqin2yrs 150000 non-null int64

RevolvingUtilizationOfUnsecuredLines 150000 non-null float64

age 150000 non-null int64

NumberOfTime30-59DaysPastDueNotWorse 150000 non-null int64

DebtRatio 150000 non-null float64

MonthlyIncome 120269 non-null float64

NumberOfOpenCreditLinesAndLoans 150000 non-null int64

NumberOfTimes90DaysLate 150000 non-null int64

NumberRealEstateLoansOrLines 150000 non-null int64

NumberOfTime60-89DaysPastDueNotWorse 150000 non-null int64

NumberOfDependents 146076 non-null float64

dtypes: float64(4), int64(7)

memory usage: 12.6 MB

time: 32.2 ms

Look at the data.

train.head()

| | SeriousDlqin2yrs | RevolvingUtilizationOfUnsecuredLines | age | NumberOfTime30-59DaysPastDueNotWorse | DebtRatio | MonthlyIncome | NumberOfOpenCreditLinesAndLoans | NumberOfTimes90DaysLate | NumberRealEstateLoansOrLines | NumberOfTime60-89DaysPastDueNotWorse | NumberOfDependents |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 0 | 1 | 0.766127 | 45 | 2 | 0.802982 | 9120.0 | 13 | 0 | 6 | 0 | 2.0 |
| 1 | 0 | 0.957151 | 40 | 0 | 0.121876 | 2600.0 | 4 | 0 | 0 | 0 | 1.0 |
| 2 | 0 | 0.658180 | 38 | 1 | 0.085113 | 3042.0 | 2 | 1 | 0 | 0 | 0.0 |
| 3 | 0 | 0.233810 | 30 | 0 | 0.036050 | 3300.0 | 5 | 0 | 0 | 0 | 0.0 |
| 4 | 0 | 0.907239 | 49 | 1 | 0.024926 | 63588.0 | 7 | 0 | 1 | 0 | 0.0 |

time: 12.1 ms

cor=train.corr()

fig, ax = plt.subplots(figsize=(10, 10))

sns.heatmap(cor, xticklabels=cor.columns, yticklabels=cor.columns, annot=True, ax=ax);

time: 1.2 s

II. Data Preprocessing

train_clean = train.copy()

time: 6.31 ms

Duplicate removal

train_clean.drop_duplicates(inplace=True)

time: 198 ms

Missing Value Processing

Fill in missing values with the median

def fill_na(df):
    # Columns that contain at least one missing value
    na_list = df.columns[df.isnull().any()]
    for n in na_list:
        # Series.median() skips NaN by default
        df[n] = df[n].fillna(df[n].median())

time: 1.13 ms

fill_na(train_clean)

train_clean.isnull().sum()

SeriousDlqin2yrs 0

RevolvingUtilizationOfUnsecuredLines 0

age 0

NumberOfTime30-59DaysPastDueNotWorse 0

DebtRatio 0

MonthlyIncome 0

NumberOfOpenCreditLinesAndLoans 0

NumberOfTimes90DaysLate 0

NumberRealEstateLoansOrLines 0

NumberOfTime60-89DaysPastDueNotWorse 0

NumberOfDependents 0

dtype: int64

time: 360 ms
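The same median imputation can be written without mutating its input. A minimal sketch, assuming a hypothetical helper `fill_na_median` and a toy frame `demo` (neither is part of the original notebook):

```python
import numpy as np
import pandas as pd

def fill_na_median(df):
    """Return a copy of df with NaNs in numeric columns replaced by the column median."""
    out = df.copy()
    medians = out.median(numeric_only=True)  # NaNs are skipped by default
    # A Series passed to fillna is matched against the column labels
    return out.fillna(medians)

# Hypothetical toy data
demo = pd.DataFrame({
    "MonthlyIncome": [1000.0, np.nan, 3000.0, 5000.0],
    "NumberOfDependents": [0.0, 2.0, np.nan, 0.0],
})
filled = fill_na_median(demo)
print(filled)
```

Returning a copy keeps the raw frame available for comparison, which may be convenient when checking how much the imputation shifts a column's distribution.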

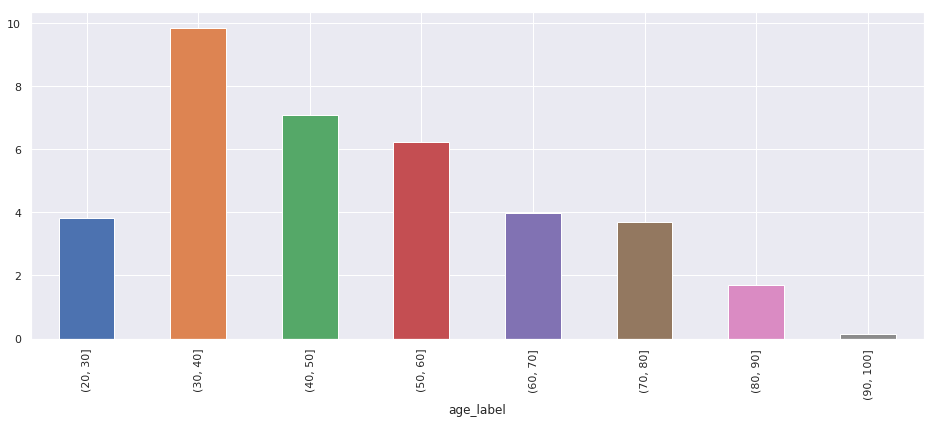

Age Distribution of Borrowers

plt.figure(figsize=(16, 6))

sns.distplot(train_clean["age"], color = "black");

time: 665 ms

train_clean["age_label"] = pd.cut(train_clean["age"], np.arange(20, 110, 10))

time: 9.82 ms

bins = [0, 30, 40, 50, 60, 70, 110]

labels = ['0-29', '30-39', '40-49', '50-59', '60-69', '70+']

train_clean['age_grouped'] = pd.cut(train_clean['age'], bins, right=0, labels=labels)

train_clean.drop(columns="age", inplace=True)

time: 13.2 ms
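How `pd.cut` treats bin edges determines which group a borrower aged exactly 30 or 70 falls into. A small sketch with made-up ages (the `ages` series is an assumption for illustration), using the same bins and labels as above:

```python
import pandas as pd

ages = pd.Series([21, 30, 39, 40, 69, 70, 101])  # hypothetical ages
bins = [0, 30, 40, 50, 60, 70, 110]
labels = ['0-29', '30-39', '40-49', '50-59', '60-69', '70+']

# right=False makes each interval closed on the left: [0, 30), [30, 40), ...
grouped = pd.cut(ages, bins, right=False, labels=labels)
print(grouped.tolist())
```

With `right=False`, an age equal to a bin edge goes into the bin that starts at that edge, which is what the labels imply. Values outside the outermost edges would become NaN.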

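def plot_age(col, fun):
    # Combine the selected column(s) with the age bucket
    data = pd.concat([train_clean[col], train_clean["age_label"]], axis=1)
    if fun == "s":      # sum per age bucket
        df = data.groupby("age_label")[col].sum()
    elif fun == "m":    # mean per age bucket
        df = data.groupby("age_label")[col].mean()
    else:
        raise ValueError("fun must be 's' or 'm'")
    df.plot(kind="bar", figsize=(16, 6))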

time: 1.14 ms

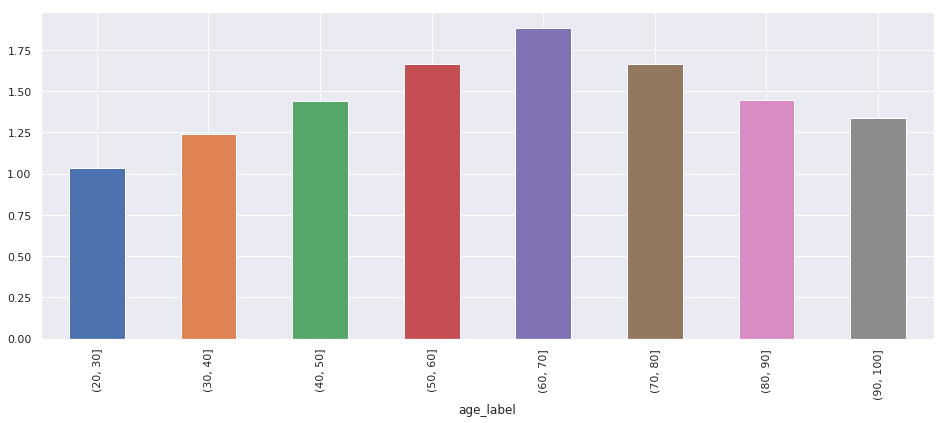

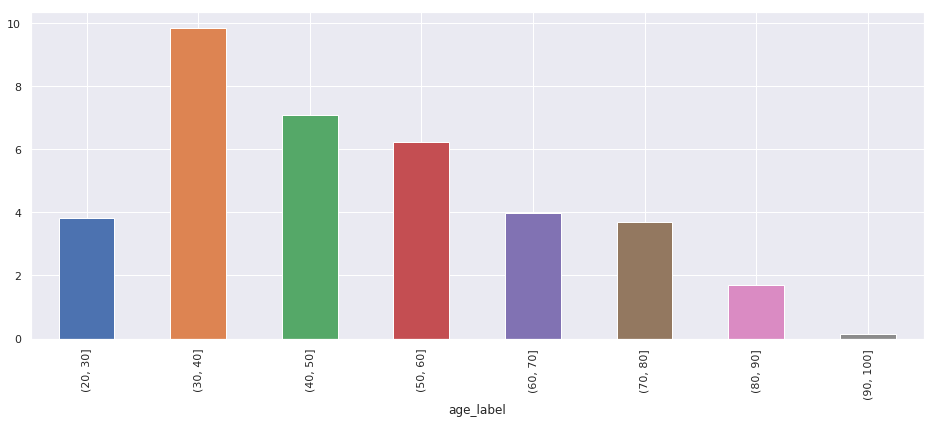

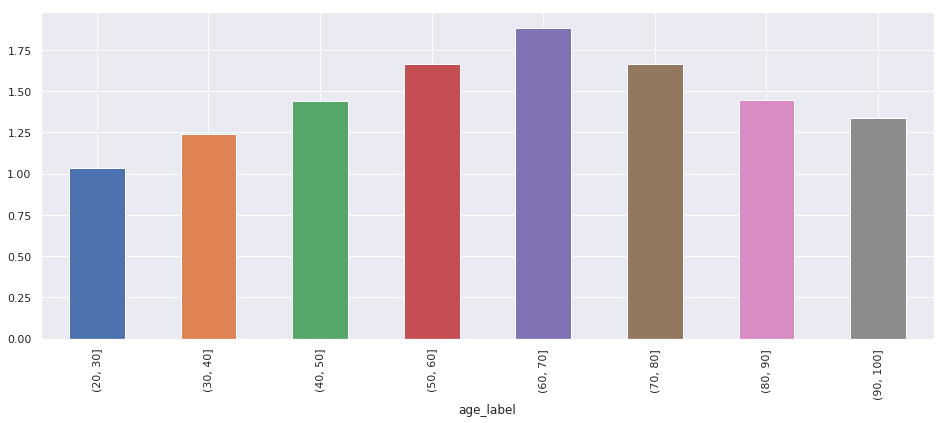

Total Balance on Credit Cards and Personal Credit Lines by Borrower Age

plot_age("RevolvingUtilizationOfUnsecuredLines", "m");

time: 294 ms

bins = [0, 0.15, 0.30, 0.45, 0.60, 0.75, 0.90, 1.05,

train_clean['RevolvingUtilizationOfUnsecuredLines'].max()*1.05]

labels = [

'0-0.15',

'0.15-0.30',

'0.30-0.45',

'0.45-0.60',

'0.60-0.75',

'0.75-0.90',

'0.90-1.05',

'1.05+']

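train_clean['ru_grouped'] = pd.cut(train_clean['RevolvingUtilizationOfUnsecuredLines'],
                                   bins, right=False, labels=labels)

# The binned column is dropped again here, so the model is trained on the raw
# RevolvingUtilizationOfUnsecuredLines values rather than on ru_grouped.
train_clean.drop(columns='ru_grouped', inplace=True)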

time: 12.8 ms

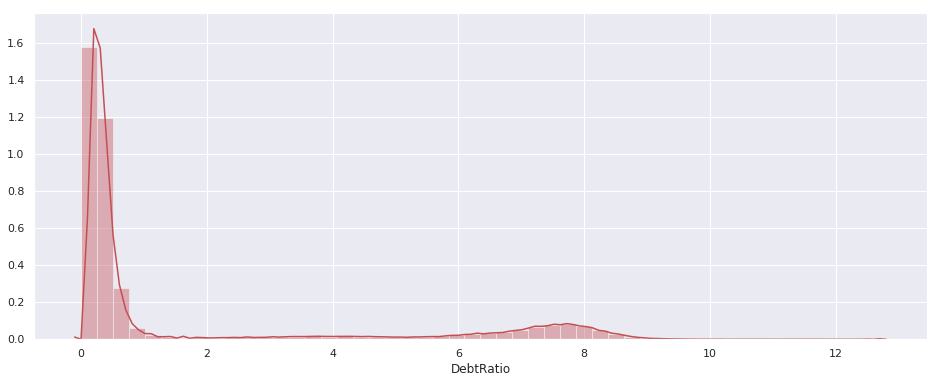

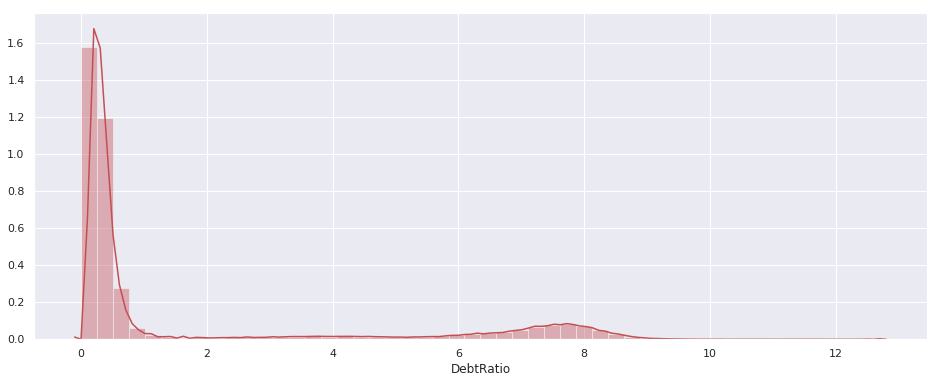

Does the debt ratio contain outliers?

plt.figure(figsize=(16, 6))

sns.distplot(train_clean['DebtRatio'].apply(np.log1p), color="r");

time: 748 ms

train_clean["dr_log"] = train_clean["DebtRatio"].apply(np.log1p)

train_clean.drop(columns="DebtRatio", inplace=True)

plot_age("dr_log", "m")

time: 452 ms
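The `log1p` transform used for `dr_log` compresses a heavy right tail while keeping zero at zero. A short sketch with hypothetical debt ratios (the values are made up for illustration):

```python
import numpy as np

debt_ratio = np.array([0.0, 0.12, 0.80, 50.0, 3000.0])  # hypothetical values

dr_log = np.log1p(debt_ratio)   # log(1 + x), defined at x = 0
restored = np.expm1(dr_log)     # inverse transform, exp(y) - 1

print(dr_log)
print(restored)
```

Because the transform is monotonic, the ordering of borrowers by debt ratio is preserved; only the spacing between extreme values shrinks.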

bins = [0, 2, 4, 6, 10, 14,

train_clean['NumberOfOpenCreditLinesAndLoans'].max()*1.05]

labels = ['0-1', '2-3', '4-5', '6-9', '10-13', '14+']

train_clean['num_oc_grouped'] = pd.cut(train_clean['NumberOfOpenCreditLinesAndLoans'], \

bins, right=0, labels=labels)

train_clean.drop(columns='NumberOfOpenCreditLinesAndLoans', inplace=True)

time: 13.2 ms

bins = [0, 1, 2, 4,

train_clean['NumberOfDependents'].max()*1.05]

labels = ['0', '1', '2-3', '4+']

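train_clean['num_dep_grouped'] = pd.cut(train_clean['NumberOfDependents'],
                                        bins, right=False, labels=labels)

# The binned column is dropped again here, so the model is trained on the raw
# NumberOfDependents values rather than on num_dep_grouped.
train_clean.drop(columns='num_dep_grouped', inplace=True)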

time: 10.6 ms

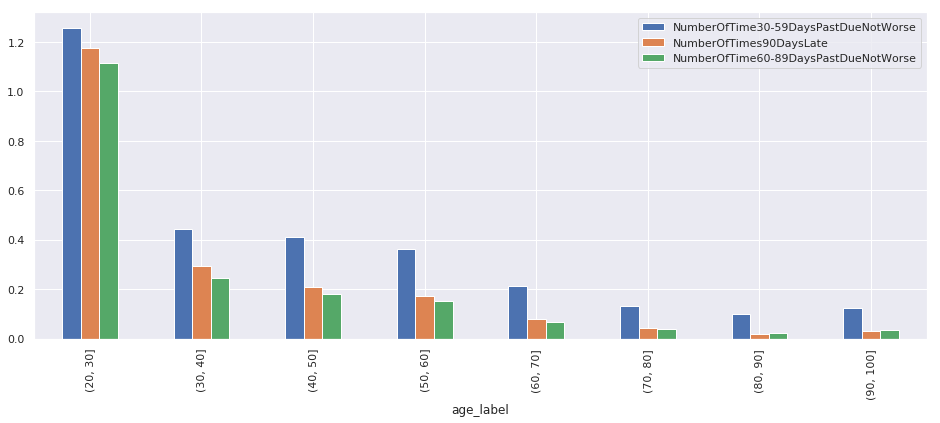

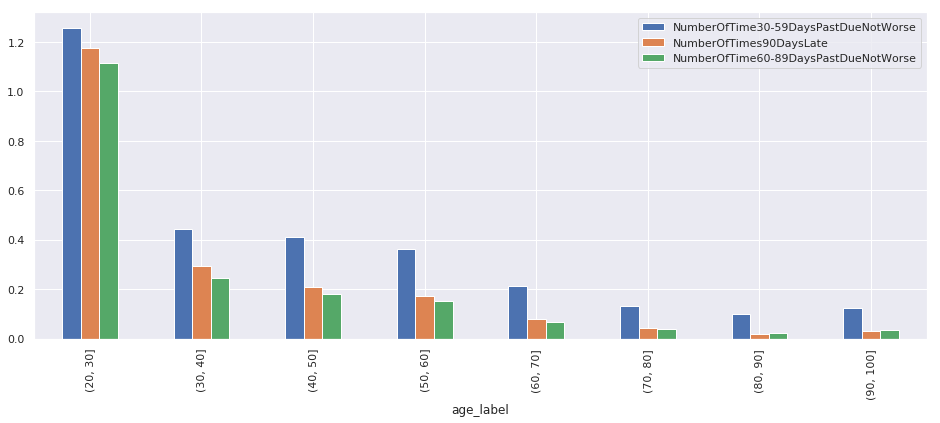

Number of times borrowers were past due in the past two years

PastDueNotWorse = [i for i in train_clean.columns if "NumberOfTime" in i]

plot_age(PastDueNotWorse, fun = "m")

time: 566 ms

cor = train_clean[PastDueNotWorse].corr()

cor

| | NumberOfTime30-59DaysPastDueNotWorse | NumberOfTimes90DaysLate | NumberOfTime60-89DaysPastDueNotWorse |
| --- | --- | --- | --- |
| NumberOfTime30-59DaysPastDueNotWorse | 1.000000 | 0.980489 | 0.984535 |
| NumberOfTimes90DaysLate | 0.980489 | 1.000000 | 0.991409 |
| NumberOfTime60-89DaysPastDueNotWorse | 0.984535 | 0.991409 | 1.000000 |

time: 12 ms

train_clean.drop(columns=["NumberOfTime30-59DaysPastDueNotWorse", \

"NumberOfTime60-89DaysPastDueNotWorse"], inplace=True)

time: 3.13 ms
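Dropping near-duplicate features by hand works for three columns; for more columns the candidate pairs can be listed programmatically. A minimal sketch, assuming a hypothetical helper `high_corr_pairs` and a toy frame in which one extreme value (98) dominates the Pearson correlation. Whether a similar effect explains the correlations above is not established here.

```python
import numpy as np
import pandas as pd

def high_corr_pairs(df, threshold=0.95):
    """List column pairs whose absolute Pearson correlation exceeds threshold."""
    corr = df.corr().abs()
    # Keep only the strict upper triangle so each pair appears once
    mask = np.triu(np.ones(corr.shape, dtype=bool), k=1)
    pairs = corr.where(mask).stack()
    return pairs[pairs > threshold].sort_values(ascending=False)

# Hypothetical toy data: columns a and b share one extreme value
demo = pd.DataFrame({
    "a": [0, 1, 2, 3, 98],
    "b": [0, 1, 2, 4, 98],
    "c": [5, 3, 4, 1, 2],
})
result = high_corr_pairs(demo)
print(result)
```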

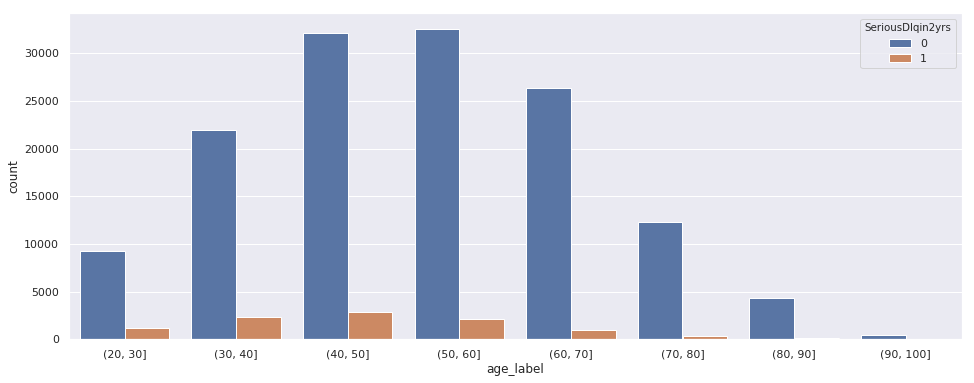

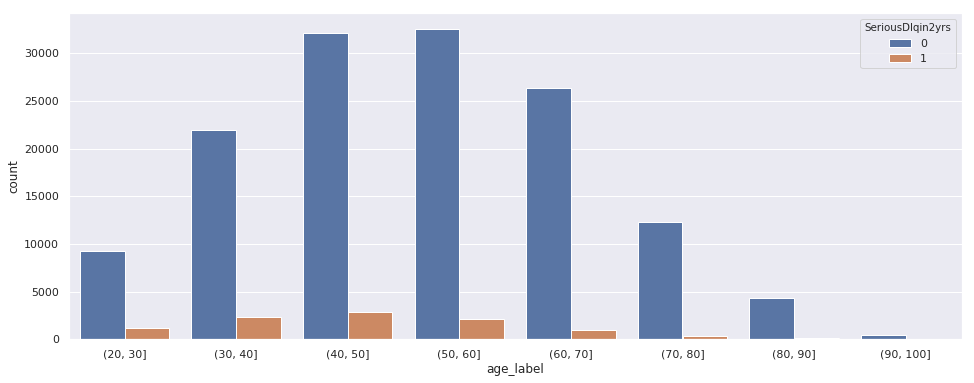

Relationship Between Borrower Delinquency and Age

plt.figure(figsize=(16, 6))

sns.countplot(data=train_clean, x="age_label", hue="SeriousDlqin2yrs");

time: 376 ms

Distribution of overdue

train_clean['income_log'] = (train_clean['MonthlyIncome']/10000).apply(np.log1p)

train_clean.drop(columns=['MonthlyIncome'], inplace=True)

time: 8.29 ms

III. Training the Model

from sklearn.model_selection import train_test_split, GridSearchCV

from sklearn.metrics import f1_score, roc_auc_score, confusion_matrix, accuracy_score, fbeta_score

from sklearn.preprocessing import StandardScaler

from sklearn.linear_model import LogisticRegression

time: 776 µs

First, a logistic regression model is used.

attributes = train_clean.columns.drop(['SeriousDlqin2yrs'])

sol = ['SeriousDlqin2yrs']

df = pd.get_dummies(train_clean, drop_first=True)

X = pd.get_dummies(train_clean[attributes], drop_first=True)

y = train_clean[sol]

X_train, X_valid, y_train, y_valid = train_test_split(

X, y, test_size=0.25, shuffle=True)

time: 77.2 ms

def plot_est_score(Range):
    # Table of accuracy on the training and validation splits for each C
    score_list = pd.DataFrame({}, index=np.arange(
        Range.shape[0]+1), columns=[["train_score", "test_score"]])
    for i in Range:
        lg = LogisticRegression(C=i, solver='lbfgs')
        pred = lg.fit(X_train, y_train).predict(X_valid)
        ascore = lg.score(X_train, y_train)   # training accuracy
        fscore = lg.score(X_valid, y_valid)   # validation accuracy
        # Rows are labelled i-1, so the value printed as C is the actual C
        # minus 1 (a printed -0.99 corresponds to C=0.01)
        score_list.loc[i-1, "train_score"] = ascore
        score_list.loc[i-1, "test_score"] = fscore
    score_list.dropna(inplace=True)
    score_max = score_list.max()
    score_max_index = score_list[score_list == score_list.max()].dropna().index[0]
    print(
        "nC={}\nmax =\n{}".format(
            score_max_index,
            score_max))
    score_list.plot(figsize=(16, 4))

time: 1.95 ms

plot_est_score(np.array([0.01, 0.03, 0.1, 0.3, 1, 3, 10]))

nC=-0.99

max =

train_score 0.933534

test_score 0.932660

dtype: float64

time: 32.7 s
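One way to keep such a sweep easy to read is to index the score table by the C values themselves. A minimal sketch under assumptions: synthetic data from `make_classification` stands in for `X_train` and `X_valid`, and the names `c_values`, `scores`, and `best_c` are illustrative rather than taken from the notebook.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the real training data
X_demo, y_demo = make_classification(n_samples=400, n_features=6, random_state=0)
X_tr, X_va, y_tr, y_va = train_test_split(
    X_demo, y_demo, test_size=0.25, random_state=0)

c_values = [0.01, 0.1, 1, 10]
scores = pd.DataFrame(index=pd.Index(c_values, name="C"),
                      columns=["train_score", "valid_score"], dtype=float)

for c in c_values:
    lg = LogisticRegression(C=c, solver="lbfgs", max_iter=1000).fit(X_tr, y_tr)
    scores.loc[c, "train_score"] = lg.score(X_tr, y_tr)   # accuracy
    scores.loc[c, "valid_score"] = lg.score(X_va, y_va)   # accuracy

best_c = scores["valid_score"].idxmax()  # C with the highest validation accuracy
print(scores)
print("best C:", best_c)
```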

Grid search for hyperparameter tuning starts here

params_LR = {'C': [0.01, 0.03, 0.1, 0.3, 1, 3, 10],

'solver': ['lbfgs', 'liblinear']}

gs = GridSearchCV(LogisticRegression(max_iter=1000),

param_grid = params_LR,

scoring = 'f1',

cv=5).fit(X_train, y_train)

gs.best_params_

{'C': 0.01, 'solver': 'lbfgs'}

time: 7min 41s

model_lr = LogisticRegression(C=gs.best_params_['C'], solver=gs.best_params_['solver']).fit(X_train, y_train)

print('train Score: %.6f' % model_lr.score(X_train, y_train))

print('valid Score: %.6f' % model_lr.score(X_valid, y_valid))

train Score: 0.933534

valid Score: 0.932660

time: 4.63 s
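Accuracy alone can look strong when the positive class is rare, because a model that never predicts a default may score almost as well. A minimal sketch with made-up labels (not the competition data) showing how `f1_score` and `roc_auc_score`, both imported earlier, expose that gap:

```python
import numpy as np
from sklearn.metrics import accuracy_score, f1_score, roc_auc_score

# Hypothetical labels: 1 positive out of 10, mimicking a rare target
y_true = np.array([0, 0, 0, 0, 0, 0, 0, 0, 0, 1])

# A "model" that always predicts the majority class
y_pred_majority = np.zeros_like(y_true)

# Hypothetical predicted probabilities from some other model
y_prob = np.array([0.05, 0.10, 0.02, 0.20, 0.15, 0.30, 0.08, 0.12, 0.40, 0.35])

acc = accuracy_score(y_true, y_pred_majority)            # high, yet no positive found
f1 = f1_score(y_true, y_pred_majority, zero_division=0)  # zero: no true positives
auc = roc_auc_score(y_true, y_prob)                      # ranking quality of y_prob

print(acc, f1, auc)
```

Comparing the validation score against such a constant-prediction baseline is one way to judge whether a result like 0.93 reflects real discriminative power.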

Modeling and Forecasting Using XGBoost

import xgboost as xgb

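# Note: several keys below are misspelled, so XGBoost does not apply them.
# This is consistent with the training log reporting `error` rather than `auc`.
params_xgb = {'max_depth': 6,
              'eta': 1,
              'silent': 1,
              'objective': 'binary:logistic',
              'eval_matric': 'f1'}          # documented name: eval_metric

params_xgb2 = {'max_depth': 5,
               'eta': 0.025,
               'silent': 1,
               'objective': 'binary:logistic',
               'eval_matric': 'auc',        # documented name: eval_metric
               'minchildweight': 10.0,      # documented name: min_child_weight
               'maxdeltastep': 1.8,         # documented name: max_delta_step
               'colsample_bytree': 0.4,
               'subsample': 0.8,
               'gamma': 0.65,
               'numboostround': 391}        # not a params key; num_boost_round is an argument of xgb.train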

time: 91.4 ms
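For reference, the second parameter set can be rewritten with the names XGBoost documents. This is a sketch only: the values are copied from `params_xgb2`, the name `params_xgb_fixed` is hypothetical, and the results reported in this post were not produced with it. `silent` is left out because newer XGBoost releases use `verbosity` instead.

```python
# Assumption: same values as params_xgb2, with documented parameter names.
# This is not the configuration behind the results shown in this post.
params_xgb_fixed = {
    'max_depth': 5,
    'eta': 0.025,
    'objective': 'binary:logistic',
    'eval_metric': 'auc',
    'min_child_weight': 10.0,
    'max_delta_step': 1.8,
    'colsample_bytree': 0.4,
    'subsample': 0.8,
    'gamma': 0.65,
}

# The number of boosting rounds is passed to xgb.train, not placed in params
num_boost_round = 391
```

With `eval_metric` set to `auc`, early stopping would track AUC instead of the classification error, so the log and the best iteration would likely differ from those shown here.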

regex = re.compile(r"\[|\]|<", re.IGNORECASE)

feature_name = [regex.sub("_", col) if any(x in str(col) for x in set(('[', ']', '<'))) else col for col in X.columns]

time: 1.16 ms
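The renaming step exists because XGBoost does not accept feature names containing `[`, `]`, or `<`, and dummy columns built from interval bins (such as `age_label`) contain brackets. A self-contained sketch of the same substitution, with hypothetical column names:

```python
import re

# Characters that are replaced in feature names
regex = re.compile(r"[\[\]<]")

# Hypothetical dummy-column names, similar to what pd.get_dummies
# produces for interval-valued categories
columns = ["income_log", "age_label_(30, 40]", "age_grouped_70+", "x<5"]

feature_name = [regex.sub("_", str(col)) for col in columns]
print(feature_name)
```

Applying `regex.sub` to every name is equivalent to the conditional version above, since names without the special characters pass through unchanged.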

dtrain = xgb.DMatrix(X_train, y_train, feature_names=feature_name)

dvalid = xgb.DMatrix(X_valid, y_valid, feature_names=feature_name)

evals = [(dtrain, 'train'), (dvalid, 'valid')]

model_xgb = xgb.train(params_xgb2, dtrain, 1000, evals, early_stopping_rounds=100);

[0] train-error:0.066403 valid-error:0.068973

Multiple eval metrics have been passed: 'valid-error' will be used for early stopping.

Will train until valid-error hasn't improved in 100 rounds.

[1] train-error:0.065118 valid-error:0.066858

[2] train-error:0.065725 valid-error:0.067259

[3] train-error:0.066742 valid-error:0.067714

[4] train-error:0.066751 valid-error:0.067741

[5] train-error:0.066751 valid-error:0.067741

[6] train-error:0.066751 valid-error:0.067741

[7] train-error:0.066751 valid-error:0.067741

[8] train-error:0.066733 valid-error:0.067768

[9] train-error:0.066751 valid-error:0.067741

[10] train-error:0.066751 valid-error:0.067741

[11] train-error:0.066742 valid-error:0.067741

[12] train-error:0.066671 valid-error:0.067741

[13] train-error:0.066742 valid-error:0.067741

[14] train-error:0.066751 valid-error:0.067741

[15] train-error:0.066751 valid-error:0.067741

[16] train-error:0.066751 valid-error:0.067741

[17] train-error:0.066751 valid-error:0.067741

[18] train-error:0.066751 valid-error:0.067741

[19] train-error:0.066751 valid-error:0.067741

[20] train-error:0.066751 valid-error:0.067741

[21] train-error:0.066751 valid-error:0.067741

[22] train-error:0.066751 valid-error:0.067741

[23] train-error:0.066751 valid-error:0.067741

[24] train-error:0.066751 valid-error:0.067741

[25] train-error:0.066751 valid-error:0.067741

[26] train-error:0.066751 valid-error:0.067741

[27] train-error:0.066751 valid-error:0.067741

[28] train-error:0.066751 valid-error:0.067741

[29] train-error:0.066742 valid-error:0.067741

[30] train-error:0.066733 valid-error:0.067741

[31] train-error:0.066671 valid-error:0.067741

[32] train-error:0.066635 valid-error:0.067714

[33] train-error:0.066689 valid-error:0.067741

[34] train-error:0.066742 valid-error:0.067741

[35] train-error:0.066742 valid-error:0.067741

[36] train-error:0.066742 valid-error:0.067741

[37] train-error:0.066698 valid-error:0.067741

[38] train-error:0.066635 valid-error:0.067741

[39] train-error:0.066599 valid-error:0.067634

[40] train-error:0.066617 valid-error:0.067688

[41] train-error:0.066608 valid-error:0.067634

[42] train-error:0.066635 valid-error:0.067714

[43] train-error:0.066653 valid-error:0.067741

[44] train-error:0.066689 valid-error:0.067741

[45] train-error:0.066644 valid-error:0.067714

[46] train-error:0.066689 valid-error:0.067741

[47] train-error:0.066644 valid-error:0.067714

[48] train-error:0.066635 valid-error:0.067714

[49] train-error:0.066617 valid-error:0.067661

[50] train-error:0.066582 valid-error:0.067607

[51] train-error:0.06651 valid-error:0.067527

[52] train-error:0.066457 valid-error:0.067527

[53] train-error:0.066341 valid-error:0.067473

[54] train-error:0.066323 valid-error:0.067447

[55] train-error:0.06626 valid-error:0.067473

[56] train-error:0.066332 valid-error:0.067473

[57] train-error:0.06626 valid-error:0.0675

[58] train-error:0.066189 valid-error:0.067447

[59] train-error:0.066135 valid-error:0.067447

[60] train-error:0.066189 valid-error:0.067473

[61] train-error:0.066144 valid-error:0.067447

[62] train-error:0.066117 valid-error:0.067366

[63] train-error:0.066082 valid-error:0.067313

[64] train-error:0.066028 valid-error:0.067206

[65] train-error:0.0661 valid-error:0.06734

[66] train-error:0.066001 valid-error:0.067259

[67] train-error:0.065984 valid-error:0.067152

[68] train-error:0.065903 valid-error:0.067018

[69] train-error:0.065796 valid-error:0.066938

[70] train-error:0.065876 valid-error:0.066965

[71] train-error:0.065939 valid-error:0.067045

[72] train-error:0.065984 valid-error:0.067152

[73] train-error:0.065894 valid-error:0.066992

[74] train-error:0.065805 valid-error:0.066965

[75] train-error:0.065868 valid-error:0.067018

[76] train-error:0.065912 valid-error:0.067018

[77] train-error:0.065796 valid-error:0.066965

[78] train-error:0.065662 valid-error:0.066965

[79] train-error:0.065725 valid-error:0.066992

[80] train-error:0.065796 valid-error:0.067018

[81] train-error:0.065778 valid-error:0.066938

[82] train-error:0.065752 valid-error:0.066831

[83] train-error:0.065832 valid-error:0.066938

[84] train-error:0.065725 valid-error:0.066911

[85] train-error:0.065609 valid-error:0.066884

[86] train-error:0.065689 valid-error:0.066938

[87] train-error:0.065653 valid-error:0.066858

[88] train-error:0.065618 valid-error:0.066751

[89] train-error:0.065627 valid-error:0.066751

[90] train-error:0.065591 valid-error:0.066697

[91] train-error:0.065636 valid-error:0.066777

[92] train-error:0.065636 valid-error:0.066804

[93] train-error:0.065582 valid-error:0.066751

[94] train-error:0.065582 valid-error:0.06667

[95] train-error:0.065618 valid-error:0.066751

[96] train-error:0.065573 valid-error:0.066617

[97] train-error:0.065484 valid-error:0.066563

[98] train-error:0.065395 valid-error:0.06659

[99] train-error:0.065359 valid-error:0.066563

[100] train-error:0.065421 valid-error:0.066563

[101] train-error:0.065484 valid-error:0.066617

[102] train-error:0.065368 valid-error:0.066563

[103] train-error:0.06527 valid-error:0.066349

[104] train-error:0.065225 valid-error:0.066269

[105] train-error:0.065073 valid-error:0.066242

[106] train-error:0.064984 valid-error:0.066188

[107] train-error:0.064913 valid-error:0.066162

[108] train-error:0.064797 valid-error:0.065921

[109] train-error:0.064868 valid-error:0.066001

[110] train-error:0.064761 valid-error:0.065813

[111] train-error:0.064805 valid-error:0.06584

[112] train-error:0.064743 valid-error:0.065867

[113] train-error:0.064672 valid-error:0.065813

[114] train-error:0.064582 valid-error:0.065653

[115] train-error:0.064475 valid-error:0.065572

[116] train-error:0.06444 valid-error:0.065626

[117] train-error:0.06444 valid-error:0.065439

[118] train-error:0.064404 valid-error:0.065385

[119] train-error:0.064359 valid-error:0.065385

[120] train-error:0.06435 valid-error:0.065412

[121] train-error:0.064368 valid-error:0.065385

[122] train-error:0.064359 valid-error:0.065465

[123] train-error:0.06435 valid-error:0.065412

[124] train-error:0.064359 valid-error:0.065385

[125] train-error:0.064377 valid-error:0.065546

[126] train-error:0.064332 valid-error:0.065385

[127] train-error:0.064341 valid-error:0.065465

[128] train-error:0.064288 valid-error:0.065465

[129] train-error:0.064288 valid-error:0.065492

[130] train-error:0.064216 valid-error:0.065439

[131] train-error:0.064252 valid-error:0.065385

[132] train-error:0.064181 valid-error:0.065358

[133] train-error:0.064047 valid-error:0.065385

[134] train-error:0.064083 valid-error:0.065358

[135] train-error:0.064127 valid-error:0.065385

[136] train-error:0.064091 valid-error:0.065385

[137] train-error:0.064047 valid-error:0.065412

[138] train-error:0.06402 valid-error:0.065358

[139] train-error:0.064002 valid-error:0.065331

[140] train-error:0.06402 valid-error:0.065358

[141] train-error:0.063931 valid-error:0.065412

[142] train-error:0.063993 valid-error:0.065385

[143] train-error:0.06385 valid-error:0.065358

[144] train-error:0.063859 valid-error:0.065358

[145] train-error:0.063868 valid-error:0.065305

[146] train-error:0.063833 valid-error:0.065251

[147] train-error:0.063779 valid-error:0.065251

[148] train-error:0.063681 valid-error:0.065198

[149] train-error:0.063645 valid-error:0.065198

[150] train-error:0.06361 valid-error:0.065171

[151] train-error:0.06361 valid-error:0.06509

[152] train-error:0.06361 valid-error:0.065144

[153] train-error:0.063565 valid-error:0.06509

[154] train-error:0.063547 valid-error:0.065117

[155] train-error:0.063529 valid-error:0.065117

[156] train-error:0.063467 valid-error:0.065064

[157] train-error:0.06352 valid-error:0.065171

[158] train-error:0.063529 valid-error:0.065224

[159] train-error:0.063422 valid-error:0.06509

[160] train-error:0.063413 valid-error:0.065117

[161] train-error:0.063476 valid-error:0.065171

[162] train-error:0.063395 valid-error:0.065144

[163] train-error:0.063422 valid-error:0.065144

[164] train-error:0.063395 valid-error:0.065144

[165] train-error:0.06336 valid-error:0.065144

[166] train-error:0.063369 valid-error:0.065171

[167] train-error:0.063324 valid-error:0.065117

[168] train-error:0.06336 valid-error:0.065064

[169] train-error:0.063315 valid-error:0.065064

[170] train-error:0.063333 valid-error:0.065037

[171] train-error:0.063315 valid-error:0.06509

[172] train-error:0.063297 valid-error:0.065117

[173] train-error:0.063315 valid-error:0.065144

[174] train-error:0.063306 valid-error:0.065117

[175] train-error:0.063253 valid-error:0.065117

[176] train-error:0.063279 valid-error:0.065117

[177] train-error:0.063324 valid-error:0.065117

[178] train-error:0.063288 valid-error:0.06509

[179] train-error:0.063297 valid-error:0.065198

[180] train-error:0.063288 valid-error:0.06509

[181] train-error:0.063297 valid-error:0.065117

[182] train-error:0.063288 valid-error:0.065144

[183] train-error:0.06327 valid-error:0.065037

[184] train-error:0.063217 valid-error:0.064876

[185] train-error:0.063244 valid-error:0.06493

[186] train-error:0.063181 valid-error:0.064876

[187] train-error:0.063181 valid-error:0.064876

[188] train-error:0.063145 valid-error:0.06485

[189] train-error:0.063128 valid-error:0.06485

[190] train-error:0.06319 valid-error:0.064876

[191] train-error:0.063172 valid-error:0.064796

[192] train-error:0.063154 valid-error:0.064823

[193] train-error:0.063181 valid-error:0.06485

[194] train-error:0.063172 valid-error:0.06485

[195] train-error:0.063181 valid-error:0.064823

[196] train-error:0.06319 valid-error:0.064823

[197] train-error:0.063128 valid-error:0.06485

[198] train-error:0.063092 valid-error:0.06485

[199] train-error:0.063029 valid-error:0.064823

[200] train-error:0.063065 valid-error:0.064823

[201] train-error:0.06302 valid-error:0.06485

[202] train-error:0.063012 valid-error:0.064823

[203] train-error:0.062976 valid-error:0.06485

[204] train-error:0.063012 valid-error:0.06485

[205] train-error:0.062958 valid-error:0.064957

[206] train-error:0.062931 valid-error:0.064903

[207] train-error:0.062922 valid-error:0.064903

[208] train-error:0.06294 valid-error:0.06493

[209] train-error:0.062904 valid-error:0.064876

[210] train-error:0.062869 valid-error:0.064903

[211] train-error:0.062895 valid-error:0.06493

[212] train-error:0.062869 valid-error:0.064957

[213] train-error:0.062895 valid-error:0.06493

[214] train-error:0.062851 valid-error:0.06493

[215] train-error:0.062851 valid-error:0.06493

[216] train-error:0.062824 valid-error:0.064876

[217] train-error:0.062806 valid-error:0.064796

[218] train-error:0.062753 valid-error:0.064796

[219] train-error:0.062762 valid-error:0.064823

[220] train-error:0.062735 valid-error:0.064769

[221] train-error:0.062699 valid-error:0.064823

[222] train-error:0.062717 valid-error:0.06485

[223] train-error:0.06269 valid-error:0.064742

[224] train-error:0.06269 valid-error:0.064742

[225] train-error:0.062672 valid-error:0.064769

[226] train-error:0.062646 valid-error:0.064769

[227] train-error:0.062646 valid-error:0.064796

[228] train-error:0.062637 valid-error:0.064769

[229] train-error:0.062646 valid-error:0.064769

[230] train-error:0.062646 valid-error:0.064769

[231] train-error:0.062646 valid-error:0.064742

[232] train-error:0.062655 valid-error:0.064742

[233] train-error:0.062646 valid-error:0.064769

[234] train-error:0.062655 valid-error:0.064769

[235] train-error:0.062663 valid-error:0.064796

[236] train-error:0.062637 valid-error:0.064796

[237] train-error:0.06261 valid-error:0.064823

[238] train-error:0.062619 valid-error:0.06485

[239] train-error:0.062583 valid-error:0.064823

[240] train-error:0.062574 valid-error:0.064716

[241] train-error:0.062547 valid-error:0.064769

[242] train-error:0.062574 valid-error:0.064742

[243] train-error:0.062565 valid-error:0.064689

[244] train-error:0.062583 valid-error:0.064689

[245] train-error:0.062574 valid-error:0.064689

[246] train-error:0.062565 valid-error:0.064716

[247] train-error:0.062574 valid-error:0.064716

[248] train-error:0.062538 valid-error:0.064689

[249] train-error:0.062521 valid-error:0.064716

[250] train-error:0.06253 valid-error:0.064662

[251] train-error:0.06253 valid-error:0.064689

[252] train-error:0.062476 valid-error:0.064662

[253] train-error:0.062476 valid-error:0.064716

[254] train-error:0.062503 valid-error:0.064716

[255] train-error:0.062503 valid-error:0.064716

[256] train-error:0.062521 valid-error:0.064635

[257] train-error:0.062476 valid-error:0.064635

[258] train-error:0.062485 valid-error:0.064635

[259] train-error:0.062503 valid-error:0.064609

[260] train-error:0.062449 valid-error:0.064475

[261] train-error:0.062414 valid-error:0.064421

[262] train-error:0.062414 valid-error:0.064421

[263] train-error:0.062396 valid-error:0.064421

[264] train-error:0.062378 valid-error:0.064448

[265] train-error:0.062351 valid-error:0.064475

[266] train-error:0.062342 valid-error:0.064448

[267] train-error:0.062342 valid-error:0.064528

[268] train-error:0.062333 valid-error:0.064528

[269] train-error:0.062324 valid-error:0.064528

[270] train-error:0.062306 valid-error:0.064501

[271] train-error:0.062298 valid-error:0.064475

[272] train-error:0.062306 valid-error:0.064528

[273] train-error:0.06228 valid-error:0.064555

[274] train-error:0.062289 valid-error:0.064609

[275] train-error:0.062253 valid-error:0.064662

[276] train-error:0.062271 valid-error:0.064609

[277] train-error:0.062253 valid-error:0.064609

[278] train-error:0.062235 valid-error:0.064609

[279] train-error:0.062217 valid-error:0.064501

[280] train-error:0.062226 valid-error:0.064555

[281] train-error:0.062235 valid-error:0.064501

[282] train-error:0.062226 valid-error:0.064448

[283] train-error:0.062181 valid-error:0.064394

[284] train-error:0.062199 valid-error:0.064448

[285] train-error:0.062173 valid-error:0.064448

[286] train-error:0.062146 valid-error:0.064421

[287] train-error:0.062137 valid-error:0.064394

[288] train-error:0.062155 valid-error:0.064394

[289] train-error:0.062173 valid-error:0.064394

[290] train-error:0.062164 valid-error:0.064421

[291] train-error:0.062137 valid-error:0.064501

[292] train-error:0.062146 valid-error:0.064555

[293] train-error:0.062137 valid-error:0.064501

[294] train-error:0.06211 valid-error:0.064528

[295] train-error:0.062101 valid-error:0.064528

[296] train-error:0.062092 valid-error:0.064475

[297] train-error:0.062092 valid-error:0.064475

[298] train-error:0.062083 valid-error:0.064448

[299] train-error:0.062092 valid-error:0.064394

[300] train-error:0.06203 valid-error:0.064528

[301] train-error:0.061994 valid-error:0.064501

[302] train-error:0.061994 valid-error:0.064475

[303] train-error:0.062012 valid-error:0.064475

[304] train-error:0.061985 valid-error:0.064475

[305] train-error:0.062003 valid-error:0.064475

[306] train-error:0.061941 valid-error:0.064421

[307] train-error:0.061932 valid-error:0.064421

[308] train-error:0.061923 valid-error:0.064421

[309] train-error:0.061878 valid-error:0.064421

[310] train-error:0.061869 valid-error:0.064421

[311] train-error:0.061869 valid-error:0.064394

[312] train-error:0.061878 valid-error:0.064368

[313] train-error:0.061869 valid-error:0.064394

[314] train-error:0.061869 valid-error:0.064421

[315] train-error:0.061878 valid-error:0.064475

[316] train-error:0.061851 valid-error:0.064475

[317] train-error:0.061878 valid-error:0.064448

[318] train-error:0.061869 valid-error:0.064394

[319] train-error:0.061833 valid-error:0.064394

[320] train-error:0.061789 valid-error:0.064314

[321] train-error:0.061807 valid-error:0.064314

[322] train-error:0.061807 valid-error:0.064314

[323] train-error:0.061789 valid-error:0.064287

[324] train-error:0.061789 valid-error:0.064314

[325] train-error:0.061789 valid-error:0.064287

[326] train-error:0.061798 valid-error:0.06426

[327] train-error:0.061798 valid-error:0.06426

[328] train-error:0.061798 valid-error:0.06426

[329] train-error:0.061798 valid-error:0.064234

[330] train-error:0.061789 valid-error:0.064234

[331] train-error:0.061789 valid-error:0.06426

[332] train-error:0.061798 valid-error:0.064314

[333] train-error:0.061807 valid-error:0.064314

[334] train-error:0.061816 valid-error:0.064341

[335] train-error:0.061816 valid-error:0.064314

[336] train-error:0.061824 valid-error:0.064287

[337] train-error:0.061824 valid-error:0.064314

[338] train-error:0.061833 valid-error:0.064314

[339] train-error:0.061816 valid-error:0.064314

[340] train-error:0.061816 valid-error:0.064234

[341] train-error:0.061789 valid-error:0.06426

[342] train-error:0.061771 valid-error:0.06426

[343] train-error:0.06178 valid-error:0.064314

[344] train-error:0.061798 valid-error:0.064287

[345] train-error:0.061798 valid-error:0.06418

[346] train-error:0.061744 valid-error:0.064207

[347] train-error:0.061762 valid-error:0.064153

[348] train-error:0.061762 valid-error:0.064153

[349] train-error:0.061762 valid-error:0.064153

[350] train-error:0.061771 valid-error:0.064234

[351] train-error:0.061762 valid-error:0.064234

[352] train-error:0.061744 valid-error:0.064234

[353] train-error:0.06178 valid-error:0.064234

[354] train-error:0.061744 valid-error:0.064234

[355] train-error:0.061744 valid-error:0.06426

[356] train-error:0.061753 valid-error:0.064287

[357] train-error:0.061735 valid-error:0.064234

[358] train-error:0.061744 valid-error:0.06426

[359] train-error:0.061726 valid-error:0.06426

[360] train-error:0.061691 valid-error:0.06426

[361] train-error:0.0617 valid-error:0.06426

[362] train-error:0.061691 valid-error:0.064287

[363] train-error:0.061691 valid-error:0.064234

[364] train-error:0.061691 valid-error:0.064234

[365] train-error:0.061664 valid-error:0.064287

[366] train-error:0.061673 valid-error:0.064287

[367] train-error:0.061646 valid-error:0.064314

[368] train-error:0.061646 valid-error:0.064314

[369] train-error:0.061655 valid-error:0.064287

[370] train-error:0.061646 valid-error:0.064314

[371] train-error:0.061673 valid-error:0.064314

[372] train-error:0.061682 valid-error:0.064314

[373] train-error:0.061664 valid-error:0.064341

[374] train-error:0.061682 valid-error:0.064368

[375] train-error:0.061655 valid-error:0.064368

[376] train-error:0.061637 valid-error:0.064368

[377] train-error:0.061619 valid-error:0.064341

[378] train-error:0.06161 valid-error:0.064368

[379] train-error:0.061628 valid-error:0.064368

[380] train-error:0.061619 valid-error:0.064368

[381] train-error:0.061619 valid-error:0.064368

[382] train-error:0.061637 valid-error:0.064341

[383] train-error:0.061592 valid-error:0.064341

[384] train-error:0.061592 valid-error:0.064341

[385] train-error:0.061575 valid-error:0.06426

[386] train-error:0.061584 valid-error:0.064287

[387] train-error:0.061584 valid-error:0.064287

[388] train-error:0.061592 valid-error:0.064234

[389] train-error:0.061575 valid-error:0.06426

[390] train-error:0.061539 valid-error:0.064234

[391] train-error:0.061521 valid-error:0.06426

[392] train-error:0.061521 valid-error:0.064234

[393] train-error:0.06153 valid-error:0.064207

[394] train-error:0.061539 valid-error:0.064207

[395] train-error:0.061521 valid-error:0.064234

[396] train-error:0.061485 valid-error:0.064287

[397] train-error:0.061485 valid-error:0.064287

[398] train-error:0.061485 valid-error:0.064287

[399] train-error:0.061494 valid-error:0.064287

[400] train-error:0.061485 valid-error:0.064287

[401] train-error:0.061503 valid-error:0.064287

[402] train-error:0.061494 valid-error:0.064287

[403] train-error:0.061494 valid-error:0.064314

[404] train-error:0.061512 valid-error:0.064314

[405] train-error:0.061521 valid-error:0.064314

[406] train-error:0.061503 valid-error:0.064341

[407] train-error:0.061494 valid-error:0.064368

[408] train-error:0.061476 valid-error:0.064368

[409] train-error:0.061476 valid-error:0.064341

[410] train-error:0.061476 valid-error:0.064341

[411] train-error:0.061459 valid-error:0.064314

[412] train-error:0.061423 valid-error:0.06426

[413] train-error:0.061432 valid-error:0.064207

[414] train-error:0.06145 valid-error:0.064207

[415] train-error:0.061467 valid-error:0.064207

[416] train-error:0.061459 valid-error:0.064207

[417] train-error:0.061467 valid-error:0.064234

[418] train-error:0.061459 valid-error:0.064234

[419] train-error:0.061423 valid-error:0.064234

[420] train-error:0.061432 valid-error:0.064234

[421] train-error:0.06145 valid-error:0.06426

[422] train-error:0.061441 valid-error:0.06426

[423] train-error:0.061423 valid-error:0.06426

[424] train-error:0.061441 valid-error:0.06426

[425] train-error:0.061432 valid-error:0.064234

[426] train-error:0.061432 valid-error:0.064234

[427] train-error:0.061414 valid-error:0.064234

[428] train-error:0.061432 valid-error:0.064234

[429] train-error:0.061396 valid-error:0.064234

[430] train-error:0.061423 valid-error:0.064234

[431] train-error:0.061405 valid-error:0.06426

[432] train-error:0.06136 valid-error:0.06426

[433] train-error:0.061369 valid-error:0.06426

[434] train-error:0.061396 valid-error:0.06426

[435] train-error:0.061405 valid-error:0.06426

[436] train-error:0.061405 valid-error:0.06426

[437] train-error:0.061378 valid-error:0.064287

[438] train-error:0.061369 valid-error:0.064314

[439] train-error:0.061378 valid-error:0.064314

[440] train-error:0.06136 valid-error:0.064314

[441] train-error:0.061343 valid-error:0.064314

[442] train-error:0.061325 valid-error:0.064287

[443] train-error:0.061325 valid-error:0.064341

[444] train-error:0.061307 valid-error:0.064314

[445] train-error:0.061325 valid-error:0.064314

[446] train-error:0.061325 valid-error:0.064314

[447] train-error:0.061307 valid-error:0.064341

Stopping. Best iteration:

[347] train-error:0.061762 valid-error:0.064153

time: 1min 59s

Save the model (dump_model writes a text dump of the trees)

model_xgb.dump_model('xgb_v1')

time: 206 ms

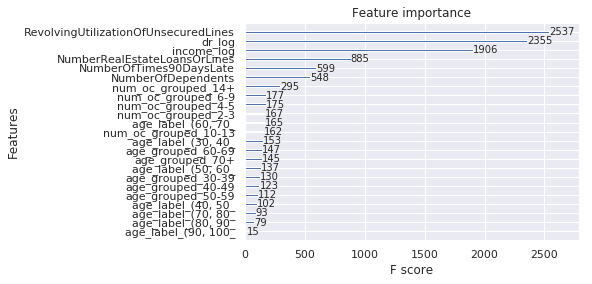

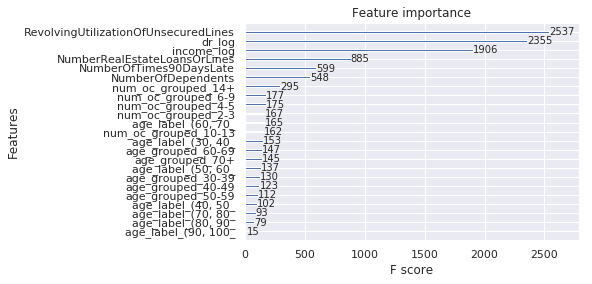

Feature Importance in the Credit Scoring Model

xgb.plot_importance(model_xgb);

time: 559 ms

Visualization of XGBoost Trees

xgb.to_graphviz(model_xgb)

time: 159 ms

Predict whether the borrower will become overdue

dtest = xgb.DMatrix(X_valid, feature_names=feature_name)

y_test = model_xgb.predict(dtest)

entry = pd.DataFrame()

entry['ID'] = np.arange(1, len(y_test)+1)

entry['Probability'] = y_test

time: 1.17 s

entry.to_csv('pred.csv', header=True, index=False)

time: 258 ms

IV. Summary

- The age distribution of borrowers is approximately normal, and borrowers aged 30-40 account for the largest loan amount.

- Borrowers aged 20-30 are at high risk of credit card delinquency.

- The total balance on credit cards and personal credit lines, the debt ratio, and monthly income are the three most important factors in whether a borrower becomes overdue.

- Because the data is sparse, discretization (binning) is applied before modeling, which helps build a stronger model.