Data transmission of AudioTrack

Brief introduction

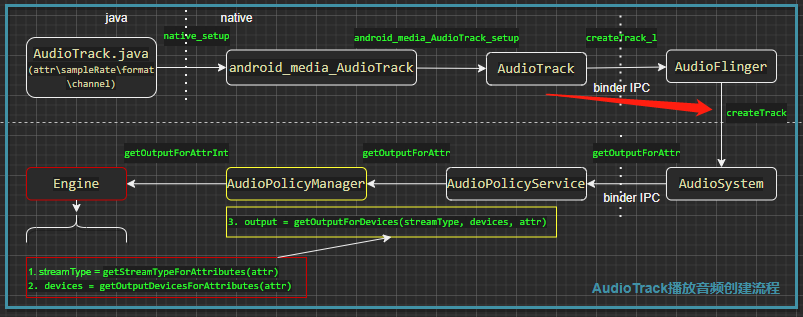

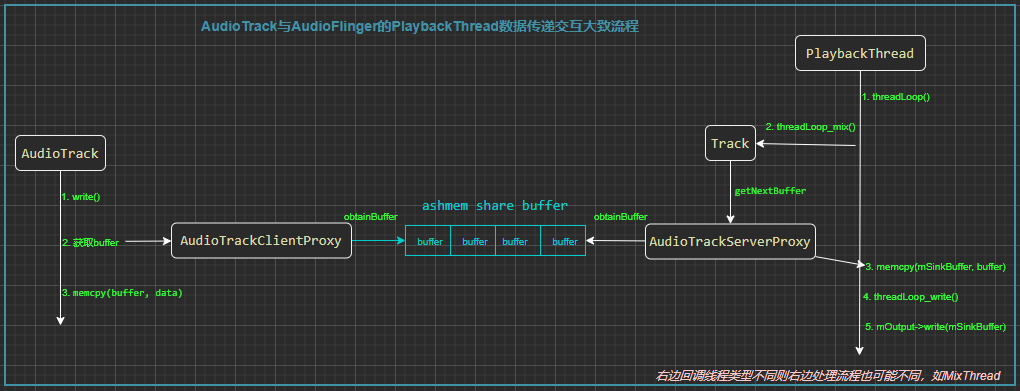

Continuing from the previous article, in which AudioTrack established a channel for playing audio, we obtained the unique handle of that channel, output. When AudioFlinger creates a PlaybackThread, it saves the pair (output, PlaybackThread) as a key-value entry, so output tells us which PlaybackThread will carry the audio data. This thread acts as an intermediary: the application process passes audio data to the PlaybackThread through anonymous shared memory, and the PlaybackThread passes it on to the HAL layer, again through shared memory. The reason is that shared memory is the most efficient way to move large amounts of data across processes.

Here, PlaybackThread stands for the whole family of playback threads; the actual thread may be a MixerThread, DirectOutputThread, OffloadThread, and so on. Which kind of thread an output points to is generally determined by the flags passed in with the audio parameters.
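To make the (output, PlaybackThread) relationship concrete, here is a minimal C++ sketch under a greatly simplified model: a registry hands out integer handles for newly opened outputs, picks a thread kind from the flags, and returns nullptr for unknown handles, the way createTrack treats a failed checkPlaybackThread_l. ThreadRegistry, PlaybackThreadSketch, chooseThreadKind and the two flag constants are hypothetical; the real flags are audio_output_flags_t values, and AudioFlinger's real selection considers more cases.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <memory>

// Hypothetical flag bits; the real ones are audio_output_flags_t values.
enum OutputFlagsSketch : uint32_t {
    kFlagNone = 0,
    kFlagDirect = 1u << 0,
    kFlagCompressOffload = 1u << 1,
};

enum class ThreadKind { Mixer, Direct, Offload };

struct PlaybackThreadSketch {
    ThreadKind kind;
};

// Simplified selection order: offload first, then direct, otherwise a mixer thread.
ThreadKind chooseThreadKind(uint32_t flags) {
    if (flags & kFlagCompressOffload) return ThreadKind::Offload;
    if (flags & kFlagDirect) return ThreadKind::Direct;
    return ThreadKind::Mixer;
}

using io_handle_t = int32_t;  // stands in for audio_io_handle_t

class ThreadRegistry {
public:
    // Like opening an output: create a thread and store it under a fresh handle.
    io_handle_t openOutput(uint32_t flags) {
        io_handle_t handle = mNextHandle++;
        mThreads[handle] = std::make_unique<PlaybackThreadSketch>(
                PlaybackThreadSketch{chooseThreadKind(flags)});
        return handle;
    }
    // Like checkPlaybackThread_l: nullptr when the handle is unknown.
    PlaybackThreadSketch* check(io_handle_t handle) const {
        auto it = mThreads.find(handle);
        return it == mThreads.end() ? nullptr : it->second.get();
    }
private:
    io_handle_t mNextHandle = 13;  // arbitrary starting value
    std::map<io_handle_t, std::unique_ptr<PlaybackThreadSketch>> mThreads;
};
```

A track request then only needs the handle: if check() returns nullptr, the request fails, mirroring the BAD_VALUE path in createTrack.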

So we need to understand how shared memory is established between the application layer and AudioFlinger (PlaybackThread belongs to the AudioFlinger module) and between AudioFlinger and the HAL, and how data is transferred over it.

Establishment of shared memory between application layer and AudioFlinger

AudioFlinger server module creates anonymous shared memory

Shared memory is created in AudioFlinger after the output channel has been obtained successfully, at the place marked by the red arrow in the figure below:

```cpp
sp<IAudioTrack> AudioFlinger::createTrack(const CreateTrackInput& input,
                                          CreateTrackOutput& output,
                                          status_t *status)
{
    .......
    audio_attributes_t localAttr = input.attr;
    // Pass in the localAttr audio attributes and obtain the output handle and other
    // information about the output channel from AudioPolicyService
    lStatus = AudioSystem::getOutputForAttr(&localAttr, &output.outputId, sessionId, &streamType,
                                            clientPid, clientUid, &input.config, input.flags,
                                            &output.selectedDeviceId, &portId, &secondaryOutputs);
    ........
    {
        Mutex::Autolock _l(mLock);
        /** outputId is returned by AudioPolicyService; it is the output handle of the output channel.
         * The id was originally created by AudioFlinger when it opened the HAL output device and
         * created the PlaybackThread, and the (outputId, PlaybackThread) pair was stored in
         * AudioFlinger's mPlaybackThreads member. Here it is used to look up that PlaybackThread.
         */
        PlaybackThread *thread = checkPlaybackThread_l(output.outputId);
        if (thread == NULL) {
            ALOGE("no playback thread found for output handle %d", output.outputId);
            lStatus = BAD_VALUE;
            goto Exit;
        }
        /** clientPid identifies the application process. registerPid() stores the client's
         * information in a Client object, kept in AudioFlinger's mClients collection.
         */
        client = registerPid(clientPid);
        // Save the audio properties
        output.sampleRate = input.config.sample_rate;
        output.frameCount = input.frameCount;
        output.notificationFrameCount = input.notificationFrameCount;
        output.flags = input.flags;
        /** Create the AudioFlinger-side Track, which corresponds one-to-one to the
         * application-side AudioTrack; the shared memory is also set up inside this call.
         */
        track = thread->createTrack_l(client, streamType, localAttr, &output.sampleRate,
                                      input.config.format, input.config.channel_mask,
                                      &output.frameCount, &output.notificationFrameCount,
                                      input.notificationsPerBuffer, input.speed,
                                      input.sharedBuffer, sessionId, &output.flags,
                                      callingPid, input.clientInfo.clientTid, clientUid,
                                      &lStatus, portId);
        LOG_ALWAYS_FATAL_IF((lStatus == NO_ERROR) && (track == 0));
        // we don't abort yet if lStatus != NO_ERROR; there is still work to be done regardless
        output.afFrameCount = thread->frameCount();
        output.afSampleRate = thread->sampleRate();
        output.afLatencyMs = thread->latency();
        output.portId = portId;
    }
    .......
    // TrackHandle wraps the internal Track and is returned to the AudioTrack client,
    // which communicates with it through Binder
    trackHandle = new TrackHandle(track);
    // Release resources on error
Exit:
    if (lStatus != NO_ERROR && output.outputId != AUDIO_IO_HANDLE_NONE) {
        AudioSystem::releaseOutput(portId);
    }
    *status = lStatus;
    return trackHandle;
}
```

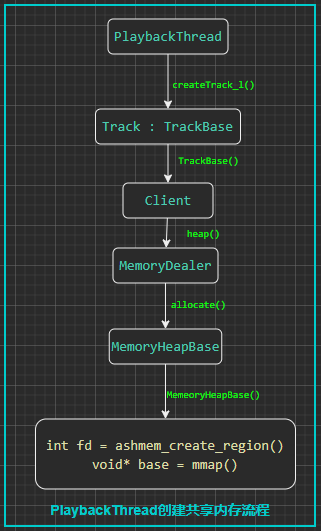

From the function above we can conclude that, after obtaining the output handle of the output channel, AudioFlinger finds the corresponding PlaybackThread and uses it to create a Track. Each Track corresponds one-to-one to an application-side AudioTrack, and the two are connected through Binder IPC. While creating the Track, the PlaybackThread also creates and allocates the anonymous shared memory, so the Track can be seen as the manager of the shared memory. The internal logic is fairly complex, so it is summarized in a flow chart, with the key points explained below:

In the figure, the TrackBase constructor uses its client parameter to create the shared memory. The client object is created in AudioFlinger and holds information about the application side.

The figure also shows that anonymous shared memory is what ultimately carries the audio data between the two processes. Roughly, anonymous shared memory works like this: a file is created on the tmpfs file system and bound to a range of virtual addresses; tmpfs lives in the page cache and swap cache, so I/O on it is fast. mmap maps the file into the process's address space, physical pages are allocated as they are touched, and from then on the process can use the memory directly. The internals of Android anonymous shared memory are not the focus of this article; if you are interested, see the articles "Principle of anonymous shared memory" and "The difference between shared memory and file memory mapping". Here we focus on how the shared memory is created, what the creation returns, and how it is used.
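The create-then-map sequence can be tried on ordinary Linux. The sketch below assumes memfd_create (Linux, glibc 2.27 or later) as a stand-in for Android's ashmem_create_region: it is an analogous anonymous, file-backed region, not the ashmem API itself. SharedRegion, roundToPage, createSharedRegion and destroySharedRegion are hypothetical names that mirror the fd, base address and page-rounded size kept by MemoryHeapBase.

```cpp
#include <sys/mman.h>   // memfd_create (glibc >= 2.27), mmap, munmap
#include <unistd.h>     // ftruncate, close, sysconf
#include <cassert>
#include <cstddef>
#include <cstring>

// Hypothetical mirror of MemoryHeapBase's state: descriptor, mapped base, rounded size.
struct SharedRegion {
    int fd = -1;
    void* base = MAP_FAILED;
    size_t size = 0;
};

// Round up to a whole number of pages, like the MemoryHeapBase constructor does.
size_t roundToPage(size_t size) {
    const size_t pagesize = static_cast<size_t>(sysconf(_SC_PAGESIZE));
    return (size + pagesize - 1) & ~(pagesize - 1);
}

// Create an anonymous file-backed region and map it read/write, shared.
bool createSharedRegion(const char* name, size_t size, SharedRegion* out) {
    size = roundToPage(size);
    int fd = memfd_create(name, 0);
    if (fd < 0) return false;
    if (ftruncate(fd, static_cast<off_t>(size)) != 0) {
        close(fd);
        return false;
    }
    void* base = mmap(nullptr, size, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    if (base == MAP_FAILED) {
        close(fd);
        return false;
    }
    out->fd = fd;
    out->base = base;
    out->size = size;
    return true;
}

void destroySharedRegion(SharedRegion* r) {
    if (r->base != MAP_FAILED) munmap(r->base, r->size);
    if (r->fd >= 0) close(r->fd);
    r->fd = -1;
    r->base = MAP_FAILED;
    r->size = 0;
}
```

A second mmap of the same fd sees the same bytes; in Android, the other process obtains that fd through Binder and maps it the same way.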

Let's see how MemoryHeapBase creates the shared memory, starting with its constructor:

```cpp
MemoryHeapBase::MemoryHeapBase(size_t size, uint32_t flags, char const * name)
    : mFD(-1), mSize(0), mBase(MAP_FAILED), mFlags(flags),
      mDevice(nullptr), mNeedUnmap(false), mOffset(0)
{
    const size_t pagesize = getpagesize();
    // Round size up to a whole number of pages
    size = ((size + pagesize-1) & ~(pagesize-1));
    // Create an anonymous shared memory region (backed by tmpfs)
    int fd = ashmem_create_region(name == nullptr ? "MemoryHeapBase" : name, size);
    ALOGE_IF(fd<0, "error creating ashmem region: %s", strerror(errno));
    if (fd >= 0) {
        // Map the shared memory into the current process so it can be accessed directly
        if (mapfd(fd, size) == NO_ERROR) {
            if (flags & READ_ONLY) {
                ashmem_set_prot_region(fd, PROT_READ);
            }
        }
    }
}

status_t MemoryHeapBase::mapfd(int fd, size_t size, off_t offset)
{
    ......
    if ((mFlags & DONT_MAP_LOCALLY) == 0) {
        // Map the shared memory into this process
        void* base = (uint8_t*)mmap(nullptr, size,
                PROT_READ|PROT_WRITE, MAP_SHARED, fd, offset);
        if (base == MAP_FAILED) {
            ALOGE("mmap(fd=%d, size=%zu) failed (%s)",
                    fd, size, strerror(errno));
            close(fd);
            return -errno;
        }
        // mBase is the mapped address
        mBase = base;
        mNeedUnmap = true;
    } else {
        mBase = nullptr; // not MAP_FAILED
        mNeedUnmap = false;
    }
    mFD = fd;          // file descriptor of the shared memory
    mSize = size;      // size of the memory
    mOffset = offset;  // offset within the file
    return NO_ERROR;
}
```

The code above is the anonymous shared memory allocation process. Note the two values it produces: mFD, the file descriptor, and mBase, the mapped memory address. How are they used? That can be seen in TrackBase:

```cpp
AudioFlinger::ThreadBase::TrackBase::TrackBase(
            ThreadBase *thread,
            const sp<Client>& client,
            const audio_attributes_t& attr,
            uint32_t sampleRate,
            audio_format_t format,
            audio_channel_mask_t channelMask,
            size_t frameCount,
            // non-null in static mode: the buffer supplied by the application
            void *buffer,
            size_t bufferSize,
            audio_session_t sessionId,
            pid_t creatorPid,
            uid_t clientUid,
            bool isOut,
            alloc_type alloc,
            track_type type,
            audio_port_handle_t portId)
    .......
{
    // In stream mode, roundup() rounds frameCount up to the next power of 2
    size_t minBufferSize = buffer == NULL ? roundup(frameCount) : frameCount;
    // check overflow when computing bufferSize due to multiplication by mFrameSize.
    if (minBufferSize < frameCount  // roundup rounds down for values above UINT_MAX / 2
            || mFrameSize == 0   // format needs to be correct
            || minBufferSize > SIZE_MAX / mFrameSize) {
        android_errorWriteLog(0x534e4554, "34749571");
        return;
    }
    // Multiply by the size of one frame to get bytes
    minBufferSize *= mFrameSize;
    if (buffer == nullptr) {
        bufferSize = minBufferSize; // allocated here.
    // A caller-supplied buffer smaller than the minimum size cannot hold the data: reject it
    } else if (minBufferSize > bufferSize) {
        android_errorWriteLog(0x534e4554, "38340117");
        return;
    }
    // The allocation always begins with an audio_track_cblk_t control block
    size_t size = sizeof(audio_track_cblk_t);
    if (buffer == NULL && alloc == ALLOC_CBLK) {
        // check overflow when computing allocation size for streaming tracks.
        if (size > SIZE_MAX - bufferSize) {
            android_errorWriteLog(0x534e4554, "34749571");
            return;
        }
        /** Total size = sizeof(audio_track_cblk_t) + mFrameSize * roundup(frameCount).
         * frameCount comes from the application side: the buffer size requested by the
         * user divided by the size of one frame.
         */
        size += bufferSize;
    }
    if (client != 0) {
        /** Allocate from the client's shared memory heap. The result is an Allocation
         * (an IMemory) because the heap sub-allocates from a MemoryHeapBase.
         */
        mCblkMemory = client->heap()->allocate(size);
        if (mCblkMemory == 0 ||
                // pointer() returns the mapped address of this allocation inside the
                // MemoryHeapBase (its mBase plus the allocation's offset); it is cast to
                // audio_track_cblk_t* and stored in mCblk
                (mCblk = static_cast<audio_track_cblk_t *>(mCblkMemory->pointer())) == NULL) {
            ALOGE("%s(%d): not enough memory for AudioTrack size=%zu", __func__, mId, size);
            client->heap()->dump("AudioTrack");
            mCblkMemory.clear();
            return;
        }
    } else {
        // Without a client, allocate the memory locally in this process
        mCblk = (audio_track_cblk_t *) malloc(size);
        if (mCblk == NULL) {
            ALOGE("%s(%d): not enough memory for AudioTrack size=%zu", __func__, mId, size);
            return;
        }
    }
    // construct the shared structure in-place.
    if (mCblk != NULL) {
        // Placement new: construct audio_track_cblk_t at the start of the memory
        new(mCblk) audio_track_cblk_t();
        switch (alloc) {
        ........
        case ALLOC_CBLK:
            // stream mode uses the anonymous shared memory allocated above
            if (buffer == NULL) {
                // mBuffer starts right after the control block
                mBuffer = (char*)mCblk + sizeof(audio_track_cblk_t);
                memset(mBuffer, 0, bufferSize);
            } else {
                // static mode uses the buffer passed from the application layer directly
                mBuffer = buffer;
#if 0
                mCblk->mFlags = CBLK_FORCEREADY;    // FIXME hack, need to fix the track ready logic
#endif
            }
            break;
        .......
        }
    }
}
```

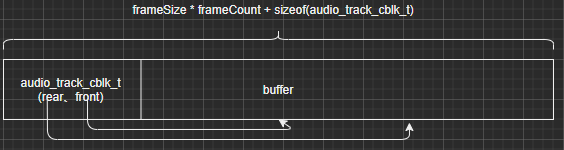

The code above shows that, in the AudioFlinger module, the anonymous shared memory is created by MemoryHeapBase and managed by Track and TrackBase. The member mCblk, of type audio_track_cblk_t, coordinates how the client and the server use the shared memory, and it is very important. The memory that actually carries the data is referenced by the mBuffer member. In stream mode, the total allocation is sizeof(audio_track_cblk_t) + frameSize * roundup(frameCount), where roundup() rounds frameCount up to a power of two. Note, however, that in static mode mBuffer is the buffer passed from the application side rather than memory allocated here.
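The stream-mode layout, a control block followed immediately by the data, can be sketched in a few lines. This assumes a hypothetical ControlBlockSketch holding only two counters in place of the real audio_track_cblk_t, and a plain malloc in place of the shared heap; roundupPow2 reproduces the round-up-to-a-power-of-two behaviour described for roundup(). Note that cblk + 1 and (char*)cblk + sizeof(control block) are the same address, which is how the server's mBuffer and the client's buffers end up pointing at the same data.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <cstring>
#include <new>

// Hypothetical stand-in for audio_track_cblk_t: only the read/write counters.
struct ControlBlockSketch {
    int32_t front = 0;  // read position in frames, advanced by the consumer
    int32_t rear = 0;   // write position in frames, advanced by the producer
};

// Smallest power of two >= v (for v >= 1).
size_t roundupPow2(size_t v) {
    size_t p = 1;
    while (p < v) p <<= 1;
    return p;
}

// Bytes needed for a streaming track: control block followed by the data buffer.
size_t streamingAllocationSize(size_t frameCount, size_t frameSize) {
    return sizeof(ControlBlockSketch) + roundupPow2(frameCount) * frameSize;
}

// Lay out [control block][data] inside one allocation and return the control block.
ControlBlockSketch* layoutTrackMemory(void* base, size_t dataBytes, void** buffer) {
    auto* cblk = new (base) ControlBlockSketch();  // construct in place, as TrackBase does
    *buffer = cblk + 1;                            // data starts right after the control block
    std::memset(*buffer, 0, dataBytes);            // start with silence
    return cblk;
}
```

For example, 960 frames of 16-bit stereo (4 bytes per frame) round up to 1024 frames, so the allocation is the control block plus 4096 bytes.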

In the allocation diagram of the anonymous shared memory, audio_track_cblk_t synchronizes writing and reading between the client and the server, while the buffer that follows it is the memory actually used to read and write data. Think of mBuffer as a ring buffer: rear is the write position and front is the read position. Each write starts at rear and each read starts at front. But how much can be written each time, and how much can be read? That is controlled by the audio_track_cblk_t at the front of the block.
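Because front and rear only ever increase, the fill level is simply their difference, and a position inside the buffer is found by masking with the power-of-two capacity. A minimal sketch of that accounting with hypothetical helper names; it uses uint32_t so that wrap-around of the counters is well defined in C++, whereas the AOSP code uses int32_t together with an overflow-safe subtraction helper:

```cpp
#include <cassert>
#include <cstdint>

// front/rear are free-running frame counters: they are never reset when the ring wraps,
// so the fill level is their difference. Unsigned arithmetic stays correct even after
// the 32-bit counters themselves wrap around.
uint32_t framesFilled(uint32_t front, uint32_t rear) {
    return rear - front;
}

// Free space for the writer, or readable data for the reader.
uint32_t framesAvailable(uint32_t front, uint32_t rear, uint32_t capacity, bool isWriter) {
    uint32_t filled = framesFilled(front, rear);
    assert(filled <= capacity);  // anything larger would mean a corrupt control block
    return isWriter ? capacity - filled : filled;
}

// Index inside the buffer; the mask works because capacityP2 is a power of two.
uint32_t indexInBuffer(uint32_t counter, uint32_t capacityP2) {
    return counter & (capacityP2 - 1);
}
```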

AudioFlinger server module manages shared memory

Back in the Track constructor, an object is created that manages the shared memory interaction with the application side:

```cpp
AudioFlinger::PlaybackThread::Track::Track(
            PlaybackThread *thread,
            const sp<Client>& client,
            audio_stream_type_t streamType,
            const audio_attributes_t& attr,
            uint32_t sampleRate,
            audio_format_t format,
            audio_channel_mask_t channelMask,
            size_t frameCount,
            void *buffer,
            size_t bufferSize,
            const sp<IMemory>& sharedBuffer,
            audio_session_t sessionId,
            pid_t creatorPid,
            uid_t uid,
            audio_output_flags_t flags,
            track_type type,
            audio_port_handle_t portId)
    :   TrackBase(thread, client, attr, sampleRate, format, channelMask, frameCount,
                  (sharedBuffer != 0) ? sharedBuffer->pointer() : buffer,
                  (sharedBuffer != 0) ? sharedBuffer->size() : bufferSize,
                  sessionId, creatorPid, uid, true /*isOut*/,
                  (type == TYPE_PATCH) ? ( buffer == NULL ? ALLOC_LOCAL : ALLOC_NONE) : ALLOC_CBLK,
                  type, portId)
{
    // mCblk was set up in TrackBase; if it is NULL the shared memory allocation failed, so return
    if (mCblk == NULL) {
        return;
    }
    // sharedBuffer comes from the application side: 0 means stream mode, non-0 means static mode
    if (sharedBuffer == 0) {
        // stream mode
        mAudioTrackServerProxy = new AudioTrackServerProxy(mCblk, mBuffer, frameCount,
                mFrameSize, !isExternalTrack(), sampleRate);
    } else {
        // static mode: here mBuffer is really the memory address passed from the application side
        mAudioTrackServerProxy = new StaticAudioTrackServerProxy(mCblk, mBuffer, frameCount,
                mFrameSize);
    }
    // mServerProxy is the server-side end that coordinates use of the shared memory with the application
    mServerProxy = mAudioTrackServerProxy;
    ......
}
```

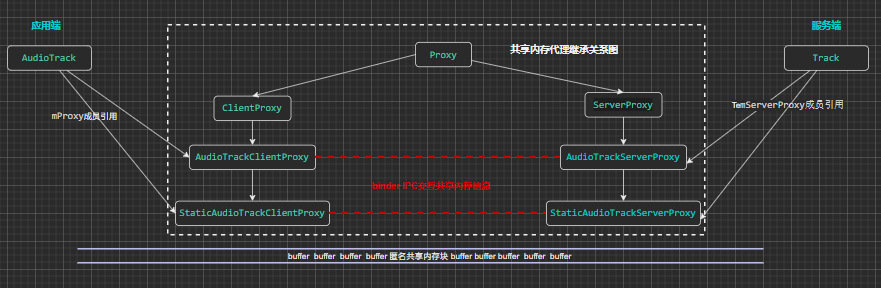

The important thing to remember here is mAudioTrackServerProxy. It manages and synchronizes the use of the anonymous shared memory, and the application side has a matching ClientProxy. Through these proxies, the application side and the server call obtainBuffer to request space and coordinate writing and reading. At this point AudioFlinger has finished its part of setting up the shared memory: in stream mode, mBuffer is the anonymous shared memory created by the server, while in static mode mBuffer comes from the sharedBuffer passed in by the application.

PlaybackThread then has one more important job to do, as follows:

```cpp
sp<AudioFlinger::PlaybackThread::Track> AudioFlinger::PlaybackThread::createTrack_l(.....){
    .......
    track = new Track(this, client, streamType, attr, sampleRate, format,
                      channelMask, frameCount,
                      nullptr /* buffer */, (size_t)0 /* bufferSize */, sharedBuffer,
                      sessionId, creatorPid, uid, *flags, TrackBase::TYPE_DEFAULT, portId);
    mTracks.add(track);
    .......
    return track;
}

sp<IAudioTrack> AudioFlinger::createTrack(const CreateTrackInput& input,
                                          CreateTrackOutput& output,
                                          status_t *status)
{
    ........
    // track is declared earlier as sp<PlaybackThread::Track>
    track = thread->createTrack_l(client, streamType, localAttr, &output.sampleRate,
                                  input.config.format, input.config.channel_mask,
                                  &output.frameCount, &output.notificationFrameCount,
                                  input.notificationsPerBuffer, input.speed,
                                  input.sharedBuffer, sessionId, &output.flags,
                                  callingPid, input.clientInfo.clientTid, clientUid,
                                  &lStatus, portId);
    ........
    // return handle to client
    trackHandle = new TrackHandle(track);
}
```

The above code has two key points:

- PlaybackThread adds the track to its own mTracks member. What is the significance of this?

- The track is wrapped once more, as a TrackHandle, and returned to the application layer. So how does the application layer handle the TrackHandle?

The application layer module handles shared memory

After the application layer learns that the AudioFlinger server module has created the Track successfully, the code logic is as follows:

```cpp
status_t AudioTrack::createTrack_l()
{
    .......
    IAudioFlinger::CreateTrackOutput output;
    // The returned IAudioTrack stands for the TrackHandle wrapping the Track created by
    // AudioFlinger; output carries the portId, outputId, session and other information
    sp<IAudioTrack> track = audioFlinger->createTrack(input,
                                                      output,
                                                      &status);
    .......
    // getCblk() returns the server-side TrackBase's mCblkMemory, the whole shared memory block
    sp<IMemory> iMem = track->getCblk();
    if (iMem == 0) {
        ALOGE("%s(%d): Could not get control block", __func__, mPortId);
        status = NO_INIT;
        goto exit;
    }
    // Address at which the shared memory is mapped in this process; it begins with audio_track_cblk_t
    void *iMemPointer = iMem->pointer();
    if (iMemPointer == NULL) {
        ALOGE("%s(%d): Could not get control block pointer", __func__, mPortId);
        status = NO_INIT;
        goto exit;
    }
    // mAudioTrack is the previous IAudioTrack (if any); unlink its death notification first
    if (mAudioTrack != 0) {
        IInterface::asBinder(mAudioTrack)->unlinkToDeath(mDeathNotifier, this);
        mDeathNotifier.clear();
    }
    // Keep the track created by AudioFlinger; later calls on it go through Binder
    mAudioTrack = track;
    mCblkMemory = iMem;
    IPCThreadState::self()->flushCommands();
    // The start of the block is the audio_track_cblk_t; the data buffer follows it
    audio_track_cblk_t* cblk = static_cast<audio_track_cblk_t*>(iMemPointer);
    mCblk = cblk;
    .......
    // buffers: address of the audio data buffer
    void* buffers;
    if (mSharedBuffer == 0) {
        buffers = cblk + 1;
    } else {
        // Static mode uses the shared buffer allocated by the client itself
        buffers = mSharedBuffer->pointer();
        if (buffers == NULL) {
            ALOGE("%s(%d): Could not get buffer pointer", __func__, mPortId);
            status = NO_INIT;
            goto exit;
        }
    }
    mAudioTrack->attachAuxEffect(mAuxEffectId);
    // If IAudioTrack is re-created, don't let the requested frameCount
    // decrease.  This can confuse clients that cache frameCount().
    if (mFrameCount > mReqFrameCount) {
        mReqFrameCount = mFrameCount;
    }
    // reset server position to 0 as we have new cblk.
    mServer = 0;
    // update proxy. In stream mode, data is written through this mProxy.
    /**
     * mProxy is the proxy through which the data memory is accessed. Static mode uses
     * StaticAudioTrackClientProxy, with the buffers memory provided by the app client;
     * stream mode uses AudioTrackClientProxy, with the memory provided by AudioFlinger.
     */
    if (mSharedBuffer == 0) {
        mStaticProxy.clear();
        mProxy = new AudioTrackClientProxy(cblk, buffers, mFrameCount, mFrameSize);
    } else {
        mStaticProxy = new StaticAudioTrackClientProxy(cblk, buffers, mFrameCount, mFrameSize);
        mProxy = mStaticProxy;
    }
    .......
}
```

On the application side, after receiving the server's TrackHandle, the main work is to extract from it the shared memory control structure audio_track_cblk_t and the address of the mapped data buffer, buffers. In static mode, the memory mSharedBuffer allocated by the client itself is used directly. Finally, the client wraps buffers and audio_track_cblk_t in an AudioTrackClientProxy (a StaticAudioTrackClientProxy in static mode) for subsequent data writing and memory management. The relationship between the application layer and the AudioFlinger server is summarized as follows:

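Note that in this picture the ClientProxy and the ServerProxy do not exchange data over Binder. They coordinate through the audio_track_cblk_t in shared memory: atomic loads and stores of mFront and mRear tell each side where and how much it can write or read, and the proxies wait for and wake each other through a futex in the control block. Binder (IAudioTrack/TrackHandle) carries only control operations, such as start and stop and the initial handoff of the shared memory. The anonymous shared memory buffer behind the control block holds the audio data itself.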
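The sketch below illustrates that synchronization scheme: one thread writes frames and advances rear with a release store, another reads frames and advances front the same way, and each side reads the other's counter with an acquire load, so the data itself needs no message passing. FrameRing and transferOnTwoThreads are hypothetical; the threads busy-wait with std::this_thread::yield() where the real proxies sleep on the control block's futex, and the ring lives in ordinary process memory rather than memory shared between processes.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>
#include <cstring>
#include <thread>
#include <vector>

// Single-producer/single-consumer ring modelled on the cblk protocol.
class FrameRing {
public:
    FrameRing(uint32_t capacityP2, size_t frameSize)
        : mCapacity(capacityP2), mFrameSize(frameSize), mData(capacityP2 * frameSize) {
        assert(capacityP2 != 0 && (capacityP2 & (capacityP2 - 1)) == 0);
    }

    // Writer side (like the client proxy): copies as many frames as fit, returns the count.
    uint32_t write(const uint8_t* src, uint32_t frames) {
        uint32_t front = mFront.load(std::memory_order_acquire);  // see what the reader freed
        uint32_t rear = mRear.load(std::memory_order_relaxed);
        uint32_t avail = mCapacity - (rear - front);
        uint32_t n = frames < avail ? frames : avail;
        for (uint32_t i = 0; i < n; ++i) {
            uint32_t idx = (rear + i) & (mCapacity - 1);
            std::memcpy(&mData[idx * mFrameSize], src + i * mFrameSize, mFrameSize);
        }
        mRear.store(rear + n, std::memory_order_release);  // publish, like releaseBuffer()
        return n;
    }

    // Reader side (like the server proxy): copies out available frames, returns the count.
    uint32_t read(uint8_t* dst, uint32_t frames) {
        uint32_t rear = mRear.load(std::memory_order_acquire);  // see what the writer published
        uint32_t front = mFront.load(std::memory_order_relaxed);
        uint32_t filled = rear - front;
        uint32_t n = frames < filled ? frames : filled;
        for (uint32_t i = 0; i < n; ++i) {
            uint32_t idx = (front + i) & (mCapacity - 1);
            std::memcpy(dst + i * mFrameSize, &mData[idx * mFrameSize], mFrameSize);
        }
        mFront.store(front + n, std::memory_order_release);
        return n;
    }

private:
    const uint32_t mCapacity;
    const size_t mFrameSize;
    std::vector<uint8_t> mData;
    std::atomic<uint32_t> mFront{0};
    std::atomic<uint32_t> mRear{0};
};

// Pushes `total` one-byte frames through an 8-frame ring on two threads.
std::vector<uint8_t> transferOnTwoThreads(uint32_t total) {
    FrameRing ring(8, 1);
    std::vector<uint8_t> out(total);
    std::thread reader([&] {
        uint32_t got = 0;
        while (got < total) {
            uint32_t n = ring.read(out.data() + got, total - got);
            got += n;
            if (n == 0) std::this_thread::yield();
        }
    });
    std::vector<uint8_t> in(total);
    for (uint32_t i = 0; i < total; ++i) in[i] = static_cast<uint8_t>(i);
    uint32_t sent = 0;
    while (sent < total) {
        uint32_t n = ring.write(in.data() + sent, total - sent);
        sent += n;
        if (n == 0) std::this_thread::yield();
    }
    reader.join();
    return out;
}
```

The writer never touches slots between front and rear, and the reader never touches slots beyond rear, so the acquire/release pairing alone keeps the two sides from overwriting each other.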

What is the sharedBuffer of static mode? Is it also anonymous shared memory?

Answer: Yes. To confirm this, look at how the application side creates sharedBuffer in static mode. This happens when the application creates an AudioTrack, in the android_media_AudioTrack_setup function of android_media_AudioTrack.cpp:

```cpp
static jint
android_media_AudioTrack_setup(/* ...... */) {
    ......
    switch (memoryMode) {
    case MODE_STREAM:
        ......
    case MODE_STATIC:
        // In static mode the memory is created by the client application itself
        if (!lpJniStorage->allocSharedMem(buffSizeInBytes)) {
            ALOGE("Error creating AudioTrack in static mode: error creating mem heap base");
            goto native_init_failure;
        }
        ......
    }
    ......
}

bool allocSharedMem(int sizeInBytes) {
    mMemHeap = new MemoryHeapBase(sizeInBytes, 0, "AudioTrack Heap Base");
    if (mMemHeap->getHeapID() < 0) {
        return false;
    }
    mMemBase = new MemoryBase(mMemHeap, 0, sizeInBytes);
    return true;
}
```

Look familiar? mMemHeap is a MemoryHeapBase, the class responsible for creating anonymous shared memory. So in both stream and static mode, audio data travels between the application and AudioFlinger through shared memory; the difference is who creates it and how large it is.

The application layer writes data to the AudioFlinger server module

Here we explain how data is written in stream mode. Static mode is similar, since it also transfers data through shared memory. The data writing logic of stream mode is roughly as follows:

The figure does not cover the Java-to-native part of the application layer, only the data transfer from the native AudioTrack to AudioFlinger, which is the part we care about. This code is fairly complex, but with the simplified diagram we only need to look at how the two ends of the shared memory write and read.

Application layer write

In the write function of AudioTrack:

```cpp
ssize_t AudioTrack::write(const void* buffer, size_t userSize, bool blocking)
{
    if (mTransfer != TRANSFER_SYNC && mTransfer != TRANSFER_SYNC_NOTIF_CALLBACK) {
        return INVALID_OPERATION;
    }
    .......
    size_t written = 0;
    Buffer audioBuffer;
    while (userSize >= mFrameSize) {
        audioBuffer.frameCount = userSize / mFrameSize;
        // Obtain a free chunk of the shared memory
        status_t err = obtainBuffer(&audioBuffer,
                blocking ? &ClientProxy::kForever : &ClientProxy::kNonBlocking);
        if (err < 0) {
            if (written > 0) {
                break;
            }
            if (err == TIMED_OUT || err == -EINTR) {
                err = WOULD_BLOCK;
            }
            return ssize_t(err);
        }
        size_t toWrite = audioBuffer.size;
        // Copy the audio data into the obtained chunk
        memcpy(audioBuffer.i8, buffer, toWrite);
        buffer = ((const char *) buffer) + toWrite;
        userSize -= toWrite;
        written += toWrite;
        releaseBuffer(&audioBuffer);
    }
    if (written > 0) {
        mFramesWritten += written / mFrameSize;
        if (mTransfer == TRANSFER_SYNC_NOTIF_CALLBACK) {
            // t is the callback thread; waking it lets it deliver callbacks to the upper (Java) layer
            const sp<AudioTrackThread> t = mAudioTrackThread;
            if (t != 0) {
                t->wake();
            }
        }
    }
    return written;
}
```
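The return value of this loop is easy to misread: a short write returns the bytes written so far, and only a call that makes no progress at all reports an error. A minimal sketch of that behaviour for the non-blocking case, assuming a hypothetical FakeSharedBuffer that nothing drains and a hypothetical kWouldBlock constant standing in for WOULD_BLOCK:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <sys/types.h>  // ssize_t
#include <vector>

constexpr ssize_t kWouldBlock = -11;  // hypothetical stand-in for WOULD_BLOCK

// Hypothetical fixed-capacity buffer that is never drained, so it eventually fills up.
class FakeSharedBuffer {
public:
    FakeSharedBuffer(size_t capacityFrames, size_t frameSize)
        : mData(capacityFrames * frameSize), mFrameSize(frameSize) {}
    // Grants up to *frames free frames; returns nullptr (and 0 frames) when full.
    uint8_t* obtain(size_t* frames) {
        size_t freeFrames = mData.size() / mFrameSize - mUsedFrames;
        if (*frames > freeFrames) *frames = freeFrames;
        return *frames ? &mData[mUsedFrames * mFrameSize] : nullptr;
    }
    void release(size_t frames) { mUsedFrames += frames; }
    size_t frameSize() const { return mFrameSize; }
private:
    std::vector<uint8_t> mData;
    size_t mFrameSize;
    size_t mUsedFrames = 0;
};

// Same shape as AudioTrack::write with blocking == false.
ssize_t writeNonBlocking(FakeSharedBuffer& shared, const void* buffer, size_t userSize) {
    const size_t frameSize = shared.frameSize();
    size_t written = 0;
    while (userSize >= frameSize) {
        size_t frames = userSize / frameSize;
        uint8_t* dst = shared.obtain(&frames);
        if (dst == nullptr) {
            if (written > 0) break;  // partial success: report what was written
            return kWouldBlock;      // no progress at all
        }
        const size_t toWrite = frames * frameSize;
        std::memcpy(dst, buffer, toWrite);
        buffer = static_cast<const uint8_t*>(buffer) + toWrite;
        userSize -= toWrite;
        written += toWrite;
        shared.release(frames);
    }
    return static_cast<ssize_t>(written);
}
```

A caller that receives fewer bytes than it offered is expected to retry the remainder later, which is why the partial count matters more than an error code here.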

In short, AudioTrack::write first obtains free space from the shared memory, then copies the data in with memcpy, and AudioFlinger reads it later. Its obtainBuffer call eventually ends up in ClientProxy::obtainBuffer. Let's take a look:

```cpp
status_t ClientProxy::obtainBuffer(Buffer* buffer, const struct timespec *requested,
        struct timespec *elapsed)
{
    .......
        // compute number of frames available to write (AudioTrack) or read (AudioRecord)
        int32_t front;  // front is the read index
        int32_t rear;   // rear is the write index
        // mIsOut means this proxy writes data
        if (mIsOut) {
            // To find as much free space as possible, read the latest mFront
            // to see how far the server has read.
            // The barrier following the read of mFront is probably redundant.
            // We're about to perform a conditional branch based on 'filled',
            // which will force the processor to observe the read of mFront
            // prior to allowing data writes starting at mRaw.
            // However, the processor may support speculative execution,
            // and be unable to undo speculative writes into shared memory.
            // The barrier will prevent such speculative execution.
            front = android_atomic_acquire_load(&cblk->u.mStreaming.mFront);
            rear = cblk->u.mStreaming.mRear;
        } else {
            // On the other hand, this barrier is required.
            rear = android_atomic_acquire_load(&cblk->u.mStreaming.mRear);
            front = cblk->u.mStreaming.mFront;
        }
        // write to rear, read from front
        ssize_t filled = audio_utils::safe_sub_overflow(rear, front);
        // pipe should not be overfull
        if (!(0 <= filled && (size_t) filled <= mFrameCount)) {
            if (mIsOut) {
                ALOGE("Shared memory control block is corrupt (filled=%zd, mFrameCount=%zu); "
                        "shutting down", filled, mFrameCount);
                mIsShutdown = true;
                status = NO_INIT;
                goto end;
            }
            // for input, sync up on overrun
            filled = 0;
            cblk->u.mStreaming.mFront = rear;
            (void) android_atomic_or(CBLK_OVERRUN, &cblk->mFlags);
        }
        // Don't allow filling pipe beyond the user settable size.
        // The calculation for avail can go negative if the buffer size
        // is suddenly dropped below the amount already in the buffer.
        // So use a signed calculation to prevent a numeric overflow abort.
        ssize_t adjustableSize = (ssize_t) getBufferSizeInFrames();
        // avail: free frames when writing, filled frames when reading
        ssize_t avail = (mIsOut) ? adjustableSize - filled : filled;
        if (avail < 0) {
            avail = 0;
        } else if (avail > 0) {
            // 'avail' may be non-contiguous, so return only the first contiguous chunk
            size_t part1;
            if (mIsOut) {
                rear &= mFrameCountP2 - 1;
                part1 = mFrameCountP2 - rear;
            } else {
                front &= mFrameCountP2 - 1;
                part1 = mFrameCountP2 - front;
            }
            // part1: frames from the current index to the end of the buffer, capped by avail
            if (part1 > (size_t)avail) {
                part1 = avail;
            }
            // Do not hand out more frames than the caller asked for
            if (part1 > buffer->mFrameCount) {
                part1 = buffer->mFrameCount;
            }
            buffer->mFrameCount = part1;
            // mRaw: chunk address = buffer start + masked index (rear or front) * mFrameSize
            buffer->mRaw = part1 > 0 ?
                    &((char *) mBuffers)[(mIsOut ? rear : front) * mFrameSize] : NULL;
            buffer->mNonContig = avail - part1;
            mUnreleased = part1;
            status = NO_ERROR;
            break;
        }
    ......
}
```

The code above obtains a free chunk: it checks whether the remaining space can satisfy the request and, if so, stores the address of the free region (at the rear position) in buffer->mRaw. Back in AudioTrack::write, the audio data is copied into the buffer, and the buffer is then released. Releasing does not free any memory, since the server has not read the data yet; it only publishes the new write position, as the following function shows:

```cpp
/**
 * releaseBuffer does not free memory; it only publishes the new position in mCblk:
 * a writer advances mRear, a reader advances mFront.
 */
__attribute__((no_sanitize("integer")))
void ClientProxy::releaseBuffer(Buffer* buffer)
{
    LOG_ALWAYS_FATAL_IF(buffer == NULL);
    // stepCount: number of frames written (or read) this time
    size_t stepCount = buffer->mFrameCount;
    if (stepCount == 0 || mIsShutdown) {
        // prevent accidental re-use of buffer
        buffer->mFrameCount = 0;
        buffer->mRaw = NULL;
        buffer->mNonContig = 0;
        return;
    }
    .......
    mUnreleased -= stepCount;
    audio_track_cblk_t* cblk = mCblk;
    // Both of these barriers are required
    if (mIsOut) {
        // When writing data, publish the new mRear
        int32_t rear = cblk->u.mStreaming.mRear;
        android_atomic_release_store(stepCount + rear, &cblk->u.mStreaming.mRear);
    } else {
        // When reading data, publish the new mFront
        int32_t front = cblk->u.mStreaming.mFront;
        android_atomic_release_store(stepCount + front, &cblk->u.mStreaming.mFront);
    }
}
```
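The writer-side arithmetic of obtainBuffer can be isolated into a pure function, which makes the first-contiguous-chunk rule easy to check. This is a simplified sketch: no blocking, no corruption check, and ObtainResult and obtainForWrite are hypothetical names. front and rear are the free-running counters, capacityP2 corresponds to mFrameCountP2, and adjustableSize to getBufferSizeInFrames().

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Mirrors the fields obtainBuffer fills in: mFrameCount, mRaw (as an offset), mNonContig.
struct ObtainResult {
    size_t frames;     // contiguous frames granted
    size_t offset;     // byte offset of the chunk inside the data buffer
    size_t nonContig;  // frames still available after wrapping to the start
};

ObtainResult obtainForWrite(uint32_t front, uint32_t rear, size_t capacityP2,
                            size_t adjustableSize, size_t requested, size_t frameSize) {
    const uint32_t filled = rear - front;  // wrap-safe unsigned difference
    const size_t avail = filled >= adjustableSize ? 0 : adjustableSize - filled;
    if (avail == 0) return {0, 0, 0};
    const size_t index = rear & (capacityP2 - 1);  // rear's position inside the ring
    size_t part1 = capacityP2 - index;             // frames up to the physical end
    if (part1 > avail) part1 = avail;              // no more than is free
    if (part1 > requested) part1 = requested;      // no more than was asked for
    return {part1, index * frameSize, avail - part1};
}
```

For a 1024-frame ring with rear at 1000 and front at 900, 924 frames are free but only 24 are contiguous, so a writer asking for 500 frames gets 24 now and learns that 900 more are waiting at the start of the buffer.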

One detail to insert here: AudioTrack's start function must be called before audio data is written. The framework normally calls it as part of the playback flow, so there is no need to call it manually here. start() crosses into AudioFlinger through the TrackHandle and invokes start on the Track. The key part of Track::start is:

```cpp
status_t AudioFlinger::PlaybackThread::Track::start(AudioSystem::sync_event_t event __unused,
                                                    audio_session_t triggerSession __unused)
{
    ......
    status = playbackThread->addTrack_l(this);
    ......
}

status_t AudioFlinger::PlaybackThread::addTrack_l(const sp<Track>& track)
{
    ......
    mActiveTracks.add(track);
    ......
}
```

That is, the current track is added to the PlaybackThread's mActiveTracks. Looking back at the data transfer diagram, this is how the PlaybackThread knows which tracks to take data from.
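The two lists play different roles: creation registers a track with the thread (mTracks), but only start() makes the thread service it (mActiveTracks). A hypothetical sketch of that split, with invented names and std::shared_ptr standing in for sp<>; the real thread uses these lists for more than this, for example stopping, pausing and removing tracks.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

struct TrackSketch {
    int id = 0;
    int framesServed = 0;
};

class PlaybackThreadLists {
public:
    // Like createTrack_l: every created track is remembered in mTracks.
    std::shared_ptr<TrackSketch> createTrack(int id) {
        auto track = std::make_shared<TrackSketch>();
        track->id = id;
        mTracks.push_back(track);
        return track;
    }
    // Like addTrack_l: a started track joins mActiveTracks (only once).
    void start(const std::shared_ptr<TrackSketch>& track) {
        if (std::find(mActiveTracks.begin(), mActiveTracks.end(), track) == mActiveTracks.end())
            mActiveTracks.push_back(track);
    }
    void stop(const std::shared_ptr<TrackSketch>& track) {
        mActiveTracks.erase(std::remove(mActiveTracks.begin(), mActiveTracks.end(), track),
                            mActiveTracks.end());
    }
    // One loop iteration: only active tracks are serviced.
    void loopOnce(int frames) {
        for (auto& t : mActiveTracks) t->framesServed += frames;
    }
    size_t trackCount() const { return mTracks.size(); }
    size_t activeCount() const { return mActiveTracks.size(); }
private:
    std::vector<std::shared_ptr<TrackSketch>> mTracks;
    std::vector<std::shared_ptr<TrackSketch>> mActiveTracks;
};
```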

The AudioFlinger server module reads the data of the application

The server's read process closely mirrors the client's write process: it obtains a readable chunk based on the positions in cblk (here through the track's getNextBuffer), copies the data, and releases the chunk:

```cpp
void AudioFlinger::DirectOutputThread::threadLoop_mix()
{
    size_t frameCount = mFrameCount;
    int8_t *curBuf = (int8_t *)mSinkBuffer;
    // output audio to hardware
    while (frameCount) {
        AudioBufferProvider::Buffer buffer;
        buffer.frameCount = frameCount;
        status_t status = mActiveTrack->getNextBuffer(&buffer);
        if (status != NO_ERROR || buffer.raw == NULL) {
            // no need to pad with 0 for compressed audio
            if (audio_has_proportional_frames(mFormat)) {
                memset(curBuf, 0, frameCount * mFrameSize);
            }
            break;
        }
        // Copy the audio data into curBuf, i.e. into mSinkBuffer
        memcpy(curBuf, buffer.raw, buffer.frameCount * mFrameSize);
        frameCount -= buffer.frameCount;
        curBuf += buffer.frameCount * mFrameSize;
        mActiveTrack->releaseBuffer(&buffer);
    }
    mCurrentWriteLength = curBuf - (int8_t *)mSinkBuffer;
    mSleepTimeUs = 0;
    mStandbyTimeNs = systemTime() + mStandbyDelayNs;
    mActiveTrack.clear();
}
```

The DirectOutputThread used here can hold only one Track, so the member is mActiveTrack (singular). A MixerThread keeps multiple tracks in mActiveTracks, and mixing is also involved.
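The two strategies can be contrasted in a short sketch, assuming mono 16-bit samples. The direct path copies one track and pads silence on underrun, for PCM only, matching the audio_has_proportional_frames check above. The mixer path sums several tracks and saturates. The function names are hypothetical, and the saturating integer sum is a simplification; the real mixer also handles volume, resampling and format conversion.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Direct path: copy what one track has ready; pad the rest with silence for PCM only.
size_t copySingleTrack(const std::vector<int16_t>& ready, std::vector<int16_t>& sink, bool isPcm) {
    const size_t copied = std::min(ready.size(), sink.size());
    std::copy(ready.begin(), ready.begin() + static_cast<std::ptrdiff_t>(copied), sink.begin());
    if (isPcm) {
        std::fill(sink.begin() + static_cast<std::ptrdiff_t>(copied), sink.end(), int16_t{0});
    }
    return copied;
}

// Mixer path: sum every track per sample in 32 bits, then clamp to the int16 range.
void mixTracks(const std::vector<std::vector<int16_t>>& tracks, std::vector<int16_t>& sink) {
    for (size_t i = 0; i < sink.size(); ++i) {
        int32_t acc = 0;
        for (const auto& t : tracks) {
            if (i < t.size()) acc += t[i];  // a short track contributes silence
        }
        acc = std::max<int32_t>(INT16_MIN, std::min<int32_t>(acc, INT16_MAX));
        sink[i] = static_cast<int16_t>(acc);
    }
}
```

Padding compressed data with zeros would corrupt the bitstream, which is why the direct path only pads PCM.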

In short, the read process finds the memory where data has been written and copies the data into the mSinkBuffer member; the data is then written out in threadLoop_write:

ssize_t AudioFlinger::PlaybackThread::threadLoop_write()

{

LOG_HIST_TS();

mInWrite = true;

ssize_t bytesWritten;

const size_t offset = mCurrentWriteLength - mBytesRemaining;

// If an NBAIO sink is present, use it to write the normal mixer's submix

if (mNormalSink != 0) {

const size_t count = mBytesRemaining / mFrameSize;

ATRACE_BEGIN("write");

// update the setpoint when AudioFlinger::mScreenState changes

uint32_t screenState = AudioFlinger::mScreenState;

if (screenState != mScreenState) {

mScreenState = screenState;

MonoPipe *pipe = (MonoPipe *)mPipeSink.get();

if (pipe != NULL) {

pipe->setAvgFrames((mScreenState & 1) ?

(pipe->maxFrames() * 7) / 8 : mNormalFrameCount * 2);

}

}

ssize_t framesWritten = mNormalSink->write((char *)mSinkBuffer + offset, count);

ATRACE_END();

if (framesWritten > 0) {

bytesWritten = framesWritten * mFrameSize;

#ifdef TEE_SINK

mTee.write((char *)mSinkBuffer + offset, framesWritten);

#endif

} else {

bytesWritten = framesWritten;

}

// otherwise use the HAL / AudioStreamOut directly

} else {

// Direct output and offload threads

if (mUseAsyncWrite) {

ALOGW_IF(mWriteAckSequence & 1, "threadLoop_write(): out of sequence write request");

mWriteAckSequence += 2;

mWriteAckSequence |= 1;

ALOG_ASSERT(mCallbackThread != 0);

mCallbackThread->setWriteBlocked(mWriteAckSequence);

}

// FIXME We should have an implementation of timestamps for direct output threads.

// They are used e.g for multichannel PCM playback over HDMI. mSinkBuffer holds the audio data, which is written to mOutput here

bytesWritten = mOutput->write((char *)mSinkBuffer + offset, mBytesRemaining);

if (mUseAsyncWrite &&

((bytesWritten < 0) || (bytesWritten == (ssize_t)mBytesRemaining))) {

// do not wait for async callback in case of error of full write

mWriteAckSequence &= ~1;

ALOG_ASSERT(mCallbackThread != 0);

mCallbackThread->setWriteBlocked(mWriteAckSequence);

}

}

mNumWrites++;

mInWrite = false;

mStandby = false;

return bytesWritten;

}
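The `offset = mCurrentWriteLength - mBytesRemaining` line lets a write resume where a previous partial write stopped. The sketch below models that in bytes, assuming (as the formula implies, though the caller is not shown here) that after each call the returned byte count is subtracted from mBytesRemaining. `FakeSink` and `drainSinkBuffer` are hypothetical; in the mNormalSink branch the real code counts frames and converts with mFrameSize.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical sink that accepts at most maxBytesPerWrite bytes per call,
// to simulate an output that performs partial writes.
struct FakeSink {
    size_t maxBytesPerWrite;
    std::vector<uint8_t> received;

    long write(const uint8_t* buf, size_t bytes) {
        const size_t n = std::min(bytes, maxBytesPerWrite);
        received.insert(received.end(), buf, buf + n);
        return static_cast<long>(n);
    }
};

// Drains sinkBuffer[0, currentWriteLength) into the sink. Each call starts at
// offset = currentWriteLength - bytesRemaining. Returns the number of write calls.
int drainSinkBuffer(const std::vector<uint8_t>& sinkBuffer, size_t currentWriteLength,
                    FakeSink& sink) {
    size_t bytesRemaining = currentWriteLength;
    int writes = 0;
    while (bytesRemaining > 0) {
        const size_t offset = currentWriteLength - bytesRemaining;
        const long written = sink.write(sinkBuffer.data() + offset, bytesRemaining);
        ++writes;
        if (written <= 0) break;   // error or no progress: stop draining
        bytesRemaining -= static_cast<size_t>(written);
    }
    return writes;
}
```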

The audio data is now in mSinkBuffer, and the next step is writing it to the HAL. A PlaybackThread may output data through three members: mOutput, mPipeSink and mNormalSink. mOutput writes to the HAL through shared memory, while whether mPipeSink and mNormalSink are used depends on the thread type and its use case.

Here we analyze how mOutput writes data through shared memory.

Write audio data to HAL layer

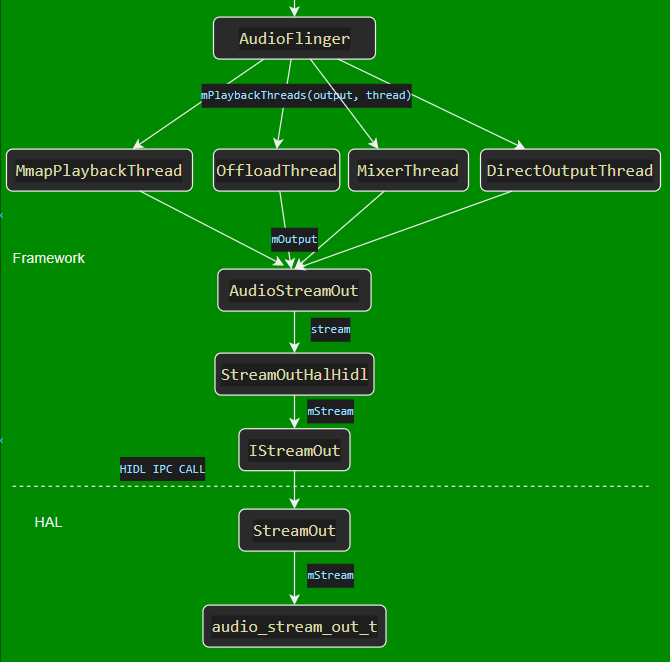

From the earlier article on how the audio output is opened, we know that the mOutput variable is of type AudioStreamOut; the types it holds references to in the lower layers are shown in the figure above.

The call chain is AudioStreamOut::write() -> StreamOutHalHidl::write(). Let's go straight to the second function:

status_t StreamOutHalHidl::write(const void *buffer, size_t bytes, size_t *written) {

......

status_t status;

// mDataMQ is the shared-memory data queue; it is created on the first write

if (!mDataMQ) {

// Get the cached buffer size

size_t bufferSize;

if ((status = getCachedBufferSize(&bufferSize)) != OK) {

return status;

}

if (bytes > bufferSize) bufferSize = bytes;

// Ask the HAL to set up the shared-memory queues

if ((status = prepareForWriting(bufferSize)) != OK) {

return status;

}

}

// Send the WRITE command and the audio data to the HAL

status = callWriterThread(

WriteCommand::WRITE, "write", static_cast<const uint8_t*>(buffer), bytes,

[&] (const WriteStatus& writeStatus) {

*written = writeStatus.reply.written;

// Diagnostics of the cause of b/35813113.

ALOGE_IF(*written > bytes,

"hal reports more bytes written than asked for: %lld > %lld",

(long long)*written, (long long)bytes);

});

mStreamPowerLog.log(buffer, *written);

return status;

}

status_t StreamOutHalHidl::callWriterThread(

WriteCommand cmd, const char* cmdName,

const uint8_t* data, size_t dataSize, StreamOutHalHidl::WriterCallback callback) {

// Write the command to the command queue

if (!mCommandMQ->write(&cmd)) {

ALOGE("command message queue write failed for \"%s\"", cmdName);

return -EAGAIN;

}

if (data != nullptr) {

size_t availableToWrite = mDataMQ->availableToWrite();

if (dataSize > availableToWrite) {

ALOGW("truncating write data from %lld to %lld due to insufficient data queue space",

(long long)dataSize, (long long)availableToWrite);

dataSize = availableToWrite;

}

// Write the audio data into the shared-memory data queue

if (!mDataMQ->write(data, dataSize)) {

ALOGE("data message queue write failed for \"%s\"", cmdName);

}

}

mEfGroup->wake(static_cast<uint32_t>(MessageQueueFlagBits::NOT_EMPTY));

......

}
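callWriterThread() follows a simple protocol: write the command, clamp the data to `availableToWrite()`, write the data, then wake the reader. Below is a simplified single-threaded byte ring buffer illustrating the clamp-then-write step. It is a model under stated assumptions, not libfmq: the real MessageQueue lives in shared memory and uses atomic read/write counters, while the hypothetical `ByteRing` and `writeTruncated` here are only safe on one thread. Like FMQ's `write(data, count)`, `ByteRing::write` is all-or-nothing, which is why the caller truncates first.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Simplified single-producer/single-consumer byte ring buffer (hypothetical).
// Unlike the real FMQ it uses ordinary memory and plain counters.
class ByteRing {
public:
    explicit ByteRing(size_t capacity) : buf_(capacity) {}

    size_t availableToRead() const { return static_cast<size_t>(writePos_ - readPos_); }
    size_t availableToWrite() const { return buf_.size() - availableToRead(); }

    // All-or-nothing write: fails if count exceeds the free space.
    bool write(const uint8_t* data, size_t count) {
        if (count > availableToWrite()) return false;
        for (size_t i = 0; i < count; ++i) buf_[(writePos_ + i) % buf_.size()] = data[i];
        writePos_ += count;
        return true;
    }

    // All-or-nothing read: fails if fewer than count bytes are available.
    bool read(uint8_t* out, size_t count) {
        if (count > availableToRead()) return false;
        for (size_t i = 0; i < count; ++i) out[i] = buf_[(readPos_ + i) % buf_.size()];
        readPos_ += count;
        return true;
    }

private:
    std::vector<uint8_t> buf_;
    uint64_t readPos_ = 0;    // total bytes ever read (monotonically increasing)
    uint64_t writePos_ = 0;   // total bytes ever written (monotonically increasing)
};

// Producer side of callWriterThread(): truncate to the free space, then write.
size_t writeTruncated(ByteRing& ring, const uint8_t* data, size_t dataSize) {
    dataSize = std::min(dataSize, ring.availableToWrite());
    return ring.write(data, dataSize) ? dataSize : 0;
}
```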

Data is written mainly through mDataMQ. Where does mDataMQ come from? It is set up in the prepareForWriting function:

status_t StreamOutHalHidl::prepareForWriting(size_t bufferSize) {

std::unique_ptr<CommandMQ> tempCommandMQ;

std::unique_ptr<DataMQ> tempDataMQ;

std::unique_ptr<StatusMQ> tempStatusMQ;

Result retval;

pid_t halThreadPid, halThreadTid;

Return<void> ret = mStream->prepareForWriting(

1, bufferSize,

[&](Result r,

const CommandMQ::Descriptor& commandMQ,

const DataMQ::Descriptor& dataMQ,

const StatusMQ::Descriptor& statusMQ,

const ThreadInfo& halThreadInfo) {

retval = r;

if (retval == Result::OK) {

tempCommandMQ.reset(new CommandMQ(commandMQ));

tempDataMQ.reset(new DataMQ(dataMQ));

....

}

});

......

mCommandMQ = std::move(tempCommandMQ);

mDataMQ = std::move(tempDataMQ);

mStatusMQ = std::move(tempStatusMQ);

mWriterClient = gettid();

return OK;

}
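The sizing decision made on the first write can be sketched as follows. In the excerpts, write() prepares the queues only when `mDataMQ` is empty, using the larger of the cached buffer size and the first write's size, and prepareForWriting() passes `(1, bufferSize)` to the HAL, which allocates `frameSize * framesCount` bytes. `LazyWriter` and `DataQueue` are hypothetical names, and 3840 is just an example size.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <memory>

// Hypothetical stand-in for the data queue: only records its capacity in bytes.
struct DataQueue {
    size_t capacityBytes;
};

// Models the "create on first write" logic of StreamOutHalHidl::write().
struct LazyWriter {
    size_t cachedBufferSize;             // assumed to come from getCachedBufferSize()
    std::unique_ptr<DataQueue> dataMQ;   // empty until the first write

    void ensureQueue(size_t bytes) {
        if (dataMQ) return;              // already prepared: keep the existing size
        const size_t bufferSize = std::max(cachedBufferSize, bytes);
        // The framework requests frameSize = 1 and framesCount = bufferSize,
        // so the HAL side allocates frameSize * framesCount = bufferSize bytes.
        const size_t frameSize = 1;
        dataMQ = std::make_unique<DataQueue>(DataQueue{frameSize * bufferSize});
    }
};
```

One consequence visible in the excerpts: once the queue exists it is not resized, so a later, larger write is clamped by callWriterThread() to the free space.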

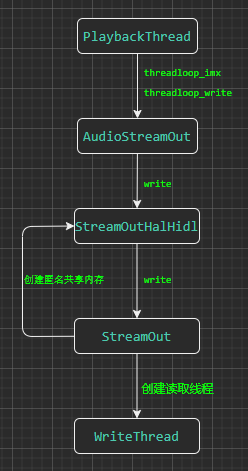

Finally, the call reaches the prepareForWriting method in hardware/interfaces/audio/core/all-versions/default/StreamOut.cpp, which is invoked across processes through HIDL. When the call succeeds, the dataMQ descriptor is returned and used to construct mDataMQ, which is then used for writing data:

Return<void> StreamOut::prepareForWriting(uint32_t frameSize, uint32_t framesCount,

prepareForWriting_cb _hidl_cb) {

.......

//Create shared memory

std::unique_ptr<DataMQ> tempDataMQ(new DataMQ(frameSize * framesCount, true /* EventFlag */));

......

// Create the writer thread, which reads the audio data written by the Framework layer

auto tempWriteThread =

std::make_unique<WriteThread>(&mStopWriteThread, mStream, tempCommandMQ.get(),

tempDataMQ.get(), tempStatusMQ.get(), tempElfGroup.get());

if (!tempWriteThread->init()) {

ALOGW("failed to start writer thread: %s", strerror(-status));

sendError(Result::INVALID_ARGUMENTS);

return Void();

}

status = tempWriteThread->run("writer", PRIORITY_URGENT_AUDIO);

mCommandMQ = std::move(tempCommandMQ);

mDataMQ = std::move(tempDataMQ);

.......

//Callback to Framework layer

_hidl_cb(Result::OK, *mCommandMQ->getDesc(), *mDataMQ->getDesc(), *mStatusMQ->getDesc(),

threadInfo);

return Void();

}

template <typename T, MQFlavor flavor>

MessageQueue<T, flavor>::MessageQueue(size_t numElementsInQueue, bool configureEventFlagWord) {

......

/*

*Create anonymous shared memory

*/

int ashmemFd = ashmem_create_region("MessageQueue", kAshmemSizePageAligned);

ashmem_set_prot_region(ashmemFd, PROT_READ | PROT_WRITE);

/*

* The native handle will contain the fds to be mapped.

*/

native_handle_t* mqHandle =

native_handle_create(1 /* numFds */, 0 /* numInts */);

if (mqHandle == nullptr) {

return;

}

mqHandle->data[0] = ashmemFd;

mDesc = std::unique_ptr<Descriptor>(new (std::nothrow) Descriptor(kQueueSizeBytes,

mqHandle,

sizeof(T),

configureEventFlagWord));

if (mDesc == nullptr) {

return;

}

initMemory(true);

}
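The ashmem region size passed to `ashmem_create_region()` is page aligned. Below is a minimal sketch of that arithmetic, assuming the region holds the queue data plus two 64-bit read/write counters and, when `configureEventFlagWord` is true, one 32-bit event-flag word. This layout is our reading of libfmq and may differ between versions (the real code may also word-align the queue size); the function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Rounds size up to a multiple of pageSize (pageSize must be a power of two).
constexpr size_t roundUpToPage(size_t size, size_t pageSize) {
    return (size + pageSize - 1) & ~(pageSize - 1);
}

// Assumed layout: queue data + read counter + write counter (+ optional event-flag word).
constexpr size_t ashmemRegionSize(size_t numElements, size_t elementSize,
                                  bool configureEventFlagWord, size_t pageSize = 4096) {
    const size_t queueBytes = numElements * elementSize;
    const size_t metaData = 2 * sizeof(uint64_t) +
                            (configureEventFlagWord ? sizeof(uint32_t) : 0);
    return roundUpToPage(queueBytes + metaData, pageSize);
}
```

With 4 KiB pages, a 4096-byte queue plus its 20 bytes of metadata spills into a second page, so the region is 8192 bytes.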

So the anonymous shared memory is created in the HAL layer and passed to StreamOutHalHidl in the Framework layer, which writes into it. When the shared memory is created, a WriteThread is also created to read the audio data. The summary is shown in the figure below:

HAL layer reads audio data

From the summary diagram in the previous section, we can see that the HAL layer starts a WriteThread, so it is reasonable to expect the audio data to be read in this thread. Let's look at its threadLoop function:

bool WriteThread::threadLoop() {

while (!std::atomic_load_explicit(mStop, std::memory_order_acquire)) {

uint32_t efState = 0;

mEfGroup->wait(static_cast<uint32_t>(MessageQueueFlagBits::NOT_EMPTY), &efState);

if (!(efState & static_cast<uint32_t>(MessageQueueFlagBits::NOT_EMPTY))) {

continue; // Nothing to do.

}

if (!mCommandMQ->read(&mStatus.replyTo)) {

continue; // Nothing to do.

}

switch (mStatus.replyTo) {

// WRITE command sent from the Framework side

case IStreamOut::WriteCommand::WRITE:

doWrite();

break;

case IStreamOut::WriteCommand::GET_PRESENTATION_POSITION:

doGetPresentationPosition();

break;

case IStreamOut::WriteCommand::GET_LATENCY:

doGetLatency();

break;

default:

ALOGE("Unknown write thread command code %d", mStatus.replyTo);

mStatus.retval = Result::NOT_SUPPORTED;

break;

}

.....

}

return false;

}

void WriteThread::doWrite() {

const size_t availToRead = mDataMQ->availableToRead();

mStatus.retval = Result::OK;

mStatus.reply.written = 0;

// Read data from the mDataMQ shared memory (this happens in the HIDL wrapper of the HAL layer)

if (mDataMQ->read(&mBuffer[0], availToRead)) {

// Write to the vendor HAL through mStream, which was obtained from the HAL's adev_open_output_stream

ssize_t writeResult = mStream->write(mStream, &mBuffer[0], availToRead);

if (writeResult >= 0) {

mStatus.reply.written = writeResult;

} else {

mStatus.retval = Stream::analyzeStatus("write", writeResult);

}

}

}
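The framework/HAL handshake (callWriterThread() on one side, threadLoop() and doWrite() on the other) can be modeled in a single thread. In this hypothetical `WriterModel`, `post()` plays the role of callWriterThread() and `runOnce()` one iteration of threadLoop(); a plain bit stands in for the EventFlag NOT_EMPTY bit, and `std::deque` stands in for the command and data message queues. It is a sketch for following the control flow, not a reproduction of the HIDL code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical commands mirroring IStreamOut::WriteCommand.
enum class WriteCommand { WRITE, GET_PRESENTATION_POSITION, GET_LATENCY };

constexpr uint32_t kNotEmpty = 1u << 0;   // stands in for MessageQueueFlagBits::NOT_EMPTY

class WriterModel {
public:
    explicit WriterModel(std::function<long(const uint8_t*, size_t)> halWrite)
        : halWrite_(std::move(halWrite)) {}

    // Framework side: enqueue command and data, then "wake" the writer thread.
    void post(WriteCommand cmd, const std::vector<uint8_t>& data = {}) {
        commands_.push_back(cmd);
        dataQueue_.insert(dataQueue_.end(), data.begin(), data.end());
        eventFlag_ |= kNotEmpty;
    }

    // HAL side: one loop iteration. Returns bytes reported written for WRITE, else 0.
    size_t runOnce() {
        if (!(eventFlag_ & kNotEmpty) || commands_.empty()) return 0;   // nothing to do
        const WriteCommand cmd = commands_.front();
        commands_.pop_front();
        if (commands_.empty()) eventFlag_ &= ~kNotEmpty;
        switch (cmd) {
            case WriteCommand::WRITE:
                return doWrite();
            default:
                return 0;   // position/latency queries are omitted in this sketch
        }
    }

private:
    // Reads everything currently available and hands it to the vendor HAL's write().
    size_t doWrite() {
        std::vector<uint8_t> buffer(dataQueue_.begin(), dataQueue_.end());
        dataQueue_.clear();
        const long result = halWrite_(buffer.data(), buffer.size());
        return result >= 0 ? static_cast<size_t>(result) : 0;
    }

    std::function<long(const uint8_t*, size_t)> halWrite_;
    std::deque<WriteCommand> commands_;
    std::deque<uint8_t> dataQueue_;
    uint32_t eventFlag_ = 0;
};
```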

In the code above, the audio data is read on the HIDL server side and then written to the vendor HAL through mStream. So what is mStream?

Writing HAL layer audio data to Kernel driver

mStream is essentially of type audio_stream_out. HAL implementations differ between vendors, so the analysis here is based on Qualcomm (QCOM). The code is under hardware/qcom/audio, where audio_hw.c defines the HAL device entry points; adev_open_output_stream creates the audio_stream_out and configures the methods the stream supports, roughly as follows:

static int adev_open_output_stream(struct audio_hw_device *dev,

audio_io_handle_t handle,

audio_devices_t devices,

audio_output_flags_t flags,

struct audio_config *config,

struct audio_stream_out **stream_out,

const char *address __unused)

{

struct audio_device *adev = (struct audio_device *)dev;

struct stream_out *out;

int i, ret = 0;

bool is_hdmi = devices & AUDIO_DEVICE_OUT_AUX_DIGITAL;

bool is_usb_dev = audio_is_usb_out_device(devices) &&

(devices != AUDIO_DEVICE_OUT_USB_ACCESSORY);

bool force_haptic_path =

property_get_bool("vendor.audio.test_haptic", false);

if (is_usb_dev && !is_usb_ready(adev, true /* is_playback */)) {

return -ENOSYS;

}

*stream_out = NULL;

out = (struct stream_out *)calloc(1, sizeof(struct stream_out));

......

out->stream.set_callback = out_set_callback;

out->stream.pause = out_pause;

out->stream.resume = out_resume;

out->stream.drain = out_drain;

out->stream.flush = out_flush;

out->stream.write = out_write;

......

*stream_out = &out->stream;

}
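The pattern in adev_open_output_stream() is a C-style method table: the HAL allocates its private `stream_out`, fills in function pointers such as `write`, and returns a pointer to the embedded public struct. The sketch below reproduces that pattern with hypothetical, trimmed-down types (`simple_stream_out`, `vendor_stream_out`), not the real `audio_stream_out` definition. It assumes the public struct is the first member of the private one, so the HAL can cast the pointer it receives back to its own state.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>

// Hypothetical, trimmed-down public method table (not the real audio_stream_out).
struct simple_stream_out {
    long (*write)(simple_stream_out* stream, const void* buffer, size_t bytes);
    int (*pause)(simple_stream_out* stream);
};

// Vendor-private state. The public table is the first member, so a pointer to it
// can be converted back to the enclosing struct.
struct vendor_stream_out {
    simple_stream_out stream;
    size_t total_written;
    int paused;
};

static long vendor_out_write(simple_stream_out* stream, const void* /*buffer*/, size_t bytes) {
    auto* out = reinterpret_cast<vendor_stream_out*>(stream);
    out->total_written += bytes;   // a real HAL would push the data toward the PCM driver
    return static_cast<long>(bytes);
}

static int vendor_out_pause(simple_stream_out* stream) {
    reinterpret_cast<vendor_stream_out*>(stream)->paused = 1;
    return 0;
}

// Mirrors adev_open_output_stream(): allocate, wire up the methods, return the table.
int open_output_stream(simple_stream_out** stream_out) {
    *stream_out = nullptr;
    auto* out = static_cast<vendor_stream_out*>(calloc(1, sizeof(vendor_stream_out)));
    if (out == nullptr) return -1;
    out->stream.write = vendor_out_write;
    out->stream.pause = vendor_out_pause;
    *stream_out = &out->stream;
    return 0;
}

void close_output_stream(simple_stream_out* stream) {
    free(reinterpret_cast<vendor_stream_out*>(stream));
}
```

Callers only ever go through the table, e.g. `s->write(s, buf, n)`, which is exactly the `mStream->write(mStream, ...)` call seen in doWrite().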

The subsequent process is relatively complex. It mainly opens the /dev/snd/pcmCXDX driver device and writes the buffer data to the device via ioctl. The pcm_write function in hardware/qcom/audio/legacy/libalsa-intf/alsa_pcm.c handles writing the audio data to the driver. This part is Qualcomm's wrapper around the ALSA framework, and other vendors follow roughly the same path. ALSA (Advanced Linux Sound Architecture) is the audio framework of Linux; Android uses a trimmed-down version, tinyalsa. For more on ALSA, please refer to the ALSA introduction.
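The `pcmCXDX` node name encodes the sound card and device numbers: ALSA names PCM nodes `/dev/snd/pcmC<card>D<device>` followed by `p` for playback or `c` for capture, and tinyalsa's `pcm_open(card, device, flags, config)` takes the same two numbers. A tiny helper (hypothetical, for illustration only) that builds the path:

```cpp
#include <cassert>
#include <string>

// Builds an ALSA PCM node path: /dev/snd/pcmC<card>D<device><p|c>.
std::string pcmDevicePath(unsigned card, unsigned device, bool playback) {
    return "/dev/snd/pcmC" + std::to_string(card) + "D" + std::to_string(device) +
           (playback ? "p" : "c");
}
```

For instance, playback on card 0, device 0 maps to `/dev/snd/pcmC0D0p`.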

This nearly completes the analysis of audio data transmission, but many topics remain uncovered, such as how audio is mixed, how the offload playback thread processes audio data, and how ALSA plays the audio data. These will be covered one by one in future articles!