UDF GIS

What is GIS

GIS stands for Geographic Information System, and the same abbreviation is also used for Geographic Information Science. The expansion is familiar to everyone. When I first started learning GIS, I saw someone say that GIS is a sunrise industry whose sun will never reach noon. It is a joke, of course, but it also carries some of the sadness of GISers: even people who have studied and worked in the field for years, in schools and in industry, may not be able to say exactly what GIS is, so its role naturally becomes blurred.

GIS is not yet a mature discipline even in developed countries, let alone in China. Many schools fold subjects such as surveying and mapping and geology into the GIS curriculum, and a syllabus this broad leaves little room for in-depth study.

In fact, most people's understanding of geography is limited. They think geography only studies landforms such as mountains, rivers and lakes; some cannot even tell geography from geology and assume geographers spend all day studying the composition of rocks. More often, as soon as people hear that you study geography, they pick an obscure country and ask you to name its capital. This limited understanding also limits the potential of geography.

Maps and zoning are the basis of geography, and all information relies on them to establish connections. The principle behind those connections is that everything affects everything else, but things that are close to each other affect each other more. Location shapes the natural and human environment, which in turn shapes human activity. So although geography sounds like a single discipline, its content touches sociology (urbanization, population, housing, race), politics (international relations), environmental science (water use and protection, wildlife protection), and even medicine and law. Starting from location, geography links the parts of life that are closely related, finds the connections between them, and uses those connections to solve problems. Geographic information is therefore not only the elevation of a mountain or the ice period of a river, but any information that carries a location label.

Take the well-managed census data of the United States as an example. The census divides the country into small Census Tracts and Block Groups, and these tracts and block groups act as geographic tags. For each tag, the database records in detail the information that belongs to it, such as the median income.
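As a rough illustration of a geographic tag acting as a join key, the sketch below attaches an attribute to records that share the same tag. The tract identifiers, clinic names and income figures are invented for the example and are not real census data.

```python
# Hypothetical attribute table: each value is stored under a geographic tag.
median_income_by_tract = {
    "tract-001": 52000,
    "tract-002": 61000,
    "tract-003": 48000,
}

# Hypothetical observations that carry the same kind of geographic tag.
clinics = [
    {"name": "Clinic A", "tract_id": "tract-001"},
    {"name": "Clinic B", "tract_id": "tract-003"},
    {"name": "Clinic C", "tract_id": "tract-003"},
]


def attach_income(records, income_by_tract):
    """Join each record to the attribute stored under its geographic tag."""
    return [
        {**record, "median_income": income_by_tract.get(record["tract_id"])}
        for record in records
    ]


joined = attach_income(clinics, median_income_by_tract)
for row in joined:
    print(row["name"], row["tract_id"], row["median_income"])
```

Records whose tag is missing from the attribute table get `None`, which makes gaps in the data visible instead of silently dropping rows.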

Therefore, the foundation of a qualified GISer is not solid programming skills or rich statistical knowledge, but a deep understanding of geographic information and the ability to think spatially. The biggest difference between a GISer and a software engineer, statistician, historian or sociologist is that a GISer habitually brings the geographic labels contained in information and data into the thinking process and uses geographic associations to solve problems.

That said, the role of programming knowledge in GIS should not be underestimated. For example, ArcGIS, the software GISers use most, becomes far more efficient with Python scripts: a complete script can run an entire spatial analysis workflow without any manual steps. Many embedded web maps and visualization tools are built with JavaScript and HTML, and SQL and Spatial SQL are used frequently to manage the spatial databases that GIS depends on. (Spatial SQL is almost identical to SQL in structure, but differs in what it can filter and manage.) Even for those who do no development work, programming knowledge plays an important role in GIS.

Take the map apps we use on our phones, which find a path from one place to another. The calculation uses spatial analysis to consider the road network between the two places, whether there are rivers in between and, if so, whether there are bridges. The road network, rivers and bridges are each a data layer; once the layers are overlaid, the shortest path can be computed. If the fastest path is needed instead, data on speed limits, traffic volume and traffic speed must be overlaid as well. When Google Maps calculates a route for a user, it also considers whether roads are closed, whether a road section is tolled, and so on. The algorithms and implementations may differ, but the basic principles are the same.
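The idea of overlaying layers and then searching for a route can be sketched with Dijkstra's algorithm. This is only a toy model under stated assumptions: the road segments, speed limits and the closure are invented, and real map services use far more elaborate data and algorithms.

```python
import heapq

# Hypothetical road layer: (from, to, length_km, speed_limit_kmh).
ROADS = [
    ("A", "B", 4.0, 40),
    ("B", "D", 4.0, 40),
    ("A", "C", 6.0, 100),
    ("C", "D", 6.0, 100),
]
# Hypothetical closure layer that is overlaid on the road layer.
CLOSED = {("B", "D")}


def build_graph(roads, closed, cost):
    """Overlay the layers into an adjacency list of (neighbor, cost) pairs."""
    graph = {}
    for a, b, length_km, speed_kmh in roads:
        if (a, b) in closed or (b, a) in closed:
            continue  # a closed segment is dropped from the network
        weight = cost(length_km, speed_kmh)
        graph.setdefault(a, []).append((b, weight))
        graph.setdefault(b, []).append((a, weight))
    return graph


def dijkstra(graph, start, goal):
    """Return (total_cost, path) for the cheapest route, or (inf, []) if none."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        total, node, path = heapq.heappop(queue)
        if node == goal:
            return total, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, weight in graph.get(node, []):
            if neighbor not in visited:
                heapq.heappush(queue, (total + weight, neighbor, path + [neighbor]))
    return float("inf"), []


def by_distance(length_km, speed_kmh):
    return length_km


def by_time(length_km, speed_kmh):
    return length_km / speed_kmh


shortest = dijkstra(build_graph(ROADS, set(), by_distance), "A", "D")
fastest = dijkstra(build_graph(ROADS, set(), by_time), "A", "D")
detour = dijkstra(build_graph(ROADS, CLOSED, by_distance), "A", "D")
print(shortest[1], fastest[1], detour[1])
```

Swapping the cost function is what turns a shortest-path query into a fastest-path query, and adding the closure layer changes the network before the search runs, which mirrors the layer-by-layer description above.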

The route calculation example is relatively objective, whereas analyses related to the social sciences are more subjective. Take choosing where to set up a food bank: it is still a matter of finding data for the factors that need to be considered and running an overlay analysis, but the variables are much more open-ended. A and B may reach completely different results simply because they use different data. In such cases, the only way to account for the influencing factors fully is to learn as much of the background as possible. In the final analysis, GIS is only an analysis tool. Using it well requires GIS practitioners to also specialize in a domain, or to have a domain specialist on the team.
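A minimal sketch of why such an overlay analysis is subjective: two analysts score the same candidate sites with different weights and pick different locations. The sites, factor names, scores and weights are all hypothetical, and the same divergence arises when the analysts start from different data layers.

```python
# Hypothetical candidate sites; each factor layer is normalized to 0..1,
# where a higher score is better (for example, higher affordability).
SITES = {
    "Site 1": {"need": 0.9, "transit": 0.3, "affordability": 0.4},
    "Site 2": {"need": 0.6, "transit": 0.9, "affordability": 0.7},
}


def weighted_overlay(sites, weights):
    """Score each site as the weighted sum of its factor layers."""
    return {
        name: sum(weights[factor] * score for factor, score in factors.items())
        for name, factors in sites.items()
    }


def best_site(sites, weights):
    """Return the name of the site with the highest overlay score."""
    scores = weighted_overlay(sites, weights)
    return max(scores, key=scores.get)


# Analyst A stresses local need; analyst B stresses access and cost.
analyst_a = {"need": 0.8, "transit": 0.1, "affordability": 0.1}
analyst_b = {"need": 0.2, "transit": 0.5, "affordability": 0.3}

choice_a = best_site(SITES, analyst_a)
choice_b = best_site(SITES, analyst_b)
print(choice_a, choice_b)
```

Neither choice is wrong in a computational sense; which weights are defensible is exactly the background knowledge the analysis depends on.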

Background information

Esri maintains a set of open source geospatial projects for Apache Hadoop; see GitHub for details. One of them, the Spatial Framework for Hadoop, allows developers and data scientists to use Hadoop for spatial data analysis.

However, writing MapReduce jobs for Hadoop by hand is already complex, to say nothing of GIS calculations, which are complex by nature. So although the framework is built on Hadoop, it is used through Hive.

Package compilation

Please confirm that you have completed the following operations:

- Git is installed.

- Maven is installed and environment variables are set.

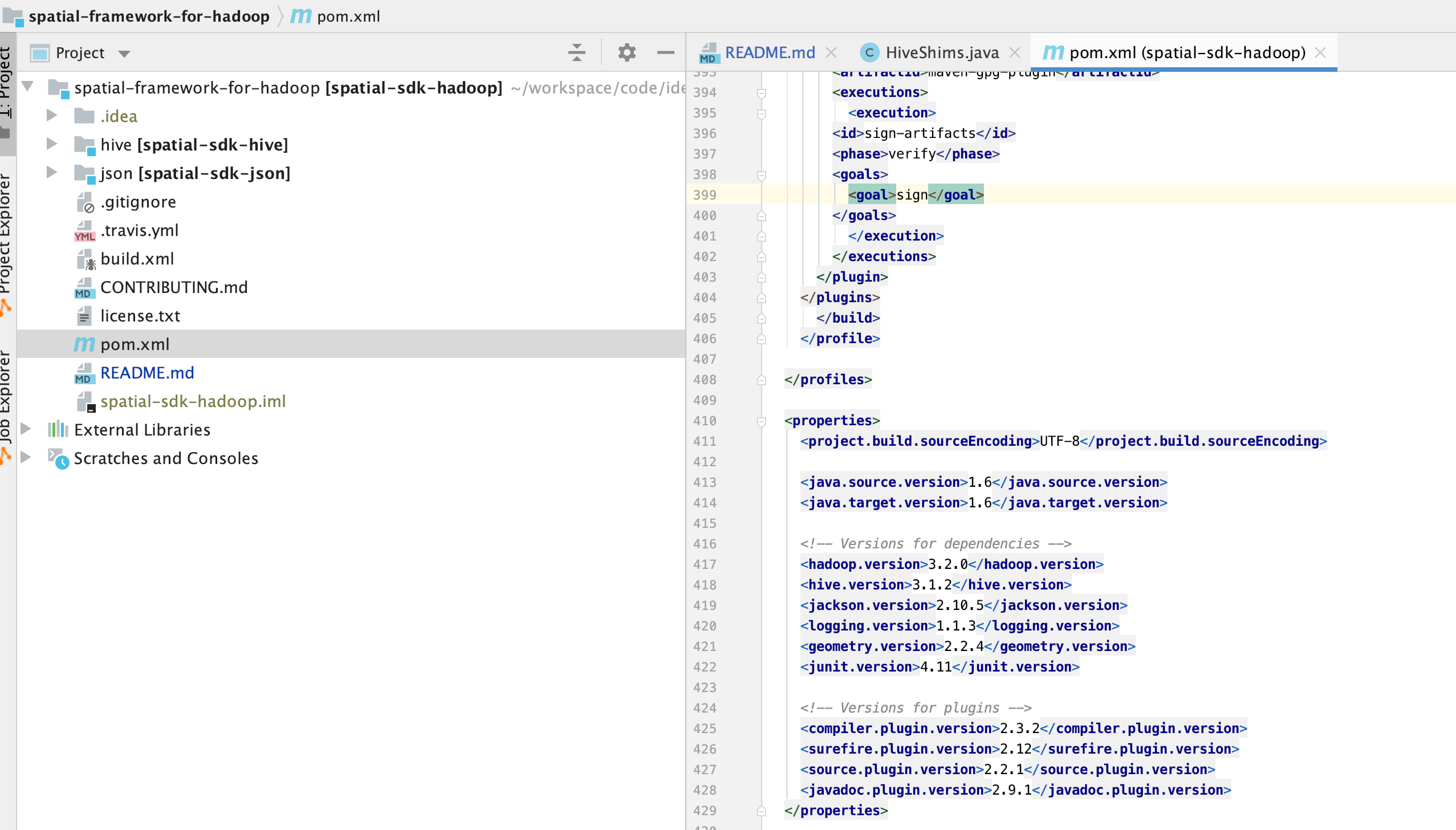

First, clone the whole project:

    git clone https://github.com/Esri/spatial-framework-for-hadoop.git

Then open it in IntelliJ IDEA to look at the project structure.

The pom file contains the version information. It also provides a large number of profiles, so we can select the ones that match our Hadoop and Hive versions when building, for example:

    mvn clean package -DskipTests -P java-8,hadoop-3.2,hive-3.1

Create and use the UDFs

Before creating the UDFs, we also need to download one dependency, the Esri Geometry API for Java, which is the Java package the framework relies on. Then add the JARs in the Hive session:

    add jar /Users/liuwenqiang/workspace/code/idea/spatial-framework-for-hadoop/hive/target/spatial-sdk-hive-2.2.0.jar;
    add jar /Users/liuwenqiang/workspace/code/idea/spatial-framework-for-hadoop/json/target/spatial-sdk-json-2.2.0.jar;
    add jar /Users/liuwenqiang/Downloads/esri-geometry-api-2.2.4.jar;

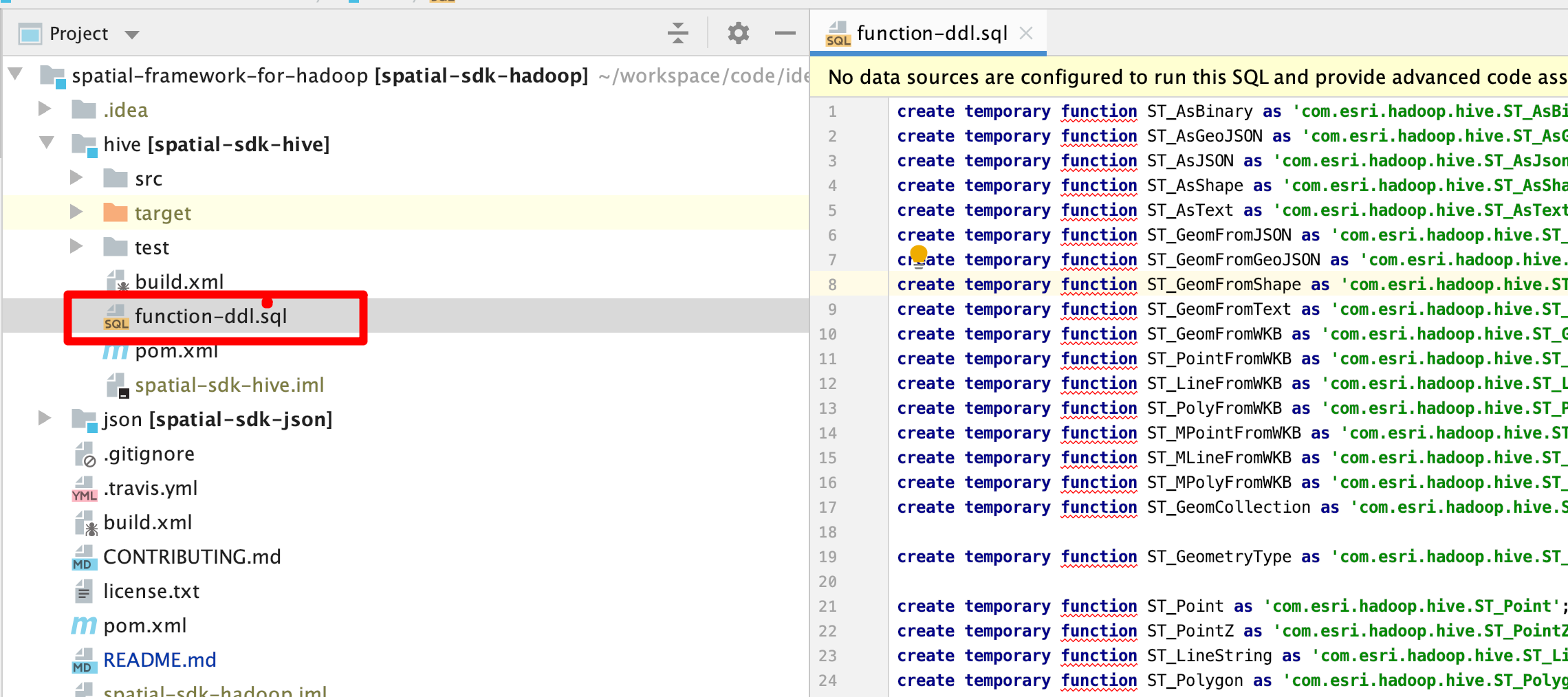

After loading the dependencies, we can create the UDFs. The project directory contains a file, function-ddl.sql, that defines all the UDFs we need.

Its contents are as follows and can be executed directly:

    create temporary function ST_AsBinary as 'com.esri.hadoop.hive.ST_AsBinary';
    create temporary function ST_AsGeoJSON as 'com.esri.hadoop.hive.ST_AsGeoJson';
    create temporary function ST_AsJSON as 'com.esri.hadoop.hive.ST_AsJson';
    create temporary function ST_AsShape as 'com.esri.hadoop.hive.ST_AsShape';
    create temporary function ST_AsText as 'com.esri.hadoop.hive.ST_AsText';
    create temporary function ST_GeomFromJSON as 'com.esri.hadoop.hive.ST_GeomFromJson';
    create temporary function ST_GeomFromGeoJSON as 'com.esri.hadoop.hive.ST_GeomFromGeoJson';
    create temporary function ST_GeomFromShape as 'com.esri.hadoop.hive.ST_GeomFromShape';
    create temporary function ST_GeomFromText as 'com.esri.hadoop.hive.ST_GeomFromText';
    create temporary function ST_GeomFromWKB as 'com.esri.hadoop.hive.ST_GeomFromWKB';
    create temporary function ST_PointFromWKB as 'com.esri.hadoop.hive.ST_PointFromWKB';
    create temporary function ST_LineFromWKB as 'com.esri.hadoop.hive.ST_LineFromWKB';
    create temporary function ST_PolyFromWKB as 'com.esri.hadoop.hive.ST_PolyFromWKB';
    create temporary function ST_MPointFromWKB as 'com.esri.hadoop.hive.ST_MPointFromWKB';
    create temporary function ST_MLineFromWKB as 'com.esri.hadoop.hive.ST_MLineFromWKB';
    create temporary function ST_MPolyFromWKB as 'com.esri.hadoop.hive.ST_MPolyFromWKB';
    create temporary function ST_GeomCollection as 'com.esri.hadoop.hive.ST_GeomCollection';
    create temporary function ST_GeometryType as 'com.esri.hadoop.hive.ST_GeometryType';
    create temporary function ST_Point as 'com.esri.hadoop.hive.ST_Point';
    create temporary function ST_PointZ as 'com.esri.hadoop.hive.ST_PointZ';
    create temporary function ST_LineString as 'com.esri.hadoop.hive.ST_LineString';
    create temporary function ST_Polygon as 'com.esri.hadoop.hive.ST_Polygon';
    create temporary function ST_MultiPoint as 'com.esri.hadoop.hive.ST_MultiPoint';
    create temporary function ST_MultiLineString as 'com.esri.hadoop.hive.ST_MultiLineString';
    create temporary function ST_MultiPolygon as 'com.esri.hadoop.hive.ST_MultiPolygon';
    create temporary function ST_SetSRID as 'com.esri.hadoop.hive.ST_SetSRID';
    create temporary function ST_SRID as 'com.esri.hadoop.hive.ST_SRID';
    create temporary function ST_IsEmpty as 'com.esri.hadoop.hive.ST_IsEmpty';
    create temporary function ST_IsSimple as 'com.esri.hadoop.hive.ST_IsSimple';
    create temporary function ST_Dimension as 'com.esri.hadoop.hive.ST_Dimension';
    create temporary function ST_X as 'com.esri.hadoop.hive.ST_X';
    create temporary function ST_Y as 'com.esri.hadoop.hive.ST_Y';
    create temporary function ST_MinX as 'com.esri.hadoop.hive.ST_MinX';
    create temporary function ST_MaxX as 'com.esri.hadoop.hive.ST_MaxX';
    create temporary function ST_MinY as 'com.esri.hadoop.hive.ST_MinY';
    create temporary function ST_MaxY as 'com.esri.hadoop.hive.ST_MaxY';
    create temporary function ST_IsClosed as 'com.esri.hadoop.hive.ST_IsClosed';
    create temporary function ST_IsRing as 'com.esri.hadoop.hive.ST_IsRing';
    create temporary function ST_Length as 'com.esri.hadoop.hive.ST_Length';
    create temporary function ST_GeodesicLengthWGS84 as 'com.esri.hadoop.hive.ST_GeodesicLengthWGS84';
    create temporary function ST_Area as 'com.esri.hadoop.hive.ST_Area';
    create temporary function ST_Is3D as 'com.esri.hadoop.hive.ST_Is3D';
    create temporary function ST_Z as 'com.esri.hadoop.hive.ST_Z';
    create temporary function ST_MinZ as 'com.esri.hadoop.hive.ST_MinZ';
    create temporary function ST_MaxZ as 'com.esri.hadoop.hive.ST_MaxZ';
    create temporary function ST_IsMeasured as 'com.esri.hadoop.hive.ST_IsMeasured';
    create temporary function ST_M as 'com.esri.hadoop.hive.ST_M';
    create temporary function ST_MinM as 'com.esri.hadoop.hive.ST_MinM';
    create temporary function ST_MaxM as 'com.esri.hadoop.hive.ST_MaxM';
    create temporary function ST_CoordDim as 'com.esri.hadoop.hive.ST_CoordDim';
    create temporary function ST_NumPoints as 'com.esri.hadoop.hive.ST_NumPoints';
    create temporary function ST_PointN as 'com.esri.hadoop.hive.ST_PointN';
    create temporary function ST_StartPoint as 'com.esri.hadoop.hive.ST_StartPoint';
    create temporary function ST_EndPoint as 'com.esri.hadoop.hive.ST_EndPoint';
    create temporary function ST_ExteriorRing as 'com.esri.hadoop.hive.ST_ExteriorRing';
    create temporary function ST_NumInteriorRing as 'com.esri.hadoop.hive.ST_NumInteriorRing';
    create temporary function ST_InteriorRingN as 'com.esri.hadoop.hive.ST_InteriorRingN';
    create temporary function ST_NumGeometries as 'com.esri.hadoop.hive.ST_NumGeometries';
    create temporary function ST_GeometryN as 'com.esri.hadoop.hive.ST_GeometryN';
    create temporary function ST_Centroid as 'com.esri.hadoop.hive.ST_Centroid';
    create temporary function ST_Contains as 'com.esri.hadoop.hive.ST_Contains';
    create temporary function ST_Crosses as 'com.esri.hadoop.hive.ST_Crosses';
    create temporary function ST_Disjoint as 'com.esri.hadoop.hive.ST_Disjoint';
    create temporary function ST_EnvIntersects as 'com.esri.hadoop.hive.ST_EnvIntersects';
    create temporary function ST_Envelope as 'com.esri.hadoop.hive.ST_Envelope';
    create temporary function ST_Equals as 'com.esri.hadoop.hive.ST_Equals';
    create temporary function ST_Overlaps as 'com.esri.hadoop.hive.ST_Overlaps';
    create temporary function ST_Intersects as 'com.esri.hadoop.hive.ST_Intersects';
    create temporary function ST_Relate as 'com.esri.hadoop.hive.ST_Relate';
    create temporary function ST_Touches as 'com.esri.hadoop.hive.ST_Touches';
    create temporary function ST_Within as 'com.esri.hadoop.hive.ST_Within';
    create temporary function ST_Distance as 'com.esri.hadoop.hive.ST_Distance';
    create temporary function ST_Boundary as 'com.esri.hadoop.hive.ST_Boundary';
    create temporary function ST_Buffer as 'com.esri.hadoop.hive.ST_Buffer';
    create temporary function ST_ConvexHull as 'com.esri.hadoop.hive.ST_ConvexHull';
    create temporary function ST_Intersection as 'com.esri.hadoop.hive.ST_Intersection';
    create temporary function ST_Union as 'com.esri.hadoop.hive.ST_Union';
    create temporary function ST_Difference as 'com.esri.hadoop.hive.ST_Difference';
    create temporary function ST_SymmetricDiff as 'com.esri.hadoop.hive.ST_SymmetricDiff';
    create temporary function ST_SymDifference as 'com.esri.hadoop.hive.ST_SymmetricDiff';
    create temporary function ST_Aggr_ConvexHull as 'com.esri.hadoop.hive.ST_Aggr_ConvexHull';
    create temporary function ST_Aggr_Intersection as 'com.esri.hadoop.hive.ST_Aggr_Intersection';
    create temporary function ST_Aggr_Union as 'com.esri.hadoop.hive.ST_Aggr_Union';
    create temporary function ST_Bin as 'com.esri.hadoop.hive.ST_Bin';
    create temporary function ST_BinEnvelope as 'com.esri.hadoop.hive.ST_BinEnvelope';

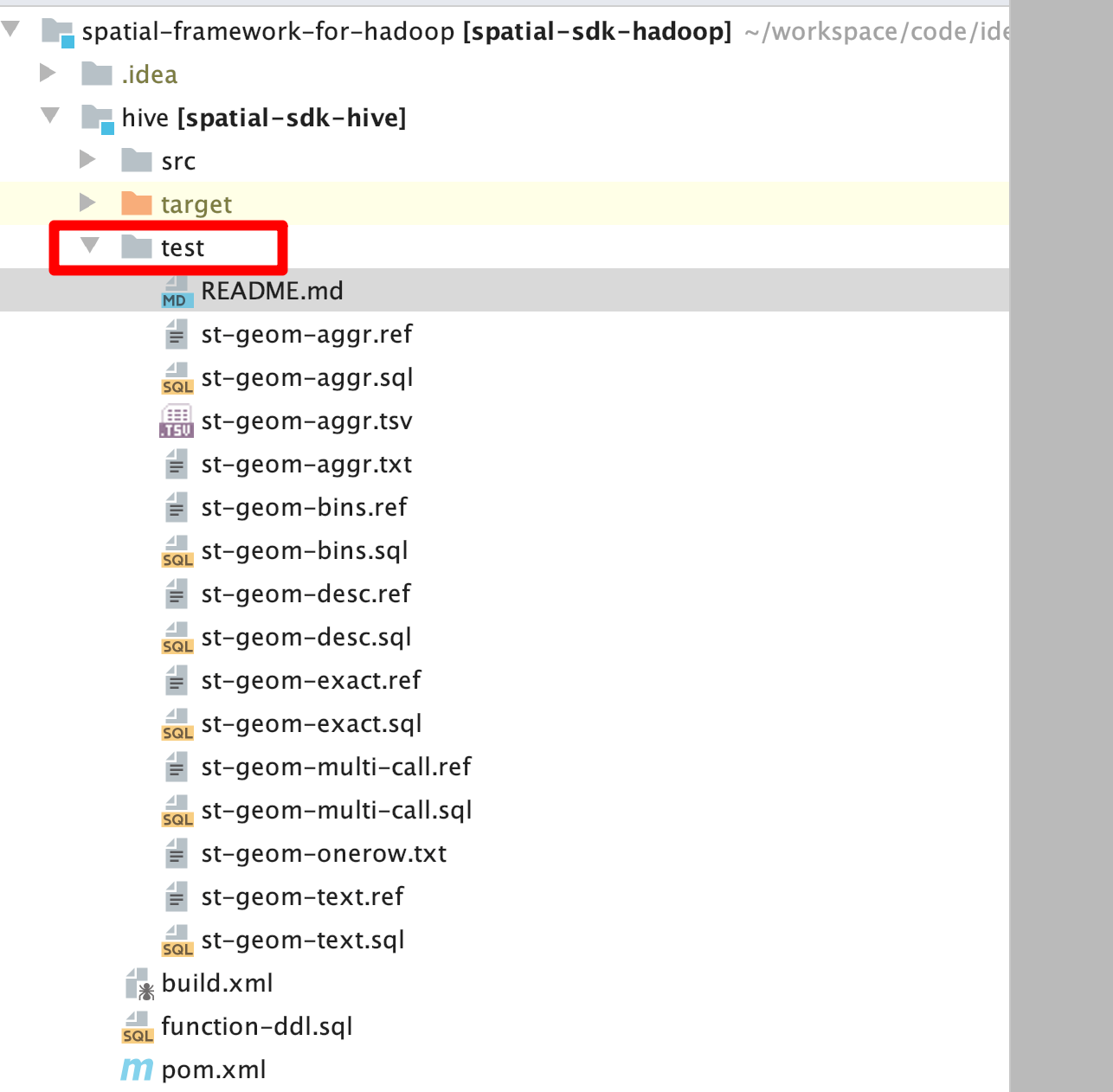

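Once created, the UDFs are ready to use. Here we created temporary UDFs; to create permanent ones, remove the temporary keyword (the JARs must then be available to later sessions as well, for example through the USING JAR clause of CREATE FUNCTION). The test directory provides a large amount of data and many test cases.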
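If you would rather not edit the DDL file by hand, the rewrite can be scripted. The helper below is a hypothetical sketch, not part of the framework: it turns each `create temporary function` statement into `create function` and optionally appends a `using jar` clause. The HDFS path shown is a placeholder, not a real location.

```python
import re

# Matches statements of the form:
#   create temporary function NAME as 'CLASS';
TEMP_DDL = re.compile(
    r"create\s+temporary\s+function\s+(\w+)\s+as\s+'([^']+)'\s*;",
    re.IGNORECASE,
)


def to_permanent(ddl, jar_uri=None):
    """Rewrite CREATE TEMPORARY FUNCTION statements as CREATE FUNCTION."""

    def rewrite(match):
        name, class_name = match.groups()
        using = f" using jar '{jar_uri}'" if jar_uri else ""
        return f"create function {name} as '{class_name}'{using};"

    return TEMP_DDL.sub(rewrite, ddl)


sample = "create temporary function ST_Point as 'com.esri.hadoop.hive.ST_Point';"
permanent = to_permanent(sample)
# Placeholder JAR location; replace it with wherever the JAR is actually stored.
with_jar = to_permanent(sample, "hdfs:///hypothetical/path/spatial-sdk-hive.jar")
print(permanent)
print(with_jar)
```

To process the whole file, read its text, pass it to `to_permanent`, and write the result to a new file so that the original DDL stays untouched.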

For more examples and tutorials, refer to the wiki and the samples; the samples include plenty of data. Here we look at an example that uses earthquake data.

    -- Earthquake information table
    CREATE TABLE IF NOT EXISTS earthquakes (
      earthquake_date STRING,
      latitude DOUBLE,
      longitude DOUBLE,
      magnitude DOUBLE
    )
    ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

    -- Load data
    LOAD DATA LOCAL INPATH '/Users/liuwenqiang/gis-tools-for-hadoop/samples/data/earthquake-data/earthquakes.csv'
    OVERWRITE INTO TABLE earthquakes;

    -- Region table: mainly records the geographic extent of each region
    CREATE TABLE counties (
      Area string,
      Perimeter string,
      State string,
      County string,
      Name string,
      BoundaryShape binary
    )
    ROW FORMAT SERDE 'com.esri.hadoop.hive.serde.EsriJsonSerDe'
    STORED AS INPUTFORMAT 'com.esri.json.hadoop.EnclosedEsriJsonInputFormat'
    OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat';

    -- Load data
    LOAD DATA LOCAL INPATH '/Users/liuwenqiang/gis-tools-for-hadoop/samples/data/counties-data/california-counties.json'
    OVERWRITE INTO TABLE counties;

    -- Query: count the number of earthquakes in each region
    SELECT counties.name, count(*) cnt
    FROM counties
    JOIN earthquakes
    WHERE ST_Contains(counties.boundaryshape, ST_Point(earthquakes.longitude, earthquakes.latitude))
    GROUP BY counties.name
    ORDER BY cnt desc;

The results are as follows:

    Kern              36
    San Bernardino    35
    Imperial          28
    Inyo              20
    Los Angeles       18
    Riverside         14
    Monterey          14
    Santa Clara       12
    Fresno            11
    San Benito        11
    San Diego         7
    Santa Cruz        5
    San Luis Obispo   3
    Ventura           3
    Orange            2
    San Mateo         1
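Conceptually, the query pairs every region with every earthquake, keeps the pairs where the region contains the point, and counts per region. The sketch below mimics that with a simple ray-casting test. It is not the framework's implementation of ST_Contains: the rectangular regions and points are invented, and points lying exactly on a boundary are not treated specially.

```python
from collections import Counter


def point_in_polygon(x, y, ring):
    """Ray casting: toggle on each edge crossed by a ray going right from (x, y)."""
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        # Does this edge straddle the horizontal line through the point?
        if (y1 > y) != (y2 > y):
            # x coordinate where the edge crosses that horizontal line
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


# Hypothetical regions (simple rectangles) and event points given as (x, y).
regions = {
    "West": [(0, 0), (5, 0), (5, 5), (0, 5)],
    "East": [(5, 0), (10, 0), (10, 5), (5, 5)],
}
events = [(1, 1), (2, 4), (7, 3), (12, 1)]


def count_by_region(regions, events):
    """Nested-loop spatial join followed by a count per region."""
    counts = Counter()
    for name, ring in regions.items():
        for x, y in events:
            if point_in_polygon(x, y, ring):
                counts[name] += 1
    return counts.most_common()  # highest count first, like ORDER BY cnt desc


result = count_by_region(regions, events)
print(result)
```

The nested loop is the same cross join that the Hive query expresses with JOIN and WHERE, which is why such queries become expensive on large tables.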

Summary

The Spatial Framework for Hadoop is a set of geospatial computing tools that Esri provides for Hadoop, so that Hadoop's computing power can be applied to geospatial analysis.

The official site provides many examples, and there are many excellent blogs worth reading as well. The source code of the framework is also worth studying: it defines a large number of UDFs, SerDes and input/output formats, in numbers rarely seen in other projects, which makes it a useful reference.