These are notes on deploying Elasticsearch and Kibana locally with Docker. The setup mostly follows the articles listed below; the notes record the problems I ran into in practice.

elasticsearch latest quick start details (Waiting for flowers to bloom, CSDN blog)

elasticsearch Chinese word segmentation, this is enough (Waiting for flowers to bloom, CSDN blog)

The most clear and understandable elasticsearch operation manual | necessary for favorites (Waiting for flowers to bloom, CSDN blog)

elasticsearch using ik Chinese word splitter (SegmentFault)

1. Image preparation

docker search elasticsearch

The search lists the available images.

Here we use the second result, which bundles ES and Kibana in one image:

nshou/elasticsearch-kibana (Docker Hub)

Pull image

docker pull nshou/elasticsearch-kibana

Create and run container

docker run -d -p 9200:9200 -p 9300:9300 -p 5601:5601 --name eskibana nshou/elasticsearch-kibana

Enter the container (ddade0ae3 is the container ID returned by the previous command):

docker exec -it ddade0ae3 bash

2. Mount the data and configuration directories

Without a mount, the data is lost when the container is deleted. In practice, persistent data is usually kept on the host and mounted into the container.

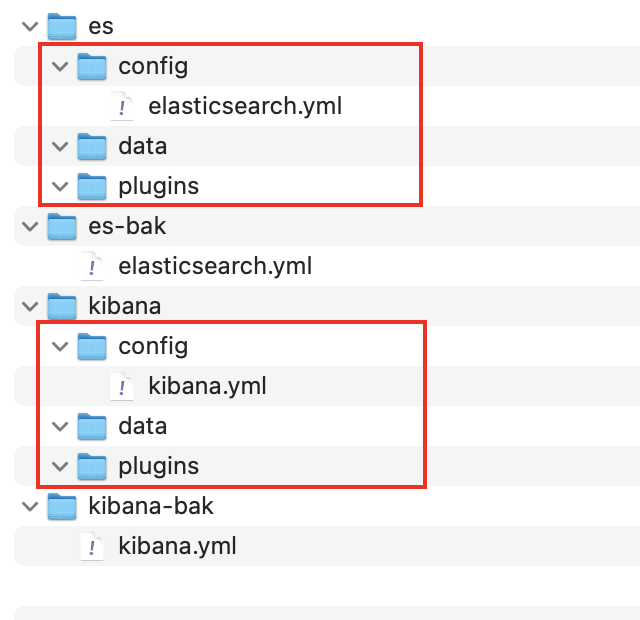

Files and directories to mount: the configuration files, the data directories, and the plugin directories.

You can copy the default configuration files out of the container first, so you do not have to write them yourself.

Here 018d550c168c is the container ID. The commands are run on the host, from the directory you have chosen to hold the mounted files.

My directory here is: /Users/myname/Documents/docker/es/vol/eskibana

docker cp 018d550c168c:/home/elasticsearch/kibana-7.16.2-linux-x86_64/config/kibana.yml ./kibana-bak/

docker cp 018d550c168c:/home/elasticsearch/elasticsearch-7.16.2/config/elasticsearch.yml ./es-bak/

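After copying the files, prepare the directories to mount.

The final layout has an es directory and a kibana directory, each containing config, data, and plugins, as used by the mounts in the run command. The es-bak and kibana-bak directories keep the backup copies.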
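The layout described above could be created with a short script like the following sketch. The directory names are taken from the mount paths and docker cp targets used in these notes; `make_layout` is a hypothetical helper name, and the base directory is passed as an argument. The two .yml files presumably still need to be copied from the bak directories into es/config and kibana/config, since the run command mounts them from there.

```shell
# Sketch: create the host-side directories that will be mounted later.
# make_layout is a hypothetical helper; $1 is the base directory,
# e.g. /Users/xxx/Documents/docker/es/vol/eskibana.
make_layout() {
  # Directories mounted into the container for ES and Kibana.
  mkdir -p "$1/es/config" "$1/es/data" "$1/es/plugins"
  mkdir -p "$1/kibana/config" "$1/kibana/data" "$1/kibana/plugins"
  # Backup directories for the files copied out with docker cp.
  mkdir -p "$1/es-bak" "$1/kibana-bak"
}

# Usage: make_layout "$PWD"
```

Because mkdir -p does nothing for directories that already exist, the function can be re-run safely.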

Assign read and write permissions

chmod -R 777 ./es

chmod -R 777 ./kibana

Stop the previously running container (otherwise the ports are still in use), then start a new container with the mounts:

docker run --name esk \
  -p 9200:9200 -p 9300:9300 -p 5601:5601 \
  -e "discovery.type=single-node" \
  -e ES_JAVA_OPTS="-Xms64m -Xmx512m" \
  -v /Users/xxx/Documents/docker/es/vol/eskibana/es/config/elasticsearch.yml:/home/elasticsearch/elasticsearch-7.16.2/config/elasticsearch.yml \
  -v /Users/xxx/Documents/docker/es/vol/eskibana/es/data:/home/elasticsearch/elasticsearch-7.16.2/data \
  -v /Users/xxx/Documents/docker/es/vol/eskibana/es/plugins:/home/elasticsearch/elasticsearch-7.16.2/plugins \
  -v /Users/xxx/Documents/docker/es/vol/eskibana/kibana/config/kibana.yml:/home/elasticsearch/kibana-7.16.2-linux-x86_64/config/kibana.yml \
  -v /Users/xxx/Documents/docker/es/vol/eskibana/kibana/data:/home/elasticsearch/kibana-7.16.2-linux-x86_64/data \
  -v /Users/xxx/Documents/docker/es/vol/eskibana/kibana/plugins:/home/elasticsearch/kibana-7.16.2-linux-x86_64/plugins \
  -d nshou/elasticsearch-kibana
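Since the host path is repeated six times, the command can be wrapped in a small function. The following is only a sketch under stated assumptions: `run_esk`, `ES_HOME`, `KB_HOME`, and the `DOCKER` override are hypothetical names introduced here, while the ports, environment variables, and container paths are the ones from the command above.

```shell
# Sketch: the same docker run, with host and container paths in variables.
# run_esk is a hypothetical helper: $1 = container name,
# $2 = absolute host base directory.
# Set DOCKER=echo to print the command instead of running it.
ES_HOME=/home/elasticsearch/elasticsearch-7.16.2
KB_HOME=/home/elasticsearch/kibana-7.16.2-linux-x86_64

run_esk() {
  "${DOCKER:-docker}" run -d --name "$1" \
    -p 9200:9200 -p 9300:9300 -p 5601:5601 \
    -e "discovery.type=single-node" \
    -e ES_JAVA_OPTS="-Xms64m -Xmx512m" \
    -v "$2/es/config/elasticsearch.yml:$ES_HOME/config/elasticsearch.yml" \
    -v "$2/es/data:$ES_HOME/data" \
    -v "$2/es/plugins:$ES_HOME/plugins" \
    -v "$2/kibana/config/kibana.yml:$KB_HOME/config/kibana.yml" \
    -v "$2/kibana/data:$KB_HOME/data" \
    -v "$2/kibana/plugins:$KB_HOME/plugins" \
    nshou/elasticsearch-kibana
}

# Usage: run_esk esk /Users/xxx/Documents/docker/es/vol/eskibana
```

Passing the container name as an argument makes it easy to start a second container with a different name against the same directories.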

References:

Docker deployment Elasticsearch

docker installing ElasticSearch

If the following error appears when the container starts:

Error response from daemon: Mounts denied: The path /es/data is not shared from the host and is not known to Docker. You can configure shared paths from Docker -> Preferences... -> Resources -> File Sharing. See https://docs.docker.com/desktop/mac for more info.

To fix it: (1) check the Docker file-sharing configuration as the message suggests, and (2) use an absolute host path in each -v mount.

Reference: is not shared from OS X and is not known to Docker (The program and I have one that can run, CSDN blog)
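For the second point, a relative directory can be turned into an absolute path before it is passed to -v. This is a minimal sketch; `abs_dir` is a hypothetical helper name, and the directory must already exist.

```shell
# Sketch: resolve an existing directory to an absolute path for use in -v.
# abs_dir is a hypothetical helper name.
abs_dir() {
  # cd inside a subshell so the caller's working directory is unchanged.
  (cd "$1" && pwd)
}

# Usage:
#   docker run -v "$(abs_dir ./es/data):/home/elasticsearch/elasticsearch-7.16.2/data" ...
```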

3. Install the Chinese word segmentation plugin

I downloaded the plugin manually and then installed it from the command line.

3.1 Download the plugin zip package from GitHub

https://github.com/medcl/elasticsearch-analysis-ik/releases

3.2 Copy the zip package into the container

docker cp ./ik.zip f8ad1fb16b0:/home/elasticsearch/mytmp

3.3 Install the plugin inside the container

Run this command from the bin directory under the ES installation directory; elasticsearch-plugin is not available as a global command.

./elasticsearch-plugin install file:///home/elasticsearch/mytmp/ik.zip

After installing the plugin, restart the container.

If the following error occurs on restart:

Exception in thread "main" java.nio.file.NotDirectoryException: /home/elasticsearch/elasticsearch-7.16.2/plugins/.DS_Store

You need to delete the .DS_Store file from the plugins directory:

ls -a

rm .DS_Store

Then restart the container
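Because the plugins directory is mounted from the macOS host, the file can also be removed on the host side before the container starts. The following is a sketch, not part of the original procedure; `clean_ds_store` is a hypothetical helper name, and it removes .DS_Store files at every level below the given directory.

```shell
# Sketch: remove macOS .DS_Store files from a mounted directory tree.
# clean_ds_store is a hypothetical helper; $1 is the host-side directory.
clean_ds_store() {
  # Only regular files named exactly .DS_Store are removed.
  find "$1" -name .DS_Store -type f -exec rm -f {} +
}

# Usage: clean_ds_store /Users/xxx/Documents/docker/es/vol/eskibana/es/plugins
```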

Test Chinese word segmentation. The request text is the four-character Chinese phrase 且听风吟 ("Listen to the wind"), which the analyzer splits into two two-character words:

POST /_analyze
{
  "analyzer": "ik_max_word",
  "text": "且听风吟"
}

Result:

{
  "tokens" : [
    {
      "token" : "且听",
      "start_offset" : 0,
      "end_offset" : 2,
      "type" : "CN_WORD",
      "position" : 0
    },
    {
      "token" : "风吟",
      "start_offset" : 2,
      "end_offset" : 4,
      "type" : "CN_WORD",
      "position" : 1
    }
  ]
}
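The same request can be sent from a terminal on the host with curl. This is a sketch that assumes ES is reachable at 127.0.0.1:9200 (the port published by the run command) without authentication; `analyze_body` and `analyze` are hypothetical helper names.

```shell
# Sketch: send the _analyze request with curl.
# Assumes ES listens on 127.0.0.1:9200 and needs no authentication.
analyze_body() {
  # $1 = analyzer name, $2 = text to analyze.
  # The text must not contain double quotes or backslashes,
  # because no JSON escaping is done here.
  printf '{"analyzer": "%s", "text": "%s"}' "$1" "$2"
}

analyze() {
  curl -s -X POST "http://127.0.0.1:9200/_analyze?pretty" \
    -H 'Content-Type: application/json' \
    -d "$(analyze_body "$1" "$2")"
}

# Usage: analyze ik_max_word "<text to analyze>"
```

If the plugin did not load, the response should be an error about an unknown analyzer rather than a token list.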

4. Test ES and Kibana

Visit http://127.0.0.1:5601/ to open Kibana and run test requests in Dev Tools.

Use the elasticsearch-head Chrome extension to view the data.

5. Test the mounts

Stop the container started above and start a new one with the same command:

docker run --name esk2 \
  -p 9200:9200 -p 9300:9300 -p 5601:5601 \
  -e "discovery.type=single-node" \
  -e ES_JAVA_OPTS="-Xms64m -Xmx512m" \
  -v /Users/xxx/Documents/docker/es/vol/eskibana/es/config/elasticsearch.yml:/home/elasticsearch/elasticsearch-7.16.2/config/elasticsearch.yml \
  -v /Users/xxx/Documents/docker/es/vol/eskibana/es/data:/home/elasticsearch/elasticsearch-7.16.2/data \
  -v /Users/xxx/Documents/docker/es/vol/eskibana/es/plugins:/home/elasticsearch/elasticsearch-7.16.2/plugins \
  -v /Users/xxx/Documents/docker/es/vol/eskibana/kibana/config/kibana.yml:/home/elasticsearch/kibana-7.16.2-linux-x86_64/config/kibana.yml \
  -v /Users/xxx/Documents/docker/es/vol/eskibana/kibana/data:/home/elasticsearch/kibana-7.16.2-linux-x86_64/data \
  -v /Users/xxx/Documents/docker/es/vol/eskibana/kibana/plugins:/home/elasticsearch/kibana-7.16.2-linux-x86_64/plugins \
  -d nshou/elasticsearch-kibana

If the previous container has not been deleted, --name must be given a new value (esk2 here).

If everything works, the earlier data and the Kibana dashboards should still be there once the new container has started.
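One way to check this from the command line is to write a marker document before recreating the container and read it back afterwards. The sketch below assumes ES is reachable at 127.0.0.1:9200 without authentication; the index name mount-test, the helper names, and the `ES_URL` and `CURL` overrides are hypothetical.

```shell
# Sketch: write a marker document before the restart, read it back after.
# mount-test is a throwaway index name chosen for this example.
# Set CURL=echo to print the requests instead of sending them.
ES_URL="${ES_URL:-http://127.0.0.1:9200}"

put_marker() {
  # Index one document with a fixed id so it can be fetched later.
  "${CURL:-curl}" -s -X PUT "$ES_URL/mount-test/_doc/1" \
    -H 'Content-Type: application/json' \
    -d '{"note": "written before restart"}'
}

get_marker() {
  # Fetch the same document by id.
  "${CURL:-curl}" -s "$ES_URL/mount-test/_doc/1"
}

# Usage:
#   put_marker     # with the old container running
#   (stop the old container, start the new one, wait for ES to come up)
#   get_marker     # should return the document if the data mount works
```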