
Prepare the environment

You will need:

1. A cloud server running CentOS 7

2. Docker installed (see my other articles for the installation steps)

I originally intended to write this with the Scrapy framework, but I still couldn't get it working and deploying it was exhausting, so this time I'm making a simple version instead (haha).

Let's get started (the humble version)

1. First, write the crawler's files locally.

The project consists of:

- the main function

- the Weather crawler

- the scheduler

The main function and the crawler together implement the crawl logic:

- send the request

- receive the response

- clean the data

- save the data

The main function also adds error handling: if the request fails, it waits a minute and retries once, and if that also fails it logs the failure instead of crashing.
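The same try, wait, retry idea can be factored into a small reusable helper. This is a minimal sketch, assuming a fixed delay between attempts; `call_with_retry` and its parameters are hypothetical names, not part of the project. The `sleep` parameter is injectable so the helper can be exercised without actually waiting.

```python
import time


def call_with_retry(func, retries=1, delay=60, sleep=time.sleep):
    """Call func(); on an exception, wait `delay` seconds and try again.

    Makes at most 1 + retries attempts. If every attempt fails, the last
    exception is re-raised so the caller can log the failure.
    """
    for attempt in range(retries + 1):
        try:
            return func()
        except Exception:
            if attempt == retries:
                raise  # Out of attempts: let the caller handle it
            sleep(delay)  # Take a break before the next attempt


if __name__ == "__main__":
    calls = []

    def flaky():
        # Hypothetical request that fails the first time and then succeeds
        calls.append(1)
        if len(calls) == 1:
            raise ConnectionError("temporary failure")
        return "page html"

    print(call_with_retry(flaky, delay=0))  # prints "page html"
```

In the main function this would replace the nested try blocks with a single `call_with_retry(spider.get_response)`, wrapped in one try/except that saves "Grab Failure" when it finally raises.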

```python
# main.py
"""Requirement:
Crawl the daily highest and lowest temperatures for Zhuhai from
http://gd.weather.com.cn/zhuhai/index.shtml.
"""
import time

from Weather import WeatherSpider


def main():
    url = "http://gd.weather.com.cn/zhuhai/index.shtml"
    # Instantiate a crawler object
    spider = WeatherSpider(url)
    # Error capture: try once; on failure wait a minute and try once more
    try:
        spider.get_response()
    except Exception:
        try:
            time.sleep(60)  # Take a minute off before retrying
            spider.get_response()
        except Exception:  # Failed twice: log the failure and give up
            spider.save("Grab Failure")
            return
    # The request succeeded (first or second attempt): clean and save the data
    spider.clean()
    spider.save()


if __name__ == '__main__':
    main()
```

```python
# Weather.py
import time

import requests
from bs4 import BeautifulSoup


class WeatherSpider:
    def __init__(self, url):
        self.url = url
        self.header = {
            "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.88 Safari/537.36"}

    def get_response(self):
        # The keyword is `headers`; a timeout keeps a hung request from blocking the job
        response = requests.get(self.url, headers=self.header, timeout=10)
        response.raise_for_status()  # Treat HTTP 4xx/5xx as failures so main() retries
        self.text = response.text

    def clean(self):
        soup = BeautifulSoup(self.text, "html.parser")
        links = soup.find(id="forecastID").find_all('a')
        # These indices depend on the page layout at the time of writing
        self.high = links[2].find('span').text
        self.low = links[3].find('span').text

    def save(self, default=None):
        # Take the timestamp here so it exists even when the crawl failed
        date_time = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime())
        with open("./Dayweather_log.txt", 'a', encoding="utf-8") as f:
            if default is not None:
                # Record the failure message instead of temperatures
                f.write("Date: {}, {}\n".format(date_time, default))
            else:
                # Save the temperatures of the day
                f.write("Date: {}, maximum temperature: {}, minimum temperature: {}\n".format(
                    date_time, self.high, self.low))
```
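The crawler saves the raw span text, so comparing or plotting temperatures later means turning that text into numbers. A minimal sketch, assuming the spans contain text such as `28℃` (I have not re-checked the current page markup); `parse_temperature` is a hypothetical helper, not part of the project.

```python
import re


def parse_temperature(text):
    """Extract the first (possibly negative) integer from a temperature string.

    Assumes text such as "28℃" or "-3℃"; returns None when no number is
    found, for example if the page layout has changed.
    """
    match = re.search(r"-?\d+", text)
    return int(match.group()) if match else None


print(parse_temperature("28℃"))  # 28
print(parse_temperature("-3℃"))  # -3
print(parse_temperature("N/A"))  # None
```

Returning None rather than raising keeps a layout change from crashing the daily job; the caller can log it like a failed grab.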

The scheduler makes the crawler fetch the target site at a fixed time every day:

```python
# scheduler.py
import time

import schedule

import main

# Set up the scheduler
# schedule.every(5).seconds.do(main.main)  # For testing: run the job every 5 s
schedule.every().day.at("00:00").do(main.main)  # Run the job every day at 00:00

if __name__ == '__main__':
    # Poll for pending jobs
    while True:
        schedule.run_pending()
        time.sleep(1)  # Avoid a busy loop that pins a CPU core
```
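To make the daily trigger less of a black box, here is roughly the wait that `schedule.every().day.at("00:00")` has to compute: the time until the next occurrence of a wall-clock slot. This is a stdlib sketch with a hypothetical helper name, not the schedule library's own code, and it ignores daylight-saving edge cases. Note that by default the slot is interpreted in the machine's local time, and inside a container that is often UTC, so "00:00" may not be midnight in Zhuhai; running `date` in the container will tell you.

```python
import datetime


def seconds_until(hour, minute, now=None):
    """Seconds from `now` until the next hour:minute in local time.

    If today's slot is at or before `now`, the wait runs until tomorrow's slot.
    """
    if now is None:
        now = datetime.datetime.now()
    target = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if target <= now:
        target += datetime.timedelta(days=1)
    return (target - now).total_seconds()


now = datetime.datetime(2021, 1, 5, 23, 30)
print(seconds_until(0, 0, now))  # 1800.0
```

Without the schedule dependency, a loop like `while True: time.sleep(seconds_until(0, 0)); main.main()` would do the same job, at the cost of no longer being able to register several jobs.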

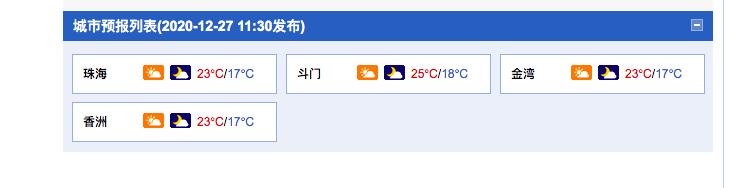

Target Site Screenshot:

Deploy on Server

A proper scheduled crawler needs to be more robust than an ordinary one. Here that means error handling (a framework would really be the best choice, but unfortunately I don't know one well enough). It also needs a machine that keeps running, so a server is the natural choice. Here I use CentOS 7 + Docker for deployment.

First, clone the project and take a look (really not an ad, haha):

git clone https://github.com/mi4da/Spyder-Wednesday

This assumes Docker is installed and a Python image has already been pulled (I won't plug my other posts again here):

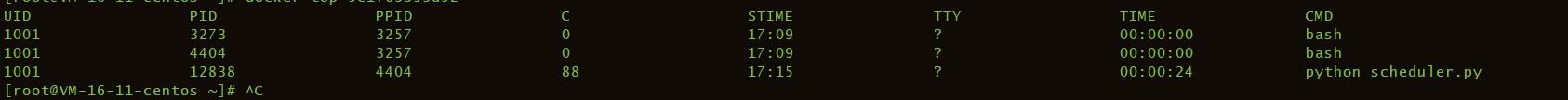

We will use the second-to-last image, centos/python-36-centos7.

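Create the container:

docker run -itd --name weatherspider_py36 -v /home/weatherspider/:/home/weatherspider centos/python-36-centos7 bash

-d runs the container in the background (detached); -it keeps an interactive terminal attached so that bash does not exit immediately; -v mounts the host directory /home/weatherspider into the container at the same path; --name names the container weatherspider_py36.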

Clone the project (this time from its Gitee copy):

git clone https://gitee.com/jobilojostar/Spyder-Wednesday.git

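Then, inside the container (for example after entering it with docker exec -it weatherspider_py36 bash, and after installing the dependencies with pip install requests beautifulsoup4 schedule), start the scheduler in the background: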

nohup python scheduler.py &

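nohup ... &: nohup makes the command ignore the hangup signal (SIGHUP) sent when the shell session closes, and & runs it in the background. If stdout is a terminal, nohup redirects the output to nohup.out.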

This way, the Python crawler keeps running undisturbed even after you leave the shell!
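To see what `nohup` actually changes, here is the same signal disposition set from inside Python. A minimal sketch, assuming a Linux/POSIX system (`signal.SIGHUP` does not exist on Windows); `ignore_hangup` is a hypothetical helper, and with `nohup` in front of the command the crawler does not need it.

```python
import signal


def ignore_hangup():
    """Ignore SIGHUP in the current process, as nohup does for the command it starts.

    Unlike nohup, this does not redirect output, so writing to a terminal
    that has been closed may still fail. Returns the previous handler.
    """
    return signal.signal(signal.SIGHUP, signal.SIG_IGN)


ignore_hangup()
print(signal.getsignal(signal.SIGHUP) == signal.SIG_IGN)  # True
```

One practical note: Python buffers stdout when it is not a terminal, so prints may show up in nohup.out late; starting the script with `python -u` disables that buffering.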

docker top [CONTAINER ID]  # List the processes running in the target container
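If you are already inside the container, a similar check can be done without `docker top` by reading the process table directly. A minimal sketch, assuming Linux (`/proc` is Linux-specific); `find_processes` is a hypothetical helper that roughly mirrors the command column shown by `docker top` or `ps`.

```python
import os


def find_processes(pattern):
    """Return (pid, command line) pairs whose command line contains `pattern`.

    Linux only: reads /proc/<pid>/cmdline for every process we can see.
    """
    matches = []
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue  # Skip non-process entries such as /proc/meminfo
        try:
            with open(f"/proc/{entry}/cmdline", "rb") as f:
                raw = f.read()
        except OSError:
            continue  # The process exited or is not readable
        # Arguments are separated by NUL bytes
        cmdline = raw.replace(b"\0", b" ").decode(errors="replace").strip()
        if pattern in cmdline:
            matches.append((int(entry), cmdline))
    return matches


print(find_processes("scheduler.py"))
```

An empty list means the scheduler is no longer running and needs to be restarted.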

Note that this command is run on the host, after we have already left the container. If python scheduler.py appears in the list, the deployment succeeded!