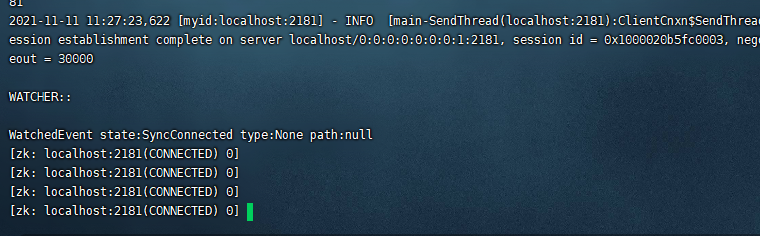

zkCli.sh client command

[root@iZwz9fyh8d6xm9hs0q453mZ bin]# ./zkCli.sh

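Connection syntax: ./zkCli.sh -timeout 0 -r -server ip:port, for example ./zkCli.sh -timeout 5000 -server 192.9.200.242:2181. -timeout sets the session timeout in milliseconds. -r starts the client in read-only mode: even if more than half of the servers in the ZooKeeper cluster are down, a remaining server can still serve reads to the client.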

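h: show all commands. h is not itself a command, so zkCli also reports "Command not found". Like any unrecognized input, though, it makes zkCli print the usage of every available command: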

    [zk: localhost:2181(CONNECTED) 0] h
    ZooKeeper -server host:port -client-configuration properties-file cmd args
      addWatch [-m mode] path # optional mode is one of [PERSISTENT, PERSISTENT_RECURSIVE] - default is PERSISTENT_RECURSIVE
      addauth scheme auth
      close
      config [-c] [-w] [-s]
      connect host:port
      create [-s] [-e] [-c] [-t ttl] path [data] [acl]
      delete [-v version] path
      deleteall path [-b batch size]
      delquota [-n|-b] path
      get [-s] [-w] path
      getAcl [-s] path
      getAllChildrenNumber path
      getEphemerals path
      history
      listquota path
      ls [-s] [-w] [-R] path
      printwatches on|off
      quit
      reconfig [-s] [-v version] [[-file path] | [-members serverID=host:port1:port2;port3[,...]*]] | [-add serverId=host:port1:port2;port3[,...]]* [-remove serverId[,...]*]
      redo cmdno
      removewatches path [-c|-d|-a] [-l]
      set [-s] [-v version] path data
      setAcl [-s] [-v version] [-R] path acl
      setquota -n|-b val path
      stat [-w] path
      sync path
      version
    Command not found: Command not found h
    [zk: localhost:2181(CONNECTED) 1]

Create node

create [-s] [-e] [-c] [-t ttl] path [data] [acl]

- [-s]: sequential. ZooKeeper appends an increasing sequence number to the path, so the same path can be created repeatedly and each call yields a new node.
- [-e]: ephemeral. The node is deleted automatically when the session that created it ends.
- [-c]: container node. The server deletes it some time after its last child node has been removed.
- [-t ttl]: TTL node, a node with a timeout (ttl is given in milliseconds).
- [acl]: the access control list for the node. If one is set, only clients with the matching permission can access it.

Note: an ephemeral node disappears automatically once the client's session with the server ends.
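The following shell sketch makes the [-s] behaviour concrete by showing how a sequential node's name is formed. Per the ZooKeeper documentation, the appended sequence number is a counter kept by the parent node and zero-padded to 10 digits. The helper seq_name and the /app/lock- path are hypothetical, and the counter is passed in by hand rather than coming from a server.

```shell
#!/bin/sh
# Hypothetical helper: build the name ZooKeeper gives a sequential node.
# $1 = path requested in "create -s", $2 = parent's sequence counter.
# The counter is zero-padded to 10 digits and appended to the path.
seq_name() {
  printf '%s%010d\n' "$1" "$2"
}

seq_name /app/lock- 0    # /app/lock-0000000000
seq_name /app/lock- 42   # /app/lock-0000000042
```

In zkCli itself, repeating create -s /app/lock- (with an existing /app parent) therefore creates a new node each time, rather than failing because the path already exists.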

Delete node

delete removes the node at the specified path. With -v version, the delete succeeds only if the version matches the node's current dataVersion, which implements optimistic locking.

delete fails if the node still has child nodes. Delete the children first, or use deleteall.

delete [-v version] path
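Plain delete refuses to remove a node that still has children, so a recursive delete (deleteall, or rmr in older releases) has to work from the leaves up. The sketch below only computes that bottom-up order from a list of paths. delete_order and the sample paths are hypothetical, and nothing here talks to a server.

```shell
#!/bin/sh
# Hypothetical helper: read absolute znode paths (one per line) on stdin
# and print them deepest first, so every child precedes its parent.
delete_order() {
  # Prefix each path with its depth (count of "/" separators),
  # sort numerically by depth in descending order, then drop the prefix.
  awk -F/ '{ print NF - 1, $0 }' | sort -k1,1nr | cut -d' ' -f2-
}

# Example: deepest paths come out first, /app comes out last.
printf '%s\n' /app /app/config /app/config/db /app/locks | delete_order
```

Each resulting line could then be passed to delete in turn. deleteall already performs this bottom-up deletion for you.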

Update node

set modifies the data content of the node. If -v version is given, it must match the node's current dataVersion; otherwise the update is rejected.

-s prints the node's status after the update.

set [-s] [-v version] path data
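The version check behind set -v (and delete -v) can be illustrated with a toy in-memory model. This is not ZooKeeper code, and node_data, node_version and cond_set are hypothetical names. The model mirrors the documented semantics: a conditional write succeeds only when the expected version equals the node's current dataVersion, a version of -1 matches any version, and every successful set increments dataVersion.

```shell
#!/bin/sh
# Toy model of ZooKeeper's version check (hypothetical names, not ZooKeeper code).
node_data="v0"     # data currently stored in the model node
node_version=0     # its dataVersion

# cond_set EXPECTED NEW_DATA: succeed only if EXPECTED is -1 (any version)
# or equals the current dataVersion; on success, bump dataVersion by one.
cond_set() {
  expected=$1
  new_data=$2
  if [ "$expected" -ne -1 ] && [ "$expected" -ne "$node_version" ]; then
    echo "rejected: expected version $expected, current is $node_version"
    return 1
  fi
  node_data=$new_data
  node_version=$((node_version + 1))
  echo "ok: dataVersion is now $node_version"
}

cond_set 0 "first"  || true   # ok: dataVersion is now 1
cond_set 0 "stale"  || true   # rejected: expected version 0, current is 1
cond_set -1 "any"   || true   # ok: dataVersion is now 2
```

The second call plays the part of a client that read the node before someone else updated it. Its write is refused instead of silently overwriting the newer data, which is what makes -v usable as an optimistic lock.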

Query node information

get returns the data stored at the specified node. -s also prints the node's status, and -w sets a watch on it.

get [-s] [-w] path

ls path: lists the child nodes of a node

ls /: lists the child nodes of the root node

stat path: prints the status information of the specified node, for example:

    [zk: localhost:2181(CONNECTED) 5] stat /zookeeper
    cZxid = 0x0
    ctime = Thu Jan 01 08:00:00 CST 1970
    mZxid = 0x0
    mtime = Thu Jan 01 08:00:00 CST 1970
    pZxid = 0x0
    cversion = -2
    dataVersion = 0
    aclVersion = 0
    ephemeralOwner = 0x0
    dataLength = 0
    numChildren = 2
    [zk: localhost:2181(CONNECTED) 6]

- cZxid: the transaction ID (zxid) of the change that created this node
- ctime: the time the node was created
- mZxid: the zxid of the change that last modified this node's data
- mtime: the time the node was last modified
- pZxid: the zxid of the last change to this node's list of children (adding or deleting a child node)
- cversion: the version number of this node's children, which changes whenever its child list changes
- dataVersion: the version number of this node's data
- aclVersion: the version number of this node's ACL
- ephemeralOwner: if this node is ephemeral, the session ID of the session that owns it; otherwise 0x0
- dataLength: the length of this node's data
- numChildren: the number of child nodes of this node
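stat prints one "name = value" pair per line, so a single field such as dataVersion can be pulled out with awk. A small shell sketch follows. stat_field is a hypothetical helper, and the sample text is the stat /zookeeper output shown above.

```shell
#!/bin/sh
# Hypothetical helper: print the value of one field from stat output.
# $1 = field name (e.g. dataVersion); the stat text is read from stdin.
stat_field() {
  awk -v key="$1" -F' = ' '$1 == key { print $2 }'
}

sample='cZxid = 0x0
ctime = Thu Jan 01 08:00:00 CST 1970
mZxid = 0x0
mtime = Thu Jan 01 08:00:00 CST 1970
pZxid = 0x0
cversion = -2
dataVersion = 0
aclVersion = 0
ephemeralOwner = 0x0
dataLength = 0
numChildren = 2'

printf '%s\n' "$sample" | stat_field numChildren   # 2
```

Against a live server, the same helper could supply the version for a conditional update. For example, run ver=$(./zkCli.sh -server 127.0.0.1:2181 stat /app | stat_field dataVersion), then ./zkCli.sh -server 127.0.0.1:2181 set -v "$ver" /app newdata. This assumes zkCli's single-command mode from its usage line (-server host:port cmd args); the address and /app path are placeholders.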

ls2 path: a combination of the ls and stat commands (older releases; in the usage list above this is ls -s path)

quit: exit the client

rmr path: deletes the node and all of its child nodes (older releases; in the usage list above this is deleteall path)

connect host:port and close: connect to another ZooKeeper server, and close the current connection

history and redo cmdno: list the commands executed in this session, and re-run the command with the given number

ZooKeeper memory adjustment method

ZooKeeper 3.4.5 memory allocation

Questions about ZooKeeper's memory settings come up often. Here is how to allocate memory:

File path: zookeeper/bin/zkEnv.sh

zkEnv.sh loads a separate file for JVM memory settings if it exists: zookeeper/conf/java.env.

This java.env file does not exist after installation, so you need to create it yourself:

vi java.env

The contents of the java.env file are as follows:

    #!/bin/sh
    export JAVA_HOME=/usr/java/jdk
    # heap size MUST be modified according to cluster environment
    export JVMFLAGS="-Xms512m -Xmx1024m $JVMFLAGS"

How much memory to allocate depends on the workload and the machine. If the machine has enough memory, a suitably sized heap can improve ZooKeeper performance.

Why ZooKeeper clusters use an odd number of nodes

1. Fault tolerance:

First, consider fault tolerance. The cluster keeps working only while more than half of its servers are up and able to vote:

- With 2 servers, at least 2 must be running (half of 2 is 1, so a majority needs at least 2). Not a single server may fail. Compared with a single server, two servers just add a second point of failure, so a 2-server cluster is ruled out.
- With 3 servers, at least 2 must be running (half of 3 is 1.5, so a majority needs at least 2). One server may fail.
- With 4 servers, at least 3 must be running (half of 4 is 2, so a majority needs at least 3). Still only one server may fail.
- With 5 servers, at least 3 must be running (half of 5 is 2.5, so a majority needs at least 3). Two servers may fail.
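The arithmetic in the list above generalizes to any ensemble size n. A quorum is a strict majority, floor(n/2) + 1 servers, and the cluster survives the loss of the remaining n minus quorum servers. A small shell sketch (quorum and tolerated are hypothetical helper names):

```shell
#!/bin/sh
# quorum N: smallest strict majority of N voting servers, floor(N/2) + 1
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# tolerated N: how many servers may fail while a quorum still survives
tolerated() {
  echo $(( $1 - $(quorum "$1") ))
}

for n in 1 2 3 4 5 6 7; do
  printf 'n=%d quorum=%d tolerated=%d\n' "$n" "$(quorum "$n")" "$(tolerated "$n")"
done
```

For n = 3 and n = 4 it reports the same tolerance of one failed server, and likewise for 5 and 6. Adding a server to an odd-sized ensemble therefore buys no extra fault tolerance.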

2. Preventing split brain

Split brain usually happens when nodes can no longer communicate with each other. The cluster breaks into smaller groups, each group elects its own leader, and the original cluster ends up with several leaders.

- With 3 servers, half of the votes is 1.5. If one server is cut off from the other two, the two-server side has more than 1.5 votes and can elect a leader, while the single server cannot.
- With 4 servers, half of the votes is 2. A partition can split them 1/3 or 2/2. In a 1/3 split, the 3-server side elects a leader. In a 2/2 split, neither side has a majority, so no leader is elected.
- With 5 servers, half of the votes is 2.5. A partition can split them 1/4 or 2/3. In either case exactly one side (the 4 or the 3) has a majority and elects a leader, so the cluster still ends up with a single leader.
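The split-brain cases above come down to one test: a partition can elect a leader only if it holds a strict majority of the configured voters, that is 2 * size > n. The sketch below checks both sides of a two-way split. can_elect and show_split are hypothetical helper names.

```shell
#!/bin/sh
# can_elect N SIZE: succeeds if SIZE servers out of N form a strict majority
can_elect() {
  [ $((2 * $2)) -gt "$1" ]
}

# show_split A B: an ensemble of A+B servers partitioned into A and B;
# print which side (if any) is able to elect a leader.
show_split() {
  a=$1; b=$2; total=$((a + b)); side=none
  if can_elect "$total" "$a"; then side=$a; fi
  if can_elect "$total" "$b"; then side=$b; fi
  echo "n=$total split $a/$b -> leader side: $side"
}

show_split 2 1   # n=3 split 2/1 -> leader side: 2
show_split 1 3   # n=4 split 1/3 -> leader side: 3
show_split 2 2   # n=4 split 2/2 -> leader side: none
show_split 1 4   # n=5 split 1/4 -> leader side: 4
show_split 2 3   # n=5 split 2/3 -> leader side: 3
```

At most one side can ever pass the majority test, so two leaders are impossible. The only bad outcome is the even split, where no side passes at all.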

From this analysis, three servers are the minimum cluster size in terms of both fault tolerance and split-brain prevention. Four servers bring no benefit over three: they tolerate the same single failure, and a 2/2 split leaves the cluster without a leader.