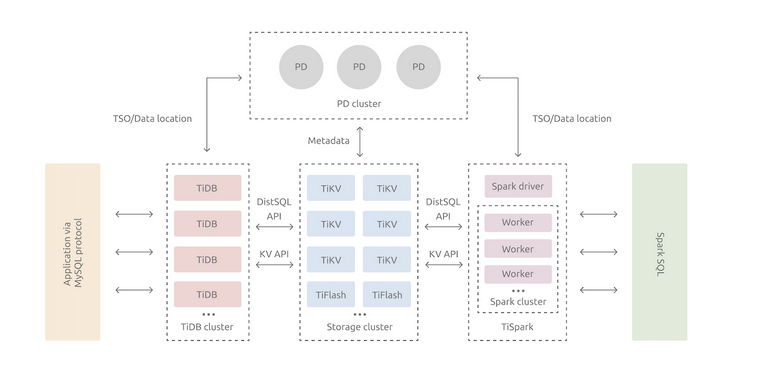

TiDB architecture and introduction

For an overview of TiDB's overall architecture, see the PingCAP docs: https://docs.pingcap.com/zh/tidb/v4.0/tidb-architecture

Brief introduction

TiDB is an open-source distributed relational database designed and developed by PingCAP. It is a hybrid distributed database that supports both online transactional processing and online analytical processing (Hybrid Transactional and Analytical Processing, HTAP). Its key features are horizontal scaling (out and in), financial-grade high availability, real-time HTAP, a cloud-native design, and compatibility with the MySQL 5.7 protocol and the MySQL ecosystem. The goal is to give users a one-stop solution for OLTP (Online Transactional Processing), OLAP (Online Analytical Processing), and HTAP. TiDB suits scenarios that combine high availability, strong consistency requirements, and large data volumes.

Architecture diagram

Components

- TiDB Server: The SQL layer. It exposes MySQL protocol connection endpoints, accepts client connections, parses and optimizes SQL, and ultimately generates distributed execution plans. The TiDB layer is stateless, so in practice you can start multiple TiDB instances and put a load balancer such as LVS, HAProxy, or F5 in front of them to provide a single access address; client connections are then spread evenly across the instances. TiDB Server stores no data itself: it only parses SQL and forwards the actual data read requests to the underlying storage nodes, TiKV (or TiFlash).

- PD (Placement Driver) Server: The metadata management module for the whole TiDB cluster. It stores the real-time data distribution of every TiKV node and the overall cluster topology, provides the TiDB Dashboard management interface, and allocates transaction IDs for distributed transactions. PD does more than store metadata: based on the data distribution that TiKV nodes report in real time, it issues data scheduling commands to specific TiKV nodes, so it can be regarded as the "brain" of the cluster. PD itself consists of at least three nodes to provide high availability, and deploying an odd number of PD nodes is recommended.

- Storage nodes

  - TiKV Server: Responsible for storing data. From the outside, TiKV is a distributed, transactional key-value storage engine. The basic unit of data storage is the Region: each Region stores the data for one key range (a left-closed, right-open interval from StartKey to EndKey), and each TiKV node serves multiple Regions. The TiKV API natively supports distributed transactions at the key-value level and provides Snapshot Isolation (SI) by default, which is the core of TiDB's distributed transaction support at the SQL layer. After the TiDB SQL layer parses a statement, it converts the execution plan into actual calls to the TiKV API, so all data is stored in TiKV. In addition, TiKV automatically maintains multiple replicas of the data (three by default), which naturally provides high availability and automatic failover.

  - TiFlash: A special type of storage node. Unlike ordinary TiKV nodes, TiFlash stores data in columnar format, mainly to accelerate analytical workloads.
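Two points from the component list can be illustrated with a small sketch: why an odd number of PD nodes is recommended, and what a left-closed, right-open key range means. The function names `pd_quorum`, `pd_tolerated_failures`, and `key_in_region` are hypothetical helpers written for this post, not part of any TiDB tool. The majority rule assumes the usual Raft-style quorum that PD's embedded etcd uses, and keys are compared as plain byte strings.

```shell
# Illustrative helpers only; none of these ship with TiDB, TiKV, or PD.

# Number of PD nodes that must agree: a strict majority of n.
pd_quorum() {
    echo $(( $1 / 2 + 1 ))
}

# Number of PD nodes that can fail while a majority still survives.
pd_tolerated_failures() {
    echo $(( ($1 - 1) / 2 ))
}

# Succeeds if KEY lies in [START, END): START is included, END is excluded.
# Usage: key_in_region START END KEY
# The "x" prefix stops expr from treating numeric-looking keys as integers;
# LC_ALL=C forces byte-wise comparison.
key_in_region() {
    start=$1 end=$2 key=$3
    LC_ALL=C expr "x$key" '>=' "x$start" >/dev/null &&
    LC_ALL=C expr "x$key" '<' "x$end" >/dev/null
}
```

`pd_tolerated_failures 3` and `pd_tolerated_failures 4` both print 1, so a fourth PD node adds no fault tolerance over three. Likewise, `key_in_region a c c` fails because the end key belongs to the next Region.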

Experimental environment

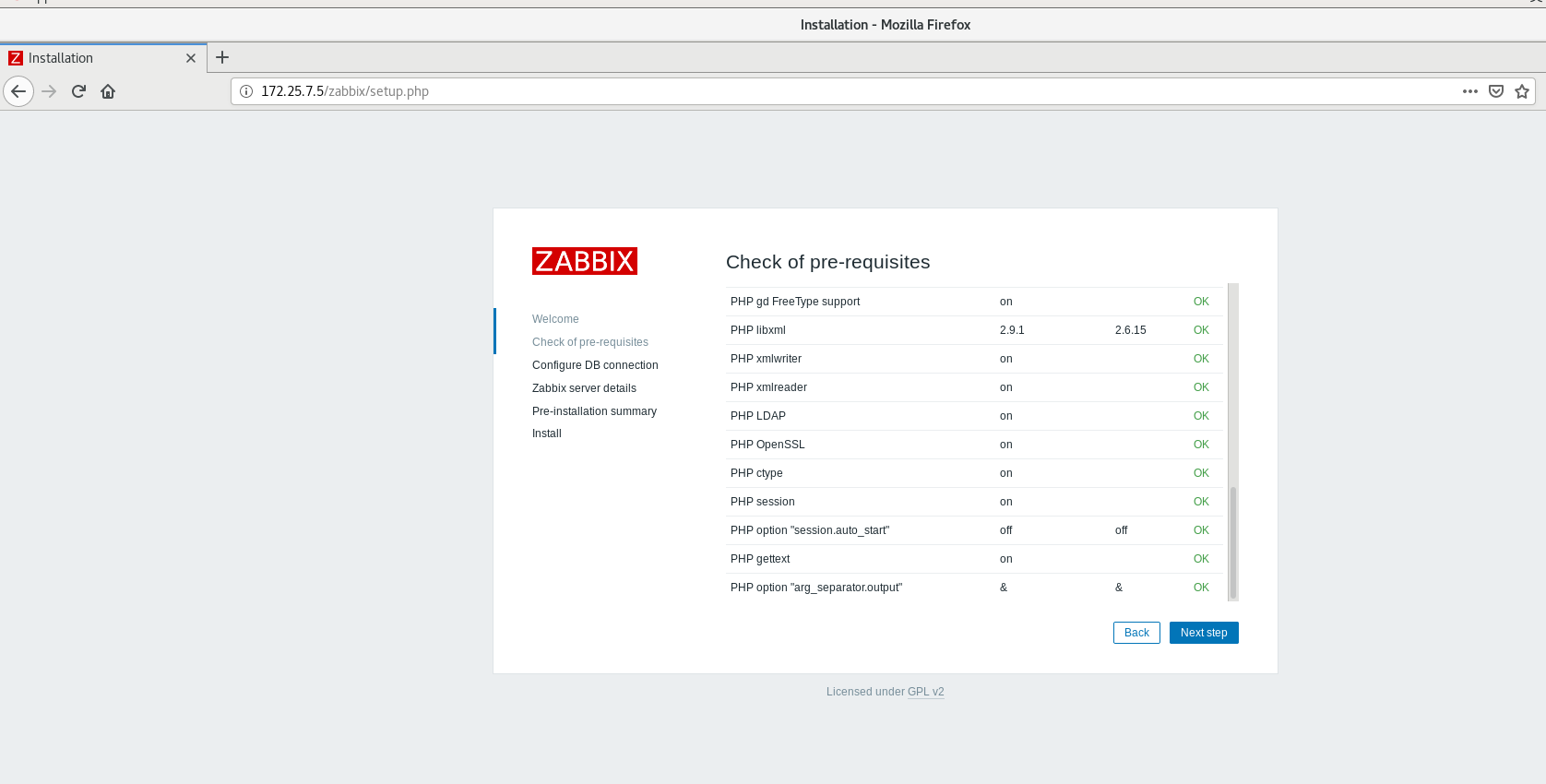

vm1 has Zabbix monitoring installed

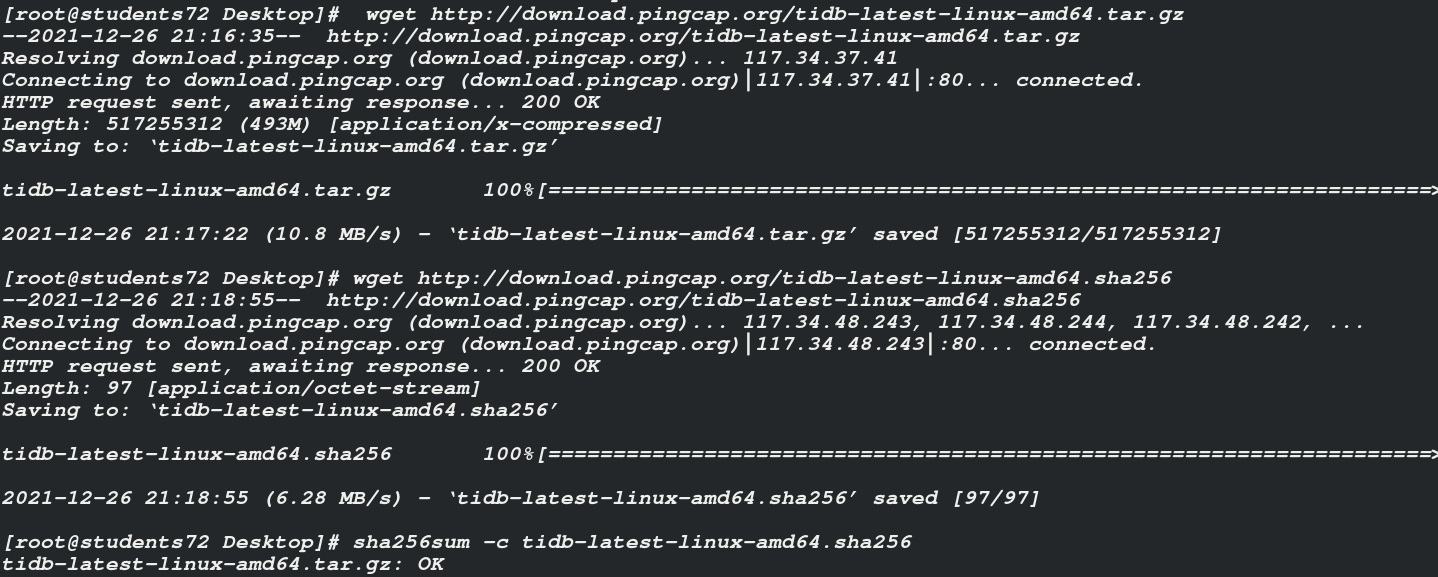

TiDB installation

    yum install wget -y
    wget http://download.pingcap.org/tidb-latest-linux-amd64.sha256
    wget http://download.pingcap.org/tidb-latest-linux-amd64.tar.gz
    # verify the download
    sha256sum -c tidb-latest-linux-amd64.sha256
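To make the verification step block a bad download, the check and the unpacking can be tied together. The sketch below is one way to do that. `verify_then_extract` is a hypothetical helper name, and it assumes the checksum file and the archive are both in the current directory and that the checksum file lists the archive by name.

```shell
# Hypothetical helper: unpack an archive only if its checksum file verifies.
# Usage: verify_then_extract CHECKSUM_FILE ARCHIVE
verify_then_extract() {
    sumfile=$1 archive=$2
    # sha256sum -c re-hashes every file listed in CHECKSUM_FILE and
    # returns non-zero if any hash differs or a listed file is missing.
    if sha256sum -c "$sumfile" >/dev/null 2>&1; then
        tar zxf "$archive"
    else
        echo "checksum check failed for $archive; not extracting" >&2
        return 1
    fi
}
```

For the files downloaded here, the call would be `verify_then_extract tidb-latest-linux-amd64.sha256 tidb-latest-linux-amd64.tar.gz`.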

Install and initialize the MySQL database

    yum install -y \
        mysql-community-libs-compat-5.7.24-1.el7.x86_64.rpm \
        mysql-community-server-5.7.24-1.el7.x86_64.rpm \
        mysql-community-client-5.7.24-1.el7.x86_64.rpm \
        mysql-community-common-5.7.24-1.el7.x86_64.rpm \
        mysql-community-libs-5.7.24-1.el7.x86_64.rpm
    systemctl start mysqld
    cat /var/log/mysqld.log | grep password

The log shows the temporary root password:

    2021-12-26T13:29:08.521584Z 1 [Note] A temporary password is generated for root@localhost: cp++8q:%2yY1

Use it when running the secure installation script:

    mysql_secure_installation
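If you want just the password rather than the whole log line, a small filter does it. This is a sketch that assumes the log line format shown above. `mysql_temp_password` is a hypothetical helper name, and the default path is the one used in this post.

```shell
# Hypothetical helper: print the most recent temporary root password
# recorded in a MySQL 5.7 error log.
# Usage: mysql_temp_password [LOGFILE]
mysql_temp_password() {
    log=${1:-/var/log/mysqld.log}
    # Drop everything up to and including "root@localhost: " on matching
    # lines, then keep only the last match in case the log has several.
    sed -n 's/.*temporary password.*root@localhost: //p' "$log" | tail -n 1
}
```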

Unpack and start TiDB

    tar zxf tidb-latest-linux-amd64.tar.gz
    cd tidb-v5.0.1-linux-amd64/

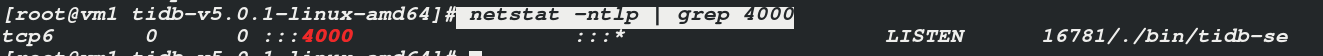

    # start PD
    ./bin/pd-server --name=pd1 --data-dir=pd1 \
        --client-urls="http://172.25.7.5:2379" \
        --peer-urls="http://172.25.7.5:2380" \
        --initial-cluster="pd1=http://172.25.7.5:2380" \
        --log-file=pd.log &
    # start TiDB
    ./bin/tidb-server &
    # check that TiDB is listening on port 4000
    netstat -ntlp | grep 4000
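Both servers are started in the background, so the port check can run before they are ready. A small retry loop avoids that race. The sketch below uses a hypothetical helper, `wait_until`, that is not part of TiDB. The PD health endpoint in the commented example is quoted from the PD HTTP API as I remember it, so treat it as an assumption and confirm it against your version.

```shell
# Hypothetical helper: run a command repeatedly until it succeeds.
# Usage: wait_until TRIES DELAY_SECONDS COMMAND [ARGS...]
wait_until() {
    tries=$1 delay=$2
    shift 2
    while [ "$tries" -gt 0 ]; do
        # Success on any attempt ends the wait immediately.
        "$@" >/dev/null 2>&1 && return 0
        tries=$((tries - 1))
        # Sleep only if another attempt is still to come.
        [ "$tries" -gt 0 ] && sleep "$delay"
    done
    return 1
}

# Example calls (not run here); addresses match the commands above:
#   wait_until 30 1 curl -sf http://172.25.7.5:2379/pd/api/v1/health
#   wait_until 30 1 sh -c 'netstat -ntl | grep -q ":4000 "'
```

The helper takes the command as arguments rather than hard-coding a port probe, so the same loop works for PD, TiDB, or any other readiness check.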

Connect to TiDB with the MySQL client

mysql -h 172.25.7.5 -P 4000 -uroot

Create the database

    CREATE DATABASE zabbix CHARACTER SET utf8 COLLATE utf8_bin;
    CREATE USER 'zabbix'@'%' IDENTIFIED BY 'westos';
    GRANT ALL PRIVILEGES ON zabbix.* TO 'zabbix'@'%';

Data Import

    cd /usr/share/doc/zabbix-server-mysql-5.0.19/
    zcat create.sql.gz | mysql -h 172.25.7.5 -P 4000 -uroot zabbix

vim /etc/zabbix/zabbix_server.conf

Set the database password (line 124 of the file):

    DBPassword=westos
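The same change can be scripted instead of made in vim. The sketch below assumes a plain `KEY=value` file like zabbix_server.conf and GNU `sed -i`. `set_conf_key` is a hypothetical helper name, and values containing `|`, `&`, or backslashes would need escaping that this version does not do.

```shell
# Hypothetical helper: set KEY=VALUE in a simple KEY=value config file.
# Usage: set_conf_key FILE KEY VALUE
set_conf_key() {
    file=$1 key=$2 value=$3
    if grep -q "^${key}=" "$file"; then
        # An active (uncommented) setting exists: replace it in place.
        sed -i "s|^${key}=.*|${key}=${value}|" "$file"
    else
        # No active setting yet: append one at the end of the file.
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}
```

For this setup the call would be `set_conf_key /etc/zabbix/zabbix_server.conf DBPassword westos`. Commented-out defaults such as `# DBPassword=` are left untouched.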

vim /etc/opt/rh/rh-php72/php-fpm.d/zabbix.conf

Set the PHP time zone (line 25 of the file):

    php_value[date.timezone] = Asia/Shanghai

systemctl restart rh-php72-php-fpm.service

systemctl enable zabbix-server.service zabbix-agent.service