Based on the business requirements developers usually face, we divide logs into operation (request) logs and system logs. Operation (request) logs let administrators and operators query and trace the specific operations users perform in the system UI, so that user behavior can be analyzed and counted. System logs are divided into levels (in Log4j2: OFF > FATAL > ERROR > WARN > INFO > DEBUG > TRACE > ALL). Developers choose the level of each log statement when writing the code, and the statements are recorded while the system runs. This helps developers analyze, locate, and fix problems and find performance bottlenecks.
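Log4j2 ranks levels by an integer `intLevel`, where a smaller number means a more severe level. A logger configured at a given level emits events at that level and at every more severe level. The JDK-only sketch below models that rule with a hypothetical `StdLevel` enum whose numbers mirror Log4j2's standard values. It is an illustration, not the Log4j2 API; the real types are `org.apache.logging.log4j.Level` and `org.apache.logging.log4j.spi.StandardLevel`.

```java
/** Hypothetical stand-in for Log4j2's standard levels; the numbers mirror Log4j2's intLevel values. */
enum StdLevel {
    OFF(0), FATAL(100), ERROR(200), WARN(300), INFO(400), DEBUG(500), TRACE(600), ALL(Integer.MAX_VALUE);

    final int intLevel;

    StdLevel(int intLevel) {
        this.intLevel = intLevel;
    }

    /** True if an event at this level is emitted by a logger configured at loggerLevel. */
    boolean isEnabledUnder(StdLevel loggerLevel) {
        // Smaller intLevel = more severe: a logger lets through its own level and everything more severe
        return this.intLevel <= loggerLevel.intLevel;
    }
}

class LevelDemo {
    public static void main(String[] args) {
        for (StdLevel event : new StdLevel[] {StdLevel.ERROR, StdLevel.INFO, StdLevel.DEBUG}) {
            System.out.println(event + " enabled under WARN? " + event.isEnabledUnder(StdLevel.WARN));
        }
    }
}
```

With a logger at WARN, ERROR events pass while INFO and DEBUG are suppressed. A logger at OFF (0) suppresses everything, and one at ALL lets everything through.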

We can define a custom annotation and use AOP to intercept Controller requests and record operation logs. System logs can be written with Log4j2 or Logback. Under a Spring Cloud microservice architecture, the Gateway can record operation (request) logs uniformly. Microservices are deployed as distributed clusters, so the same service runs as multiple instances. Tracing a request across them therefore requires SkyWalking and ELK for tracking, analysis, and recording.

Because of the recently disclosed Log4j2 and Logback vulnerabilities, make sure to use the latest patched versions. Many published performance comparisons show Log4j2 clearly outperforming Logback, so we replace Spring Boot's default Logback with Log4j2.

In the framework design, we try to cover the scenarios in which the logging system is used and make its implementation dynamically configurable. The appropriate logging approach can then be chosen according to business requirements. Based on common business requirements, we currently implement the microservice logging system as follows:

Operation log:

- Use AOP with a custom annotation to intercept Controller requests and record the operation log.

  Advantages: easy to implement; an operation log is recorded just by adding an annotation.

  Disadvantages: the logging has to be hard-coded, so flexibility is poor.

- Read the configuration at the Gateway and record operation logs uniformly.

  Advantages: configurable; which operations are logged can be changed in real time.

  Disadvantages: configuration and implementation are somewhat more complex.

Each of the two approaches has its own advantages and disadvantages. Whichever is used, the log is written through Log4j2. Log4j2's configuration can then route it to files, a relational database (MySQL), a NoSQL database (MongoDB), message middleware (Kafka), and so on.
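The Gateway approach is configurable because the decision about which requests to log is data, not code. Below is a minimal JDK-only sketch, assuming rules of the form "HTTP method + path prefix". `Rule`, `OperationLogRules`, `reload`, and `shouldRecord` are hypothetical names. In a Spring Cloud Gateway, the `shouldRecord` check would typically sit in a global filter, and `reload` would be driven by a configuration-change listener.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicReference;

/** Hypothetical rule: log requests with this HTTP method whose path starts with this prefix. */
final class Rule {
    private final String method;
    private final String pathPrefix;

    Rule(String method, String pathPrefix) {
        this.method = method;
        this.pathPrefix = pathPrefix;
    }

    boolean matches(String requestMethod, String requestPath) {
        return method.equalsIgnoreCase(requestMethod) && requestPath.startsWith(pathPrefix);
    }
}

/** Hypothetical rule holder; reload() swaps the whole rule list atomically, so changes apply without a restart. */
final class OperationLogRules {
    private final AtomicReference<List<Rule>> rules = new AtomicReference<>(List.of());

    /** Call when the configuration changes (assumption: e.g. from a config-center listener). */
    void reload(List<Rule> newRules) {
        rules.set(List.copyOf(newRules)); // immutable snapshot, safe for concurrent readers
    }

    /** Call for each request in the gateway filter to decide whether to write an operation log. */
    boolean shouldRecord(String requestMethod, String requestPath) {
        for (Rule rule : rules.get()) {
            if (rule.matches(requestMethod, requestPath)) {
                return true;
            }
        }
        return false;
    }
}
```

Swapping an immutable list through `AtomicReference` lets requests keep reading the old rules while a reload is in progress, without locking. A real gateway would more likely match paths with Spring's `AntPathMatcher` than with a plain prefix.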

System log:

- Record logs with Log4j2; collect, analyze, and display them with ELK

System logs are recorded in the usual way: Log4j2 writes them to log files, and ELK collects, analyzes, and displays them.

The following are the specific implementation steps:

1, SkyWalking trace ID + Log4j2

Logback was written by the founder of log4j as a tool for detailed logging. Spring Boot later adopted Logback as its default logging tool, and it improves on log4j, the same author's earlier project. In recent years Apache has reworked log4j and released Log4j2, which surpasses both log4j and Logback in design and performance. You can run the comparison yourself, and benchmark reports are available online, so we won't repeat them here. In short, we should choose the most suitable logging tool available today.

1. Replace Spring Boot's default Logback with Log4j2

Exclude the Logback dependency (spring-boot-starter-logging) from spring-boot-starter-web and other starters:

```xml
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-web</artifactId>
    <!-- remove Spring Boot's default Logback configuration -->
    <exclusions>
        <exclusion>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-logging</artifactId>
        </exclusion>
    </exclusions>
</dependency>
```

Add the spring-boot-starter-log4j2 dependency. The Log4j2 version pulled in by this Spring Boot version is vulnerable, so exclude its default Log4j2 artifacts and add the latest patched version explicitly.

```xml
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-log4j2</artifactId>
    <exclusions>
        <exclusion>
            <groupId>org.apache.logging.log4j</groupId>
            <artifactId>log4j-api</artifactId>
        </exclusion>
        <exclusion>
            <groupId>org.apache.logging.log4j</groupId>
            <artifactId>log4j-core</artifactId>
        </exclusion>
    </exclusions>
</dependency>
```

Add the patched Log4j2 dependencies. The version properties go in the POM's `<properties>` section:

```xml
<!-- Log4j2 version that fixes the vulnerability -->
<log4j2.version>2.17.1</log4j2.version>
<!-- Disruptor is required for Log4j2 asynchronous logging; remove it if you don't need async logging -->
<log4j2.disruptor.version>3.4.4</log4j2.disruptor.version>

<!-- Log4j2 version that fixes the vulnerability -->
<dependency>
    <groupId>org.apache.logging.log4j</groupId>
    <artifactId>log4j-api</artifactId>
    <version>${log4j2.version}</version>
</dependency>
<dependency>
    <groupId>org.apache.logging.log4j</groupId>
    <artifactId>log4j-core</artifactId>
    <version>${log4j2.version}</version>
</dependency>
<!-- Lets Log4j2 read the Spring configuration -->
<dependency>
    <groupId>org.apache.logging.log4j</groupId>
    <artifactId>log4j-spring-boot</artifactId>
    <version>${log4j2.version}</version>
</dependency>
<!-- Disruptor is required for Log4j2 asynchronous logging; remove it if you don't need async logging -->
<dependency>
    <groupId>com.lmax</groupId>
    <artifactId>disruptor</artifactId>
    <version>${log4j2.disruptor.version}</version>
</dependency>
```

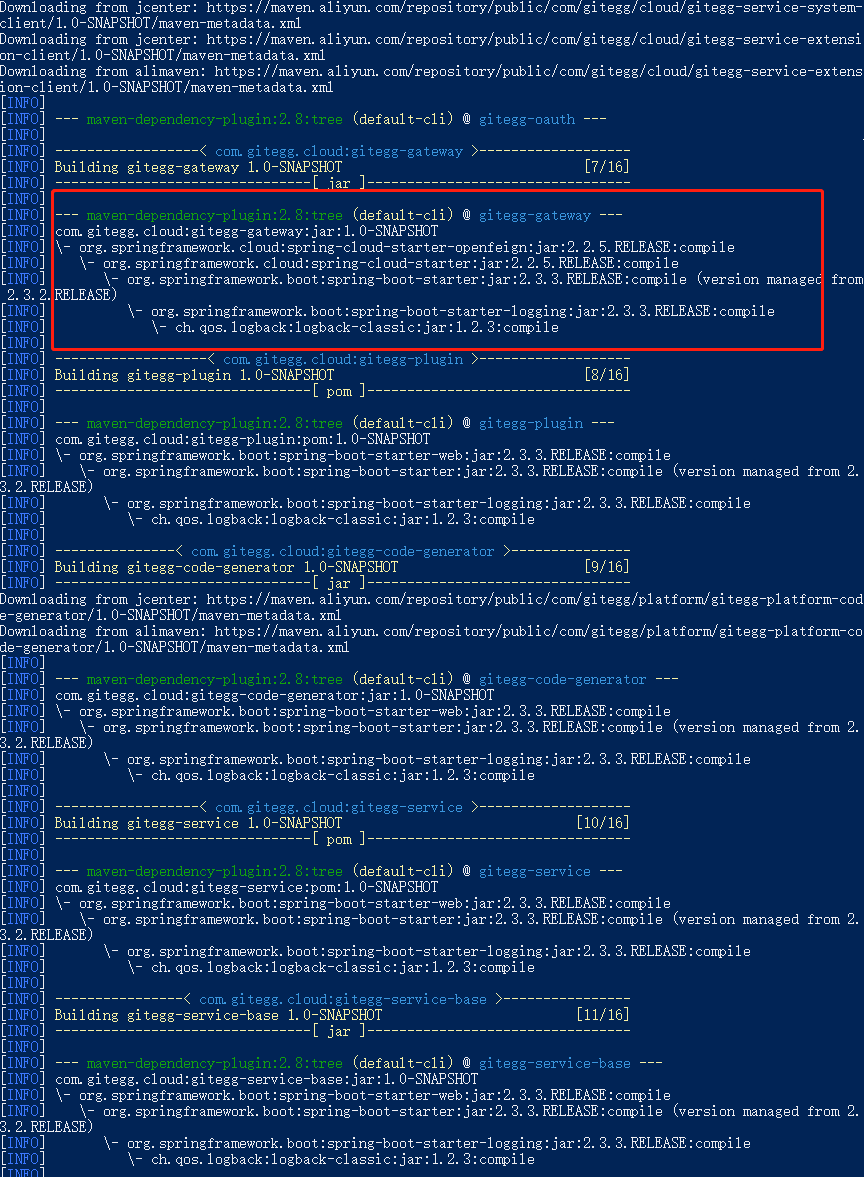

Many Spring Boot sub-dependencies and other jars also depend on Logback. Use Maven to locate every jar that pulls in Logback and exclude them one by one. Run the following Maven command in the project folder:

```shell
mvn dependency:tree -Dverbose -Dincludes="ch.qos.logback:logback-classic"
```

The jars shown in the figure above all depend on Logback and must be excluded; otherwise they conflict with Log4j2.

2. Add the SkyWalking dependency that prints the trace ID (TraceId) in logs

```xml
<!-- Version of the SkyWalking Log4j2 trace ID toolkit -->
<skywalking.log4j2.version>6.4.0</skywalking.log4j2.version>

<!-- SkyWalking Log4j2 trace ID toolkit -->
<dependency>
    <groupId>org.apache.skywalking</groupId>
    <artifactId>apm-toolkit-log4j-2.x</artifactId>
    <version>${skywalking.log4j2.version}</version>
</dependency>
```

3. Log4j2 configuration example

Create the required log4j2.xml. Adding `[%traceId]` to the pattern displays the SkyWalking trace ID. To read settings from Spring Boot's YAML configuration, you must add the log4j-spring-boot dependency.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!-- Log levels by priority: OFF > FATAL > ERROR > WARN > INFO > DEBUG > TRACE > ALL -->
<configuration monitorInterval="5" packages="org.apache.skywalking.apm.toolkit.log.log4j.v2.x">
    <!-- Variables -->
    <Properties>
        <!-- Output format: %d date, %traceId SkyWalking trace ID, %t thread name, %-5level level left-aligned in 5 characters, %msg message, %n newline -->
        <!-- %c logger (class) name, %M method name, %pid process ID, %line / %L line where the log was printed -->
        <!-- %c{1.} abbreviates every package segment of the logger name to one character -->
        <!-- value="${LOCAL_IP_HOSTNAME} %date [%p] %C [%thread] pid:%pid line:%line %throwable %c{10} %m%n"/>-->
        <property name="CONSOLE_LOG_PATTERN"
                  value="%d %highlight{%-5level [%traceId] pid:%pid-%line}{ERROR=Bright RED, WARN=Bright Yellow, INFO=Bright Green, DEBUG=Bright Cyan, TRACE=Bright White} %style{[%t]}{bright,magenta} %style{%c{1.}.%M(%L)}{cyan}: %msg%n"/>
        <!-- Identical to the console pattern, so the color codes are also written into the log files -->
        <property name="LOG_PATTERN"
                  value="%d %highlight{%-5level [%traceId] pid:%pid-%line}{ERROR=Bright RED, WARN=Bright Yellow, INFO=Bright Green, DEBUG=Bright Cyan, TRACE=Bright White} %style{[%t]}{bright,magenta} %style{%c{1.}.%M(%L)}{cyan}: %msg%n"/>
        <!-- Log directory, hard-coded here; with log4j-spring-boot it can instead be read from logging.file.path in application.yaml -->
        <property name="FILE_PATH" value="/var/log"/>
        <property name="FILE_STORE_MAX" value="50MB"/>
        <property name="FILE_WRITE_INTERVAL" value="1"/>
        <property name="LOG_MAX_HISTORY" value="60"/>
    </Properties>

    <appenders>
        <!-- Console output -->
        <console name="Console" target="SYSTEM_OUT">
            <!-- Output format -->
            <PatternLayout pattern="${CONSOLE_LOG_PATTERN}"/>
            <!-- Output only this level and above (onMatch); reject everything else (onMismatch) -->
            <ThresholdFilter level="info" onMatch="ACCEPT" onMismatch="DENY"/>
        </console>

        <!-- Writes INFO and more severe events. When the file exceeds FILE_STORE_MAX or the date changes, it is rolled over into a gzip archive named by filePattern -->
        <RollingRandomAccessFile name="RollingFileInfo" fileName="${FILE_PATH}/info.log"
                                 filePattern="${FILE_PATH}/INFO-%d{yyyy-MM-dd}_%i.log.gz">
            <!-- Accept only this level and above (onMatch); reject everything else (onMismatch) -->
            <ThresholdFilter level="info" onMatch="ACCEPT" onMismatch="DENY"/>
            <PatternLayout pattern="${LOG_PATTERN}"/>
            <Policies>
                <!-- interval counts units of the most specific time unit in filePattern (days for yyyy-MM-dd); default 1 -->
                <TimeBasedTriggeringPolicy interval="${FILE_WRITE_INTERVAL}"/>
                <SizeBasedTriggeringPolicy size="${FILE_STORE_MAX}"/>
            </Policies>
            <!-- max caps the %i counter within one date (default 7); it does not limit how many days of archives are kept -->
            <DefaultRolloverStrategy max="${LOG_MAX_HISTORY}"/>
        </RollingRandomAccessFile>

        <!-- Writes DEBUG and more severe events; rollover as above -->
        <RollingRandomAccessFile name="RollingFileDebug" fileName="${FILE_PATH}/debug.log"
                                 filePattern="${FILE_PATH}/DEBUG-%d{yyyy-MM-dd}_%i.log.gz">
            <ThresholdFilter level="debug" onMatch="ACCEPT" onMismatch="DENY"/>
            <PatternLayout pattern="${LOG_PATTERN}"/>
            <Policies>
                <TimeBasedTriggeringPolicy interval="${FILE_WRITE_INTERVAL}"/>
                <SizeBasedTriggeringPolicy size="${FILE_STORE_MAX}"/>
            </Policies>
            <DefaultRolloverStrategy max="${LOG_MAX_HISTORY}"/>
        </RollingRandomAccessFile>

        <!-- Writes WARN and more severe events; rollover as above -->
        <RollingRandomAccessFile name="RollingFileWarn" fileName="${FILE_PATH}/warn.log"
                                 filePattern="${FILE_PATH}/WARN-%d{yyyy-MM-dd}_%i.log.gz">
            <ThresholdFilter level="warn" onMatch="ACCEPT" onMismatch="DENY"/>
            <PatternLayout pattern="${LOG_PATTERN}"/>
            <Policies>
                <TimeBasedTriggeringPolicy interval="${FILE_WRITE_INTERVAL}"/>
                <SizeBasedTriggeringPolicy size="${FILE_STORE_MAX}"/>
            </Policies>
            <DefaultRolloverStrategy max="${LOG_MAX_HISTORY}"/>
        </RollingRandomAccessFile>

        <!-- Writes ERROR and more severe events; rollover as above -->
        <RollingRandomAccessFile name="RollingFileError" fileName="${FILE_PATH}/error.log"
                                 filePattern="${FILE_PATH}/ERROR-%d{yyyy-MM-dd}_%i.log.gz">
            <ThresholdFilter level="error" onMatch="ACCEPT" onMismatch="DENY"/>
            <PatternLayout pattern="${LOG_PATTERN}"/>
            <Policies>
                <TimeBasedTriggeringPolicy interval="${FILE_WRITE_INTERVAL}"/>
                <SizeBasedTriggeringPolicy size="${FILE_STORE_MAX}"/>
            </Policies>
            <DefaultRolloverStrategy max="${LOG_MAX_HISTORY}"/>
        </RollingRandomAccessFile>
    </appenders>

    <!-- Logger nodes configure logging separately, e.g. a different level for the classes of a given package -->
    <!-- An appender takes effect only if a logger references it -->
    <loggers>
        <!-- Filter out unneeded DEBUG output from Spring and MyBatis -->
        <logger name="org.mybatis" level="info" additivity="false">
            <AppenderRef ref="Console"/>
        </logger>
        <!-- With additivity="false", a logger writes only to its own appenders, not to its parent logger's -->
        <Logger name="org.springframework" level="info" additivity="false">
            <AppenderRef ref="Console"/>
        </Logger>
        <!-- Applies to the logger named "AsyncLogger" and its descendants -->
        <AsyncLogger name="AsyncLogger" level="debug" additivity="false">
            <AppenderRef ref="Console"/>
            <AppenderRef ref="RollingFileDebug"/>
            <AppenderRef ref="RollingFileInfo"/>
            <AppenderRef ref="RollingFileWarn"/>
            <AppenderRef ref="RollingFileError"/>
        </AsyncLogger>
        <root level="trace">
            <AppenderRef ref="Console"/>
            <AppenderRef ref="RollingFileDebug"/>
            <AppenderRef ref="RollingFileInfo"/>
            <AppenderRef ref="RollingFileWarn"/>
            <AppenderRef ref="RollingFileError"/>
        </root>
    </loggers>
</configuration>
```

4. Show colored logs in the IDEA console

The `*_LOG_PATTERN` properties in log4j2.xml above already assign a color to each level, but IDEA does not show the colors by default. To enable them:

1. Click Edit Configurations in the run configuration drop-down in the upper right.
2. Add `-Dlog4j.skipJansi=false` to VM options.
3. Run the application again.

The IDEA console then shows colored logs.

2, Custom log levels for configurable log storage

Although Log4j2 can save logs to MySQL, MongoDB, and other databases, we do not recommend letting Log4j2 write to them directly. Every microservice that includes the Log4j2 component would add its own database connection pool. Reusing a business system's existing pool is not an option, because not every microservice needs a log table. Under high concurrency, this can become a fatal problem for the whole business system. Conversely, if the project has little traffic and few operations, reconsider whether the business system needs a microservice architecture at all. Avoiding it reduces maintenance complexity and keeps you from introducing too many components and environments.

Under high concurrency, we recommend two ways to record operation logs:

- Send logs to a message queue that buffers them for the database, and have consumers save them to the database in batches. This reduces coupling and keeps logging from affecting business operations as much as possible.
- Use Log4j2's asynchronous file logging together with an ELK log collection and analysis system to store operation logs.
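The first recommendation, a queue between business code and the database, can be sketched with the JDK alone. This is an illustration under assumptions, not the article's implementation:

- An in-memory `ArrayBlockingQueue` stands in for the message middleware, a role Kafka plays in the article's setup.
- The producer uses `offer`, so a full buffer drops the log instead of blocking the business thread.
- The consumer drains up to `batchSize` entries per round, so the database sees one batch insert rather than many single inserts.

`BufferedLogWriter` and the `batchWriter` callback (standing in for, e.g., a JDBC batch insert) are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

/** Sketch: an in-memory buffer standing in for a message queue between business code and the log database. */
class BufferedLogWriter {
    private final BlockingQueue<String> queue;
    private final int batchSize;
    private final Consumer<List<String>> batchWriter; // hypothetical, e.g. a JDBC batch insert

    BufferedLogWriter(int capacity, int batchSize, Consumer<List<String>> batchWriter) {
        this.queue = new ArrayBlockingQueue<>(capacity);
        this.batchSize = batchSize;
        this.batchWriter = batchWriter;
    }

    /** Producer side: never blocks the business thread; returns false (log dropped) if the buffer is full. */
    boolean submit(String logJson) {
        return queue.offer(logJson);
    }

    /** Consumer side: waits up to the timeout for one entry, then drains up to batchSize entries and writes them as one batch. */
    int drainOnce(long timeout, TimeUnit unit) {
        String first;
        try {
            first = queue.poll(timeout, unit);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt for the consumer loop
            return 0;
        }
        if (first == null) {
            return 0; // nothing arrived within the timeout
        }
        List<String> batch = new ArrayList<>(batchSize);
        batch.add(first);
        queue.drainTo(batch, batchSize - 1); // take whatever else is already waiting, up to the batch size
        batchWriter.accept(batch);
        return batch.size();
    }
}
```

A consumer thread would call `drainOnce` in a loop. Dropping logs on overflow is a design choice. Blocking with a timeout, or spilling to a file, are alternatives when losing operation logs is unacceptable.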

1. Custom log levels for operation logs and interface access logs

The default log levels don't meet our needs for recording operation logs and interface logs, so we extend Log4j2's levels with custom ones.

Create a LogLevelConstant class that defines the custom levels:

```java
import org.apache.logging.log4j.Level;

/**
 * Custom log levels.
 * Log4j2 intLevel values (more severe = smaller number): OFF 0, FATAL 100, ERROR 200, WARN 300, INFO 400, DEBUG 500.
 * OPERATION (310) and API (320) sit between WARN and INFO.
 * @author GitEgg
 */
public class LogLevelConstant {

    /** Operation log level */
    public static final Level OPERATION_LEVEL = Level.forName("OPERATION", 310);

    /** Interface (API) log level */
    public static final Level API_LEVEL = Level.forName("API", 320);

    /** Operation log message template; {} is replaced with the log content */
    public static final String OPERATION_LEVEL_MESSAGE = "{type:'operation', content:{}}";

    /** Interface log message template; {} is replaced with the log content */
    public static final String API_LEVEL_MESSAGE = "{type:'api', content:{}}";
}
```

When logging at these levels, use Lombok's @Log4j2 annotation instead of @Slf4j. The SLF4J logger provided by @Slf4j has no method that accepts a custom level. Test code:

```java
log.log(LogLevelConstant.OPERATION_LEVEL, "Operation log: {} , {}", "Parameter 1", "Parameter 2");
log.log(LogLevelConstant.API_LEVEL, "Interface log: {} , {}", "Parameter 1", "Parameter 2");
```
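`OPERATION` (310) and `API` (320) sit between WARN (300) and INFO (400). As a result, the `ThresholdFilter` appenders in the configuration above also react to them, because a `ThresholdFilter` accepts any event whose `intLevel` is less than or equal to its threshold's. The JDK-only sketch below models that comparison for those appenders. The class name `CustomLevelRouting` and its methods are hypothetical; Log4j2 itself performs the check with `Level.isMoreSpecificThan`.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Models how the custom levels interact with ThresholdFilter; numbers mirror Log4j2's intLevel values. */
class CustomLevelRouting {
    static final int ERROR = 200, WARN = 300, INFO = 400, DEBUG = 500;
    static final int OPERATION = 310; // Level.forName("OPERATION", 310)
    static final int API = 320;       // Level.forName("API", 320)

    /** Mirrors ThresholdFilter: accept when the event is at least as severe as the threshold (smaller or equal intLevel). */
    static boolean thresholdAccepts(int thresholdLevel, int eventLevel) {
        return eventLevel <= thresholdLevel;
    }

    /** Which ThresholdFilter-based appenders of the example configuration accept an event at this level. */
    static Map<String, Boolean> route(int eventLevel) {
        Map<String, Boolean> result = new LinkedHashMap<>();
        result.put("Console (info)", thresholdAccepts(INFO, eventLevel));
        result.put("RollingFileDebug (debug)", thresholdAccepts(DEBUG, eventLevel));
        result.put("RollingFileInfo (info)", thresholdAccepts(INFO, eventLevel));
        result.put("RollingFileWarn (warn)", thresholdAccepts(WARN, eventLevel));
        result.put("RollingFileError (error)", thresholdAccepts(ERROR, eventLevel));
        return result;
    }

    public static void main(String[] args) {
        System.out.println("OPERATION -> " + route(OPERATION));
        System.out.println("API -> " + route(API));
    }
}
```

With that configuration, operation and interface logs also appear on the console and in info.log and debug.log, but not in warn.log or error.log. If that duplication is unwanted, those appenders need an additional filter.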

2. Custom operation log annotations

We don't want to write logging code directly into business code when recording operation logs. Instead, we define custom annotations and record the operation log through them. Following Spring AOP's advice types, we define three annotations:

- BeforeLog: records before the method executes.
- AfterLog: records after the method returns.
- AroundLog: records both before and after the method executes.

BeforeLog

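```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

/**
 * @ClassName: BeforeLog
 * @Description: Records a log entry before the method executes
 * @author GitEgg
 * @date 2019-04-27 3:36:29 PM
 */
@Retention(RetentionPolicy.RUNTIME)
@Target({ ElementType.METHOD })
public @interface BeforeLog {
    /** Operation name written to the log */
    String name() default "";
}
```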

AfterLog

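```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

/**
 * @ClassName: AfterLog
 * @Description: Records a log entry after the method returns
 * @author GitEgg
 * @date 2019-04-27 3:36:29 PM
 */
@Retention(RetentionPolicy.RUNTIME)
@Target({ ElementType.METHOD })
public @interface AfterLog {
    /** Operation name written to the log */
    String name() default "";
}
```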

AroundLog

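```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

/**
 * @ClassName: AroundLog
 * @Description: Records a log entry around (before and after) the method
 * @author GitEgg
 * @date 2019-04-27 3:36:29 PM
 */
@Retention(RetentionPolicy.RUNTIME)
@Target({ ElementType.METHOD })
public @interface AroundLog {
    /** Operation name written to the log */
    String name() default "";
}
```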
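Spring AOP applies such annotations by placing a proxy in front of the bean and running advice around the methods that carry the annotation. The JDK-only sketch below shows the same idea with `java.lang.reflect.Proxy`. The `Audited` annotation, `UserService`, `AuditHandler`, and `AuditDemo` are hypothetical stand-ins for `AroundLog`, a business service, and `LogAspect`. This is not how Spring builds its proxies (Spring Boot typically uses CGLIB subclass proxies); it only illustrates around advice driven by a runtime annotation.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical annotation playing the role of AroundLog in this sketch. */
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface Audited {
    String name() default "";
}

interface UserService {
    String rename(String userId, String newName);

    String ping();
}

class UserServiceImpl implements UserService {
    @Audited(name = "Rename user")
    public String rename(String userId, String newName) {
        return userId + ":" + newName;
    }

    public String ping() { // not annotated, so it is not logged
        return "pong";
    }
}

/** Around-style handler: records name, input and output only for methods whose implementation carries @Audited. */
class AuditHandler implements InvocationHandler {
    private final Object target;
    final List<String> records = new ArrayList<>();

    AuditHandler(Object target) {
        this.target = target;
    }

    @Override
    public Object invoke(Object proxy, Method method, Object[] args) throws Throwable {
        // The proxy sees the interface method; read the annotation from the implementation method
        Method impl = target.getClass().getMethod(method.getName(), method.getParameterTypes());
        Audited audited = impl.getAnnotation(Audited.class);
        Object result;
        try {
            result = method.invoke(target, args); // proceed with the real call
        } catch (InvocationTargetException e) {
            throw e.getCause(); // let the business exception reach the caller unchanged
        }
        if (audited != null) {
            records.add(audited.name() + " " + Arrays.toString(args) + " -> " + result);
        }
        return result;
    }
}

class AuditDemo {
    static UserService proxy(AuditHandler handler) {
        return (UserService) Proxy.newProxyInstance(
                UserService.class.getClassLoader(), new Class<?>[] {UserService.class}, handler);
    }

    public static void main(String[] args) {
        AuditHandler handler = new AuditHandler(new UserServiceImpl());
        UserService service = proxy(handler);
        service.rename("42", "alice");
        service.ping();
        System.out.println(handler.records); // [Rename user [42, alice] -> 42:alice]
    }
}
```

As with Spring AOP, only calls that go through the proxy are intercepted. A method that calls another annotated method on `this` bypasses the advice.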

With the annotations defined, implement the logging aspect LogAspect:

```java
import com.gitegg.platform.base.annotation.log.AfterLog;
import com.gitegg.platform.base.annotation.log.AroundLog;
import com.gitegg.platform.base.annotation.log.BeforeLog;
import lombok.extern.log4j.Log4j2;
import org.aspectj.lang.JoinPoint;
import org.aspectj.lang.ProceedingJoinPoint;
import org.aspectj.lang.annotation.AfterReturning;
import org.aspectj.lang.annotation.Around;
import org.aspectj.lang.annotation.Aspect;
import org.aspectj.lang.annotation.Before;
import org.aspectj.lang.annotation.Pointcut;
import org.aspectj.lang.reflect.MethodSignature;
import org.springframework.stereotype.Component;
import org.springframework.web.context.request.RequestContextHolder;
import org.springframework.web.context.request.ServletRequestAttributes;

import javax.servlet.ServletRequest;
import javax.servlet.ServletResponse;
import java.lang.reflect.Method;
// GitEggLog, JsonUtils and LogLevelConstant are project classes; their imports are omitted here.

/**
 * @ClassName: LogAspect
 * @Description: Records operation logs for methods annotated with BeforeLog, AfterLog or AroundLog
 * @author GitEgg
 * @date 2019-04-27 4:02:12 PM
 */
@Log4j2
@Aspect
@Component
public class LogAspect {

    /** Pointcut for @BeforeLog */
    @Pointcut("@annotation(com.gitegg.platform.base.annotation.log.BeforeLog)")
    public void beforeAspect() {
    }

    /** Pointcut for @AfterLog */
    @Pointcut("@annotation(com.gitegg.platform.base.annotation.log.AfterLog)")
    public void afterAspect() {
    }

    /** Pointcut for @AroundLog */
    @Pointcut("@annotation(com.gitegg.platform.base.annotation.log.AroundLog)")
    public void aroundAspect() {
    }

    /**
     * Before advice: records the user's operation before the method runs.
     *
     * @param joinPoint join point
     */
    @Before("beforeAspect()")
    public void doBefore(JoinPoint joinPoint) {
        try {
            String inParams = buildInParams(joinPoint.getArgs());
            String operationName = getBeforeLogName(getMethod(joinPoint));
            // "BeforeLog" takes the place of the output parameters, which do not exist yet
            addSysLog(joinPoint, inParams, "BeforeLog", operationName);
        } catch (Exception e) {
            log.error("doBefore logging exception", e);
        }
    }

    /**
     * After-returning advice: records the user's operation after the method returns.
     *
     * @param joinPoint join point
     * @param returnObj value returned by the method
     */
    @AfterReturning(value = "afterAspect()", returning = "returnObj")
    public void doAfter(JoinPoint joinPoint, Object returnObj) {
        try {
            String outParams = JsonUtils.objToJson(returnObj);
            String operationName = getAfterLogName(getMethod(joinPoint));
            // "AfterLog" takes the place of the input parameters, which are not recorded here
            addSysLog(joinPoint, "AfterLog", outParams, operationName);
        } catch (Exception e) {
            log.error("doAfter logging exception", e);
        }
    }

    /**
     * Around advice: records the input and output of the intercepted method.
     * Exceptions thrown by the target method reach the caller unchanged; logging failures are only logged.
     *
     * @param joinPoint join point
     * @throws Throwable whatever the target method throws
     */
    @Around("aroundAspect()")
    public Object doAround(ProceedingJoinPoint joinPoint) throws Throwable {
        Object[] args = joinPoint.getArgs();
        String inParams = "";
        try {
            inParams = buildInParams(args);
        } catch (Exception e) {
            log.error("doAround failed to serialize input parameters", e);
        }
        // Execute the target method exactly once
        Object value = joinPoint.proceed(args);
        try {
            String outParams = JsonUtils.objToJson(value);
            String operationName = getAroundLogName(getMethod(joinPoint));
            addSysLog(joinPoint, inParams, outParams, operationName);
        } catch (Exception e) {
            // A logging failure does not affect the business result
            log.error("doAround logging exception", e);
        }
        return value;
    }

    /** Serializes the arguments to JSON, skipping nulls and servlet request/response objects. */
    private String buildInParams(Object[] args) throws Exception {
        StringBuilder inParams = new StringBuilder();
        for (Object obj : args) {
            if (obj != null && !(obj instanceof ServletRequest) && !(obj instanceof ServletResponse)) {
                inParams.append(JsonUtils.objToJson(obj));
            }
        }
        return inParams.toString();
    }

    /** Writes the operation log at the custom OPERATION level. */
    public void addSysLog(JoinPoint joinPoint, String inParams, String outParams, String operationName) throws Exception {
        GitEggLog gitEggLog = new GitEggLog();
        gitEggLog.setMethodName(joinPoint.getSignature().getName());
        gitEggLog.setInParams(inParams);
        gitEggLog.setOutParams(outParams);
        gitEggLog.setOperationName(operationName);
        // There is no current request outside an HTTP call (e.g. scheduled tasks), so guard against null
        ServletRequestAttributes attributes = (ServletRequestAttributes) RequestContextHolder.getRequestAttributes();
        if (attributes != null) {
            gitEggLog.setOperationIp(attributes.getRequest().getRemoteAddr());
        }
        log.log(LogLevelConstant.OPERATION_LEVEL, LogLevelConstant.OPERATION_LEVEL_MESSAGE, JsonUtils.objToJson(gitEggLog));
    }

    /**
     * Gets the intercepted method, resolved on the target class so that its annotations are visible.
     *
     * @param joinPoint join point
     * @return the intercepted method
     */
    public Method getMethod(JoinPoint joinPoint) throws NoSuchMethodException {
        // The signature identifies the exact overload; no need to match by name and parameter count
        Method method = ((MethodSignature) joinPoint.getSignature()).getMethod();
        return joinPoint.getTarget().getClass().getMethod(method.getName(), method.getParameterTypes());
    }

    /** Gets the operation name from @BeforeLog */
    public String getBeforeLogName(Method method) {
        return method.getAnnotation(BeforeLog.class).name();
    }

    /** Gets the operation name from @AfterLog */
    public String getAfterLogName(Method method) {
        return method.getAnnotation(AfterLog.class).name();
    }

    /** Gets the operation name from @AroundLog */
    public String getAroundLogName(Method method) {
        return method.getAnnotation(AroundLog.class).name();
    }
}
```
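Looking up the intercepted method by name and parameter count alone is fragile. Two overloads with the same number of parameters both match, and `Class.getMethods()` returns methods in no particular order. The JDK-only sketch below shows the ambiguity and the exact lookup by parameter types. An aspect gets that exact lookup through `((MethodSignature) joinPoint.getSignature()).getMethod()`. `OrderQueryController` and `MethodLookup` are hypothetical names.

```java
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical controller with two single-argument overloads. */
class OrderQueryController {
    public String query(String orderNo) {
        return "by number";
    }

    public String query(Long orderId) {
        return "by id";
    }
}

class MethodLookup {
    /** Name + parameter count matching: may return several candidates in unspecified order. */
    static List<Method> candidatesByNameAndArity(Class<?> type, String name, int arity) {
        List<Method> result = new ArrayList<>();
        for (Method m : type.getMethods()) {
            if (m.getName().equals(name) && m.getParameterCount() == arity) {
                result.add(m);
            }
        }
        return result;
    }

    /** Exact lookup when the parameter types are known, as they are from the join point's signature. */
    static Method exact(Class<?> type, String name, Class<?>... parameterTypes) {
        try {
            return type.getMethod(name, parameterTypes);
        } catch (NoSuchMethodException e) {
            throw new IllegalArgumentException("No public method " + name + " on " + type.getName(), e);
        }
    }
}
```

With name-and-count matching, the first candidate wins, and an annotation read from the wrong overload yields the wrong operation name or a `NullPointerException`.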

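Once the code above is in place, configure the custom levels in log4j2.xml so that logs at these levels are written to dedicated files. log4j2.xml may be parsed before the LogLevelConstant class is loaded. It is therefore safer to also declare the levels directly under `<configuration>`, so that the filters below can resolve the OPERATION and API names:

`<CustomLevels><CustomLevel name="OPERATION" intLevel="310"/><CustomLevel name="API" intLevel="320"/></CustomLevels>`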

```xml
<!-- Writes only OPERATION-level events. When the file exceeds FILE_STORE_MAX or the date changes, it is rolled over into a gzip archive named by filePattern -->
<RollingRandomAccessFile name="RollingFileOperation" fileName="${FILE_PATH}/operation.log"
                         filePattern="${FILE_PATH}/OPERATION-%d{yyyy-MM-dd}_%i.log.gz">
    <!-- Accept only OPERATION-level events (onMatch); reject everything else (onMismatch) -->
    <LevelRangeFilter minLevel="OPERATION" maxLevel="OPERATION" onMatch="ACCEPT" onMismatch="DENY"/>
    <PatternLayout pattern="${LOG_PATTERN}"/>
    <Policies>
        <!-- interval counts units of the most specific time unit in filePattern (days for yyyy-MM-dd); default 1 -->
        <TimeBasedTriggeringPolicy interval="${FILE_WRITE_INTERVAL}"/>
        <SizeBasedTriggeringPolicy size="${FILE_STORE_MAX}"/>
    </Policies>
    <!-- max caps the %i counter within one date (default 7) -->
    <DefaultRolloverStrategy max="${LOG_MAX_HISTORY}"/>
</RollingRandomAccessFile>

<!-- Writes only API-level events; rollover as above -->
<RollingRandomAccessFile name="RollingFileApi" fileName="${FILE_PATH}/api.log"
                         filePattern="${FILE_PATH}/API-%d{yyyy-MM-dd}_%i.log.gz">
    <!-- Accept only API-level events (onMatch); reject everything else (onMismatch) -->
    <LevelRangeFilter minLevel="API" maxLevel="API" onMatch="ACCEPT" onMismatch="DENY"/>
    <PatternLayout pattern="${LOG_PATTERN}"/>
    <Policies>
        <TimeBasedTriggeringPolicy interval="${FILE_WRITE_INTERVAL}"/>
        <SizeBasedTriggeringPolicy size="${FILE_STORE_MAX}"/>
    </Policies>
    <DefaultRolloverStrategy max="${LOG_MAX_HISTORY}"/>
</RollingRandomAccessFile>

<loggers>
    <AsyncLogger name="AsyncLogger" level="debug" additivity="false">
        <AppenderRef ref="Console"/>
        <AppenderRef ref="RollingFileDebug"/>
        <AppenderRef ref="RollingFileInfo"/>
        <AppenderRef ref="RollingFileWarn"/>
        <AppenderRef ref="RollingFileError"/>
        <AppenderRef ref="RollingFileOperation"/>
        <AppenderRef ref="RollingFileApi"/>
    </AsyncLogger>
    <root level="trace">
        <AppenderRef ref="Console"/>
        <AppenderRef ref="RollingFileDebug"/>
        <AppenderRef ref="RollingFileInfo"/>
        <AppenderRef ref="RollingFileWarn"/>
        <AppenderRef ref="RollingFileError"/>
        <AppenderRef ref="RollingFileOperation"/>
        <AppenderRef ref="RollingFileApi"/>
    </root>
</loggers>
```
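`RollingFileOperation` and `RollingFileApi` each use a `LevelRangeFilter` whose `minLevel` and `maxLevel` are the same custom level. Each file therefore receives exactly one level, unlike the `ThresholdFilter` files, which accept a level and everything more severe. The JDK-only sketch models the range check on `intLevel` numbers (310 for OPERATION, 320 for API, as in `LogLevelConstant`). `ExactLevelRouting` is a hypothetical name, and the inclusive range check is a simplification of Log4j2's `LevelRangeFilter`.

```java
import java.util.ArrayList;
import java.util.List;

/** Models LevelRangeFilter with minLevel == maxLevel, as used by RollingFileOperation and RollingFileApi. */
class ExactLevelRouting {
    static final int WARN = 300, OPERATION = 310, API = 320, INFO = 400;

    /** Accept when the event's intLevel lies between the two bounds, inclusive. */
    static boolean rangeAccepts(int lowerIntLevel, int upperIntLevel, int eventLevel) {
        return eventLevel >= lowerIntLevel && eventLevel <= upperIntLevel;
    }

    /** Dedicated files that accept an event at the given level. */
    static List<String> filesFor(int eventLevel) {
        List<String> files = new ArrayList<>();
        if (rangeAccepts(OPERATION, OPERATION, eventLevel)) {
            files.add("operation.log");
        }
        if (rangeAccepts(API, API, eventLevel)) {
            files.add("api.log");
        }
        return files;
    }
}
```

Because the bounds coincide, a WARN or INFO event never reaches operation.log or api.log, and each custom level lands in exactly one dedicated file.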

3. Save logs to Kafka

The configuration so far covers our basic requirements for the logging system. Next, we use the Log4j2 configuration file to choose dynamically whether logs go to a specified file or to message middleware.

Log4j2 needs the Kafka client jar to send log messages to Kafka, so first add the kafka-clients dependency:

```xml
<!-- kafka-clients version required by the Log4j2 Kafka appender -->
<kafka.clients.version>3.1.0</kafka.clients.version>

<!-- Log4j2 Kafka appender -->
<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-clients</artifactId>
    <version>${kafka.clients.version}</version>
</dependency>
```

Modify log4j2.xml to send operation logs to Kafka. The Log4j2 documentation warns that the `org.apache.kafka` logger must not log to a Kafka appender at DEBUG level. The logger entry below is therefore required; otherwise logging becomes recursive.

```xml
<!-- ignoreExceptions="false" propagates send failures to the caller instead of logging and ignoring them; it is required when wrapping the appender in a Failover appender -->
<Kafka name="KafkaOperationLog" topic="operation_log" ignoreExceptions="false">
    <!-- Send only OPERATION-level events to the operation_log topic -->
    <LevelRangeFilter minLevel="OPERATION" maxLevel="OPERATION" onMatch="ACCEPT" onMismatch="DENY"/>
    <PatternLayout pattern="${LOG_PATTERN}"/>
    <Property name="bootstrap.servers">172.16.20.220:9092,172.16.20.221:9092,172.16.20.222:9092</Property>
    <!-- Producer setting: block for at most 2 s, e.g. while waiting for metadata when the cluster is unreachable -->
    <Property name="max.block.ms">2000</Property>
</Kafka>

<Kafka name="KafkaApiLog" topic="api_log" ignoreExceptions="false">
    <!-- Send only API-level events to the api_log topic -->
    <LevelRangeFilter minLevel="API" maxLevel="API" onMatch="ACCEPT" onMismatch="DENY"/>
    <PatternLayout pattern="${LOG_PATTERN}"/>
    <Property name="bootstrap.servers">172.16.20.220:9092,172.16.20.221:9092,172.16.20.222:9092</Property>
    <Property name="max.block.ms">2000</Property>
</Kafka>

<!-- Logger nodes configure logging separately, e.g. a different level for the classes of a given package -->
<!-- An appender takes effect only if a logger references it -->
<loggers>
    <!-- Filter out unneeded DEBUG output from Spring and MyBatis -->
    <logger name="org.mybatis" level="info" additivity="false">
        <AppenderRef ref="Console"/>
    </logger>
    <!-- With additivity="false", a logger writes only to its own appenders, not to its parent logger's -->
    <Logger name="org.springframework" level="info" additivity="false">
        <AppenderRef ref="Console"/>
    </Logger>
    <!-- Avoid recursive logging: keep the Kafka client's DEBUG output away from the Kafka appenders -->
    <Logger name="org.apache.kafka" level="INFO" />
    <AsyncLogger name="AsyncLogger" level="debug" additivity="false">
        <AppenderRef ref="Console"/>
        <AppenderRef ref="RollingFileDebug"/>
        <AppenderRef ref="RollingFileInfo"/>
        <AppenderRef ref="RollingFileWarn"/>
        <AppenderRef ref="RollingFileError"/>
        <AppenderRef ref="RollingFileOperation"/>
        <AppenderRef ref="RollingFileApi"/>
        <AppenderRef ref="KafkaOperationLog"/>
        <AppenderRef ref="KafkaApiLog"/>
    </AsyncLogger>
    <root level="trace">
        <AppenderRef ref="Console"/>
        <AppenderRef ref="RollingFileDebug"/>
        <AppenderRef ref="RollingFileInfo"/>
        <AppenderRef ref="RollingFileWarn"/>
        <AppenderRef ref="RollingFileError"/>
        <AppenderRef ref="RollingFileOperation"/>
        <AppenderRef ref="RollingFileApi"/>
        <AppenderRef ref="KafkaOperationLog"/>
        <AppenderRef ref="KafkaApiLog"/>
    </root>
</loggers>
```
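The `org.apache.kafka` logger entry guards against a feedback loop. While the Kafka appender sends an event, the Kafka client may log messages of its own. If those events reach the Kafka appender again, each send triggers another one. The JDK-only sketch below reproduces that loop with a hypothetical `GuardedAppender`, whose send step logs back through the same appender, and breaks it with a per-thread reentrancy flag. It only illustrates the failure mode. It is not how Log4j2's Kafka appender is implemented, and the article's fix remains the logger configuration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Toy appender whose "send" step itself produces log events, like a Kafka client logging at DEBUG. */
class GuardedAppender {
    private final ThreadLocal<Boolean> inAppend = ThreadLocal.withInitial(() -> false);
    final List<String> sent = new ArrayList<>();
    final List<String> dropped = new ArrayList<>();
    private Consumer<String> clientLogger = msg -> { };

    /** Hypothetical hook: the "client library" logs through this consumer while sending. */
    void setClientLogger(Consumer<String> clientLogger) {
        this.clientLogger = clientLogger;
    }

    void append(String event) {
        if (inAppend.get()) {
            dropped.add(event); // nested call from inside send(): drop it instead of recursing
            return;
        }
        inAppend.set(true);
        try {
            send(event);
        } finally {
            inAppend.set(false);
        }
    }

    private void send(String event) {
        clientLogger.accept("DEBUG sending record: " + event); // the client logs while sending
        sent.add(event);
    }
}
```

Wiring `setClientLogger(appender::append)` routes the client's own log events back into the appender. Without the flag, a single `append` call would recurse until `StackOverflowError`.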

In summary, the complete modified log4j2.xml is shown below. Through its configuration, you can choose not to record operation logs to files:

<?xml version="1.0" encoding="UTF-8"?>

<!--Log level and prioritization: OFF > FATAL > ERROR > WARN > INFO > DEBUG > TRACE > ALL -->

<configuration monitorInterval="5" packages="org.apache.skywalking.apm.toolkit.log.log4j.v2.x">

<!--Variable configuration-->

<Properties>

<!-- Output format: %d date, %traceId SkyWalking trace ID, %t thread name, %-5level level left-aligned in 5 characters, %msg message, %n newline -->

<!-- %c logger (class) name, %M method name, %pid process ID, %line / %L line where the log was printed -->

<!-- %c{1.} abbreviates every package segment of the logger name to one character -->

<!-- value="${LOCAL_IP_HOSTNAME} %date [%p] %C [%thread] pid:%pid line:%line %throwable %c{10} %m%n"/>-->

<property name="CONSOLE_LOG_PATTERN"

value="%d %highlight{%-5level [%traceId] pid:%pid-%line}{ERROR=Bright RED, WARN=Bright Yellow, INFO=Bright Green, DEBUG=Bright Cyan, TRACE=Bright White} %style{[%t]}{bright,magenta} %style{%c{1.}.%M(%L)}{cyan}: %msg%n"/>

<property name="LOG_PATTERN"

value="%d %highlight{%-5level [%traceId] pid:%pid-%line}{ERROR=Bright RED, WARN=Bright Yellow, INFO=Bright Green, DEBUG=Bright Cyan, TRACE=Bright White} %style{[%t]}{bright,magenta} %style{%c{1.}.%M(%L)}{cyan}: %msg%n"/>

<!-- Read the log path from logging.file.path in application.yaml -->

<Property name="FILE_PATH">${spring:logging.file.path}</Property>

<!-- <property name="FILE_PATH">D:\\log4j2_cloud</property> -->

<property name="applicationName">${spring:spring.application.name}</property>

<property name="FILE_STORE_MAX" value="50MB"/>

<property name="FILE_WRITE_INTERVAL" value="1"/>

<property name="LOG_MAX_HISTORY" value="60"/>

</Properties>

<appenders>

<!-- console output -->

<console name="Console" target="SYSTEM_OUT">

<!-- Format of output log -->

<PatternLayout pattern="${CONSOLE_LOG_PATTERN}"/>

<!-- Output only events at this level or more severe (onMatch); reject all others (onMismatch) -->

<ThresholdFilter level="info" onMatch="ACCEPT" onMismatch="DENY"/>

</console>

<!-- Info log file: records INFO and more severe events. When the file exceeds FILE_STORE_MAX or the date changes, it is rolled over and gzip-compressed into an archive named by date and index -->

<RollingRandomAccessFile name="RollingFileInfo" fileName="${FILE_PATH}/info.log"

filePattern="${FILE_PATH}/INFO-%d{yyyy-MM-dd}_%i.log.gz">

<!-- Accept events at this level or more severe (onMatch); reject all others (onMismatch) -->

<ThresholdFilter level="info" onMatch="ACCEPT" onMismatch="DENY"/>

<PatternLayout pattern="${LOG_PATTERN}"/>

<Policies>

<!-- interval is counted in the most specific time unit of filePattern, i.e. days for %d{yyyy-MM-dd}; the default is 1 -->

<TimeBasedTriggeringPolicy interval="${FILE_WRITE_INTERVAL}"/>

<SizeBasedTriggeringPolicy size="${FILE_STORE_MAX}"/>

</Policies>

<!-- DefaultRolloverStrategy max is the highest %i index kept per date (7 if not set); once reached, older archives are deleted on subsequent rollovers -->

<DefaultRolloverStrategy max="${LOG_MAX_HISTORY}"/>

</RollingRandomAccessFile>

<!-- Debug log file: records DEBUG and more severe events. When the file exceeds FILE_STORE_MAX or the date changes, it is rolled over and gzip-compressed into an archive named by date and index -->

<RollingRandomAccessFile name="RollingFileDebug" fileName="${FILE_PATH}/debug.log"

filePattern="${FILE_PATH}/DEBUG-%d{yyyy-MM-dd}_%i.log.gz">

<!-- Accept events at this level or more severe (onMatch); reject all others (onMismatch) -->

<ThresholdFilter level="debug" onMatch="ACCEPT" onMismatch="DENY"/>

<PatternLayout pattern="${LOG_PATTERN}"/>

<Policies>

<!-- interval is counted in the most specific time unit of filePattern, i.e. days for %d{yyyy-MM-dd}; the default is 1 -->

<TimeBasedTriggeringPolicy interval="${FILE_WRITE_INTERVAL}"/>

<SizeBasedTriggeringPolicy size="${FILE_STORE_MAX}"/>

</Policies>

<!-- DefaultRolloverStrategy max is the highest %i index kept per date (7 if not set); once reached, older archives are deleted on subsequent rollovers -->

<DefaultRolloverStrategy max="${LOG_MAX_HISTORY}"/>

</RollingRandomAccessFile>

<!-- Warn log file: records WARN and more severe events. When the file exceeds FILE_STORE_MAX or the date changes, it is rolled over and gzip-compressed into an archive named by date and index -->

<RollingRandomAccessFile name="RollingFileWarn" fileName="${FILE_PATH}/warn.log"

filePattern="${FILE_PATH}/WARN-%d{yyyy-MM-dd}_%i.log.gz">

<!-- Accept events at this level or more severe (onMatch); reject all others (onMismatch) -->

<ThresholdFilter level="warn" onMatch="ACCEPT" onMismatch="DENY"/>

<PatternLayout pattern="${LOG_PATTERN}"/>

<Policies>

<!-- interval is counted in the most specific time unit of filePattern, i.e. days for %d{yyyy-MM-dd}; the default is 1 -->

<TimeBasedTriggeringPolicy interval="${FILE_WRITE_INTERVAL}"/>

<SizeBasedTriggeringPolicy size="${FILE_STORE_MAX}"/>

</Policies>

<!-- DefaultRolloverStrategy max is the highest %i index kept per date (7 if not set); once reached, older archives are deleted on subsequent rollovers -->

<DefaultRolloverStrategy max="${LOG_MAX_HISTORY}"/>

</RollingRandomAccessFile>

<!-- Error log file: records ERROR and more severe events. When the file exceeds FILE_STORE_MAX or the date changes, it is rolled over and gzip-compressed into an archive named by date and index -->

<RollingRandomAccessFile name="RollingFileError" fileName="${FILE_PATH}/error.log"

filePattern="${FILE_PATH}/ERROR-%d{yyyy-MM-dd}_%i.log.gz">

<!-- Accept events at this level or more severe (onMatch); reject all others (onMismatch) -->

<ThresholdFilter level="error" onMatch="ACCEPT" onMismatch="DENY"/>

<PatternLayout pattern="${LOG_PATTERN}"/>

<Policies>

<!-- interval is counted in the most specific time unit of filePattern, i.e. days for %d{yyyy-MM-dd}; the default is 1 -->

<TimeBasedTriggeringPolicy interval="${FILE_WRITE_INTERVAL}"/>

<SizeBasedTriggeringPolicy size="${FILE_STORE_MAX}"/>

</Policies>

<!-- DefaultRolloverStrategy max is the highest %i index kept per date (7 if not set); once reached, older archives are deleted on subsequent rollovers -->

<DefaultRolloverStrategy max="${LOG_MAX_HISTORY}"/>

</RollingRandomAccessFile>

<!-- Operation log file: records only events at the custom OPERATION level, rolled over and compressed the same way -->

<RollingRandomAccessFile name="RollingFileOperation" fileName="${FILE_PATH}/operation.log"

filePattern="${FILE_PATH}/OPERATION-%d{yyyy-MM-dd}_%i.log.gz">

<!-- Accept only OPERATION-level events (onMatch); reject all others (onMismatch) -->

<LevelRangeFilter minLevel="OPERATION" maxLevel="OPERATION" onMatch="ACCEPT" onMismatch="DENY"/>

<PatternLayout pattern="${LOG_PATTERN}"/>

<Policies>

<!-- interval is counted in the most specific time unit of filePattern, i.e. days for %d{yyyy-MM-dd}; the default is 1 -->

<TimeBasedTriggeringPolicy interval="${FILE_WRITE_INTERVAL}"/>

<SizeBasedTriggeringPolicy size="${FILE_STORE_MAX}"/>

</Policies>

<!-- DefaultRolloverStrategy max is the highest %i index kept per date (7 if not set); once reached, older archives are deleted on subsequent rollovers -->

<DefaultRolloverStrategy max="${LOG_MAX_HISTORY}"/>

</RollingRandomAccessFile>

<!-- API log file: records only events at the custom API level, rolled over and compressed the same way -->

<RollingRandomAccessFile name="RollingFileApi" fileName="${FILE_PATH}/api.log"

filePattern="${FILE_PATH}/API-%d{yyyy-MM-dd}_%i.log.gz">

<!-- Accept only API-level events (onMatch); reject all others (onMismatch) -->

<LevelRangeFilter minLevel="API" maxLevel="API" onMatch="ACCEPT" onMismatch="DENY"/>

<PatternLayout pattern="${LOG_PATTERN}"/>

<Policies>

<!-- interval is counted in the most specific time unit of filePattern, i.e. days for %d{yyyy-MM-dd}; the default is 1 -->

<TimeBasedTriggeringPolicy interval="${FILE_WRITE_INTERVAL}"/>

<SizeBasedTriggeringPolicy size="${FILE_STORE_MAX}"/>

</Policies>

<!-- DefaultRolloverStrategy max is the highest %i index kept per date (7 if not set); once reached, older archives are deleted on subsequent rollovers -->

<DefaultRolloverStrategy max="${LOG_MAX_HISTORY}"/>

</RollingRandomAccessFile>

<Kafka name="KafkaOperationLog" topic="operation_log" ignoreExceptions="false">

<LevelRangeFilter minLevel="OPERATION" maxLevel="OPERATION" onMatch="ACCEPT" onMismatch="DENY"/>

<PatternLayout pattern="${LOG_PATTERN}"/>

<Property name="bootstrap.servers">172.16.20.220:9092,172.16.20.221:9092,172.16.20.222:9092</Property>

<Property name="max.block.ms">2000</Property>

</Kafka>

<Kafka name="KafkaApiLog" topic="api_log" ignoreExceptions="false">

<LevelRangeFilter minLevel="API" maxLevel="API" onMatch="ACCEPT" onMismatch="DENY"/>

<PatternLayout pattern="${LOG_PATTERN}"/>

<Property name="bootstrap.servers">172.16.20.220:9092,172.16.20.221:9092,172.16.20.222:9092</Property>

<Property name="max.block.ms">2000</Property>

</Kafka>

</appenders>

<!-- A Logger node configures logging for a specific package or class separately, for example with its own log level -->

<!-- Appenders take effect only when a logger references them -->

<loggers>

<!-- Suppress noisy DEBUG output from Spring and MyBatis -->

<logger name="org.mybatis" level="info" additivity="false">

<AppenderRef ref="Console"/>

</logger>

<!-- With additivity="false", a child logger writes only to its own appenders, not to those of its parent logger -->

<Logger name="org.springframework" level="info" additivity="false">

<AppenderRef ref="Console"/>

</Logger>

<!-- Avoid recursive logging -->

<Logger name="org.apache.kafka" level="INFO" />

<AsyncLogger name="AsyncLogger" level="debug" additivity="false">

<AppenderRef ref="Console"/>

<AppenderRef ref="RollingFileDebug"/>

<AppenderRef ref="RollingFileInfo"/>

<AppenderRef ref="RollingFileWarn"/>

<AppenderRef ref="RollingFileError"/>

<AppenderRef ref="RollingFileOperation"/>

<AppenderRef ref="RollingFileApi"/>

<AppenderRef ref="KafkaOperationLog"/>

<AppenderRef ref="KafkaApiLog"/>

</AsyncLogger>

<root level="trace">

<AppenderRef ref="Console"/>

<AppenderRef ref="RollingFileDebug"/>

<AppenderRef ref="RollingFileInfo"/>

<AppenderRef ref="RollingFileWarn"/>

<AppenderRef ref="RollingFileError"/>

<AppenderRef ref="RollingFileOperation"/>

<AppenderRef ref="RollingFileApi"/>

<AppenderRef ref="KafkaOperationLog"/>

<AppenderRef ref="KafkaApiLog"/>

</root>

</loggers>

</configuration>
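The filter comments above determine which file each event lands in. The sketch below is a dependency-free model of that routing, not Log4j2 code: it reproduces the documented semantics of ThresholdFilter (accept the threshold level and anything more severe) and LevelRangeFilter (accept only levels between minLevel and maxLevel, inclusive) using Log4j2's built-in intLevel values. The intLevel values for OPERATION and API are hypothetical placeholders, because the custom levels are defined elsewhere in the project, and LevelFilterModel is an illustrative name.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class LevelFilterModel {

    // Log4j2 intLevel values: a lower number means a more severe level.
    static final Map<String, Integer> LEVELS = new LinkedHashMap<>();

    static {
        LEVELS.put("FATAL", 100);
        LEVELS.put("ERROR", 200);
        LEVELS.put("WARN", 300);
        LEVELS.put("OPERATION", 310); // hypothetical intLevel for the custom level
        LEVELS.put("API", 320);       // hypothetical intLevel for the custom level
        LEVELS.put("INFO", 400);
        LEVELS.put("DEBUG", 500);
        LEVELS.put("TRACE", 600);
    }

    // ThresholdFilter: accept the configured level and everything more severe.
    static boolean threshold(String event, String level) {
        return LEVELS.get(event) <= LEVELS.get(level);
    }

    // LevelRangeFilter: accept only levels between minLevel and maxLevel, inclusive.
    static boolean range(String event, String minLevel, String maxLevel) {
        int value = LEVELS.get(event);
        return value >= LEVELS.get(minLevel) && value <= LEVELS.get(maxLevel);
    }

    // File appenders from log4j2.xml that would accept an event of the given level.
    static List<String> fileAppendersFor(String event) {
        List<String> accepted = new ArrayList<>();
        if (threshold(event, "DEBUG")) accepted.add("RollingFileDebug");
        if (threshold(event, "INFO")) accepted.add("RollingFileInfo");
        if (threshold(event, "WARN")) accepted.add("RollingFileWarn");
        if (threshold(event, "ERROR")) accepted.add("RollingFileError");
        if (range(event, "OPERATION", "OPERATION")) accepted.add("RollingFileOperation");
        if (range(event, "API", "API")) accepted.add("RollingFileApi");
        return accepted;
    }
}
```

Under these assumed values, an OPERATION or API event also passes the debug and info threshold filters, so it would appear in debug.log and info.log as well as in its own file. Whether that happens in the real project depends on the intLevel the custom levels were registered with.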

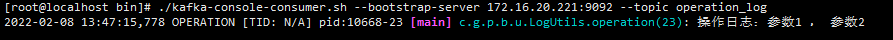

With the configuration above in place, test logging to check whether log entries are written to the asynchronous log files and to Kafka. Start a consumer on the Kafka server to see whether log entries are pushed to Kafka in real time:

4. Configurable request logging in the Gateway

In business development, besides operation logs, we usually also need interface (API) logs so that the system can run statistics and analysis on interface requests. Because the gateway forwards requests to every microservice, it is the natural place to collect API logs.

We then have to decide which services need API logs and which kinds of API logs to collect, so API log collection should be flexibly configurable. To keep configuration simple, we put these settings in the Nacos configuration center. If finer-grained customization is needed, a system configuration interface can be built and the configuration stored in the Redis cache.

Because the RequestBody and ResponseBody of a request can only be read once, the data has to be processed in a filter. The Gateway does provide AdaptCachedBodyGlobalFilter for caching the RequestBody, but besides customizing request handling we may also need the ResponseBody, so it is better to write our own filter here.
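The read-once problem is easier to see outside the reactive stack. The sketch below is a blocking java.io analogy, not Gateway code: it drains a one-shot stream once, keeps the bytes, and hands every later reader a fresh stream over the cached copy. The Gateway filters do the same thing reactively with DataBufferUtils.join and a Flux.defer that re-wraps the cached byte array. CachedBody is a hypothetical name.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

final class CachedBody {
    private final byte[] bytes;

    // Drain the one-shot stream exactly once and keep its bytes.
    CachedBody(InputStream oneShot) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        byte[] chunk = new byte[4096];
        try {
            int n;
            while ((n = oneShot.read(chunk)) != -1) {
                buffer.write(chunk, 0, n);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        this.bytes = buffer.toByteArray();
    }

    // Every call returns a fresh stream over the cached copy, so readers do not interfere.
    InputStream newStream() {
        return new ByteArrayInputStream(bytes);
    }

    // Decoded body for the log record (UTF-8 assumed).
    String asText() {
        return new String(bytes, StandardCharsets.UTF_8);
    }
}
```

The logging code reads asText() while the downstream consumer reads newStream(), which is the same split the request filter makes between GatewayContext and the decorated request.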

The open-source plug-in spring-cloud-gateway-plugin provides a very comprehensive implementation of Gateway filters for collecting request logs, and we base ours directly on it. Because the plug-in also contains features we do not need and depends on a specific Spring Cloud version, we take only its logging function and adjust it to our needs.

1. Add the following configuration items to our configuration file:

- Switch for the log plug-in

- Switch for recording request parameters

- Switch for recording response data

- List of microservice IDs whose API requests should be logged

- List of URLs whose API requests should be logged

spring:
  cloud:
    gateway:
      plugin:
        config:
          # Whether to enable the Gateway log plug-in
          enable: true
          # requestLog == true && responseLog == false: only request parameters are logged; responseLog == true: request parameters and response data are both logged
          # requestLog == false: nothing is logged
          requestLog: true
          # In production, prefer logging request parameters only: response data is often large and rarely useful
          # Whether to log response data
          responseLog: true
          # all: log every request; configure: only requests matching both serviceIdList and pathList; serviceId: only services in serviceIdList; pathList: only paths in pathList
          logType: all
          serviceIdList:
            - "gitegg-oauth"
            - "gitegg-service-system"
          pathList:
            - "/gitegg-oauth/oauth/token"
            - "/gitegg-oauth/oauth/user/info"
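To make the logType options concrete, here is a simplified, dependency-free model of the decision made by shouldReadRequestData in GatewayRequestContextFilter. Two assumptions are worth flagging: Spring's AntPathMatcher is replaced by a tiny matcher that only understands exact paths and a trailing /**, and the logType strings are assumed to be the ones listed in the YAML comment (all, configure, serviceId, pathList). ApiLogSelector is a hypothetical name.

```java
import java.util.List;
import java.util.Locale;

final class ApiLogSelector {

    // Stand-in for AntPathMatcher: supports exact paths and a trailing "/**" only.
    static boolean matchPath(String pattern, String path) {
        if (pattern.endsWith("/**")) {
            String prefix = pattern.substring(0, pattern.length() - 3);
            return path.equals(prefix) || path.startsWith(prefix + "/");
        }
        return pattern.equals(path);
    }

    static boolean shouldLog(boolean requestLog, String logType, String routeScheme,
                             String routeServiceId, String path,
                             List<String> serviceIdList, List<String> pathList) {
        // requestLog == false: the request-context filter is not registered, so nothing is logged.
        if (!requestLog) {
            return false;
        }
        if ("all".equals(logType)) {
            return true;
        }
        boolean pathFlag = pathList.stream().anyMatch(p -> matchPath(p, path));
        boolean serviceFlag = serviceIdList.contains(routeServiceId.toLowerCase(Locale.ROOT));
        // Only load-balanced routes (lb://service-id) carry a service ID to compare against.
        boolean lbRoute = "lb".equalsIgnoreCase(routeScheme);
        switch (logType) {
            case "configure":
                return serviceFlag && pathFlag && lbRoute;
            case "serviceId":
                return serviceFlag && lbRoute;
            case "pathList":
                return pathFlag;
            default:
                return false;
        }
    }
}
```

For example, with logType: configure, a request to /gitegg-oauth/oauth/token routed through lb://gitegg-oauth is logged, while /gitegg-oauth/oauth/check on the same service is not.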

2. The GatewayPluginConfig configuration class decides, based on the configuration items, which filters are enabled and initialized. It is adapted from GatewayPluginConfig.java in spring-cloud-gateway-plugin.

/**

* Quoted from @see https://github.com/chenggangpro/spring-cloud-gateway-plugin

*

* Gateway Plugin Config

* @author chenggang

* @date 2019/01/29

*/

@Slf4j

@Configuration

public class GatewayPluginConfig {

@Bean

@ConditionalOnMissingBean(GatewayPluginProperties.class)

@ConfigurationProperties(GatewayPluginProperties.GATEWAY_PLUGIN_PROPERTIES_PREFIX)

public GatewayPluginProperties gatewayPluginProperties(){

return new GatewayPluginProperties();

}

@Bean

@ConditionalOnBean(GatewayPluginProperties.class)

@ConditionalOnMissingBean(GatewayRequestContextFilter.class)

@ConditionalOnProperty(prefix = GatewayPluginProperties.GATEWAY_PLUGIN_PROPERTIES_PREFIX, value = { "enable", "requestLog" },havingValue = "true")

public GatewayRequestContextFilter gatewayContextFilter(@Autowired GatewayPluginProperties gatewayPluginProperties , @Autowired(required = false) ContextExtraDataGenerator contextExtraDataGenerator){

GatewayRequestContextFilter gatewayContextFilter = new GatewayRequestContextFilter(gatewayPluginProperties, contextExtraDataGenerator);

log.debug("Load GatewayContextFilter Config Bean");

return gatewayContextFilter;

}

@Bean

@ConditionalOnMissingBean(GatewayResponseContextFilter.class)

@ConditionalOnProperty(prefix = GatewayPluginProperties.GATEWAY_PLUGIN_PROPERTIES_PREFIX, value = { "enable", "responseLog" }, havingValue = "true")

public GatewayResponseContextFilter responseLogFilter(){

GatewayResponseContextFilter responseLogFilter = new GatewayResponseContextFilter();

log.debug("Load Response Log Filter Config Bean");

return responseLogFilter;

}

@Bean

@ConditionalOnBean(GatewayPluginProperties.class)

@ConditionalOnMissingBean(RemoveGatewayContextFilter.class)

@ConditionalOnProperty(prefix = GatewayPluginProperties.GATEWAY_PLUGIN_PROPERTIES_PREFIX, value = { "enable" }, havingValue = "true")

public RemoveGatewayContextFilter removeGatewayContextFilter(){

RemoveGatewayContextFilter gatewayContextFilter = new RemoveGatewayContextFilter();

log.debug("Load RemoveGatewayContextFilter Config Bean");

return gatewayContextFilter;

}

@Bean

@ConditionalOnMissingBean(RequestLogFilter.class)

@ConditionalOnProperty(prefix = GatewayPluginProperties.GATEWAY_PLUGIN_PROPERTIES_PREFIX, value = { "enable" },havingValue = "true")

public RequestLogFilter requestLogFilter(@Autowired GatewayPluginProperties gatewayPluginProperties){

RequestLogFilter requestLogFilter = new RequestLogFilter(gatewayPluginProperties);

log.debug("Load Request Log Filter Config Bean");

return requestLogFilter;

}

}
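GatewayPluginConfig registers its filters through @ConditionalOnProperty with havingValue = "true" and several property names, which Spring Boot only matches when every listed property is present and equal (ignoring case) to "true"; matchIfMissing defaults to false. The plain-Java model below lists which filter beans each combination yields. It ignores the @ConditionalOnBean and @ConditionalOnMissingBean conditions, uses the short property names from the YAML as map keys, and PluginBeanPlan is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

final class PluginBeanPlan {

    // Mirrors @ConditionalOnProperty(value = {...}, havingValue = "true"): all names must match.
    private static boolean allTrue(Map<String, String> props, String... names) {
        for (String name : names) {
            if (!"true".equalsIgnoreCase(props.get(name))) {
                return false; // missing or non-"true" value: condition fails
            }
        }
        return true;
    }

    // Filter beans GatewayPluginConfig would register for these properties.
    static List<String> enabledFilters(Map<String, String> props) {
        List<String> beans = new ArrayList<>();
        if (allTrue(props, "enable", "requestLog")) beans.add("GatewayRequestContextFilter");
        if (allTrue(props, "enable", "responseLog")) beans.add("GatewayResponseContextFilter");
        if (allTrue(props, "enable")) {
            beans.add("RemoveGatewayContextFilter");
            beans.add("RequestLogFilter");
        }
        return beans;
    }
}
```

One combination is worth noting: with enable: true, requestLog: false and responseLog: true, the response filter and RequestLogFilter are registered but GatewayRequestContextFilter is not, so the GatewayContext exchange attribute is never set and any filter reading it must tolerate null.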

3. GatewayRequestContextFilter is the filter that processes request parameters. It is adapted from GatewayContextFilter.java in spring-cloud-gateway-plugin.

/**

* Quoted from @see https://github.com/chenggangpro/spring-cloud-gateway-plugin

*

* Gateway Context Filter

* @author chenggang

* @date 2019/01/29

*/

@Slf4j

@AllArgsConstructor

public class GatewayRequestContextFilter implements GlobalFilter, Ordered {

private GatewayPluginProperties gatewayPluginProperties;

private ContextExtraDataGenerator contextExtraDataGenerator;

private static final AntPathMatcher ANT_PATH_MATCHER = new AntPathMatcher();

/**

* default HttpMessageReader

*/

private static final List<HttpMessageReader<?>> MESSAGE_READERS = HandlerStrategies.withDefaults().messageReaders();

@Override

public Mono<Void> filter(ServerWebExchange exchange, GatewayFilterChain chain) {

ServerHttpRequest request = exchange.getRequest();

GatewayContext gatewayContext = new GatewayContext();

gatewayContext.setReadRequestData(shouldReadRequestData(exchange));

gatewayContext.setReadResponseData(gatewayPluginProperties.getResponseLog());

HttpHeaders headers = request.getHeaders();

gatewayContext.setRequestHeaders(headers);

if(Objects.nonNull(contextExtraDataGenerator)){

GatewayContextExtraData gatewayContextExtraData = contextExtraDataGenerator.generateContextExtraData(exchange);

gatewayContext.setGatewayContextExtraData(gatewayContextExtraData);

}

if(!gatewayContext.getReadRequestData()){

exchange.getAttributes().put(GatewayContext.CACHE_GATEWAY_CONTEXT, gatewayContext);

log.debug("[GatewayContext]Properties Set To Not Read Request Data");

return chain.filter(exchange);

}

gatewayContext.getAllRequestData().addAll(request.getQueryParams());

/*

* save gateway context into exchange

*/

exchange.getAttributes().put(GatewayContext.CACHE_GATEWAY_CONTEXT, gatewayContext);

MediaType contentType = headers.getContentType();

if(headers.getContentLength()>0){

if(MediaType.APPLICATION_JSON.equals(contentType) || MediaType.APPLICATION_JSON_UTF8.equals(contentType)){

return readBody(exchange, chain,gatewayContext);

}

if(MediaType.APPLICATION_FORM_URLENCODED.equals(contentType)){

return readFormData(exchange, chain,gatewayContext);

}

}

log.debug("[GatewayContext]ContentType:{},Gateway context is set with {}",contentType, gatewayContext);

return chain.filter(exchange);

}

@Override

public int getOrder() {

return FilterOrderEnum.GATEWAY_CONTEXT_FILTER.getOrder();

}

/**

* check should read request data whether or not

* @return boolean

*/

private boolean shouldReadRequestData(ServerWebExchange exchange){

if(gatewayPluginProperties.getRequestLog()

&& GatewayLogTypeEnum.ALL.getType().equals(gatewayPluginProperties.getLogType())){

log.debug("[GatewayContext]Properties Set Read All Request Data");

return true;

}

boolean serviceFlag = false;

boolean pathFlag = false;

boolean lbFlag = false;

List<String> readRequestDataServiceIdList = gatewayPluginProperties.getServiceIdList();

List<String> readRequestDataPathList = gatewayPluginProperties.getPathList();

if(!CollectionUtils.isEmpty(readRequestDataPathList)

&& (GatewayLogTypeEnum.PATH.getType().equals(gatewayPluginProperties.getLogType())

|| GatewayLogTypeEnum.CONFIGURE.getType().equals(gatewayPluginProperties.getLogType()))){

String requestPath = exchange.getRequest().getPath().pathWithinApplication().value();

for(String path : readRequestDataPathList){

if(ANT_PATH_MATCHER.match(path,requestPath)){

log.debug("[GatewayContext]Properties Set Read Specific Request Data With Request Path:{},Match Pattern:{}", requestPath, path);

pathFlag = true;

break;

}

}

}

Route route = exchange.getAttribute(ServerWebExchangeUtils.GATEWAY_ROUTE_ATTR);

URI routeUri = route.getUri();

// lbFlag is true when the route does NOT use the lb:// (load-balanced) scheme

if(!"lb".equalsIgnoreCase(routeUri.getScheme())){

lbFlag = true;

}

String routeServiceId = routeUri.getHost().toLowerCase();

if(!CollectionUtils.isEmpty(readRequestDataServiceIdList)

&& (GatewayLogTypeEnum.SERVICE.getType().equals(gatewayPluginProperties.getLogType())

|| GatewayLogTypeEnum.CONFIGURE.getType().equals(gatewayPluginProperties.getLogType()))){

if(readRequestDataServiceIdList.contains(routeServiceId)){

log.debug("[GatewayContext]Properties Set Read Specific Request Data With ServiceId:{}",routeServiceId);

serviceFlag = true;

}

}

if (GatewayLogTypeEnum.CONFIGURE.getType().equals(gatewayPluginProperties.getLogType())

&& serviceFlag && pathFlag && !lbFlag)

{

return true;

}

else if (GatewayLogTypeEnum.SERVICE.getType().equals(gatewayPluginProperties.getLogType())

&& serviceFlag && !lbFlag)

{

return true;

}

else if (GatewayLogTypeEnum.PATH.getType().equals(gatewayPluginProperties.getLogType())

&& pathFlag)

{

return true;

}

return false;

}

/**

* ReadFormData

* @param exchange

* @param chain

* @return

*/

private Mono<Void> readFormData(ServerWebExchange exchange, GatewayFilterChain chain, GatewayContext gatewayContext){

HttpHeaders headers = exchange.getRequest().getHeaders();

return exchange.getFormData()

.doOnNext(multiValueMap -> {

gatewayContext.setFormData(multiValueMap);

gatewayContext.getAllRequestData().addAll(multiValueMap);

log.debug("[GatewayContext]Read FormData Success");

})

.then(Mono.defer(() -> {

Charset charset = headers.getContentType().getCharset();

charset = charset == null? StandardCharsets.UTF_8:charset;

String charsetName = charset.name();

MultiValueMap<String, String> formData = gatewayContext.getFormData();

/*

* formData is empty just return

*/

if(null == formData || formData.isEmpty()){

return chain.filter(exchange);

}

StringBuilder formDataBodyBuilder = new StringBuilder();

String entryKey;

List<String> entryValue;

try {

/*

* repackage form data

*/

for (Map.Entry<String, List<String>> entry : formData.entrySet()) {

entryKey = entry.getKey();

entryValue = entry.getValue();

if (entryValue.size() > 1) {

for(String value : entryValue){

formDataBodyBuilder.append(entryKey).append("=").append(URLEncoder.encode(value, charsetName)).append("&");

}

} else {

formDataBodyBuilder.append(entryKey).append("=").append(URLEncoder.encode(entryValue.get(0), charsetName)).append("&");

}

}

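} catch (UnsupportedEncodingException e) {

log.error("[GatewayContext]Unsupported charset {} while re-encoding form data", charsetName, e);

}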

/*

* substring with the last char '&'

*/

String formDataBodyString = "";

if(formDataBodyBuilder.length()>0){

formDataBodyString = formDataBodyBuilder.substring(0, formDataBodyBuilder.length() - 1);

}

/*

* get data bytes

*/

byte[] bodyBytes = formDataBodyString.getBytes(charset);

int contentLength = bodyBytes.length;

HttpHeaders httpHeaders = new HttpHeaders();

httpHeaders.putAll(exchange.getRequest().getHeaders());

httpHeaders.remove(HttpHeaders.CONTENT_LENGTH);

/*

* in case of content-length not matched

*/

httpHeaders.setContentLength(contentLength);

/*

* use BodyInserter to InsertFormData Body

*/

BodyInserter<String, ReactiveHttpOutputMessage> bodyInserter = BodyInserters.fromObject(formDataBodyString);

CachedBodyOutputMessage cachedBodyOutputMessage = new CachedBodyOutputMessage(exchange, httpHeaders);

log.debug("[GatewayContext]Rewrite Form Data :{}",formDataBodyString);

return bodyInserter.insert(cachedBodyOutputMessage, new BodyInserterContext())

.then(Mono.defer(() -> {

ServerHttpRequestDecorator decorator = new ServerHttpRequestDecorator(

exchange.getRequest()) {

@Override

public HttpHeaders getHeaders() {

return httpHeaders;

}

@Override

public Flux<DataBuffer> getBody() {

return cachedBodyOutputMessage.getBody();

}

};

return chain.filter(exchange.mutate().request(decorator).build());

}));

}));

}

/**

* ReadJsonBody

* @param exchange

* @param chain

* @return

*/

private Mono<Void> readBody(ServerWebExchange exchange, GatewayFilterChain chain, GatewayContext gatewayContext){

return DataBufferUtils.join(exchange.getRequest().getBody())

.flatMap(dataBuffer -> {

/*

* read the body Flux<DataBuffer>, and release the buffer

* //TODO when SpringCloudGateway Version Release To G.SR2,this can be update with the new version's feature

* see PR https://github.com/spring-cloud/spring-cloud-gateway/pull/1095

*/

byte[] bytes = new byte[dataBuffer.readableByteCount()];

dataBuffer.read(bytes);

DataBufferUtils.release(dataBuffer);

Flux<DataBuffer> cachedFlux = Flux.defer(() -> {

DataBuffer buffer = exchange.getResponse().bufferFactory().wrap(bytes);

DataBufferUtils.retain(buffer);

return Mono.just(buffer);

});

/*

* repackage ServerHttpRequest

*/

ServerHttpRequest mutatedRequest = new ServerHttpRequestDecorator(exchange.getRequest()) {

@Override

public Flux<DataBuffer> getBody() {

return cachedFlux;

}

};

ServerWebExchange mutatedExchange = exchange.mutate().request(mutatedRequest).build();

return ServerRequest.create(mutatedExchange, MESSAGE_READERS)

.bodyToMono(String.class)

.doOnNext(objectValue -> {

gatewayContext.setRequestBody(objectValue);

log.debug("[GatewayContext]Read JsonBody Success");

}).then(chain.filter(mutatedExchange));

});

}

}
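readFormData() has to re-serialize the parsed form and recompute Content-Length, because the original body has already been consumed. The stdlib sketch below shows just that step with URLEncoder; FormBodyRebuilder is a hypothetical name. Unlike the filter above, it also encodes the keys and skips keys with no values instead of calling get(0) on an empty list.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.nio.charset.Charset;
import java.util.List;
import java.util.Map;

final class FormBodyRebuilder {

    // Serialize the form back to application/x-www-form-urlencoded, repeating multi-valued keys.
    static String encode(Map<String, List<String>> form, Charset charset) {
        StringBuilder body = new StringBuilder();
        for (Map.Entry<String, List<String>> entry : form.entrySet()) {
            for (String value : entry.getValue()) {
                if (body.length() > 0) {
                    body.append('&'); // separator only between pairs, so no trailing '&' to strip
                }
                body.append(encodePart(entry.getKey(), charset))
                    .append('=')
                    .append(encodePart(value, charset));
            }
        }
        return body.toString();
    }

    // Content-Length must be the byte count of the re-encoded body, not the original header value.
    static int contentLength(String body, Charset charset) {
        return body.getBytes(charset).length;
    }

    private static String encodePart(String s, Charset charset) {
        try {
            return URLEncoder.encode(s, charset.name());
        } catch (UnsupportedEncodingException e) {
            // Should not happen for an existing Charset, but do not swallow it silently.
            throw new IllegalStateException(e);
        }
    }
}
```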

4. GatewayResponseContextFilter is the filter that processes response data. It is adapted from ResponseLogFilter.java in spring-cloud-gateway-plugin.

/**

* Quoted from @see https://github.com/chenggangpro/spring-cloud-gateway-plugin

*

*

* @author: chenggang

* @createTime: 2019-04-11

* @version: v1.2.0

*/

@Slf4j

public class GatewayResponseContextFilter implements GlobalFilter, Ordered {

@Override

public Mono<Void> filter(ServerWebExchange exchange, GatewayFilterChain chain) {

GatewayContext gatewayContext = exchange.getAttribute(GatewayContext.CACHE_GATEWAY_CONTEXT);

if(gatewayContext == null || !gatewayContext.getReadResponseData()){

log.debug("[ResponseLogFilter]Properties Set Not To Read Response Data");

return chain.filter(exchange);

}

ServerHttpResponseDecorator responseDecorator = new ServerHttpResponseDecorator(exchange.getResponse()) {

@Override

public Mono<Void> writeWith(Publisher<? extends DataBuffer> body) {

return DataBufferUtils.join(Flux.from(body))

.flatMap(dataBuffer -> {

byte[] bytes = new byte[dataBuffer.readableByteCount()];

dataBuffer.read(bytes);

DataBufferUtils.release(dataBuffer);

Flux<DataBuffer> cachedFlux = Flux.defer(() -> {

DataBuffer buffer = exchange.getResponse().bufferFactory().wrap(bytes);

DataBufferUtils.retain(buffer);

return Mono.just(buffer);

});

BodyInserter<Flux<DataBuffer>, ReactiveHttpOutputMessage> bodyInserter = BodyInserters.fromDataBuffers(cachedFlux);

CachedBodyOutputMessage outputMessage = new CachedBodyOutputMessage(exchange, exchange.getResponse().getHeaders());

DefaultClientResponse clientResponse = new DefaultClientResponse(new ResponseAdapter(cachedFlux, exchange.getResponse().getHeaders()), ExchangeStrategies.withDefaults());

Optional<MediaType> optionalMediaType = clientResponse.headers().contentType();

if(!optionalMediaType.isPresent()){

log.debug("[ResponseLogFilter]Response ContentType Is Not Exist");

return Mono.defer(()-> bodyInserter.insert(outputMessage, new BodyInserterContext())

.then(Mono.defer(() -> {

Flux<DataBuffer> messageBody = cachedFlux;

HttpHeaders headers = getDelegate().getHeaders();

if (!headers.containsKey(HttpHeaders.TRANSFER_ENCODING)) {

messageBody = messageBody.doOnNext(data -> headers.setContentLength(data.readableByteCount()));

}

return getDelegate().writeWith(messageBody);

})));

}

MediaType contentType = optionalMediaType.get();

if(!contentType.equals(MediaType.APPLICATION_JSON) && !contentType.equals(MediaType.APPLICATION_JSON_UTF8)){

log.debug("[ResponseLogFilter]Response ContentType Is Not APPLICATION_JSON Or APPLICATION_JSON_UTF8");

return Mono.defer(()-> bodyInserter.insert(outputMessage, new BodyInserterContext())

.then(Mono.defer(() -> {

Flux<DataBuffer> messageBody = cachedFlux;

HttpHeaders headers = getDelegate().getHeaders();

if (!headers.containsKey(HttpHeaders.TRANSFER_ENCODING)) {

messageBody = messageBody.doOnNext(data -> headers.setContentLength(data.readableByteCount()));

}

return getDelegate().writeWith(messageBody);

})));

}

return clientResponse.bodyToMono(Object.class)

.doOnNext(originalBody -> {

GatewayContext gatewayContext = exchange.getAttribute(GatewayContext.CACHE_GATEWAY_CONTEXT);

gatewayContext.setResponseBody(originalBody);

log.debug("[ResponseLogFilter]Read Response Data To Gateway Context Success");

})

.then(Mono.defer(()-> bodyInserter.insert(outputMessage, new BodyInserterContext())

.then(Mono.defer(() -> {

Flux<DataBuffer> messageBody = cachedFlux;

HttpHeaders headers = getDelegate().getHeaders();

if (!headers.containsKey(HttpHeaders.TRANSFER_ENCODING)) {

messageBody = messageBody.doOnNext(data -> headers.setContentLength(data.readableByteCount()));

}

return getDelegate().writeWith(messageBody);

}))));

});

}

@Override

public Mono<Void> writeAndFlushWith(Publisher<? extends Publisher<? extends DataBuffer>> body) {

return writeWith(Flux.from(body)

.flatMapSequential(p -> p));

}

};

return chain.filter(exchange.mutate().response(responseDecorator).build());

}

@Override

public int getOrder() {

return FilterOrderEnum.RESPONSE_DATA_FILTER.getOrder();

}

public class ResponseAdapter implements ClientHttpResponse {

private final Flux<DataBuffer> flux;

private final HttpHeaders headers;

public ResponseAdapter(Publisher<? extends DataBuffer> body, HttpHeaders headers) {

this.headers = headers;

if (body instanceof Flux) {

flux = (Flux) body;

} else {

flux = ((Mono)body).flux();

}

}

@Override

public Flux<DataBuffer> getBody() {

return flux;

}

@Override

public HttpHeaders getHeaders() {

return headers;

}

@Override

public HttpStatus getStatusCode() {

return null;

}

@Override

public int getRawStatusCode() {

return 0;

}

@Override

public MultiValueMap<String, ResponseCookie> getCookies() {

return null;

}

}

}
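GatewayResponseContextFilter works by decorating the response: writeWith() sees the body before it reaches the client, copies it into the GatewayContext, and forwards the same bytes. The sketch below is a blocking java.io analogy of that decorator, not Gateway code: it tees each write into a buffer while passing it through. Unlike the reactive filter, it does not join the whole body before forwarding. TeeResponseStream is a hypothetical name.

```java
import java.io.ByteArrayOutputStream;
import java.io.FilterOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

final class TeeResponseStream extends FilterOutputStream {
    private final ByteArrayOutputStream copy = new ByteArrayOutputStream();

    TeeResponseStream(OutputStream delegate) {
        super(delegate);
    }

    // Keep a copy of each byte, then forward it to the real response.
    @Override
    public void write(int b) throws IOException {
        copy.write(b);
        out.write(b);
    }

    // Bulk writes are copied and forwarded as one chunk instead of byte by byte.
    @Override
    public void write(byte[] b, int off, int len) throws IOException {
        copy.write(b, off, len);
        out.write(b, off, len);
    }

    // The body as the client received it, decoded as UTF-8 for the log record.
    String capturedBody() {
        return new String(copy.toByteArray(), StandardCharsets.UTF_8);
    }
}
```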

5. RemoveGatewayContextFilter is the filter that clears the cached request context once the request completes. It is adapted from RemoveGatewayContextFilter.java in spring-cloud-gateway-plugin.

/**

* Quoted from @see https://github.com/chenggangpro/spring-cloud-gateway-plugin

*

* remove gatewayContext Attribute

* @author chenggang

* @date 2019/06/19

*/

@Slf4j

public class RemoveGatewayContextFilter implements GlobalFilter, Ordered {

@Override

public Mono<Void> filter(ServerWebExchange exchange, GatewayFilterChain chain) {

return chain.filter(exchange).doFinally(s -> exchange.getAttributes().remove(GatewayContext.CACHE_GATEWAY_CONTEXT));

}

@Override

public int getOrder() {

return HIGHEST_PRECEDENCE;

}

}
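RemoveGatewayContextFilter relies on doFinally, which runs when the chain completes, errors or is cancelled. Because the filter has HIGHEST_PRECEDENCE, it wraps the whole chain, so the attribute is removed only after the other filters have finished with it. A blocking analogy with try/finally is sketched below; it covers the complete and error cases only, and both AttributeCleanup and the key value are hypothetical.

```java
import java.util.Map;
import java.util.function.Consumer;

final class AttributeCleanup {
    // Hypothetical key; the real one is GatewayContext.CACHE_GATEWAY_CONTEXT.
    static final String CACHE_GATEWAY_CONTEXT = "cacheGatewayContext";

    // Run the downstream "chain" and always drop the per-request context afterwards.
    static void runChain(Map<String, Object> attributes, Consumer<Map<String, Object>> chain) {
        try {
            chain.accept(attributes);
        } finally {
            attributes.remove(CACHE_GATEWAY_CONTEXT);
        }
    }
}
```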

6. RequestLogFilter is the filter that writes the API log. It is adapted from RequestLogFilter.java in spring-cloud-gateway-plugin.
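The timing fields in the log record reduce to a small calculation: the start time is stored on the exchange when the request enters the filter, and the end time and duration are taken once the downstream chain completes. The sketch below isolates that step, plus the scheme check, with both scheme comparisons ignoring case. ApiTiming is a hypothetical stand-in for the timing fields of GatewayApiLog.

```java
final class ApiTiming {
    final long startTime;
    final long endTime;
    final long duration;

    private ApiTiming(long startTime, long endTime) {
        this.startTime = startTime;
        this.endTime = endTime;               // the completion timestamp, not a copy of startTime
        this.duration = endTime - startTime;  // milliseconds spent in the downstream chain
    }

    // Build the timing fields from the stored start time and the completion time.
    static ApiTiming of(long startTime, long endTime) {
        return new ApiTiming(startTime, endTime);
    }

    // Only plain HTTP(S) requests are logged; a null scheme is rejected.
    static boolean isHttp(String scheme) {
        return "http".equalsIgnoreCase(scheme) || "https".equalsIgnoreCase(scheme);
    }
}
```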

/**

* Quoted from @see https://github.com/chenggangpro/spring-cloud-gateway-plugin

*

* Filter To Log Request And Response(exclude response body)

* @author chenggang

* @date 2019/01/29

*/

@Log4j2

@AllArgsConstructor

public class RequestLogFilter implements GlobalFilter, Ordered {

private static final String START_TIME = "startTime";

private static final String HTTP_SCHEME = "http";

private static final String HTTPS_SCHEME = "https";

private GatewayPluginProperties gatewayPluginProperties;

@Override

public Mono<Void> filter(ServerWebExchange exchange, GatewayFilterChain chain) {

ServerHttpRequest request = exchange.getRequest();

URI requestURI = request.getURI();

String scheme = requestURI.getScheme();

GatewayContext gatewayContext = exchange.getAttribute(GatewayContext.CACHE_GATEWAY_CONTEXT);

/*

* not http or https scheme

*/

if ((!HTTP_SCHEME.equalsIgnoreCase(scheme) && !HTTPS_SCHEME.equalsIgnoreCase(scheme)) || gatewayContext == null || !gatewayContext.getReadRequestData()){

return chain.filter(exchange);

}

long startTime = System.currentTimeMillis();

exchange.getAttributes().put(START_TIME, startTime);

// When the log plug-in is enabled, write the API log after the downstream chain completes

if (gatewayPluginProperties.getEnable())

{

return chain.filter(exchange).then(Mono.fromRunnable(() -> logApiRequest(exchange)));

}

else {

return chain.filter(exchange);

}

}

@Override

public int getOrder() {

return FilterOrderEnum.REQUEST_LOG_FILTER.getOrder();

}

/**

* log api request

* @param exchange

*/

    private Mono<Void> logApiRequest(ServerWebExchange exchange) {
        ServerHttpRequest request = exchange.getRequest();
        URI requestURI = request.getURI();
        String scheme = requestURI.getScheme();
        // START_TIME was stored by filter() before the chain was invoked
        Long startTime = exchange.getAttribute(START_TIME);
        long endTime = System.currentTimeMillis();
        long duration = endTime - startTime;
        ServerHttpResponse response = exchange.getResponse();

        GatewayApiLog gatewayApiLog = new GatewayApiLog();
        gatewayApiLog.setClientHost(requestURI.getHost());
        gatewayApiLog.setClientIp(IpUtils.getIP(request));
        gatewayApiLog.setStartTime(startTime);
        gatewayApiLog.setEndTime(endTime);
        gatewayApiLog.setDuration(duration);
        gatewayApiLog.setMethod(request.getMethodValue());
        gatewayApiLog.setScheme(scheme);
        gatewayApiLog.setRequestUri(requestURI.getPath());
        gatewayApiLog.setResponseCode(String.valueOf(response.getRawStatusCode()));
        GatewayContext gatewayContext = exchange.getAttribute(GatewayContext.CACHE_GATEWAY_CONTEXT);

        // Record the request parameters and body
        if (gatewayPluginProperties.getRequestLog()) {
            MultiValueMap<String, String> queryParams = request.getQueryParams();
            if (!queryParams.isEmpty()) {
                queryParams.forEach((key, value) -> log.debug("[RequestLogFilter](Request)Query Param :Key->({}),Value->({})", key, value));
                gatewayApiLog.setQueryParams(JsonUtils.mapToJson(queryParams));
            }
            HttpHeaders headers = request.getHeaders();
            MediaType contentType = headers.getContentType();
            long length = headers.getContentLength();
            log.debug("[RequestLogFilter](Request)ContentType:{},Content Length:{}", contentType, length);
            // includes() compares only type and subtype, so this also matches application/json;charset=UTF-8
            if (length > 0 && null != contentType && contentType.includes(MediaType.APPLICATION_JSON)) {
                log.debug("[RequestLogFilter](Request)JsonBody:{}", gatewayContext.getRequestBody());
                gatewayApiLog.setRequestBody(gatewayContext.getRequestBody());
            }
            if (length > 0 && null != contentType && contentType.includes(MediaType.APPLICATION_FORM_URLENCODED)) {
                log.debug("[RequestLogFilter](Request)FormData:{}", gatewayContext.getFormData());
                gatewayApiLog.setRequestBody(JsonUtils.mapToJson(gatewayContext.getFormData()));
            }
        }

        // Record the response data
        if (gatewayPluginProperties.getResponseLog()) {
            log.debug("[RequestLogFilter](Response)HttpStatus:{}", response.getStatusCode());
            HttpHeaders headers = response.getHeaders();
            headers.forEach((key, value) -> log.debug("[RequestLogFilter]Headers:Key->{},Value->{}", key, value));
            MediaType contentType = headers.getContentType();
            long length = headers.getContentLength();
            log.info("[RequestLogFilter](Response)ContentType:{},Content Length:{}", contentType, length);
            log.debug("[RequestLogFilter](Response)Response Body:{}", gatewayContext.getResponseBody());
            try {
                gatewayApiLog.setResponseBody(JsonUtils.objToJson(gatewayContext.getResponseBody()));
            } catch (Exception e) {
                // Pass the exception as the last argument so Log4j2 records the stack trace
                log.error("Failed to convert the API log response body to JSON", e);
            }
            log.debug("[RequestLogFilter](Response)Original Path:{},Cost:{} ms", exchange.getRequest().getURI().getPath(), duration);
        }

        Route route = exchange.getAttribute(ServerWebExchangeUtils.GATEWAY_ROUTE_ATTR);
        // URI.getHost() returns null when the service id is not a valid host name (e.g. it contains '_')
        String routeServiceId = (route != null && route.getUri().getHost() != null)
                ? route.getUri().getHost().toLowerCase()
                : "unknown";
        // Write the record at the custom API log level; serviceId is quoted so the line is valid JSON
        try {
            log.log(LogLevelConstant.API_LEVEL, "{\"serviceId\":\"{}\", \"data\":{}}", routeServiceId, JsonUtils.objToJson(gatewayApiLog));
        } catch (Exception e) {
            log.error("Failed to record the API log", e);
        }
        return Mono.empty();
    }
}
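The API-level record is meant to be a `{"serviceId": ..., "data": ...}` JSON envelope. The service id is a string, so the envelope only parses as JSON downstream (for example in the ELK pipeline) if that value is quoted and escaped. The following is a minimal, stdlib-only sketch of building such an envelope. It assumes `dataJson` has already been serialized by a JSON library (for instance via `JsonUtils.objToJson`). `ApiLogEnvelope`, `escapeJson` and `envelope` are hypothetical names used only for illustration. In the real filter it is probably simpler to let the same JSON library serialize the whole envelope.

```java
/**
 * Hypothetical helper: wraps an already-serialized log object in the
 * {"serviceId": "...", "data": {...}} envelope, quoting and escaping the
 * service id so that every emitted line is valid JSON.
 */
final class ApiLogEnvelope {

    private ApiLogEnvelope() {
    }

    /** Escapes the characters JSON does not allow unescaped inside a string literal. */
    static String escapeJson(String s) {
        StringBuilder sb = new StringBuilder(s.length() + 8);
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        // Other control characters become a four-digit hex escape
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    /** dataJson must already be a serialized JSON value; it is embedded as-is. */
    static String envelope(String serviceId, String dataJson) {
        return "{\"serviceId\":\"" + escapeJson(serviceId) + "\",\"data\":" + dataJson + "}";
    }
}
```

For example, `ApiLogEnvelope.envelope("user-service", "{\"duration\":12}")` yields `{"serviceId":"user-service","data":{"duration":12}}`.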

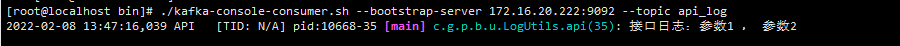

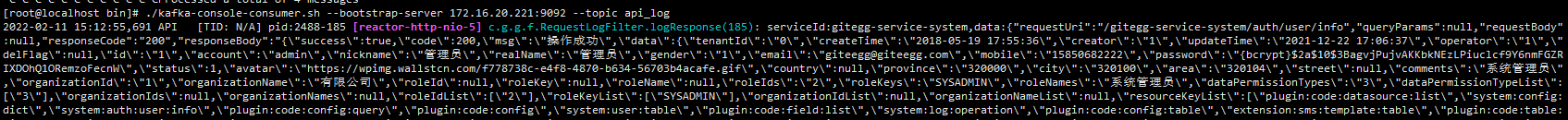

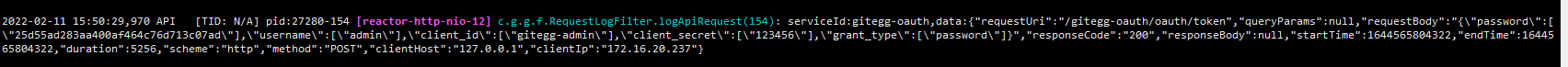

7. Start the services and test the data flow. Start a Kafka console consumer and check whether messages arrive on the api_log topic:

8. Storage and processing of log data

After the log messages have been written to files or Kafka, we still need to decide how to process them. In a large-scale microservice cluster, writing them to a relational database such as MySQL should be avoided wherever possible. If it is really necessary, you can consume the log messages with Spring Cloud Stream, as introduced earlier, and save them to the target database. The next chapter covers building an ELK log analysis system to process, analyze, and extract information from this high volume of log data.
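If the log messages do have to land in a relational database, one common way to reduce the load is to write them in batches rather than one row per request. The sketch below is our own assumption and not the framework's implementation. `ApiLogBatcher` is a hypothetical class, and the `sink` callback stands in for whatever performs the bulk insert (for example a JDBC batch insert) inside a Spring Cloud Stream consumer.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/**
 * Hypothetical sketch: collects consumed api_log messages and hands them to a
 * sink in fixed-size batches, so the database receives one bulk write per batch
 * instead of one write per request.
 */
class ApiLogBatcher {

    private final int batchSize;
    // Stand-in for the real bulk insert (e.g. a JDBC batch); an assumption, not framework code
    private final Consumer<List<String>> sink;
    private final List<String> buffer = new ArrayList<>();

    ApiLogBatcher(int batchSize, Consumer<List<String>> sink) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        this.batchSize = batchSize;
        this.sink = sink;
    }

    /** Called once per consumed message, e.g. from a Spring Cloud Stream consumer. */
    synchronized void accept(String message) {
        buffer.add(message);
        if (buffer.size() >= batchSize) {
            flush();
        }
    }

    /** Hands any buffered messages to the sink as a copy, then clears the buffer. */
    synchronized void flush() {
        if (buffer.isEmpty()) {
            return;
        }
        sink.accept(new ArrayList<>(buffer));
        buffer.clear();
    }
}
```

A real consumer would also call `flush()` on a timer and at shutdown, so that a partially filled batch is not held indefinitely.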

GitEgg Cloud is an enterprise-level microservice application development framework built on Spring Cloud. The open source project is available at:

Gitee: https://gitee.com/wmz1930/GitEgg

GitHub: https://github.com/wmz1930/GitEgg

If you are interested, please give the project a Star to show your support.