The previous section described how to commit consumer offsets. Because committed offsets are persisted, a consumer that is shut down, crashes, or goes through a rebalance can resume from the offset it stored earlier. However, a newly created consumer group has no committed offset to look up at all, and neither does a consumer in an existing group that subscribes to a new topic. **(Within a consumer group, the messages of a partition are consumed only once by that group. So when a new consumer group is created, or a consumer in the group subscribes to a new topic, the group has no offset information for those partitions.)** Likewise, when this group's offset information in the __consumer_offsets topic expires and is deleted, there is no offset to look up either.

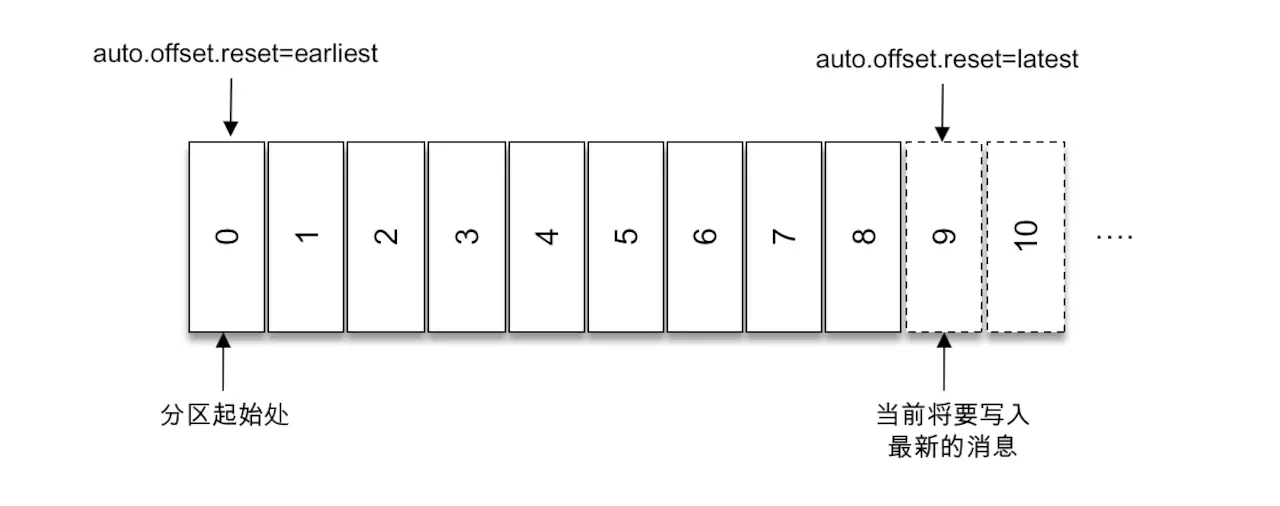
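A consumer can detect this situation itself. The sketch below assumes a 2.4 or later client, where `Consumer.committed(Set)` exists, and lists the assigned partitions that have no committed offset, i.e. the partitions whose starting position falls to the auto.offset.reset parameter discussed next. The class and method names are illustrative, not part of Kafka.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

import org.apache.kafka.clients.consumer.Consumer;
import org.apache.kafka.clients.consumer.OffsetAndMetadata;
import org.apache.kafka.common.TopicPartition;

final class MissingOffsets {

    private MissingOffsets() {
    }

    /** Returns the assigned partitions for which this consumer's group has no committed offset. */
    static Set<TopicPartition> partitionsWithoutCommittedOffset(Consumer<?, ?> consumer) {
        Set<TopicPartition> assigned = consumer.assignment();
        // committed(Set) exists in clients 2.4+. A partition without a committed
        // offset may map to null or be absent, so get() == null covers both cases.
        Map<TopicPartition, OffsetAndMetadata> committed = consumer.committed(assigned);
        Set<TopicPartition> missing = new HashSet<>();
        for (TopicPartition tp : assigned) {
            if (committed.get(tp) == null) {
                missing.add(tp);
            }
        }
        return missing;
    }
}
```

Calling it once partitions are assigned (after the first successful poll()) shows which partitions are starting without a committed offset.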

In Kafka, whenever a consumer cannot find a committed offset, it decides where to start consuming according to the consumer client parameter auto.offset.reset. The default value is "latest", which means consuming from the end of the partition: in the figure below, the consumer starts from offset 9, the position where the next message will be written. If the value is "earliest", the consumer starts from the beginning of the partition (offset 0 in the figure). Besides a missing committed offset, an out-of-range offset also triggers auto.offset.reset.

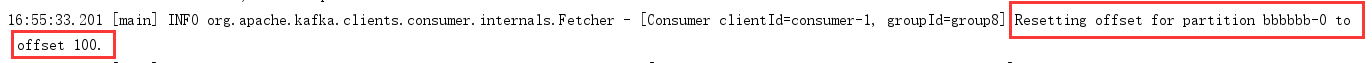
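As a minimal sketch, auto.offset.reset is set like any other consumer property. The broker address, group id and topic name below are placeholders, and the class name is illustrative.

```java
import java.time.Duration;
import java.util.List;
import java.util.Properties;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.apache.kafka.common.serialization.StringDeserializer;

final class AutoOffsetResetConfig {

    private AutoOffsetResetConfig() {
    }

    /** Builds consumer properties; resetPolicy is "earliest", "latest" or "none". */
    static Properties consumerProps(String bootstrapServers, String groupId, String resetPolicy) {
        Properties props = new Properties();
        props.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, bootstrapServers);
        props.put(ConsumerConfig.GROUP_ID_CONFIG, groupId);
        props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        // Only consulted when there is no committed offset for a partition,
        // or when the offset to fetch from is out of range.
        props.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, resetPolicy);
        return props;
    }

    public static void main(String[] args) {
        // Placeholder broker, group and topic: a brand-new group reads from the start.
        Properties props = consumerProps("localhost:9092", "new-group", "earliest");
        try (KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props)) {
            consumer.subscribe(List.of("topic-demo"));
            for (ConsumerRecord<String, String> record : consumer.poll(Duration.ofSeconds(1))) {
                System.out.println(record.offset() + ": " + record.value());
            }
        }
    }
}
```

Once the group has committed offsets, changing this value has no effect on where it resumes, except for partitions whose offset is out of range.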

With the default configuration, when a new consumer group consumes a topic, the client logs a message reporting that the offset has been reset.

auto.offset.reset also accepts the value "none", which means that when no committed offset can be found, the consumer throws a NoOffsetForPartitionException instead of starting from the beginning or from the end:

```
org.apache.kafka.clients.consumer.NoOffsetForPartitionException: Undefined offset with no reset policy for partitions: [topic-demo-3, topic-demo-0, topic-demo-2, topic-demo-1].
```
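With "none", the exception hands the decision back to the application. A minimal sketch of one way to use that, assuming the recovery policy is supplied by the caller (the class name and the `recover` callback are hypothetical): catch NoOffsetForPartitionException, let the callback seek() each partition the exception lists, and poll again.

```java
import java.time.Duration;
import java.util.Set;

import org.apache.kafka.clients.consumer.Consumer;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.NoOffsetForPartitionException;
import org.apache.kafka.common.TopicPartition;

final class NoneResetPolicy {

    private NoneResetPolicy() {
    }

    /**
     * Polls once. If auto.offset.reset=none leaves some partitions without a
     * starting position, passes them to recover (which must seek() each of them)
     * and polls again.
     */
    static <K, V> ConsumerRecords<K, V> pollWithRecovery(
            Consumer<K, V> consumer,
            Duration timeout,
            java.util.function.Consumer<Set<TopicPartition>> recover) {
        try {
            return consumer.poll(timeout);
        } catch (NoOffsetForPartitionException e) {
            // e.partitions() lists every partition that has no committed offset.
            recover.accept(e.partitions());
            return consumer.poll(timeout);
        }
    }
}
```

The callback could, for example, seek to offsets read from an external store, or call seekToBeginning() on just those partitions. If it leaves a partition without a position, the second poll() throws again.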

If a committed offset can be found, "none" causes no exception. If auto.offset.reset is set to any value other than these three, a ConfigException is thrown:

```
org.apache.kafka.common.config.ConfigException: Invalid value any for configuration auto.offset.reset: String must be one of: latest, earliest, none.
```
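Because the value is validated when the configuration is parsed, a bad value can be caught before the consumer is used. The sketch below (class and method names are illustrative) parses a minimal configuration with ConsumerConfig, the same configuration class that KafkaConsumer's constructor builds, and reports whether the value was accepted. The broker address is a placeholder and is never contacted.

```java
import java.util.Properties;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.common.config.ConfigException;
import org.apache.kafka.common.serialization.StringDeserializer;

final class ResetPolicyCheck {

    private ResetPolicyCheck() {
    }

    /** Returns true if the client accepts the given auto.offset.reset value. */
    static boolean isAccepted(String resetPolicy) {
        Properties props = new Properties();
        props.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092"); // placeholder, not contacted
        props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        props.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, resetPolicy);
        try {
            // Parsing validates every value; no network connection is opened.
            new ConsumerConfig(props);
            return true;
        } catch (ConfigException e) {
            return false;
        }
    }
}
```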

Fetching messages is handled by the logic inside the poll() method. That logic is opaque to developers, so they cannot precisely control where consumption starts. The auto.offset.reset parameter only lets us start from the beginning or the end, and only when no committed offset can be found or the offset is out of range. Sometimes we need finer-grained control so that messages are fetched from a specific position, and KafkaConsumer's seek() method provides exactly this, letting us skip ahead or go back and re-consume messages. seek() is defined as follows:

```java
public void seek(TopicPartition partition, long offset)
```

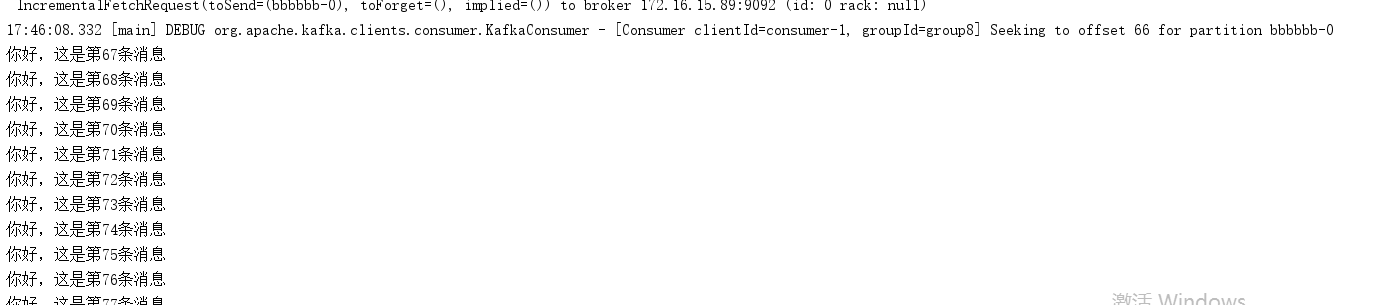

partition specifies the partition, and offset specifies the position in that partition from which consumption starts. seek() can only reset the consumption position of partitions currently assigned to the consumer, and partition assignment happens inside poll(). (For example, if topic A has two partitions and consumer group A1 has two consumers, Kafka's assignment strategy gives each consumer one partition.) In other words, you must call poll() and be assigned partitions before you can call seek() to reset the consumption position. For example:

```java
// Code Listing 12-1: an example of using the seek() method
import java.time.Duration;
import java.util.Arrays;
import java.util.Properties;
import java.util.concurrent.atomic.AtomicBoolean;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.apache.kafka.common.TopicPartition;
import org.apache.kafka.common.serialization.StringDeserializer;

public class SeekDemo {
    public static final String brokerList = "172.16.15.89:9092";
    public static final String topic = "bbbbbb";
    public static final String groupId = "group8";
    public static final AtomicBoolean isRunning = new AtomicBoolean(true);

    public static Properties ininConfig() {
        Properties properties = new Properties();
        properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, brokerList);
        properties.put(ConsumerConfig.GROUP_ID_CONFIG, groupId);
        return properties;
    }

    public static void main(String[] args) {
        Properties properties = ininConfig();
        KafkaConsumer<String, String> consumer = new KafkaConsumer<>(properties);
        // Subscribe to the topic
        consumer.subscribe(Arrays.asList(topic));
        // Call poll() so that partitions get assigned to this consumer
        consumer.poll(Duration.ofMillis(1000));
        // Iterate over the partitions assigned to this consumer
        for (TopicPartition tp : consumer.assignment()) {
            // Start at offset 66: the topic has a single partition and the previous
            // section wrote 100 messages to it, so consumption resumes from offset 66
            consumer.seek(tp, 66);
        }
        try {
            while (isRunning.get()) {
                ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(1000));
                for (ConsumerRecord<String, String> record : records) {
                    System.out.println(record.value());
                }
            }
        } finally {
            consumer.close();
        }
    }
}
```

If the timeout passed to poll() is 0, the method returns immediately, before the partition assignment inside poll() has a chance to complete. The consumer then has no assigned partitions, assignment() returns an empty set, and the loop that calls seek() does nothing. So what timeout is appropriate? Too short may not leave enough time for assignment to finish; too long causes unnecessary waiting. Instead, we can use KafkaConsumer's assignment() method to check whether partitions have actually been assigned:

```java
// Code Listing 12-2: another example of using seek()
// (props holds the consumer configuration, e.g. from ininConfig() in Listing 12-1)
KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);
consumer.subscribe(Arrays.asList(topic));
Set<TopicPartition> assignment = new HashSet<>();
// While the set is empty, keep calling poll() so that partitions get assigned;
// a non-empty set means the assignment has succeeded
while (assignment.isEmpty()) {
    consumer.poll(Duration.ofMillis(100));
    assignment = consumer.assignment();
}
for (TopicPartition tp : assignment) {
    consumer.seek(tp, 10);
}
while (true) {
    ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(1000));
    // consume the records
}
```
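Listing 12-2 loops until partitions arrive, which never ends if the consumer keeps failing to get an assignment. A sketch with an upper bound on the wait (the helper name and the 100 ms poll interval are arbitrary choices, not Kafka defaults):

```java
import java.time.Duration;
import java.util.Set;

import org.apache.kafka.clients.consumer.Consumer;
import org.apache.kafka.common.TopicPartition;

final class AssignmentWait {

    private AssignmentWait() {
    }

    /**
     * Polls until partitions are assigned or maxWait elapses.
     * Returns the assignment; an empty set means the deadline passed first.
     */
    static Set<TopicPartition> awaitAssignment(Consumer<?, ?> consumer, Duration maxWait) {
        long deadline = System.nanoTime() + maxWait.toNanos();
        Set<TopicPartition> assignment = consumer.assignment();
        while (assignment.isEmpty() && System.nanoTime() - deadline < 0) {
            // Any records returned here are dropped; the caller is expected to
            // seek() afterwards, which sets the position explicitly anyway.
            consumer.poll(Duration.ofMillis(100));
            assignment = consumer.assignment();
        }
        return assignment;
    }
}
```

The caller then seeks on the returned partitions as in Listing 12-2, and treats an empty result as a timeout to log or retry.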

Calling seek() on a partition that is not assigned to the consumer throws an IllegalStateException:

```java
consumer.subscribe(Arrays.asList(topic));
// No poll() yet, so no partition is assigned: this throws IllegalStateException
consumer.seek(new TopicPartition(topic, 0), 10);
```
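A defensive variant is to check assignment() first. In this sketch (the helper name is illustrative), seek() is called only for partitions the consumer currently owns, and the caller learns whether it happened instead of getting the IllegalStateException above.

```java
import org.apache.kafka.clients.consumer.Consumer;
import org.apache.kafka.common.TopicPartition;

final class SafeSeek {

    private SafeSeek() {
    }

    /** Seeks only if tp is currently assigned to this consumer; returns whether seek() was called. */
    static boolean seekIfAssigned(Consumer<?, ?> consumer, TopicPartition tp, long offset) {
        if (!consumer.assignment().contains(tp)) {
            return false; // seeking here would throw IllegalStateException
        }
        consumer.seek(tp, offset);
        return true;
    }
}
```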

Consuming from the beginning or from the end

If a consumer in the group can find its committed offset at startup, auto.offset.reset has no effect unless the offset is out of range. To start consuming from the beginning or from the end in this case, use seek(), for example:

```java
// endOffsets() returns each partition's end offset. Note: this is the position
// where the next message will be written, not the offset of the last message.
Map<TopicPartition, Long> offsets = consumer.endOffsets(assignment);
for (TopicPartition tp : assignment) {
    consumer.seek(tp, offsets.get(tp));
}
```

consumer.endOffsets() is defined as follows. The partitions parameter is the collection of partitions to query, and timeout sets how long to wait for the result. If no timeout is given, it is determined by the client parameter request.timeout.ms, whose default value is 30000 ms.

```java
public Map<TopicPartition, Long> endOffsets(Collection<TopicPartition> partitions)
public Map<TopicPartition, Long> endOffsets(Collection<TopicPartition> partitions, Duration timeout)
```

beginningOffsets() is the counterpart of endOffsets(). A partition initially starts at offset 0, but its starting offset does not stay 0: log cleanup deletes old data, so the partition's starting offset increases over time. beginningOffsets() is defined as follows:

```java
public Map<TopicPartition, Long> beginningOffsets(Collection<TopicPartition> partitions)
public Map<TopicPartition, Long> beginningOffsets(Collection<TopicPartition> partitions, Duration timeout)
```

beginningOffsets() takes the same parameters as endOffsets(). Combined with seek(), one lets you consume from the beginning of a partition and the other from its end. In fact, KafkaConsumer provides seekToBeginning() and seekToEnd() to do exactly this; they are defined as follows:

```java
public void seekToBeginning(Collection<TopicPartition> partitions)
public void seekToEnd(Collection<TopicPartition> partitions)
```
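One detail worth knowing: seekToBeginning() and seekToEnd() are lazy. Per the KafkaConsumer Javadoc, the offset lookup happens only when poll() or position() is next called. The sketch below (class and method names are illustrative) calls position() right away so the caller sees the resulting offsets.

```java
import java.util.Collection;
import java.util.HashMap;
import java.util.Map;

import org.apache.kafka.clients.consumer.Consumer;
import org.apache.kafka.common.TopicPartition;

final class SeekToEnds {

    private SeekToEnds() {
    }

    /** Rewinds to the earliest retained offsets and returns the resulting positions. */
    static Map<TopicPartition, Long> rewind(Consumer<?, ?> consumer, Collection<TopicPartition> partitions) {
        consumer.seekToBeginning(partitions);
        return positions(consumer, partitions);
    }

    /** Skips to the end (the next offset to be written) and returns the resulting positions. */
    static Map<TopicPartition, Long> fastForward(Consumer<?, ?> consumer, Collection<TopicPartition> partitions) {
        consumer.seekToEnd(partitions);
        return positions(consumer, partitions);
    }

    // The seekTo*() calls above are lazy; position() forces the offset lookup.
    private static Map<TopicPartition, Long> positions(Consumer<?, ?> consumer, Collection<TopicPartition> partitions) {
        Map<TopicPartition, Long> result = new HashMap<>();
        for (TopicPartition tp : partitions) {
            result.put(tp, consumer.position(tp));
        }
        return result;
    }
}
```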

Consuming from a point in time

Sometimes you don't know the exact offset to start from, but you do know a relevant point in time: for example, you want to consume the messages produced after 8 o'clock yesterday. KafkaConsumer's offsetsForTimes() method supports this. Its timestampsToSearch parameter is a Map whose keys are the partitions to query and whose values are the timestamps to look up. For each partition, the method returns the offset and timestamp of the first message whose timestamp is greater than or equal to the requested timestamp, available through the offset and timestamp fields of OffsetAndTimestamp. If a partition has no such message, its value in the returned map is null.

```java
public Map<TopicPartition, OffsetAndTimestamp> offsetsForTimes(Map<TopicPartition, Long> timestampsToSearch)
public Map<TopicPartition, OffsetAndTimestamp> offsetsForTimes(Map<TopicPartition, Long> timestampsToSearch, Duration timeout)
```

The following example shows how offsetsForTimes() and seek() work together: offsetsForTimes() first looks up the offsets corresponding to one day ago, and seek() then rewinds to those offsets so that consumption starts there.

```java
Properties properties = ininConfig();
KafkaConsumer<String, String> consumer = new KafkaConsumer<>(properties);
// Subscribe to the topic
consumer.subscribe(Arrays.asList(topic));
// Call poll() so that partitions get assigned
consumer.poll(Duration.ofMillis(1000));
Map<TopicPartition, Long> timestampToSearch = new HashMap<>();
// For every assigned partition, search for the time exactly one day ago
for (TopicPartition tp1 : consumer.assignment()) {
    timestampToSearch.put(tp1, System.currentTimeMillis() - 24L * 3600 * 1000);
}
// For each partition, find the first offset whose timestamp is >= the target
Map<TopicPartition, OffsetAndTimestamp> offsets = consumer.offsetsForTimes(timestampToSearch);
// Iterate over the assigned partitions again
for (TopicPartition tp : consumer.assignment()) {
    OffsetAndTimestamp offsetAndTimestamp = offsets.get(tp);
    // null means no message in this partition has a timestamp >= the target
    if (offsetAndTimestamp != null) {
        // Set the consumption position with seek()
        consumer.seek(tp, offsetAndTimestamp.offset());
    }
}
while (isRunning.get()) {
    ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(1000));
    for (ConsumerRecord<String, String> record : records) {
        System.out.println(record.value());
    }
}
consumer.close();
```
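The listing above searches for "now minus 24 hours". To target a wall-clock time such as 8 o'clock yesterday, the timestamp can be computed with java.time. The sketch below (names are illustrative) does that and seeks each assigned partition. Unlike the listing, it moves partitions for which offsetsForTimes() returns null to the end; that is a design choice of this sketch, not Kafka behavior.

```java
import java.time.Clock;
import java.time.LocalDate;
import java.time.LocalTime;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

import org.apache.kafka.clients.consumer.Consumer;
import org.apache.kafka.clients.consumer.OffsetAndTimestamp;
import org.apache.kafka.common.TopicPartition;

final class SeekToTime {

    private SeekToTime() {
    }

    /** Epoch milliseconds of hour:00 yesterday, in the clock's time zone. */
    static long yesterdayAt(int hour, Clock clock) {
        LocalDate yesterday = LocalDate.now(clock).minusDays(1);
        return yesterday.atTime(LocalTime.of(hour, 0))
                .atZone(clock.getZone())
                .toInstant()
                .toEpochMilli();
    }

    /** Seeks every assigned partition to the first offset whose timestamp is >= timestampMs. */
    static void seekToTimestamp(Consumer<?, ?> consumer, long timestampMs) {
        Map<TopicPartition, Long> query = new HashMap<>();
        for (TopicPartition tp : consumer.assignment()) {
            query.put(tp, timestampMs);
        }
        Map<TopicPartition, OffsetAndTimestamp> found = consumer.offsetsForTimes(query);
        for (TopicPartition tp : query.keySet()) {
            OffsetAndTimestamp hit = found.get(tp);
            if (hit != null) {
                consumer.seek(tp, hit.offset());
            } else {
                // No message at or after timestampMs yet: wait for the next one written.
                consumer.seekToEnd(List.of(tp));
            }
        }
    }
}
```

After partitions are assigned, a call such as `SeekToTime.seekToTimestamp(consumer, SeekToTime.yesterdayAt(8, Clock.systemDefaultZone()))` replaces the two loops in the listing.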

Out-of-range offsets

**An out-of-range offset means that the consumer knows the position it wants to consume from, but that position cannot be found in the actual partition. For example, fetching messages from offset 10 in the figure above is out of range.** Note that fetching from offset 9 is not out of range, because 9 is the position where the next message will be written. Suppose a partition holds 100 messages, so the largest offset in the partition is 99. Using seek() to request offset 120 is then out of range, and with the default value of auto.offset.reset ("latest") the fetch position is reset to 100, the position right after that last message.
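If you prefer not to rely on auto.offset.reset when a seek() target may be stale, one option is to check the target against the partition's current range first. This sketch (names are illustrative) clamps the target into [beginning offset, end offset]: in the example above, 120 becomes 100, and a target below the log start becomes the log start. This differs from auto.offset.reset, which applies its single policy (earliest or latest) regardless of which side of the range the offset falls on.

```java
import java.util.List;

import org.apache.kafka.clients.consumer.Consumer;
import org.apache.kafka.common.TopicPartition;

final class BoundedSeek {

    private BoundedSeek() {
    }

    /** Clamps target into [beginning, end], where end is the next offset to be written. */
    static long clamp(long target, long beginning, long end) {
        return Math.max(beginning, Math.min(target, end));
    }

    /** Seeks to target clamped to the partition's current range and returns the offset used. */
    static long seekWithinRange(Consumer<?, ?> consumer, TopicPartition tp, long target) {
        List<TopicPartition> one = List.of(tp);
        long beginning = consumer.beginningOffsets(one).get(tp);
        long end = consumer.endOffsets(one).get(tp);
        long offset = clamp(target, beginning, end);
        consumer.seek(tp, offset);
        return offset;
    }
}
```

The range can still move between the lookup and the next fetch (log cleanup runs independently), so auto.offset.reset remains the final safeguard.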

The previous section mentioned that Kafka stores consumer offsets in an internal topic. The seek() method described in this section lifts that restriction: offsets can be kept in any storage medium, such as a database or a file system. Taking a database as an example, we save the offsets in a table; the next time we consume, we read the stored offset from the table and use seek() to jump to that position.

```java
// Code Listing 12-4: storing consumer offsets in a database
// (getOffsetFromDB() and storeOffsetToDB() stand for your own DB access code)
consumer.subscribe(Arrays.asList(topic));
// poll() and the partition-assignment logic are omitted here
for (TopicPartition tp : assignment) {
    long offset = getOffsetFromDB(tp); // read the stored offset from the DB
    consumer.seek(tp, offset);
}
while (true) {
    // Poll for messages
    ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(1000));
    // Iterate over the partitions present in this batch
    for (TopicPartition partition : records.partitions()) {
        // Get this partition's messages
        List<ConsumerRecord<String, String>> partitionRecords = records.records(partition);
        // Process the messages
        for (ConsumerRecord<String, String> record : partitionRecords) {
            // process the record.
        }
        // After processing, take the offset of the last message in this partition's batch
        long lastConsumedOffset = partitionRecords.get(partitionRecords.size() - 1).offset();
        // Store the position of the next message to fetch in the DB
        storeOffsetToDB(partition, lastConsumedOffset + 1);
    }
}
```
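Listing 12-4 leaves getOffsetFromDB() and storeOffsetToDB() undefined. As a stand-in, here is a hypothetical file-backed store with the same contract: it keeps the next offset to read for each partition and reports when nothing has been stored yet, which is the case where you would fall back to auto.offset.reset or seekToBeginning(). It is a sketch only: the file is rewritten in place on every store() call, so it is not crash-safe.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.Writer;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.OptionalLong;
import java.util.Properties;

/** Hypothetical store mapping "topic-partition" to the next offset to read. */
final class FileOffsetStore {
    private final Path file;

    FileOffsetStore(Path file) {
        this.file = file;
    }

    /** Returns the stored next offset, or empty if nothing was stored for this partition. */
    synchronized OptionalLong load(String topic, int partition) throws IOException {
        String value = readAll().getProperty(key(topic, partition));
        return value == null ? OptionalLong.empty() : OptionalLong.of(Long.parseLong(value));
    }

    /** Stores nextOffset (last processed offset + 1) for the partition. */
    synchronized void store(String topic, int partition, long nextOffset) throws IOException {
        Properties props = readAll();
        props.setProperty(key(topic, partition), Long.toString(nextOffset));
        try (Writer out = Files.newBufferedWriter(file)) {
            props.store(out, "consumer offsets");
        }
    }

    private Properties readAll() throws IOException {
        Properties props = new Properties();
        if (Files.exists(file)) {
            try (Reader in = Files.newBufferedReader(file)) {
                props.load(in);
            }
        }
        return props;
    }

    private static String key(String topic, int partition) {
        return topic + "-" + partition;
    }
}
```

With it, the loop in Listing 12-4 would call `store.load(tp.topic(), tp.partition())` before seek() and `store.store(partition.topic(), partition.partition(), lastConsumedOffset + 1)` after processing a partition's batch.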

seek() lets us read messages from a specific position: we can use it to skip forward past some messages or to go back and re-read earlier ones, which makes message consumption very flexible. seek() also makes it possible to store consumer offsets in an external storage medium, and combined with a rebalance listener (ConsumerRebalanceListener) it allows even more precise control over consumption.
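The pairing with a rebalance listener can be sketched as follows, under these assumptions: offsets live in some external store, represented here by a plain Map (hypothetical), and seeking happens in ConsumerRebalanceListener.onPartitionsAssigned(), which the client invokes inside poll() after assignment completes and before fetching starts. This removes the need for the "poll until assigned" loop of Listing 12-2.

```java
import java.util.Collection;
import java.util.Map;

import org.apache.kafka.clients.consumer.Consumer;
import org.apache.kafka.clients.consumer.ConsumerRebalanceListener;
import org.apache.kafka.common.TopicPartition;

/** Seeks newly assigned partitions to offsets kept outside Kafka (storedOffsets is hypothetical). */
final class SeekOnAssign implements ConsumerRebalanceListener {
    private final Consumer<?, ?> consumer;
    private final Map<TopicPartition, Long> storedOffsets;

    SeekOnAssign(Consumer<?, ?> consumer, Map<TopicPartition, Long> storedOffsets) {
        this.consumer = consumer;
        this.storedOffsets = storedOffsets;
    }

    @Override
    public void onPartitionsRevoked(Collection<TopicPartition> partitions) {
        // A real implementation would persist the latest processed offsets here.
    }

    @Override
    public void onPartitionsAssigned(Collection<TopicPartition> partitions) {
        for (TopicPartition tp : partitions) {
            Long next = storedOffsets.get(tp);
            if (next != null) {
                consumer.seek(tp, next);
            }
            // Partitions without a stored offset keep the committed offset / auto.offset.reset behavior.
        }
    }
}
```

It is registered at subscription time, for example `consumer.subscribe(Arrays.asList(topic), new SeekOnAssign(consumer, offsets));`.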