Detailed analysis of the MyBatis cache

1, Cache architecture

The purpose of caching is to make queries faster and reduce the load on the database. MyBatis provides a level 1 (L1) cache and a level 2 (L2) cache, and reserves an interface for integrating third-party caches. All cache-related classes in MyBatis live in the cache package. There is one Cache interface with a single default implementation class, PerpetualCache, plus a number of decorators. These decorators add extra functions such as eviction policies, logging and scheduled flushing.

All cache implementation classes fall into three categories: the basic cache, eviction-algorithm caches and decorator caches.

| Cache implementation class | Description | Effect | Decoration condition |
|---|---|---|---|
| Basic cache | Basic cache implementation | The default is PerpetualCache. You can also plug in custom cache classes with basic functions, such as RedisCache and EhCache | None |
| LruCache | Cache with an LRU policy | When the cache reaches its upper limit, the least recently used entry is removed | eviction="LRU" (default) |
| FifoCache | Cache with a FIFO policy | When the cache reaches its upper limit, the entry that was added first is removed | eviction="FIFO" |
| SoftCache | Cache with a cleanup policy | Values are held through JVM soft references (SoftReference), so the garbage collector can reclaim them when memory runs low | eviction="SOFT" |
| WeakCache | Cache with a cleanup policy | Values are held through JVM weak references (WeakReference), so the garbage collector can reclaim them more eagerly | eviction="WEAK" |
| LoggingCache | Cache with logging | For example, outputs the cache hit ratio | Basic |
| SynchronizedCache | Synchronized cache | Uses the synchronized keyword to address concurrency problems | Basic |
| BlockingCache | Blocking cache | Locks around get/put so that only one thread at a time operates on the cache; implemented with Java's reentrant lock | blocking=true |
| SerializedCache | Cache with serialization support | The object is serialized before it is stored and deserialized when it is read | readOnly=false (default) |
| ScheduledCache | Scheduled cache | Before get/put/remove/getSize and similar operations, checks whether the time since the last clear exceeds the configured interval (one hour by default) and, if so, empties the cache. In other words, the cache is emptied at regular intervals | flushInterval is set |
| TransactionalCache | Transactional cache | Used by the L2 cache; it can stage multiple entries and write or remove them in one batch | Tracked per cache in a Map inside TransactionalCacheManager |
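The decorator idea in the table can be illustrated with a minimal sketch. This is not MyBatis source code: `SimpleCache`, `MapCache` and `LruDecorator` are hypothetical names, and the interface is a cut-down stand-in for the real Cache interface. It only shows how an LRU decorator can wrap a basic map-backed cache without changing it.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical, cut-down cache contract (the real Cache interface has more methods).
interface SimpleCache {
    void put(Object key, Object value);
    Object get(Object key);
    Object remove(Object key);
    int size();
}

// Basic cache: a plain HashMap with no eviction, in the spirit of PerpetualCache.
class MapCache implements SimpleCache {
    private final Map<Object, Object> store = new HashMap<>();
    public void put(Object key, Object value) { store.put(key, value); }
    public Object get(Object key) { return store.get(key); }
    public Object remove(Object key) { return store.remove(key); }
    public int size() { return store.size(); }
}

// Decorator: tracks key access order and evicts the least recently used key.
class LruDecorator implements SimpleCache {
    private final SimpleCache delegate;
    private final Map<Object, Object> keyOrder;
    private Object eldestKey;

    LruDecorator(SimpleCache delegate, final int capacity) {
        this.delegate = delegate;
        // accessOrder=true makes LinkedHashMap move an entry to the end on every get().
        this.keyOrder = new LinkedHashMap<Object, Object>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<Object, Object> eldest) {
                boolean overflow = size() > capacity;
                if (overflow) {
                    eldestKey = eldest.getKey(); // remember which key to drop from the delegate
                }
                return overflow;
            }
        };
    }

    public void put(Object key, Object value) {
        delegate.put(key, value);
        keyOrder.put(key, key);
        if (eldestKey != null) {
            delegate.remove(eldestKey);
            eldestKey = null;
        }
    }

    public Object get(Object key) {
        keyOrder.get(key); // touch the key so it counts as recently used
        return delegate.get(key);
    }

    public Object remove(Object key) {
        keyOrder.remove(key);
        return delegate.remove(key);
    }

    public int size() { return delegate.size(); }
}
```

With `new LruDecorator(new MapCache(), 2)`, putting a third entry drops whichever of the first two was read least recently. Other decorators (logging, scheduling, serialization) can be stacked the same way because each one only depends on the shared interface.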

2, Data preparation

3, L1 cache (local cache)

The L1 cache is also called the local cache. It is scoped to the session, is enabled by default and requires no configuration. Since the L1 cache is session level, which object should hold it? DefaultSqlSession essentially works with two collaborators, Configuration and Executor. Configuration is global, so the cache can only be maintained in the Executor: BaseExecutor, the abstract implementation of Executor, holds a PerpetualCache. If the same SQL statement is executed multiple times in the same session, the cached result is fetched directly from memory and no SQL is sent to the database. However, if exactly the same SQL (the same Mapper method with the same parameters) is executed in different sessions, the L1 cache cannot be shared between them.

Next, write a demo to verify the L1 cache. The L2 cache switch (cacheEnabled) is on by default, so turn it off first. localCacheScope can be set to SESSION explicitly or left out, because SESSION is the default and caches all queries executed in a session.
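The session-level behaviour described above can be sketched in plain Java. This is a simplified model, not MyBatis code: `SessionSketch` is a hypothetical class, the `String` key stands in for MyBatis's CacheKey (built from the statement id, the SQL, the parameters and so on), and the "database" is just a function passed in by the caller.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical stand-in for a session whose executor owns a local (L1) cache.
class SessionSketch {
    private final Map<String, Object> localCache = new HashMap<>();
    private final Function<String, Object> database;
    int databaseHits = 0; // counts how often the "database" was really queried

    SessionSketch(Function<String, Object> database) {
        this.database = database;
    }

    Object query(String key) {
        if (localCache.containsKey(key)) {
            return localCache.get(key); // served from this session's own cache
        }
        databaseHits++;
        Object row = database.apply(key);
        localCache.put(key, row);
        return row;
    }

    // Any write in this session clears this session's cache, and only this one.
    void update() {
        localCache.clear();
    }
}
```

Two `SessionSketch` instances never see each other's map, which is exactly why a second session has to query the database again and why an update in one session cannot invalidate the cache of another.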

<settings>

<!-- Print query statement -->

<setting name="logImpl" value="STDOUT_LOGGING" />

<!-- Control global cache (L2 cache)-->

<setting name="cacheEnabled" value="false"/>

<!-- L1 cache scope; SESSION caches all queries of a session -->

<setting name="localCacheScope" value="SESSION"/>

</settings>

1. Verify the same session and cross session queries

/**

* @Description: Tests whether the L1 cache caches a session query

* @Author zdp

* @Date 2022-01-05 14:09

*/

@Test

public void testFirstLevelCache() throws IOException {

String resource = "mybatis-config.xml";

InputStream inputStream = Resources.getResourceAsStream(resource);

SqlSessionFactory sqlSessionFactory = new SqlSessionFactoryBuilder().build(inputStream);

SqlSession session1 = sqlSessionFactory.openSession();

SqlSession session2 = sqlSessionFactory.openSession();

try {

BlogMapper mapper0 = session1.getMapper(BlogMapper.class);

BlogMapper mapper1 = session1.getMapper(BlogMapper.class);

Blog blog = mapper0.selectBlogById(1);

System.out.println("First query====================================");

System.out.println(blog);

System.out.println("Second query, same session===========================");

System.out.println(mapper1.selectBlogById(1));

System.out.println("Third query, different sessions===========================");

BlogMapper mapper2 = session2.getMapper(BlogMapper.class);

System.out.println(mapper2.selectBlogById(1));

} finally {

session1.close();

session2.close();

}

}

Result

The console output shows that the first query prints the executed SQL, while the second execution of the same SQL in the same session is served from the cache. The third query, issued from a different session, goes to the database again. This verifies that when localCacheScope is SESSION, MyBatis caches the queries of a session and does not share them across sessions.

2. Verify the effect of updating queried data in the same session

/**

* @Description: Update the queried data in the same session

* @Author zdp

* @Date 2022-01-05 14:22

*/

@Test

public void testCacheInvalid() throws IOException {

String resource = "mybatis-config.xml";

InputStream inputStream = Resources.getResourceAsStream(resource);

SqlSessionFactory sqlSessionFactory = new SqlSessionFactoryBuilder().build(inputStream);

SqlSession session = sqlSessionFactory.openSession();

try {

BlogMapper mapper = session.getMapper(BlogMapper.class);

System.out.println(mapper.selectBlogById(1));

Blog blog = new Blog();

blog.setBid(1);

blog.setName("Update data for the same session");

mapper.updateByPrimaryKey(blog);

session.commit();

System.out.println("Does the cache hit after the update operation?");

System.out.println(mapper.selectBlogById(1));

} finally {

session.close();

}

}

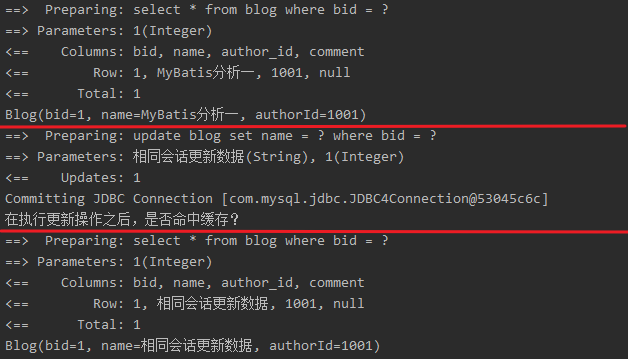

Result

The console output shows that the update operation invalidates the L1 cache, so the query after the update goes to the database again.

3. Verify that the update operation is performed across sessions

/**

* @Description: Update data across sessions

* @Author zdp

* @Date 2022-01-05 14:27

*/

@Test

public void testDirtyRead() throws IOException {

String resource = "mybatis-config.xml";

InputStream inputStream = Resources.getResourceAsStream(resource);

SqlSessionFactory sqlSessionFactory = new SqlSessionFactoryBuilder().build(inputStream);

SqlSession session1 = sqlSessionFactory.openSession();

SqlSession session2 = sqlSessionFactory.openSession();

try {

BlogMapper mapper1 = session1.getMapper(BlogMapper.class);

System.out.println(mapper1.selectBlogById(1));

// Session 2 updates the data; only session 2's own L1 cache is cleared

Blog blog = new Blog();

blog.setBid(1);

blog.setName("after modified 112233445566");

BlogMapper mapper2 = session2.getMapper(BlogMapper.class);

mapper2.updateByPrimaryKey(blog);

session2.commit();

// Other sessions have updated data. Is the L1 cache of this session still there?

System.out.println("Did session 1 find the latest data?");

System.out.println(mapper1.selectBlogById(1));

} finally {

session1.close();

session2.close();

}

}

Result

The console output shows that the update in session 2 does not invalidate session 1's L1 cache, so session 1 reads stale data. This is the weakness of the L1 cache: because it cannot be shared across sessions, different sessions may hold different cached copies of the same data. In a multi-session or distributed environment this can lead to dirty data. Next, let's see how the L2 cache solves this problem.

4, L2 cache

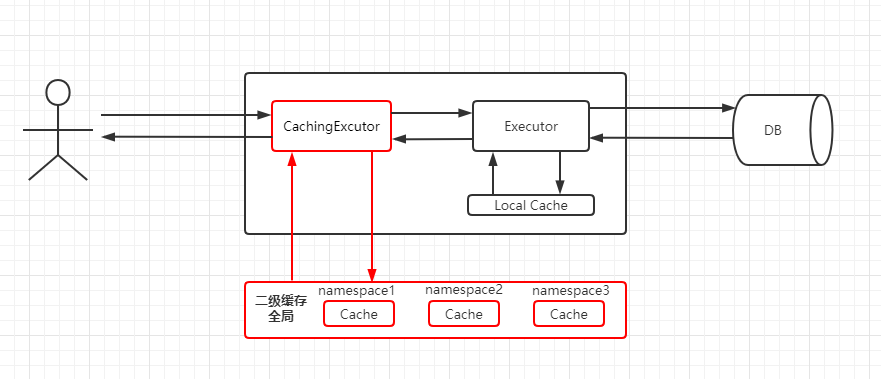

The L2 cache solves the problem that the L1 cache cannot be shared across sessions. Its scope is the namespace, so it can be shared by multiple SqlSessions (as long as they call the same method of the same interface), and its life cycle matches that of the application.

Stop here and think: does the L2 cache work before or after the L1 cache? Where is the L2 cache maintained?

The L1 cache is maintained in the BaseExecutor inside the SqlSession. To share a cache across sessions, BaseExecutor is clearly no longer enough, and the L2 cache has to be maintained outside the SqlSession, so it should work before the L1 cache. MyBatis uses a decorator class, CachingExecutor, to maintain it. If the L2 cache is enabled, MyBatis decorates the Executor when creating it. For a query, CachingExecutor checks whether the L2 cache holds a result. If so, it is returned directly. If not, the query is delegated to the real Executor implementation, such as SimpleExecutor, which then goes through the L1 cache flow. Finally, the result is cached and returned to the user.
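The lookup order described above (L2 first, then the delegate with its own L1 cache) can be sketched as follows. All names here are hypothetical, and one simplification is deliberate: the sketch writes to the shared cache immediately, whereas MyBatis stages the write until the transaction is committed.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical minimal executor contract for this sketch.
interface ExecutorSketch {
    Object query(String key);
}

// Stand-in for a per-session executor with its own local (L1) cache.
class BaseExecutorSketch implements ExecutorSketch {
    private final Map<String, Object> localCache = new HashMap<>();
    int databaseHits = 0;

    public Object query(String key) {
        Object cached = localCache.get(key);
        if (cached != null) {
            return cached;
        }
        databaseHits++;              // pretend this is the real database round trip
        Object row = "row:" + key;
        localCache.put(key, row);
        return row;
    }
}

// Decorator that consults a cache shared by all sessions before delegating.
class CachingExecutorSketch implements ExecutorSketch {
    private final ExecutorSketch delegate;
    private final Map<String, Object> sharedCache;

    CachingExecutorSketch(ExecutorSketch delegate, Map<String, Object> sharedCache) {
        this.delegate = delegate;
        this.sharedCache = sharedCache;
    }

    public Object query(String key) {
        Object cached = sharedCache.get(key);
        if (cached != null) {
            return cached;                    // L2 hit: the delegate is never called
        }
        Object result = delegate.query(key);  // L2 miss: fall through to the L1 flow
        sharedCache.put(key, result);         // simplification: no commit step here
        return result;
    }
}
```

Wrapping two separate `BaseExecutorSketch` instances around the same shared map shows the effect: the second session's query is answered by the shared cache and its own executor never touches the database.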

To enable the L2 cache, set cacheEnabled to true or leave it unset; it is true by default in MyBatis

<setting name="cacheEnabled" value="true"/>

How MyBatis parses cacheEnabled

private void settingsElement(Properties props) {

//If it is not set, the default value is true

configuration.setCacheEnabled(booleanValueOf(props.getProperty("cacheEnabled"), true));

//....

}

cacheEnabled determines whether a CachingExecutor is created

public Executor newExecutor(Transaction transaction, ExecutorType executorType) {

executorType = executorType == null ? defaultExecutorType : executorType;

//......

if (cacheEnabled) {

executor = new CachingExecutor(executor);

}

//......

}

Configure the <cache/> tag in Mapper.xml

<cache type="org.apache.ibatis.cache.impl.PerpetualCache"

size="1024"

eviction="LRU"

flushInterval="120000"

readOnly="false"/>

Explanation of the cache attributes:

| Attribute | Meaning | Value |
|---|---|---|
| type | Cache implementation class | Must implement the Cache interface. The default is PerpetualCache |
| size | Maximum number of cached objects | Default 1024 |
| eviction | Eviction policy (cache eviction algorithm) | LRU (least recently used): removes the objects that have gone unused the longest (default). FIFO (first in, first out): removes objects in the order they entered the cache. SOFT (soft reference): removes objects based on garbage collector state and soft reference rules. WEAK (weak reference): removes objects more aggressively, based on garbage collector state and weak reference rules |
| flushInterval | Interval at which the cache is emptied automatically | In milliseconds. If it is not configured, the cache is flushed only when a statement that flushes it is called |
| readOnly | Read only | true: read-only cache; the same instance of the cached object is returned to all callers, so these objects must not be modified. This offers a significant performance advantage. false: read/write cache; a copy of the cached object is returned (through serialization) and is not shared. This is slower but safer, so the default is false. With false, the cached objects must support serialization |
| blocking | Whether to use a reentrant lock for cache concurrency control | If true, the Cache is decorated with BlockingCache. The default is false |
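The readOnly=false behaviour (each caller gets its own copy through serialization) can be sketched with standard JDK serialization. `SerializedCopySketch` is a hypothetical helper written for this article, not a MyBatis class; it only demonstrates why the cached objects must implement Serializable and why callers cannot affect each other.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;

// Hypothetical helper: store bytes in the cache, hand out a fresh copy on every read.
class SerializedCopySketch {

    // Called on "put": the object is turned into bytes before it is stored.
    static byte[] serialize(Serializable value) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(value);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    // Called on "get": every call builds a new object from the stored bytes.
    static Object deserialize(byte[] stored) {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(stored))) {
            return in.readObject();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

Deserializing the same bytes twice yields two equal but distinct objects, so a caller that modifies its copy leaves the cached data and other callers untouched. The price is the serialization cost on every read, which is the trade-off the readOnly row describes.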

Once the <cache/> tag is configured in Mapper.xml, select() results are cached, and update(), delete() and insert() flush the cache. As long as cacheEnabled=true, the base executor is decorated with CachingExecutor. Whether <cache/> is configured determines whether the mapper's Cache object is created at startup, which in turn affects the check in CachingExecutor's query method. If a query needs real-time data and should bypass the L2 cache, the L2 cache can be switched off explicitly on a single statement through useCache (true by default for select):

<select id="selectBlog" resultMap="BaseResultMap" useCache="false">

1. L2 cache validation

Now that the basic usage of the L2 cache is clear, write an L2 cache test demo:

(1). Verify that cross session queries are cached

/**

* @Description: Testing L2 cache cross session queries

* @Author zdp

* @Date 2022-01-06 10:44

*/

@Test

public void testSecondCache() throws IOException {

String resource = "mybatis-config.xml";

InputStream inputStream = Resources.getResourceAsStream(resource);

SqlSessionFactory sqlSessionFactory = new SqlSessionFactoryBuilder().build(inputStream);

SqlSession session1 = sqlSessionFactory.openSession();

SqlSession session2 = sqlSessionFactory.openSession();

try {

BlogMapper mapper1 = session1.getMapper(BlogMapper.class);

System.err.println(mapper1.selectBlogById(1));

//The transaction commit here is very important. If the transaction is not committed, the L2 cache will not take effect

session1.commit();

System.err.println("Second query across sessions");

BlogMapper mapper2 = session2.getMapper(BlogMapper.class);

System.err.println(mapper2.selectBlogById(1));

} finally {

session1.close();

session2.close();

}

}

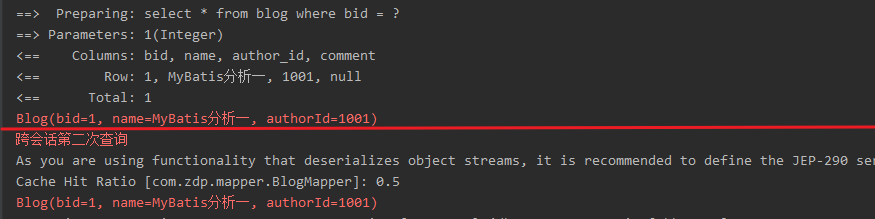

Result

If the transaction above is not committed, session2 cannot use the L2 cache. The L2 cache is managed by TransactionalCacheManager (TCM), which ends up calling getObject(), putObject() and commit() on TransactionalCache. putObject() only stages an entry; flushPendingEntries() is what actually writes it to the cache, and it runs only when TransactionalCache's commit() method is called. That in turn happens when DefaultSqlSession calls commit().
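The staging behaviour can be modelled in a few lines. This is a simplified sketch rather than the real TransactionalCache: `TransactionalCacheSketch` is a hypothetical class, and the shared cache is a plain Map supplied by the caller.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of a per-session view over a shared (L2) cache.
class TransactionalCacheSketch {
    private final Map<Object, Object> sharedCache;
    private final Map<Object, Object> entriesToAddOnCommit = new HashMap<>();
    private boolean clearOnCommit = false;

    TransactionalCacheSketch(Map<Object, Object> sharedCache) {
        this.sharedCache = sharedCache;
    }

    // Reads go to the shared cache, unless this session has already asked for a clear.
    Object get(Object key) {
        return clearOnCommit ? null : sharedCache.get(key);
    }

    // Writes are only staged; other sessions cannot see them yet.
    void put(Object key, Object value) {
        entriesToAddOnCommit.put(key, value);
    }

    // A clear request is also staged until commit.
    void clear() {
        clearOnCommit = true;
        entriesToAddOnCommit.clear();
    }

    // Commit applies the staged clear and the staged entries to the shared cache.
    void commit() {
        if (clearOnCommit) {
            sharedCache.clear();
        }
        sharedCache.putAll(entriesToAddOnCommit);
        reset();
    }

    // Rollback throws the staged work away.
    void rollback() {
        reset();
    }

    private void reset() {
        clearOnCommit = false;
        entriesToAddOnCommit.clear();
    }
}
```

In this model, a value passed to `put` stays invisible in the shared map until `commit()` runs, which mirrors why the test above needs `session1.commit()` before session2 can hit the L2 cache.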

(2). Verify that the L2 cache is invalidated when performing update operations across sessions

/**

* @Description: Whether the L2 cache is invalidated when performing update operations across sessions

* @Author zdp

* @Date 2022-01-06 11:15

*/

@Test

public void testCacheInvalid() throws IOException {

String resource = "mybatis-config.xml";

InputStream inputStream = Resources.getResourceAsStream(resource);

SqlSessionFactory sqlSessionFactory = new SqlSessionFactoryBuilder().build(inputStream);

SqlSession session1 = sqlSessionFactory.openSession();

SqlSession session2 = sqlSessionFactory.openSession();

SqlSession session3 = sqlSessionFactory.openSession();

try {

BlogMapper mapper1 = session1.getMapper(BlogMapper.class);

BlogMapper mapper2 = session2.getMapper(BlogMapper.class);

BlogMapper mapper3 = session3.getMapper(BlogMapper.class);

System.out.println(mapper1.selectBlogById(1));

session1.commit();

// Whether to hit L2 cache

System.out.println("Hit L2 cache?");

System.out.println(mapper2.selectBlogById(1));

Blog blog = new Blog();

blog.setBid(1);

blog.setName("L2 cache update test..............................");

mapper3.updateByPrimaryKey(blog);

session3.commit();

System.out.println("Query again after the update. Do you hit the L2 cache?");

// If the update operation is performed in another session, is the L2 cache emptied?

System.out.println(mapper2.selectBlogById(1));

} finally {

session1.close();

session2.close();

session3.close();

}

}

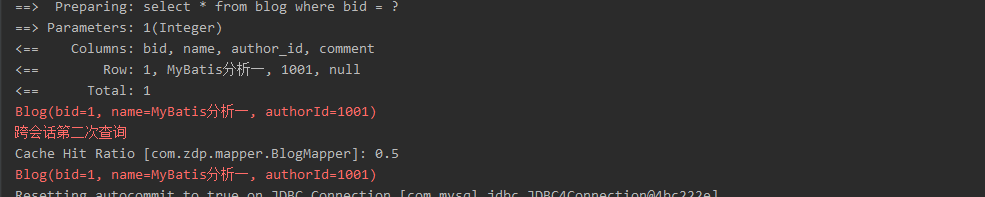

Result

The output shows that before session3 performs the update, session2 gets the data from the cache, so the L2 cache is in effect. After the update is committed, session2 queries again; the L2 cache has been invalidated and the data is read from the database. This verifies that the L2 cache makes up for the weakness of the L1 cache: it avoids the dirty data caused by cross-session operations.

2. Why do inserts, deletes and updates invalidate the L2 cache

After the L2 cache is enabled, all inserts, deletes and updates go through CachingExecutor. We can start directly from the entry point of method execution, the invoke method of the MapperProxy class.

@Override

public Object invoke(Object proxy, Method method, Object[] args) throws Throwable {

try {

if (Object.class.equals(method.getDeclaringClass())) {

return method.invoke(this, args);

} else {

return cachedInvoker(method).invoke(proxy, method, args, sqlSession);

}

} catch (Throwable t) {

throw ExceptionUtil.unwrapThrowable(t);

}

}

Here, the MapperMethodInvoker is first looked up in the methodCache map, with the Method as the key. If none is found, the lambda m -> {...} runs, creates a MapperMethodInvoker and stores it in the map.

private MapperMethodInvoker cachedInvoker(Method method) throws Throwable {

try {

return MapUtil.computeIfAbsent(methodCache, method, m -> {

//Determine whether it is the default method

if (m.isDefault()) {

try {

if (privateLookupInMethod == null) {

return new DefaultMethodInvoker(getMethodHandleJava8(method));

} else {

return new DefaultMethodInvoker(getMethodHandleJava9(method));

}

} catch (IllegalAccessException | InstantiationException | InvocationTargetException

| NoSuchMethodException e) {

throw new RuntimeException(e);

}

} else {

//Common method

return new PlainMethodInvoker(new MapperMethod(mapperInterface, method, sqlSession.getConfiguration()));

}

});

} catch (RuntimeException re) {

Throwable cause = re.getCause();

throw cause == null ? re : cause;

}

}
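The caching idea inside cachedInvoker can be isolated in a small sketch. It uses the JDK's own `ConcurrentHashMap.computeIfAbsent` instead of MyBatis's MapUtil helper, and `InvokerCacheSketch` and its nested `Invoker` interface are hypothetical names introduced only for illustration.

```java
import java.lang.reflect.Method;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch: build one invoker per Method and reuse it afterwards.
class InvokerCacheSketch {

    interface Invoker {
        String describe();
    }

    private final Map<Method, Invoker> methodCache = new ConcurrentHashMap<>();
    final AtomicInteger created = new AtomicInteger(); // how many invokers were built

    Invoker cachedInvoker(Method method) {
        // The lambda runs only when the map has no entry for this Method yet.
        return methodCache.computeIfAbsent(method, m -> {
            created.incrementAndGet();
            // Interface default methods and ordinary methods get different invokers.
            String kind = m.isDefault() ? "default" : "plain";
            return () -> kind + ":" + m.getName();
        });
    }
}
```

Calling `cachedInvoker` twice with the same Method returns the same Invoker instance and increments `created` only once, so the relatively expensive setup work happens once per mapper method.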

Here we will go to PlainMethodInvoker and call its invoke method

private static class PlainMethodInvoker implements MapperMethodInvoker {

private final MapperMethod mapperMethod;

public PlainMethodInvoker(MapperMethod mapperMethod) {

super();

this.mapperMethod = mapperMethod;

}

@Override

public Object invoke(Object proxy, Method method, Object[] args, SqlSession sqlSession) throws Throwable {

return mapperMethod.execute(sqlSession, args);

}

}

In the execute method, we only look at the INSERT, UPDATE and DELETE branches

public Object execute(SqlSession sqlSession, Object[] args) {

//....

switch (command.getType()) {

case INSERT: {

Object param = method.convertArgsToSqlCommandParam(args);

result = rowCountResult(sqlSession.insert(command.getName(), param));

break;

}

case UPDATE: {

Object param = method.convertArgsToSqlCommandParam(args);

result = rowCountResult(sqlSession.update(command.getName(), param));

break;

}

case DELETE: {

Object param = method.convertArgsToSqlCommandParam(args);

result = rowCountResult(sqlSession.delete(command.getName(), param));

break;

}

//....

}

}

Let's move on to sqlSession.insert(command.getName(), param), sqlSession.update(command.getName(), param) and sqlSession.delete(command.getName(), param):

@Override

public int insert(String statement, Object parameter) {

return update(statement, parameter);

}

@Override

public int delete(String statement, Object parameter) {

return update(statement, parameter);

}

@Override

public int update(String statement, Object parameter) {

try {

dirty = true;

MappedStatement ms = configuration.getMappedStatement(statement);

return executor.update(ms, wrapCollection(parameter));

} catch (Exception e) {

throw ExceptionFactory.wrapException("Error updating database. Cause: " + e, e);

} finally {

ErrorContext.instance().reset();

}

}

This shows that insert, delete and update all end up calling update(String statement, Object parameter). Because the executor is a CachingExecutor, executor.update calls flushCacheIfRequired(ms) before delegating:

public class CachingExecutor implements Executor {

//......

@Override

public int update(MappedStatement ms, Object parameterObject) throws SQLException {

flushCacheIfRequired(ms);

return delegate.update(ms, parameterObject);

}

//......

}

public class CachingExecutor implements Executor {

private void flushCacheIfRequired(MappedStatement ms) {

Cache cache = ms.getCache();

//isFlushCacheRequired() returns the value of the flushCache attribute from the Mapper.xml mapping file. For update, insert and delete, flushCache defaults to true

if (cache != null && ms.isFlushCacheRequired()) {

tcm.clear(cache);

}

}

}

public boolean isFlushCacheRequired() {

return flushCacheRequired;

}

Let's look at how flushCacheRequired is set when the MappedStatement ms object is created:

public void parseStatementNode() {

//......

String nodeName = context.getNode().getNodeName();

SqlCommandType sqlCommandType = SqlCommandType.valueOf(nodeName.toUpperCase(Locale.ENGLISH));

boolean isSelect = sqlCommandType == SqlCommandType.SELECT;

//All non Select operations default to true

boolean flushCache = context.getBooleanAttribute("flushCache", !isSelect);

//......

builderAssistant.addMappedStatement(id, sqlSource, statementType, sqlCommandType,

fetchSize, timeout, parameterMap, parameterTypeClass, resultMap, resultTypeClass,

resultSetTypeEnum, flushCache, useCache, resultOrdered,

keyGenerator, keyProperty, keyColumn, databaseId, langDriver, resultSets);

}

public MappedStatement addMappedStatement(/**...*/boolean flushCache/**...*/) {

//.....

boolean isSelect = sqlCommandType == SqlCommandType.SELECT;

MappedStatement.Builder statementBuilder = new MappedStatement.Builder(configuration, id, sqlSource, sqlCommandType).resource(resource)

//..... The value of flushCache is set here, and non Select is true

.flushCacheRequired(valueOrDefault(flushCache, !isSelect))

//.....

MappedStatement statement = statementBuilder.build();

configuration.addMappedStatement(statement);

return statement;

}

So inserts, deletes and updates invalidate the L2 cache because, when the Mapper.xml mapping file is parsed, the flushCache attribute of the update, insert and delete elements defaults to true. When an update is executed, the value of flushCacheRequired (that is, flushCache) is checked, and if it is true the cache is emptied.
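The default rule can be condensed into a tiny sketch. `FlushCacheDefaultSketch` and its enum are hypothetical names; a `null` attribute stands for "not written in the XML", and the two methods restate the `!isSelect` and `isSelect` defaults for flushCache and useCache.

```java
// Hypothetical condensed form of the flushCache / useCache default rules.
class FlushCacheDefaultSketch {

    enum CommandType { SELECT, INSERT, UPDATE, DELETE }

    // flushCache: an explicit attribute wins, otherwise true for every non-select.
    static boolean flushCacheRequired(CommandType type, Boolean flushCacheAttribute) {
        boolean isSelect = type == CommandType.SELECT;
        return flushCacheAttribute != null ? flushCacheAttribute : !isSelect;
    }

    // useCache: an explicit attribute wins, otherwise true only for select.
    static boolean useCache(CommandType type, Boolean useCacheAttribute) {
        boolean isSelect = type == CommandType.SELECT;
        return useCacheAttribute != null ? useCacheAttribute : isSelect;
    }
}
```

For example, an update without a flushCache attribute yields `true`, a select without one yields `false`, and a select with flushCache="true" yields `true`, which forces the cache to be emptied before that query runs.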

5, Using Redis as the L2 cache

In addition to MyBatis's own L2 cache, we can also customize the L2 cache by implementing the Cache interface. Here, Redis is used as the L2 cache as an example:

1. Introduce the mybatis-redis dependency

<dependency>

<groupId>org.mybatis.caches</groupId>

<artifactId>mybatis-redis</artifactId>

<version>1.0.0-beta2</version>

</dependency>

2. Configure RedisCache in the Mapper.xml mapping file

<!-- Use Redis as the L2 cache -->

<cache type="org.mybatis.caches.redis.RedisCache"

eviction="FIFO"

flushInterval="60000"

size="512"

readOnly="true"/>

3. Configure the redis.properties file

If no redis.properties file is provided, the default address localhost and the default port 6379 are used. If Redis settings are customized, the file must be named redis.properties and placed under the resources directory.

4. Write test class

@Test

public void testRedisCache() throws IOException {

String resource = "mybatis-config.xml";

InputStream inputStream = Resources.getResourceAsStream(resource);

SqlSessionFactory sqlSessionFactory = new SqlSessionFactoryBuilder().build(inputStream);

SqlSession session1 = sqlSessionFactory.openSession();

SqlSession session2 = sqlSessionFactory.openSession();

try {

BlogMapper mapper1 = session1.getMapper(BlogMapper.class);

System.err.println(mapper1.selectBlogById(1));

session1.commit();

System.err.println("Second query across sessions");

BlogMapper mapper2 = session2.getMapper(BlogMapper.class);

System.err.println(mapper2.selectBlogById(1));

} finally {

session1.close();

session2.close();

}

}

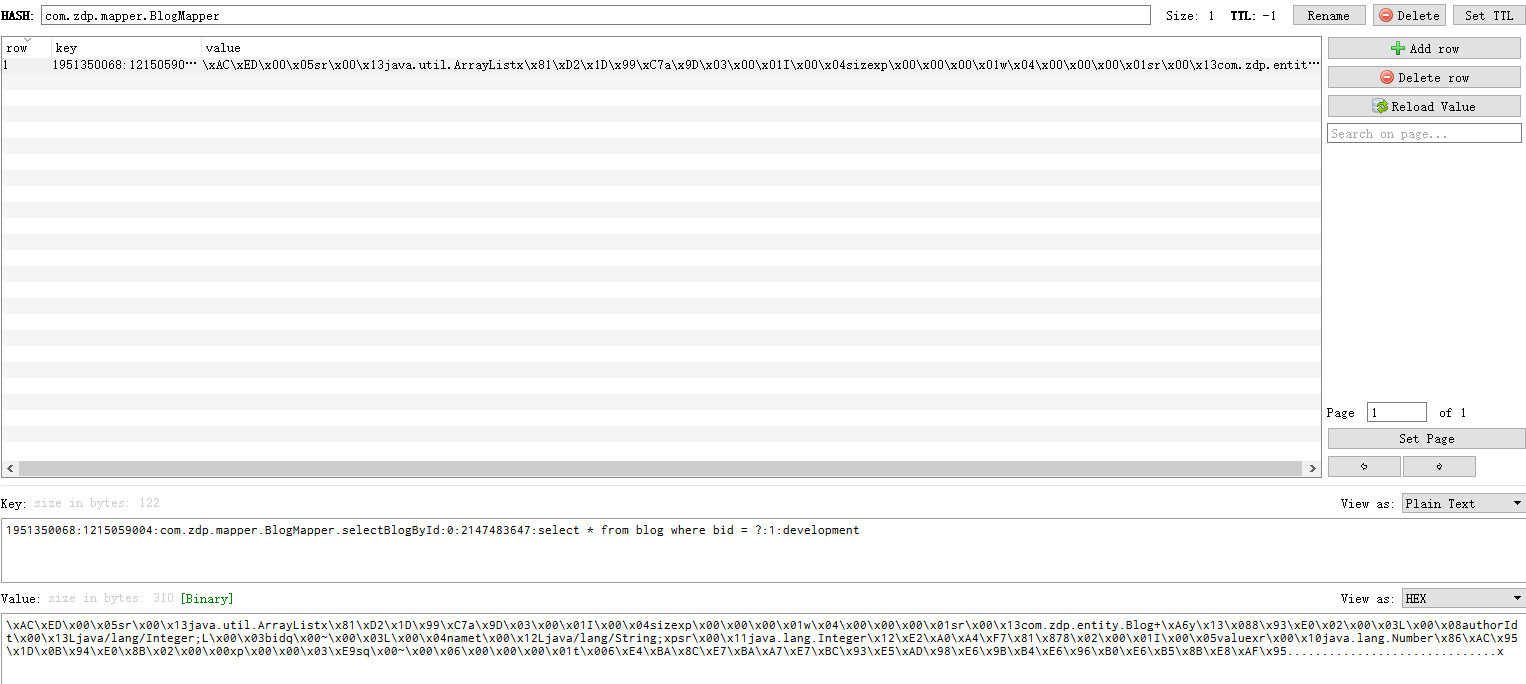

5. Result

The above is an example of using Redis as the L2 cache. We can also implement the Cache interface provided by MyBatis ourselves to build a custom cache.

That concludes this introduction to the MyBatis cache. If you spot any errors, corrections are welcome. I hope it helps!