Tokenizer introduction and workflow

Transformers, together with the pre-training plus fine-tuning paradigm built on the BERT family, have become the standard setup in NLP. The tokenizer, as the main text preprocessing step, is therefore an essential tool. This article uses the AutoTokenizer class from the transformers library as an example to illustrate its usage. If you use a specific pre-trained model such as BERT or ALBERT in practice, the principle is the same, but you need the tokenizer that matches the model. For example, the tokenizer corresponding to transformers.BertModel is transformers.BertTokenizer, which is the one used in the code in this article.

Usually, we use the AutoTokenizer class in the transformers package directly to create and use a tokenizer. The tokenizer's workflow is as follows:

- Split the given text into words (or parts of words, punctuation, etc.) called tokens.

- Convert these tokens into numeric ids, which are used to build the tensors that are fed to the model.

- Add any extra inputs the model needs to work properly, for example the special tokens [CLS] and [SEP].
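The three steps above can be sketched in plain Python. This is only a toy illustration under stated assumptions: the vocabulary `TOY_VOCAB`, its ids, and the helper names `split_text`, `to_ids` and `add_special_tokens` are hypothetical and are not part of the transformers library, whose tokenizers rely on trained vocabularies and subword algorithms.

```python
import re

# Hypothetical toy vocabulary; real tokenizers load a trained vocabulary.
TOY_VOCAB = {"[PAD]": 0, "[UNK]": 1, "[CLS]": 2, "[SEP]": 3,
             "This": 4, "is": 5, "a": 6, "short": 7, "sequence": 8, ".": 9}


def split_text(text):
    """Step 1: split text into word tokens and punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text)


def to_ids(tokens, vocab):
    """Step 2: map each token to its integer id, falling back to [UNK]."""
    return [vocab.get(token, vocab["[UNK]"]) for token in tokens]


def add_special_tokens(ids, vocab):
    """Step 3: wrap the ids in the [CLS] ... [SEP] markers."""
    return [vocab["[CLS]"]] + ids + [vocab["[SEP]"]]


tokens = split_text("This is a short sequence.")
ids = to_ids(tokens, TOY_VOCAB)
input_ids = add_special_tokens(ids, TOY_VOCAB)
print(tokens)
print(input_ids)
```

In the real library, a single call to the tokenizer object performs all three steps at once.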

The following sections describe several common inputs of the tokenizer and three common fields in its output.

The single sentence input of Tokenizer and the "input_ids" field in the output

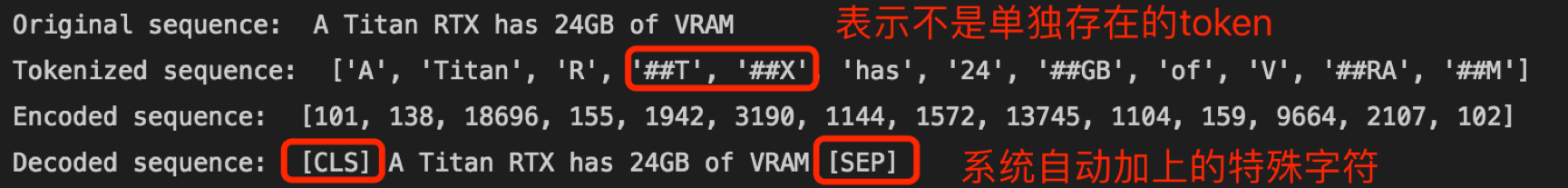

Let's first look at the single-sentence input of the tokenizer and its corresponding output. The tokenizer.tokenize(sequence) method accepts a single sentence, performs step 1 above, and splits the text into tokens (strings, not yet numbers). Calling tokenizer(sequence) performs steps 1 to 3 in one go. By default, the resulting encoding is a dictionary-like object that contains at least the "input_ids" field. "input_ids" is the only field that is always present in the encoding: it holds the token indexes as a list of integers. For example, in the output of the code below, the number 138 corresponds to "A" and 18696 corresponds to "Titan". Because the encoding behaves like a dictionary, you can access the token index sequence of the sentence directly by key. We can also use the tokenizer.decode() method to turn a token index sequence back into text, which returns the original sentence plus the added special tokens (a superset of the original sentence).

from transformers import BertTokenizer

tokenizer = BertTokenizer.from_pretrained("bert-base-cased")

# Transformer's tokenizer - input_ids

sequence = "A Titan RTX has 24GB of VRAM"

print("Original sequence: ",sequence)

tokenized_sequence = tokenizer.tokenize(sequence)

print("Tokenized sequence: ",tokenized_sequence)

encodings = tokenizer(sequence)

encoded_sequence = encodings['input_ids']

print("Encoded sequence: ", encoded_sequence)

decoded_encodings=tokenizer.decode(encoded_sequence)

print("Decoded sequence: ", decoded_encodings)

Notes on the output:

- Words that are not in the vocabulary, such as the all-uppercase "RTX" and "VRAM" here, are split into several subword tokens. The tokenizer prefixes every piece that continues a word with ## to indicate that it does not stand alone but attaches to the preceding token.

- The decoded sentence is not identical to the original sentence: it contains two additional special tokens. The tokenizer() call adds them automatically in step 3. [CLS] marks the beginning of the input and also serves as the classification token, while [SEP] marks the end of a sentence or the separation between sentences. When decode() is called, these added tokens are displayed as well.
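To make the ## convention concrete, here is a simplified sketch of greedy longest-match-first subword splitting, which is how WordPiece-style tokenizers such as BERT's are commonly described. The tiny vocabulary and the function names `wordpiece`, `tokenize` and `decode` are assumptions made for illustration; the real tokenizer also handles punctuation, normalization and a much larger vocabulary.

```python
# Hypothetical toy vocabulary; "##" marks a piece that continues a word.
TOY_VOCAB = {"A", "Titan", "R", "##T", "##X", "has"}


def wordpiece(word, vocab, unk="[UNK]"):
    """Greedy longest-match-first split of one word into subword pieces."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        match = None
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # piece is not word-initial
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk]  # no piece fits, so the whole word is unknown
        pieces.append(match)
        start = end
    return pieces


def tokenize(text, vocab):
    """Split on whitespace, then split each word into subword pieces."""
    return [p for word in text.split() for p in wordpiece(word, vocab)]


def decode(tokens):
    """Rejoin tokens, gluing '##' pieces onto the preceding token."""
    text = ""
    for token in tokens:
        if token.startswith("##"):
            text += token[2:]
        else:
            text += (" " if text else "") + token
    return text


tokens = tokenize("A Titan RTX has", TOY_VOCAB)
print(tokens)
print(decode(["[CLS]"] + tokens + ["[SEP]"]))
```

The decoded string keeps [CLS] and [SEP], which mirrors why the decoded sentence is a superset of the original one.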

Multiple sentence input of Tokenizer and the "attention_mask" field in the output

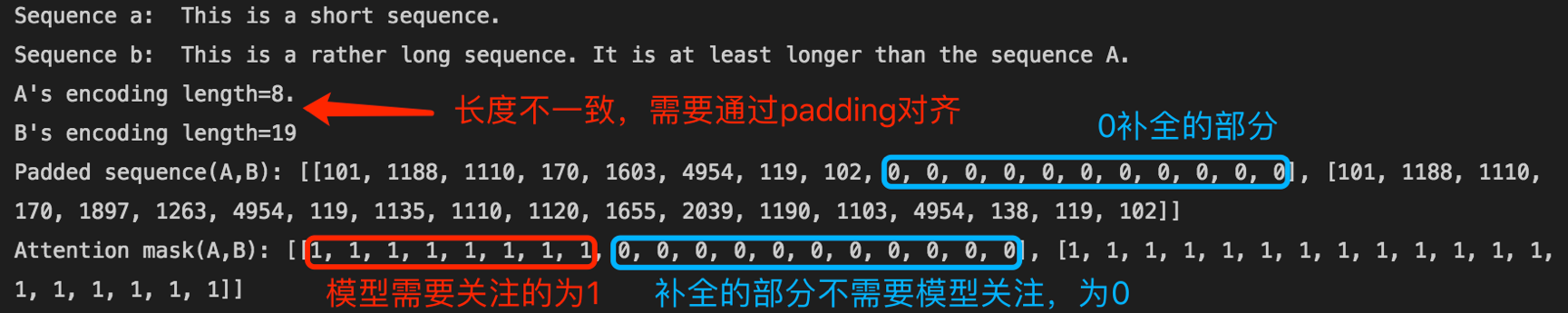

When several sentences are fed in together, they must be brought to a uniform shape so that they can be stacked into one batch. For example, if two input sentences have different lengths, the shorter one has to be padded to the length of the longer one. This creates a new problem: during training, the model does not know that the padding is meaningless and would still compute on the padded values, which may introduce wrong information. To avoid this, the default output of the tokenizer contains a second field, "attention_mask". It tells the model which of the returned ids are actual data that should be attended to (1) and which are padding that should be ignored (0).
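The relationship between padding and the mask can be sketched in a few lines of plain Python. The function name `pad_batch` and the token ids are hypothetical; the sketch only assumes that the padding id is 0, as it is for BERT vocabularies.

```python
def pad_batch(batch_ids, pad_id=0):
    """Pad every id list to the longest length and build matching masks."""
    max_len = max(len(ids) for ids in batch_ids)
    padded, masks = [], []
    for ids in batch_ids:
        n_pad = max_len - len(ids)
        padded.append(ids + [pad_id] * n_pad)       # right-pad the ids
        masks.append([1] * len(ids) + [0] * n_pad)  # 1 = real, 0 = padding
    return {"input_ids": padded, "attention_mask": masks}


# Made-up token ids for a short and a longer sentence.
batch = pad_batch([[101, 7, 8, 102], [101, 7, 8, 9, 10, 11, 102]])
print(batch["input_ids"])
print(batch["attention_mask"])
```

Both lists in each field end up with the same length, so they can be turned into rectangular tensors.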

# Transformer's tokenizer - attention_mask

sequence_a = "This is a short sequence."

sequence_b = "This is a rather long sequence. It is at least longer than the sequence A."

print("Sequence a: ",sequence_a)

print("Sequence b: ",sequence_b)

encoded_sequence_a = tokenizer(sequence_a)["input_ids"]

encoded_sequence_b = tokenizer(sequence_b)["input_ids"]

print("A's encoding length={}. \nB's encoding length={}".format(len(encoded_sequence_a),len(encoded_sequence_b)))

padded_sequence_ab = tokenizer([sequence_a,sequence_b],padding=True)

print("Padded sequence(A,B):", padded_sequence_ab["input_ids"])

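print("Attention mask(A,B):", padded_sequence_ab["attention_mask"])

In the output, the shorter sequence A is padded with zeros to the length of sequence B, and its attention mask is 1 at the positions of real tokens and 0 at the padded positions.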

Two-sentence concatenated input of Tokenizer and the "token_type_ids" field in the output

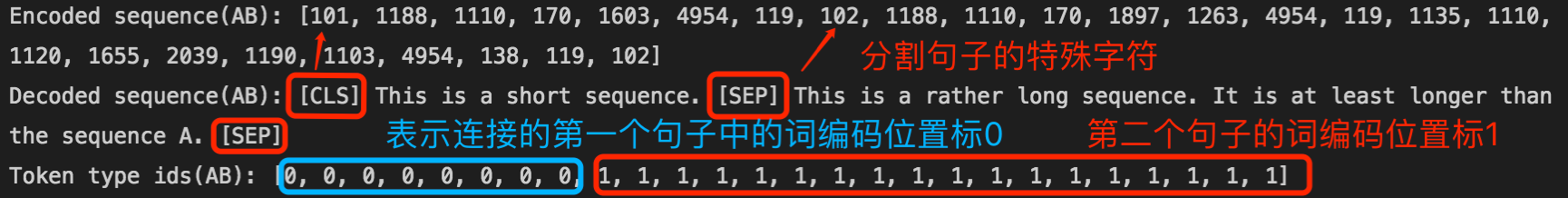

Some NLP tasks require two sentences to be concatenated into one input, such as sequence classification and question answering. In question answering, for example, the first sentence is used as the context and the second as the question, and the model is asked to output the answer. In this case, the tokenizer accepts the two sentences as two consecutive arguments and outputs a single encoding. Although the returned ids already contain the separation information in the form of [SEP] tokens, the tokenizer output also provides an optional third common field, "token_type_ids". It indicates which of the returned ids belong to the first sentence (0) and which belong to the second sentence (1).
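As a rough sketch of how such a pair encoding is laid out for BERT-style models, the following plain-Python function builds the "[CLS] A [SEP] B [SEP]" layout and the matching segment ids. The function name `encode_pair` is hypothetical, and apart from 101 and 102 (the [CLS] and [SEP] ids in BERT vocabularies) the token ids are made up.

```python
def encode_pair(ids_a, ids_b, cls_id=101, sep_id=102):
    """Build [CLS] A [SEP] B [SEP] and mark which segment each id is in."""
    input_ids = [cls_id] + ids_a + [sep_id] + ids_b + [sep_id]
    # Segment 0 covers [CLS], sentence A and the first [SEP];
    # segment 1 covers sentence B and the final [SEP].
    token_type_ids = [0] * (len(ids_a) + 2) + [1] * (len(ids_b) + 1)
    return {"input_ids": input_ids, "token_type_ids": token_type_ids}


# Made-up ids for a two-token sentence A and a three-token sentence B.
pair = encode_pair([7, 8], [9, 10, 11])
print(pair["input_ids"])
print(pair["token_type_ids"])
```

The two lists always have the same length, so every input id has exactly one segment label.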

# Transformer's tokenizer - token type id

encodings_ab = tokenizer(sequence_a, sequence_b)

print("Encoded sequence(AB):", encodings_ab["input_ids"])

decoded_ab = tokenizer.decode(encodings_ab["input_ids"])

print("Decoded sequence(AB):", decoded_ab)

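print("Token type ids(AB):", encodings_ab["token_type_ids"])

In the output, the decoded text has the form "[CLS] sentence A [SEP] sentence B [SEP]", and the token type ids are 0 for the first sentence (including [CLS] and the first [SEP]) and 1 for the second sentence (including the final [SEP]).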

This article briefly summarized the main functions and workflow of the tokenizer in Transformers-based applications and explained the three common kinds of input to tokenizer(): a single sentence, multiple sentences, and two concatenated sentences. It also explained the three most commonly used output fields, "input_ids", "attention_mask" and "token_type_ids", for your reference.