preface

What is a GPU?

A GPU (Graphics Processing Unit) is a single-chip processor that is mainly used to manage and improve the performance of video and graphics. GPU-accelerated computing means using a GPU together with the CPU to make applications run faster.

Why use a GPU?

Deep learning involves many vector and matrix operations, such as matrix multiplication, matrix addition, and matrix-vector multiplication. Deep learning algorithms such as backpropagation (BP), autoencoders, and CNNs can be written as matrix operations without explicit loops. However, when executed on a single-core CPU, each matrix operation is expanded into loops and is still executed serially. The many-core architecture of a GPU contains thousands of stream processors, which can execute the matrix operation in parallel and greatly shorten the computing time.
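To make the loop expansion concrete, here is a minimal sketch that writes a matrix product as explicit Python loops. The function name `matmul_loops` is hypothetical and used only for illustration. Each output element depends on just one row of `a` and one column of `b`, so the elements can be computed independently of each other. That independence is what a GPU can exploit.

```python
def matmul_loops(a, b):
    """Multiply two matrices given as lists of rows, using explicit loops."""
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    out = [[0] * m for _ in range(n)]
    for i in range(n):          # each (i, j) pair is independent of the others
        for j in range(m):
            for p in range(k):  # serial dot product of row i and column j
                out[i][j] += a[i][p] * b[p][j]
    return out


result = matmul_loops([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(result)  # [[19, 22], [43, 50]]
```

On a single core, the two outer loops run one iteration after another. A parallel device can instead assign different (i, j) pairs to different processors.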

How to use a GPU?

At present, many deep learning tools support running on a GPU with only simple configuration. PyTorch supports GPUs. You can transfer data from host memory to GPU memory with the to(device) function. If there are multiple GPUs, you can specify which GPU or GPUs to use. PyTorch generally applies the GPU to data structures such as tensors and models (including the network models under torch.nn and models you create yourself).

1, About the function interface of CUDA

1.1 torch.cuda

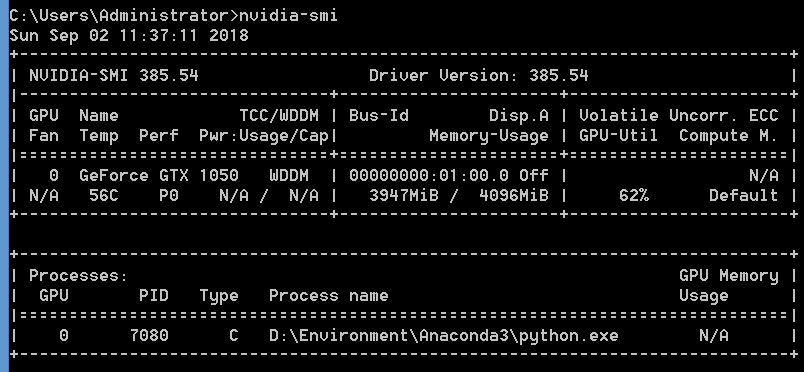

How do you view the GPU configuration of the platform? Enter the command nvidia-smi on the command line (this works on both Linux and Windows).

The first time you use this command on Windows, it may display:

'nvidia-smi' is not recognized as an internal or external command, operable program or batch file.

In that case, we only need to add the directory that contains nvidia-smi to the PATH environment variable. The directory is shown below:

C:\Program Files\NVIDIA Corporation\NVSMI

After the environment variable is added, the command displays the GPU configuration of this machine. Similar information can also be queried from PyTorch through torch.cuda. Examples are as follows:

    import torch

    # Check whether a GPU can be used. Often used to judge whether the GPU version of PyTorch is installed
    print(torch.cuda.is_available())
    # Returns the index of the current device
    print(torch.cuda.current_device())
    # Returns the name of device 0
    print(torch.cuda.get_device_name(0))
    # Returns the number of GPUs that can be used
    print(torch.cuda.device_count())
    # Returns the GPU memory currently occupied by tensors on device 0, in bytes
    print(torch.cuda.memory_allocated(device="cuda:0"))
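The nvidia-smi check described above can also be run from Python. The following is a minimal sketch, not an official recipe: the helper name `query_gpus` is hypothetical, and it assumes that nvidia-smi, when installed, is reachable through PATH. It returns None instead of raising an error when the tool is missing or fails.

```python
import shutil
import subprocess


def query_gpus():
    """Return one 'name, memory.total' line per GPU, or None if unavailable."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return None  # nvidia-smi is not on PATH
    try:
        proc = subprocess.run(
            [exe, "--query-gpu=name,memory.total", "--format=csv,noheader"],
            capture_output=True, text=True, check=True, timeout=30,
        )
    except (subprocess.SubprocessError, OSError):
        return None  # the tool exists but could not report any GPU
    return [line.strip() for line in proc.stdout.splitlines() if line.strip()]


gpus = query_gpus()
print(gpus if gpus is not None else "nvidia-smi not available")
```

If this prints "nvidia-smi not available" on Windows even though a driver is installed, the PATH setting described above is the first thing to check.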

1.2 torch.device

torch.device is exposed as an attribute of a Tensor and describes the device on which the tensor is stored. The device type is usually cpu or cuda (GPU). A torch.device is usually created in one of two ways:

    # 1. Create from a string
    torch.device('cuda:0')
    torch.device('cpu')
    torch.device('cuda')  # the current CUDA device

    # 2. Create from a string plus a device index
    torch.device('cuda', 0)
    torch.device('cpu', 0)

    # There are several common ways to create Tensor objects on GPU devices:
    torch.randn((2, 3), device=torch.device('cuda:0'))
    torch.randn((2, 3), device='cuda:0')
    torch.randn((2, 3), device=0)  # legacy practice; only GPUs are supported
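As a small illustration of the two construction styles, the sketch below inspects the resulting torch.device objects. It assumes only that PyTorch is installed. Constructing a torch.device does not itself require a GPU, so the first part also runs on a CPU-only machine.

```python
import torch

d0 = torch.device("cuda:0")   # string that includes an index
d1 = torch.device("cuda", 0)  # device type plus index
d2 = torch.device("cuda")     # no index: refers to the current CUDA device
cpu = torch.device("cpu")

print(d0 == d1)            # both describe the same device
print(d0.type, d0.index)   # device type and index
print(d2.index)            # None, because no index was given
print(cpu.type)

# A tensor reports where it lives through its .device attribute.
t = torch.randn(2, 3)      # created on the CPU by default
print(t.device)
```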

    # Transfer data from host memory to the GPU. This is generally done for tensors (the data we need) and models.
    # For tensors (FloatTensor, LongTensor, etc.), call .to(device) or .cuda() directly.
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    # or: device = torch.device("cuda:0")
    device1 = torch.device("cuda:1")
    for batch_idx, (img, label) in enumerate(train_loader):
        img = img.to(device)
        label = label.to(device)

    # For a model, the same .to(device) or .cuda() call puts the network into GPU memory.
    # Instantiate the network
    model = Net()
    model.to(device)  # use the GPU with index 0
    # or: model.to(device1)  # use the GPU with index 1
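The snippet above depends on a `train_loader` and a `Net` class that are defined elsewhere. Here is a self-contained sketch of the same pattern, where `nn.Linear(4, 2)` is a stand-in assumed for `Net()` and a random tensor is assumed in place of a real batch. It also shows one detail worth remembering: a module is moved in place, while `Tensor.to` returns a tensor on the target device, so the result must be assigned.

```python
import torch
import torch.nn as nn

# Fall back to the CPU so the sketch also runs without a GPU.
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

model = nn.Linear(4, 2)   # stand-in for the article's Net()
model.to(device)          # modules are moved in place

x = torch.randn(8, 4)     # stand-in for one batch of data
x = x.to(device)          # tensors are not moved in place; keep the returned tensor

out = model(x)
print(out.shape, out.device)
```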

1.3 .to()

Converting the device with .to() is also a common way to use the GPU.

One way I personally prefer to use is:

device=torch.device("cuda" if torch.cuda.is_available() else "cpu")

You can usually call:

    to(device=None, dtype=None, non_blocking=False)

    # device: the target device, such as torch.device('cuda:0')
    # or torch.device("cuda" if torch.cuda.is_available() else "cpu")
    # dtype: the target data type, such as torch.float, torch.int, or torch.double
    # non_blocking: if set to True and the source tensor is stored in pinned memory, the copy is asynchronous with respect to the host. Otherwise, this parameter has no effect
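The three parameters can be sketched as follows. This is an illustrative example rather than a benchmark. The pinned-memory branch only runs when a CUDA device is present, and the tensor sizes are arbitrary assumptions.

```python
import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

x = torch.arange(6).reshape(2, 3)       # int64 tensor on the CPU
y = x.to(device, dtype=torch.float)     # change device and dtype in one call
print(x.dtype, y.dtype)

if device.type == "cuda":
    pinned = torch.randn(1024, 1024).pin_memory()   # page-locked host memory
    on_gpu = pinned.to(device, non_blocking=True)   # copy may overlap with host work
    torch.cuda.synchronize()                        # wait for the copy to finish
    print(on_gpu.device)
```

Without pinned memory on the source side, `non_blocking=True` is accepted but the host-to-GPU copy behaves like an ordinary blocking copy.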

1.4 use the specified GPU

By default, PyTorch uses GPU 0 (device indices start from 0). There are usually two ways to specify a particular GPU:

1. CUDA_VISIBLE_DEVICES

Terminal settings (for instance):

    CUDA_VISIBLE_DEVICES=1,2 python train.py

Set in code:

    import os
    os.environ["CUDA_VISIBLE_DEVICES"] = '1,2'

2. torch.cuda.set_device()

Set in code:

    import torch
    torch.cuda.set_device(id)

However, the official documentation recommends CUDA_VISIBLE_DEVICES and discourages the set_device function.
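One consequence of CUDA_VISIBLE_DEVICES that is easy to miss: the visible GPUs are renumbered from 0 inside the process, so with the value 1,2 the device cuda:0 refers to physical GPU 1. The sketch below illustrates that mapping. The helper `visible_device_map` is hypothetical, and it assumes the value is a comma-separated list of integer indices, ignoring other accepted forms such as GPU UUIDs.

```python
import os


def visible_device_map(value):
    """Map in-process CUDA indices to physical GPU indices.

    Hypothetical helper; assumes `value` is a comma-separated list of integers.
    """
    ids = [int(tok) for tok in value.split(",") if tok.strip()]
    return {logical: physical for logical, physical in enumerate(ids)}


# Set the variable before anything in this process initializes CUDA.
os.environ["CUDA_VISIBLE_DEVICES"] = "1,2"
mapping = visible_device_map(os.environ["CUDA_VISIBLE_DEVICES"])
print(mapping)  # {0: 1, 1: 2}
```

Setting the variable in code therefore belongs at the very top of the script, before the first CUDA call.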

1.5 multi GPU training

In order to improve the training speed, a machine often has multiple GPUs. At this time, parallel training can be carried out to improve efficiency. Parallel training can be divided into data parallel processing and model parallel processing.

Data parallelism means replicating the same model on every GPU and distributing the data evenly across the GPUs for training.

Model parallelism means that multiple GPUs each train a different part of the model, all using the same batch of data.
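The difference between the two schemes can be sketched without any GPU at all. In this toy illustration, plain Python lists stand in for batches and small functions stand in for parts of a network. The names `split_batch` and `run_stages` are hypothetical.

```python
def split_batch(batch, n_devices):
    """Data parallelism: every device has the full model and gets a slice of the batch."""
    if not batch:
        return []
    size = -(-len(batch) // n_devices)  # ceiling division
    return [batch[i:i + size] for i in range(0, len(batch), size)]


def run_stages(batch, stages):
    """Model parallelism: every device holds one stage and sees the whole batch."""
    for stage in stages:
        batch = [stage(x) for x in batch]
    return batch


batch = list(range(128))
chunks = split_batch(batch, 2)
print([len(c) for c in chunks])  # [64, 64]

stages = [lambda x: x * 2, lambda x: x + 1]  # stand-ins for two halves of a network
print(run_stages(batch[:3], stages))  # [1, 3, 5]
```

In the data-parallel case each chunk would go to a different GPU. In the model-parallel case each stage would live on a different GPU and the intermediate results would be passed between them.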

2, Training example code display

2.1 data parallel processing

This code takes the Boston house price data as an example, with a total of 506 samples and 13 features. The data is divided into a training set and a test set, and then data.DataLoader converts it into a batch-loadable form. Parallelism is provided by nn.DataParallel. The environment has two GPUs. Of course, the amount of data is very small, so it would be reasonable not to use nn.DataParallel. It is used here only to illustrate the method.

    from sklearn import datasets
    from sklearn.model_selection import train_test_split
    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    from torch.utils import data

    # Load data
    # Note: load_boston was removed in scikit-learn 1.2, so this call requires an older version
    boston = datasets.load_boston()
    X, y = (boston.data, boston.target)
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

    # Combine the training data and labels
    myset = list(zip(X_train, y_train))

    # Convert the data to batch-loading mode with a batch size of 128, and shuffle the data
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    dtype = torch.FloatTensor
    train_loader = data.DataLoader(myset, batch_size=128, shuffle=True)

    # Define the network
    class Net1(nn.Module):
        """
        Build the network with Sequential, which groups the layers of the network together
        """
        def __init__(self, in_dim, n_hidden_1, n_hidden_2, out_dim):
            super(Net1, self).__init__()
            self.layer1 = torch.nn.Sequential(nn.Linear(in_dim, n_hidden_1))
            self.layer2 = torch.nn.Sequential(nn.Linear(n_hidden_1, n_hidden_2))
            self.layer3 = torch.nn.Sequential(nn.Linear(n_hidden_2, out_dim))

        def forward(self, x):
            x1 = F.relu(self.layer1(x))
            x1 = F.relu(self.layer2(x1))
            x2 = self.layer3(x1)
            # Display the size of the data allocated to each GPU
            print("\tIn Model: input size", x.size(), "output size", x2.size())
            return x2

    if __name__ == '__main__':
        # Convert the model to the multi-GPU parallel processing format
        device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
        # Instantiate the network
        model = Net1(13, 16, 32, 1)
        if torch.cuda.device_count() > 1:
            print("Let's use", torch.cuda.device_count(), "GPUs")
            # dim = 0 [64, xxx] -> [32, ...], [32, ...] on 2 GPUs
            model = nn.DataParallel(model)
        model.to(device)

        # Select the optimizer and loss function
        optimizer_orig = torch.optim.Adam(model.parameters(), lr=0.01)
        loss_func = torch.nn.MSELoss()

        # Train the model and visualize the loss values
        # from torch.utils.tensorboard import SummaryWriter
        # writer = SummaryWriter(log_dir='logs')
        for epoch in range(100):
            model.train()
            # The loop variable is named batch so that it does not shadow the imported data module
            for batch, label in train_loader:
                inputs = batch.type(dtype).to(device)
                # Reshape the labels to [N, 1] so that they match the shape of the model output
                label = label.type(dtype).to(device).view(-1, 1)
                output = model(inputs)
                loss = loss_func(output, label)
                # Back propagation
                optimizer_orig.zero_grad()
                loss.backward()
                optimizer_orig.step()
                print("Outside: input size", inputs.size(), "output_size", output.size())
            # writer.add_scalar('train_loss_paral', loss, epoch)

Operation results:

    Let's use 2 GPUs
    DataParallel(
      (module): Net1(
        (layer1): Sequential(
          (0): Linear(in_features=13, out_features=16, bias=True)
        )
        (layer2): Sequential(
          (0): Linear(in_features=16, out_features=32, bias=True)
        )
        (layer3): Sequential(
          (0): Linear(in_features=32, out_features=1, bias=True)
        )
      )
    )

It can be seen from the running results that one batch of data (batch size = 128) is split into two parts of size 64 each, which are placed on different GPUs.

    In Model: input size torch.Size([64, 13]) output size torch.Size([64, 1])
    In Model: input size torch.Size([64, 13]) output size torch.Size([64, 1])
    Outside: input size torch.Size([128, 13]) output_size torch.Size([128, 1])
    In Model: input size torch.Size([64, 13]) output size torch.Size([64, 1])
    In Model: input size torch.Size([64, 13]) output size torch.Size([64, 1])
    Outside: input size torch.Size([128, 13]) output_size torch.Size([128, 1])

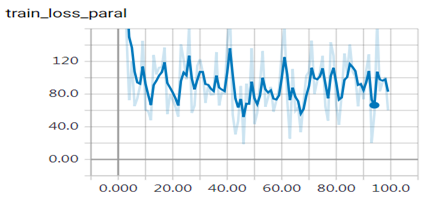

Loss:

The large oscillations in the graph are due to mini-batch training and the lack of data preprocessing. With standardized data the curve should be smoother. You can try it.

DistributedDataParallel is mostly used for distributed training, but it can also be used for single-machine multi-GPU training. The configuration is a little more troublesome than for nn.DataParallel, but the training speed and results are better. The specific configuration is:

    # Initialize the process group using the NCCL backend
    torch.distributed.init_process_group(backend="nccl")
    # Parallelize the model
    model = torch.nn.parallel.DistributedDataParallel(model)

When a single machine is running, use the following method to start
