This article explains NuPlayer in detail, based on the Android N source code. NuPlayer is the Android player used for both local and streaming media playback.

1. AHandler mechanism

First, let's introduce the AHandler mechanism, which is used everywhere in NuPlayer. Its source code is in frameworks/av/include/media/stagefright/foundation/ and frameworks/av/media/libstagefright/foundation/.

AHandler is an asynchronous message mechanism implemented in Android's native layer, and all processing in it is asynchronous. Parameters are packed into an AMessage, which is put into a queue; a background thread takes messages out of the queue and executes them by calling the handler's onMessageReceived function.
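To make that flow concrete, here is a minimal sketch of the same idea in standard C++: one queue, one background thread, and a handler callback. Message, Handler and Looper are hypothetical stand-ins, not the Android classes; delayed messages, reference counting and multiple handlers per looper are deliberately left out.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <queue>
#include <string>
#include <thread>
#include <utility>

// Hypothetical stand-ins for AMessage / AHandler / ALooper (not the Android API).
struct Message {
    int what;
    std::string payload;
};

class Handler {
public:
    virtual ~Handler() = default;
    // Runs on the looper thread, like AHandler::onMessageReceived.
    virtual void onMessageReceived(const Message &msg) = 0;
};

class Looper {
public:
    explicit Looper(Handler *handler) : mHandler(handler), mThread([this] { loop(); }) {}
    ~Looper() { stop(); }

    // Any thread may post; the message is only queued here.
    void post(Message msg) {
        {
            std::lock_guard<std::mutex> lock(mLock);
            mQueue.push(std::move(msg));
        }
        mCond.notify_one();
    }

    // Lets the looper thread drain what is queued, then joins it.
    void stop() {
        {
            std::lock_guard<std::mutex> lock(mLock);
            if (mStopping) return;
            mStopping = true;
        }
        mCond.notify_one();
        mThread.join();
    }

private:
    void loop() {
        for (;;) {
            Message msg{};
            {
                std::unique_lock<std::mutex> lock(mLock);
                mCond.wait(lock, [this] { return mStopping || !mQueue.empty(); });
                if (mQueue.empty()) return;  // stopping and nothing left
                msg = std::move(mQueue.front());
                mQueue.pop();
            }
            mHandler->onMessageReceived(msg);  // dispatched outside the lock
        }
    }

    Handler *mHandler;
    std::mutex mLock;
    std::condition_variable mCond;
    std::queue<Message> mQueue;
    bool mStopping = false;
    std::thread mThread;  // declared last: everything above exists before loop() starts
};
```

The handler never runs on the posting thread, which is the property NuPlayer relies on: callers only enqueue, and all state changes happen serially on the looper thread.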

The AHandler mechanism consists of the following classes:

- AMessage

The message class. It is used to construct messages and post them to an ALooper through the post method:

status_t AMessage::post(int64_t delayUs) {

sp<ALooper> looper = mLooper.promote();

if (looper == NULL) {

ALOGW("failed to post message as target looper for handler %d is gone.", mTarget);

return -ENOENT;

}

looper->post(this, delayUs);

return OK;

}

void AMessage::deliver() {

sp<AHandler> handler = mHandler.promote();

if (handler == NULL) {

ALOGW("failed to deliver message as target handler %d is gone.", mTarget);

return;

}

handler->deliverMessage(this); // see AHandler::deliverMessage: the message was queued earlier by ALooper::post, and the looper thread finally calls deliver() here to hand it to the handler

}

- AHandler

The message-processing class, normally used as a base class. Subclasses must implement the onMessageReceived method.

void AHandler::deliverMessage(const sp<AMessage> &msg) {

onMessageReceived(msg);

mMessageCounter++;

....

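}

- ALooper

Stores messages and dispatches them to their handlers. Each AHandler is registered on exactly one ALooper, while one ALooper can serve several handlers (HTTPLiveSource below registers both itself and its LiveSession on the same looper) and holds many AMessages at once.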

// posts a message on this looper with the given timeout

void ALooper::post(const sp<AMessage> &msg, int64_t delayUs) {

Mutex::Autolock autoLock(mLock);

int64_t whenUs;

if (delayUs > 0) {

whenUs = GetNowUs() + delayUs;

} else {

whenUs = GetNowUs();

}

List<Event>::iterator it = mEventQueue.begin();

while (it != mEventQueue.end() && (*it).mWhenUs <= whenUs) {

++it;

}

Event event;

event.mWhenUs = whenUs;

event.mMessage = msg;

if (it == mEventQueue.begin()) {

mQueueChangedCondition.signal();

}

mEventQueue.insert(it, event);

}
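The insertion rule in ALooper::post can be isolated as follows. This sketch, assuming a plain std::list and a hypothetical insertEvent helper, keeps the queue sorted by due time, places a new event after every event due at or before it (so equal deadlines stay FIFO), and reports whether the new event became the head, which is the only case in which ALooper signals its thread.

```cpp
#include <cassert>
#include <cstdint>
#include <list>
#include <string>
#include <utility>

// Simplified stand-in for ALooper::Event: when it is due and what to deliver.
struct Event {
    int64_t whenUs;
    std::string name;
};

// Hypothetical helper mirroring the loop in ALooper::post.
// Returns true when the new event is now the head of the queue.
bool insertEvent(std::list<Event> &queue, int64_t nowUs, int64_t delayUs, std::string name) {
    int64_t whenUs = delayUs > 0 ? nowUs + delayUs : nowUs;
    auto it = queue.begin();
    while (it != queue.end() && it->whenUs <= whenUs) {
        ++it;  // skip everything due at or before us
    }
    bool becameHead = (it == queue.begin());
    queue.insert(it, Event{whenUs, std::move(name)});
    return becameHead;
}
```

Waking the thread only for a new head is enough: if the head is unchanged, the looper is already sleeping until an earlier deadline.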

----------------------------------------------------------

status_t ALooper::start(

bool runOnCallingThread, bool canCallJava, int32_t priority) {

if (runOnCallingThread) {

{

Mutex::Autolock autoLock(mLock);

if (mThread != NULL || mRunningLocally) {

return INVALID_OPERATION;

}

mRunningLocally = true;

}

do {

} while (loop());

return OK;

}

Mutex::Autolock autoLock(mLock);

if (mThread != NULL || mRunningLocally) {

return INVALID_OPERATION;

}

mThread = new LooperThread(this, canCallJava);

status_t err = mThread->run(

mName.empty() ? "ALooper" : mName.c_str(), priority);

if (err != OK) {

mThread.clear();

}

return err;

}

bool ALooper::loop() {

Event event;

{

Mutex::Autolock autoLock(mLock);

if (mThread == NULL && !mRunningLocally) {

return false;

}

if (mEventQueue.empty()) {

mQueueChangedCondition.wait(mLock);

return true;

}

int64_t whenUs = (*mEventQueue.begin()).mWhenUs;

int64_t nowUs = GetNowUs();

if (whenUs > nowUs) {

int64_t delayUs = whenUs - nowUs;

mQueueChangedCondition.waitRelative(mLock, delayUs * 1000ll);

return true;

}

event = *mEventQueue.begin();

mEventQueue.erase(mEventQueue.begin());

}

event.mMessage->deliver(); //see AHandler.deliverMessage

.....

return true;

}

- LooperThread

The thread that calls ALooper::loop to dispatch messages; it keeps looping for as long as loop() returns true.

virtual status_t readyToRun() {

mThreadId = androidGetThreadId();

return Thread::readyToRun();

}

virtual bool threadLoop() {

return mLooper->loop();

}

- ALooperRoster

One roster keeps track of many handlers. It records which looper each handler is registered on, assigns handler IDs, and unregisters stale handlers whose looper no longer exists.

ALooper::handler_id ALooperRoster::registerHandler(

const sp<ALooper> looper, const sp<AHandler> &handler) {

Mutex::Autolock autoLock(mLock);

if (handler->id() != 0) {

CHECK(!"A handler must only be registered once.");

return INVALID_OPERATION;

}

HandlerInfo info;

info.mLooper = looper;

info.mHandler = handler;

ALooper::handler_id handlerID = mNextHandlerID++; // every handler gets a unique ID

mHandlers.add(handlerID, info); // the roster holds entries for many handlers

handler->setID(handlerID, looper);

return handlerID;

}

void ALooperRoster::unregisterHandler(ALooper::handler_id handlerID) {

Mutex::Autolock autoLock(mLock);

ssize_t index = mHandlers.indexOfKey(handlerID);

if (index < 0) {

return;

}

const HandlerInfo &info = mHandlers.valueAt(index);

sp<AHandler> handler = info.mHandler.promote();

if (handler != NULL) {

handler->setID(0, NULL);

}

mHandlers.removeItemsAt(index);

}
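The roster's bookkeeping (the register and unregister functions above, plus the stale-handler cleanup that follows) can be sketched with std::shared_ptr/std::weak_ptr in place of sp<>/wp<>. Roster, Looper and Handler here are hypothetical placeholders, and -1 stands in for INVALID_OPERATION.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <memory>

struct Looper {};                 // placeholder
struct Handler { int32_t id = 0; };  // placeholder; 0 means "not registered"

// Hypothetical roster: unique IDs, weak references only.
class Roster {
public:
    int32_t registerHandler(const std::shared_ptr<Looper> &looper,
                            const std::shared_ptr<Handler> &handler) {
        if (handler->id != 0) return -1;  // a handler may only be registered once
        int32_t id = mNextId++;
        mHandlers[id] = Info{looper, handler};
        handler->id = id;
        return id;
    }

    void unregisterHandler(int32_t id) {
        auto it = mHandlers.find(id);
        if (it == mHandlers.end()) return;
        if (auto handler = it->second.handler.lock()) handler->id = 0;
        mHandlers.erase(it);
    }

    // Drops entries whose looper has already been destroyed.
    void unregisterStaleHandlers() {
        for (auto it = mHandlers.begin(); it != mHandlers.end();) {
            if (it->second.looper.expired()) {
                it = mHandlers.erase(it);
            } else {
                ++it;
            }
        }
    }

    std::size_t size() const { return mHandlers.size(); }

private:
    struct Info {
        std::weak_ptr<Looper> looper;
        std::weak_ptr<Handler> handler;
    };
    std::map<int32_t, Info> mHandlers;
    int32_t mNextId = 1;
};
```

Holding only weak references means the roster never keeps a looper or handler alive on its own, which is why stale entries can appear and need a cleanup pass.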

void ALooperRoster::unregisterStaleHandlers() {

Vector<sp<ALooper> > activeLoopers;

{

Mutex::Autolock autoLock(mLock);

for (size_t i = mHandlers.size(); i > 0;) {

i--;

const HandlerInfo &info = mHandlers.valueAt(i);

sp<ALooper> looper = info.mLooper.promote();

if (looper == NULL) {

ALOGV("Unregistering stale handler %d", mHandlers.keyAt(i));

mHandlers.removeItemsAt(i);

} else {

// At this point 'looper' might be the only sp<> keeping

// the object alive. To prevent it from going out of scope

// and having ~ALooper call this method again recursively

// and then deadlocking because of the Autolock above, add

// it to a Vector which will go out of scope after the lock

// has been released.

activeLoopers.add(looper);

}

}

}

}

- Creating the asynchronous message mechanism

sp<ALooper> mLooper = new ALooper; //Create an Alooper instance

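sp<AHandlerReflector<MyPlayer> > mHandler = new AHandlerReflector<MyPlayer>(this); // create an AHandler instance; MyPlayer is a placeholder for the class whose onMessageReceived should receive the messages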

mLooper->setName("xxxxx"); //Set looper name

mLooper->start(false, true, PRIORITY_XXX); //Create and start looper thread based on parameters

mLooper->registerHandler(mHandler); // registerHandler calls AHandler::setID, which stores the looper in the handler

- Posting a message

sp<AMessage> msg = new AMessage(kWhatSayGoodbye, mHandler); // the AMessage constructor looks up the handler's ALooper and saves it

msg->post(); // Call the post method of looper

Call sequence of a message post:

AMessage::post

↓

ALooper::post

mEventQueue.insert

mQueueChangedCondition.signal() // if the new event became the head of the queue, wake up the looper thread

↓

ALooper::loop()

if (mEventQueue.empty()) { //If the message queue is empty, wait

mQueueChangedCondition.wait(mLock);

return true;

}

event = *mEventQueue.begin();

event.mMessage->deliver();

↓

AHandler::deliverMessage

↓

AHandlerReflector::onMessageReceived

↓

the target class's own onMessageReceived (the concrete implementation)

2. NuPlayer

Now to the main topic: NuPlayer. Its source code is in frameworks/av/media/libmediaplayerservice/nuplayer/.

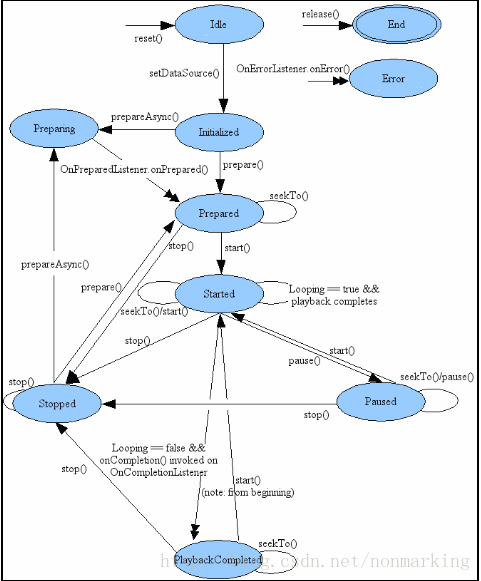

- NuPlayerDriver

NuPlayerDriver wraps NuPlayer and implements the MediaPlayerInterface interface; the actual playback is done by NuPlayer. A practical way to read this code is to first see what NuPlayerDriver does, then look up the implementation in NuPlayer (usually in NuPlayer::onMessageReceived, where the message is handled), and finally go back to NuPlayerDriver's notify callbacks to follow how the player state moves on. A flow chart of the player state machine is attached below.

NuPlayerDriver::NuPlayerDriver(pid_t pid)

: mState(STATE_IDLE), //Corresponding to the initialization state of the player state machine

mIsAsyncPrepare(false),

mAsyncResult(UNKNOWN_ERROR),

mSetSurfaceInProgress(false),

mDurationUs(-1),

mPositionUs(-1),

mSeekInProgress(false),

mLooper(new ALooper),

mPlayerFlags(0),

mAtEOS(false),

mLooping(false),

mAutoLoop(false) {

ALOGV("NuPlayerDriver(%p)", this);

//It is consistent with the asynchronous message creation mechanism described above

mLooper->setName("NuPlayerDriver Looper");

mLooper->start(

false, /* runOnCallingThread */

true, /* canCallJava */

PRIORITY_AUDIO);

//mPlayer is NuPlayer, which inherits from AHandler

mPlayer = AVNuFactory::get()->createNuPlayer(pid);

mLooper->registerHandler(mPlayer);

mPlayer->setDriver(this);

}

NuPlayerDriver::~NuPlayerDriver() {

ALOGV("~NuPlayerDriver(%p)", this);

mLooper->stop(); // stop the looper that drives NuPlayer's messages

}

- AVNuFactory

Responsible for creating the key components. From it you can see that: 1. each NuPlayer is created with the pid of the client process it serves; 2. data flows from source to decoder to renderer, with AMessages driving each step in between.

sp<NuPlayer> AVNuFactory::createNuPlayer(pid_t pid) {

return new NuPlayer(pid);

}

sp<NuPlayer::DecoderBase> AVNuFactory::createPassThruDecoder(

const sp<AMessage> &notify,

const sp<NuPlayer::Source> &source,

const sp<NuPlayer::Renderer> &renderer) {

return new NuPlayer::DecoderPassThrough(notify, source, renderer);

}

sp<NuPlayer::DecoderBase> AVNuFactory::createDecoder(

const sp<AMessage> &notify,

const sp<NuPlayer::Source> &source,

pid_t pid,

const sp<NuPlayer::Renderer> &renderer) {

return new NuPlayer::Decoder(notify, source, pid, renderer);

}

sp<NuPlayer::Renderer> AVNuFactory::createRenderer(

const sp<MediaPlayerBase::AudioSink> &sink,

const sp<AMessage> &notify,

uint32_t flags) {

return new NuPlayer::Renderer(sink, notify, flags);

}

Next, we analyze the source, decoder, and renderer in turn.

Source

Take setDataSource as the starting point

status_t NuPlayerDriver::setDataSource(const sp<IStreamSource> &source) {

ALOGV("setDataSource(%p) stream source", this);

Mutex::Autolock autoLock(mLock);

if (mState != STATE_IDLE) {

return INVALID_OPERATION;

}

mState = STATE_SET_DATASOURCE_PENDING;

mPlayer->setDataSourceAsync(source);//Because the driver is only the encapsulation of NuPlayer, you still need to call NuPlayer to complete the actual action

while (mState == STATE_SET_DATASOURCE_PENDING) {

mCondition.wait(mLock);

}

return mAsyncResult;

}
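setDataSource is synchronous for its caller even though NuPlayer does the work asynchronously: the driver sets a pending state, then waits on mCondition until notifySetDataSourceCompleted changes it. A minimal sketch of that handshake with std::mutex and std::condition_variable follows; SyncDriver is hypothetical, a std::thread stands in for NuPlayer's looper, and the integer error codes are placeholders.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <thread>

enum class State { Idle, SetDataSourcePending, Unprepared };

class SyncDriver {
public:
    // Blocks the caller until the asynchronous side reports completion.
    int setDataSource(bool willSucceed) {
        std::unique_lock<std::mutex> lock(mLock);
        if (mState != State::Idle) return -1;  // stands in for INVALID_OPERATION
        mState = State::SetDataSourcePending;
        // Stand-in for mPlayer->setDataSourceAsync(): the work runs on another thread.
        std::thread worker([this, willSucceed] {
            notifySetDataSourceCompleted(willSucceed ? 0 : -2);
        });
        mCond.wait(lock, [this] { return mState != State::SetDataSourcePending; });
        int result = mAsyncResult;
        lock.unlock();
        worker.join();
        return result;
    }

    // Called from the worker thread, like NuPlayerDriver::notifySetDataSourceCompleted.
    void notifySetDataSourceCompleted(int err) {
        std::lock_guard<std::mutex> lock(mLock);
        mAsyncResult = err;
        mState = (err == 0) ? State::Unprepared : State::Idle;
        mCond.notify_all();  // corresponds to mCondition.broadcast()
    }

    State state() {
        std::lock_guard<std::mutex> lock(mLock);
        return mState;
    }

private:
    std::mutex mLock;
    std::condition_variable mCond;
    State mState = State::Idle;
    int mAsyncResult = -1;
};
```

Waiting in a predicate loop (rather than a single wait) matters because condition variables can wake spuriously; the original `while (mState == STATE_SET_DATASOURCE_PENDING)` loop serves the same purpose.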

--------------------------------------

void NuPlayer::setDataSourceAsync(const sp<IStreamSource> &source) {

sp<AMessage> msg = new AMessage(kWhatSetDataSource, this);

sp<AMessage> notify = new AMessage(kWhatSourceNotify, this);

msg->setObject("source", new StreamingSource(notify, source));

msg->post(); // NuPlayer does not act directly; it posts a message first, which confirms that everything is driven by AMessage as described above

}

---------------------------------------

void NuPlayer::onMessageReceived(const sp<AMessage> &msg) {

switch (msg->what()) {

case kWhatSetDataSource://The actual processing is here

{

ALOGV("kWhatSetDataSource");

CHECK(mSource == NULL);

status_t err = OK;

sp<RefBase> obj;

CHECK(msg->findObject("source", &obj));

if (obj != NULL) {

Mutex::Autolock autoLock(mSourceLock);

mSource = static_cast<Source *>(obj.get());//Assign to mSource

} else {

err = UNKNOWN_ERROR;

}

CHECK(mDriver != NULL);

sp<NuPlayerDriver> driver = mDriver.promote();

if (driver != NULL) {

driver->notifySetDataSourceCompleted(err);//Notify the driver that the setting is complete

}

break;

}......

---------------------------------------

void NuPlayerDriver::notifySetDataSourceCompleted(status_t err) {

Mutex::Autolock autoLock(mLock);

CHECK_EQ(mState, STATE_SET_DATASOURCE_PENDING);

mAsyncResult = err;

mState = (err == OK) ? STATE_UNPREPARED : STATE_IDLE;//Back to the driver, the flow player status enters the next stage

mCondition.broadcast();

}

Now let's look at which sources derive from NuPlayer::Source (NuPlayerSource.h & NuPlayerSource.cpp), and which one is used for each kind of data source:

1. URL: further classified as HTTPLiveSource, RTSPSource, or GenericSource
2. File: GenericSource
3. IStreamSource: StreamingSource
4. DataSource: GenericSource
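For a URL data source, the choice among HTTPLiveSource, RTSPSource and GenericSource can be sketched as a small classifier. This is a simplified, hypothetical helper: it assumes the rules "http(s) URL mentioning m3u8 goes to HTTPLiveSource", "rtsp:// or an http(s) URL ending in .sdp goes to RTSPSource", and "everything else goes to GenericSource". The real checks in NuPlayer::setDataSourceAsync contain more detail.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <string>

static std::string lower(std::string s) {
    std::transform(s.begin(), s.end(), s.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    return s;
}

static bool startsWith(const std::string &s, const std::string &prefix) {
    return s.compare(0, prefix.size(), prefix) == 0;
}

static bool endsWith(const std::string &s, const std::string &suffix) {
    return s.size() >= suffix.size() &&
           s.compare(s.size() - suffix.size(), suffix.size(), suffix) == 0;
}

// Hypothetical, simplified: returns the name of the Source subclass a URL maps to.
std::string pickSourceForUrl(const std::string &url) {
    const std::string u = lower(url);
    const bool isHttp = startsWith(u, "http://") || startsWith(u, "https://");
    if (isHttp && u.find("m3u8") != std::string::npos) return "HTTPLiveSource";
    if (startsWith(u, "rtsp://")) return "RTSPSource";
    if (isHttp && endsWith(u, ".sdp")) return "RTSPSource";  // SDP description fetched over HTTP
    return "GenericSource";  // e.g. progressive HTTP download
}
```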

- GenericSource

nuplayer/GenericSource.h & GenericSource.cpp

Several buffering watermarks:

static int64_t kLowWaterMarkUs = 2000000ll; // 2secs

static int64_t kHighWaterMarkUs = 5000000ll; // 5secs

static int64_t kHighWaterMarkRebufferUs = 15000000ll; // 15secs, a newly added watermark

static const ssize_t kLowWaterMarkBytes = 40000;

static const ssize_t kHighWaterMarkBytes = 200000;
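A low and a high watermark are typically used as a hysteresis pair: playback pauses to buffer when the cached duration drops below the low mark, and resumes only once it climbs back above the high mark, so the player does not toggle on every small fluctuation. The sketch below shows that generic policy with the 2 s / 5 s values above; BufferingPolicy is hypothetical and is not GenericSource's exact logic (it ignores kHighWaterMarkRebufferUs and the byte-based marks).

```cpp
#include <cassert>
#include <cstdint>

class BufferingPolicy {
public:
    BufferingPolicy(int64_t lowUs, int64_t highUs) : mLowUs(lowUs), mHighUs(highUs) {}

    // Feed the currently cached duration; returns true while playback should pause.
    bool update(int64_t cachedDurationUs, bool reachedEos) {
        if (!mBuffering && cachedDurationUs < mLowUs && !reachedEos) {
            mBuffering = true;   // fell below the low mark: start buffering
        } else if (mBuffering && (cachedDurationUs > mHighUs || reachedEos)) {
            mBuffering = false;  // refilled past the high mark, or nothing left to fetch
        }
        return mBuffering;
    }

private:
    int64_t mLowUs;
    int64_t mHighUs;
    bool mBuffering = false;
};
```

Usage: `BufferingPolicy p(kLowWaterMarkUs, kHighWaterMarkUs);` then call `p.update(cachedUs, eos)` on each buffering poll.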

status_t NuPlayer::GenericSource::initFromDataSource() {

// ... initializes the extractor and gets the track info and metadata

}

void NuPlayer::GenericSource::prepareAsync() {

if (mLooper == NULL) {

mLooper = new ALooper;

mLooper->setName("generic");

mLooper->start();

mLooper->registerHandler(this);

}

sp<AMessage> msg = new AMessage(kWhatPrepareAsync, this);

msg->post();

}

status_t NuPlayer::GenericSource::feedMoreTSData() {

return OK;

}

- LiveSession

libstagefright/httplive/LiveSession.h & cpp

// static

// Bandwidth Switch Mark Defaults

const int64_t LiveSession::kUpSwitchMarkUs = 15000000ll;

const int64_t LiveSession::kDownSwitchMarkUs = 20000000ll;

const int64_t LiveSession::kUpSwitchMarginUs = 5000000ll;

const int64_t LiveSession::kResumeThresholdUs = 100000ll;

// Buffer Prepare/Ready/Underflow Marks

const int64_t LiveSession::kReadyMarkUs = 5000000ll;

const int64_t LiveSession::kPrepareMarkUs = 1500000ll;

const int64_t LiveSession::kUnderflowMarkUs = 1000000ll;

The fetcher, the bandwidth estimator (a sliding-window estimate, comparable to ExoPlayer's), bitrate switching, and buffering are all handled here.
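A sliding-window bandwidth estimate can be sketched as total bits divided by total download time over the last N segments. SlidingWindowBandwidth is a generic, hypothetical illustration of the technique; it is not LiveSession's actual estimator.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>

class SlidingWindowBandwidth {
public:
    explicit SlidingWindowBandwidth(std::size_t maxSamples) : mMaxSamples(maxSamples) {}

    void addSample(int64_t bytes, int64_t durationUs) {
        mSamples.push_back({bytes, durationUs});
        mTotalBytes += bytes;
        mTotalUs += durationUs;
        if (mSamples.size() > mMaxSamples) {  // evict the oldest sample
            mTotalBytes -= mSamples.front().bytes;
            mTotalUs -= mSamples.front().durationUs;
            mSamples.pop_front();
        }
    }

    // Bits per second over the window, or -1 when there is no data yet.
    int64_t estimateBps() const {
        if (mTotalUs <= 0) return -1;
        return mTotalBytes * 8 * 1000000 / mTotalUs;
    }

private:
    struct Sample {
        int64_t bytes;
        int64_t durationUs;
    };
    std::size_t mMaxSamples;
    std::deque<Sample> mSamples;
    int64_t mTotalBytes = 0;
    int64_t mTotalUs = 0;
};
```

Dividing totals (rather than averaging per-sample rates) weights each download by its duration, so one tiny, very fast request cannot dominate the estimate.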

- HTTPLiveSource

nuplayer directory

enum Flags {

// Don't log any URLs.

kFlagIncognito = 1,

};

NuPlayer::HTTPLiveSource::HTTPLiveSource(...) {

if (headers) { // extra HTTP headers are supported; x-hide-urls-from-log is consumed here and enables kFlagIncognito

mExtraHeaders = *headers;

ssize_t index =

mExtraHeaders.indexOfKey(String8("x-hide-urls-from-log"));

if (index >= 0) {

mFlags |= kFlagIncognito;

mExtraHeaders.removeItemsAt(index);

}

}

}

---------------------------------------

void NuPlayer::HTTPLiveSource::prepareAsync() {

if (mLiveLooper == NULL) {

mLiveLooper = new ALooper;//ALooper as always

mLiveLooper->setName("http live");

mLiveLooper->start();

mLiveLooper->registerHandler(this);

}

sp<AMessage> notify = new AMessage(kWhatSessionNotify, this);

mLiveSession = new LiveSession(

notify,

(mFlags & kFlagIncognito) ? LiveSession::kFlagIncognito : 0,

mHTTPService);

mLiveLooper->registerHandler(mLiveSession);

mLiveSession->connectAsync(//HTTPLiveSource contains LiveSession, and a lot of actual work is done by LiveSession

mURL.c_str(), mExtraHeaders.isEmpty() ? NULL : &mExtraHeaders);

}

- ATSParser

frameworks/av/media/libstagefright/mpeg2ts/ATSParser.cpp

An MPEG-2 TS parser. Although its name also starts with A, it does not use the message mechanism.

- StreamingSource

nuplayer directory

void NuPlayer::StreamingSource::prepareAsync() {

if (mLooper == NULL) {

mLooper = new ALooper;

mLooper->setName("streaming");

mLooper->start(); // the same pattern again

mLooper->registerHandler(this);

}

notifyVideoSizeChanged();

notifyFlagsChanged(0);

notifyPrepared();

}

---------------------------------------

StreamingSource is driven by onReadBuffer. Data, EOS, and discontinuities are all handed to ATSParser, which in turn passes them to AnotherPacketSource for the actual buffering. In fact, all three sources mentioned here end up using AnotherPacketSource.
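The per-packet branch of onReadBuffer below can be isolated as a pure function. This sketch uses hypothetical names and assumes a full 188-byte read: a leading 0x00 byte is the legacy in-band discontinuity command (bit 0 of the second byte selects a format change, bit 1 says a media time follows in host byte order), and anything else is an ordinary TS packet for ATSParser.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <cstring>

enum class PacketKind { TsPacket, TimeDiscontinuity, FormatChangeDiscontinuity };

struct Classified {
    PacketKind kind;
    bool hasMediaTime;
    int64_t mediaTimeUs;
};

Classified classifyPacket(const std::array<uint8_t, 188> &buffer) {
    Classified out{PacketKind::TsPacket, false, 0};
    if (buffer[0] != 0x00) return out;  // regular TS packet (normally starts with sync byte 0x47)
    const uint8_t type = buffer[1];
    out.kind = (type & 1) ? PacketKind::FormatChangeDiscontinuity
                          : PacketKind::TimeDiscontinuity;
    if (type & 2) {  // bytes 2..9 carry a media time, copied as-is like the original memcpy
        out.hasMediaTime = true;
        std::memcpy(&out.mediaTimeUs, &buffer[2], sizeof(out.mediaTimeUs));
    }
    return out;
}
```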

void NuPlayer::StreamingSource::onReadBuffer() {

for (int32_t i = 0; i < kNumListenerQueuePackets; ++i) {

char buffer[188];

sp<AMessage> extra;

ssize_t n = mStreamListener->read(buffer, sizeof(buffer), &extra);//Actually use NuPlayerStreamListener to complete the work

if (n == 0) {

ALOGI("input data EOS reached.");

mTSParser->signalEOS(ERROR_END_OF_STREAM);//EOS

setError(ERROR_END_OF_STREAM);

break;

} else if (n == INFO_DISCONTINUITY) {

int32_t type = ATSParser::DISCONTINUITY_TIME;

int32_t mask;

if (extra != NULL

&& extra->findInt32(

IStreamListener::kKeyDiscontinuityMask, &mask)) {

if (mask == 0) {

ALOGE("Client specified an illegal discontinuity type.");

setError(ERROR_UNSUPPORTED);

break;

}

type = mask;

}

mTSParser->signalDiscontinuity(

(ATSParser::DiscontinuityType)type, extra);

} else if (n < 0) {

break;

} else {

if (buffer[0] == 0x00) {

// XXX legacy

if (extra == NULL) {

extra = new AMessage;

}

uint8_t type = buffer[1];

if (type & 2) {

int64_t mediaTimeUs;

memcpy(&mediaTimeUs, &buffer[2], sizeof(mediaTimeUs));

extra->setInt64(IStreamListener::kKeyMediaTimeUs, mediaTimeUs);

}

mTSParser->signalDiscontinuity(

((type & 1) == 0)

? ATSParser::DISCONTINUITY_TIME

: ATSParser::DISCONTINUITY_FORMATCHANGE,

extra);

} else {

status_t err = mTSParser->feedTSPacket(buffer, sizeof(buffer));

if (err != OK) {

ALOGE("TS Parser returned error %d", err);

mTSParser->signalEOS(err);

setError(err);

break;

}

}

}

}

}

- AnotherPacketSource

frameworks/av/media/libstagefright/mpeg2ts/

It can be compared with the chunk source in ExoPlayer: it is responsible for buffer management, EOS and discontinuity handling, and so on. The three sources above all end up going through AnotherPacketSource.
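The key idea in AnotherPacketSource is that discontinuities travel in-band: a zero-length marker buffer goes into the same FIFO as the data, so the reader meets it exactly where it was queued. A minimal sketch of that idea follows; PacketQueue and its Result values are hypothetical, and real details such as format metadata, the discard flag and blocking reads are left out.

```cpp
#include <cassert>
#include <deque>
#include <mutex>
#include <string>
#include <utility>

class PacketQueue {
public:
    enum class Result { Ok, Discontinuity, WouldBlock, EndOfStream };

    void queueData(std::string payload) {
        std::lock_guard<std::mutex> lock(mLock);
        mEntries.push_back({false, std::move(payload)});
    }

    void queueDiscontinuity() {
        std::lock_guard<std::mutex> lock(mLock);
        mEntries.push_back({true, {}});  // zero-length marker, like the empty ABuffer
        mEos = false;                    // like mEOSResult = OK in queueDiscontinuity
    }

    void signalEos() {
        std::lock_guard<std::mutex> lock(mLock);
        mEos = true;
    }

    Result dequeue(std::string *out) {
        std::lock_guard<std::mutex> lock(mLock);
        if (mEntries.empty()) return mEos ? Result::EndOfStream : Result::WouldBlock;
        Entry e = std::move(mEntries.front());
        mEntries.pop_front();
        if (e.isDiscontinuity) return Result::Discontinuity;
        *out = std::move(e.payload);
        return Result::Ok;
    }

private:
    struct Entry {
        bool isDiscontinuity;
        std::string payload;
    };
    std::mutex mLock;
    std::deque<Entry> mEntries;
    bool mEos = false;
};
```

Note that EOS is only reported once the queue is empty, so data queued before the EOS signal is still delivered.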

bool AnotherPacketSource::hasBufferAvailable(status_t *finalResult) {

Mutex::Autolock autoLock(mLock);

*finalResult = OK;

if (!mEnabled) {

return false;

}

if (!mBuffers.empty()) { // mBuffers is a List of ABuffers used as a FIFO queue

return true;

}

*finalResult = mEOSResult;

return false;

}

--------------------------------------

void AnotherPacketSource::queueDiscontinuity(

ATSParser::DiscontinuityType type,

const sp<AMessage> &extra,

bool discard) {

Mutex::Autolock autoLock(mLock);

if (discard) {

// Leave only discontinuities in the queue.

......

}

mEOSResult = OK;

mLastQueuedTimeUs = 0;

mLatestEnqueuedMeta = NULL;

if (type == ATSParser::DISCONTINUITY_NONE) {

return;

}

mDiscontinuitySegments.push_back(DiscontinuitySegment());

sp<ABuffer> buffer = new ABuffer(0);

buffer->meta()->setInt32("discontinuity", static_cast<int32_t>(type));

buffer->meta()->setMessage("extra", extra);

mBuffers.push_back(buffer); // queue an ABuffer that records the discontinuity, so that whoever reads from the queue handles the discontinuity at the right point

mCondition.signal();

}

Decoder

How is the Decoder initialized? Start from the NuPlayer::onStart method:

void NuPlayer::onStart(int64_t startPositionUs) {

if (!mSourceStarted) {

mSourceStarted = true;

mSource->start();

}

if (startPositionUs > 0) {

performSeek(startPositionUs);

if (mSource->getFormat(false /* audio */) == NULL) {

return;

}

}

...

sp<AMessage> notify = new AMessage(kWhatRendererNotify, this);

++mRendererGeneration;

notify->setInt32("generation", mRendererGeneration);

//Here, initialize the Renderer and its corresponding loop with AVNuFactory

mRenderer = AVNuFactory::get()->createRenderer(mAudioSink, notify, flags);

mRendererLooper = new ALooper;

mRendererLooper->setName("NuPlayerRenderer");

mRendererLooper->start(false, false, ANDROID_PRIORITY_AUDIO);

mRendererLooper->registerHandler(mRenderer);

//Set the playback parameters of the Renderer

status_t err = mRenderer->setPlaybackSettings(mPlaybackSettings);

...

//Set the Renderer for the Decoder, and the relationship between the two is established

if (mVideoDecoder != NULL) {

mVideoDecoder->setRenderer(mRenderer);

}

if (mAudioDecoder != NULL) {

mAudioDecoder->setRenderer(mRenderer);

}

// post the kWhatScanSources message

postScanSources();

}

--------------------------------------

case kWhatScanSources:

{

int32_t generation;

CHECK(msg->findInt32("generation", &generation));

if (generation != mScanSourcesGeneration) {

// Drop obsolete msg.

break;

}

mScanSourcesPending = false;

ALOGV("scanning sources haveAudio=%d, haveVideo=%d",

mAudioDecoder != NULL, mVideoDecoder != NULL);

bool mHadAnySourcesBefore =

(mAudioDecoder != NULL) || (mVideoDecoder != NULL);

bool rescan = false;

// initialize video before audio because successful initialization of

// video may change deep buffer mode of audio.

//Initialize the decoder here

if (mSurface != NULL) {

if (instantiateDecoder(false, &mVideoDecoder) == -EWOULDBLOCK) {

rescan = true;

}

}

// Don't try to re-open audio sink if there's an existing decoder.

if (mAudioSink != NULL && mAudioDecoder == NULL) {

if (instantiateDecoder(true, &mAudioDecoder) == -EWOULDBLOCK) {

rescan = true;

}

}

if (!mHadAnySourcesBefore

&& (mAudioDecoder != NULL || mVideoDecoder != NULL)) {

// This is the first time we've found anything playable.

if (mSourceFlags & Source::FLAG_DYNAMIC_DURATION) {

schedulePollDuration();

}

}

status_t err;

if ((err = mSource->feedMoreTSData()) != OK) {

if (mAudioDecoder == NULL && mVideoDecoder == NULL) {

// We're not currently decoding anything (no audio or

// video tracks found) and we just ran out of input data.

if (err == ERROR_END_OF_STREAM) {

notifyListener(MEDIA_PLAYBACK_COMPLETE, 0, 0);

} else {

notifyListener(MEDIA_ERROR, MEDIA_ERROR_UNKNOWN, err);

}

}

break;

}

// like doSomeWork in ExoPlayer, scanning repeats: if a decoder could not be created yet (-EWOULDBLOCK), the message is re-posted after 100 ms

if (rescan) {

msg->post(100000ll);

mScanSourcesPending = true;

}

break;

}
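The generation check at the top of kWhatScanSources (and the similar mRendererGeneration and mBufferGeneration counters) is a general pattern for discarding messages that were posted before a state change. A minimal sketch, with hypothetical names:

```cpp
#include <cassert>
#include <cstdint>

// Every message carries the generation that was current when it was posted.
struct TaggedMessage {
    int what;
    int32_t generation;
};

class GenerationGuard {
public:
    TaggedMessage make(int what) const { return {what, mGeneration}; }
    // Bump on flush, seek, or component teardown: everything in flight becomes stale.
    void invalidate() { ++mGeneration; }
    bool isStale(const TaggedMessage &msg) const { return msg.generation != mGeneration; }

private:
    int32_t mGeneration = 0;
};
```

The appeal of this design is that nothing has to be removed from the looper's queue: stale messages are still delivered but are simply ignored by the handler.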

---------------------------------------

The Decoder is initialized in NuPlayer::instantiateDecoder:

status_t NuPlayer::instantiateDecoder(

bool audio, sp<DecoderBase> *decoder, bool checkAudioModeChange) {

...

if (!audio) {

AString mime;

CHECK(format->findString("mime", &mime));

sp<AMessage> ccNotify = new AMessage(kWhatClosedCaptionNotify, this);

if (mCCDecoder == NULL) {

mCCDecoder = new CCDecoder(ccNotify); // create the closed-caption decoder

}

if (mSourceFlags & Source::FLAG_SECURE) {

format->setInt32("secure", true);

}

if (mSourceFlags & Source::FLAG_PROTECTED) {

format->setInt32("protected", true);

}

float rate = getFrameRate();

if (rate > 0) {

format->setFloat("operating-rate", rate * mPlaybackSettings.mSpeed);

}

}

if (audio) {

sp<AMessage> notify = new AMessage(kWhatAudioNotify, this);

++mAudioDecoderGeneration;

notify->setInt32("generation", mAudioDecoderGeneration);

if (checkAudioModeChange) {

determineAudioModeChange(format);

}

if (mOffloadAudio)

mSource->setOffloadAudio(true /* offload */);

if (mOffloadAudio) {

const bool hasVideo = (mSource->getFormat(false /*audio */) != NULL);

format->setInt32("has-video", hasVideo);

*decoder = AVNuFactory::get()->createPassThruDecoder(notify, mSource, mRenderer);//Create a pass through audio decoder using AVNuFactory method

} else {

AVNuUtils::get()->setCodecOutputFormat(format);

mSource->setOffloadAudio(false /* offload */);

*decoder = AVNuFactory::get()->createDecoder(notify, mSource, mPID, mRenderer);//Create a normal audio decoder

}

} else {

sp<AMessage> notify = new AMessage(kWhatVideoNotify, this);

++mVideoDecoderGeneration;

notify->setInt32("generation", mVideoDecoderGeneration);

*decoder = new Decoder(

notify, mSource, mPID, mRenderer, mSurface, mCCDecoder);

// create the video decoder; the caption decoder is passed in as a parameter

// enable FRC if high-quality AV sync is requested, even if not

// directly queuing to display, as this will even improve textureview

// playback.

{

char value[PROPERTY_VALUE_MAX];

if (property_get("persist.sys.media.avsync", value, NULL) &&

(!strcmp("1", value) || !strcasecmp("true", value))) {

format->setInt32("auto-frc", 1);

}

}

}

(*decoder)->init();//Decoder initialization

(*decoder)->configure(format);//Decoder configuration

// allocate buffers to decrypt widevine source buffers

if (!audio && (mSourceFlags & Source::FLAG_SECURE)) {

Vector<sp<ABuffer> > inputBufs;

CHECK_EQ((*decoder)->getInputBuffers(&inputBufs), (status_t)OK);

Vector<MediaBuffer *> mediaBufs;

for (size_t i = 0; i < inputBufs.size(); i++) {

const sp<ABuffer> &buffer = inputBufs[i];

MediaBuffer *mbuf = new MediaBuffer(buffer->data(), buffer->size());

mediaBufs.push(mbuf);

}

status_t err = mSource->setBuffers(audio, mediaBufs);

if (err != OK) {

for (size_t i = 0; i < mediaBufs.size(); ++i) {

mediaBufs[i]->release();

}

mediaBufs.clear();

ALOGE("Secure source didn't support secure mediaBufs.");

return err;

}

}

...

return OK;

}

-------------------------------------


void NuPlayer::Decoder::doFlush(bool notifyComplete) {

if (mCCDecoder != NULL) {

mCCDecoder->flush();//flush caption Decoder first

}

if (mRenderer != NULL) {

mRenderer->flush(mIsAudio, notifyComplete); // then flush the Renderer

mRenderer->signalTimeDiscontinuity();

}

status_t err = OK;

if (mCodec != NULL) {

err = mCodec->flush();//Finally, flush Decoder

mCSDsToSubmit = mCSDsForCurrentFormat; // copy operator

++mBufferGeneration;

}

...

releaseAndResetMediaBuffers(); // release and reset the media buffers

mPaused = true;

}

- DecoderBase

The base class of NuPlayer::Decoder. It maintains its own looper, and all work is still driven by asynchronous messages; however, the various onXxx handlers are virtual functions that subclasses must implement. The setRenderer method, which connects the decoder with the Renderer, is also defined here.

NuPlayer::DecoderBase::DecoderBase(const sp<AMessage> &notify)

: mNotify(notify),

mBufferGeneration(0),

mPaused(false),

mStats(new AMessage),

mRequestInputBuffersPending(false) {

// Every decoder has its own looper because MediaCodec operations

// are blocking, but NuPlayer needs asynchronous operations.

mDecoderLooper = new ALooper;

mDecoderLooper->setName("NPDecoder");

mDecoderLooper->start(false, false, ANDROID_PRIORITY_AUDIO);

}

void NuPlayer::DecoderBase::init() {

mDecoderLooper->registerHandler(this);

}

- NuPlayer::Decoder

Each kind of work is handled by the corresponding onXxx method.

First, focus on the two methods init and configure. init is inherited directly from DecoderBase and simply registers the handler with the looper; configure ends up in onConfigure:

--------------------------------------

void NuPlayer::Decoder::onConfigure(const sp<AMessage> &format) {

...

mCodec = AVUtils::get()->createCustomComponentByName(mCodecLooper, mime.c_str(), false /* encoder */, format);

if (mCodec == NULL) {

// otherwise, create the decoder by MIME type

mCodec = MediaCodec::CreateByType(

mCodecLooper, mime.c_str(), false /* encoder */, NULL /* err */, mPid);

}

...

// mCodec is the MediaCodec from libstagefright; nothing special here

err = mCodec->configure(

format, mSurface, NULL /* crypto */, 0 /* flags */);

if (err != OK) {

ALOGE("Failed to configure %s decoder (err=%d)", mComponentName.c_str(), err);

mCodec->release();

mCodec.clear();

handleError(err);

return;

}

rememberCodecSpecificData(format);

// the following should work in configured state

CHECK_EQ((status_t)OK, mCodec->getOutputFormat(&mOutputFormat));

CHECK_EQ((status_t)OK, mCodec->getInputFormat(&mInputFormat));

mStats->setString("mime", mime.c_str());

mStats->setString("component-name", mComponentName.c_str());

if (!mIsAudio) {

int32_t width, height;

if (mOutputFormat->findInt32("width", &width)

&& mOutputFormat->findInt32("height", &height)) {

mStats->setInt32("width", width);

mStats->setInt32("height", height);

}

}

...

//MediaCodec start

err = mCodec->start();

if (err != OK) {

ALOGE("Failed to start %s decoder (err=%d)", mComponentName.c_str(), err);

mCodec->release();

mCodec.clear();

handleError(err);

return;

}

// release and reset the media buffers first

releaseAndResetMediaBuffers();

...

}

As mentioned earlier, NuPlayer::Decoder contains a MediaCodec, so there is no need to study how decoding itself works. The focus here is on what this module outputs and how its input is fed.

Let's first look at the output:

When MediaCodec has an output buffer available, onMessageReceived handles:

case MediaCodec::CB_OUTPUT_AVAILABLE:

{

int32_t index;

size_t offset;

size_t size;

int64_t timeUs;

int32_t flags;

CHECK(msg->findInt32("index", &index));

CHECK(msg->findSize("offset", &offset));

CHECK(msg->findSize("size", &size));

CHECK(msg->findInt64("timeUs", &timeUs));

CHECK(msg->findInt32("flags", &flags));

handleAnOutputBuffer(index, offset, size, timeUs, flags);

break;

}

-------------------------------------

bool NuPlayer::Decoder::handleAnOutputBuffer(

size_t index,

size_t offset,

size_t size,

int64_t timeUs,

int32_t flags) {

// CHECK_LT(bufferIx, mOutputBuffers.size());

sp<ABuffer> buffer;

mCodec->getOutputBuffer(index, &buffer);

....

//Send kWhatRenderBuffer message

sp<AMessage> reply = new AMessage(kWhatRenderBuffer, this);

reply->setSize("buffer-ix", index);

reply->setInt32("generation", mBufferGeneration);

if (eos) {

ALOGI("[%s] saw output EOS", mIsAudio ? "audio" : "video");

//EOS

buffer->meta()->setInt32("eos", true);

reply->setInt32("eos", true);

} else if (mSkipRenderingUntilMediaTimeUs >= 0) {

if (timeUs < mSkipRenderingUntilMediaTimeUs) {

ALOGV("[%s] dropping buffer at time %lld as requested.",

mComponentName.c_str(), (long long)timeUs);

// this frame is earlier than the time we are skipping to: drop it without rendering

reply->post();

return true;

}

mSkipRenderingUntilMediaTimeUs = -1;

} else if ((flags & MediaCodec::BUFFER_FLAG_DATACORRUPT) &&

AVNuUtils::get()->dropCorruptFrame()) {

ALOGV("[%s] dropping corrupt buffer at time %lld as requested.",

mComponentName.c_str(), (long long)timeUs);

// this buffer is corrupt; drop it

reply->post();

return true;

}

mNumFramesTotal += !mIsAudio;

// wait until 1st frame comes out to signal resume complete

notifyResumeCompleteIfNecessary();

if (mRenderer != NULL) {

// send the buffer to the renderer

mRenderer->queueBuffer(mIsAudio, buffer, reply);

if (eos && !isDiscontinuityPending()) {

mRenderer->queueEOS(mIsAudio, ERROR_END_OF_STREAM);

}

}

return true;

}
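The branch order in handleAnOutputBuffer can be condensed into one decision function. The sketch below uses hypothetical names and assumes skipUntilUs < 0 means "no skipping", as with mSkipRenderingUntilMediaTimeUs; Drop corresponds to posting the reply without queueing the buffer to the renderer, and QueueEosToRenderer to queueing the buffer followed by queueEOS.

```cpp
#include <cassert>
#include <cstdint>

enum class OutputAction { QueueToRenderer, QueueEosToRenderer, Drop };

OutputAction decideOutput(bool eos, int64_t timeUs, bool corrupt, bool dropCorrupt,
                          int64_t *skipUntilUs) {
    if (eos) return OutputAction::QueueEosToRenderer;
    if (*skipUntilUs >= 0) {
        if (timeUs < *skipUntilUs) return OutputAction::Drop;  // before the target time
        *skipUntilUs = -1;  // target reached: stop skipping from now on
    } else if (corrupt && dropCorrupt) {
        return OutputAction::Drop;
    }
    return OutputAction::QueueToRenderer;
}
```

As in the original `else if` chain, the corrupt-frame check is not applied to the frame that ends a skip.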

--------------------------------------

case kWhatRenderBuffer:

{

if (!isStaleReply(msg)) {

onRenderBuffer(msg);

}

break;

}

-------------------------------------

void NuPlayer::Decoder::onRenderBuffer(const sp<AMessage> &msg) {

...

if (!mIsAudio) {

int64_t timeUs;

sp<ABuffer> buffer = mOutputBuffers[bufferIx];

buffer->meta()->findInt64("timeUs", &timeUs);

if (mCCDecoder != NULL && mCCDecoder->isSelected()) {

mCCDecoder->display(timeUs);//Subtitle display

}

}

if (msg->findInt32("render", &render) && render) {

int64_t timestampNs;

CHECK(msg->findInt64("timestampNs", &timestampNs));

//Completed by MediaCodec's renderOutputBufferAndRelease

err = mCodec->renderOutputBufferAndRelease(bufferIx, timestampNs);

} else {

mNumOutputFramesDropped += !mIsAudio;

err = mCodec->releaseOutputBuffer(bufferIx);

}

...

}

Now let's look at the input.

When MediaCodec has an input buffer available, onMessageReceived handles:

case MediaCodec::CB_INPUT_AVAILABLE:

{

int32_t index;

CHECK(msg->findInt32("index", &index));

handleAnInputBuffer(index);

break;

}

------------------------------------

bool NuPlayer::Decoder::handleAnInputBuffer(size_t index) {

...

sp<ABuffer> buffer;

mCodec->getInputBuffer(index, &buffer);

...

if (index >= mInputBuffers.size()) {

for (size_t i = mInputBuffers.size(); i <= index; ++i) {

mInputBuffers.add();

mMediaBuffers.add();

mInputBufferIsDequeued.add();

mMediaBuffers.editItemAt(i) = NULL;

mInputBufferIsDequeued.editItemAt(i) = false;

}

}

mInputBuffers.editItemAt(index) = buffer;

//CHECK_LT(bufferIx, mInputBuffers.size());

if (mMediaBuffers[index] != NULL) {

mMediaBuffers[index]->release();

mMediaBuffers.editItemAt(index) = NULL;

}

mInputBufferIsDequeued.editItemAt(index) = true;

if (!mCSDsToSubmit.isEmpty()) {

sp<AMessage> msg = new AMessage();

msg->setSize("buffer-ix", index);

sp<ABuffer> buffer = mCSDsToSubmit.itemAt(0);

ALOGI("[%s] resubmitting CSD", mComponentName.c_str());

msg->setBuffer("buffer", buffer);

mCSDsToSubmit.removeAt(0);

if (!onInputBufferFetched(msg)) {

handleError(UNKNOWN_ERROR);

return false;

}

return true;

}

while (!mPendingInputMessages.empty()) {

sp<AMessage> msg = *mPendingInputMessages.begin();

if (!onInputBufferFetched(msg)) {

break;

}

mPendingInputMessages.erase(mPendingInputMessages.begin());

}

if (!mInputBufferIsDequeued.editItemAt(index)) {

return true;

}

mDequeuedInputBuffers.push_back(index);

onRequestInputBuffers();

return true;

}
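The bookkeeping in handleAnInputBuffer() can be sketched with plain standard-library containers. This is a simplified model under stated assumptions: `InputBookkeeping` and `onInputAvailable` are hypothetical, only buffer indices are tracked (not the data), the CSD resubmission branch is left out, and the `submit` callback stands in for onInputBufferFetched().

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>
#include <vector>

// Hypothetical, simplified model of the input-buffer bookkeeping in
// handleAnInputBuffer(); only indices are tracked.
struct InputBookkeeping {
    std::vector<bool> isDequeued;        // like mInputBufferIsDequeued
    std::deque<size_t> pendingMessages;  // buffer index each pending message targets
    std::deque<size_t> dequeuedBuffers;  // like mDequeuedInputBuffers

    // Called when the codec reports that input buffer `index` is free.
    // `submit(ix)` stands in for onInputBufferFetched(): it returns false
    // when the message cannot be queued yet.
    void onInputAvailable(size_t index,
                          const std::function<bool(size_t)> &submit) {
        // Grow the per-buffer table lazily, as the loop over mInputBuffers does.
        if (index >= isDequeued.size()) {
            isDequeued.resize(index + 1, false);
        }
        isDequeued[index] = true;

        // Retry older messages first, in FIFO order, stopping at the first failure.
        while (!pendingMessages.empty()) {
            size_t ix = pendingMessages.front();
            if (!submit(ix)) {
                break;
            }
            isDequeued.at(ix) = false;  // the codec now owns that buffer
            pendingMessages.pop_front();
        }

        // If no pending message consumed this buffer, keep it for the next fetch.
        if (isDequeued[index]) {
            dequeuedBuffers.push_back(index);
        }
    }
};
```

The design point is ordering: older pending messages are always retried before the freshly available index is handed on to doRequestBuffers()/fetchInputData(), so access units reach the codec in the order they were read.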

------------------------------------

bool NuPlayer::Decoder::onInputBufferFetched(const sp<AMessage> &msg) {

...

sp<ABuffer> buffer;

bool hasBuffer = msg->findBuffer("buffer", &buffer);

// handle widevine classic source - that fills an arbitrary input buffer

MediaBuffer *mediaBuffer = NULL;

if (hasBuffer) {

// Note: MediaBuffer itself is worth studying separately for more details

mediaBuffer = (MediaBuffer *)(buffer->getMediaBufferBase());

if (mediaBuffer != NULL) {

// likely filled another buffer than we requested: adjust buffer index

size_t ix;

for (ix = 0; ix < mInputBuffers.size(); ix++) {

const sp<ABuffer> &buf = mInputBuffers[ix];

if (buf->data() == mediaBuffer->data()) {

// all input buffers are dequeued on start, hence the check

if (!mInputBufferIsDequeued[ix]) {

ALOGV("[%s] received MediaBuffer for #%zu instead of #%zu",

mComponentName.c_str(), ix, bufferIx);

mediaBuffer->release();

return false;

}

// TRICKY: need buffer for the metadata, so instead, set

// codecBuffer to the same (though incorrect) buffer to

// avoid a memcpy into the codecBuffer

codecBuffer = buffer;

codecBuffer->setRange(

mediaBuffer->range_offset(),

mediaBuffer->range_length());

bufferIx = ix;

break;

}

}

CHECK(ix < mInputBuffers.size());

}

}

if (buffer == NULL /* includes !hasBuffer */) {

int32_t streamErr = ERROR_END_OF_STREAM;

CHECK(msg->findInt32("err", &streamErr) || !hasBuffer);

CHECK(streamErr != OK);

// attempt to queue EOS

status_t err = mCodec->queueInputBuffer(

bufferIx,

0,

0,

0,

MediaCodec::BUFFER_FLAG_EOS);

if (err == OK) {

mInputBufferIsDequeued.editItemAt(bufferIx) = false;

} else if (streamErr == ERROR_END_OF_STREAM) {

streamErr = err;

// err will not be ERROR_END_OF_STREAM

}

if (streamErr != ERROR_END_OF_STREAM) {

ALOGE("Stream error for %s (err=%d), EOS %s queued",

mComponentName.c_str(),

streamErr,

err == OK ? "successfully" : "unsuccessfully");

handleError(streamErr);

}

} else {

sp<AMessage> extra;

if (buffer->meta()->findMessage("extra", &extra) && extra != NULL) {

int64_t resumeAtMediaTimeUs;

if (extra->findInt64(

"resume-at-mediaTimeUs", &resumeAtMediaTimeUs)) {

ALOGI("[%s] suppressing rendering until %lld us",

mComponentName.c_str(), (long long)resumeAtMediaTimeUs);

mSkipRenderingUntilMediaTimeUs = resumeAtMediaTimeUs;

}

}

int64_t timeUs = 0;

uint32_t flags = 0;

CHECK(buffer->meta()->findInt64("timeUs", &timeUs));

int32_t eos, csd;

// we do not expect SYNCFRAME for decoder

if (buffer->meta()->findInt32("eos", &eos) && eos) {

flags |= MediaCodec::BUFFER_FLAG_EOS;

} else if (buffer->meta()->findInt32("csd", &csd) && csd) {

flags |= MediaCodec::BUFFER_FLAG_CODECCONFIG;

}

// copy into codec buffer

if (buffer != codecBuffer) {

if (buffer->size() > codecBuffer->capacity()) {

handleError(ERROR_BUFFER_TOO_SMALL);

mDequeuedInputBuffers.push_back(bufferIx);

return false;

}

codecBuffer->setRange(0, buffer->size());

memcpy(codecBuffer->data(), buffer->data(), buffer->size());

}

status_t err = mCodec->queueInputBuffer(

bufferIx,

codecBuffer->offset(),

codecBuffer->size(),

timeUs,

flags);

if (err != OK) {

if (mediaBuffer != NULL) {

mediaBuffer->release();

}

ALOGE("Failed to queue input buffer for %s (err=%d)",

mComponentName.c_str(), err);

handleError(err);

} else {

mInputBufferIsDequeued.editItemAt(bufferIx) = false;

if (mediaBuffer != NULL) {

CHECK(mMediaBuffers[bufferIx] == NULL);

mMediaBuffers.editItemAt(bufferIx) = mediaBuffer;

}

}

}

return true;

}

Whichever path is taken, the actual work is always done by MediaCodec::queueInputBuffer() in the end.
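The flag selection and the copy step of onInputBufferFetched() can be isolated as follows. The flag constants here are placeholder values for the sketch, not the real MediaCodec::BUFFER_FLAG_EOS / BUFFER_FLAG_CODECCONFIG values, and `CodecBuffer` and `copyIntoCodecBuffer` are hypothetical helpers; a false return corresponds to the ERROR_BUFFER_TOO_SMALL path.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Placeholder flag values for this sketch only.
constexpr uint32_t kFlagEos = 1u << 0;
constexpr uint32_t kFlagCodecConfig = 1u << 1;

// EOS takes precedence over CSD, matching the if / else-if in the decoder.
uint32_t inputFlags(bool eos, bool csd) {
    uint32_t flags = 0;
    if (eos) {
        flags |= kFlagEos;
    } else if (csd) {
        flags |= kFlagCodecConfig;
    }
    return flags;
}

// Hypothetical codec input buffer with a fixed capacity.
struct CodecBuffer {
    std::vector<uint8_t> storage;  // capacity == storage.size()
    size_t size = 0;               // bytes currently valid
    explicit CodecBuffer(size_t capacity) : storage(capacity) {}
};

// Copies an access unit into the codec buffer; returns false (and leaves the
// buffer untouched) when the data does not fit.
bool copyIntoCodecBuffer(CodecBuffer &dst, const std::vector<uint8_t> &src) {
    if (src.size() > dst.storage.size()) {
        return false;
    }
    if (!src.empty()) {
        std::memcpy(dst.storage.data(), src.data(), src.size());
    }
    dst.size = src.size();
    return true;
}
```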

-------------------------------------

void NuPlayer::DecoderBase::onRequestInputBuffers() {

if (mRequestInputBuffersPending) {

return;

}

// doRequestBuffers() return true if we should request more data

if (doRequestBuffers()) {

mRequestInputBuffersPending = true;

// Note: the kWhatRequestInputBuffers handler calls onRequestInputBuffers() again, so requests keep repeating every 10 ms while doRequestBuffers() returns true

sp<AMessage> msg = new AMessage(kWhatRequestInputBuffers, this);

msg->post(10 * 1000ll);

}

}

--------------------------------------

case kWhatRequestInputBuffers:

{

mRequestInputBuffersPending = false;

onRequestInputBuffers();

break;

}
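The pair onRequestInputBuffers() / kWhatRequestInputBuffers forms a self-rescheduling poll guarded by mRequestInputBuffersPending. The sketch below reproduces that pattern on a single-threaded fake looper with a virtual clock; `FakeLooper` and `InputRequester` are hypothetical, and unlike the real ALooper nothing here runs on a separate thread.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <map>
#include <utility>

// Hypothetical single-threaded stand-in for ALooper with a virtual clock.
class FakeLooper {
public:
    // Schedules `fn` to run `delayUs` microseconds after the current virtual time.
    void post(std::function<void()> fn, int64_t delayUs) {
        queue_.emplace(nowUs_ + delayUs, std::move(fn));
    }

    // Runs due messages in time order until none is due at or before `untilUs`.
    void runUntil(int64_t untilUs) {
        while (!queue_.empty() && queue_.begin()->first <= untilUs) {
            auto it = queue_.begin();
            nowUs_ = it->first;
            std::function<void()> fn = std::move(it->second);
            queue_.erase(it);
            fn();  // may post further messages
        }
        if (untilUs > nowUs_) {
            nowUs_ = untilUs;
        }
    }

    size_t pending() const { return queue_.size(); }

private:
    int64_t nowUs_ = 0;
    std::multimap<int64_t, std::function<void()>> queue_;
};

// Models onRequestInputBuffers(): at most one request message is outstanding,
// and its handler re-enters onRequestInputBuffers(), re-arming every 10 ms
// for as long as doRequestBuffers() asks for more data.
class InputRequester {
public:
    InputRequester(FakeLooper &looper, std::function<bool()> doRequestBuffers)
        : looper_(looper), doRequestBuffers_(std::move(doRequestBuffers)) {}

    void onRequestInputBuffers() {
        if (requestPending_) {
            return;  // a request message is already scheduled
        }
        if (doRequestBuffers_()) {
            requestPending_ = true;
            // Plays the role of the kWhatRequestInputBuffers handler.
            looper_.post([this] {
                requestPending_ = false;
                onRequestInputBuffers();
            }, 10 * 1000);
        }
    }

private:
    FakeLooper &looper_;
    std::function<bool()> doRequestBuffers_;
    bool requestPending_ = false;
};
```

Calling onRequestInputBuffers() twice in a row schedules only one message, which is what the pending flag is for; the poll stops by itself once doRequestBuffers() returns false.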

--------------------------------------

/*

* returns true if we should request more data

*/

bool NuPlayer::Decoder::doRequestBuffers() {

// mRenderer is only NULL if we have a legacy widevine source that

// is not yet ready. In this case we must not fetch input.

if (isDiscontinuityPending() || mRenderer == NULL) {

return false;

}

status_t err = OK;

while (err == OK && !mDequeuedInputBuffers.empty()) {

size_t bufferIx = *mDequeuedInputBuffers.begin();

sp<AMessage> msg = new AMessage();

msg->setSize("buffer-ix", bufferIx);

err = fetchInputData(msg);

if (err != OK && err != ERROR_END_OF_STREAM) {

// if EOS, need to queue EOS buffer

break;

}

mDequeuedInputBuffers.erase(mDequeuedInputBuffers.begin());

if (!mPendingInputMessages.empty()

|| !onInputBufferFetched(msg)) { // onInputBufferFetched() was analyzed above

mPendingInputMessages.push_back(msg);

}

}

return err == -EWOULDBLOCK

&& mSource->feedMoreTSData() == OK;

}
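doRequestBuffers() drains the free-buffer list until the source would block, and the mPendingInputMessages check keeps new data from overtaking data that could not be queued yet. A minimal model, assuming hypothetical `Status`, `Fetched` and `RequestLoop` types in place of status_t and AMessage, and leaving out the feedMoreTSData() part of the return condition:

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>

// Hypothetical status codes standing in for status_t values such as
// OK, -EWOULDBLOCK and ERROR_END_OF_STREAM.
enum class Status { Ok, WouldBlock, EndOfStream, Error };

// Hypothetical stand-in for the AMessage carrying "buffer-ix" and the data.
struct Fetched {
    size_t bufferIx;
    int payload;
};

struct RequestLoop {
    std::deque<size_t> dequeuedBuffers;   // like mDequeuedInputBuffers
    std::deque<Fetched> pendingMessages;  // like mPendingInputMessages

    // `fetch` plays fetchInputData(), `submit` plays onInputBufferFetched().
    // Returns true when the source would block, i.e. when to poll again.
    bool doRequestBuffers(const std::function<Status(int &)> &fetch,
                          const std::function<bool(const Fetched &)> &submit) {
        Status err = Status::Ok;
        while (err == Status::Ok && !dequeuedBuffers.empty()) {
            int payload = 0;
            err = fetch(payload);
            if (err != Status::Ok && err != Status::EndOfStream) {
                break;  // keep the buffer; EOS still consumes one to queue the EOS flag
            }
            Fetched msg{dequeuedBuffers.front(), payload};
            dequeuedBuffers.pop_front();
            // New data must not overtake older data that is still pending.
            if (!pendingMessages.empty() || !submit(msg)) {
                pendingMessages.push_back(msg);
            }
        }
        return err == Status::WouldBlock;
    }
};
```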

-------------------------------------

status_t NuPlayer::Decoder::fetchInputData(sp<AMessage> &reply) {

sp<ABuffer> accessUnit;

bool dropAccessUnit;

do {

// This is where the Decoder pulls data from the Source

status_t err = mSource->dequeueAccessUnit(mIsAudio, &accessUnit);

...

}while(...)

}

------------------------------------

So far, the Decoder part has been analyzed.

Renderer

The Renderer is initialized in NuPlayer::onStart():

void NuPlayer::onStart(int64_t startPositionUs) {

...

sp<AMessage> notify = new AMessage(kWhatRendererNotify, this);

++mRendererGeneration;

notify->setInt32("generation", mRendererGeneration);

mRenderer = AVNuFactory::get()->createRenderer(mAudioSink, notify, flags);

mRendererLooper = new ALooper;

mRendererLooper->setName("NuPlayerRenderer");

mRendererLooper->start(false, false, ANDROID_PRIORITY_AUDIO);

mRendererLooper->registerHandler(mRenderer);

status_t err = mRenderer->setPlaybackSettings(mPlaybackSettings);

......

float rate = getFrameRate();

if (rate > 0) {

mRenderer->setVideoFrameRate(rate);

}

if (mVideoDecoder != NULL) {

mVideoDecoder->setRenderer(mRenderer);

}

if (mAudioDecoder != NULL) {

mAudioDecoder->setRenderer(mRenderer);

}

postScanSources();

}

Data enters the Renderer in NuPlayer::Decoder::handleAnOutputBuffer():

bool NuPlayer::Decoder::handleAnOutputBuffer(

size_t index,

size_t offset,

size_t size,

int64_t timeUs,

int32_t flags) {

...

if (mRenderer != NULL) {

// send the buffer to renderer

mRenderer->queueBuffer(mIsAudio, buffer, reply);

if (eos && !isDiscontinuityPending()) {

mRenderer->queueEOS(mIsAudio, ERROR_END_OF_STREAM);

}

}

...

}

libmediaplayerservice/nuplayer/NuPlayerRenderer.cpp

mRenderer->queueBuffer() eventually results in a call to the following method:

void NuPlayer::Renderer::onQueueBuffer(const sp<AMessage> &msg) {

int32_t audio;

CHECK(msg->findInt32("audio", &audio));

if (dropBufferIfStale(audio, msg)) {

return;

}

if (audio) {

mHasAudio = true;

} else {

mHasVideo = true;

}

if (mHasVideo) {

if (mVideoScheduler == NULL) {

mVideoScheduler = new VideoFrameScheduler(); // Create the VideoFrameScheduler used to align frames with VSync

mVideoScheduler->init();

}

}

sp<ABuffer> buffer;

CHECK(msg->findBuffer("buffer", &buffer));

sp<AMessage> notifyConsumed;

CHECK(msg->findMessage("notifyConsumed", &notifyConsumed));

QueueEntry entry; // One entry of the renderer's audio or video buffer queue

entry.mBuffer = buffer;

entry.mNotifyConsumed = notifyConsumed;

entry.mOffset = 0;

entry.mFinalResult = OK;

entry.mBufferOrdinal = ++mTotalBuffersQueued;

// Both mAudioQueue and mVideoQueue are List<QueueEntry>

if (audio) {

Mutex::Autolock autoLock(mLock);

mAudioQueue.push_back(entry);

postDrainAudioQueue_l();

} else {

mVideoQueue.push_back(entry);

postDrainVideoQueue(); // Schedules a kWhatDrainVideoQueue message to drain the video queue

}

Mutex::Autolock autoLock(mLock);

if (!mSyncQueues || mAudioQueue.empty() || mVideoQueue.empty()) {

return;

}

sp<ABuffer> firstAudioBuffer = (*mAudioQueue.begin()).mBuffer;

sp<ABuffer> firstVideoBuffer = (*mVideoQueue.begin()).mBuffer;

if (firstAudioBuffer == NULL || firstVideoBuffer == NULL) {

// EOS signalled on either queue.

syncQueuesDone_l();

return;

}

int64_t firstAudioTimeUs;

int64_t firstVideoTimeUs;

CHECK(firstAudioBuffer->meta()

->findInt64("timeUs", &firstAudioTimeUs));

CHECK(firstVideoBuffer->meta()

->findInt64("timeUs", &firstVideoTimeUs));

int64_t diff = firstVideoTimeUs - firstAudioTimeUs;

ALOGV("queueDiff = %.2f secs", diff / 1E6);

if (diff > 100000ll) {

// Audio data starts more than 0.1 secs before video.

// Drop some audio.

(*mAudioQueue.begin()).mNotifyConsumed->post();

mAudioQueue.erase(mAudioQueue.begin());

return;

}

syncQueuesDone_l();

}

Data output of the Renderer
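Before moving on to the output side, the audio/video alignment at the end of onQueueBuffer() above can be modelled in isolation. In this hypothetical sketch (`Queue`, `SyncResult` and `syncQueuesStep` are illustrative names), a `std::nullopt` entry plays the role of the NULL buffer that marks EOS, the mSyncQueues check is omitted, and, as in the original, at most one audio entry is dropped per call.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <optional>

// Each entry is a buffer timestamp in microseconds; std::nullopt marks EOS,
// like the NULL buffer in the renderer's queues.
using Queue = std::deque<std::optional<int64_t>>;

enum class SyncResult { Waiting, DroppedAudio, Done };

// One step of the queue alignment, run after each queued buffer:
// while the first audio buffer starts more than 100 ms before the first
// video buffer, drop one audio buffer; otherwise the queues are in sync.
SyncResult syncQueuesStep(Queue &audio, Queue &video) {
    if (audio.empty() || video.empty()) {
        return SyncResult::Waiting;  // need at least one buffer of each
    }
    if (!audio.front() || !video.front()) {
        return SyncResult::Done;  // EOS on either queue
    }
    int64_t diff = *video.front() - *audio.front();
    if (diff > 100000) {
        audio.pop_front();  // the real code also posts mNotifyConsumed
        return SyncResult::DroppedAudio;
    }
    return SyncResult::Done;  // corresponds to syncQueuesDone_l()
}
```

Note that the comparison is strict: audio starting exactly 100 ms ahead of video is kept.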

postDrainVideoQueue() posts a kWhatDrainVideoQueue message:

void NuPlayer::Renderer::postDrainVideoQueue() {

....

sp<AMessage> msg = new AMessage(kWhatDrainVideoQueue, this);

msg->setInt32("drainGeneration", getDrainGeneration(false /* audio */));

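...

}

The message is handled in NuPlayer::Renderer::onMessageReceived(), which drains one video frame and then schedules the next drain: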

case kWhatDrainVideoQueue:

{

int32_t generation;

CHECK(msg->findInt32("drainGeneration", &generation));

if (generation != getDrainGeneration(false /* audio */)) {

break;

}

mDrainVideoQueuePending = false;

onDrainVideoQueue();

postDrainVideoQueue();

break;

}
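The drainGeneration check is a common pattern for cancelling messages that are already in flight: the generation is captured when the message is posted and compared when it arrives. The sketch below assumes, as a modelling choice, that a flush is what bumps the generation; `DrainGate` and its methods are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical model of the drainGeneration check: each drain message records
// the generation at post time, and a message whose generation no longer
// matches is ignored when it is delivered.
class DrainGate {
public:
    // Returns the value that would be stored as "drainGeneration".
    int32_t post() {
        pending_ = true;
        return generation_;
    }
    // Modelling assumption: a flush invalidates every message in flight.
    void flush() {
        ++generation_;
        pending_ = false;
    }
    // Returns true if the drain should run (i.e. onDrainVideoQueue()).
    bool onMessage(int32_t messageGeneration) {
        if (messageGeneration != generation_) {
            return false;  // stale message: drop it silently
        }
        pending_ = false;  // like mDrainVideoQueuePending = false
        return true;
    }
    bool pending() const { return pending_; }

private:
    int32_t generation_ = 0;
    bool pending_ = false;
};
```

The advantage over removing messages from the looper queue is that nothing has to be searched or cancelled: stale messages simply become no-ops.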

void NuPlayer::Renderer::onDrainVideoQueue() {

...

QueueEntry *entry = &*mVideoQueue.begin();

if (entry->mBuffer == NULL) {

// EOS

notifyEOS(false /* audio */, entry->mFinalResult);

...

return;

}

int64_t nowUs = ALooper::GetNowUs();

int64_t realTimeUs;

int64_t mediaTimeUs = -1;

if (mFlags & FLAG_REAL_TIME) {

CHECK(entry->mBuffer->meta()->findInt64("timeUs", &realTimeUs));

} else {

CHECK(entry->mBuffer->meta()->findInt64("timeUs", &mediaTimeUs));

realTimeUs = getRealTimeUs(mediaTimeUs, nowUs);

}

bool tooLate = false;

if (!mPaused) {

//Compare media time with wall clock

setVideoLateByUs(nowUs - realTimeUs);

tooLate = (mVideoLateByUs > 40000);

if (tooLate) {

ALOGV("video late by %lld us (%.2f secs)",

(long long)mVideoLateByUs, mVideoLateByUs / 1E6);

} else {

int64_t mediaUs = 0;

mMediaClock->getMediaTime(realTimeUs, &mediaUs);

ALOGV("rendering video at media time %.2f secs",

(mFlags & FLAG_REAL_TIME ? realTimeUs :

mediaUs) / 1E6);

...

}

} else {

setVideoLateByUs(0);

...

}

// Always render the first video frame while keeping stats on A/V sync.

if (!mVideoSampleReceived) {

realTimeUs = nowUs;

tooLate = false;

}

entry->mNotifyConsumed->setInt64("timestampNs", realTimeUs * 1000ll);

entry->mNotifyConsumed->setInt32("render", !tooLate);

entry->mNotifyConsumed->post();

mVideoQueue.erase(mVideoQueue.begin());

entry = NULL;

mVideoSampleReceived = true;

if (!mPaused) {

if (!mVideoRenderingStarted) {

mVideoRenderingStarted = true;

notifyVideoRenderingStart(); // Video rendering has just started: posts kWhatVideoRenderingStart

}

Mutex::Autolock autoLock(mLock);

notifyIfMediaRenderingStarted_l(); // Similar to notifyVideoRenderingStart() above: posts kWhatMediaRenderingStart, which is finally handled in NuPlayer::onMessageReceived()

}

}
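The core of onDrainVideoQueue() is a lateness test against a 40 ms threshold, with two overrides: a paused renderer reports no lateness, and the very first frame is always rendered immediately. A hypothetical pure-function version (`FrameDecision` and `decideFrame` are illustrative names; the media-clock conversion that produces realTimeUs is assumed to have happened already):

```cpp
#include <cassert>
#include <cstdint>

struct FrameDecision {
    bool render;          // becomes the "render" field of mNotifyConsumed
    int64_t timestampNs;  // becomes the "timestampNs" field
};

// Simplified model of onDrainVideoQueue(): a frame more than 40 ms behind the
// wall clock is dropped, except the very first frame, which is always shown
// and scheduled for "now". While paused, lateness is treated as 0.
FrameDecision decideFrame(int64_t nowUs, int64_t realTimeUs, bool paused,
                          bool firstFrame) {
    bool tooLate = false;
    if (!paused) {
        int64_t lateByUs = nowUs - realTimeUs;
        tooLate = lateByUs > 40000;
    }
    if (firstFrame) {
        realTimeUs = nowUs;
        tooLate = false;
    }
    return {!tooLate, realTimeUs * 1000};
}
```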

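On the NuPlayer side, the Renderer's notifications (kWhatRendererNotify) are handled in NuPlayer::onMessageReceived():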

case kWhatRendererNotify:

{

...

int32_t what;

CHECK(msg->findInt32("what", &what));

// Dispatch on "what"; many familiar player events show up here

if (what == Renderer::kWhatEOS) {

...

if (audio) {

mAudioEOS = true;

} else {

mVideoEOS = true;

}

if (finalResult == ERROR_END_OF_STREAM) {

ALOGV("reached %s EOS", audio ? "audio" : "video");

} else {

ALOGE("%s track encountered an error (%d)",

audio ? "audio" : "video", finalResult);

notifyListener(

MEDIA_ERROR, MEDIA_ERROR_UNKNOWN, finalResult);

}

if ((mAudioEOS || mAudioDecoder == NULL)

&& (mVideoEOS || mVideoDecoder == NULL)) {

notifyListener(MEDIA_PLAYBACK_COMPLETE, 0, 0);

}

} else if (what == Renderer::kWhatFlushComplete) {

int32_t audio;

CHECK(msg->findInt32("audio", &audio));

if (audio) {

mAudioEOS = false;

} else {

mVideoEOS = false;

}

ALOGV("renderer %s flush completed.", audio ? "audio" : "video");

if (audio && (mFlushingAudio == NONE || mFlushingAudio == FLUSHED

|| mFlushingAudio == SHUT_DOWN)) {

// Flush has been handled by tear down.

break;

}

handleFlushComplete(audio, false /* isDecoder */);

finishFlushIfPossible();

} else if (what == Renderer::kWhatVideoRenderingStart) {

// Corresponds to notifyVideoRenderingStart() above: the first video frame has just been rendered

notifyListener(MEDIA_INFO, MEDIA_INFO_RENDERING_START, 0);

} else if (what == Renderer::kWhatMediaRenderingStart) {

ALOGV("media rendering started");

// Corresponds to notifyIfMediaRenderingStarted_l() above

notifyListener(MEDIA_STARTED, 0, 0);

} else if (what == Renderer::kWhatAudioTearDown) {

....

}

break;

}
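The EOS bookkeeping in kWhatRendererNotify can be summarized as: playback completes once every decoder that exists has reported EOS, and a flush clears the flag again. A minimal sketch with a hypothetical `EosTracker` type; the MEDIA_ERROR path for non-EOS results is left out:

```cpp
#include <cassert>

// Hypothetical model of the EOS bookkeeping in kWhatRendererNotify:
// playback is complete once every *existing* decoder has reached EOS.
struct EosTracker {
    bool hasAudioDecoder;
    bool hasVideoDecoder;
    bool audioEos = false;
    bool videoEos = false;

    EosTracker(bool hasAudio, bool hasVideo)
        : hasAudioDecoder(hasAudio), hasVideoDecoder(hasVideo) {}

    // Returns true when MEDIA_PLAYBACK_COMPLETE would be sent.
    bool onEos(bool audio) {
        (audio ? audioEos : videoEos) = true;
        return (audioEos || !hasAudioDecoder) && (videoEos || !hasVideoDecoder);
    }

    // kWhatFlushComplete clears the flag, e.g. after a seek.
    void onFlushComplete(bool audio) { (audio ? audioEos : videoEos) = false; }
};
```

An audio-only stream therefore completes on its first audio EOS, because the missing video decoder counts as already finished.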

The MEDIA_INFO_RENDERING_START and MEDIA_STARTED constants used above are defined under frameworks/av/include/media:

enum media_info_type {

...

// The player just pushed the very first video frame for rendering

MEDIA_INFO_RENDERING_START = 3,

...

};

enum media_event_type {

...

MEDIA_STARTED = 6,

...

};

This completes the analysis of NuPlayer's AHandler mechanism and its three modules (Source, Decoder, and Renderer) in Android. Comments and discussion are welcome.