Structure explanation

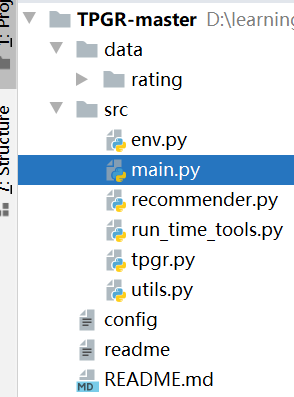

After extracting the github download code, you can see the following directory

- The data directory stores data sets, which can be replaced with their own data sets as needed.

- The src directory is all the code

Before looking at the code, let's look at the readme file. If there is readme in a code, we must look at it first. It usually includes a brief introduction to the whole project, including some parameter settings.

Its README file and explanation are as follows:

In run_time_tools.py, mf_with_bias() is to gain embeddings of items by utilizing the PMF model;

run_ time_ MF in Tools File_ with_ Bias () uses PMF to get the embedding of item and the result item_embeddings_value does not exist rating_file (defined in config file).

clustering_vector_constructor() is to construct item representation in order to building the balanced hierarchical clustering tree.

clustering_vector_constructor() is used to obtain the item representation of the hierarchical tree, according to rating/vae/mf. Result exists_ file_ Path.

In tpgr.py, class PRE_TRAIN, TREE and TPGR correspond to the rnn pretraining step, tree constructing step and model training step of the TPGR model.

tpgr.py has three classes, PRE_TRAIN: rnn pre training; TREE: build TREE; TPGR: TPGR training.

The config parameters are as follows. The specific meanings are written out and will not be explained:

[META] ACTION_DIM: the embedding dimension of each action (item). STATISTIC_DIM: the dimension of the statistic information, in our implementation, there are 9 kinds of statistic information. REWARD_DIM: the dimension of the one-hot reward representation. MAX_TRAINING_STEP: the maximum training steps. DISCOUNT_FACTOR: discount factor for calculating cumulated reward. EPISODE_LENGTH: the number of recommendation interactions of each episode. LOG_STEP: the number of interval steps for printing evaluation logs when training the TPGR model. [ENV] RATING_FILE: the name of the original rating file, the rating files are stored in data/rating as default. ALPHA: to control the ratio of the sequential reward as described in the paper. BOUNDARY_RATING: to divide positive and negative rating. MAX_RATING: maximum rating in the rating file. MIN_RATING: minimum rating in the rating file. [TPGR] PRE_TRAINING: bool type (T/F), indicating whether conducting pretraining step. PRE_TRAINING_STEP: the number of pretraining steps. PRE_TRAINING_MASK_LENGTH: control the length of the historical rewards to recover when pretraining. PRE_TRAINING_SEQ_LENGTH: the number of recommendation interactions of each episode when pretraining. PRE_TRAINING_MAX_ITEM_NUM: the maximum number of items to be considered when pretraining. PRE_TRAINING_RNN_TRUNCATED_LENGTH: rnn truncated length. PRE_TRAINING_BATCH_SIZE: the batch size adopted in pre-training step. PRE_TRAINING_LOG_STEP: the number of interval steps for printing evaluation logs when pre-training. PRE_TRAINING_LEARNING_RATE: initialized learning rate for AdamOptimizer adopted in pre-training step. PRE_TRAINING_L2_FACTOR: l2 factor for l2 normalization adopted in pre-training step. CONSTRUCT_TREE: bool type (T/F), indicating whether conducting tree building step. CHILD_NUM: children number of the non-leaf nodes in the tree. CLUSTERING_TYPE: clustering type, PCA or KMEANS. CLUSTERING_VECTOR_TYPE: item representation type, RATING, VAE or MF. CLUSTERING_VECTOR_VERSION: the version of the item representation. RNN_MODEL_VS: the version of the pretrained rnn. TREE_VS: the version of the tree. LOAD_MODEL: bool type (T/F), indicating whether loading existing model. MODEL_LOAD_VS: the version of the model to load from. MODEL_SAVE_VS: the version of the model to save to. HIDDEN_UNITS: the hidden units list for each policy network, seperated by ','. SAMPLE_EPISODES_PER_BATCH: the number of sample episodes for each user in a training batch. SAMPLE_USERS_PER_BATCH: the number of sample users in a training batch. EVAL_BATCH_SIZE: batch size of episodes for evaluation. LEARNING_RATE: initialized learning rate for AdamOptimizer. L2_FACTOR: l2 factor for l2 normalization. ENTROPY_FACTOR: to control the smooth of the possibility distribution of the policy.

DEBUG

- When running, if there is an error due to missing package, use conda install packagename to install the package.

- After installing the required packages, run the program and find the error filenotfounderror: [errno 2] no such file or directory: '/ data/run_ time/demo_ data_ env_ objects'

Find the corresponding statement:

# if you replace the rating file without renaming, you should delete the old env object file before you run the code

env_object_path = '../data/run_time/%s_env_objects'%self.config['ENV']['RATING_FILE']

####Many lines of code are omitted###

# dump the env object

utils.pickle_save({'r_matrix': self.r_matrix, 'user_to_rele_num': self.user_to_rele_num}, env_object_path)

Find the "pickle_save" function in utils

def pickle_save(object, file_path):

f = open(file_path, 'wb')

pickle.dump(object, f)

- pickle provides a simple persistence function. Objects can be stored on disk as files.

It is found that run does not exist in the data directory_ Time folder, so create one manually.

- If the directory is created, there is still an error: Ran out of input. Find the error location, or is it related to pickle:

def pickle_load(file_path):

f = open(file_path, 'rb')

return pickle.load(f)

Reason: this error occurs when the load file is empty. It was found that the file was not saved successfully in the previous step.

Error 'module' object is not callable when saving. After checking, it is found that pickle The above is correct

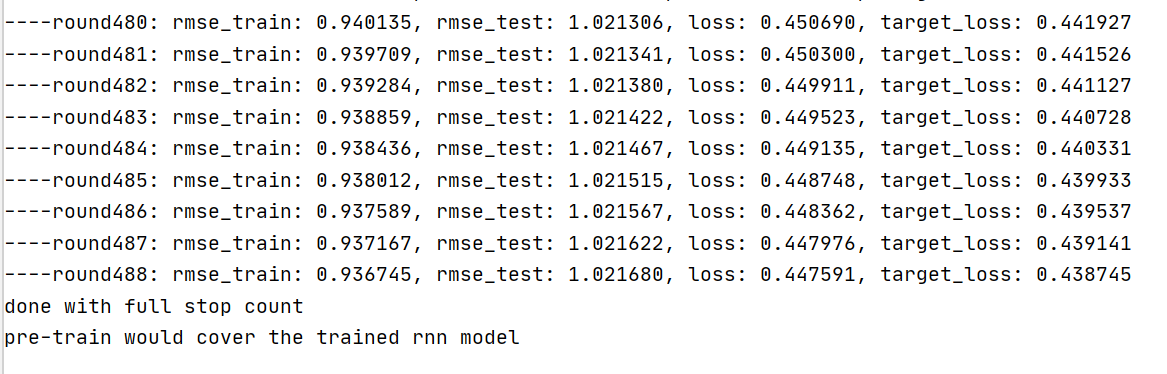

- In short, when you encounter a bug, you can change it one by one. After changing it, you can run it again as follows:

Code explanation

This section explains the whole code framework.

Supplementary knowledge

- configparser: the package used to read the configuration file. The format of the configuration file is as follows: the section contained in the brackets "[]". The following is a configuration similar to key value.

- When reading a file, the meaning of r,rb,w,wb, etc.:

'r': read only. The file must already exist.

'r +': readable and writable. The file must already exist and be written as append at the end of the file content.

'rb': indicates that the file is read in binary mode. The file must already exist.

'w': write only. When opened, a new file is created by default. If the file already exists, it will be overwritten (that is, the original data in the file will be cleared and overwritten by the newly written data).

'w +': write and read. Open, create a new file and write data. If the file already exists, overwrite the write.

'wb': indicates that it is opened in binary write mode and can only write files. If the file does not exist, create the file; Overwrite write if file already exists.

'a': additional write. If you open an existing file, you can directly operate on the existing file. If the open file does not exist, you can create a new file, which can only be written (appended later) and cannot be read.

'a +': additional reading and writing. The file opening method is the same as the writing method and 'a', but it can be read. It should be noted that if you just open a file with 'a +', you can't read it directly, because the cursor is already at the end of the file, unless you move the cursor to the initial position or any non end position. (you can use the seek() method to solve this problem. See the Model 8 example below for details.)