GitHub Linux source code

Reference link 1

Reference link 2

Reference link 3

Reference link 4

- Multiplexing: carrying multiple signals over a single channel. Here it means a single thread handles the events of multiple connections.

- The IO discussed here is mainly network IO. In Linux everything is a file, so a network connection is represented by a file descriptor (fd).

File descriptor fd

A file descriptor is an abstract handle that refers to an open file. Concretely, an fd is a non-negative integer that indexes the array of open files maintained by a process. When a program creates or opens a file, the kernel returns a file descriptor to the process.
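To make this concrete, here is a minimal sketch. The helper name `fd_demo` is made up for illustration, and a UNIX-domain socket pair stands in for a real network connection. It shows that the kernel hands back plain non-negative integers and that a socket is driven through the same `read`/`write` calls as a regular file.

```c
#include <sys/socket.h>
#include <unistd.h>

/*
 * Hypothetical helper: creates a connected pair of UNIX-domain sockets,
 * sends a short message through them with plain read()/write(), and
 * returns the number of bytes received (or -1 on error).
 * out_fds receives the two descriptor numbers for inspection.
 */
int fd_demo(int out_fds[2])
{
    int sv[2];
    char buf[16];
    const char msg[] = "ping";      /* 5 bytes including the trailing NUL */
    ssize_t n;

    if (socketpair(AF_UNIX, SOCK_STREAM, 0, sv) < 0)
        return -1;

    /* Both values are indices into this process's table of open files. */
    out_fds[0] = sv[0];
    out_fds[1] = sv[1];

    /* Sockets are used through the same calls as files. */
    if (write(sv[0], msg, sizeof(msg)) != (ssize_t)sizeof(msg)) {
        close(sv[0]);
        close(sv[1]);
        return -1;
    }
    n = read(sv[1], buf, sizeof(buf));

    close(sv[0]);
    close(sv[1]);
    return (int)n;
}
```

Calling `fd_demo` from a `main` function and printing `out_fds` typically shows two small consecutive integers, since the kernel hands out the lowest free slots in the process's file array.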

IO mode

With buffered IO on Linux, the data is first copied into a kernel buffer and then copied from the kernel buffer into the address space of the process.

- Blocking IO

When the user process calls recvfrom, it enters the blocked state. The kernel waits for the data to arrive in the kernel buffer; once the data is ready, the kernel copies it to user space and the process is unblocked.

- Non-blocking IO

Active polling: when the user process issues a read and the data in the kernel is not ready yet, the call returns an error (EAGAIN or EWOULDBLOCK) immediately. The user process therefore always gets a reply right away and only has to check whether it is an error. If it is, the process issues the read again.

- IO multiplexing

In essence this is still blocking IO: when the user process calls select, it blocks while the kernel watches for events. The difference is that a single process can handle multiple sockets at the same time.

- Asynchronous IO

After the user process initiates the read, it can go on to do other things. When the kernel receives the asynchronous read, it prepares the data, copies it to user space, and then sends a signal to the user process once the operation is complete.
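The non-blocking mode described above can be observed from user space. The following is a minimal sketch, not a network program: it uses a pipe instead of a socket and `read` instead of recvfrom so that it runs without any peer, and the helper name `nonblocking_read_demo` is made up for illustration.

```c
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

/*
 * Hypothetical helper: puts the read end of a pipe into non-blocking
 * mode and reads from it twice, once while it is empty and once after
 * data has been written.
 * Returns 0 if the first read failed with EAGAIN/EWOULDBLOCK and the
 * second read returned the byte, -1 otherwise.
 */
int nonblocking_read_demo(void)
{
    int p[2];
    char c = 0;
    int flags, result = -1;
    ssize_t n;

    if (pipe(p) < 0)
        return -1;

    /* Switch the read end to non-blocking mode. */
    flags = fcntl(p[0], F_GETFL, 0);
    if (flags < 0 || fcntl(p[0], F_SETFL, flags | O_NONBLOCK) < 0)
        goto out;

    /* No data yet: read() returns -1 at once instead of blocking. */
    n = read(p[0], &c, 1);
    if (n != -1 || (errno != EAGAIN && errno != EWOULDBLOCK))
        goto out;

    /* Make data available, then poll again by simply retrying. */
    if (write(p[1], "x", 1) != 1)
        goto out;
    n = read(p[0], &c, 1);
    if (n == 1 && c == 'x')
        result = 0;

out:
    close(p[0]);
    close(p[1]);
    return result;
}
```

A real program would retry in a loop, which burns CPU while nothing is ready. That cost is the motivation for letting the kernel do the waiting with select or epoll.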

Comparison of select and epoll

- How the file descriptors are passed from user mode into the kernel

select: the caller builds three descriptor sets, which watch for readable, writable and exceptional conditions respectively, and they are copied into the kernel on every call. The number of fds a single select call can watch is limited by FD_SETSIZE, which is 1024 by default;

epoll: calling epoll_create makes the kernel create a red-black tree and a ready list (which stores the ready file descriptors) in the kernel's cache area. When the user then calls epoll_ctl to add a file descriptor, a node is added to the red-black tree;

- How the kernel detects the read/write status of file descriptors

select: the kernel polls, traversing every fd on each call;

epoll: uses a callback mechanism. An epoll_ctl add operation not only adds a node to the red-black tree but also registers a callback function. When the kernel detects that an fd has become readable or writable, it invokes the callback, which puts the fd on the ready list;

- How the ready fds are returned to user mode

select: the fd_set that was passed into the kernel is copied back out to user mode, and select returns the number of ready fds. At that point user mode does not know which fds are ready. It has to traverse the set and test each fd, decrementing the returned count each time a ready fd is found;

epoll: epoll_wait checks whether the ready list contains any fds, copies the ready entries into the caller's array, and returns the number of ready entries. User mode only needs to walk that array and handle the entries one by one. Only the ready entries are copied, so the cost of the copy scales with the number of ready fds rather than the number being watched. The mainline kernel copies these entries out to user mode directly; it does not share a memory area with user mode through mmap, as is often claimed.
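The difference in how ready descriptors come back can be sketched from the caller's side. In the sketch below the names `NFDS`, `ready_via_select` and `ready_via_epoll` are made up for illustration, and both functions use a zero timeout so that they never block.

```c
#include <sys/epoll.h>
#include <sys/select.h>
#include <unistd.h>

#define NFDS 3  /* hypothetical number of monitored descriptors */

/*
 * select: the set is rebuilt for the call, and afterwards every fd has
 * to be tested. All fds must be smaller than FD_SETSIZE.
 * Returns a ready fd, or -1 if none is ready.
 */
int ready_via_select(const int fds[NFDS])
{
    fd_set rset;
    struct timeval tv = {0, 0};            /* do not block */
    int i, maxfd = -1, found = -1;

    FD_ZERO(&rset);
    for (i = 0; i < NFDS; i++) {
        FD_SET(fds[i], &rset);
        if (fds[i] > maxfd)
            maxfd = fds[i];
    }
    if (select(maxfd + 1, &rset, NULL, NULL, &tv) <= 0)
        return -1;

    /* select only reports how many are ready; scan to find which. */
    for (i = 0; i < NFDS; i++)
        if (FD_ISSET(fds[i], &rset))
            found = fds[i];
    return found;
}

/*
 * epoll: descriptors are registered with epoll_ctl, and epoll_wait
 * fills the output array with ready entries only.
 * Returns a ready fd, or -1 if none is ready.
 */
int ready_via_epoll(const int fds[NFDS])
{
    struct epoll_event ev = { .events = EPOLLIN }, out[NFDS];
    int i, n, found = -1;
    int epfd = epoll_create1(0);

    if (epfd < 0)
        return -1;
    for (i = 0; i < NFDS; i++) {
        ev.data.fd = fds[i];
        if (epoll_ctl(epfd, EPOLL_CTL_ADD, fds[i], &ev) < 0) {
            close(epfd);
            return -1;
        }
    }
    n = epoll_wait(epfd, out, NFDS, 0);    /* timeout 0: do not block */
    if (n > 0)
        found = out[0].data.fd;            /* every entry in out[] is ready */
    close(epfd);
    return found;
}
```

For brevity `ready_via_epoll` creates and destroys the epoll instance on each call. A real server would create it once and reuse it, which is where the saving over select comes from.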

Detailed explanation of epoll

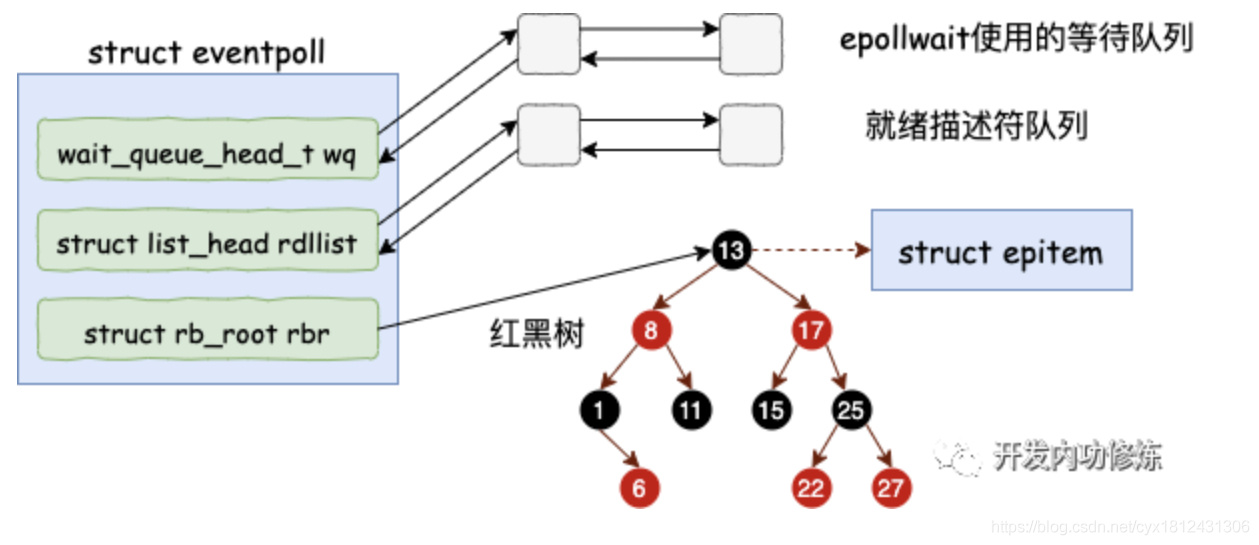

- epoll_create creates an eventpoll object, which contains the red-black tree (all monitored socket connections), the ready list, and the wait queue of blocked processes;

// From GitHub
// eventpoll structure
struct eventpoll {
    ...
    /* Wait queue used by sys_epoll_wait() */
    // When the softirq finds that data is ready, it uses wq to find the user process blocked on this epoll object
    wait_queue_head_t wq;

    /* Wait queue used by file->poll() */
    wait_queue_head_t poll_wait;

    /* List of ready file descriptors */
    // Ready list
    struct list_head rdllist;

    /* RB tree root used to store monitored fd structs */
    // Red-black tree holding all monitored socket connections
    struct rb_root_cached rbr;

    // The file associated with this eventpoll object
    struct file *file;
    ...
};

// Create the eventpoll object and its file, and associate them
static int do_epoll_create(int flags)
{
    int error, fd;
    struct eventpoll *ep = NULL;
    struct file *file;
    ...
}

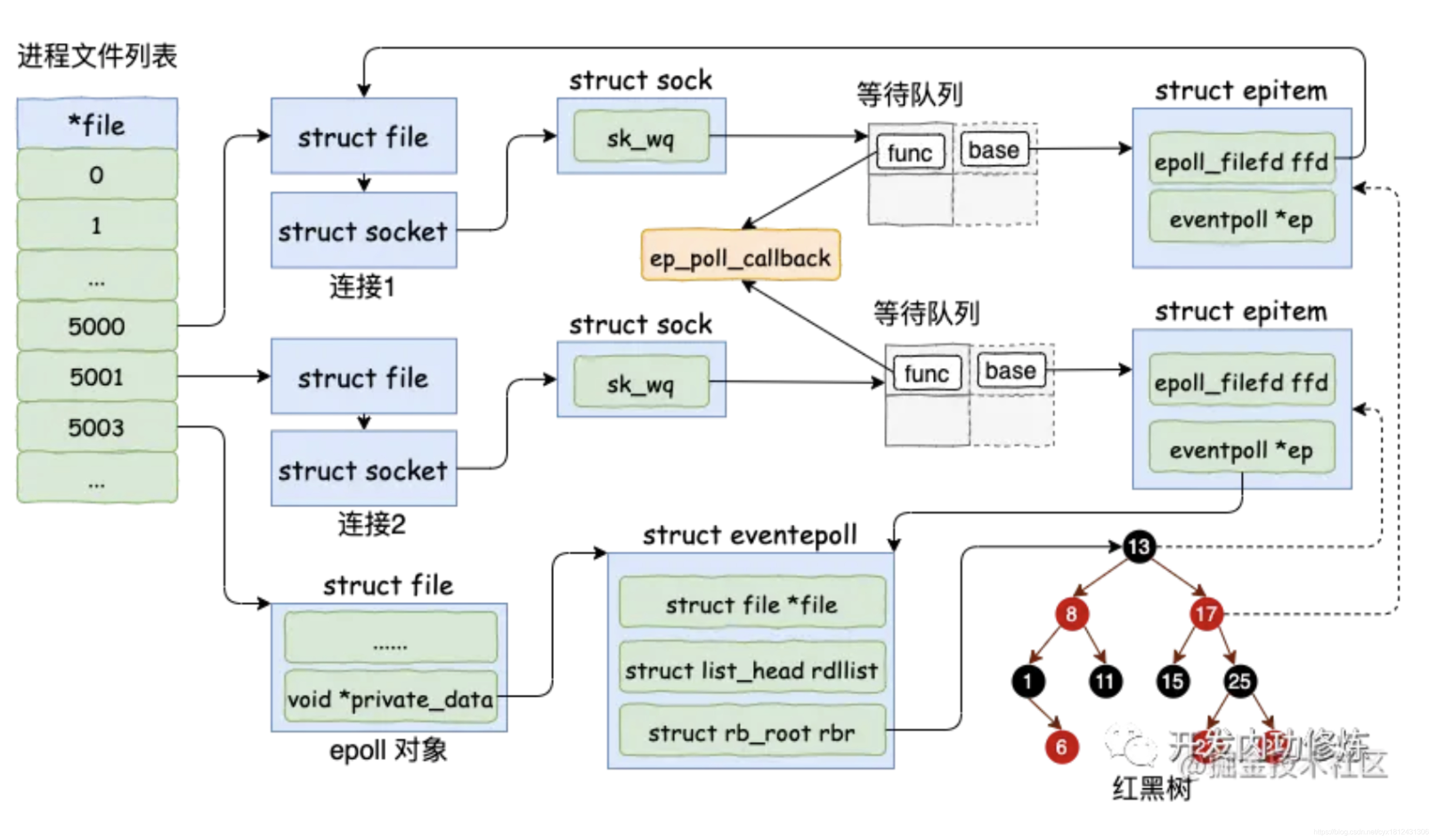

When a process opens a file, the kernel creates a file structure that represents the context of that open file, and the process then accesses the file through an fd of type int. In fact the process maintains an array of files, and the fd is an index into that array. When epoll_ctl is called, the epoll fd is passed in; the kernel uses it to find the file that corresponds to the epoll instance and, through that file, the eventpoll object.
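Because an epoll instance is reached through an ordinary fd, it behaves like any other open file from the caller's point of view. A minimal sketch of that idea follows (the helper name `epoll_fd_demo` is made up for illustration): it registers one epoll instance inside another, which is possible only because the first instance is itself a file.

```c
#include <sys/epoll.h>
#include <unistd.h>

/*
 * Hypothetical helper: creates two epoll instances and registers the
 * first inside the second.
 * Returns 0 on success, -1 on failure.
 */
int epoll_fd_demo(void)
{
    struct epoll_event ev = { .events = EPOLLIN };
    int inner, outer, rc = -1;

    inner = epoll_create1(0);       /* fd -> struct file -> eventpoll */
    if (inner < 0)
        return -1;
    outer = epoll_create1(0);
    if (outer < 0) {
        close(inner);
        return -1;
    }

    ev.data.fd = inner;
    /* The kernel looks up both files from these two integers. */
    if (epoll_ctl(outer, EPOLL_CTL_ADD, inner, &ev) == 0)
        rc = 0;

    close(outer);
    close(inner);                   /* released like any other file */
    return rc;
}
```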

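- epoll_ctl call steps (for an add operation)

- Allocate and initialize a red-black tree node, the epitem object

- Insert the epitem into the red-black tree of the eventpoll object

- Register a callback on the wait queue of the socket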

struct epitem {
    // Red-black tree node
    struct rb_node rbn;
    // File descriptor information of the socket
    struct epoll_filefd ffd;
    // The eventpoll object this item belongs to
    struct eventpoll *ep;
    // Wait queue
    struct list_head pwqlist;
};

static int ep_insert(struct eventpoll *ep, const struct epoll_event *event,
                     struct file *tfile, int fd, int full_check)
{
    int error, pwake = 0;
    __poll_t revents;
    long user_watches;
    struct epitem *epi;
    struct ep_pqueue epq;
    struct eventpoll *tep = NULL;

    if (is_file_epoll(tfile))
        tep = tfile->private_data;

    lockdep_assert_irqs_enabled();

    user_watches = atomic_long_read(&ep->user->epoll_watches);
    if (unlikely(user_watches >= max_user_watches))
        return -ENOSPC;
    // Allocate the epitem
    if (!(epi = kmem_cache_zalloc(epi_cache, GFP_KERNEL)))
        return -ENOMEM;

    /* Item initialization follow here ... */
    INIT_LIST_HEAD(&epi->rdllink);
    epi->ep = ep;
    ep_set_ffd(&epi->ffd, tfile, fd);
    epi->event = *event;
    epi->next = EP_UNACTIVE_PTR;

    if (tep)
        mutex_lock_nested(&tep->mtx, 1);
    /* Add the current item to the list of active epoll hook for this file */
    if (unlikely(attach_epitem(tfile, epi) < 0)) {
        kmem_cache_free(epi_cache, epi);
        if (tep)
            mutex_unlock(&tep->mtx);
        return -ENOMEM;
    }

    if (full_check && !tep)
        list_file(tfile);

    atomic_long_inc(&ep->user->epoll_watches);

    // Insert the item into the red-black tree of the eventpoll
    ep_rbtree_insert(ep, epi);
    if (tep)
        mutex_unlock(&tep->mtx);

    /* Initialize the poll table using the queue callback */
    epq.epi = epi;
    init_poll_funcptr(&epq.pt, ep_ptable_queue_proc);

    // Register the callback: ep_ptable_queue_proc is called here to hook the item into
    // the wait queue of the target file, so that the item is notified when the file has an event
    revents = ep_item_poll(epi, &epq.pt, 1);

    /*
     * We have to check if something went wrong during the poll wait queue
     * install process. Namely an allocation for a wait queue failed due
     * high memory pressure.
     */
    if (unlikely(!epq.epi)) {
        ep_remove(ep, epi);
        return -ENOMEM;
    }

    /* We have to drop the new item inside our item list to keep track of it */
    write_lock_irq(&ep->lock);

    /* record NAPI ID of new item if present */
    ep_set_busy_poll_napi_id(epi);

    /* If the file is already "ready" we drop it inside the ready list */
    if (revents && !ep_is_linked(epi)) {
        list_add_tail(&epi->rdllink, &ep->rdllist);
        ep_pm_stay_awake(epi);

        /* Notify waiting tasks that events are available */
        if (waitqueue_active(&ep->wq))
            wake_up(&ep->wq);
        if (waitqueue_active(&ep->poll_wait))
            pwake++;
    }

    write_unlock_irq(&ep->lock);

    /* We have to call this outside the lock */
    if (pwake)
        ep_poll_safewake(ep, NULL);

    return 0;
}
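Some of the behaviour of ep_insert is visible from user space. The following minimal sketch (the helper name `epoll_ctl_demo` is made up, and a pipe stands in for a socket) checks three things: a descriptor that is already readable when it is added is reported immediately, which matches the "already ready" branch above; adding the same descriptor a second time fails with EEXIST; and after EPOLL_CTL_DEL nothing is reported any more.

```c
#include <errno.h>
#include <sys/epoll.h>
#include <unistd.h>

/*
 * Hypothetical helper exercising EPOLL_CTL_ADD and EPOLL_CTL_DEL.
 * Returns 0 if all observations described above hold, -1 otherwise.
 */
int epoll_ctl_demo(void)
{
    struct epoll_event ev = { .events = EPOLLIN }, out;
    int p[2], epfd, rc = -1;

    if (pipe(p) < 0)
        return -1;
    epfd = epoll_create1(0);
    if (epfd < 0)
        goto out_pipe;

    /* Make the read end readable before it is registered. */
    if (write(p[1], "x", 1) != 1)
        goto out_all;

    ev.data.fd = p[0];
    if (epoll_ctl(epfd, EPOLL_CTL_ADD, p[0], &ev) < 0)
        goto out_all;
    /* Already ready at add time, so it is reported without blocking. */
    if (epoll_wait(epfd, &out, 1, 0) != 1 || out.data.fd != p[0])
        goto out_all;

    /* The descriptor is already registered with this epoll instance. */
    if (epoll_ctl(epfd, EPOLL_CTL_ADD, p[0], &ev) != -1 || errno != EEXIST)
        goto out_all;

    /* After removal the pending data is no longer reported. */
    if (epoll_ctl(epfd, EPOLL_CTL_DEL, p[0], NULL) < 0)
        goto out_all;
    if (epoll_wait(epfd, &out, 1, 0) != 0)
        goto out_all;

    rc = 0;
out_all:
    close(epfd);
out_pipe:
    close(p[0]);
    close(p[1]);
    return rc;
}
```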

Suppose two sockets and one epoll object are created, and the files corresponding to them are 5000, 5001 and 5002 respectively.

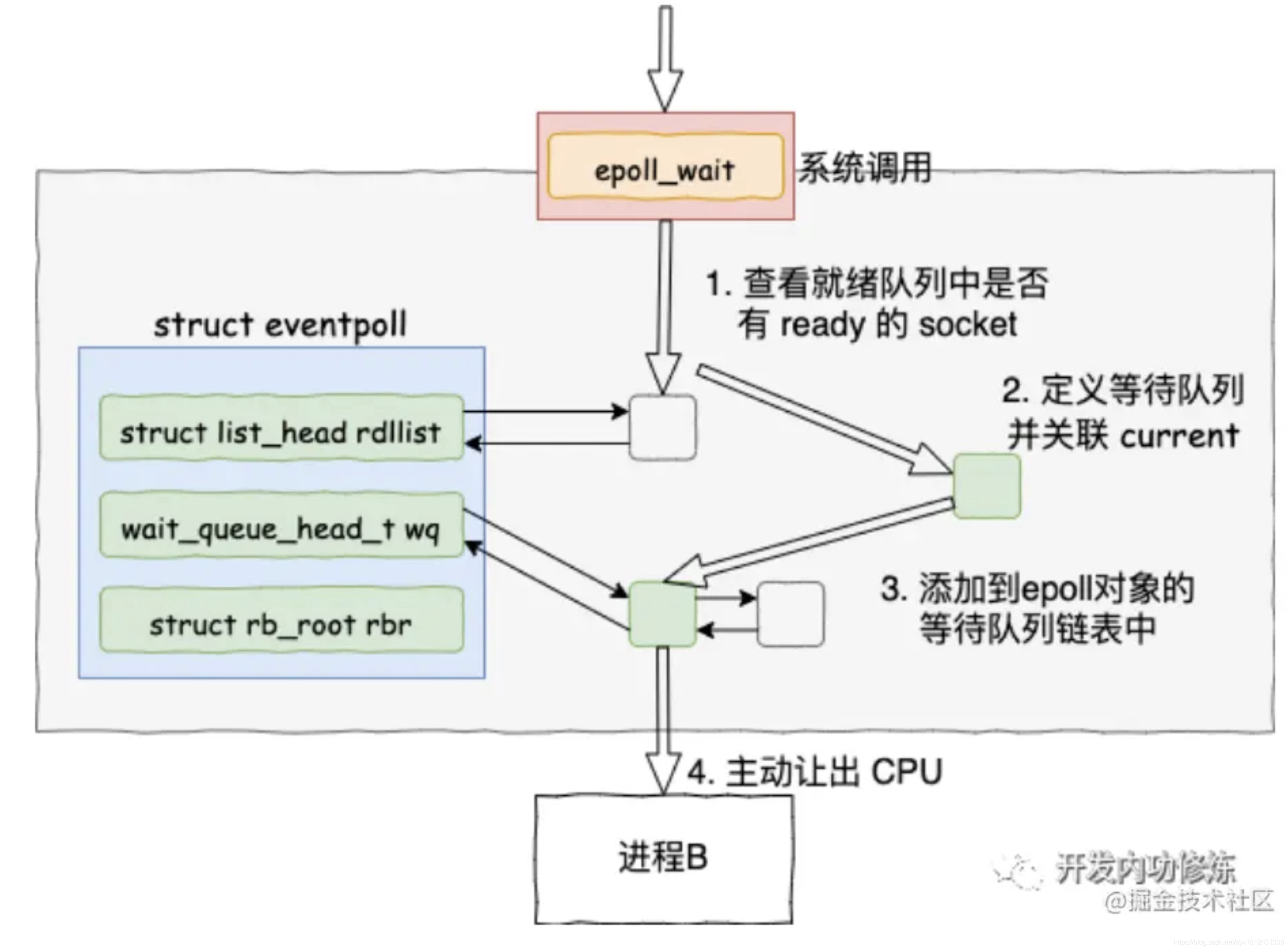

- When epoll_wait is called, it checks the ready list and returns right away if the list contains data. If there is no data, it creates a wait queue entry, adds it to the wait queue (wq) of the eventpoll object, and blocks the calling process.

static int do_epoll_wait(int epfd, struct epoll_event __user *events,
                         int maxevents, struct timespec64 *to)
{
    int error;
    struct fd f;
    struct eventpoll *ep;

    /* The maximum number of event must be greater than zero */
    if (maxevents <= 0 || maxevents > EP_MAX_EVENTS)
        return -EINVAL;

    /* Verify that the area passed by the user is writeable */
    if (!access_ok(events, maxevents * sizeof(struct epoll_event)))
        return -EFAULT;

    /* Get the file of eventpoll */
    f = fdget(epfd);
    if (!f.file)
        return -EBADF;

    /* Check that the file behind epfd really is an eventpoll file */
    error = -EINVAL;
    if (!is_file_epoll(f.file))
        goto error_fput;

    // Get the eventpoll object
    ep = f.file->private_data;

    // Wait for events
    error = ep_poll(ep, events, maxevents, to);

error_fput:
    fdput(f);
    return error;
}
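The observable behaviour of epoll_wait can be probed with a minimal sketch like the one below (the helper name `epoll_wait_demo` is made up, and a pipe stands in for a socket). The maxevents check corresponds to the validation at the top of do_epoll_wait; the other checks follow the documented behaviour of the system call.

```c
#include <errno.h>
#include <sys/epoll.h>
#include <unistd.h>

/*
 * Hypothetical helper probing epoll_wait from user space.
 * Returns 0 if every observation below holds, -1 otherwise.
 */
int epoll_wait_demo(void)
{
    struct epoll_event ev = { .events = EPOLLIN }, out[4];
    int p[2], epfd, rc = -1;

    if (pipe(p) < 0)
        return -1;
    epfd = epoll_create1(0);
    if (epfd < 0)
        goto out_pipe;
    ev.data.fd = p[0];
    if (epoll_ctl(epfd, EPOLL_CTL_ADD, p[0], &ev) < 0)
        goto out_all;

    /* maxevents must be greater than zero. */
    if (epoll_wait(epfd, out, 0, 0) != -1 || errno != EINVAL)
        goto out_all;

    /* The first argument must be an epoll fd, not just any open file. */
    if (epoll_wait(p[0], out, 4, 0) != -1 || errno != EINVAL)
        goto out_all;

    /* Empty ready list: the call sleeps for the 10 ms timeout, then returns 0. */
    if (epoll_wait(epfd, out, 4, 10) != 0)
        goto out_all;

    /* Once data is written, even an infinite timeout returns at once. */
    if (write(p[1], "x", 1) != 1)
        goto out_all;
    if (epoll_wait(epfd, out, 4, -1) != 1 || out[0].data.fd != p[0])
        goto out_all;

    rc = 0;
out_all:
    close(epfd);
out_pipe:
    close(p[0]);
    close(p[1]);
    return rc;
}
```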