Detailed explanation of Nginx load balancing configuration and algorithm

yjssjm 2020-03-12 21:57:53

Tags: linux, operation and maintenance, nginx

1. Brief introduction to load balancing

Suppose your nginx server acts as a proxy for two web servers and uses the polling (round-robin) load balancing algorithm. If the web program on one of the machines is stopped and can no longer be reached, nginx will still distribute requests to that server. If the connection timeout is long, the client's page simply waits for a response, and the user experience suffers. How can we avoid this? Here is a picture to illustrate the problem.

Suppose this happens to web2. nginx sends the first request to web1, but with an improper configuration it keeps distributing requests to web2 and then waits for web2 to respond. The request is redistributed to web1 only after the response timeout expires, so the longer the timeout, the longer the user waits.
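One possible way to shorten this wait is sketched below. It assumes the upstream name `test_server` and the server addresses used later in this article; the timeout values are illustrative assumptions, not recommendations from the original text.

```nginx
# Sketch only: the timeout values are assumptions, tune them for your services.
upstream test_server {
    # After 1 failed attempt within 10s, skip this server for the next 10s.
    server 192.168.13.133:80 max_fails=1 fail_timeout=10s;
    server 192.168.13.139:80 max_fails=1 fail_timeout=10s;
}

server {
    listen 80;
    server_name localhost;

    location / {
        proxy_pass http://test_server;
        # Give up quickly if a back end does not accept the connection.
        proxy_connect_timeout 2s;
        # Give up if the back end sends no data for this long between reads.
        proxy_read_timeout 5s;
        # On a connection error or timeout, pass the request to the next server.
        proxy_next_upstream error timeout;
    }
}
```

With settings like these, a request that hits the stopped web2 is handed to web1 after about two seconds, and web2 is skipped for a while instead of being tried on every other request.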

2. Preparation

Prepare three virtual machines with nginx installed: one as the reverse proxy server and the other two as real servers, to simulate load balancing.

    192.168.13.129    # Reverse proxy server
    192.168.13.133    # Real server
    192.168.13.139    # Real server

3. Configuration of three servers

Configure the reverse proxy server (192.168.13.129) as follows:

    [root@server ~]# vim /etc/nginx/conf.d/default.conf
    # Empty the file and add the following
    upstream test_server {
        # Port 80 matches the listen port of the two real servers
        server 192.168.13.133:80;
        server 192.168.13.139:80;
    }
    server {
        listen 80;
        server_name localhost;
        location / {
            proxy_pass http://test_server;
        }
    }
    [root@server ~]# nginx -t
    [root@server ~]# nginx -s reload

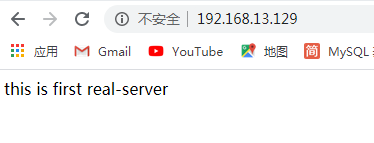

First real server configuration (192.168.13.133):

    [root@real-server ~]# vim /etc/nginx/conf.d/default.conf
    # Empty the file and add the following
    server {
        listen 80;
        server_name localhost;
        location / {
            root /usr/share/nginx/html/login;
            index index.html index.htm;
        }
    }
    [root@real-server ~]# nginx -t
    [root@real-server ~]# nginx -s reload
    # Create the directory and file, and write test data
    [root@real-server ~]# mkdir -p /usr/share/nginx/html/login
    [root@real-server ~]# echo "this is first real-server" > /usr/share/nginx/html/login/index.html

Second real server configuration (192.168.13.139):

    [root@real-server ~]# vim /etc/nginx/conf.d/default.conf
    # Empty the file and add the following
    server {
        listen 80;
        server_name localhost;
        location / {
            root /usr/share/nginx/html/login;
            index index.html index.htm;
        }
    }
    [root@real-server ~]# nginx -t
    [root@real-server ~]# nginx -s reload
    # Create the directory and file, and write test data
    [root@real-server ~]# mkdir -p /usr/share/nginx/html/login
    [root@real-server ~]# echo "this is second real-server" > /usr/share/nginx/html/login/index.html

Test:

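Open a browser and enter the reverse proxy server's IP: http://192.168.13.129/

Refresh the page several times. With the default polling algorithm, the two test pages should be returned in turn.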
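To see which real server answered a request without relying on the page content, one option is to expose nginx's built-in `$upstream_addr` variable in a response header on the reverse proxy. This is only a sketch; the header name `X-Upstream-Addr` is an arbitrary choice made for this example.

```nginx
location / {
    proxy_pass http://test_server;
    # $upstream_addr holds the address of the back end that handled the request.
    # "X-Upstream-Addr" is a made-up header name used only for this test.
    add_header X-Upstream-Addr $upstream_addr;
}
```

After reloading nginx, the header can be read in the browser's developer tools. With the default algorithm its value should switch between 192.168.13.133:80 and 192.168.13.139:80 on successive requests.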

4. Load balancing algorithm

The upstream block supports four load balancing scheduling algorithms:

A. Polling (default): requests are allocated to the back-end servers one by one, in the order they arrive.

B. ip_hash: each request is allocated according to the hash of the client IP, so the same client IP always reaches the same back-end server. This keeps requests from one IP on a fixed machine and solves the session problem.

C. url_hash: each request is allocated according to the hash of the requested URL, so the same URL is always directed to the same back-end server. This is efficient when the back-end servers act as caches.

D. fair: a more intelligent algorithm than the ones above. It balances the load according to page size and loading time, that is, it allocates requests according to the response time of the back-end servers and gives priority to those that respond quickly. nginx itself does not support fair. To use this algorithm, you must install the third-party upstream_fair module.
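For url_hash, nginx 1.7.2 and later include a generic `hash` directive in the upstream module, so no extra module should be needed on those versions. A minimal sketch, reusing the addresses from this article:

```nginx
upstream test_server {
    # Choose the server from a hash of the request URI, so the same URL
    # is always sent to the same back end (useful when back ends cache).
    hash $request_uri;
    server 192.168.13.133:80;
    server 192.168.13.139:80;
}
```

Writing `hash $request_uri consistent;` switches to consistent hashing, which remaps only a small share of URLs when a server is added to or removed from the group.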

Configuration examples

1. Hot standby: with two servers, the second server provides service only when the first server fails.

    upstream test_server {
        server 192.168.13.133:80;
        server 192.168.13.139:80 backup;
    }

Then access the proxy server's IP again.

2. Polling: nginx uses polling by default, and each server's weight defaults to 1. Requests go to the first server, the second server, the first server, the second server, and so on. Because polling is the default, no parameters need to be added.

3. Weighted polling: requests are distributed to the servers in proportion to their configured weights. If weight is not set, it defaults to 1. With the weights below, about one request in four goes to 192.168.13.133 and three in four go to 192.168.13.139.

    upstream test_server {
        server 192.168.13.133:80 weight=1;
        server 192.168.13.139:80 weight=3;
    }

4. ip_hash: nginx sends requests from the same client IP to the same server.

    upstream test_server {
        server 192.168.13.133:80;
        server 192.168.13.139:80;
        ip_hash;
    }

5. nginx load balancing configuration status parameters

down: the server temporarily does not participate in load balancing.

backup: a reserved standby machine. It receives requests only when all the non-backup machines are unavailable, so it carries the least load.

max_fails: the number of failed attempts allowed within the fail_timeout period. The default value is 1. Once this number is reached, the server is considered unavailable.

fail_timeout: how long the server is suspended after max_fails failures. The value is in seconds unless a unit is given, and the default is 10 seconds. max_fails is used together with fail_timeout.

    upstream test_server {
        # Weight 2 in weighted polling; after two failed requests the server is suspended for two seconds
        server 192.168.13.133:80 weight=2 max_fails=2 fail_timeout=2;
        # Weight 3 in weighted polling; after three failed requests the server is suspended for three seconds
        server 192.168.13.139:80 weight=3 max_fails=3 fail_timeout=3;
    }
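The down and backup parameters can be combined with the settings above. The sketch below assumes two extra machines, 192.168.13.140 and 192.168.13.141, which are hypothetical and not part of this article's test environment.

```nginx
upstream test_server {
    server 192.168.13.133:80 weight=2 max_fails=2 fail_timeout=2s;
    server 192.168.13.139:80 weight=3 max_fails=3 fail_timeout=3s;
    # Hypothetical machine taken out of rotation by hand, e.g. for maintenance.
    server 192.168.13.140:80 down;
    # Hypothetical standby, used only when the servers above are unavailable.
    server 192.168.13.141:80 backup;
}
```

Note that backup cannot be used in the same upstream block as ip_hash or hash, so this layout applies to polling and weighted polling only.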