Transformer details

"Attention Is All You Need" is a paper from Google that takes the idea of attention as far as it can go. It proposes a new model, the Transformer, which abandons the CNNs and RNNs used in earlier deep learning models. Strictly speaking, this is not entirely true: the position-wise feed-forward layers can be described as one-dimensional convolutions with kernel size 1. The model is widely used in NLP tasks such as machine translation, question answering, text summarization and speech recognition.


1 Overall architecture of the Transformer

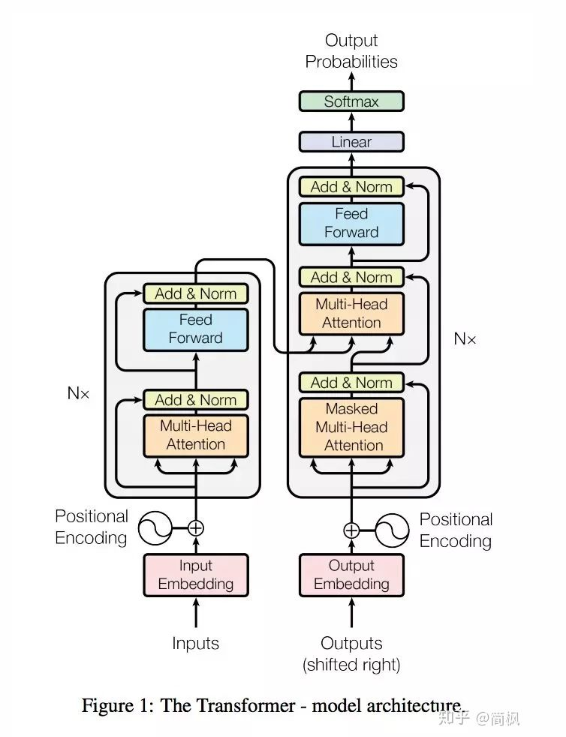

Like the classical seq2seq model, the Transformer uses an encoder-decoder architecture. In the figure above, the left block enclosed by N× is one encoder layer, and the encoder in the paper stacks six such layers. The right block enclosed by N× is one decoder layer, and the decoder also has six layers.

The input sequence first passes through a word embedding. The result is added to the positional encoding and then fed into the encoder. The output sequence is processed in the same way and fed into the decoder.

Finally, the output of the decoder passes through a linear layer followed by a softmax.

That is the overall architecture of the Transformer. Let's first introduce the encoder and the decoder.

1.1 Encoder

The encoder consists of six identical layers, and each layer has two sublayers:

- The first is multi-head self-attention

- The second is a position-wise feed-forward network, i.e. a fully connected network applied to each position separately

Each sublayer is wrapped in a residual connection followed by layer normalization.
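The residual-plus-normalization wrapper described above computes LayerNorm(x + Sublayer(x)). Below is a minimal NumPy sketch of it. It assumes a hypothetical linear `sublayer` standing in for attention or the feed-forward network, and it omits LayerNorm's learnable gain and bias. `layer_norm` and `sublayer_connection` are illustrative names and are not part of the code later in this article.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    # Normalize each position's feature vector (last axis) to mean 0, variance 1.
    # The learnable gain and bias of a real LayerNorm are omitted in this sketch.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def sublayer_connection(x, sublayer):
    # Post-norm residual wrapper: LayerNorm(x + Sublayer(x))
    return layer_norm(x + sublayer(x))

rng = np.random.default_rng(0)
x = rng.normal(size=(2, 5, 8))         # [batch, seq_len, model_dim]
w = rng.normal(size=(8, 8)) * 0.1      # hypothetical sublayer weights
y = sublayer_connection(x, lambda t: t @ w)
print(y.shape)
```

Normalization comes after the addition, which is the "post-norm" arrangement used in the paper. As a result, every position's output has roughly zero mean and unit variance across its features.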

1.2 Decoder

Like the encoder, the decoder is composed of six identical layers, and each layer has three sublayers:

- The first is (masked) multi-head self-attention

- The second is multi-head context attention

- The third is a position-wise feed-forward network

As in the encoder, each of these three sublayers has a residual connection followed by layer normalization.

The decoder differs from the encoder mainly in the multi-head context attention sublayer.

1.3 Attention

As I mentioned in previous articles, attention can be summed up in one sentence: the decoder receives a weighted average of the encoder outputs. It is mainly used in seq2seq models. The weights can be arranged in a matrix, called the attention matrix. Each entry says how much attention the output y at a given time step pays to each part of the input x.
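To make "weighted average" concrete, here is a small NumPy sketch. It uses made-up encoder states h_i and a single decoder state s_t, and it scores them with a plain dot product. The dot-product score is an assumption for illustration; the scoring choices are discussed below.

```python
import numpy as np

def softmax(z):
    z = z - z.max()                # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

# Hypothetical encoder hidden states h_1..h_4 (one row each) and a decoder state s_t
h = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0],
              [0.5, 0.5]])
s_t = np.array([1.0, 0.0])

scores = h @ s_t                   # one score per input position
weights = softmax(scores)          # attention weights: non-negative, summing to 1
context = weights @ h              # weighted average of encoder states, fed to the decoder
print(weights.round(3), context.round(3))
```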

There are many kinds of attention, and two typical ones are additive attention and multiplicative attention. Additive attention concatenates the encoder hidden state with the decoder hidden state and scores the result with a small feed-forward network. Multiplicative attention scores the two states with a dot product.

The Google paper uses multiplicative attention.

I previously described the soft-align attention used in the ESIM model in a separate article, which you can refer to.

1.4 Self-Attention

When introducing attention above, we referred to two hidden states, h_i and s_t. The former is the hidden state at position i of the input sequence, and the latter is the hidden state at position t of the output sequence. Self-attention simply means that the output sequence is the input sequence itself. Each position therefore computes attention scores over the positions of its own sequence.

1.5 Context-Attention

Context attention is the attention between the encoder and the decoder. It operates between two different sequences, unlike self-attention, which is computed within a single sequence.

Whatever the kind of attention, there are many ways to compute the attention weights. Commonly used ones are:

- additive attention

- location-based

- general

- dot-product

- scaled dot-product

The Transformer uses the last one: scaled dot-product attention.
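As a rough sketch, the scoring functions in the list can be written as follows for one decoder state s and encoder states h_i. The parameters W, W1, W2 and v are hypothetical and randomly initialized here. The location-based variant is omitted because it scores from the decoder state alone.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 4
s = rng.normal(size=d)           # decoder (query) state
h = rng.normal(size=(6, d))      # encoder (key) states h_1..h_6, one row each

# Hypothetical parameters, randomly initialized for illustration only
W = rng.normal(size=(d, d))      # "general"
W1 = rng.normal(size=(d, d))     # "additive": [W1 W2] applied to the concatenation [s; h_i]
W2 = rng.normal(size=(d, d))
v = rng.normal(size=d)

dot = h @ s                                   # dot-product:        s^T h_i
general = (h @ W.T) @ s                       # general:            s^T W h_i
additive = np.tanh(s @ W1.T + h @ W2.T) @ v   # additive:           v^T tanh(W1 s + W2 h_i)
scaled = dot / np.sqrt(d)                     # scaled dot-product: s^T h_i / sqrt(d)

print(dot.shape, general.shape, additive.shape, scaled.shape)
```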

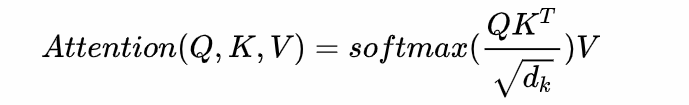

1.6 Scaled Dot-Product Attention

So what is scaled dot-product attention?

In the paper, Google describes the attention mechanism as follows:

An attention function can be described as mapping a query and a set of key-value pairs to an output, where the query, keys, values, and output are all vectors. The output is computed as a weighted sum of the values, where the weight assigned to each value is computed by a compatibility function of the query with the corresponding key.

In other words, the weight on each value is determined by the similarity between the query and the corresponding key. The formula in the paper is:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V$$

where d_k is the dimension of the keys.

Seeing Q, K and V may be a little confusing at first. Don't worry; they are explained below.

The structure of scaled dot-product attention is shown below.

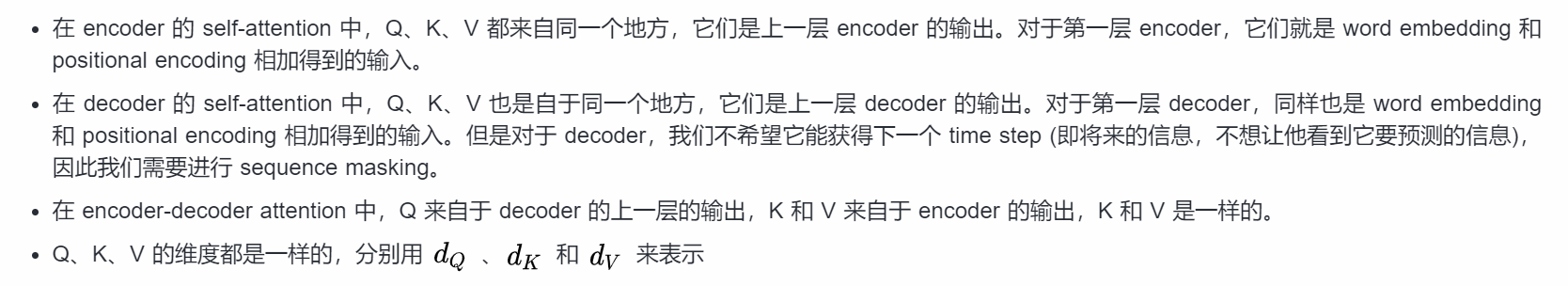

Now let's look at what Q, K and V stand for. Q is the query, K the keys and V the values. Each query is compared with every key, and the resulting weights are used to average the values.

This may still sound abstract, so consider an application. In automatic question answering, Q can be the word-vector sequence of the answer and K = V the word-vector sequence of the question. The output is then the so-called Aligned Question Embedding.

One of the main contributions of the Google paper is showing that self-attention (internal attention) is very important for sequence encoding in machine translation, and in general seq2seq tasks. Earlier seq2seq work mostly used attention only on the decoder side.

1.7 Implementation of scaled dot-product attention

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import numpy as np


class ScaledDotProductAttention(nn.Module):
    """Scaled dot-product attention mechanism."""

    def __init__(self, attention_dropout=0.0):
        super(ScaledDotProductAttention, self).__init__()
        self.dropout = nn.Dropout(attention_dropout)
        self.softmax = nn.Softmax(dim=2)

    def forward(self, q, k, v, scale=None, attn_mask=None):
        """Forward propagation.

        Args:
            q: Queries tensor, shape [B, L_q, D_q]
            k: Keys tensor, shape [B, L_k, D_k]
            v: Values tensor, shape [B, L_v, D_v], usually the same as k
            scale: Scaling factor, a floating-point scalar
            attn_mask: Boolean masking tensor, shape [B, L_q, L_k];
                True marks positions that must not be attended to

        Returns:
            The context tensor and the attention tensor
        """
        # Similarity between every query and every key: [B, L_q, L_k]
        attention = torch.bmm(q, k.transpose(1, 2))
        if scale is not None:
            attention = attention * scale
        if attn_mask is not None:
            # Set masked positions to negative infinity so softmax gives them zero weight
            attention = attention.masked_fill(attn_mask, float("-inf"))
        # Softmax over the key dimension
        attention = self.softmax(attention)
        # Add dropout
        attention = self.dropout(attention)
        # Weighted sum of the values: [B, L_q, D_v]
        context = torch.bmm(attention, v)
        return context, attention
```
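Why divide by √d_k? The paper argues that for large d_k the dot products grow large in magnitude and push the softmax into regions with extremely small gradients. The NumPy sketch below checks this numerically. It assumes that query and key components are independent with mean 0 and variance 1. Under that assumption the variance of q·k grows like d_k, while q·k/√d_k stays near 1.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 10000                                   # number of sampled (q, k) pairs
variances = {}
for d_k in (4, 64, 512):
    q = rng.normal(size=(n, d_k))           # query components: mean 0, variance 1
    k = rng.normal(size=(n, d_k))           # key components: mean 0, variance 1
    dots = (q * k).sum(axis=1)              # n raw dot products q·k
    scaled = dots / np.sqrt(d_k)            # scaled as in the attention formula
    variances[d_k] = (dots.var(), scaled.var())
    print(d_k, round(dots.var(), 1), round(scaled.var(), 2))
```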

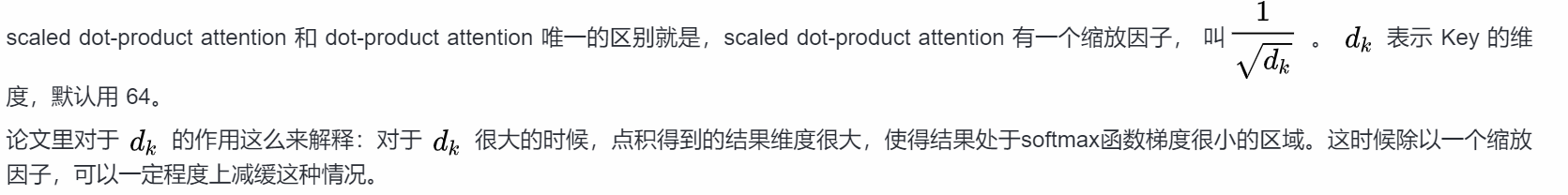

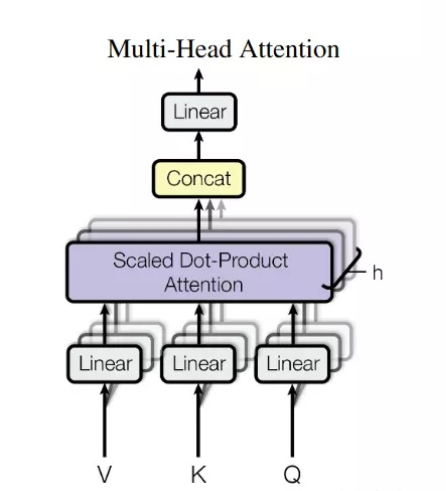

1.8 Multi-head attention

Once scaled dot-product attention is understood, multi-head attention is easy. The paper reports that it works better to project Q, K and V linearly into h parts and run scaled dot-product attention on each part in parallel. The results of all parts are then concatenated and passed through another linear projection to produce the final output. This is multi-head attention. The hyperparameter h is the number of heads, and the paper uses h = 8.
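In the paper's notation, multi-head attention is written as follows. W_i^Q, W_i^K, W_i^V and W^O are learned projection matrices, and the base model uses h = 8 and d_k = d_v = d_model / h = 64:

$$\mathrm{MultiHead}(Q,K,V) = \mathrm{Concat}(\mathrm{head}_1,\ldots,\mathrm{head}_h)\,W^O,\qquad \mathrm{head}_i = \mathrm{Attention}(QW_i^Q,\ KW_i^K,\ VW_i^V)$$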

The structure of multi head attention is shown below.

1.9 Implementation of multi-head attention

```python
class MultiHeadAttention(nn.Module):

    def __init__(self, model_dim=512, num_heads=8, dropout=0.0):
        super(MultiHeadAttention, self).__init__()
        self.dim_per_head = model_dim // num_heads
        self.num_heads = num_heads
        self.linear_k = nn.Linear(model_dim, self.dim_per_head * num_heads)
        self.linear_v = nn.Linear(model_dim, self.dim_per_head * num_heads)
        self.linear_q = nn.Linear(model_dim, self.dim_per_head * num_heads)
        self.dot_product_attention = ScaledDotProductAttention(dropout)
        self.linear_final = nn.Linear(model_dim, model_dim)
        self.dropout = nn.Dropout(dropout)
        # Layer norm is applied after multi-head attention
        self.layer_norm = nn.LayerNorm(model_dim)

    def _split_heads(self, x):
        # [B, L, H * d] -> [B, L, H, d] -> [B, H, L, d] -> [B * H, L, d]
        batch_size, seq_len, _ = x.size()
        x = x.view(batch_size, seq_len, self.num_heads, self.dim_per_head)
        return x.transpose(1, 2).reshape(batch_size * self.num_heads, seq_len, self.dim_per_head)

    def forward(self, key, value, query, attn_mask=None):
        # Keep the query for the residual connection
        residual = query
        dim_per_head = self.dim_per_head
        num_heads = self.num_heads
        batch_size = key.size(0)

        # Linear projection
        key = self.linear_k(key)
        value = self.linear_v(value)
        query = self.linear_q(query)

        # Split by heads. Viewing [B, L, D] directly as [B * H, L, d] would mix
        # different positions into one head, so transpose first.
        key = self._split_heads(key)
        value = self._split_heads(value)
        query = self._split_heads(query)

        if attn_mask is not None:
            # After the split, batch element b occupies rows b * H .. b * H + H - 1
            attn_mask = attn_mask.repeat_interleave(num_heads, dim=0)

        # Scaled dot-product attention with scale = 1 / sqrt(d_k)
        scale = key.size(-1) ** -0.5
        context, attention = self.dot_product_attention(
            query, key, value, scale, attn_mask)

        # Concat heads: [B * H, L_q, d] -> [B, H, L_q, d] -> [B, L_q, H * d]
        context = context.view(batch_size, num_heads, -1, dim_per_head)
        context = context.transpose(1, 2).reshape(batch_size, -1, dim_per_head * num_heads)

        # Final linear projection
        output = self.linear_final(context)
        # Dropout
        output = self.dropout(output)
        # Add residual and apply layer norm
        output = self.layer_norm(residual + output)
        return output, attention
```
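The head split is easy to get wrong. Reshaping a [B, L, H·d] tensor straight into [B·H, L, d] mixes different positions into one head. The NumPy sketch below uses small hypothetical sizes to contrast that naive reshape with reshape-then-transpose. With the correct version, head h of batch element b holds exactly columns h·d to (h+1)·d of every position of b.

```python
import numpy as np

B, L, H, d = 2, 3, 4, 5                              # batch, seq_len, heads, dim_per_head
x = np.arange(B * L * H * d).reshape(B, L, H * d)    # projected tensor [B, L, H*d]

# Correct split: [B, L, H*d] -> [B, L, H, d] -> [B, H, L, d] -> [B*H, L, d]
heads = x.reshape(B, L, H, d).transpose(0, 2, 1, 3).reshape(B * H, L, d)

# Naive split that reshapes [B, L, H*d] straight to [B*H, L, d]
naive = x.reshape(B * H, L, d)

# Head h of batch element b should hold columns h*d:(h+1)*d of every position of b
b, h = 1, 2
expected = x[b, :, h * d:(h + 1) * d]
print(np.array_equal(heads[b * H + h], expected), np.array_equal(naive[b * H + h], expected))

# Merging the heads back exactly inverts the split
merged = heads.reshape(B, H, L, d).transpose(0, 2, 1, 3).reshape(B, L, H * d)
```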

The code above also uses a residual connection. I covered residual connections in a previous article, so I won't repeat that here and will only explain layer normalization.

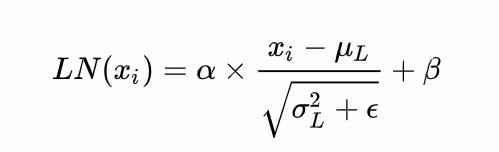

1.10 Layer normalization

There are many kinds of normalization, but they share a common purpose: transforming the input into data with mean 0 and variance 1. We normalize data before it enters an activation function because we don't want the inputs to fall into the saturation region of that function.

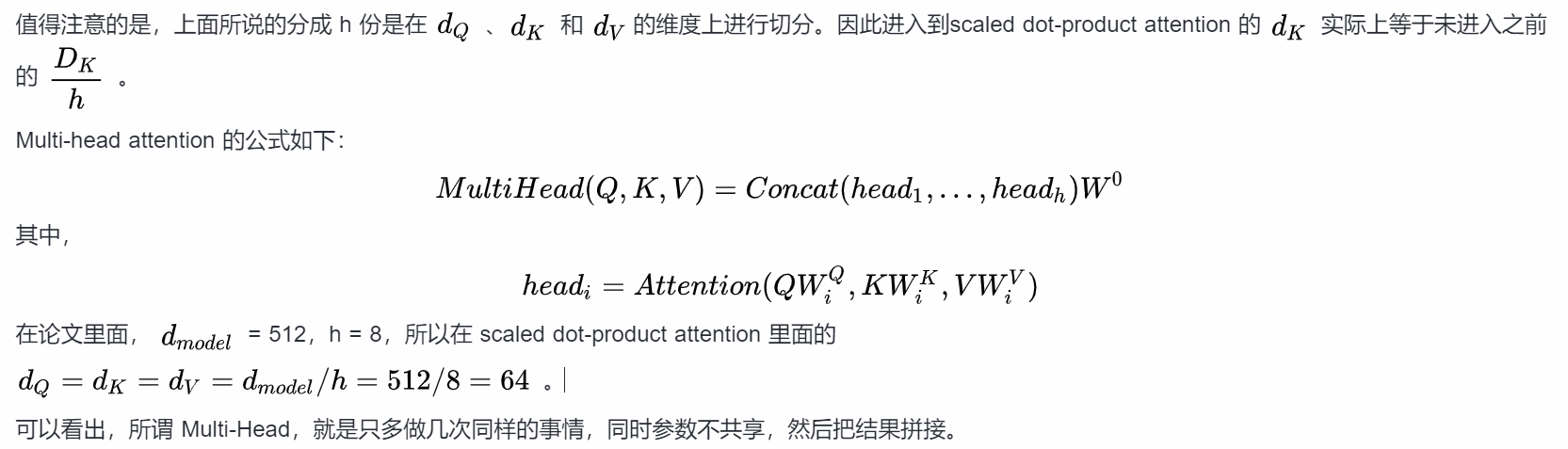

When it comes to normalization, batch normalization (BN) must be mentioned.

The main idea of BN is to normalize each mini-batch of data at every layer. We may normalize the input data, but after it passes through a network layer it is no longer normalized. As this repeats layer after layer, the deviation grows larger and larger. Backpropagation has to account for these large deviations, which forces us to use a small learning rate to avoid vanishing or exploding gradients.

Concretely, BN normalizes each mini-batch along the batch dimension, as shown in the figure below:

As can be seen, the mean in the right half is computed along the batch dimension N.

The batch normalization formula is as follows:

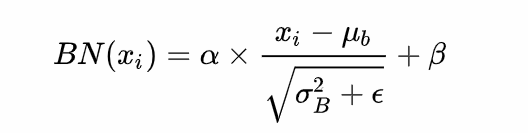

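So what is layer normalization (LN)? It is another way of normalizing data, but LN computes the mean and variance within each individual sample. BN, by contrast, computes them along the batch dimension.

The following is the schematic diagram of LN:

Comparing it with the BN diagram above shows the difference between the two.

The LN formula is as follows, for a vector x with H features (in the Transformer, the model_dim features of one position):

$$\mu = \frac{1}{H}\sum_{i=1}^{H} x_i,\qquad \sigma^2 = \frac{1}{H}\sum_{i=1}^{H}(x_i-\mu)^2,\qquad \mathrm{LN}(x) = \gamma \odot \frac{x-\mu}{\sqrt{\sigma^2+\epsilon}} + \beta$$

Here γ and β are a learnable gain and bias, and ε is a small constant for numerical stability.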
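The difference in normalization axes can be sketched in NumPy on a hypothetical [N, C] batch of N samples and C features. The learnable γ and β are left out. BN averages down each feature column, while LN averages across each sample row. In the Transformer, the "sample row" is the model_dim vector at each position.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(8, 16))   # [batch N, features C]
eps = 1e-5

# Batch norm: statistics per feature, computed across the batch (axis 0)
bn = (x - x.mean(axis=0, keepdims=True)) / np.sqrt(x.var(axis=0, keepdims=True) + eps)

# Layer norm: statistics per sample, computed across the features (axis 1)
ln = (x - x.mean(axis=1, keepdims=True)) / np.sqrt(x.var(axis=1, keepdims=True) + eps)

print(bn.mean(axis=0).round(6))   # close to 0 for every feature column
print(ln.mean(axis=1).round(6))   # close to 0 for every sample row
```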

1.11 Mask

A mask hides certain values so that they have no effect on the computation, and therefore none on parameter updates. The Transformer uses two kinds of masks: the padding mask and the sequence mask.

The padding mask is used in every scaled dot-product attention, while the sequence mask is used only in the decoder's self-attention.

Padding Mask

What is a padding mask? The sequences in a batch have different lengths, so they must be aligned: shorter sequences are padded with 0 at the end. These padded positions carry no meaning, so the attention mechanism should not attend to them, and they need special handling.

Concretely, the attention scores at these positions are set to a very large negative number (negative infinity). After softmax, the weights at these positions become 0.

The padding mask is a Boolean tensor. Positions where the value is True are the ones that need to be masked.

Implementation:

```python
def padding_mask(seq_k, seq_q):
    # seq_k has shape [B, L_k] and seq_q has shape [B, L_q]
    len_q = seq_q.size(1)
    # `PAD` is 0; True marks padded key positions
    pad_mask = seq_k.eq(0)
    pad_mask = pad_mask.unsqueeze(1).expand(-1, len_q, -1)  # shape [B, L_q, L_k]
    return pad_mask
```
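The following NumPy sketch builds the same [B, L_q, L_k] padding mask from hypothetical token ids and random scores. It shows that filling masked scores with negative infinity before softmax gives the padded keys exactly zero weight. It assumes every query row has at least one non-padded key; a fully masked row would produce NaN.

```python
import numpy as np

PAD = 0
seq = np.array([[5, 7, 9, PAD, PAD],
                [3, 4, 6, 8, 2]])            # [B, L]: the first sequence is padded

# True where the key position is padding, broadcast over query positions: [B, L_q, L_k]
pad_mask = np.broadcast_to((seq == PAD)[:, None, :], (2, 5, 5))

rng = np.random.default_rng(0)
scores = rng.normal(size=(2, 5, 5))          # hypothetical attention scores
scores = np.where(pad_mask, -np.inf, scores) # masked positions get -inf

# Row-wise softmax; exp(-inf) = 0, so padded keys receive zero weight
scores = scores - scores.max(axis=-1, keepdims=True)
weights = np.exp(scores)
weights = weights / weights.sum(axis=-1, keepdims=True)
print(weights[0].round(3))
```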

Sequence mask

As mentioned earlier, the sequence mask prevents the decoder from seeing future information. At time step t, the decoder output should depend only on positions up to t, not on positions after t. We therefore need to hide the information after t.

How is this done? It is simple: generate an upper-triangular matrix whose entries above the diagonal are 1 and whose diagonal and lower-triangular entries are 0. Applying this matrix to each sequence achieves the goal.

The code is as follows:

```python
def sequence_mask(seq):
    batch_size, seq_len = seq.size()
    # True above the diagonal: position t may not attend to positions after t
    mask = torch.triu(torch.ones((seq_len, seq_len), dtype=torch.bool, device=seq.device),
                      diagonal=1)
    mask = mask.unsqueeze(0).expand(batch_size, -1, -1)  # [B, L, L]
    return mask
```

The masks are used as follows:

- For the decoder's self-attention, the scaled dot-product attention needs both the padding mask and the sequence mask as attn_mask. The two masks are combined by adding them and treating any nonzero entry as masked.

- In all other cases, attn_mask is just the padding mask.

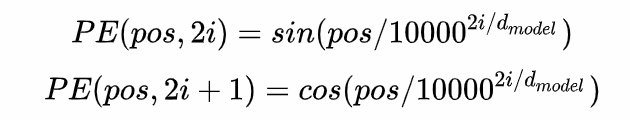
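Combining the two masks for the decoder's self-attention can be sketched in NumPy as follows. The example uses a single hypothetical target sequence whose last token is padding. Taking the logical OR is equivalent to adding the 0/1 masks and testing for values greater than 0.

```python
import numpy as np

PAD = 0
tgt = np.array([[4, 9, 2, PAD]])            # [B=1, L=4]: the last position is padding
B, L = tgt.shape

# Sequence (look-ahead) mask: True above the diagonal, False on and below it
seq_mask = np.triu(np.ones((L, L), dtype=bool), k=1)[None].repeat(B, axis=0)  # [B, L, L]

# Padding mask on the key axis: [B, L_q, L_k]
pad_mask = np.broadcast_to((tgt == PAD)[:, None, :], (B, L, L))

# A position is hidden if either mask hides it
self_attn_mask = seq_mask | pad_mask
print(self_attn_mask[0].astype(int))
```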

1.12 Positional Embedding

The architecture described so far cannot capture the order of the sequence, because attention on its own treats its input as an unordered set. Order is crucial information for a sequence. Without it, the model might get every word right and still fail to form a meaningful sentence.

To solve this problem, the paper uses positional encoding, which encodes the position of each word in the sequence.

The encoding uses sine and cosine functions:

$$PE_{(pos,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{model}}}\right),\qquad PE_{(pos,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{model}}}\right)$$

Here pos is the position of the word in the sequence, i indexes the dimension, and d_model is the model dimension. Sine is used for the even dimensions and cosine for the odd dimensions.

The encoding above is an absolute positional encoding, but the relative positions of words also matter a great deal. This is why the paper uses trigonometric functions.

Sinusoids can express relative position information. The mathematical basis is the following two identities:

$$\sin(\alpha+\beta) = \sin\alpha\cos\beta + \cos\alpha\sin\beta$$

$$\cos(\alpha+\beta) = \cos\alpha\cos\beta - \sin\alpha\sin\beta$$

With these identities, for any fixed offset k, PE(pos+k) can be written as a linear function of PE(pos).
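The consequence of these identities can be checked numerically. The NumPy sketch below builds the sinusoidal table from the formula above. For one offset k, it also builds a block-diagonal matrix of 2×2 rotations that depends only on k. It then verifies that multiplying PE(pos) by this matrix gives PE(pos+k) for every pos. The function and variable names are illustrative.

```python
import numpy as np

def positional_encoding(max_len, d_model):
    # PE[pos, 2i] = sin(pos / 10000^(2i/d_model)), PE[pos, 2i+1] = cos(same angle)
    pos = np.arange(max_len)[:, None]
    i = np.arange(d_model // 2)[None, :]
    angles = pos / np.power(10000.0, 2 * i / d_model)
    pe = np.zeros((max_len, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe

d_model, k = 16, 5
pe = positional_encoding(50, d_model)
omega = 1.0 / np.power(10000.0, 2 * np.arange(d_model // 2) / d_model)

# Block-diagonal matrix M_k from the two identities; it depends only on the offset k
M = np.zeros((d_model, d_model))
for j, w in enumerate(omega):
    c, s = np.cos(w * k), np.sin(w * k)
    M[2 * j:2 * j + 2, 2 * j:2 * j + 2] = [[c, s], [-s, c]]

# PE(pos + k) == M_k @ PE(pos) for every pos
print(np.allclose(pe[k:], pe[:-k] @ M.T))
```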

The implementation is as follows:

```python
class PositionalEncoding(nn.Module):

    def __init__(self, d_model, max_seq_len):
        """Initialization.

        Args:
            d_model: A scalar. The model dimension, 512 by default
            max_seq_len: A scalar. Maximum length of a text sequence
        """
        super(PositionalEncoding, self).__init__()

        # Build the PE matrix according to the formula given in the paper
        position_encoding = np.array([
            [pos / np.power(10000, 2.0 * (j // 2) / d_model) for j in range(d_model)]
            for pos in range(max_seq_len)])
        # Even columns use sin and odd columns use cos
        position_encoding[:, 0::2] = np.sin(position_encoding[:, 0::2])
        position_encoding[:, 1::2] = np.cos(position_encoding[:, 1::2])
        position_encoding = torch.from_numpy(position_encoding).float()

        # Prepend an all-zero row to serve as the positional encoding of 'PAD'.
        # This is similar to adding 'UNK' to a word-embedding vocabulary for unknown words.
        # Why is the extra PAD encoding needed? Sequences differ in length, so short ones
        # are padded with 0 at the end, and those padded positions also need an encoding:
        # the one for 'PAD'.
        pad_row = torch.zeros([1, d_model])
        position_encoding = torch.cat((pad_row, position_encoding))

        # +1 accounts for the extra 'PAD' row, just as adding 'UNK' to a
        # vocabulary needs +1 in a word embedding
        self.position_encoding = nn.Embedding(max_seq_len + 1, d_model)
        self.position_encoding.weight = nn.Parameter(position_encoding,
                                                     requires_grad=False)

    def forward(self, input_len):
        """Forward propagation.

        Args:
            input_len: An integer tensor of shape [BATCH_SIZE, 1]; each value is the
                length of the corresponding sequence in this batch.

        Returns:
            The position encodings of this batch, aligned to the longest sequence.
        """
        lengths = input_len.view(-1).tolist()
        # Find the maximum length in this batch
        max_len = max(lengths)
        # Positions start at 1 so that index 0 stays reserved for PAD;
        # each sequence is padded with 0 after its last real position
        input_pos = torch.tensor(
            [list(range(1, n + 1)) + [0] * (max_len - n) for n in lengths],
            dtype=torch.long, device=input_len.device)
        return self.position_encoding(input_pos)
```

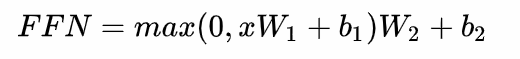

1.13 Position-wise Feed-Forward network

This is a fully connected network consisting of two linear transformations with a ReLU nonlinearity in between:

$$\mathrm{FFN}(x) = \max(0,\, xW_1 + b_1)W_2 + b_2$$

The same transformation is applied at every position, but different layers use different parameters.

Here the two linear transformations are implemented as two one-dimensional convolutions with kernel size 1.

The implementation is as follows:

```python
class PositionalWiseFeedForward(nn.Module):

    def __init__(self, model_dim=512, ffn_dim=2048, dropout=0.0):
        super(PositionalWiseFeedForward, self).__init__()
        # Kernel-size-1 convolutions act as the two per-position linear layers W1 and W2
        self.w1 = nn.Conv1d(model_dim, ffn_dim, 1)
        self.w2 = nn.Conv1d(ffn_dim, model_dim, 1)
        self.dropout = nn.Dropout(dropout)
        self.layer_norm = nn.LayerNorm(model_dim)

    def forward(self, x):
        # Conv1d expects [B, channels, L], so move model_dim to the channel axis
        output = x.transpose(1, 2)
        output = self.w2(F.relu(self.w1(output)))
        output = self.dropout(output.transpose(1, 2))
        # Add residual and apply layer norm
        output = self.layer_norm(x + output)
        return output
```
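"Position-wise" means the same two-layer network is applied to each position independently, which is also why a Conv1d with kernel size 1 can implement it. Below is a minimal NumPy sketch of the FFN formula with hypothetical random weights. It checks two consequences: permuting positions commutes with the FFN, and each output row depends only on its own input row.

```python
import numpy as np

rng = np.random.default_rng(0)
L, d_model, d_ff = 6, 8, 32
W1, b1 = rng.normal(size=(d_model, d_ff)), rng.normal(size=d_ff)
W2, b2 = rng.normal(size=(d_ff, d_model)), rng.normal(size=d_model)

def ffn(x):
    # FFN(x) = max(0, x W1 + b1) W2 + b2, applied to every position (row) independently
    return np.maximum(0, x @ W1 + b1) @ W2 + b2

x = rng.normal(size=(L, d_model))           # one sequence: [seq_len, model_dim]
perm = rng.permutation(L)

# Shuffling positions before or after the FFN gives the same result, and each row
# equals the FFN applied to that single position on its own
print(np.allclose(ffn(x)[perm], ffn(x[perm])),
      np.allclose(ffn(x)[2], ffn(x[2:3])[0]))
```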

2. Implementation of the Transformer

Now we can put the Transformer model together. The encoder and the decoder each have six layers. We start with the encoder.

2.1 Encoder side

```python
class EncoderLayer(nn.Module):
    """A single encoder layer."""

    def __init__(self, model_dim=512, num_heads=8, ffn_dim=2048, dropout=0.0):
        super(EncoderLayer, self).__init__()
        self.attention = MultiHeadAttention(model_dim, num_heads, dropout)
        self.feed_forward = PositionalWiseFeedForward(model_dim, ffn_dim, dropout)

    def forward(self, inputs, attn_mask=None):
        # Self-attention: key, value and query are all the layer input
        context, attention = self.attention(inputs, inputs, inputs, attn_mask)
        # Feed-forward network
        output = self.feed_forward(context)
        return output, attention


class Encoder(nn.Module):
    """An encoder built from a stack of EncoderLayers."""

    def __init__(self,
                 vocab_size,
                 max_seq_len,
                 num_layers=6,
                 model_dim=512,
                 num_heads=8,
                 ffn_dim=2048,
                 dropout=0.0):
        super(Encoder, self).__init__()
        self.encoder_layers = nn.ModuleList(
            [EncoderLayer(model_dim, num_heads, ffn_dim, dropout) for _ in
             range(num_layers)])
        self.seq_embedding = nn.Embedding(vocab_size + 1, model_dim, padding_idx=0)
        self.pos_embedding = PositionalEncoding(model_dim, max_seq_len)

    def forward(self, inputs, inputs_len):
        # inputs: [B, L] token ids, padded with 0 to the longest sequence in the batch
        output = self.seq_embedding(inputs)
        output = output + self.pos_embedding(inputs_len)

        self_attention_mask = padding_mask(inputs, inputs)

        attentions = []
        for encoder in self.encoder_layers:
            output, attention = encoder(output, self_attention_mask)
            attentions.append(attention)
        return output, attentions
```

2.2 Decoder side

```python
class DecoderLayer(nn.Module):

    def __init__(self, model_dim, num_heads=8, ffn_dim=2048, dropout=0.0):
        super(DecoderLayer, self).__init__()
        # Separate modules (and weights) for self-attention and context attention
        self.self_attention = MultiHeadAttention(model_dim, num_heads, dropout)
        self.context_attention = MultiHeadAttention(model_dim, num_heads, dropout)
        self.feed_forward = PositionalWiseFeedForward(model_dim, ffn_dim, dropout)

    def forward(self,
                dec_inputs,
                enc_outputs,
                self_attn_mask=None,
                context_attn_mask=None):
        # Self-attention: key, value and query are all decoder inputs
        dec_output, self_attention = self.self_attention(
            dec_inputs, dec_inputs, dec_inputs, self_attn_mask)

        # Context attention: query is the decoder output, key and value are the encoder outputs
        dec_output, context_attention = self.context_attention(
            enc_outputs, enc_outputs, dec_output, context_attn_mask)

        # The position-wise feed-forward network gives the decoder layer output
        dec_output = self.feed_forward(dec_output)
        return dec_output, self_attention, context_attention


class Decoder(nn.Module):
    """A decoder built from a stack of DecoderLayers."""

    def __init__(self,
                 vocab_size,
                 max_seq_len,
                 num_layers=6,
                 model_dim=512,
                 num_heads=8,
                 ffn_dim=2048,
                 dropout=0.0):
        super(Decoder, self).__init__()
        self.num_layers = num_layers
        self.decoder_layers = nn.ModuleList(
            [DecoderLayer(model_dim, num_heads, ffn_dim, dropout) for _ in
             range(num_layers)])
        self.seq_embedding = nn.Embedding(vocab_size + 1, model_dim, padding_idx=0)
        self.pos_embedding = PositionalEncoding(model_dim, max_seq_len)

    def forward(self, inputs, inputs_len, enc_output, context_attn_mask=None):
        output = self.seq_embedding(inputs)
        output = output + self.pos_embedding(inputs_len)

        self_attention_padding_mask = padding_mask(inputs, inputs)
        seq_mask = sequence_mask(inputs)
        # Mask a position if either mask marks it: add the masks, then test > 0
        self_attn_mask = (self_attention_padding_mask.int() + seq_mask.int()) > 0

        self_attentions = []
        context_attentions = []
        for decoder in self.decoder_layers:
            output, self_attn, context_attn = decoder(
                output, enc_output, self_attn_mask, context_attn_mask)
            self_attentions.append(self_attn)
            context_attentions.append(context_attn)

        return output, self_attentions, context_attentions
```

Putting it all together:

2.3 Transformer model

```python
class Transformer(nn.Module):

    def __init__(self,
                 src_vocab_size,
                 src_max_len,
                 tgt_vocab_size,
                 tgt_max_len,
                 num_layers=6,
                 model_dim=512,
                 num_heads=8,
                 ffn_dim=2048,
                 dropout=0.2):
        super(Transformer, self).__init__()
        self.encoder = Encoder(src_vocab_size, src_max_len, num_layers, model_dim,
                               num_heads, ffn_dim, dropout)
        self.decoder = Decoder(tgt_vocab_size, tgt_max_len, num_layers, model_dim,
                               num_heads, ffn_dim, dropout)
        self.linear = nn.Linear(model_dim, tgt_vocab_size, bias=False)
        self.softmax = nn.Softmax(dim=2)

    def forward(self, src_seq, src_len, tgt_seq, tgt_len):
        # Context attention: queries come from the target, keys from the source,
        # so padding_mask(seq_k=src_seq, seq_q=tgt_seq) -> [B, L_tgt, L_src]
        context_attn_mask = padding_mask(src_seq, tgt_seq)

        output, enc_self_attn = self.encoder(src_seq, src_len)

        output, dec_self_attn, ctx_attn = self.decoder(
            tgt_seq, tgt_len, output, context_attn_mask)

        # Project to the target vocabulary and normalize to probabilities
        output = self.linear(output)
        output = self.softmax(output)
        return output, enc_self_attn, dec_self_attn, ctx_attn
```
