Preface

We all use Android networking libraries, but do we really understand Volley? How is it implemented internally, and why can a few lines of code build a network request? It is not enough to know that it works; we should also know why. This article therefore walks through the Volley source code and analyzes its internal flow in detail.

Part 1. Starting with RequestQueue

(1) Do you remember the first step in building the request? Create a new request queue, for example:

RequestQueue queue = Volley.newRequestQueue(context);

Although this looks like a single line of code, a lot of preparation happens behind it. Tracing the source code leads us to the Volley#newRequestQueue() method:

/**

* Creates a default instance of the worker pool and calls {@link RequestQueue#start()} on it.

* You may set a maximum size of the disk cache in bytes.

*

* @param context A {@link Context} to use for creating the cache dir.

* @param stack An {@link HttpStack} to use for the network, or null for default.

* @param maxDiskCacheBytes the maximum size of the disk cache, in bytes. Use -1 for default size.

* @return A started {@link RequestQueue} instance.

*/

public static RequestQueue newRequestQueue(Context context, HttpStack stack, int maxDiskCacheBytes) {

File cacheDir = new File(context.getCacheDir(), DEFAULT_CACHE_DIR);

String userAgent = "volley/0";

try {

String packageName = context.getPackageName();

PackageInfo info = context.getPackageManager().getPackageInfo(packageName, 0);

userAgent = packageName + "/" + info.versionCode;

} catch (NameNotFoundException e) {

}

/**

* Choose the HTTP stack according to the system API level. If the API level is below 9, use HttpClient.

* If the API level is 9 or above, use HttpURLConnection.

*/

if (stack == null) {

if (Build.VERSION.SDK_INT >= 9) {

stack = new HurlStack();

} else {

// Prior to Gingerbread, HttpUrlConnection was unreliable.

// See: http://android-developers.blogspot.com/2011/09/androids-http-clients.html

stack = new HttpClientStack(AndroidHttpClient.newInstance(userAgent));

}

}

//Pass the chosen stack into BasicNetwork to create the Network instance

Network network = new BasicNetwork(stack);

RequestQueue queue;

if (maxDiskCacheBytes <= -1){

// No maximum size specified

//Instantiate RequestQueue class

queue = new RequestQueue(new DiskBasedCache(cacheDir), network);

}

else {

// Disk cache size specified

queue = new RequestQueue(new DiskBasedCache(cacheDir, maxDiskCacheBytes), network);

}

//Call the start() method of RequestQueue

queue.start();

return queue;

}

First, look at the parameters: there are three. In practice we usually call the overload that takes only a Context, which ends up calling this three-parameter method. context is the application context. stack is the HTTP stack used to perform requests; it is usually left null, in which case a stack is chosen according to the API level of the current system (HurlStack, based on HttpURLConnection, for API level 9 and above; HttpClientStack, based on HttpClient, below that). The last parameter is the maximum size of the disk cache. Inside the method, we first create a File for the cache directory, then choose the stack as just described. Next a BasicNetwork is instantiated from the stack; it is described below. Then comes the place where the request queue is actually created: new RequestQueue(), which receives two arguments, the cache and the network (BasicNetwork). After the RequestQueue is instantiated, its start() method is called and the queue is returned.
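The delegation and the stack selection described above can be sketched in plain Java. This is a minimal sketch, not Volley's code: QueueFactorySketch, QueueConfig, newQueue and the string stack names are hypothetical stand-ins, and the API level is passed in as an int so the example runs without Android.

```java
/** Simplified stand-in for the factory; none of these types are Volley's real classes. */
final class QueueFactorySketch {
    static final int DEFAULT_CACHE_BYTES = -1; // -1 means "use the default cache size"

    /** Hypothetical record of what the factory decided. */
    static final class QueueConfig {
        final String stackName;
        final int maxDiskCacheBytes;

        QueueConfig(String stackName, int maxDiskCacheBytes) {
            this.stackName = stackName;
            this.maxDiskCacheBytes = maxDiskCacheBytes;
        }
    }

    /** Convenience overload: no stack chosen, default cache size. */
    static QueueConfig newQueue(int sdkInt) {
        return newQueue(sdkInt, null, DEFAULT_CACHE_BYTES);
    }

    /** Full overload: fills in the stack only when the caller passed null. */
    static QueueConfig newQueue(int sdkInt, String stackName, int maxDiskCacheBytes) {
        if (stackName == null) {
            // Same threshold as the article's excerpt: API level 9 and above use HurlStack.
            stackName = (sdkInt >= 9) ? "HurlStack" : "HttpClientStack";
        }
        return new QueueConfig(stackName, maxDiskCacheBytes);
    }

    public static void main(String[] args) {
        System.out.println(newQueue(23).stackName);
        System.out.println(newQueue(8).stackName);
        System.out.println(newQueue(23, "CustomStack", 1024).stackName);
    }
}
```

The point of the pattern is that callers who do not care about the stack or the cache size get sensible defaults, while the single full overload holds all of the real logic.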

(2) Let's follow RequestQueue to see what its constructor does:

/**

* Creates the worker pool. Processing will not begin until {@link #start()} is called.

*

* @param cache A Cache to use for persisting responses to disk

* @param network A Network interface for performing HTTP requests

* @param threadPoolSize Number of network dispatcher threads to create

* @param delivery A ResponseDelivery interface for posting responses and errors

*/

public RequestQueue(Cache cache, Network network, int threadPoolSize,

ResponseDelivery delivery) {

mCache = cache;

mNetwork = network;

//Create the array of network dispatchers; its size defaults to 4

mDispatchers = new NetworkDispatcher[threadPoolSize];

mDelivery = delivery;

}

public RequestQueue(Cache cache, Network network, int threadPoolSize) {

this(cache, network, threadPoolSize,

//ResponseDelivery is an interface and the implementation class is ExecutorDelivery.

new ExecutorDelivery(new Handler(Looper.getMainLooper())));

}

public RequestQueue(Cache cache, Network network) {

this(cache, network, DEFAULT_NETWORK_THREAD_POOL_SIZE);

}

As you can see, the cache and network passed in are forwarded through the overloads to the four-parameter constructor. There, several member variables of RequestQueue are initialized: mCache (the disk cache), mNetwork (the BasicNetwork instance), mDispatchers (the array of network dispatcher threads), and mDelivery (the interface that delivers request results). The comments above explain each one.

(3) After the RequestQueue is constructed, we saw in (1) that its start() method is called. Let's look at this method, RequestQueue#start():

public void start() {

stop(); // Make sure any currently running dispatchers are stopped.

// Create the cache dispatcher and start it.

mCacheDispatcher = new CacheDispatcher(mCacheQueue, mNetworkQueue, mCache, mDelivery);

mCacheDispatcher.start();

// Create network dispatchers (and corresponding threads) up to the pool size.

for (int i = 0; i < mDispatchers.length; i++) {

NetworkDispatcher networkDispatcher = new NetworkDispatcher(mNetworkQueue, mNetwork,

mCache, mDelivery);

mDispatchers[i] = networkDispatcher;

networkDispatcher.start();

}

}

First, a CacheDispatcher is instantiated. CacheDispatcher extends Thread, so calling its start() method starts a new cache thread. Next comes a for loop that starts one network dispatcher thread per slot in the mDispatchers array, four by default.

At this point Volley.newRequestQueue() has finished; that is, the first step of a network request, creating the request queue, is complete.

To sum up, creating the request queue means: creating the disk cache (default), instantiating BasicNetwork, instantiating the ExecutorDelivery that delivers responses to the main thread, and creating and starting one cache thread plus four network threads (default).
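The "threads waiting on a queue" idea behind start() can be tried out in plain Java. The following is a minimal sketch under stated assumptions: DispatcherPoolSketch, Dispatcher and runTasks are hypothetical names, the work items are plain Runnables rather than Volley requests, and there is no cache thread, only a pool of workers blocking on one queue.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

final class DispatcherPoolSketch {
    /** A worker that blocks on take() until work arrives, like a dispatcher thread. */
    static final class Dispatcher extends Thread {
        private final BlockingQueue<Runnable> queue;
        private volatile boolean quit = false;

        Dispatcher(BlockingQueue<Runnable> queue) {
            this.queue = queue;
        }

        /** Asks the thread to stop; the interrupt wakes it if it is blocked in take(). */
        void quit() {
            quit = true;
            interrupt();
        }

        @Override
        public void run() {
            while (true) {
                try {
                    queue.take().run();
                } catch (InterruptedException e) {
                    if (quit) {
                        return; // leave the loop only when a stop was requested
                    }
                }
            }
        }
    }

    /** Starts poolSize dispatchers, feeds them taskCount tasks, returns how many completed. */
    static int runTasks(int poolSize, int taskCount) {
        BlockingQueue<Runnable> queue = new LinkedBlockingQueue<>();
        Dispatcher[] dispatchers = new Dispatcher[poolSize];
        for (int i = 0; i < dispatchers.length; i++) {
            dispatchers[i] = new Dispatcher(queue);
            dispatchers[i].start();
        }
        AtomicInteger completed = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(taskCount);
        for (int i = 0; i < taskCount; i++) {
            queue.add(() -> {
                completed.incrementAndGet();
                done.countDown();
            });
        }
        try {
            done.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        for (Dispatcher dispatcher : dispatchers) {
            dispatcher.quit();
        }
        return completed.get();
    }

    public static void main(String[] args) {
        System.out.println(runTasks(4, 10));
    }
}
```

Because every worker blocks in take(), idle threads cost almost nothing, and adding a task to the queue is all it takes to wake one of them up.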

Part 2. Implementation Principle of Network Request

After creating the request queue, the next step is to create a request, which can be a StringRequest, a JsonArrayRequest, an ImageRequest, and so on. What is the principle behind these requests? Let's take the simplest one, StringRequest. It extends Request, the parent class of all requests, so a custom network request should also extend Request. Whatever the subclass, the general idea of the implementation is the same, so once we understand StringRequest the other request classes follow. Here is the StringRequest source code:

public class StringRequest extends Request<String> {

private Listener<String> mListener;

public StringRequest(int method, String url, Listener<String> listener,

ErrorListener errorListener) {

super(method, url, errorListener);

mListener = listener;

}

...

@Override

protected void deliverResponse(String response) {

if (mListener != null) {

mListener.onResponse(response);

}

}

@Override

protected Response<String> parseNetworkResponse(NetworkResponse response) {

String parsed;

try {

parsed = new String(response.data, HttpHeaderParser.parseCharset(response.headers));

} catch (UnsupportedEncodingException e) {

parsed = new String(response.data);

}

return Response.success(parsed, HttpHeaderParser.parseCacheHeaders(response));

}

}

The source is not long; our main concern is the deliverResponse() and parseNetworkResponse() methods. Both are overridden. In the parent class Request they are abstract, which means every custom Request must implement them. Here is a brief description of what each does. deliverResponse() calls mListener.onResponse(response), that is, the onResponse() method of the listener we supplied when creating the request, so this is where our success callback is invoked. parseNetworkResponse() processes the raw response, and it differs between request classes: a StringRequest converts the response body to a String, while a JsonObjectRequest converts it to a JSONObject. So for a particular kind of response, we can define a custom Request and override these two methods.

In summary: the Request class mainly holds parameters such as the request method, the URL, and the error listener; each subclass overrides deliverResponse() to invoke the success callback and parseNetworkResponse() to process the response data. With that, a complete Request has been built.
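To see the two-method contract in isolation, here is a minimal plain-Java sketch. It is not Volley's Request class: RequestSketch, IntRequest, process and the example.invalid URLs are hypothetical, the raw response is reduced to a byte array, and parsing and delivery run on the same thread for simplicity.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

final class RequestSketch {
    interface Listener<T> {
        void onResponse(T result);
    }

    /** Simplified base class: subclasses decide how to parse and how to deliver. */
    abstract static class Request<T> {
        final String url;

        Request(String url) {
            this.url = url;
        }

        /** Turns the raw bytes into a typed result. */
        abstract T parseNetworkResponse(byte[] data);

        /** Hands the typed result to the caller's listener. */
        abstract void deliverResponse(T result);
    }

    static final class StringRequest extends Request<String> {
        private final Listener<String> listener;

        StringRequest(String url, Listener<String> listener) {
            super(url);
            this.listener = listener;
        }

        @Override
        String parseNetworkResponse(byte[] data) {
            return new String(data, StandardCharsets.UTF_8);
        }

        @Override
        void deliverResponse(String result) {
            if (listener != null) {
                listener.onResponse(result);
            }
        }
    }

    /** A hypothetical custom request that parses the body as an integer. */
    static final class IntRequest extends Request<Integer> {
        private final Listener<Integer> listener;

        IntRequest(String url, Listener<Integer> listener) {
            super(url);
            this.listener = listener;
        }

        @Override
        Integer parseNetworkResponse(byte[] data) {
            return Integer.valueOf(new String(data, StandardCharsets.UTF_8).trim());
        }

        @Override
        void deliverResponse(Integer result) {
            if (listener != null) {
                listener.onResponse(result);
            }
        }
    }

    /** Stand-in for dispatcher plus delivery: parse first, then deliver. */
    static <T> void process(Request<T> request, byte[] data) {
        request.deliverResponse(request.parseNetworkResponse(data));
    }

    static List<Object> demo() {
        List<Object> received = new ArrayList<>();
        process(new StringRequest("http://example.invalid/text", received::add),
                "hello".getBytes(StandardCharsets.UTF_8));
        process(new IntRequest("http://example.invalid/number", received::add),
                "42".getBytes(StandardCharsets.UTF_8));
        return received;
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

The code that drives a request (process here) never needs to know the concrete type; it only relies on the two abstract methods, which is why adding a new request type does not touch the rest of the pipeline.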

Part 3. Adding requests

We have now created the request queue and a Request, so the next step is to add the request to the queue. Let's look at the RequestQueue#add() source code:

/**

* Adds a Request to the dispatch queue.

* @param request The request to service

* @return The passed-in request

*/

public <T> Request<T> add(Request<T> request) {

// Tag the request as belonging to this queue and add it to the set of current requests.

//Mark the current request to indicate that the request is processed by the current RequestQueue

request.setRequestQueue(this);

synchronized (mCurrentRequests) {

mCurrentRequests.add(request);

}

// Process requests in the order they are added.

//Get the serial number of the current request

request.setSequence(getSequenceNumber());

request.addMarker("add-to-queue");

// If the request is uncacheable, skip the cache queue and go straight to the network.

//If the request cannot be cached, it is added directly to the network request queue. By default, it can be cached.

if (!request.shouldCache()) {

mNetworkQueue.add(request);

return request;

}

// Insert request into stage if there's already a request with the same cache key in flight.

// Lock the current block of code, only one thread can execute

synchronized (mWaitingRequests) {

String cacheKey = request.getCacheKey();

//Is the same request being processed?

if (mWaitingRequests.containsKey(cacheKey)) {

// There is already a request in flight. Queue up.

//If the same request is being processed, put it in mWaitingRequests and wait.

Queue<Request<?>> stagedRequests = mWaitingRequests.get(cacheKey);

if (stagedRequests == null) {

stagedRequests = new LinkedList<Request<?>>();

}

stagedRequests.add(request);

mWaitingRequests.put(cacheKey, stagedRequests);

if (VolleyLog.DEBUG) {

VolleyLog.v("Request for cacheKey=%s is in flight, putting on hold.", cacheKey);

}

} else {

// Insert 'null' queue for this cacheKey, indicating there is now a request in

// flight.

//No identical request is in flight: record the key in mWaitingRequests and add the request to mCacheQueue

//This means that the request is now handed to the cache thread

mWaitingRequests.put(cacheKey, null);

mCacheQueue.add(request);

}

return request;

}

}

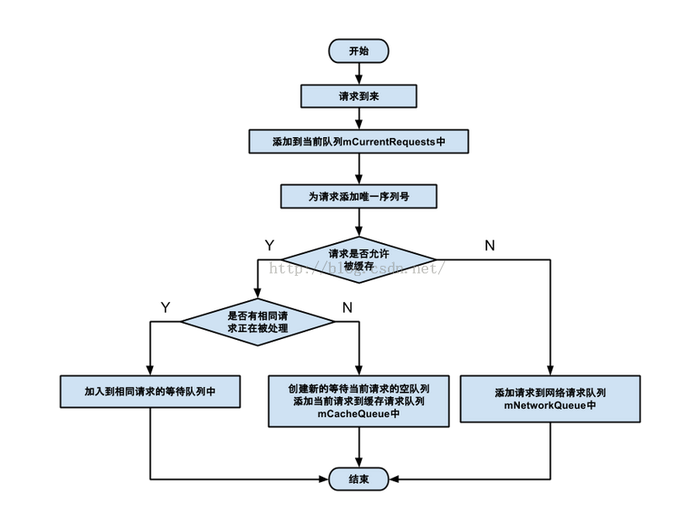

Combined with the corresponding annotations, we draw the following conclusions: in this add method, we mainly judge whether a Request request can be cached (default is cacheable), if not directly added to the network request queue to start network communication; if yes, we further determine whether the same request is currently in progress, and if there are the same requests, let this request be If there is no same request, it will be put into the cache queue directly. If you have any questions about this, you can see the following flow chart (pictures from the network):

RequestQueue#add() method flow chart
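The routing decision in add() can also be exercised on its own. The following is a minimal sketch, assuming requests are represented only by their cache key string; AddFlowSketch and its fields are hypothetical stand-ins for RequestQueue, mNetworkQueue, mCacheQueue and mWaitingRequests.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedList;
import java.util.List;
import java.util.Map;
import java.util.Queue;

final class AddFlowSketch {
    final Queue<String> networkQueue = new ArrayDeque<>();
    final Queue<String> cacheQueue = new ArrayDeque<>();
    // cacheKey -> requests parked behind an in-flight request (null value: none parked yet)
    final Map<String, Queue<String>> waiting = new HashMap<>();

    /** Routes a request and reports where it went: "network", "cache" or "waiting". */
    String add(String cacheKey, boolean shouldCache) {
        if (!shouldCache) {
            networkQueue.add(cacheKey);
            return "network";
        }
        synchronized (waiting) {
            if (waiting.containsKey(cacheKey)) {
                // A request with this key is already in flight: park this one behind it.
                Queue<String> staged = waiting.get(cacheKey);
                if (staged == null) {
                    staged = new LinkedList<>();
                }
                staged.add(cacheKey);
                waiting.put(cacheKey, staged);
                return "waiting";
            }
            // First request for this key: mark it in flight and hand it to the cache queue.
            waiting.put(cacheKey, null);
            cacheQueue.add(cacheKey);
            return "cache";
        }
    }

    static List<String> demo() {
        AddFlowSketch queue = new AddFlowSketch();
        List<String> routes = new ArrayList<>();
        routes.add(queue.add("GET /upload", false));
        routes.add(queue.add("GET /feed", true));
        routes.add(queue.add("GET /feed", true));
        routes.add(queue.add("GET /feed", true));
        return routes;
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

Note the use of a null map value as the "in flight, nobody waiting yet" marker: containsKey() distinguishes it from a key that was never added, which is why the check uses containsKey() rather than get().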

Part 4. Cache threads

At the end of Part 1, the cache thread was instantiated and started. It stays in a waiting state until a request is added to the cache queue, and only then does it really begin to work. Let's look at the cache thread's source code, mainly its run() method, CacheDispatcher#run():

@Override

public void run() {

if (DEBUG) VolleyLog.v("start new dispatcher");

Process.setThreadPriority(Process.THREAD_PRIORITY_BACKGROUND);

// Make a blocking call to initialize the cache.

mCache.initialize();

Request<?> request;

while (true) {

// release previous request object to avoid leaking request object when mQueue is drained.

request = null;

try {

// Take a request from the queue.

//Take a request from the cache queue

request = mCacheQueue.take();

} ...

try {

request.addMarker("cache-queue-take");

// If the request has been canceled, don't bother dispatching it.

if (request.isCanceled()) {

request.finish("cache-discard-canceled");

continue;

}

// Attempt to retrieve this item from cache.

//Look up the result of this request in the disk cache

Cache.Entry entry = mCache.get(request.getCacheKey());

if (entry == null) {

request.addMarker("cache-miss");

// Cache miss; send off to the network dispatcher.

mNetworkQueue.put(request);

continue;

}

// If it is completely expired, just send it to the network.

//Determine whether the cache expires

if (entry.isExpired()) {

request.addMarker("cache-hit-expired");

request.setCacheEntry(entry);

mNetworkQueue.put(request);

continue;

}

// We have a cache hit; parse its data for delivery back to the request.

request.addMarker("cache-hit");

//Wrap the cached result into a NetworkResponse, and then call the Request subclass's

//parseNetworkResponse() method to parse the data

Response<?> response = request.parseNetworkResponse(

new NetworkResponse(entry.data, entry.responseHeaders));

request.addMarker("cache-hit-parsed");

if (!entry.refreshNeeded()) {

// Completely unexpired cache hit. Just deliver the response.

//Call the ExecutorDelivery#postResponse method

mDelivery.postResponse(request, response);

} else {

....

}

} catch (Exception e) {

VolleyLog.e(e, "Unhandled exception %s", e.toString());

}

}

}

In the run() method, we can see that there is a while(true) loop at the beginning, indicating that it has been waiting for new requests from the cache queue. Next, we determine whether the request has a corresponding cached result, if not directly added to the network request queue; then we determine whether the cached result has expired, and if it has expired, it will also be added to the network request queue; next, we will process the cached result, we can see that the cached result is first packaged into the NetworkResponse class, and then invoked. Request's parseNetworkResponse, which we said in part2, requires subclasses to override this method to process response data. Finally, the processed data is posted to the main thread, where the Executor Delivery # postResponse () method is used, which will be analyzed below.

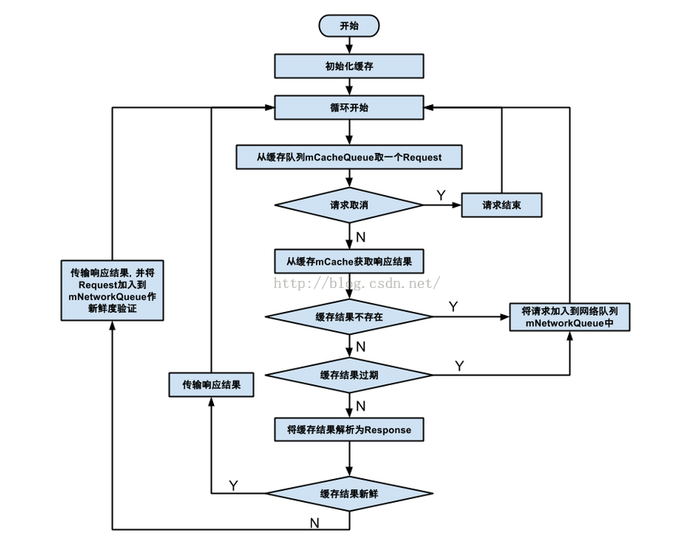

Summary: The Cache Dispatcher thread mainly judges whether the request has been cached, whether it has expired, and puts it into the network request queue as needed. At the same time, the corresponding results were packaged and processed, and then handed over to Executor Delivery. Here is a flow chart showing its complete workflow:

CacheDispatcher Thread Workflow
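The cache decision can be written as a small pure function. This is a minimal sketch under stated assumptions: CacheTriageSketch, Action and triage are hypothetical names, the Entry class with ttl and softTtl timestamps is an assumed shape rather than a copy of Volley's Cache.Entry, and the current time is passed in so the result is deterministic. The excerpt above elides what happens when a refresh is needed, so the sketch only labels that case.

```java
final class CacheTriageSketch {
    /** Minimal stand-in for a cache entry: two absolute timestamps in milliseconds. */
    static final class Entry {
        final long ttl;     // hard expiry: after this the entry must not be used
        final long softTtl; // soft expiry: after this the entry should be refreshed

        Entry(long ttl, long softTtl) {
            this.ttl = ttl;
            this.softTtl = softTtl;
        }

        boolean isExpired(long now) {
            return ttl < now;
        }

        boolean refreshNeeded(long now) {
            return softTtl < now;
        }
    }

    enum Action { GO_TO_NETWORK, DELIVER_CACHED, REFRESH_NEEDED }

    /** Mirrors the order of checks in the run() excerpt: miss, expired, then freshness. */
    static Action triage(Entry entry, long now) {
        if (entry == null) {
            return Action.GO_TO_NETWORK; // cache miss
        }
        if (entry.isExpired(now)) {
            return Action.GO_TO_NETWORK; // cache hit, but fully expired
        }
        if (!entry.refreshNeeded(now)) {
            return Action.DELIVER_CACHED; // completely unexpired cache hit
        }
        return Action.REFRESH_NEEDED; // branch elided in the excerpt
    }

    public static void main(String[] args) {
        long now = 100;
        System.out.println(triage(null, now));
        System.out.println(triage(new Entry(50, 50), now));
        System.out.println(triage(new Entry(200, 150), now));
        System.out.println(triage(new Entry(200, 80), now));
    }
}
```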

Part 5. Network request threads

As described above, a request is added to the network queue when it cannot be cached, when no cached result exists, or when the cached result has expired. At that point the network dispatcher threads, which have been waiting all along, finally get a request and start working. Let's look at the NetworkDispatcher#run() method:

@Override

public void run() {

Process.setThreadPriority(Process.THREAD_PRIORITY_BACKGROUND);

Request<?> request;

while (true) {

long startTimeMs = SystemClock.elapsedRealtime();

// release previous request object to avoid leaking request object when mQueue is drained.

request = null;

try {

// Take a request from the queue.

request = mQueue.take();

} ...

try {

...

// Perform the network request.

//Call BasicNetwork, the Network implementation, to perform the request and obtain the response (marker 1)

NetworkResponse networkResponse = mNetwork.performRequest(request);

...

// Parse the response here on the worker thread.

//Parse the response

Response<?> response = request.parseNetworkResponse(networkResponse);

request.addMarker("network-parse-complete");

...

request.markDelivered();

mDelivery.postResponse(request, response);

// Write to cache if applicable.

//Write the response results into the cache

if (request.shouldCache() && response.cacheEntry != null) {

mCache.put(request.getCacheKey(), response.cacheEntry);

request.addMarker("network-cache-written");

}

} ...

}

}

The source code has been trimmed appropriately; the logic is basically the same as in CacheDispatcher. Here we focus on the line marked 1, where BasicNetwork#performRequest() is called with the request. We can guess that this method performs the actual network request, so let's step in and look at BasicNetwork#performRequest():

@Override

public NetworkResponse performRequest(Request<?> request) throws VolleyError {

long requestStart = SystemClock.elapsedRealtime();

while (true) {

HttpResponse httpResponse = null;

byte[] responseContents = null;

Map<String, String> responseHeaders = Collections.emptyMap();

try {

// Gather headers.

Map<String, String> headers = new HashMap<String, String>();

addCacheHeaders(headers, request.getCacheEntry());

httpResponse = mHttpStack.performRequest(request, headers); // 1

StatusLine statusLine = httpResponse.getStatusLine();

int statusCode = statusLine.getStatusCode();

responseHeaders = convertHeaders(httpResponse.getAllHeaders());

// Handle cache validation.

if (statusCode == HttpStatus.SC_NOT_MODIFIED) {

Entry entry = request.getCacheEntry();

if (entry == null) {

return new NetworkResponse(HttpStatus.SC_NOT_MODIFIED, null,

responseHeaders, true,

SystemClock.elapsedRealtime() - requestStart); // 2

}

// A HTTP 304 response does not have all header fields. We

// have to use the header fields from the cache entry plus

// the new ones from the response.

// http://www.w3.org/Protocols/rfc2616/rfc2616-sec10.html#sec10.3.5

entry.responseHeaders.putAll(responseHeaders);

return new NetworkResponse(HttpStatus.SC_NOT_MODIFIED, entry.data,

entry.responseHeaders, true,

SystemClock.elapsedRealtime() - requestStart);

}

...

}

}

We mainly look at the line marked 1, mHttpStack.performRequest(). So what is mHttpStack? Scrolling back up, it is the stack passed in when BasicNetwork was instantiated in Part 1, that is, the HttpStack chosen according to the system API level (HurlStack for API level 9 and above, HttpClientStack below 9). So on API level 9 and above it is HurlStack.performRequest() that is actually called here; its body is mostly HttpURLConnection logic, which I will not expand on. In short, HurlStack wraps HttpURLConnection, while HttpClientStack wraps HttpClient. The method returns an HttpResponse, which is then handled, wrapped in a NetworkResponse object (as at the line marked 2), and returned. After NetworkDispatcher#run() obtains the NetworkResponse, it parses the response and delivers the parsed data through ExecutorDelivery#postResponse(), just as CacheDispatcher does.
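The header handling in the 304 branch is worth a closer look: a 304 response carries only some headers, so the cached headers are kept and the fresh ones are laid on top. Here is a minimal sketch of that merge; NotModifiedSketch, mergeHeaders and the sample header values are hypothetical, and unlike the excerpt, which calls putAll() directly on entry.responseHeaders, the sketch copies the cached map first so the input is not mutated.

```java
import java.util.HashMap;
import java.util.Map;

final class NotModifiedSketch {
    /** Headers to report for a 304: cached headers overlaid with the fresh ones. */
    static Map<String, String> mergeHeaders(Map<String, String> cachedHeaders,
                                            Map<String, String> freshHeaders) {
        Map<String, String> merged = new HashMap<>(cachedHeaders);
        merged.putAll(freshHeaders); // on a conflict the fresh value replaces the cached one
        return merged;
    }

    static Map<String, String> demoMergedHeaders() {
        Map<String, String> cached = new HashMap<>();
        cached.put("Content-Type", "text/plain");
        cached.put("Cache-Control", "max-age=30");

        Map<String, String> fresh = new HashMap<>();
        fresh.put("Cache-Control", "max-age=60");
        fresh.put("Date", "Tue, 01 Jan 2019 00:00:00 GMT");

        return mergeHeaders(cached, fresh);
    }

    public static void main(String[] args) {
        System.out.println(demoMergedHeaders());
    }
}
```

In the demo, Content-Type survives from the cache because the 304 did not repeat it, while Cache-Control takes the newer value.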

Part 6. ExecutorDelivery notifies the main thread

Both CacheDispatcher and NetworkDispatcher end by calling ExecutorDelivery#postResponse(), so what exactly does this method do? Neither the cache thread nor the network threads are the main thread, so someone has to tell the main thread that the request has completed, and ExecutorDelivery plays that role. After a request completes, ExecutorDelivery#postResponse() eventually causes the onResponse() method we implemented to be called on the main thread. Let's look at the source code, ExecutorDelivery#postResponse():

@Override

public void postResponse(Request<?> request, Response<?> response) {

postResponse(request, response, null);

}

@Override

public void postResponse(Request<?> request, Response<?> response, Runnable runnable) {

request.markDelivered();

request.addMarker("post-response");

mResponsePoster.execute(new ResponseDeliveryRunnable(request, response, runnable));

}

The method calls mResponsePoster#execute(), so where does mResponsePoster come from? This member variable is created when ExecutorDelivery is instantiated, and ExecutorDelivery is instantiated together with RequestQueue; readers can check the corresponding constructors themselves. In other words, all of this was already done when Part 1 created the request queue. Now let's look at the constructor, ExecutorDelivery#ExecutorDelivery():

public ExecutorDelivery(final Handler handler) {

// Make an Executor that just wraps the handler.

//Instantiate Executor and override the execute method

mResponsePoster = new Executor() {

@Override

public void execute(Runnable command) {

//The handler obtained here is the handler of the main thread, as shown in part 1 (2)

handler.post(command);

}

};

}

The execute() method receives a Runnable object, so let's go back to the call above:

mResponsePoster.execute(new ResponseDeliveryRunnable(request, response, runnable));

Here a ResponseDeliveryRunnable is instantiated and passed as the Runnable. ResponseDeliveryRunnable is an inner class of ExecutorDelivery that implements the Runnable interface. Let's take a look:

/**

* A Runnable used for delivering network responses to a listener on the

* main thread.

*/

@SuppressWarnings("rawtypes")

private class ResponseDeliveryRunnable implements Runnable {

private final Request mRequest;

private final Response mResponse;

private final Runnable mRunnable;

//Construction method

...

@Override

public void run() {

// If this request has canceled, finish it and don't deliver.

if (mRequest.isCanceled()) {

mRequest.finish("canceled-at-delivery");

return;

}

// Deliver a normal response or error, depending.

if (mResponse.isSuccess()) {

mRequest.deliverResponse(mResponse.result); // 1

} else {

mRequest.deliverError(mResponse.error);

}

...

}

}

}

Above, the line marked 1 calls the Request's deliverResponse() method; because the Runnable was posted through the main-thread Handler, this call runs on the main thread. The deliverResponse() method overridden in the Request subclass then calls onResponse(), so our Activity can use the parsed result directly.

This completes our walk through the Volley source code.
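The hand-off from a worker thread to one designated delivery thread can be reproduced without Android. The following is a minimal sketch under stated assumptions: DeliverySketch and deliverAndReportThread are hypothetical names, and a single-thread executor named "main-like" stands in for the main-thread Handler, which does not exist outside Android.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executor;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;

final class DeliverySketch {
    /** Parses on a worker thread, delivers on the "main-like" thread, reports what was seen. */
    static String deliverAndReportThread() {
        // Stand-in for the main thread: one dedicated thread that runs posted commands in order.
        ExecutorService mainLike =
                Executors.newSingleThreadExecutor(r -> new Thread(r, "main-like"));

        // An Executor that only forwards, in the same spirit as wrapping handler.post(command).
        Executor responsePoster = command -> mainLike.execute(command);

        AtomicReference<String> seen = new AtomicReference<>();
        CountDownLatch delivered = new CountDownLatch(1);

        Thread worker = new Thread(() -> {
            String parsed = "result"; // pretend this was parsed on the worker thread
            responsePoster.execute(() -> {
                // This callback runs on the delivery thread, not on the worker.
                seen.set(parsed + "@" + Thread.currentThread().getName());
                delivered.countDown();
            });
        }, "worker");
        worker.start();

        try {
            delivered.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        mainLike.shutdown();
        return seen.get();
    }

    public static void main(String[] args) {
        System.out.println(deliverAndReportThread());
    }
}
```

The worker never calls the listener itself; it only posts a Runnable, and the thread behind the executor decides where the callback runs. Swapping the executor is therefore enough to change the delivery thread.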

summary

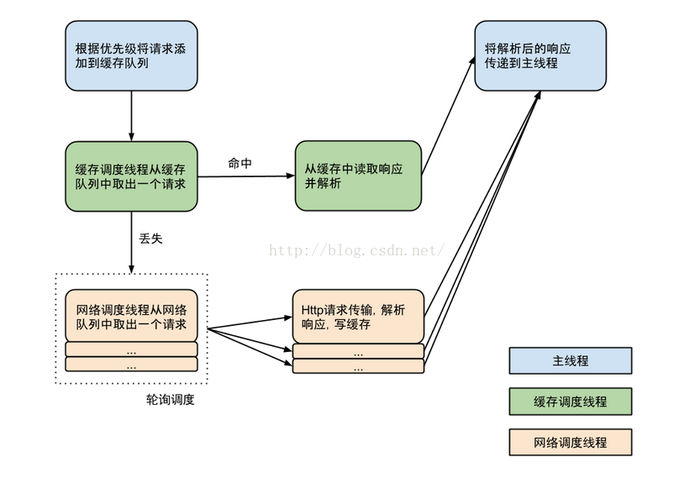

Use an official google image to illustrate the relationship between the above parts (pictures from the Internet):

Volley flow chart

The blue part represents the main thread, the green part represents the cache thread, and the orange part represents the network request thread. Readers can easily understand the process described in part 1-6 according to the relationship shown in this picture. Finally, thank you for your reading.

Author: A Dream of Blue Sky and White Cloud

Link: https://www.jianshu.com/p/15e6209d2e6f

Source: Jianshu

Copyright belongs to the author. For any form of reprinting, please contact the author for authorization and indicate the source.