1, Foreword

Requirement: look up the user registered in the system from the face seen by the camera lens.

Design scheme:

① Registration: the user takes a picture in the front-end system and sends it to the back end for registration. The back end extracts the face feature data from the picture and saves it to the user database.

② Recognition: when the user faces the device lens and the front end detects a face, the front end analyzes the face to obtain its feature data and sends the feature data to the back end to look up the user. The back end compares the feature data and returns the user with the highest similarity, provided that similarity is above 0.9.
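The back-end selection rule in ② can be sketched in plain Java. This is only an illustration: the class name `BestMatchSketch`, the `findUser` helper, and the use of cosine similarity over `float[]` vectors are assumptions made for the example. The real comparison would be done by the ArcSoft SDK on its own feature format.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class BestMatchSketch {
    // Threshold taken from the design scheme: only similarities above 0.9 count
    static final double THRESHOLD = 0.9;

    /** Cosine similarity; a stand-in for the SDK's comparison, not its actual algorithm. */
    static double similarity(float[] a, float[] b) {
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    /** Returns the id of the most similar registered user, or null if none is above THRESHOLD. */
    static String findUser(float[] probe, Map<String, float[]> registered) {
        String bestId = null;
        double best = THRESHOLD;
        for (Map.Entry<String, float[]> entry : registered.entrySet()) {
            double s = similarity(probe, entry.getValue());
            if (s > best) {
                best = s;
                bestId = entry.getKey();
            }
        }
        return bestId;
    }

    public static void main(String[] args) {
        Map<String, float[]> users = new LinkedHashMap<>();
        users.put("alice", new float[] {1f, 0f, 0f});
        users.put("bob", new float[] {0f, 1f, 0f});
        // Close to alice's vector, so alice is returned
        System.out.println(findUser(new float[] {0.99f, 0.05f, 0f}, users));
        // Not close enough to anyone, so null is returned
        System.out.println(findUser(new float[] {0.6f, 0.6f, 0.5f}, users));
    }
}
```

Starting `best` at the threshold means a candidate must beat 0.9 before it can be returned, so "no match" falls out naturally as `null`.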

Preparation: register an ArcSoft account to obtain an APP_ID and SDK_KEY (the paid version also needs an Active_Key), then download the SDK.

2, Initialize the SDK

To use the SDK, first add it to the project, then activate it on first use. After that, the engine must be initialized each time it is used.

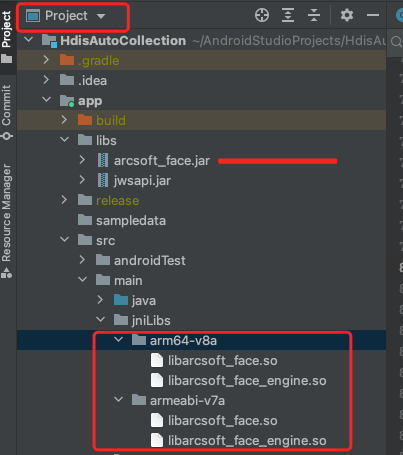

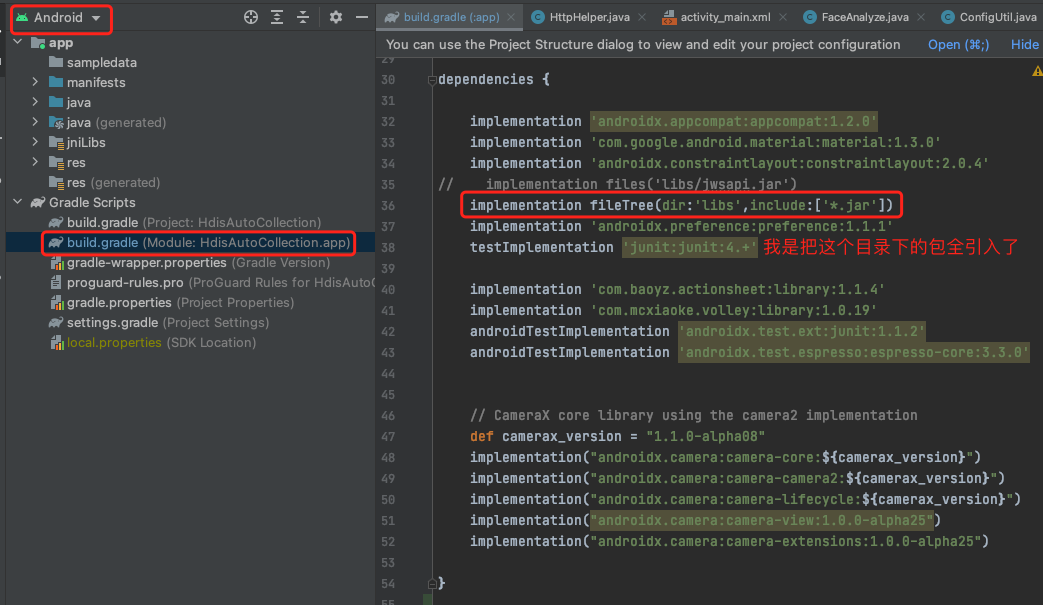

1. First switch to the Project view and put the downloaded SDK files in the corresponding locations, then reference them in build.gradle.

2. Activate the SDK in MainActivity. In production, don't activate it on every launch; activate it once and record that it has been done.

protected void onCreate(Bundle savedInstanceState) {
    super.onCreate(savedInstanceState);
    setContentView(R.layout.activity_main);
    toActiveFaceSDK(); // Activate the SDK
}

public void toActiveFaceSDK() {
    // APP_ID and SDK_KEY are the values obtained from your ArcSoft account
    int status = FaceEngine.activeOnline(this, APP_ID, SDK_KEY);
    if (status == ErrorInfo.MOK || status == ErrorInfo.MERR_ASF_ALREADY_ACTIVATED) {
        Toast.makeText(this, "Activation succeeded", Toast.LENGTH_LONG).show();
        initEngine(); // Activation succeeded, so initialize the SDK and prepare to use it
    } else if (status == ErrorInfo.MERR_ASF_VERSION_NOT_SUPPORT) {
        Toast.makeText(this, "Android version does not support face recognition", Toast.LENGTH_LONG).show();
    } else {
        Toast.makeText(this, "Activation failed, status code: " + status, Toast.LENGTH_LONG).show();
    }
}

3. Initialize the SDK and prepare it for use. Remember to release the engine in the page's onDestroy.

public void initEngine() { // Initialize the SDK
    faceObj = new FaceEngine();
    DetectFaceOrientPriority ftOrient = DetectFaceOrientPriority.ASF_OP_ALL_OUT; // Default: detect faces at all angles in the image
    // DetectFaceOrientPriority ftOrient = DetectFaceOrientPriority.ASF_OP_270_ONLY; // 270 degrees only
    afCode = faceObj.init(that.getApplicationContext(),
            DetectMode.ASF_DETECT_MODE_VIDEO, ftOrient, 16, 3,
            faceObj.ASF_FACE_DETECT | faceObj.ASF_AGE | faceObj.ASF_FACE3DANGLE
                    | faceObj.ASF_GENDER | faceObj.ASF_LIVENESS | faceObj.ASF_FACE_RECOGNITION);
    if (afCode != ErrorInfo.MOK) {
        if (afCode == ErrorInfo.MERR_ASF_NOT_ACTIVATED) {
            Toast.makeText(this, "The SDK is not activated on this device", Toast.LENGTH_LONG).show();
        } else {
            Toast.makeText(this, "Initialization failed, status code: " + afCode, Toast.LENGTH_LONG).show();
        }
    }
}

public void unInitEngine() { // Release the engine
    if (afCode == ErrorInfo.MOK) {
        afCode = faceObj.unInit();
    }
}

3, Configure camera

There have been three generations of the Android camera API so far. The official ArcFace tutorial uses Camera1, but when I brought it into Android Studio the API was struck through and flagged as deprecated, with a suggestion to use Camera2 instead. So I first tried to replace the official implementation with Camera2. It barely worked, but adaptation problems kept appearing one after another, and more than half of the code I wrote was adaptation code. In the end I switched to CameraX, whose preview adaptation is excellent. Recommended reading: <Why use CameraX>.

1. CameraX requires several dependencies in build.gradle, as follows:

def camerax_version = "1.1.0-alpha08"
implementation("androidx.camera:camera-core:${camerax_version}")
implementation("androidx.camera:camera-camera2:${camerax_version}")
implementation("androidx.camera:camera-lifecycle:${camerax_version}")
implementation("androidx.camera:camera-view:1.0.0-alpha25")
implementation("androidx.camera:camera-extensions:1.0.0-alpha25")

2. Create the preview View

Define the page-level variables:

public int rational;
private Size mImageReaderSize;
private FaceEngine faceObj; // SDK object
private int afCode = -1; // SDK status
private ProcessCameraProvider cameraProvider = null; // CameraX provider
private Preview mPreviewBuild = null; // CameraX preview use case
private PreviewView viewFinder = null; // CameraX's own preview view
private byte[] nv21 = null; // Real-time NV21 image stream

protected void onCreate(Bundle savedInstanceState) {
    super.onCreate(savedInstanceState);
    RelativeLayout layout = that.findViewById(R.id.camerax_preview);
    viewFinder = new PreviewView(that); // The PreviewView is created dynamically here; you can also declare it in the layout
    layout.addView(viewFinder);
    Point screenSize = new Point(); // Total screen width and height
    getWindowManager().getDefaultDisplay().getSize(screenSize); // getRealSize would also include the status bar
    rational = aspectRatio(screenSize.x, screenSize.y);
    startCameraX();
}
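The `aspectRatio(...)` call in onCreate picks whichever of 4:3 and 16:9 is closer to the screen's long side divided by its short side; the helper itself follows. As a worked example, here is a standalone sketch of the same comparison. The class name `AspectRatioSketch` and the string return values are stand-ins for illustration (the real helper returns CameraX's `AspectRatio` constants).

```java
final class AspectRatioSketch {
    static final double RATIO_4_3_VALUE = 4.0 / 3.0;
    static final double RATIO_16_9_VALUE = 16.0 / 9.0;

    /** Returns "4:3" or "16:9", whichever is closer to long side / short side. */
    static String closestRatio(int width, int height) {
        double previewRatio = (double) Math.max(width, height) / Math.min(width, height);
        if (Math.abs(previewRatio - RATIO_4_3_VALUE) <= Math.abs(previewRatio - RATIO_16_9_VALUE)) {
            return "4:3";
        }
        return "16:9";
    }

    public static void main(String[] args) {
        System.out.println(closestRatio(1080, 1920)); // ratio 1.78, closest to 16:9
        System.out.println(closestRatio(1536, 2048)); // ratio 1.33, closest to 4:3
        System.out.println(closestRatio(1080, 2340)); // ratio 2.17, still closer to 16:9
    }
}
```

Taking the max and min first makes the result independent of whether the device is in portrait or landscape.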

private double RATIO_4_3_VALUE = 4.0 / 3.0;
private double RATIO_16_9_VALUE = 16.0 / 9.0;

private int aspectRatio(int width, int height) {
    double previewRatio = Math.max(width, height) * 1.00 / Math.min(width, height);
    if (Math.abs(previewRatio - RATIO_4_3_VALUE) <= Math.abs(previewRatio - RATIO_16_9_VALUE)) {
        return AspectRatio.RATIO_4_3;
    }
    return AspectRatio.RATIO_16_9;
}

3. Initialize camera

private void startCameraX() {
    ListenableFuture<ProcessCameraProvider> providerFuture = ProcessCameraProvider.getInstance(that);
    providerFuture.addListener(() -> {
        try { // Check that the CameraProvider is available
            cameraProvider = providerFuture.get();
        } catch (ExecutionException | InterruptedException e) {
            Toast.makeText(this, "CameraX is not available", Toast.LENGTH_LONG).show();
            e.printStackTrace();
            return;
        }
        CameraSelector cameraSelector = new CameraSelector.Builder().requireLensFacing(
                CameraSelector.LENS_FACING_FRONT
                // CameraSelector.LENS_FACING_BACK
        ).build();
        // Build the preview
        mPreviewBuild = new Preview.Builder()
                // .setTargetRotation(Surface.ROTATION_180) // Set the preview rotation angle
                .build();
        mPreviewBuild.setSurfaceProvider(viewFinder.getSurfaceProvider()); // Bind the preview to the view
        // Image analysis: listen for frames to obtain the image stream
        ImageAnalysis imageAnalysis = new ImageAnalysis.Builder()
                .setTargetAspectRatio(rational)
                // Non-blocking mode: ImageAnalysis.STRATEGY_KEEP_ONLY_LATEST. The executor receives the latest
                // available frame when analyze() is called. If analyze() takes longer than one frame interval at
                // the current frame rate, some frames are skipped so that the next call gets the latest frame.
                .setBackpressureStrategy(ImageAnalysis.STRATEGY_KEEP_ONLY_LATEST)
                // Blocking mode: ImageAnalysis.STRATEGY_BLOCK_PRODUCER. The executor receives frames from the camera
                // in order. If analyze() takes longer than one frame interval, the frame received may no longer be
                // the latest one, because new frames are blocked from entering the pipeline until analyze() returns.
                .build();
        imageAnalysis.setAnalyzer(
                ContextCompat.getMainExecutor(this),
                new FaceAnalyze.MyAnalyzer() // Bind to my image analysis class
        );
        // Unbind everything before binding to the lifecycle
        cameraProvider.unbindAll();
        // Bind the use cases
        Camera mCameraX = cameraProvider.bindToLifecycle(
                (LifecycleOwner) this,
                cameraSelector,
                imageAnalysis,
                mPreviewBuild);
    }, ContextCompat.getMainExecutor(this));
}

4. Image analysis class (the ImageUtil code is given at the end)
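The analyzer below only processes a frame if at least `timerSpace` milliseconds have passed since the last processed frame, and drops everything in between. That throttling idea can be shown in isolation with plain Java. The class name `FrameThrottleSketch` and its method are hypothetical, introduced only for this example.

```java
final class FrameThrottleSketch {
    private final long intervalMs;   // minimum gap between two processed frames
    private long lastAcceptedMs;     // timestamp of the last processed frame
    private boolean hasAccepted = false;

    FrameThrottleSketch(long intervalMs) {
        this.intervalMs = intervalMs;
    }

    /** Returns true if a frame arriving at nowMs should be analyzed, false if it should be dropped. */
    boolean shouldProcess(long nowMs) {
        if (hasAccepted && nowMs - lastAcceptedMs < intervalMs) {
            return false; // too soon after the previous processed frame
        }
        lastAcceptedMs = nowMs;
        hasAccepted = true;
        return true;
    }

    public static void main(String[] args) {
        FrameThrottleSketch throttle = new FrameThrottleSketch(300);
        long[] arrivals = {1000, 1100, 1299, 1300, 1450, 1600};
        for (long t : arrivals) {
            System.out.println(t + " ms: " + (throttle.shouldProcess(t) ? "process" : "drop"));
        }
    }
}
```

In the real analyzer a dropped frame must still be closed, otherwise CameraX stops delivering new frames.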

/**
 * Analyzer for the real-time preview stream
 */
private class MyAnalyzer implements ImageAnalysis.Analyzer {
    private byte[] y;
    private byte[] u;
    private byte[] v;
    private ReentrantLock lock = new ReentrantLock();
    private Object mImageReaderLock = 1; // 1 = available, 0 = unavailable
    private long lastDrawTime = 0;
    private int timerSpace = 300; // Recognition interval in milliseconds

    @Override
    public void analyze(@NonNull ImageProxy imageProxy) {
        @SuppressLint("UnsafeOptInUsageError") Image mediaImage = imageProxy.getImage();
        // int rotationDegrees = imageProxy.getImageInfo().getRotationDegrees();
        try {
            if (mediaImage == null) {
                return;
            }
            synchronized (mImageReaderLock) {
                /* Limit the recognition frequency and check the status */
                long start = System.currentTimeMillis();
                if (start - lastDrawTime < timerSpace || !mImageReaderLock.equals(1)) {
                    return;
                }
                lastDrawTime = System.currentTimeMillis();
                /* End of frequency limit */
                // Check the YUV type; the format we requested is YUV_420_888
                if (ImageFormat.YUV_420_888 == mediaImage.getFormat()) {
                    Image.Plane[] planes = mediaImage.getPlanes();
                    if (mImageReaderSize == null) {
                        mImageReaderSize = new Size(planes[0].getRowStride(), mediaImage.getHeight());
                    }
                    lock.lock();
                    try {
                        if (y == null) {
                            y = new byte[planes[0].getBuffer().limit() - planes[0].getBuffer().position()];
                            u = new byte[planes[1].getBuffer().limit() - planes[1].getBuffer().position()];
                            v = new byte[planes[2].getBuffer().limit() - planes[2].getBuffer().position()];
                        }
                        // Read the Y, U and V data from the planes
                        if (planes[0].getBuffer().remaining() == y.length) {
                            planes[0].getBuffer().get(y);
                            planes[1].getBuffer().get(u);
                            planes[2].getBuffer().get(v);
                            if (nv21 == null) {
                                nv21 = new byte[planes[0].getRowStride() * mediaImage.getHeight() * 3 / 2];
                            }
                            if (nv21 != null && (nv21.length != planes[0].getRowStride() * mediaImage.getHeight() * 3 / 2)) {
                                return; // Unexpected buffer size; the finally blocks release the lock and the frame
                            }
                            // The returned data is YUV422
                            if (y.length / u.length == 2) {
                                ImageUtil.yuv422ToYuv420sp(y, u, v, nv21, planes[0].getRowStride(), mediaImage.getHeight());
                            }
                            // The returned data is YUV420
                            else if (y.length / u.length == 4) {
                                nv21 = ImageUtil.yuv420ToNv21(mediaImage);
                            }
                            // Call the ArcSoft algorithm to obtain the face features
                            getFaceInfo(nv21);
                        }
                    } finally {
                        lock.unlock();
                    }
                }
            }
        } finally {
            // Be sure to close the frame on every path, including early returns
            if (mediaImage != null) {
                mediaImage.close();
            }
            imageProxy.close();
        }
    }
}

5. Face feature analysis

private int lastFaceID = -1;

/**
 * Get face features
 */
private void getFaceInfo(byte[] nv21) {
    List<FaceInfo> faceInfoList = new ArrayList<FaceInfo>();
    FaceFeature faceFeature = new FaceFeature();
    // Step 1: pass the frame to the ArcSoft SDK to check whether it contains a face
    int code = faceObj.detectFaces(nv21, mImageReaderSize.getWidth(),
            mImageReaderSize.getHeight(),
            faceObj.CP_PAF_NV21, faceInfoList);
    // If detection succeeded and a face was found, continue with face recognition
    if (code == ErrorInfo.MOK && faceInfoList.size() > 0) {
        int newFaceID = faceInfoList.get(0).getFaceId(); // A face keeps the same ID as long as it does not leave the lens
        if (lastFaceID == newFaceID) { // Same face as last time, nothing to do
            Log.i("Face ID identical", "used" + lastFaceID); // + ", new" + newFaceID
            return;
        }
        lastFaceID = newFaceID;
        // long start = System.currentTimeMillis();
        // Extract the feature data
        code = faceObj.extractFaceFeature(nv21, mImageReaderSize.getWidth(), mImageReaderSize.getHeight(),
                faceObj.CP_PAF_NV21, faceInfoList.get(0), faceFeature);
        if (code != ErrorInfo.MOK) {
            return;
        }
        byte[] featureData = faceFeature.getFeatureData(); // The final feature data
        post(featureData); // Send the data to the back end to look up the user
        // Log.e(TAG, "feature length" + faceFeature.getFeatureData().length);
        // long end = System.currentTimeMillis();
        // Log.e(TAG + lastFaceID, "time consuming: " + (end - start) + " ms, feature data length: " + featureData.length);
    }
}

4, ImageUtil code
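The conversions below all target NV21, which stores the full Y plane first, followed by the chroma samples interleaved as V, U, V, U. As a small worked example of that layout, here is a sketch that packs three separate planes into NV21. It assumes tightly packed 4:2:0 planes with no row padding and a pixel stride of 1, which is simpler than what a camera `Image` may deliver; the class name `Nv21LayoutSketch` is made up for the example.

```java
import java.util.Arrays;

final class Nv21LayoutSketch {
    /** Packs tightly packed Y, U and V planes (4:2:0) into NV21: Y plane, then V,U pairs. */
    static byte[] i420ToNv21(byte[] y, byte[] u, byte[] v) {
        if (u.length != v.length || y.length != 4 * u.length) {
            throw new IllegalArgumentException("expected 4:2:0 planes");
        }
        byte[] nv21 = new byte[y.length + u.length + v.length];
        System.arraycopy(y, 0, nv21, 0, y.length);
        int pos = y.length;
        for (int i = 0; i < u.length; i++) {
            nv21[pos++] = v[i]; // V comes first in NV21
            nv21[pos++] = u[i];
        }
        return nv21;
    }

    public static void main(String[] args) {
        // A 4x2 image: 8 luma samples, 2 U samples and 2 V samples
        byte[] y = {1, 2, 3, 4, 5, 6, 7, 8};
        byte[] u = {10, 11};
        byte[] v = {20, 21};
        System.out.println(Arrays.toString(i420ToNv21(y, u, v)));
    }
}
```

The output length is always 1.5 times the number of pixels, which is why the listings allocate `size * 3 / 2` bytes.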

public class ImageUtil {

    /**
     * Convert data of Y:U:V == 4:2:2 to nv21
     *
     * @param y Y data
     * @param u U data
     * @param v V data
     * @param nv21 Output NV21 buffer; must be allocated in advance
     * @param stride Row stride
     * @param height Image height
     */
    public static void yuv422ToYuv420sp(byte[] y, byte[] u, byte[] v, byte[] nv21, int stride, int height) {
        System.arraycopy(y, 0, nv21, 0, y.length);
        // Note: using y.length * 3 / 2 as the length risks an array index out of bounds; use the real data length
        int length = y.length + u.length / 2 + v.length / 2;
        int uIndex = 0, vIndex = 0;
        for (int i = stride * height; i < length; i += 2) {
            nv21[i] = v[vIndex];
            nv21[i + 1] = u[uIndex];
            vIndex += 2;
            uIndex += 2;
        }
    }

    /**
     * Convert data of Y:U:V == 4:1:1 to nv21
     *
     * @param y Y data
     * @param u U data
     * @param v V data
     * @param nv21 Output NV21 buffer; must be allocated in advance
     * @param stride Row stride
     * @param height Image height
     */
    public static void yuv420ToYuv420sp(byte[] y, byte[] u, byte[] v, byte[] nv21, int stride, int height) {
        System.arraycopy(y, 0, nv21, 0, y.length);
        // Note: using y.length * 3 / 2 as the length risks an array index out of bounds; use the real data length
        int length = y.length + u.length + v.length;
        int uIndex = 0, vIndex = 0;
        // Step by 2: each iteration writes one V,U pair
        for (int i = stride * height; i < length; i += 2) {
            nv21[i] = v[vIndex++];
            nv21[i + 1] = u[uIndex++];
        }
    }

    // Works when the chroma planes are interleaved (pixel stride 2) and the rows are not padded
    public static byte[] yuv420ToNv21(Image image) {
        Image.Plane[] planes = image.getPlanes();
        ByteBuffer yBuffer = planes[0].getBuffer();
        ByteBuffer uBuffer = planes[1].getBuffer();
        ByteBuffer vBuffer = planes[2].getBuffer();
        int ySize = yBuffer.remaining();
        int uSize = uBuffer.remaining();
        int vSize = vBuffer.remaining();
        int size = image.getWidth() * image.getHeight();
        byte[] nv21 = new byte[size * 3 / 2];
        yBuffer.get(nv21, 0, ySize);
        vBuffer.get(nv21, ySize, vSize);
        byte[] u = new byte[uSize];
        uBuffer.get(u);
        // Overwrite every other byte with U so that V and U alternate
        int pos = ySize + 1;
        for (int i = 0; i < uSize; i++) {
            if (i % 2 == 0) {
                nv21[pos] = u[i];
                pos += 2;
            }
        }
        return nv21;
    }

    public static Bitmap nv21ToBitmap(byte[] nv21, int w, int h) {
        YuvImage image = new YuvImage(nv21, ImageFormat.NV21, w, h, null);
        ByteArrayOutputStream stream = new ByteArrayOutputStream();
        image.compressToJpeg(new Rect(0, 0, w, h), 80, stream);
        Bitmap newBitmap = BitmapFactory.decodeByteArray(stream.toByteArray(), 0, stream.size());
        try {
            stream.close();
        } catch (Exception e) {
            // Ignored: closing an in-memory stream is not expected to fail
        }
        return newBitmap;
    }
}

That's all.

Thanks!

Attached:

Pitfalls in face recognition: see <Face recognition pit>

For how to send the binary feature data to the back end, please refer to my other article <Upload binary in Android form data>