In the previous section we implemented the CoAP client and server, and the server sent the device data to Kafka in the agreed format. This section implements the Mapping server, which maps physical device data to abstract devices and gives the data business meaning.

iot-mapping

Create the iot-mapping module and add the shared Kafka module as a dependency

SourceListener

SourceListener listens for the raw data sent by the CoAP server and pulls the product model and device data, cached by the web management side, from Redis. Since Redis access is needed in several places, a separate iot-redis module is also built to wrap the Redis operations.

Logical Description

- Read the message from Kafka and deserialize it into KafkaSourceVO

- Get RedisDeviceVO from Redis by IMEI

- Get RedisProductVO from Redis by productId

- Choose byte or JSON parsing according to the format field in RedisProductVO

The listener that implements these steps:

    @Autowired
    private BaseRedisUtil redisUtil;

    @KafkaListener(topics = SOURCE_TOPIC)
    public void iotListener(String msg) {
        System.out.println("-----------" + msg);
        KafkaSourceVO sourceVO = JSONObject.parseObject(msg, KafkaSourceVO.class);
        // Device information, keyed by IMEI
        RedisDeviceVO deviceVO = redisUtil.get(sourceVO.getImei());
        if (deviceVO == null) {
            return; // unknown device: nothing to map
        }
        // Product information (the product model of this device)
        RedisProductVO productVO = redisUtil.get(deviceVO.getProductId());
        if (productVO == null) {
            return; // product model not cached
        }
        // Dispatch on the data format declared in the product model
        if (EDataFormat.BYTE.getFormat().equals(productVO.getFormat())) {
            analysisByte(sourceVO, productVO, deviceVO);
        } else if (EDataFormat.JSON.getFormat().equals(productVO.getFormat())) {
            analysisJson(sourceVO, productVO, deviceVO);
        }
    }
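Note that the listener does nothing when the stored format matches neither branch. As a minimal sketch of how that case could be made explicit, the enum below is a hypothetical stand-in for EDataFormat (the article does not show its definition, so the "byte" and "json" values and the names DataFormatSketch, fromFormat and route are assumptions):

```java
import java.util.Optional;

/** Hypothetical stand-in for the article's EDataFormat; the "byte"/"json" values are assumed. */
enum DataFormatSketch {
    BYTE("byte"),
    JSON("json");

    private final String format;

    DataFormatSketch(String format) {
        this.format = format;
    }

    String getFormat() {
        return format;
    }

    /** Resolves a stored format string; empty when it matches no constant (including null). */
    static Optional<DataFormatSketch> fromFormat(String format) {
        for (DataFormatSketch candidate : values()) {
            if (candidate.format.equalsIgnoreCase(format)) {
                return Optional.of(candidate);
            }
        }
        return Optional.empty();
    }
}

class FormatDispatchDemo {

    /** Returns the name of the parsing method that would handle the given format. */
    static String route(String format) {
        return DataFormatSketch.fromFormat(format)
            .map(f -> {
                switch (f) {
                    case BYTE:
                        return "analysisByte";
                    case JSON:
                        return "analysisJson";
                    default:
                        throw new IllegalStateException("unhandled format " + f);
                }
            })
            .orElse("unsupported");
    }

    public static void main(String[] args) {
        System.out.println(route("json"));
        System.out.println(route("xml"));
    }
}
```

In a real listener the "unsupported" branch would more likely log the message and skip it; returning a string here only keeps the sketch easy to check.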

byte parsing

- Get the raw data from KafkaSourceVO and convert it to char[]

- Get the attribute models (RedisPropertyVO) from the product model

- For each attribute model, extract the corresponding value from the raw char[] according to the offset (the ofset field, a begin-end index range) defined in the attribute model

- Encapsulate the parsed data as KafkaDownVO

The corresponding method:

    /**
     * Parses byte-format data by slicing the raw payload at each property's offset.
     */
    public void analysisByte(KafkaSourceVO sourceVO, RedisProductVO productVO, RedisDeviceVO deviceVO) {
        char[] chars = sourceVO.getData().toCharArray();
        List<RedisPropertyVO> propertys = productVO.getPropertys();
        List<KafkaDownVO> downVOList = new ArrayList<>(propertys.size());
        propertys.forEach(property -> {
            // The offset is stored as "begin-end", both indexes inclusive
            String[] str = property.getOfset().split("-");
            int begin = Integer.parseInt(str[0]);
            int end = Integer.parseInt(str[1]);
            KafkaDownVO vo = new KafkaDownVO();
            vo.setDeviceId(deviceVO.getId());
            vo.setPropertyId(property.getId());
            // Copy chars[begin..end] into the value
            vo.setData(new String(chars, begin, end - begin + 1));
            downVOList.add(vo);
        });
        System.out.println("byte---" + downVOList);
    }
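The offset slicing can be tried in isolation. The following is a minimal sketch, assuming the "begin-end" offset is inclusive on both ends as in the loop above; OffsetSliceDemo, slice and parse are hypothetical names, the sample payload is made up, and the bounds check is an addition that the original method does not perform.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Minimal sketch of offset-based slicing; not the project's actual classes. */
class OffsetSliceDemo {

    /** Extracts the characters covered by an inclusive "begin-end" offset such as "0-3". */
    static String slice(String raw, String offset) {
        String[] parts = offset.split("-");
        int begin = Integer.parseInt(parts[0].trim());
        int end = Integer.parseInt(parts[1].trim());
        if (begin < 0 || end < begin || end >= raw.length()) {
            throw new IllegalArgumentException(
                "offset " + offset + " does not fit payload of length " + raw.length());
        }
        // substring's end index is exclusive, while the offset's end is inclusive
        return raw.substring(begin, end + 1);
    }

    /** Applies every property offset to the payload, keyed by property name. */
    static Map<String, String> parse(String raw, Map<String, String> offsetsByProperty) {
        Map<String, String> values = new LinkedHashMap<>();
        offsetsByProperty.forEach((name, offset) -> values.put(name, slice(raw, offset)));
        return values;
    }

    public static void main(String[] args) {
        Map<String, String> offsets = new LinkedHashMap<>();
        offsets.put("temperature", "0-3");
        offsets.put("humidity", "4-5");
        // Sample payload invented for illustration
        System.out.println(parse("023561", offsets));
    }
}
```

Validating the range up front turns a malformed offset or a short payload into a descriptive error instead of an ArrayIndexOutOfBoundsException inside the loop.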

json parsing

- In the JSON format, each key sent by the physical device corresponds one-to-one with an identifier in the attribute model. When RedisPropertyVO is populated, the identifier is stored in the ofset field to stay uniform with the byte format, so here the attribute models are converted to a Map<ofset, id>.

- The raw data in KafkaSourceVO is likewise deserialized into a Map<key, value>

- Iterate over the raw data map (dataMap) and look up the property ID for each key in the attribute model map (propertyMap)

- To keep the data format uniform, the result is again encapsulated as KafkaDownVO

The corresponding method:

    public void analysisJson(KafkaSourceVO sourceVO, RedisProductVO productVO, RedisDeviceVO deviceVO) {
        // identifier (stored in ofset) -> property id
        Map<String, Long> propertyMap = productVO.getPropertys().stream()
                .collect(Collectors.toMap(RedisPropertyVO::getOfset, RedisPropertyVO::getId));
        // JSONObject is a Map<String, Object>; values may be numbers as well as strings
        Map<String, Object> dataMap = JSONObject.parseObject(sourceVO.getData());
        List<KafkaDownVO> downVOList = new ArrayList<>(dataMap.size());
        dataMap.forEach((key, val) -> {
            KafkaDownVO vo = new KafkaDownVO();
            vo.setPropertyId(propertyMap.get(key));
            vo.setDeviceId(deviceVO.getId());
            // String.valueOf avoids a ClassCastException for non-string values
            vo.setData(String.valueOf(val));
            vo.setCollTime(sourceVO.getCollTime());
            downVOList.add(vo);
        });
        System.out.println("json---" + downVOList);
    }
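The key lookup at the heart of the JSON branch can be sketched without fastjson or the VO classes. This is only an illustration under assumptions: plain maps stand in for the parsed payload and the attribute model, JsonMappingDemo and mapToPropertyIds are hypothetical names, and skipping keys that have no matching identifier is a choice made here (the method above would emit an entry with a null property ID instead).

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Minimal sketch of mapping reported JSON keys to property IDs; not the project's classes. */
class JsonMappingDemo {

    /**
     * Pairs each reported key with the property ID registered for that identifier.
     * Keys without a registered identifier are skipped.
     */
    static Map<Long, String> mapToPropertyIds(Map<String, Long> propertyIdByIdentifier,
                                              Map<String, Object> data) {
        Map<Long, String> result = new LinkedHashMap<>();
        data.forEach((key, value) -> {
            Long propertyId = propertyIdByIdentifier.get(key);
            if (propertyId != null) {
                // Values are normalized to strings so numbers and text share one format
                result.put(propertyId, String.valueOf(value));
            }
        });
        return result;
    }

    public static void main(String[] args) {
        Map<String, Long> propertyIds = new LinkedHashMap<>();
        propertyIds.put("temperature", 1L);
        propertyIds.put("humidity", 2L);

        // Stand-in for the deserialized device payload; sample values are invented
        Map<String, Object> data = new LinkedHashMap<>();
        data.put("temperature", 23.5);
        data.put("humidity", 61);
        data.put("unknownKey", "ignored");

        System.out.println(mapToPropertyIds(propertyIds, data));
    }
}
```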

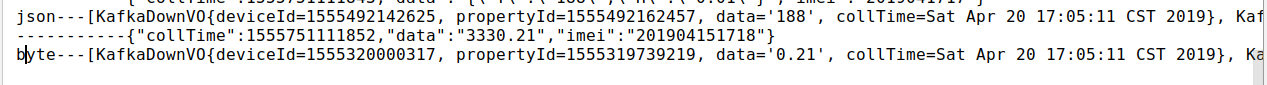

Start the project and verify that the data is properly encapsulated

Following the iot-pt architecture design, the mapped data now needs to be written back to Kafka so that the subscription service can consume it

kafka writing

Add a class for writing the mapped data to the iot-kafka module

    @Component
    public class KafkaMapping {

        @Autowired
        private KafkaTemplate<String, String> kafkaTemplate;

        // Serialize the mapped records to a JSON array and publish them to DOWN_TOPIC
        public void send(List<KafkaDownVO> list) {
            String json = JSONArray.toJSONString(list);
            kafkaTemplate.send(DOWN_TOPIC, json);
        }
    }

Modify analysisByte() and analysisJson() so that they send the result to Kafka instead of printing it

    public void analysisByte(KafkaSourceVO sourceVO, RedisProductVO productVO, RedisDeviceVO deviceVO) {
        char[] chars = sourceVO.getData().toCharArray();
        List<RedisPropertyVO> propertys = productVO.getPropertys();
        List<KafkaDownVO> downVOList = new ArrayList<>(propertys.size());
        propertys.forEach(property -> {
            // The offset is stored as "begin-end", both indexes inclusive
            String[] str = property.getOfset().split("-");
            int begin = Integer.parseInt(str[0]);
            int end = Integer.parseInt(str[1]);
            KafkaDownVO vo = new KafkaDownVO();
            vo.setDeviceId(deviceVO.getId());
            vo.setPropertyId(property.getId());
            vo.setData(new String(chars, begin, end - begin + 1));
            vo.setCollTime(sourceVO.getCollTime());
            downVOList.add(vo);
        });
        // kafkaMapping is the KafkaMapping bean, injected into the listener class
        kafkaMapping.send(downVOList);
    }

    public void analysisJson(KafkaSourceVO sourceVO, RedisProductVO productVO, RedisDeviceVO deviceVO) {
        // identifier (stored in ofset) -> property id
        Map<String, Long> propertyMap = productVO.getPropertys().stream()
                .collect(Collectors.toMap(RedisPropertyVO::getOfset, RedisPropertyVO::getId));
        // JSONObject is a Map<String, Object>; values may be numbers as well as strings
        Map<String, Object> dataMap = JSONObject.parseObject(sourceVO.getData());
        List<KafkaDownVO> downVOList = new ArrayList<>(dataMap.size());
        dataMap.forEach((key, val) -> {
            KafkaDownVO vo = new KafkaDownVO();
            vo.setPropertyId(propertyMap.get(key));
            vo.setDeviceId(deviceVO.getId());
            vo.setData(String.valueOf(val));
            vo.setCollTime(sourceVO.getCollTime());
            downVOList.add(vo);
        });
        // kafkaMapping is the KafkaMapping bean, injected into the listener class
        kafkaMapping.send(downVOList);
    }

Start the project again and check whether the mapped data is written to Kafka

Concluding remarks

The next step is the implementation of the subscription service, so stay tuned for the next installment. The full code is available in git.