Distributed transaction solution -- Seata -- Implementation

Seata

Seata is an open-source distributed transaction solution that aims to provide high-performance, easy-to-use distributed transaction services for microservice architectures.

This article records and summarizes the pitfalls I ran into while developing with Seata. It does not explain how Seata works internally; if you are interested, please refer to the official documentation.

Background

Distributed architectures inevitably run into the problem of distributed transactions, and there are many mature solutions on the market. This time we chose Seata, open-sourced by Alibaba, as our distributed transaction solution. The main reason is that Alibaba is actively building out its distributed ecosystem: many Spring Cloud components have stopped evolving, while Alibaba's open-source component series is more stable and continuously updated.

Seata version selection

At the time of writing, the latest Seata release is 1.2.0 (2020-04-20), but this project uses 1.1.0 (2020-02-19), out of concern that the newest release may not yet be stable.

Seata download page: http://seata.io/zh-cn/blog/download.html

Seata practice

While developing with Seata I ran into several problems, and I recorded and summarized them.

1. Problem description

I installed and configured Seata according to the official documentation and registered three microservices under the same global transaction group, fsp_tx_group. The Seata server and all microservices started normally, but global transaction rollback failed. The specifics are as follows:

The business logic is: microservice A writes to database A, then calls microservice B to write to database B, and finally calls microservice C to write to database C. These three calls form one global transaction, so if any part of the chain fails, databases A, B, and C must all be rolled back. However, even with the configuration set up exactly as documented, database C always failed to roll back: the changes to databases A and B were rolled back, but the changes to database C were kept.

2. Locating the problem

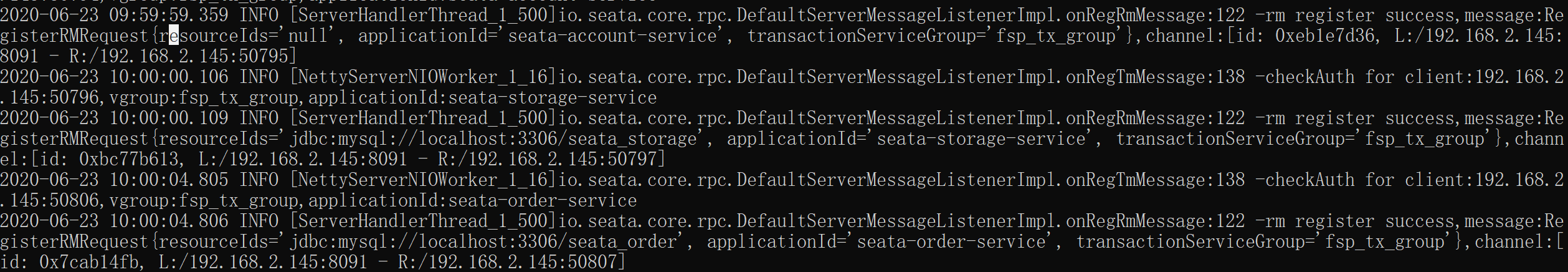

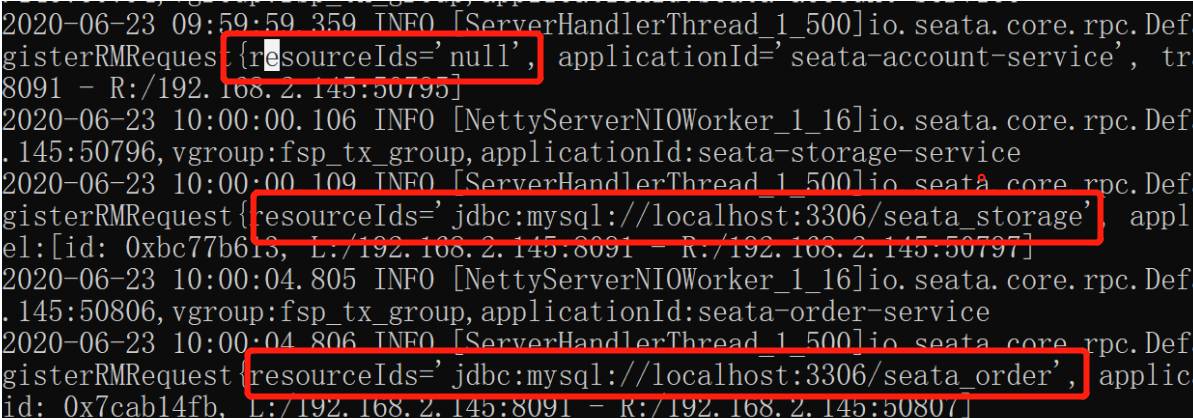

After troubleshooting, no errors were found in the code and configuration file, but some clues were found in the startup log of the Seata server, as shown in the following figure:

The large picture is as follows:

The registration address of one RM (Resource Manager) is wrong: the two lower RMs are registered against their MySQL databases, but the top RM is not registered against a MySQL database at all. The RM is one of Seata's three components, alongside the TC (Transaction Coordinator) and the TM (Transaction Manager); see the official documentation for the components and how they work.

But why does failing to register against the database make rollback fail? Each business database contains a table named undo_log, which records the snapshot of the data before the change, the global transaction ID, and other information. When the RM cannot connect to the database, it cannot write this information into the undo_log table, so the rollback fails.

The DDL for the undo_log table is given in the official documentation; the table is created when setting up the development environment.
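To make the failure mode concrete, here is a toy Java model of the undo_log idea described above. It is not Seata's API, and every class and method name in it is hypothetical. Each branch saves a before-image into its own undo_log before changing a row, and a global rollback restores rows from those records. Branch C is created with `rmRegistered = false` to mimic the RM that never registered against its database: it still writes business data, but records no undo information.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy model only (hypothetical names, not Seata's API): shows why a branch
// without undo_log records cannot be rolled back.
public class UndoLogDemo {

    // One undo_log entry: global transaction ID, row key, value before the change
    // (null if the row did not exist yet).
    static final class UndoRecord {
        final String xid;
        final String key;
        final Integer beforeImage;

        UndoRecord(String xid, String key, Integer beforeImage) {
            this.xid = xid;
            this.key = key;
            this.beforeImage = beforeImage;
        }
    }

    // A business database plus its undo_log table.
    static final class BranchDb {
        final String name;
        final Map<String, Integer> rows = new HashMap<>();
        final List<UndoRecord> undoLog = new ArrayList<>();
        // false simulates an RM that failed to register its data source.
        final boolean rmRegistered;

        BranchDb(String name, boolean rmRegistered) {
            this.name = name;
            this.rmRegistered = rmRegistered;
        }

        void update(String xid, String key, int newValue) {
            if (rmRegistered) {
                undoLog.add(new UndoRecord(xid, key, rows.get(key)));
            }
            rows.put(key, newValue); // the business change always lands
        }

        // Restore before-images recorded for this XID, newest first.
        void rollback(String xid) {
            for (int i = undoLog.size() - 1; i >= 0; i--) {
                UndoRecord r = undoLog.get(i);
                if (!r.xid.equals(xid)) {
                    continue;
                }
                if (r.beforeImage == null) {
                    rows.remove(r.key);
                } else {
                    rows.put(r.key, r.beforeImage);
                }
                undoLog.remove(i);
            }
        }
    }

    // Runs A -> B -> C under one XID, then fails and rolls back every branch.
    static List<BranchDb> runAndFail(boolean cRegistered) {
        BranchDb a = new BranchDb("A", true);
        BranchDb b = new BranchDb("B", true);
        BranchDb c = new BranchDb("C", cRegistered);
        List<BranchDb> chain = List.of(a, b, c);
        for (BranchDb db : chain) {
            db.rows.put("balance", 100);
        }
        String xid = "xid-demo-1"; // hypothetical global transaction ID
        a.update(xid, "balance", 90);
        b.update(xid, "balance", 80);
        c.update(xid, "balance", 70);
        // A downstream step fails, so every branch is asked to roll back.
        for (BranchDb db : chain) {
            db.rollback(xid);
        }
        return chain;
    }

    public static void main(String[] args) {
        for (BranchDb db : runAndFail(false)) {
            System.out.println(db.name + ": balance = " + db.rows.get("balance"));
        }
    }
}
```

Running `main` prints a balance of 100 for A and B but 70 for C, the same symptom described in the problem description. In real Seata AT mode the undo record is written in the same local transaction as the business SQL; the toy model skips that detail.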

3. Solutions

As for why the RM failed when connecting to the database: on analysis, the database mapping in application.yml was not misconfigured and the database itself was healthy, yet registration still did not succeed.

So I tried three fixes:

1. Drop the database, then recreate the database and its tables. Verified: the problem remained.

2. Rewrite the file.conf configuration file. Verified: the problem remained.

3. Delete the entire module in the IDE, rebuild it, and copy in the same code and configuration files. Verified: the problem was solved.

The Seata configuration files and application.yml are attached below.

file.conf:

    transport {
      # tcp udt unix-domain-socket
      type = "TCP"
      #NIO NATIVE
      server = "NIO"
      #enable heartbeat
      heartbeat = true
      #thread factory for netty
      thread-factory {
        boss-thread-prefix = "NettyBoss"
        worker-thread-prefix = "NettyServerNIOWorker"
        server-executor-thread-prefix = "NettyServerBizHandler"
        share-boss-worker = false
        client-selector-thread-prefix = "NettyClientSelector"
        client-selector-thread-size = 1
        client-worker-thread-prefix = "NettyClientWorkerThread"
        # netty boss thread size,will not be used for UDT
        boss-thread-size = 1
        #auto default pin or 8
        worker-thread-size = 8
      }
      shutdown {
        # when destroy server, wait seconds
        wait = 3
      }
      serialization = "seata"
      compressor = "none"
    }

    service {
      #vgroup->rgroup
      vgroupMapping.fsp_tx_group = "default"
      #only support single node
      default.grouplist = "127.0.0.1:8091"
      #degrade current not support
      enableDegrade = false
      #disable
      disable = false
      #unit ms,s,m,h,d represents milliseconds, seconds, minutes, hours, days, default permanent
      max.commit.retry.timeout = "-1"
      max.rollback.retry.timeout = "-1"
      disableGlobalTransaction = false
    }

    client {
      async.commit.buffer.limit = 10000
      lock {
        retry.internal = 10
        retry.times = 30
      }
      report.retry.count = 5
      tm.commit.retry.count = 1
      tm.rollback.retry.count = 1
    }

    ## transaction log store
    store {
      ## store mode: file,db
      mode = "db"
      ## file store
      file {
        dir = "sessionStore"
        # branch session size , if exceeded first try compress lockkey, still exceeded throws exceptions
        max-branch-session-size = 16384
        # globe session size , if exceeded throws exceptions
        max-global-session-size = 512
        # file buffer size , if exceeded allocate new buffer
        file-write-buffer-cache-size = 16384
        # when recover batch read size
        session.reload.read_size = 100
        # async, sync
        flush-disk-mode = async
      }
      ## database store
      db {
        ## the implement of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp) etc.
        datasource = "dbcp"
        ## mysql/oracle/h2/oceanbase etc.
        db-type = "mysql"
        driver-class-name = "com.mysql.jdbc.Driver"
        url = "jdbc:mysql://127.0.0.1:3306/seata"
        user = "root"
        password = "phoenix1991mh"
        min-conn = 1
        max-conn = 3
        global.table = "global_table"
        branch.table = "branch_table"
        lock-table = "lock_table"
        query-limit = 100
      }
    }

    lock {
      ## the lock store mode: local,remote
      mode = "remote"
      local {
        ## store locks in user's database
      }
      remote {
        ## store locks in the seata's server
      }
    }

    recovery {
      #schedule committing retry period in milliseconds
      committing-retry-period = 1000
      #schedule asyn committing retry period in milliseconds
      asyn-committing-retry-period = 1000
      #schedule rollbacking retry period in milliseconds
      rollbacking-retry-period = 1000
      #schedule timeout retry period in milliseconds
      timeout-retry-period = 1000
    }

    transaction {
      undo.data.validation = true
      undo.log.serialization = "jackson"
      undo.log.save.days = 7
      #schedule delete expired undo_log in milliseconds
      undo.log.delete.period = 86400000
      undo.log.table = "undo_log"
    }

    ## metrics settings
    metrics {
      enabled = false
      registry-type = "compact"
      # multi exporters use comma divided
      exporter-list = "prometheus"
      exporter-prometheus-port = 9898
    }

    support {
      ## spring
      spring {
        # auto proxy the DataSource bean
        datasource.autoproxy = false
      }
    }

registry.conf:

    registry {
      # file ,nacos ,eureka,redis,zk
      type = "nacos"
      nacos {
        serverAddr = "localhost:8848"
        namespace = ""
        cluster = "default"
      }
      eureka {
        serviceUrl = "http://localhost:8761/eureka"
        application = "default"
        weight = "1"
      }
      redis {
        serverAddr = "localhost:6381"
        db = "0"
      }
      zk {
        cluster = "default"
        serverAddr = "127.0.0.1:2181"
        session.timeout = 6000
        connect.timeout = 2000
      }
      file {
        name = "file.conf"
      }
    }

    config {
      # file,nacos ,apollo,zk
      type = "file"
      nacos {
        serverAddr = "localhost"
        namespace = ""
        cluster = "default"
      }
      apollo {
        app.id = "fescar-server"
        apollo.meta = "http://192.168.1.204:8801"
      }
      zk {
        serverAddr = "127.0.0.1:2181"
        session.timeout = 6000
        connect.timeout = 2000
      }
      file {
        name = "file.conf"
      }
    }

application.yml:

    server:
      port: 2004

    spring:
      application:
        name: seata-account-service
      cloud:
        alibaba:
          seata:
            tx-service-group: fsp_tx_group
        nacos:
          discovery:
            server-addr: localhost:8848
      datasource:
        driver-class-name: com.mysql.jdbc.Driver
        url: jdbc:mysql://localhost:3306/seata_account
        username: root
        password: phoenix1991mh

    logging:
      level:
        io:
          seata: info

    mybatis:
      mapperLocations: classpath:mapper/*.xml
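The three files above are tied together by one name, and a mismatch there is worth ruling out before suspecting the database. Below is a simplified Java sketch of the lookup order; the class and method names are hypothetical, and it mimics the order of the lookup rather than reproducing Seata's code. The tx-service-group from application.yml (fsp_tx_group) is looked up under `service.vgroupMapping` in file.conf, which is read because `config.type = "file"`, and that yields a cluster name (`default`). With a file registry, that cluster's `grouplist` gives the TC address. With `registry.type = "nacos"`, as here, the client instead asks Nacos for TC instances in that cluster, which the sketch replaces with a stub function.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Simplified, hypothetical sketch of tx-service-group -> TC address resolution.
public class TxGroupResolver {

    // Values copied from the configs above, flattened into dotted keys.
    static Map<String, String> sampleConfig() {
        Map<String, String> cfg = new HashMap<>();
        cfg.put("registry.type", "nacos");                        // registry.conf
        cfg.put("service.vgroupMapping.fsp_tx_group", "default"); // file.conf
        cfg.put("service.default.grouplist", "127.0.0.1:8091");   // file.conf
        return cfg;
    }

    static String resolve(Map<String, String> cfg, String txServiceGroup,
                          Function<String, String> registryLookup) {
        // Step 1: tx-service-group -> cluster name via vgroupMapping.
        String cluster = cfg.get("service.vgroupMapping." + txServiceGroup);
        if (cluster == null) {
            throw new IllegalStateException(
                    "no vgroupMapping entry for tx-service-group '" + txServiceGroup + "'");
        }
        // Step 2a: file registry -> read the cluster's grouplist directly.
        if ("file".equals(cfg.get("registry.type"))) {
            return cfg.get("service." + cluster + ".grouplist");
        }
        // Step 2b: any other registry (nacos here) -> ask the registry.
        return registryLookup.apply(cluster);
    }

    public static void main(String[] args) {
        Map<String, String> cfg = sampleConfig();
        // Hypothetical stand-in for a Nacos query; a real client would list the
        // TC instances registered in Nacos under this cluster name.
        Function<String, String> fakeNacos = cluster -> "tc-from-nacos(" + cluster + ")";
        System.out.println(resolve(cfg, "fsp_tx_group", fakeNacos));

        // The same lookup if registry.type were "file".
        cfg.put("registry.type", "file");
        System.out.println(resolve(cfg, "fsp_tx_group", fakeNacos));
    }
}
```

If the `tx-service-group` in application.yml and the `vgroupMapping` key in file.conf do not match, `resolve` throws at step 1. In that case the client has no way to find the TC, regardless of how the databases are configured.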