docker network

Docker networking falls into two categories: container networking on a single host, and networking across multiple hosts.

docker network type

List Docker's built-in networks:

[root@docker01 ~]# docker network ls

NETWORK ID     NAME      DRIVER    SCOPE

68f4c1f9f020   bridge    bridge    local

85317d309423   host      host      local

0699d506810c   none      null      local

none network

A container on the none network is completely isolated: it has only a loopback interface and no IP address of its own.

Usage scenario: workloads with strict security requirements that need no network access. In practice this mode is rarely used.

[root@docker01 ~]# docker run -itd --name none --net none busybox

View the container's network interfaces:

[root@docker01 ~]# docker exec -it none ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever
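The isolation can also be checked mechanically by listing the interface names in the `ip a` output and confirming that only `lo` appears. The following is a minimal sketch: `list_ifaces` is a hypothetical helper name (not part of Docker), and the sample text is the output shown above.

```shell
# list_ifaces: print the interface names found in `ip a` output read from stdin.
# Hypothetical helper for illustration only.
list_ifaces() {
  # Interface header lines look like "1: lo: <FLAGS> ..." or "6: eth0@if7: <FLAGS> ..."
  sed -n 's/^[0-9][0-9]*: \([^:@]*\)[:@].*/\1/p'
}

# Sample taken from the none-network container output above
sample='1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever'

printf '%s\n' "$sample" | list_ifaces

# Against a live container (assumes the container named "none" is running):
#   docker exec none ip a | list_ifaces
```

If anything other than `lo` were printed, the container would have an additional interface and would not be fully isolated.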

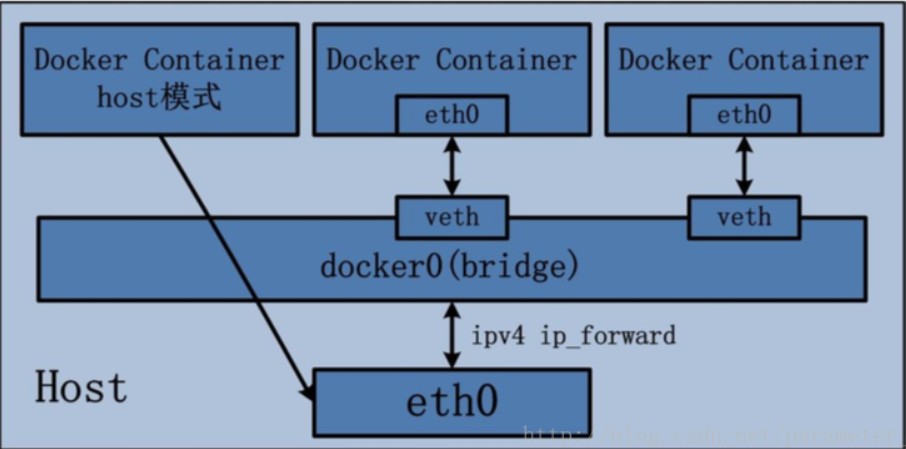

Host mode

In host mode, the container shares the Docker host's network namespace and uses the host's network cards directly. The container does not get a virtual network card or an IP address of its own.

[root@docker01 ~]# docker run -itd --name host --net host busybox

[root@docker01 ~]# docker exec -it host ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000

link/ether 00:0c:29:9a:af:82 brd ff:ff:ff:ff:ff:ff

inet 172.16.46.111/24 brd 172.16.46.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::87c7:dfca:c8d:46d8/64 scope link

valid_lft forever preferred_lft forever

3: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue qlen 1000

link/ether 52:54:00:6b:c4:36 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 qlen 1000

link/ether 52:54:00:6b:c4:36 brd ff:ff:ff:ff:ff:ff

5: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue

link/ether 02:42:7d:fe:68:77 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

Note:

The network seen inside a host-mode container is identical to the host's network. The container's network stack is never isolated when the container is created; the container uses the host's network stack directly.

Usage scenario: the biggest benefit is performance, so host mode is a good choice when you need high network throughput. The biggest drawback is the lack of flexibility: containers share the host's port space, so running many services can easily lead to port conflicts.

bridge network

bridge is Docker's default network mode. By default, the docker0 bridge uses 172.17.0.1 as its gateway address.

When the Docker daemon starts, it creates a docker0 bridge on the host. Each container started in bridge mode is connected to this virtual bridge, is allocated an IP from the docker0 subnet, and uses docker0's IP address as its default gateway.

The link between a container and the bridge is a veth pair: one end sits inside the container and the other end on the host. The host end is named vethXXX and is attached to the docker0 bridge.
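The pairing is visible in the interface names. Inside a bridge-mode container, `ip a` prints a name such as `eth0@if7`, where the number after `@if` is the interface index of the peer end on the host. A minimal sketch that extracts that index follows: `peer_ifindex` is a hypothetical helper name, and the sample line is of the kind `ip a` prints inside a container.

```shell
# peer_ifindex IFACE: read `ip a` output on stdin and print the peer interface
# index of IFACE (the number after "@if"). Hypothetical helper for illustration.
peer_ifindex() {
  iface="$1"
  sed -n "s/^[0-9][0-9]*: ${iface}@if\([0-9][0-9]*\):.*/\1/p"
}

line='6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue'
printf '%s\n' "$line" | peer_ifindex eth0

# On the host, the matching vethXXX end could then be located with, for example:
#   ip -o link | grep '^7: '
```

Here the container's eth0 has index 6 and its peer has index 7, so the host-side interface with index 7 is the vethXXX end attached to the bridge.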

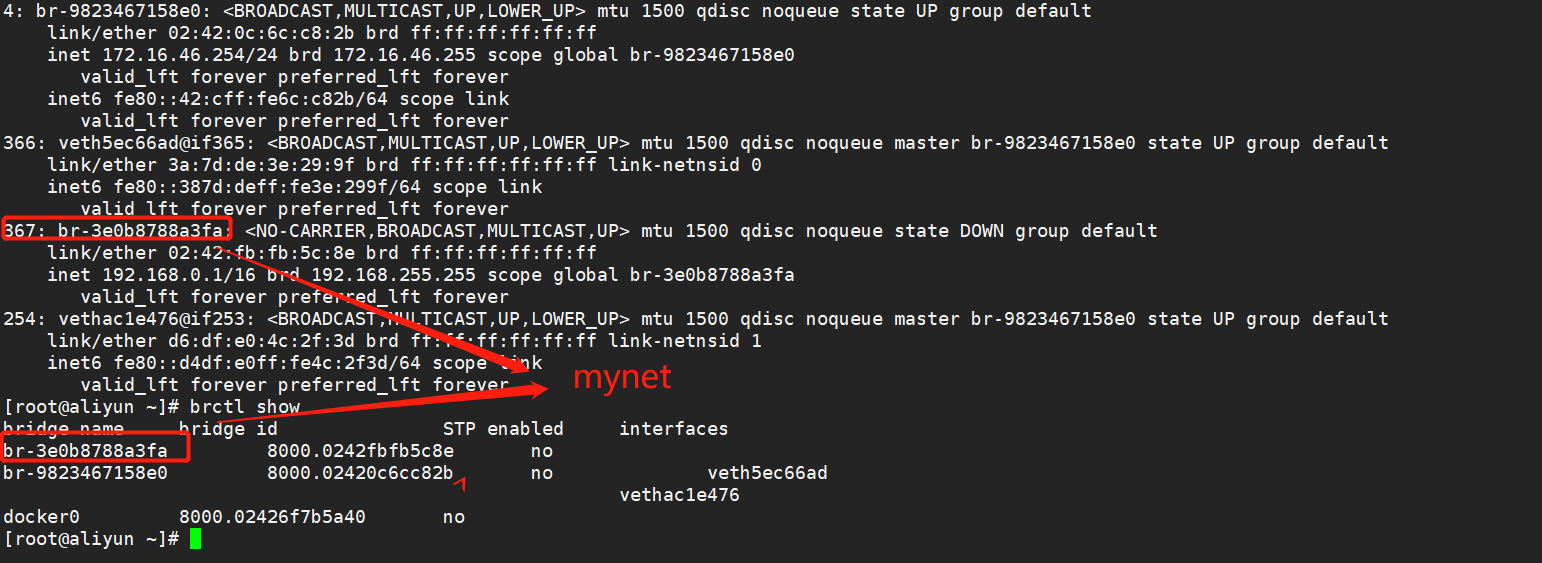

For a user-created bridge such as mynet below, the mechanism is the same, except that the vethXXX interface is attached to the mynet bridge instead of docker0.

[root@aliyun ~]# docker network ls

NETWORK ID     NAME      DRIVER    SCOPE

94e3a737c800   bridge    bridge    local

9abcb6d81f9f   host      host      local

9823467158e0   lnmp      bridge    local

3e0b8788a3fa   mynet     bridge    local

05efb7a8d771   none      null      local

# brctl show: view Docker bridge information

[root@aliyun ~]# brctl show

bridge name         bridge id            STP enabled    interfaces

br-3e0b8788a3fa     8000.0242fbfb5c8e    no

br-9823467158e0     8000.02420c6cc82b    no             veth5ec66ad

                                                        vethac1e476

docker0             8000.02426f7b5a40    no

In bridge mode, a container can ping the host:

docker run -itd --name dc01 busybox:latest

[root@docker01 ~]# docker exec -it dc01 ping 172.16.46.111

PING 172.16.46.111 (172.16.46.111): 56 data bytes

64 bytes from 172.16.46.111: seq=0 ttl=64 time=0.066 ms

64 bytes from 172.16.46.111: seq=1 ttl=64 time=0.102 ms

64 bytes from 172.16.46.111: seq=2 ttl=64 time=0.080 ms

^C

--- 172.16.46.111 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.066/0.082/0.102 ms

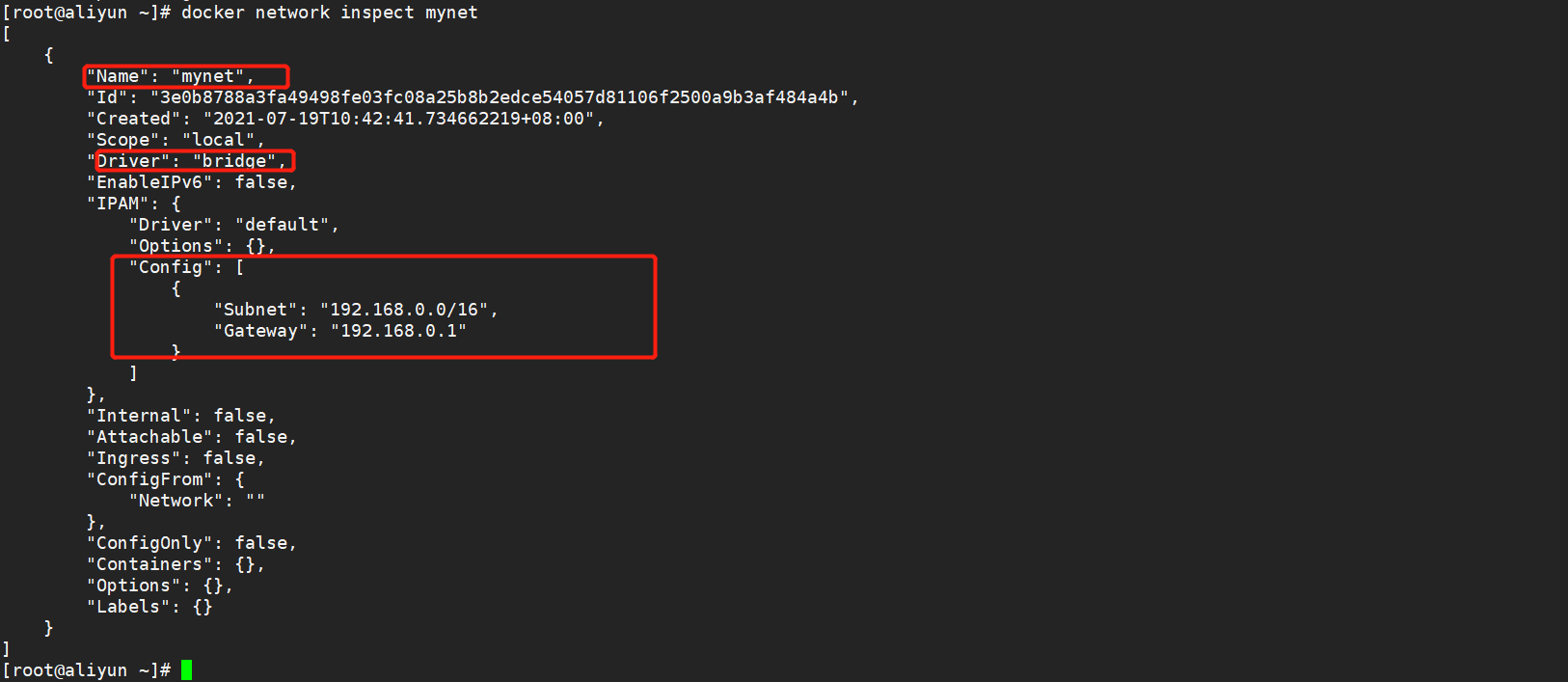

Custom network

To use a custom subnet and custom IP addresses, Docker lets you create your own network:

docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet

View the network's details:

docker network inspect mynet

# --network specifies the network the container joins

docker run -itd --name busybox_net01 --network mynet busybox:latest

docker run -itd --name busybox_net02 --network mynet busybox:latest

Note: on a custom network you can also assign a container a fixed IP with --ip:

docker run -itd --name dc03 --network mynet --ip 192.168.0.100 busybox:latest

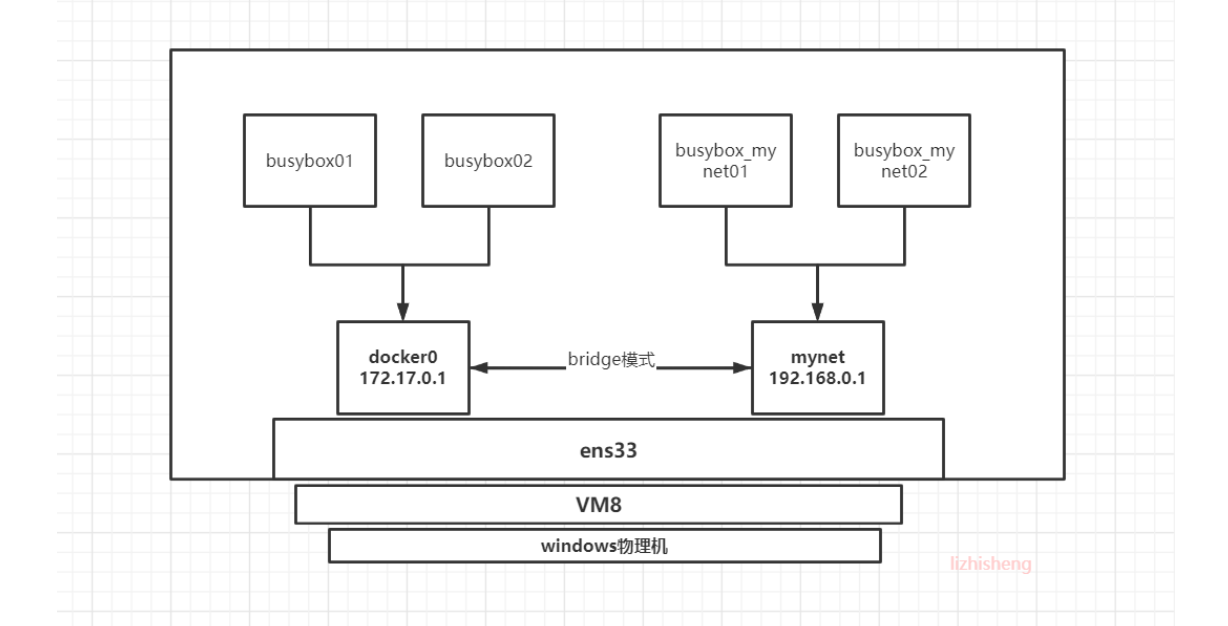

bridge mode architecture diagram

The IP assigned to a container belongs to the subnet of its bridge (docker0 or mynet). Traffic leaving the bridge is forwarded through the host's real network card, ens33. When the Docker host is itself a virtual machine, ens33 is mapped to the V8 (VMnet8) network card of the physical machine, and the physical machine's own network card finally forwards the traffic.

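As shown in the figure above, containers on the same network segment can ping each other. But can busybox01 (on docker0) and busybox_mynet01 (on mynet) ping each other?

Answer: no.

The two containers are attached to different bridges with different network segments, and Docker keeps separate bridge networks isolated from one another, so the ping fails.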
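Whether two addresses sit on the same network segment can be worked out with plain shell arithmetic. The sketch below is illustrative only: `ip_to_int` and `same_subnet` are hypothetical helper names, and the addresses are the ones used in this article. It only compares network prefixes; it does not probe Docker's own isolation between bridges.

```shell
# ip_to_int: convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  # Split on dots inside a subshell so the caller's IFS is left untouched
  ( IFS=.; set -- $1; echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 )) )
}

# same_subnet IP1 IP2 PREFIX: succeed if both addresses share the same
# network prefix of length PREFIX.
same_subnet() {
  mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$2") & mask )) ]
}

# Two containers on docker0 (172.17.0.0/16)
if same_subnet 172.17.0.2 172.17.0.3 16; then echo "same subnet"; else echo "different subnets"; fi

# One container on docker0, one on mynet (192.168.0.0/16)
if same_subnet 172.17.0.2 192.168.0.2 16; then echo "same subnet"; else echo "different subnets"; fi
```

The first check reports the same subnet and the second reports different subnets, which matches the ping behaviour described above.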

Solution: connect the container to the other network as well. Docker provides the docker network connect command for this:

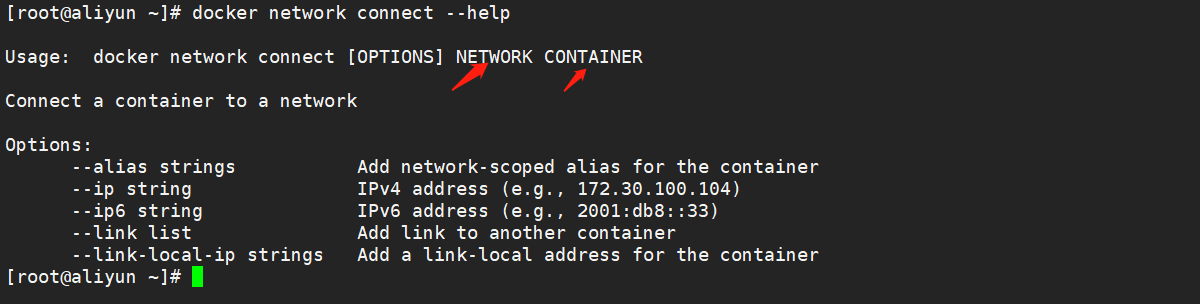

docker network connect --help

Connect a container from another bridge to the mynet bridge:

docker network connect mynet busybox01

After the connection succeeds, the container has two interfaces, each with an IP from a different bridge, so it can reach containers on both network segments:

[root@docker01 ~]# docker exec -it busybox01 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

13: eth1@if14: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:c0:a8:00:03 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.3/16 brd 192.168.255.255 scope global eth1

valid_lft forever preferred_lft forever

At this point, busybox01 can ping busybox_mynet01:

[root@docker01 ~]# docker exec -it busybox01 ping busybox_mynet01

PING dc02 (192.168.0.2): 56 data bytes

64 bytes from 192.168.0.2: seq=0 ttl=64 time=0.118 ms

64 bytes from 192.168.0.2: seq=1 ttl=64 time=0.105 ms

64 bytes from 192.168.0.2: seq=2 ttl=64 time=0.103 ms

64 bytes from 192.168.0.2: seq=3 ttl=64 time=0.122 ms

^C

--- dc02 ping statistics ---

4 packets transmitted, 4 packets received, 0% packet loss

round-trip min/avg/max = 0.103/0.112/0.122 ms

Benefits of a custom network

A custom network comes with a built-in container DNS server, so containers on it can reach each other by container name.

# On the default bridge, pinging by container name fails

[root@docker01 ~]# docker exec -it busybox01 ping busybox02

ping: bad address 'busybox02'

# On the default bridge, pinging busybox02's IP address works

[root@docker01 ~]# docker exec -it busybox01 ping 172.17.0.3

PING 172.17.0.3 (172.17.0.3): 56 data bytes

64 bytes from 172.17.0.3: seq=0 ttl=64 time=0.115 ms

64 bytes from 172.17.0.3: seq=1 ttl=64 time=0.140 ms

^C

--- 172.17.0.3 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.115/0.127/0.140 ms

# On a custom bridge, pinging by container name works

[root@docker01 ~]# docker exec -it busybox_net01 ping busybox_net02

PING busybox_net02 (192.168.0.3): 56 data bytes

64 bytes from 192.168.0.3: seq=0 ttl=64 time=0.063 ms

64 bytes from 192.168.0.3: seq=1 ttl=64 time=0.074 ms

64 bytes from 192.168.0.3: seq=2 ttl=64 time=0.097 ms

^C
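Name resolution on a custom network goes through Docker's embedded DNS server, which containers on user-defined networks reach at 127.0.0.11. One way to see which case a container is in is to look at its /etc/resolv.conf. The sketch below is illustrative: `uses_embedded_dns` is a hypothetical helper name, and the sample content is made up for the example rather than captured from the containers above.

```shell
# uses_embedded_dns: read resolv.conf content on stdin and succeed if it
# lists Docker's embedded DNS server (127.0.0.11) as a nameserver.
# Hypothetical helper for illustration only.
uses_embedded_dns() {
  grep -Eq '^nameserver[[:space:]]+127\.0\.0\.11([[:space:]]|$)'
}

# Illustrative sample, not captured from a real container
sample_resolv='nameserver 127.0.0.11'

if printf '%s\n' "$sample_resolv" | uses_embedded_dns; then
  echo "embedded DNS in use: container names should resolve"
else
  echo "no embedded DNS: use IP addresses instead"
fi

# Against a live container (assumes busybox_net01 from above is running):
#   docker exec busybox_net01 cat /etc/resolv.conf | uses_embedded_dns
```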

Container mode (shared network stack)

The two containers share one network stack and therefore one IP address. Everything other than the network, such as the file system and process list, remains isolated between them.

[root@docker01 ~]# docker run -itd --name web5 busybox:latest

6a1bf2b8bbfa724d9f94afcef141a4a3bea235265235e97aeb91a5a04f0a81f1

[root@docker01 ~]# docker run -itd --name web6 --network container:web5 busybox:latest

bf39a692d2aeec28ec921d7fa2a61aa9138ba105a2091c8eef9b7f889b43797e

[root@docker01 ~]# docker exec -it web5 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

22: eth0@if23: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

[root@docker01 ~]# docker exec -it web6 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

22: eth0@if23: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

Note: because of its special nature, this mode suits cases where a companion container runs alongside a service to monitor it, for example for log collection or network monitoring. In general, it is rarely used.